Entry point

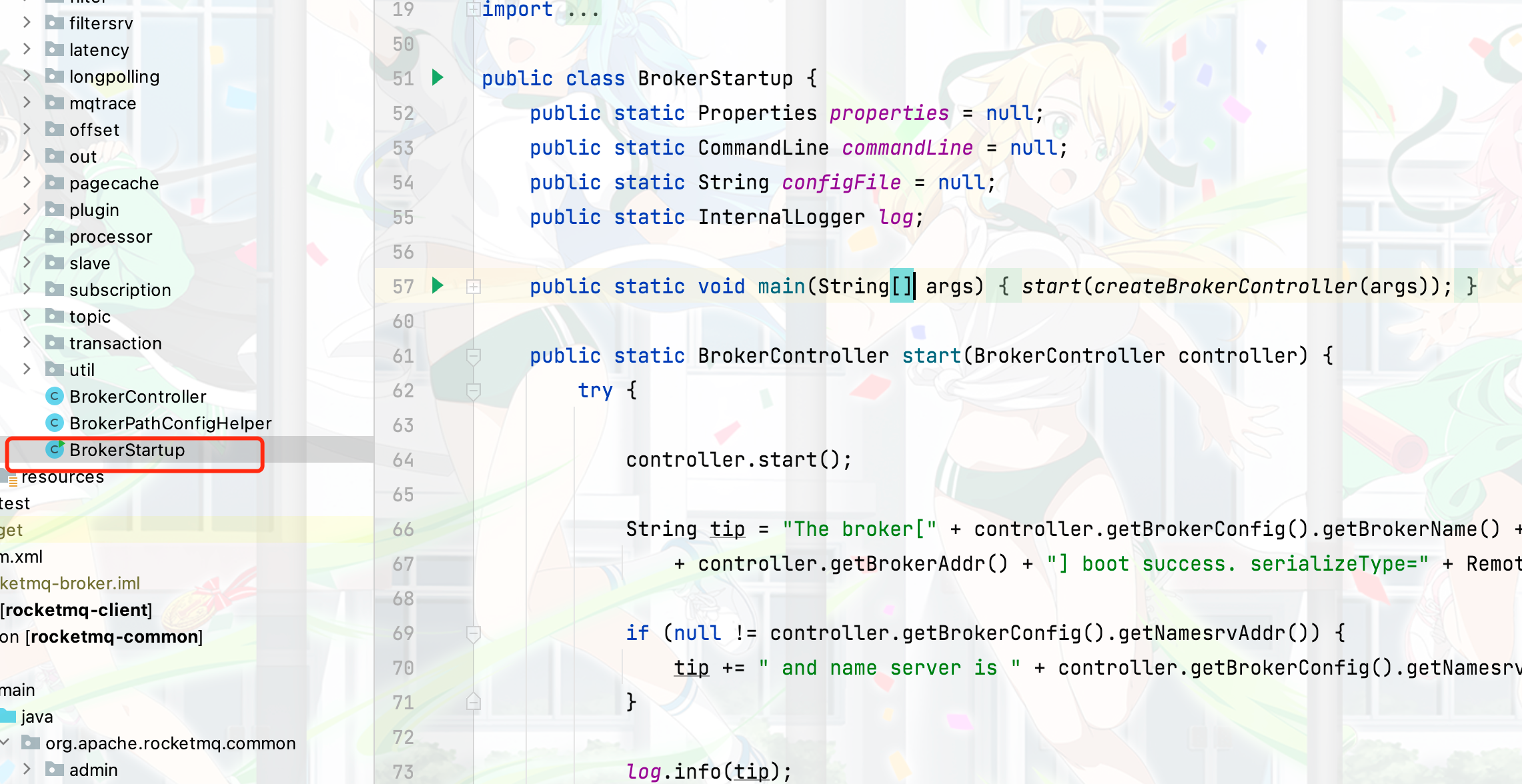

The entry point of the Broker startup source code is BrokerStartup.java.

Core process

The main flow is similar to NameServer startup and has two steps:

- Create the BrokerController

- Start the BrokerController

```java
public static void main(String[] args) {
    start(createBrokerController(args));
}
```

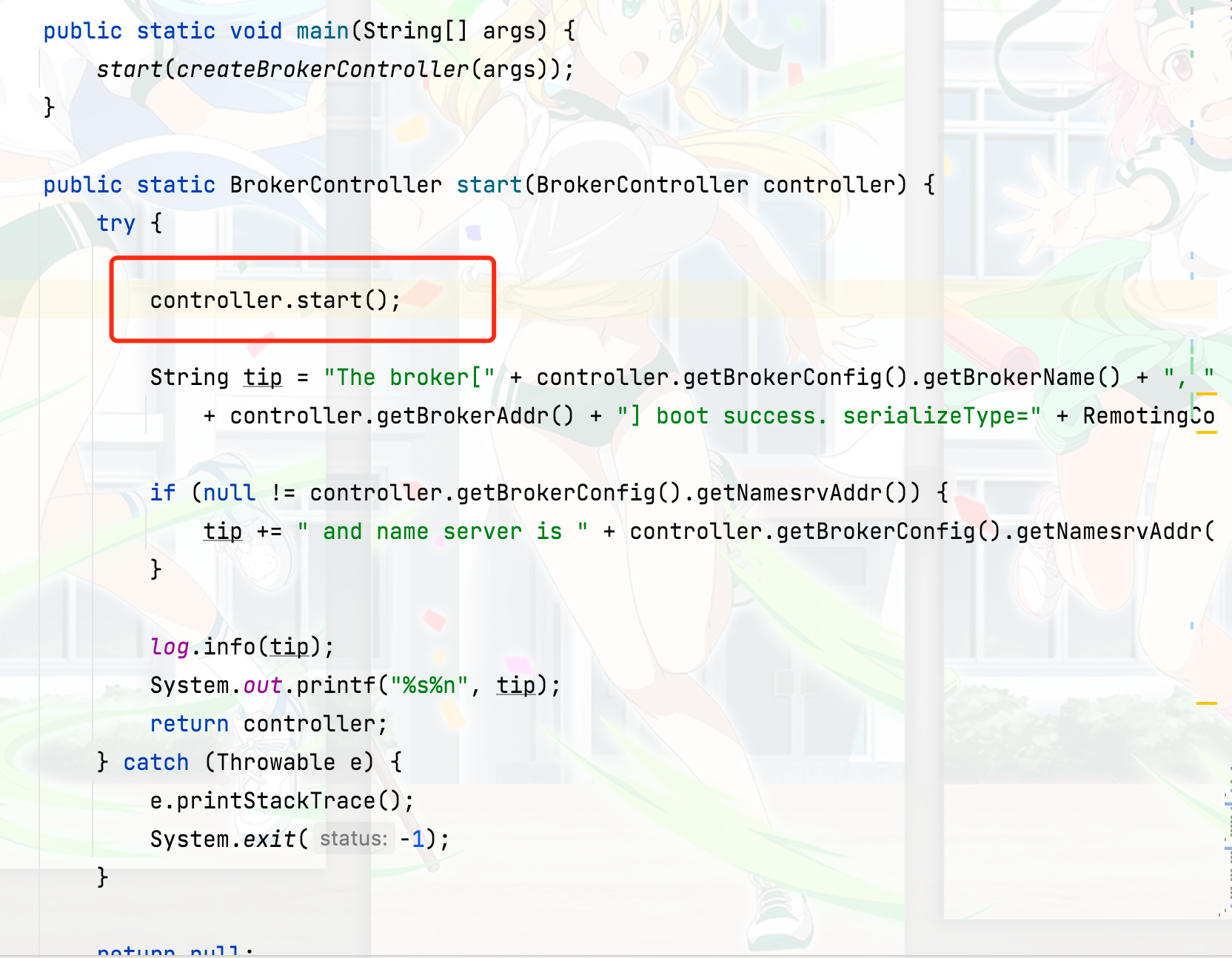

```java
public static BrokerController start(BrokerController controller) {
    try {
        controller.start();

        String tip = "The broker[" + controller.getBrokerConfig().getBrokerName() + ", "
            + controller.getBrokerAddr() + "] boot success. serializeType=" + RemotingCommand.getSerializeTypeConfigInThisServer();

        if (null != controller.getBrokerConfig().getNamesrvAddr()) {
            tip += " and name server is " + controller.getBrokerConfig().getNamesrvAddr();
        }

        log.info(tip);
        System.out.printf("%s%n", tip);
        return controller;
    } catch (Throwable e) {
        e.printStackTrace();
        System.exit(-1);
    }

    return null;
}
```

How the BrokerController is created

```java
public static BrokerController createBrokerController(String[] args) {
    System.setProperty(RemotingCommand.REMOTING_VERSION_KEY, Integer.toString(MQVersion.CURRENT_VERSION));

    // Default the socket send buffer to 128 KB unless it was set as a system property
    if (null == System.getProperty(NettySystemConfig.COM_ROCKETMQ_REMOTING_SOCKET_SNDBUF_SIZE)) {
        NettySystemConfig.socketSndbufSize = 131072;
    }

    // Same for the socket receive buffer
    if (null == System.getProperty(NettySystemConfig.COM_ROCKETMQ_REMOTING_SOCKET_RCVBUF_SIZE)) {
        NettySystemConfig.socketRcvbufSize = 131072;
    }

    try {
        //PackageConflictDetect.detectFastjson();
        // Parse the startup arguments
        Options options = ServerUtil.buildCommandlineOptions(new Options());
        commandLine = ServerUtil.parseCmdLine("mqbroker", args, buildCommandlineOptions(options),
            new PosixParser());
        if (null == commandLine) {
            System.exit(-1);
        }

        // Core broker configuration
        final BrokerConfig brokerConfig = new BrokerConfig();
        // Netty server configuration
        final NettyServerConfig nettyServerConfig = new NettyServerConfig();
        // Netty client configuration
        final NettyClientConfig nettyClientConfig = new NettyClientConfig();

        // Whether the Netty client uses TLS
        nettyClientConfig.setUseTLS(Boolean.parseBoolean(System.getProperty(TLS_ENABLE,
            String.valueOf(TlsSystemConfig.tlsMode == TlsMode.ENFORCING))));
        // Default listening port of the Netty server: 10911
        nettyServerConfig.setListenPort(10911);
        // Message store configuration
        final MessageStoreConfig messageStoreConfig = new MessageStoreConfig();

        // On a slave, lower accessMessageInMemoryMaxRatio (40 by default) by 10. The ratio is the share of
        // physical memory that messages are assumed to stay in; older messages count as swapped out of memory
        if (BrokerRole.SLAVE == messageStoreConfig.getBrokerRole()) {
            int ratio = messageStoreConfig.getAccessMessageInMemoryMaxRatio() - 10;
            messageStoreConfig.setAccessMessageInMemoryMaxRatio(ratio);
        }

        // Read and parse the configuration file passed with -c
        if (commandLine.hasOption('c')) {
            String file = commandLine.getOptionValue('c');
            if (file != null) {
                configFile = file;
                InputStream in = new BufferedInputStream(new FileInputStream(file));
                properties = new Properties();
                properties.load(in);

                properties2SystemEnv(properties);
                MixAll.properties2Object(properties, brokerConfig);
                MixAll.properties2Object(properties, nettyServerConfig);
                MixAll.properties2Object(properties, nettyClientConfig);
                MixAll.properties2Object(properties, messageStoreConfig);

                BrokerPathConfigHelper.setBrokerConfigPath(file);
                in.close();
            }
        }

        // Command-line options are applied last, so they override the file for brokerConfig
        MixAll.properties2Object(ServerUtil.commandLine2Properties(commandLine), brokerConfig);

        // Check the ROCKETMQ_HOME environment variable; exit if it is not set
        if (null == brokerConfig.getRocketmqHome()) {
            System.out.printf("Please set the %s variable in your environment to match the location of the RocketMQ installation", MixAll.ROCKETMQ_HOME_ENV);
            System.exit(-2);
        }

        // Validate the NameServer addresses. NameServers may form a cluster,
        // so the value is split on ';' into several addresses
        String namesrvAddr = brokerConfig.getNamesrvAddr();
        if (null != namesrvAddr) {
            try {
                String[] addrArray = namesrvAddr.split(";");
                for (String addr : addrArray) {
                    RemotingUtil.string2SocketAddress(addr);
                }
            } catch (Exception e) {
                System.out.printf(
                    "The Name Server Address[%s] illegal, please set it as follows, \"127.0.0.1:9876;192.168.0.1:9876\"%n",
                    namesrvAddr);
                System.exit(-3);
            }
        }

        // Handle the broker role. A master replicates to its slaves in one of two ways
        switch (messageStoreConfig.getBrokerRole()) {
            // Asynchronous replication: after the producer writes a message to the master, the master replies
            // without waiting for the slave; a background thread copies the message to the slave
            case ASYNC_MASTER:
            /* Synchronous replication works like a synchronous double write: after writing the message,
               the master waits until a slave has copied it before replying.
               Only one slave has to copy it and acknowledge. With three slaves, a SEND_OK reply means the
               message has reached the master and one slave. It does not mean the message is on disk, only
               that it is in the page cache; whether it gets flushed depends on the flush strategy (synchronous
               or asynchronous). Nothing is guaranteed for the other two slaves, and this is not configurable:
               policies such as "wait for more than half of the slaves" are not supported. */
            case SYNC_MASTER:
                brokerConfig.setBrokerId(MixAll.MASTER_ID);
                break;
            /*
             * With synchronous replication, besides SEND_OK a send can also return:
             * FLUSH_SLAVE_TIMEOUT: the master waited for the slave to report its replication progress,
             *   but the wait timed out.
             * SLAVE_NOT_AVAILABLE: no slave is currently available. A slave that lags too far behind the
             *   master is also treated as unavailable until it catches up; the threshold is the
             *   haSlaveFallbehindMax setting (1024 * 1024 * 256 by default).
             */
            case SLAVE:
                if (brokerConfig.getBrokerId() <= 0) {
                    System.out.printf("Slave's brokerId must be > 0");
                    System.exit(-3);
                }
                break;
            default:
                break;
        }

        // If DLedger manages master-slave replication and the CommitLog, the broker id is set to -1
        if (messageStoreConfig.isEnableDLegerCommitLog()) {
            brokerConfig.setBrokerId(-1);
        }

        // HA (master-slave replication) listening port: listen port + 1
        messageStoreConfig.setHaListenPort(nettyServerConfig.getListenPort() + 1);

        // Logging setup
        LoggerContext lc = (LoggerContext) LoggerFactory.getILoggerFactory();
        JoranConfigurator configurator = new JoranConfigurator();
        configurator.setContext(lc);
        lc.reset();
        configurator.doConfigure(brokerConfig.getRocketmqHome() + "/conf/logback_broker.xml");

        // -p prints every configuration item and exits; -m prints only the important ones and exits
        if (commandLine.hasOption('p')) {
            InternalLogger console = InternalLoggerFactory.getLogger(LoggerName.BROKER_CONSOLE_NAME);
            MixAll.printObjectProperties(console, brokerConfig);
            MixAll.printObjectProperties(console, nettyServerConfig);
            MixAll.printObjectProperties(console, nettyClientConfig);
            MixAll.printObjectProperties(console, messageStoreConfig);
            System.exit(0);
        } else if (commandLine.hasOption('m')) {
            InternalLogger console = InternalLoggerFactory.getLogger(LoggerName.BROKER_CONSOLE_NAME);
            MixAll.printObjectProperties(console, brokerConfig, true);
            MixAll.printObjectProperties(console, nettyServerConfig, true);
            MixAll.printObjectProperties(console, nettyClientConfig, true);
            MixAll.printObjectProperties(console, messageStoreConfig, true);
            System.exit(0);
        }

        // Write the effective configuration to the broker log
        log = InternalLoggerFactory.getLogger(LoggerName.BROKER_LOGGER_NAME);
        MixAll.printObjectProperties(log, brokerConfig);
        MixAll.printObjectProperties(log, nettyServerConfig);
        MixAll.printObjectProperties(log, nettyClientConfig);
        MixAll.printObjectProperties(log, messageStoreConfig);

        // Create the BrokerController from the configuration above
        final BrokerController controller = new BrokerController(
            brokerConfig,
            nettyServerConfig,
            nettyClientConfig,
            messageStoreConfig);
        // remember all configs to prevent discard
        controller.getConfiguration().registerConfig(properties);

        // Initialize the BrokerController; exit if initialization fails
        boolean initResult = controller.initialize();
        if (!initResult) {
            controller.shutdown();
            System.exit(-3);
        }

        // JVM shutdown hook: shut the controller down once and log how long it took
        Runtime.getRuntime().addShutdownHook(new Thread(new Runnable() {
            private volatile boolean hasShutdown = false;
            private AtomicInteger shutdownTimes = new AtomicInteger(0);

            @Override
            public void run() {
                synchronized (this) {
                    log.info("Shutdown hook was invoked, {}", this.shutdownTimes.incrementAndGet());
                    if (!this.hasShutdown) {
                        this.hasShutdown = true;
                        long beginTime = System.currentTimeMillis();
                        controller.shutdown();
                        long consumingTimeTotal = System.currentTimeMillis() - beginTime;
                        log.info("Shutdown hook over, consuming total time(ms): {}", consumingTimeTotal);
                    }
                }
            }
        }, "ShutdownHook"));

        return controller;
    } catch (Throwable e) {
        e.printStackTrace();
        System.exit(-1);
    }

    return null;
}
```
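Two of the validation steps above are easy to reason about in isolation: the broker id that follows from the role (a master is forced to MixAll.MASTER_ID, which is 0; a slave must already have an id greater than 0; DLedger then overrides the id with -1), and the NameServer list split on ';'. The sketch below is hypothetical code, not RocketMQ's: the class and method names are made up, parseNamesrvAddr is only a simplified stand-in for RemotingUtil.string2SocketAddress, and both methods throw instead of calling System.exit.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper mirroring two checks in createBrokerController()
final class StartupChecks {
    // Mirrors the BrokerRole values handled by the switch
    enum Role { ASYNC_MASTER, SYNC_MASTER, SLAVE }

    static final long MASTER_ID = 0L; // value of MixAll.MASTER_ID

    // Returns the effective broker id, or throws where the original code would exit
    static long effectiveBrokerId(Role role, long configuredId, boolean dledgerEnabled) {
        long id;
        switch (role) {
            case ASYNC_MASTER:
            case SYNC_MASTER:
                id = MASTER_ID; // masters always get id 0
                break;
            case SLAVE:
                if (configuredId <= 0) {
                    throw new IllegalArgumentException("Slave's brokerId must be > 0");
                }
                id = configuredId;
                break;
            default:
                id = configuredId;
        }
        // With DLedger enabled the static id is replaced by -1, as in the original
        return dledgerEnabled ? -1L : id;
    }

    // Splits "host:port;host:port" and checks each entry (simplified, not string2SocketAddress)
    static List<String> parseNamesrvAddr(String namesrvAddr) {
        List<String> addrs = new ArrayList<>();
        for (String addr : namesrvAddr.split(";")) {
            int colon = addr.lastIndexOf(':');
            if (colon <= 0 || colon == addr.length() - 1) {
                throw new IllegalArgumentException("illegal address: " + addr);
            }
            // NumberFormatException is a subclass of IllegalArgumentException
            int port = Integer.parseInt(addr.substring(colon + 1));
            if (port < 0 || port > 65535) {
                throw new IllegalArgumentException("port out of range: " + addr);
            }
            addrs.add(addr);
        }
        return addrs;
    }
}
```

For example, StartupChecks.parseNamesrvAddr("127.0.0.1:9876;192.168.0.1:9876") returns both addresses, while a bare "127.0.0.1" is rejected, which matches the format suggested by the error message in the original code.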

createBrokerController() mainly reads the configuration files and, based on them, sets role-dependent values such as the broker id and the HA port. The core call in it is:

```java
boolean initResult = controller.initialize();
```

Let's step into it.

The code is very long, so here is a summary first. initialize() loads persisted metadata from disk (topics, consumer offsets, subscription groups, consumer filters), creates the message store, creates the Netty servers and a set of thread pools, and schedules periodic tasks that persist in-memory state to disk. Don't get bogged down in the details: trying to understand every line of the source is too much, so we focus on the core parts. I have added comments to initialize() as well.
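One detail in initialize() that is easy to miss is the port layout. It clones the server config for fastRemotingServer with listenPort - 2, while createBrokerController() has already set the HA port to listenPort + 1. The tiny class below is hypothetical and only spells out that arithmetic for the default listen port of 10911.

```java
// Hypothetical value class: the three ports a broker derives from nettyServerConfig's listenPort
final class BrokerPorts {
    final int listenPort;     // main remoting port (setListenPort(10911) by default)
    final int haListenPort;   // master-slave HA port, set in createBrokerController(): listenPort + 1
    final int fastListenPort; // fastRemotingServer port, set in initialize(): listenPort - 2

    BrokerPorts(int listenPort) {
        this.listenPort = listenPort;
        this.haListenPort = listenPort + 1;
        this.fastListenPort = listenPort - 2;
    }
}
```

With the defaults this gives 10911 for the main server, 10912 for HA and 10909 for the fast server, which is worth knowing when opening firewall ports.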

```java
public boolean initialize() throws CloneNotSupportedException {
    // Load topic configuration from disk
    boolean result = this.topicConfigManager.load();
    // Load consumer offsets
    result = result && this.consumerOffsetManager.load();
    // Load subscription group (consumer group) configuration
    result = result && this.subscriptionGroupManager.load();
    // Load consumer filter configuration
    result = result && this.consumerFilterManager.load();

    if (result) {
        try {
            // Create the message store; by default messages on disk are managed through the CommitLog
            this.messageStore =
                new DefaultMessageStore(this.messageStoreConfig, this.brokerStatsManager, this.messageArrivingListener,
                    this.brokerConfig);
            // If DLedger manages the CommitLog, register a handler for role changes
            if (messageStoreConfig.isEnableDLegerCommitLog()) {
                DLedgerRoleChangeHandler roleChangeHandler = new DLedgerRoleChangeHandler(this, (DefaultMessageStore) messageStore);
                ((DLedgerCommitLog)((DefaultMessageStore) messageStore).getCommitLog()).getdLedgerServer().getdLedgerLeaderElector().addRoleChangeHandler(roleChangeHandler);
            }
            this.brokerStats = new BrokerStats((DefaultMessageStore) this.messageStore);
            //load plugin
            MessageStorePluginContext context = new MessageStorePluginContext(messageStoreConfig, brokerStatsManager, messageArrivingListener, brokerConfig);
            this.messageStore = MessageStoreFactory.build(context, this.messageStore);
            this.messageStore.getDispatcherList().addFirst(new CommitLogDispatcherCalcBitMap(this.brokerConfig, this.consumerFilterManager));
        } catch (IOException e) {
            result = false;
            log.error("Failed to initialize", e);
        }
    }

    result = result && this.messageStore.load();

    if (result) {
        // Create the Netty servers: the main one and a "fast" one on listenPort - 2
        this.remotingServer = new NettyRemotingServer(this.nettyServerConfig, this.clientHousekeepingService);
        NettyServerConfig fastConfig = (NettyServerConfig) this.nettyServerConfig.clone();
        fastConfig.setListenPort(nettyServerConfig.getListenPort() - 2);
        this.fastRemotingServer = new NettyRemotingServer(fastConfig, this.clientHousekeepingService);

        // One thread pool per request type
        this.sendMessageExecutor = new BrokerFixedThreadPoolExecutor(
            this.brokerConfig.getSendMessageThreadPoolNums(),
            this.brokerConfig.getSendMessageThreadPoolNums(),
            1000 * 60,
            TimeUnit.MILLISECONDS,
            this.sendThreadPoolQueue,
            new ThreadFactoryImpl("SendMessageThread_"));

        this.pullMessageExecutor = new BrokerFixedThreadPoolExecutor(
            this.brokerConfig.getPullMessageThreadPoolNums(),
            this.brokerConfig.getPullMessageThreadPoolNums(),
            1000 * 60,
            TimeUnit.MILLISECONDS,
            this.pullThreadPoolQueue,
            new ThreadFactoryImpl("PullMessageThread_"));

        this.replyMessageExecutor = new BrokerFixedThreadPoolExecutor(
            this.brokerConfig.getProcessReplyMessageThreadPoolNums(),
            this.brokerConfig.getProcessReplyMessageThreadPoolNums(),
            1000 * 60,
            TimeUnit.MILLISECONDS,
            this.replyThreadPoolQueue,
            new ThreadFactoryImpl("ProcessReplyMessageThread_"));

        this.queryMessageExecutor = new BrokerFixedThreadPoolExecutor(
            this.brokerConfig.getQueryMessageThreadPoolNums(),
            this.brokerConfig.getQueryMessageThreadPoolNums(),
            1000 * 60,
            TimeUnit.MILLISECONDS,
            this.queryThreadPoolQueue,
            new ThreadFactoryImpl("QueryMessageThread_"));

        this.adminBrokerExecutor =
            Executors.newFixedThreadPool(this.brokerConfig.getAdminBrokerThreadPoolNums(), new ThreadFactoryImpl(
                "AdminBrokerThread_"));

        this.clientManageExecutor = new ThreadPoolExecutor(
            this.brokerConfig.getClientManageThreadPoolNums(),
            this.brokerConfig.getClientManageThreadPoolNums(),
            1000 * 60,
            TimeUnit.MILLISECONDS,
            this.clientManagerThreadPoolQueue,
            new ThreadFactoryImpl("ClientManageThread_"));

        this.heartbeatExecutor = new BrokerFixedThreadPoolExecutor(
            this.brokerConfig.getHeartbeatThreadPoolNums(),
            this.brokerConfig.getHeartbeatThreadPoolNums(),
            1000 * 60,
            TimeUnit.MILLISECONDS,
            this.heartbeatThreadPoolQueue,
            new ThreadFactoryImpl("HeartbeatThread_", true));

        this.endTransactionExecutor = new BrokerFixedThreadPoolExecutor(
            this.brokerConfig.getEndTransactionThreadPoolNums(),
            this.brokerConfig.getEndTransactionThreadPoolNums(),
            1000 * 60,
            TimeUnit.MILLISECONDS,
            this.endTransactionThreadPoolQueue,
            new ThreadFactoryImpl("EndTransactionThread_"));

        this.consumerManageExecutor =
            Executors.newFixedThreadPool(this.brokerConfig.getConsumerManageThreadPoolNums(), new ThreadFactoryImpl(
                "ConsumerManageThread_"));

        // Register the request processors together with their thread pools
        this.registerProcessor();

        final long initialDelay = UtilAll.computeNextMorningTimeMillis() - System.currentTimeMillis();
        final long period = 1000 * 60 * 60 * 24;
        // Record broker statistics once a day (every 86400 s), starting at the next midnight
        this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {
            @Override
            public void run() {
                try {
                    BrokerController.this.getBrokerStats().record();
                } catch (Throwable e) {
                    log.error("schedule record error.", e);
                }
            }
        }, initialDelay, period, TimeUnit.MILLISECONDS);

        // Persist consumer offsets: first after 10 s, then every flushConsumerOffsetInterval ms (5 s by default)
        this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {
            @Override
            public void run() {
                try {
                    BrokerController.this.consumerOffsetManager.persist();
                } catch (Throwable e) {
                    log.error("schedule persist consumerOffset error.", e);
                }
            }
        }, 1000 * 10, this.brokerConfig.getFlushConsumerOffsetInterval(), TimeUnit.MILLISECONDS);

        // Persist consumer filters every 10 s
        this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {
            @Override
            public void run() {
                try {
                    BrokerController.this.consumerFilterManager.persist();
                } catch (Throwable e) {
                    log.error("schedule persist consumer filter error.", e);
                }
            }
        }, 1000 * 10, 1000 * 10, TimeUnit.MILLISECONDS);

        // Run protectBroker() every 3 minutes
        this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {
            @Override
            public void run() {
                try {
                    BrokerController.this.protectBroker();
                } catch (Throwable e) {
                    log.error("protectBroker error.", e);
                }
            }
        }, 3, 3, TimeUnit.MINUTES);

        // Log the thread-pool queue water marks every second (first after 10 s)
        this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {
            @Override
            public void run() {
                try {
                    BrokerController.this.printWaterMark();
                } catch (Throwable e) {
                    log.error("printWaterMark error.", e);
                }
            }
        }, 10, 1, TimeUnit.SECONDS);

        // Log every minute how many CommitLog bytes have not been dispatched yet
        this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {
            @Override
            public void run() {
                try {
                    log.info("dispatch behind commit log {} bytes", BrokerController.this.getMessageStore().dispatchBehindBytes());
                } catch (Throwable e) {
                    log.error("schedule dispatchBehindBytes error.", e);
                }
            }
        }, 1000 * 10, 1000 * 60, TimeUnit.MILLISECONDS);

        // Use the configured NameServer addresses, or fetch them from an address server every 2 minutes
        if (this.brokerConfig.getNamesrvAddr() != null) {
            this.brokerOuterAPI.updateNameServerAddressList(this.brokerConfig.getNamesrvAddr());
            log.info("Set user specified name server address: {}", this.brokerConfig.getNamesrvAddr());
        } else if (this.brokerConfig.isFetchNamesrvAddrByAddressServer()) {
            this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {
                @Override
                public void run() {
                    try {
                        BrokerController.this.brokerOuterAPI.fetchNameServerAddr();
                    } catch (Throwable e) {
                        log.error("ScheduledTask fetchNameServerAddr exception", e);
                    }
                }
            }, 1000 * 10, 1000 * 60 * 2, TimeUnit.MILLISECONDS);
        }

        // Without DLedger: a slave uses the configured master HA address if there is one, otherwise it
        // picks it up from the registration result; a master logs the master/slave gap every minute
        if (!messageStoreConfig.isEnableDLegerCommitLog()) {
            if (BrokerRole.SLAVE == this.messageStoreConfig.getBrokerRole()) {
                if (this.messageStoreConfig.getHaMasterAddress() != null && this.messageStoreConfig.getHaMasterAddress().length() >= 6) {
                    this.messageStore.updateHaMasterAddress(this.messageStoreConfig.getHaMasterAddress());
                    this.updateMasterHAServerAddrPeriodically = false;
                } else {
                    this.updateMasterHAServerAddrPeriodically = true;
                }
            } else {
                this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {
                    @Override
                    public void run() {
                        try {
                            BrokerController.this.printMasterAndSlaveDiff();
                        } catch (Throwable e) {
                            log.error("schedule printMasterAndSlaveDiff error.", e);
                        }
                    }
                }, 1000 * 10, 1000 * 60, TimeUnit.MILLISECONDS);
            }
        }

        if (TlsSystemConfig.tlsMode != TlsMode.DISABLED) {
            // Register a listener to reload SslContext
            try {
                fileWatchService = new FileWatchService(
                    new String[] {
                        TlsSystemConfig.tlsServerCertPath,
                        TlsSystemConfig.tlsServerKeyPath,
                        TlsSystemConfig.tlsServerTrustCertPath
                    },
                    new FileWatchService.Listener() {
                        boolean certChanged, keyChanged = false;

                        @Override
                        public void onChanged(String path) {
                            if (path.equals(TlsSystemConfig.tlsServerTrustCertPath)) {
                                log.info("The trust certificate changed, reload the ssl context");
                                reloadServerSslContext();
                            }
                            if (path.equals(TlsSystemConfig.tlsServerCertPath)) {
                                certChanged = true;
                            }
                            if (path.equals(TlsSystemConfig.tlsServerKeyPath)) {
                                keyChanged = true;
                            }
                            if (certChanged && keyChanged) {
                                log.info("The certificate and private key changed, reload the ssl context");
                                certChanged = keyChanged = false;
                                reloadServerSslContext();
                            }
                        }

                        private void reloadServerSslContext() {
                            ((NettyRemotingServer) remotingServer).loadSslContext();
                            ((NettyRemotingServer) fastRemotingServer).loadSslContext();
                        }
                    });
            } catch (Exception e) {
                log.warn("FileWatchService created error, can't load the certificate dynamically");
            }
        }

        // Transactional messages, ACL and RPC hooks
        initialTransaction();
        initialAcl();
        initialRpcHooks();
    }

    return result;
}
```
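Every scheduled task in initialize() follows the same shape: scheduleAtFixedRate with a try/catch around the body. The catch is not decoration: according to the ScheduledExecutorService Javadoc, if one execution of a periodic task throws, all later executions are suppressed. The first task also starts at the next midnight, using UtilAll.computeNextMorningTimeMillis(). The sketch below reproduces that pattern with java.time; the class and both methods are hypothetical and are not RocketMQ's implementation.

```java
import java.time.Instant;
import java.time.ZoneId;
import java.time.ZonedDateTime;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;

// Hypothetical helper: run a task once a day, starting at the next local midnight
final class DailyTask {
    static final long ONE_DAY_MS = 1000L * 60 * 60 * 24;

    // Milliseconds from nowMillis until the next local midnight in the given zone
    static long millisUntilNextMidnight(long nowMillis, ZoneId zone) {
        ZonedDateTime now = Instant.ofEpochMilli(nowMillis).atZone(zone);
        ZonedDateTime nextMidnight = now.toLocalDate().plusDays(1).atStartOfDay(zone);
        return nextMidnight.toInstant().toEpochMilli() - nowMillis;
    }

    static ScheduledFuture<?> scheduleDaily(ScheduledExecutorService ses, Runnable task, ZoneId zone) {
        long initialDelay = millisUntilNextMidnight(System.currentTimeMillis(), zone);
        return ses.scheduleAtFixedRate(() -> {
            try {
                task.run();
            } catch (Throwable t) {
                // Catch everything: an escaping exception would cancel all future runs
                System.err.println("daily task failed: " + t);
            }
        }, initialDelay, ONE_DAY_MS, TimeUnit.MILLISECONDS);
    }
}
```

Like the original, the sketch uses a fixed 24-hour period, so after a daylight-saving change the runs drift by an hour from midnight; for statistics recording that is usually acceptable.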

How the Broker registers with the NameServer

A key question is how the Broker registers itself with the NameServer, so let's look at that code.

After the BrokerController is created, its start() method is called.

Inside start() there is a scheduled task:

```java
// Register with all NameServers: first after 10 s, then every registerNameServerPeriod ms,
// clamped to the range [10 s, 60 s]
this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {
    @Override
    public void run() {
        try {
            BrokerController.this.registerBrokerAll(true, false, brokerConfig.isForceRegister());
        } catch (Throwable e) {
            log.error("registerBrokerAll Exception", e);
        }
    }
}, 1000 * 10, Math.max(10000, Math.min(brokerConfig.getRegisterNameServerPeriod(), 60000)), TimeUnit.MILLISECONDS);
```
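The period expression clamps the configured registerNameServerPeriod into the range 10 s to 60 s, so a misconfigured value can neither flood the NameServers nor let the registration go stale (as far as I know, the 4.x NameServer drops a broker that has not re-registered for two minutes, so 60 s leaves room for one missed round). A minimal sketch of the same clamp; the class name is hypothetical:

```java
// Hypothetical helper reproducing Math.max(10000, Math.min(period, 60000)) from start()
final class RegisterPeriod {
    static final long MIN_PERIOD_MS = 10_000L; // never register more often than every 10 s
    static final long MAX_PERIOD_MS = 60_000L; // never wait longer than 60 s between registrations

    static long effectivePeriodMillis(long configuredPeriodMs) {
        return Math.max(MIN_PERIOD_MS, Math.min(configuredPeriodMs, MAX_PERIOD_MS));
    }
}
```

So a configured 5 000 ms becomes 10 000 ms, 30 000 ms is used as is, and 120 000 ms is cut down to 60 000 ms.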

registerBrokerAll

Next, step into registerBrokerAll:

```java
BrokerController.this.registerBrokerAll(true, false, brokerConfig.isForceRegister());

public synchronized void registerBrokerAll(final boolean checkOrderConfig, boolean oneway, boolean forceRegister) {
    // Topic configuration to report to the NameServers
    TopicConfigSerializeWrapper topicConfigWrapper = this.getTopicConfigManager().buildTopicConfigSerializeWrapper();

    // If the broker is not both writable and readable, report every topic with the broker's permission
    if (!PermName.isWriteable(this.getBrokerConfig().getBrokerPermission())
        || !PermName.isReadable(this.getBrokerConfig().getBrokerPermission())) {
        ConcurrentHashMap<String, TopicConfig> topicConfigTable = new ConcurrentHashMap<String, TopicConfig>();
        for (TopicConfig topicConfig : topicConfigWrapper.getTopicConfigTable().values()) {
            TopicConfig tmp =
                new TopicConfig(topicConfig.getTopicName(), topicConfig.getReadQueueNums(), topicConfig.getWriteQueueNums(),
                    this.brokerConfig.getBrokerPermission());
            topicConfigTable.put(topicConfig.getTopicName(), tmp);
        }
        topicConfigWrapper.setTopicConfigTable(topicConfigTable);
    }

    // Decide whether the broker needs to (re)register with the NameServers
    if (forceRegister || needRegister(this.brokerConfig.getBrokerClusterName(),
        this.getBrokerAddr(),
        this.brokerConfig.getBrokerName(),
        this.brokerConfig.getBrokerId(),
        this.brokerConfig.getRegisterBrokerTimeoutMills())) {
        // The actual registration entry point
        doRegisterBrokerAll(checkOrderConfig, oneway, topicConfigWrapper);
    }
}
```
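The permission block only kicks in when the broker itself is not both readable and writable (for example, when write permission is taken away to drain a broker before taking it offline). In that case every topic is reported with the broker's permission instead of its own. The sketch below models this with plain bit flags; the constant values are my assumption that they match RocketMQ's PermName (read = 4, write = 2), and the class is hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical model of the topic-permission override in registerBrokerAll()
final class TopicPermOverride {
    // Assumed to mirror PermName.PERM_WRITE and PermName.PERM_READ
    static final int PERM_WRITE = 1 << 1;
    static final int PERM_READ = 1 << 2;

    static boolean isReadable(int perm) { return (perm & PERM_READ) == PERM_READ; }
    static boolean isWriteable(int perm) { return (perm & PERM_WRITE) == PERM_WRITE; }

    // Returns the permission each topic is reported with to the NameServer
    static Map<String, Integer> reportedPerms(Map<String, Integer> topicPerms, int brokerPerm) {
        if (isReadable(brokerPerm) && isWriteable(brokerPerm)) {
            return new HashMap<>(topicPerms); // broker fully open: topics keep their own permission
        }
        Map<String, Integer> reported = new HashMap<>();
        for (String topic : topicPerms.keySet()) {
            reported.put(topic, brokerPerm); // broker-level restriction replaces each topic's permission
        }
        return reported;
    }
}
```

With a read-only broker (permission 4), a topic configured as read-write (6) is reported as 4, so producers stop routing new messages to this broker while consumers can still drain it.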

doRegisterBrokerAll

Now step into doRegisterBrokerAll, which holds the core logic for registering the Broker with the NameServers. The Netty network calls are wrapped in brokerOuterAPI.registerBrokerAll(); after reading this method, we will look at that one.

```java
private void doRegisterBrokerAll(boolean checkOrderConfig, boolean oneway,
    TopicConfigSerializeWrapper topicConfigWrapper) {
    // Register the Broker with every NameServer. The cluster may have several NameServers,
    // so the result is a List
    List<RegisterBrokerResult> registerBrokerResultList = this.brokerOuterAPI.registerBrokerAll(
        this.brokerConfig.getBrokerClusterName(),
        this.getBrokerAddr(),
        this.brokerConfig.getBrokerName(),
        this.brokerConfig.getBrokerId(),
        this.getHAServerAddr(),
        topicConfigWrapper,
        this.filterServerManager.buildNewFilterServerList(),
        oneway,
        this.brokerConfig.getRegisterBrokerTimeoutMills(),
        this.brokerConfig.isCompressedRegister());

    // If at least one registration returned a result, process the first one
    if (registerBrokerResultList.size() > 0) {
        RegisterBrokerResult registerBrokerResult = registerBrokerResultList.get(0);
        if (registerBrokerResult != null) {
            if (this.updateMasterHAServerAddrPeriodically && registerBrokerResult.getHaServerAddr() != null) {
                this.messageStore.updateHaMasterAddress(registerBrokerResult.getHaServerAddr());
            }

            this.slaveSynchronize.setMasterAddr(registerBrokerResult.getMasterAddr());

            if (checkOrderConfig) {
                this.getTopicConfigManager().updateOrderTopicConfig(registerBrokerResult.getKvTable());
            }
        }
    }
}
```

BrokerOuterAPI.registerBrokerAll()

Here is the registerBrokerAll() method of BrokerOuterAPI:

```java
public List<RegisterBrokerResult> registerBrokerAll(
    final String clusterName,
    final String brokerAddr,
    final String brokerName,
    final long brokerId,
    final String haServerAddr,
    final TopicConfigSerializeWrapper topicConfigWrapper,
    final List<String> filterServerList,
    final boolean oneway,
    final int timeoutMills,
    final boolean compressed) {

    // Collects the result of each remote registration
    final List<RegisterBrokerResult> registerBrokerResultList = new CopyOnWriteArrayList<>();
    // Addresses of the NameServers to register with
    List<String> nameServerAddressList = this.remotingClient.getNameServerAddressList();
    if (nameServerAddressList != null && nameServerAddressList.size() > 0) {

        // Build the request header
        final RegisterBrokerRequestHeader requestHeader = new RegisterBrokerRequestHeader();
        requestHeader.setBrokerAddr(brokerAddr);
        requestHeader.setBrokerId(brokerId);
        requestHeader.setBrokerName(brokerName);
        requestHeader.setClusterName(clusterName);
        requestHeader.setHaServerAddr(haServerAddr);
        requestHeader.setCompressed(compressed);

        // Build the request body; its CRC32 travels in the header
        RegisterBrokerBody requestBody = new RegisterBrokerBody();
        requestBody.setTopicConfigSerializeWrapper(topicConfigWrapper);
        requestBody.setFilterServerList(filterServerList);
        final byte[] body = requestBody.encode(compressed);
        final int bodyCrc32 = UtilAll.crc32(body);
        requestHeader.setBodyCrc32(bodyCrc32);

        // Register with all NameServers in parallel
        final CountDownLatch countDownLatch = new CountDownLatch(nameServerAddressList.size());
        for (final String namesrvAddr : nameServerAddressList) {
            // Dedicated thread pool for outgoing broker requests
            brokerOuterExecutor.execute(() -> {
                try {
                    RegisterBrokerResult result = registerBroker(namesrvAddr, oneway, timeoutMills, requestHeader, body);
                    if (result != null) {
                        registerBrokerResultList.add(result);
                    }

                    log.info("register broker[{}]to name server {} OK", brokerId, namesrvAddr);
                } catch (Exception e) {
                    log.warn("registerBroker Exception, {}", namesrvAddr, e);
                } finally {
                    countDownLatch.countDown();
                }
            });
        }

        // Wait for all registrations, but no longer than timeoutMills
        try {
            countDownLatch.await(timeoutMills, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
        }
    }

    return registerBrokerResultList;
}
```
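registerBrokerAll() is a fan-out/fan-in: one task per NameServer on a thread pool, a thread-safe list for the results, and a CountDownLatch whose wait is bounded by the request timeout. Below is a generic sketch of that pattern, not RocketMQ code; the class is hypothetical and `registerOne` stands in for the real registerBroker() network call.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.function.Function;

// Hypothetical fan-out helper modeled on BrokerOuterAPI.registerBrokerAll()
final class FanOutRegistrar {
    static <R> List<R> registerAll(List<String> addrs, Function<String, R> registerOne,
                                   ExecutorService pool, long timeoutMs) {
        // Thread-safe, because worker threads append concurrently
        List<R> results = new CopyOnWriteArrayList<>();
        CountDownLatch latch = new CountDownLatch(addrs.size());
        for (String addr : addrs) {
            pool.execute(() -> {
                try {
                    R result = registerOne.apply(addr);
                    if (result != null) {
                        results.add(result);
                    }
                } catch (RuntimeException e) {
                    // One failing NameServer must not affect the others
                    System.err.println("register to " + addr + " failed: " + e);
                } finally {
                    latch.countDown(); // always count down, or the caller waits for the full timeout
                }
            });
        }
        try {
            latch.await(timeoutMs, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // keep the interrupt visible to the caller
        }
        return results;
    }
}
```

One deliberate difference from the original: when the waiting thread is interrupted, the sketch restores the interrupt flag instead of discarding it in an empty catch block. In both versions a registration that finishes after the timeout can still append to the returned list, which is another reason the list has to be thread-safe.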

Summary

That completes the analysis of the Broker startup process. We did not go through it line by line: for some code we don't know the business scenario behind it, so it is hard to say what it is for. Overall, we followed the core flow of the source without going deep into every detail; the goal was a general understanding of how the Broker starts. If you need to modify this code or run into related problems later, you will know where to look, and troubleshooting will be easier.