1, Automatic deployment of keepalived

Experimental environment: server1 deploys keepalived to server2/3 so that server2 is the keepalived master and server3 is the keepalived backup.

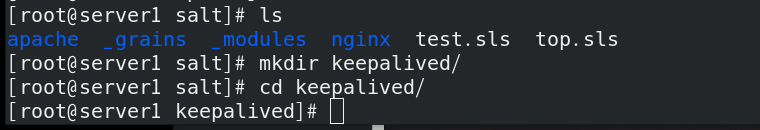

Create a keepalived directory under the salt directory

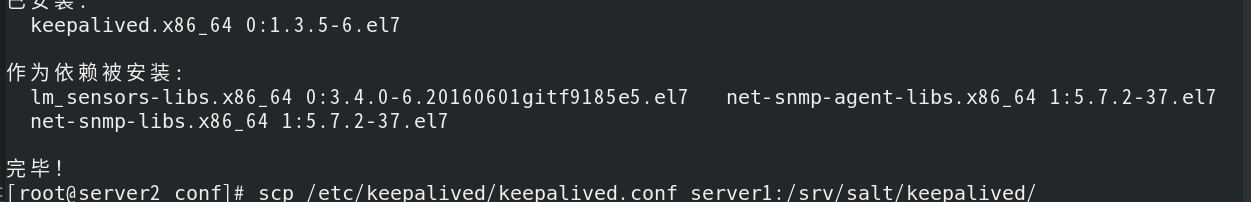

Copy the keepalived configuration file from server2 to server1

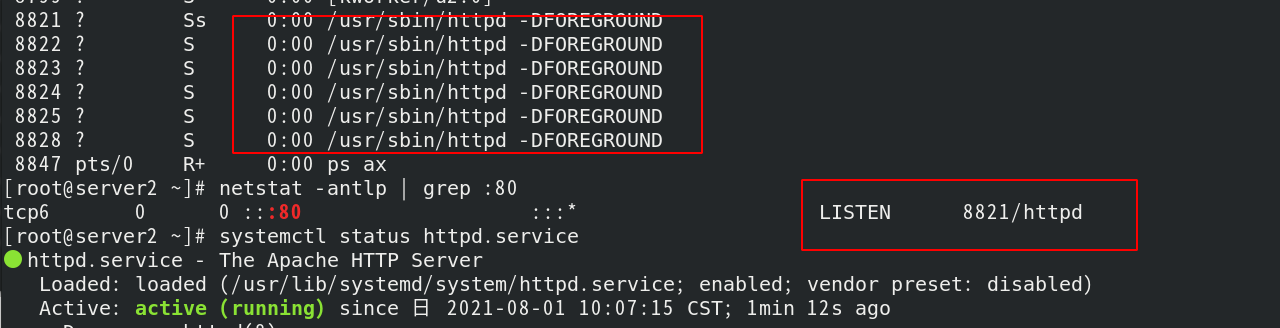

At this point, you can see that the keepalived service port on server1 is open

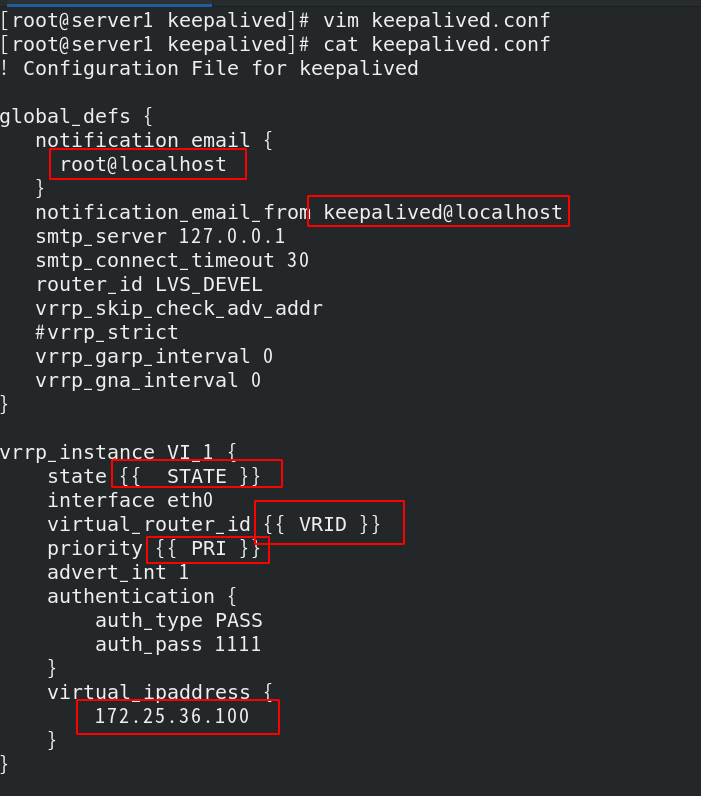

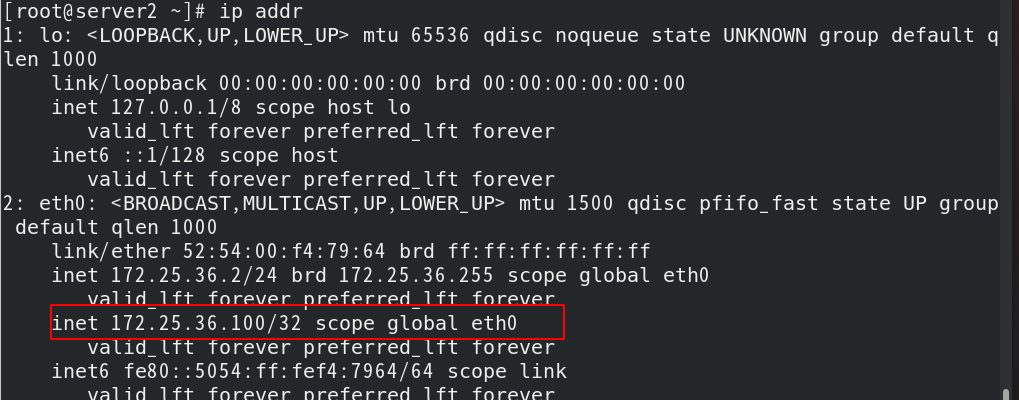

Modify the keepalived configuration file as follows; the VIP is 172.25.36.100.

Note that keepalived has its own firewall policy. If that setting is not commented out, the VIP will be inaccessible.

Because the master and backup configuration files use different parameter values, pushing to both hosts at once requires variables, so we use pillar

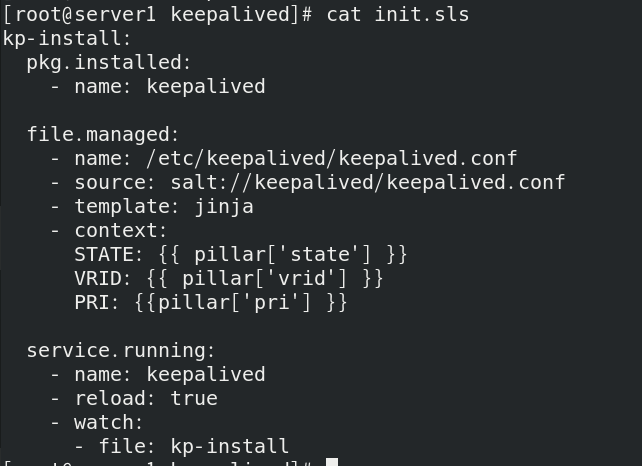

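Write the init.sls file:

- template: jinja: render the file as a Jinja template

- context: pass the following variables to the template

State: {{ pillar['state'] }}: the pillar value state is assigned to the variable State

VRID: {{ pillar['VRID'] }}: the pillar value VRID is assigned to the variable VRID

Pri: {{ pillar['pri'] }}: the pillar value pri is assigned to the variable Pri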

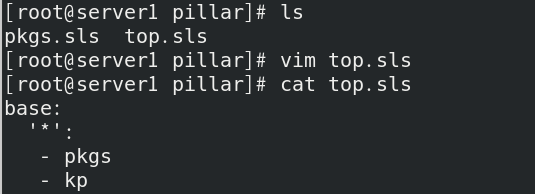
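As a rough illustration of what the template and context settings do, the sketch below fills a configuration fragment with per-host values. It is not Salt's renderer: `string.Template` from the Python standard library stands in for Jinja, the fragment is a hypothetical excerpt rather than the real file copied from server2, and the variable names `STATE`, `VRID` and `PRI` and the lowercase pillar keys are assumptions about capitalization.

```python
from string import Template

# Hypothetical excerpt of a keepalived.conf template. In the real setup Salt
# renders the file with Jinja; here "$NAME" plays the role of "{{ NAME }}".
KEEPALIVED_TEMPLATE = Template("""\
vrrp_instance VI_1 {
    state $STATE
    virtual_router_id $VRID
    priority $PRI
    virtual_ipaddress {
        172.25.36.100
    }
}
""")


def render(pillar):
    """Map pillar values onto template variables, as the context block does."""
    context = {
        "STATE": pillar["state"],
        "VRID": pillar["vrid"],
        "PRI": pillar["pri"],
    }
    return KEEPALIVED_TEMPLATE.substitute(context)


if __name__ == "__main__":
    # Values a master host would receive from pillar.
    print(render({"state": "MASTER", "vrid": 36, "pri": 100}))
```

The same template produces a different file for each host because only the context values change.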

In the /srv/pillar/top.sls file, the kp module needs to be added;

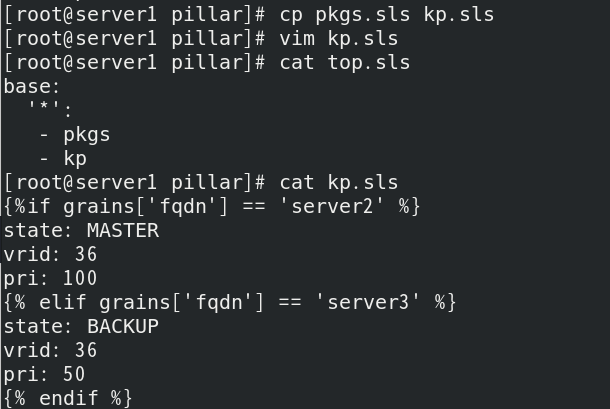

Rename the pkg.sls file to kp.sls

Write the kp.sls file: if the hostname is server2, the state is master, the vrid is 36, and the priority is 100; if the hostname is server3, the state is backup, the vrid is 36, and the priority is 50

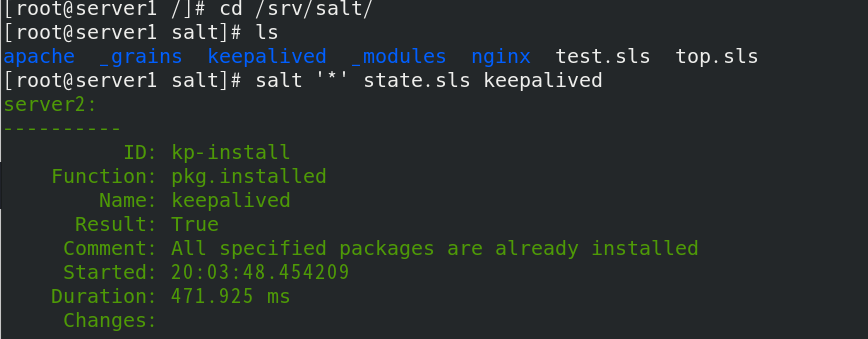

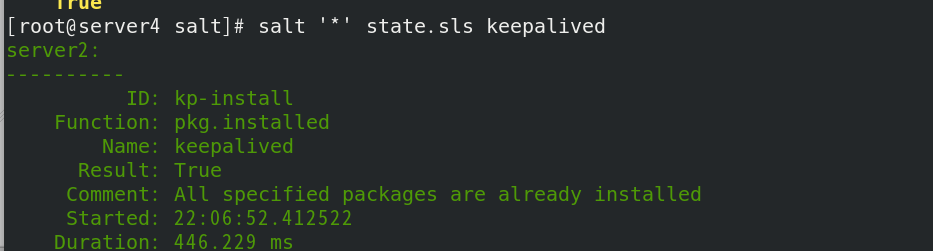
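The per-host rule in kp.sls can be sketched in plain Python as a lookup from hostname to pillar values. The function name `keepalived_pillar` and the dictionary layout are hypothetical; only the hostnames and the values come from the text above.

```python
# Pillar values per host, as described for kp.sls.
PILLAR_BY_HOST = {
    "server2": {"state": "MASTER", "vrid": 36, "pri": 100},
    "server3": {"state": "BACKUP", "vrid": 36, "pri": 50},
}


def keepalived_pillar(hostname):
    """Return the keepalived pillar values for a host, or {} if it has none."""
    return dict(PILLAR_BY_HOST.get(hostname, {}))


if __name__ == "__main__":
    for host in ("server2", "server3"):
        print(host, keepalived_pillar(host))
```

Both hosts share the same vrid, so they belong to one virtual router, while the different priorities decide which one holds the VIP.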

Push with salt '*' state.sls keepalived

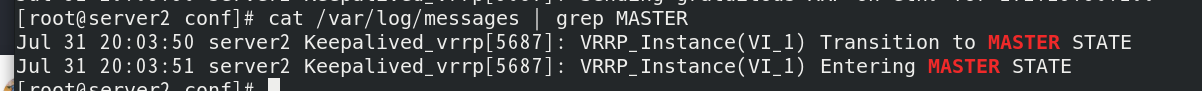

Check the minion side logs. server2 is the master and server3 is the backup

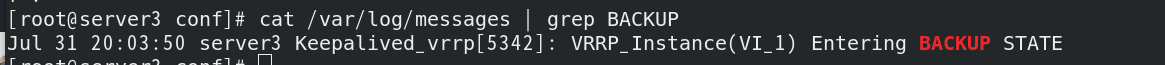

At this point, the VIP is on server2

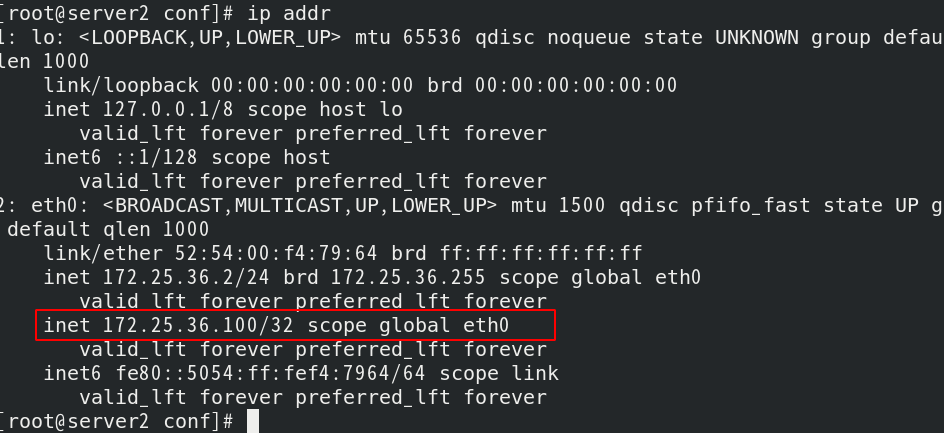

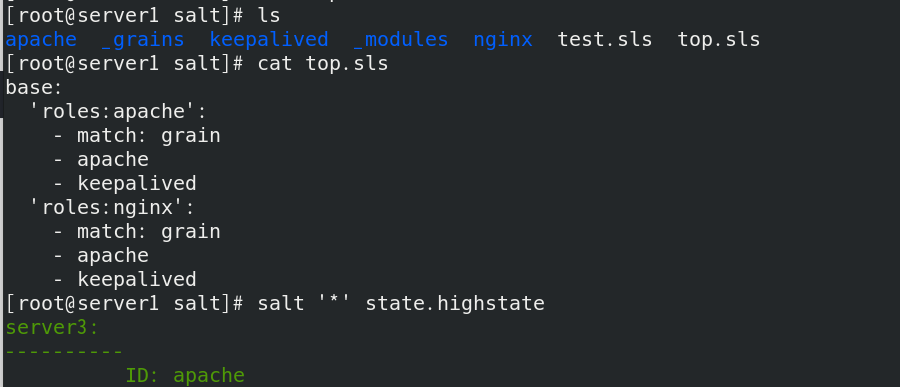

Modify the top.sls file under base: a host matched by the role apache (server3) also runs the keepalived module, and a host matched by the role nginx (server2) also runs the keepalived module.

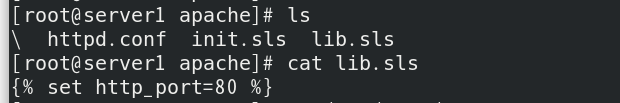

In the previously written lib.sls file, change http_port to 80

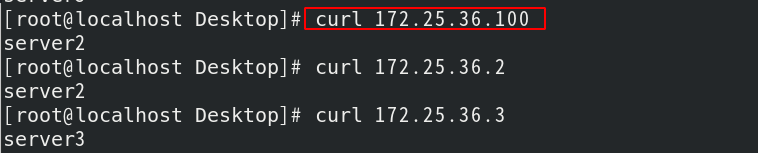

After that, push the highstate with salt '*' state.highstate; curl succeeds

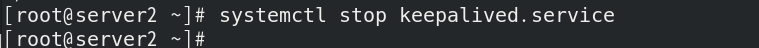

Now stop keepalived on server2 to test high availability

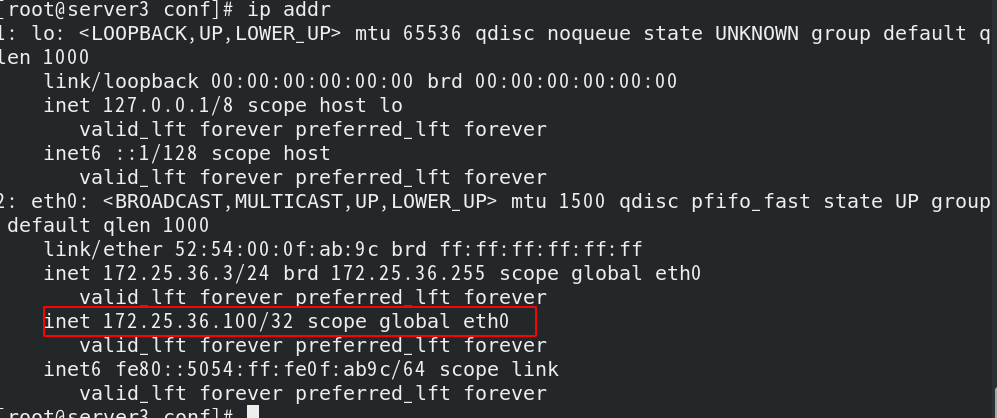

You will find that the VIP has floated to server3

Test from the physical host

Now push again

You will find that keepalived on server2 is restored to master, and the VIP floats back to server2!
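The failover behaviour observed above can be mimicked with a toy model: among the nodes whose keepalived is running, the one with the highest priority holds the VIP. This is only a sketch of the outcome, not of the VRRP protocol itself, and it assumes preemption is enabled (the keepalived default); the function and field names are hypothetical.

```python
def vip_holder(nodes):
    """Return the name of the running node with the highest priority, or None."""
    running = [node for node in nodes if node["running"]]
    if not running:
        return None
    return max(running, key=lambda node: node["priority"])["name"]


if __name__ == "__main__":
    nodes = [
        {"name": "server2", "priority": 100, "running": True},
        {"name": "server3", "priority": 50, "running": True},
    ]
    print(vip_holder(nodes))      # both running

    nodes[0]["running"] = False   # stop keepalived on server2
    print(vip_holder(nodes))

    nodes[0]["running"] = True    # push again, keepalived restarts on server2
    print(vip_holder(nodes))
```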

2, Job management

1. Job introduction:

When the master issues a task, a jid generated on the master is attached to it.

When a minion receives the instruction and starts executing it, it creates a file named after the jid in the local /var/cache/salt/minion/proc directory. The master uses this file to check the status of the task while it is running.

After the instruction has been executed and the result has been sent to the master, the temporary file is deleted.
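Since each running job leaves a file named after its jid in the proc directory, a small script can list what a minion is executing right now. The sketch below only reads file names; the function name `running_jids` is hypothetical, and the demo uses a temporary directory so that it runs on a machine without Salt.

```python
import os
import tempfile


def running_jids(proc_dir="/var/cache/salt/minion/proc"):
    """Return the jids (file names) of jobs currently recorded in proc_dir."""
    if not os.path.isdir(proc_dir):
        return []
    return sorted(
        name
        for name in os.listdir(proc_dir)
        if os.path.isfile(os.path.join(proc_dir, name))
    )


if __name__ == "__main__":
    # Simulate a minion with one job in progress.
    with tempfile.TemporaryDirectory() as proc_dir:
        open(os.path.join(proc_dir, "20240101120000123456"), "w").close()
        print(running_jids(proc_dir))
```

On a real minion the function would be called without arguments and normally returns an empty list, because the files disappear as soon as a job finishes.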

2. The job cache is kept for 24 hours by default:

vim /etc/salt/master: keep_jobs is set to 24 by default

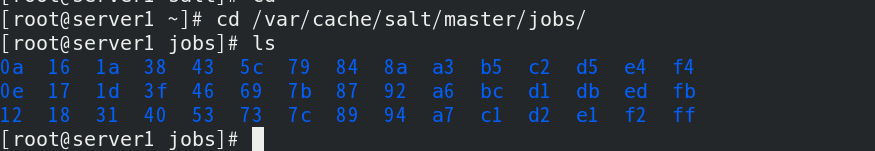

Job cache directory on the master side: /var/cache/salt/master/jobs
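To make the retention window concrete, the sketch below decides whether a cached job is older than the keep_jobs period. It assumes the jid is a 20-digit timestamp (year through microseconds), which is an assumption about the jid format and does not hold if a suffix is appended to it; the helper names are hypothetical.

```python
from datetime import datetime, timedelta

# Assumption: a jid looks like 20240101120000123456 (YYYYmmddHHMMSS + microseconds).
JID_FORMAT = "%Y%m%d%H%M%S%f"


def jid_to_datetime(jid):
    """Parse the creation time encoded in a jid."""
    return datetime.strptime(jid, JID_FORMAT)


def is_expired(jid, now, keep_jobs_hours=24):
    """Return True if the job is older than the retention window."""
    return now - jid_to_datetime(jid) > timedelta(hours=keep_jobs_hours)


if __name__ == "__main__":
    jid = "20240101120000000000"
    print(jid_to_datetime(jid))
    print(is_expired(jid, now=datetime(2024, 1, 2, 12, 0, 1)))
```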

Store jobs in a database

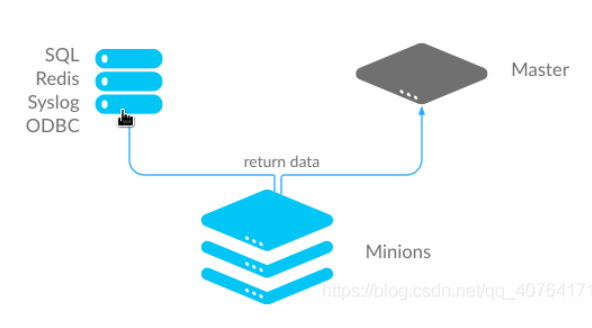

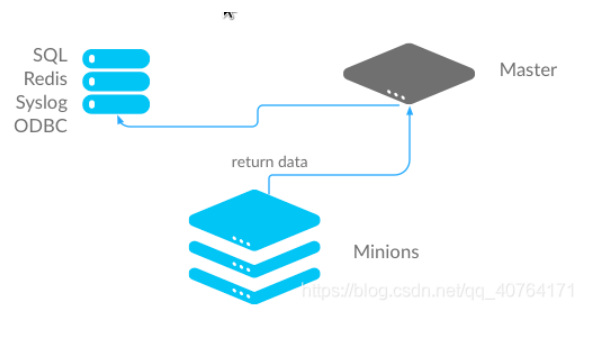

Method 1: the minion stores the data in the database. When the minion returns data to the master, it also sends a copy to the database

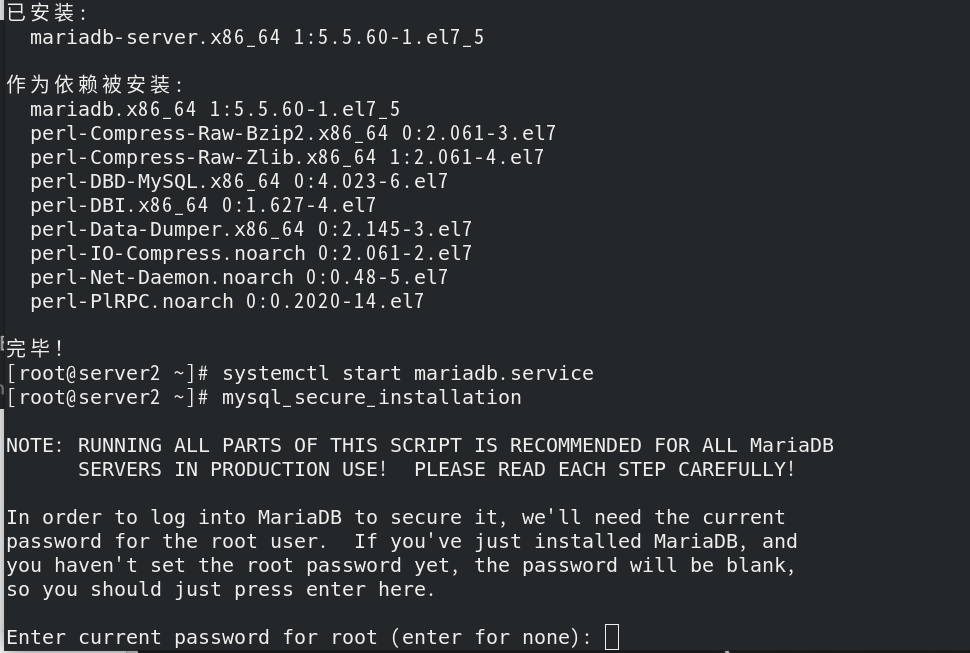

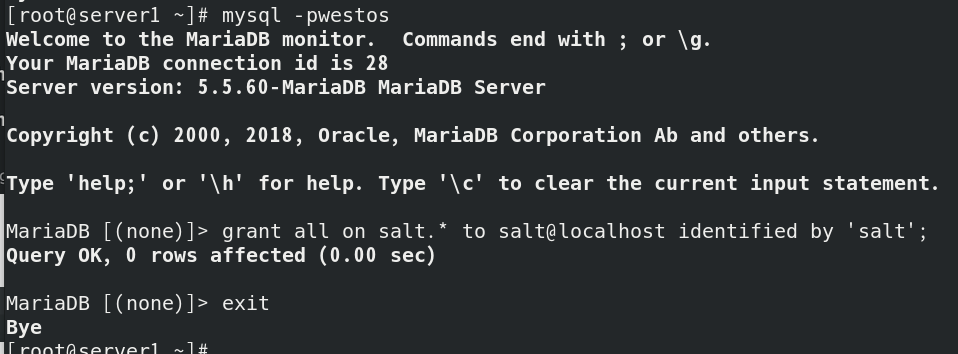

First, install and start the MariaDB service on server1;

Run the secure initialization mysql_secure_installation, then log in to the database

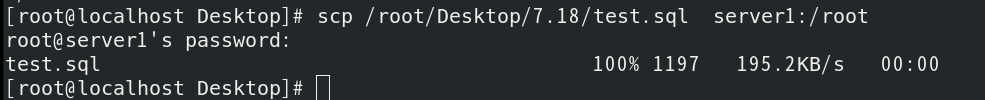

Copy the test.sql file from the physical host to server1. You need to create one database and three tables in it (see the test.sql file)

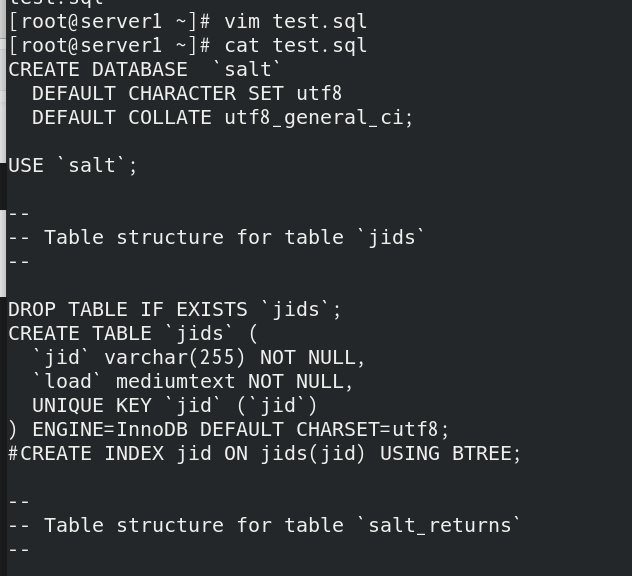

CREATE DATABASE 'salt': create the salt database;

CREATE TABLE 'jids': create the jids table;

CREATE TABLE 'salt_returns': create the salt_returns table;

CREATE TABLE 'salt_events': create the salt_events table

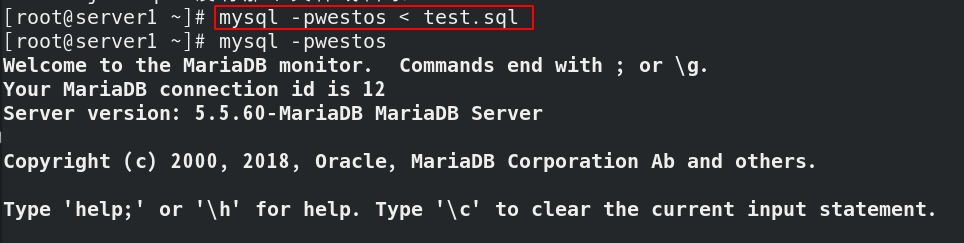

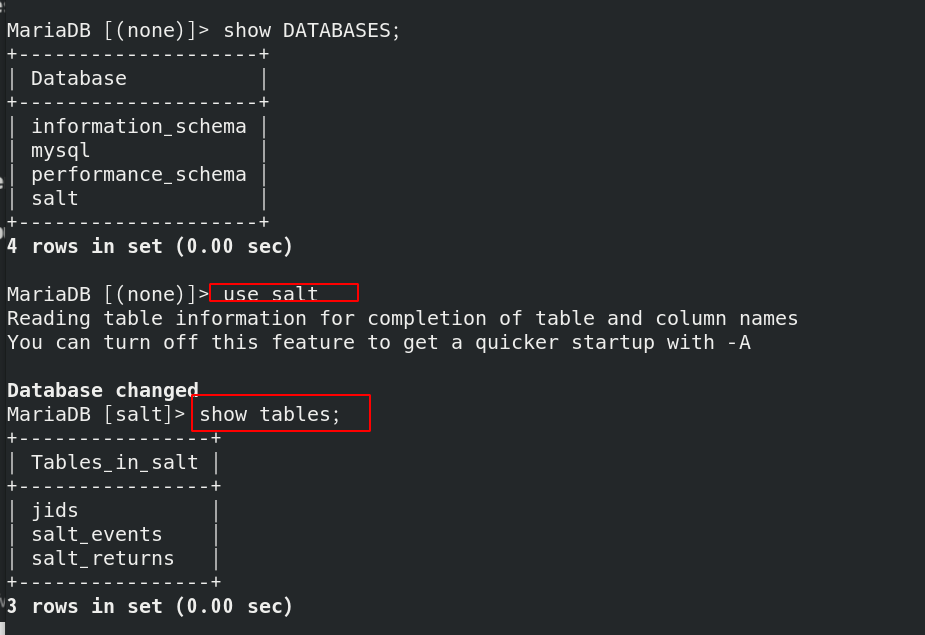

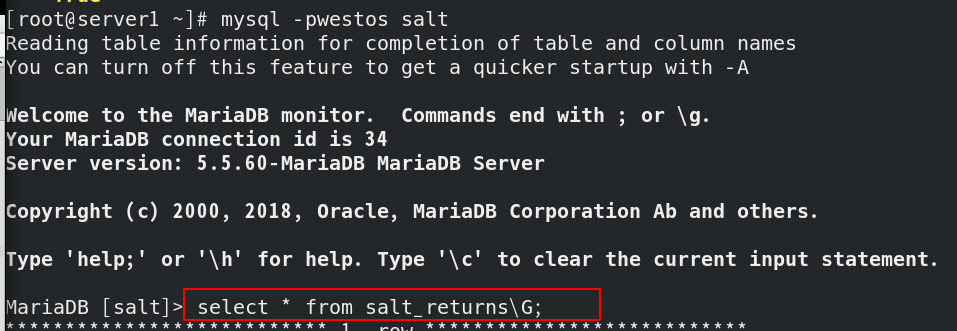
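To show how the three tables fit together, here is a self-contained sketch that stores one job return and reads it back. It uses an in-memory SQLite database from the Python standard library as a stand-in for MariaDB, and the column lists are simplified assumptions; the authoritative schema is the one in test.sql.

```python
import json
import sqlite3

# Simplified stand-in schema; column names are assumptions, see test.sql.
SCHEMA = """
CREATE TABLE jids (jid TEXT NOT NULL UNIQUE, load TEXT NOT NULL);
CREATE TABLE salt_returns (
    fun TEXT NOT NULL,
    jid TEXT NOT NULL,
    "return" TEXT NOT NULL,
    id TEXT NOT NULL,
    success TEXT NOT NULL,
    full_ret TEXT NOT NULL
);
CREATE TABLE salt_events (tag TEXT NOT NULL, data TEXT NOT NULL, master_id TEXT NOT NULL);
"""


def open_store():
    """Create an in-memory database with the three tables."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    return conn


def save_return(conn, ret):
    """Store one job return, the way a returner would after a job finishes."""
    conn.execute(
        'INSERT INTO salt_returns (fun, jid, "return", id, success, full_ret) '
        "VALUES (?, ?, ?, ?, ?, ?)",
        (
            ret["fun"],
            ret["jid"],
            json.dumps(ret["return"]),
            ret["id"],
            str(ret.get("success", False)),
            json.dumps(ret),
        ),
    )
    conn.commit()


def returns_for(conn, minion_id):
    """Return (function, result) pairs recorded for one minion."""
    rows = conn.execute(
        'SELECT fun, "return" FROM salt_returns WHERE id = ?', (minion_id,)
    )
    return [(fun, json.loads(value)) for fun, value in rows]


if __name__ == "__main__":
    conn = open_store()
    save_return(conn, {"fun": "test.ping", "jid": "20240101120000000000",
                       "return": True, "id": "server2", "success": True})
    print(returns_for(conn, "server2"))
```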

mysql -pwestos < jobs. Import the test file into MySQL database;

Log in to the database and check its databases and tables:

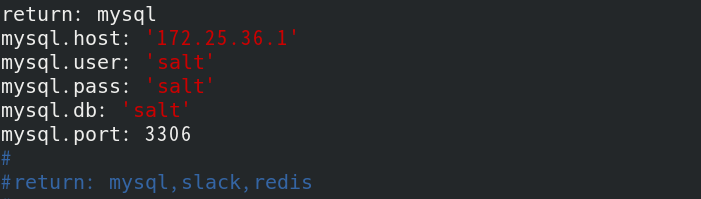

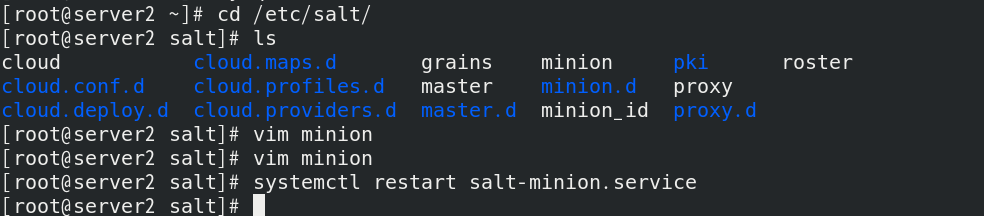

Modify the configuration file /etc/salt/minion on server2 and restart the minion

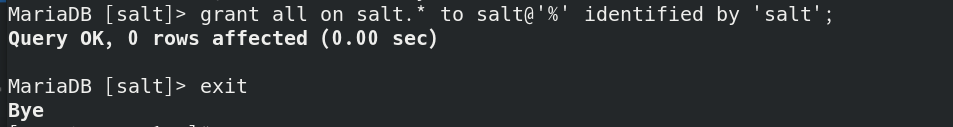

On server1, add user authorization: grant the salt user remote access with all privileges on the salt.* tables

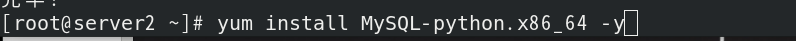

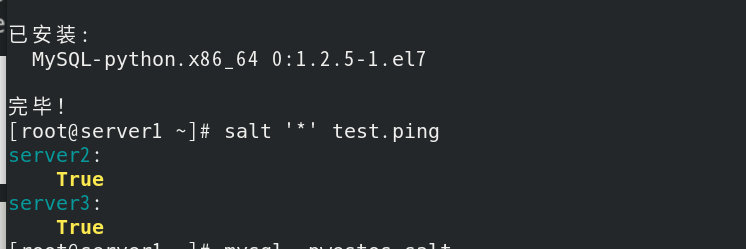

Install MySQL-python.x86_64 0:1.2.5-1.el7 on server2 (the code that writes the data is Python, so whichever host writes to the database needs MySQL-python installed)

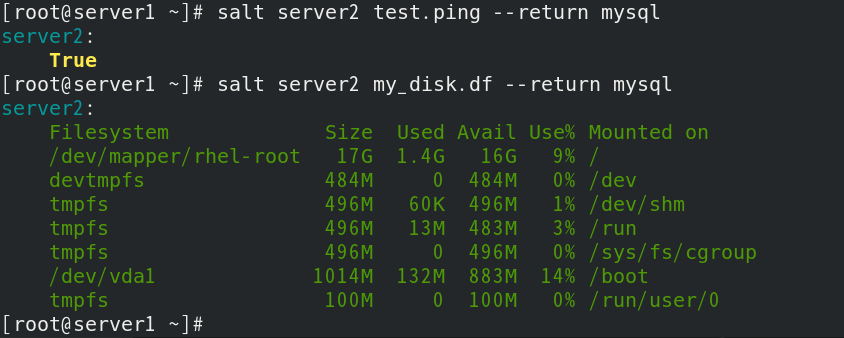

Test

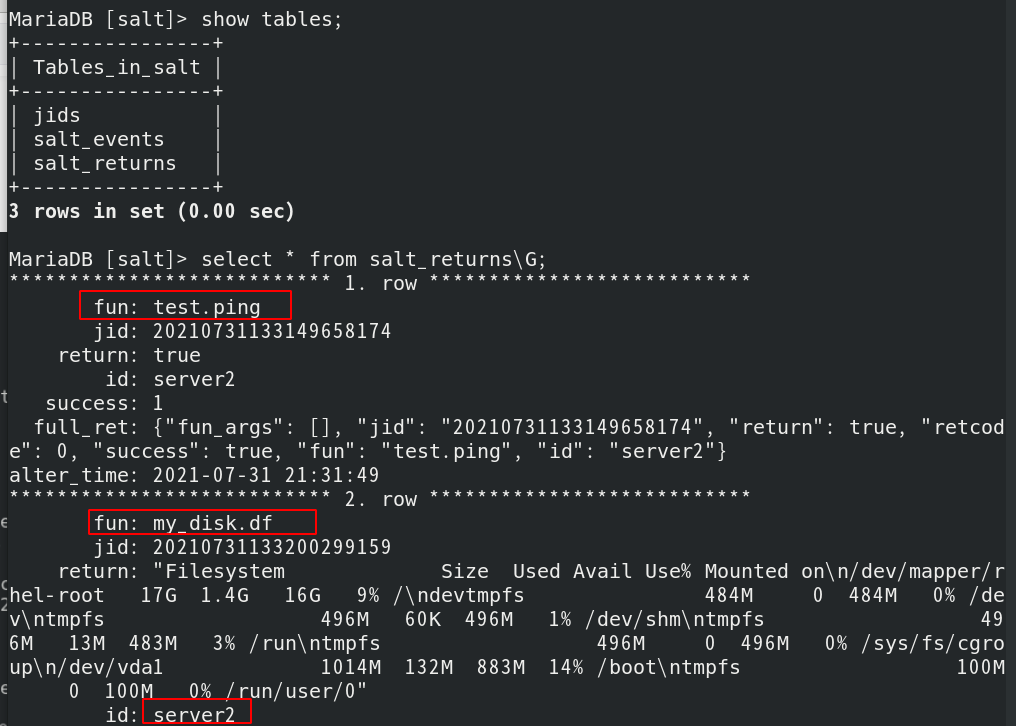

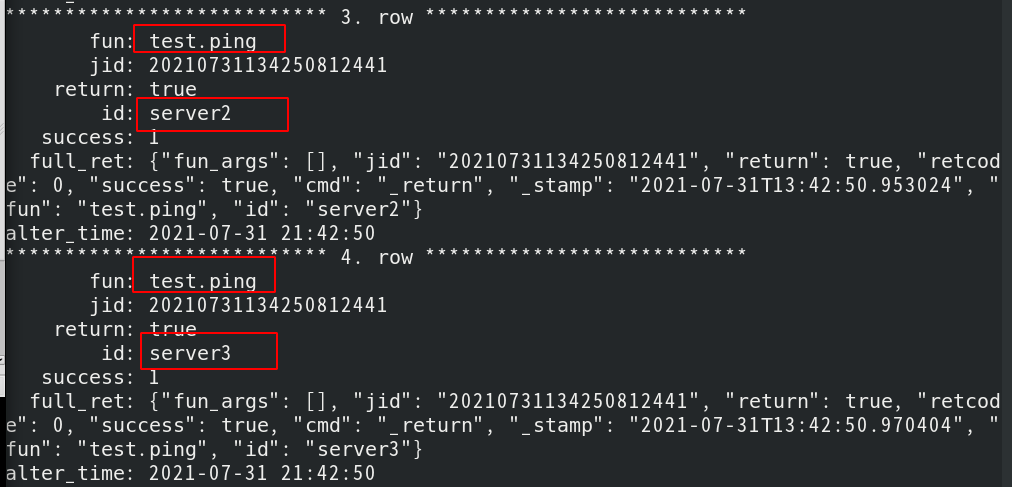

Return to server1 and check whether the test information is in the database. If it is, the configuration succeeded

Method 2: the minion returns data to the master as usual, and the master sends it to the database;

Advantage: there is no need to modify the configuration on every minion; it is set only on the master;

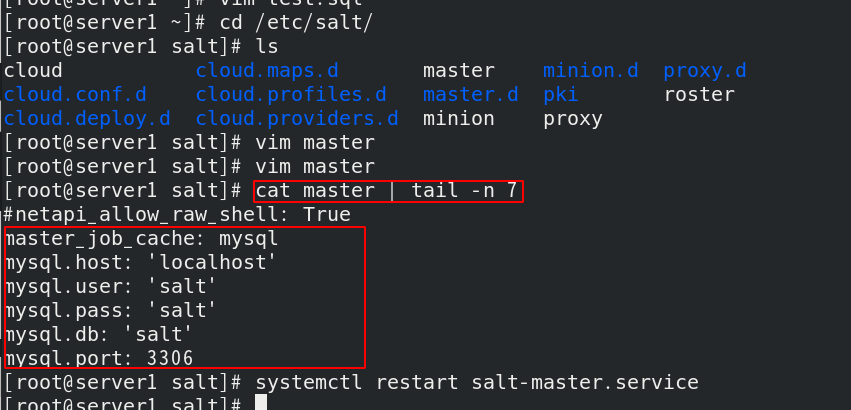

Modify the configuration file /etc/salt/master, add the following contents at the end of the file, and restart salt-master

Log in to the database and grant the local user all privileges on the salt.* tables

Install MySQL-python, then run test.ping with salt to test

View the records in mysql

The results were successfully saved to the database

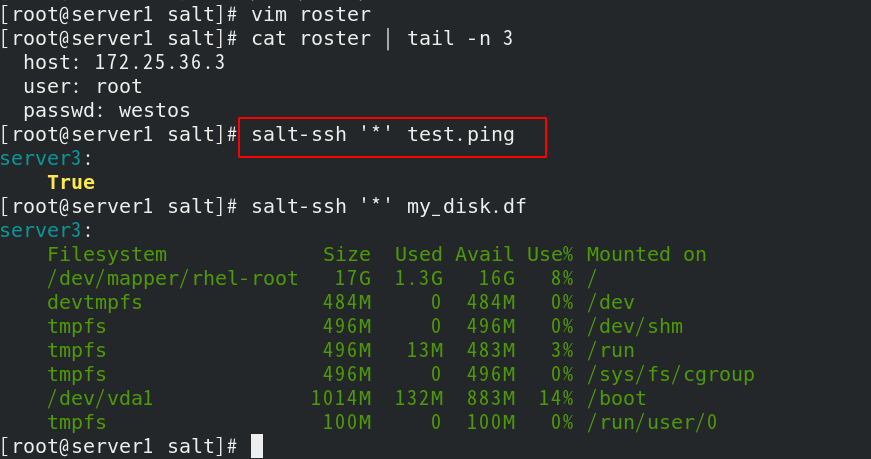

3, Salt SSH

Introduction:

salt-ssh can run independently, without a minion. It uses sshpass for password interaction and works in serial mode, so its performance is lower

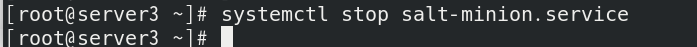

If the minion cannot be installed on some hosts, or, as here, the minion on server3 is turned off, you can use salt-ssh

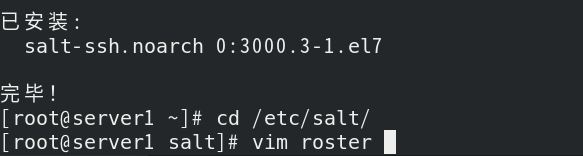

Install salt-ssh on server1

Modify the configuration file /etc/salt/roster;

Connect from the master side, and you can reach server3 as written in the configuration file

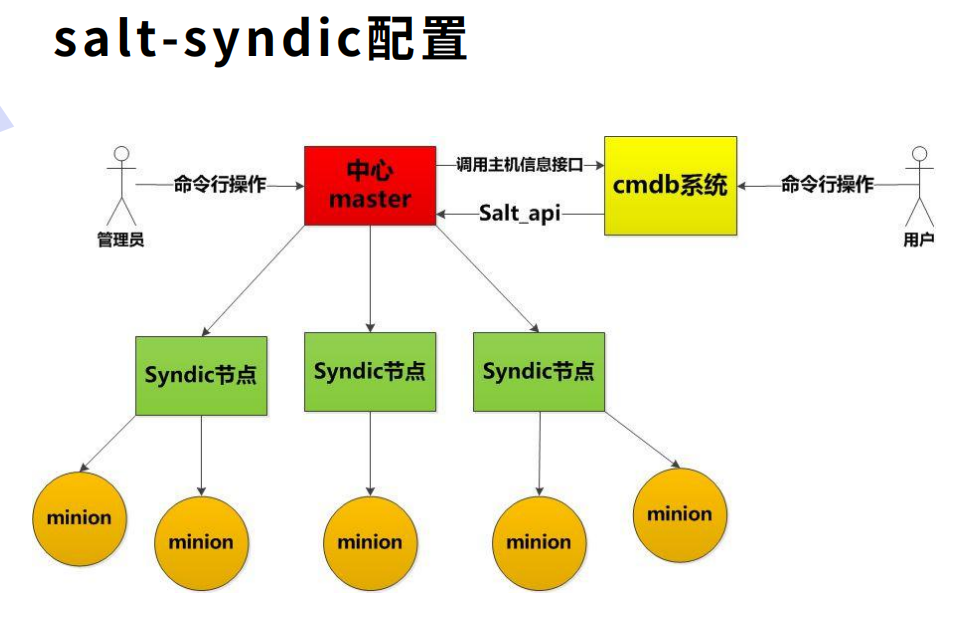

4, Salt syndic

Introduction:

1. A syndic is in effect a proxy that isolates the master from the minions. The syndic must run on a master and then connects to another, higher-level topmaster;

2. States issued by the topmaster are passed down to the subordinate master through the syndic, and data returned from the minions to the master is also passed up to the topmaster by the syndic;

3. The topmaster does not know how many minions there are. The file_roots and pillar_roots directories of the syndic and the topmaster should be consistent.

server1 is still a master. Now create a new server4 as the top-level master;

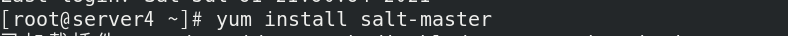

Likewise, on server4 you need to configure the yum repository and install salt-master

On server4, modify the configuration file /etc/salt/master;

Restart the salt master. Now server4 is the top master

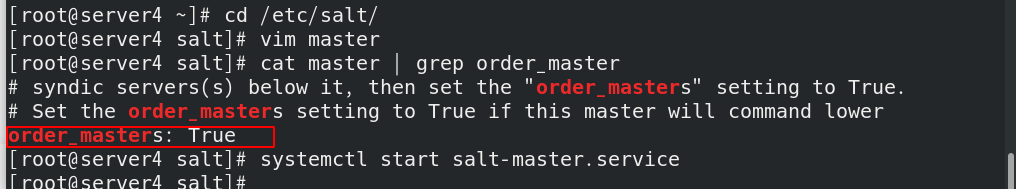

On server1, install salt-syndic.noarch, modify the configuration file and set server4 as its topmaster

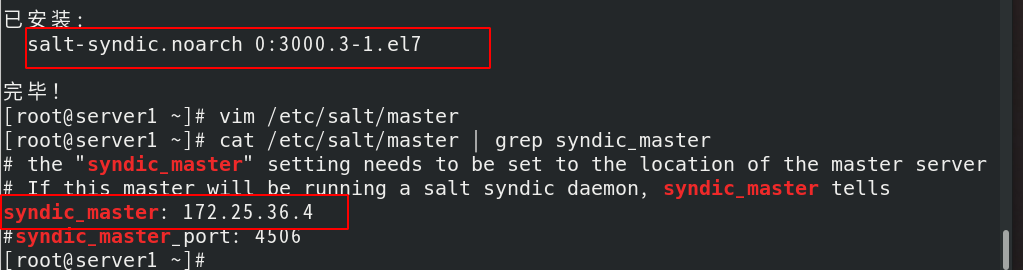

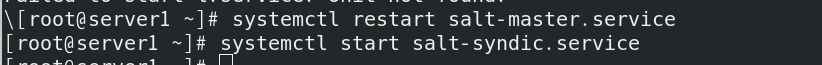

Restart the salt-master service and start the salt-syndic service

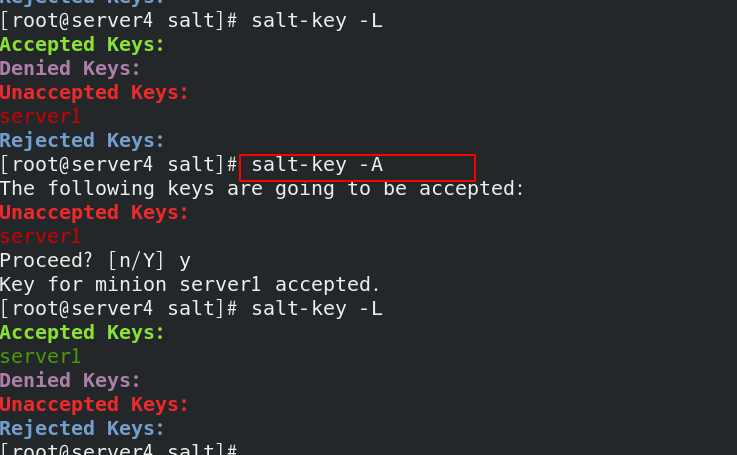

Now, on server4, use salt-key -L to view the keys;

Use salt-key -a to accept the key

The topmaster successfully picks up the master (server1), but cannot see the minions

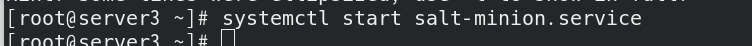

Note: the minion on server3 was turned off in the previous salt-ssh experiment and should be turned on now, otherwise commands sent to server3 will not succeed

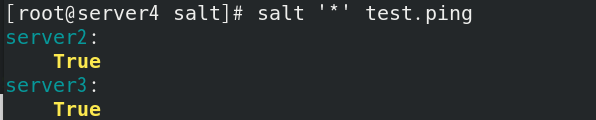

salt '*' test.ping: the test succeeds

server4, as the topmaster, issues the task, which is sent to the subordinate master (server1) for execution

5, Salt API configuration

Introduction:

SaltStack officially provides the Salt API project in REST API format, which will make the integration of Salt and third-party systems particularly simple;

Three API modules are officially available: rest_cherrypy, rest_tornado, rest_wsgi;

Official link: https://docs.saltstack.com/en/latest/ref/netapi/all/index.html#all-netapi-modules

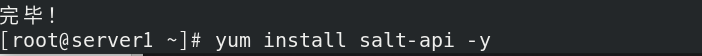

Install salt-api on server1

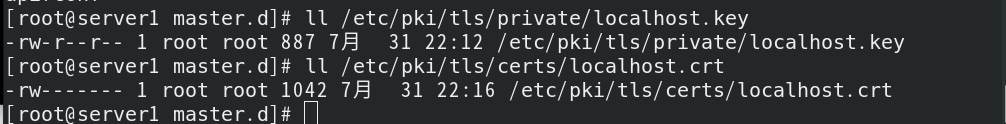

Create the key with openssl genrsa 1024 and redirect the output to the file localhost.key

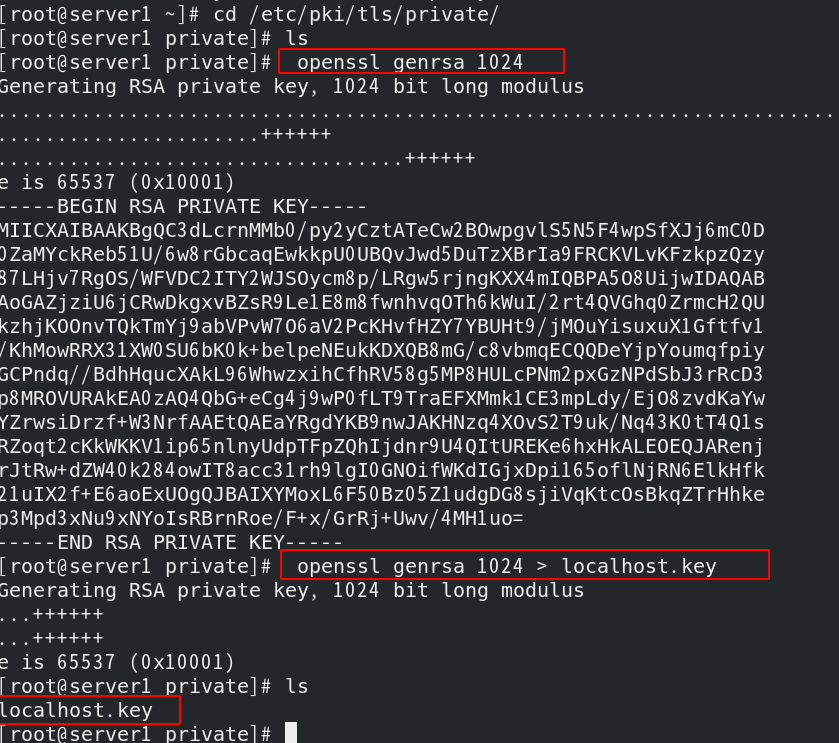

Generate the certificate with make testcert

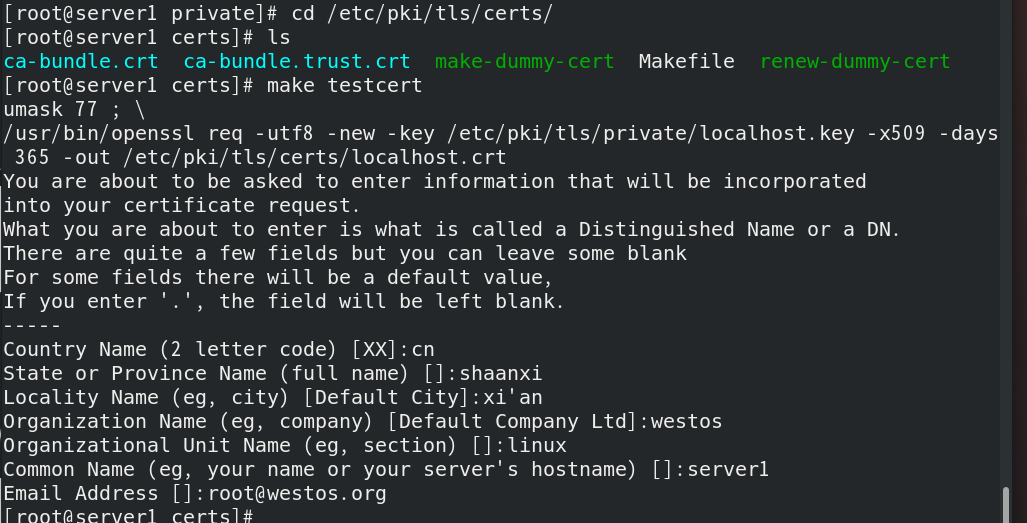

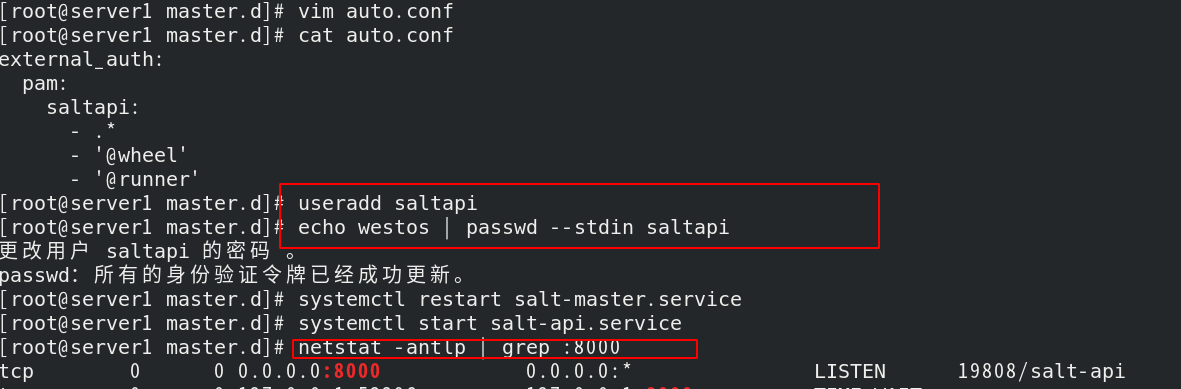

Activate the rest_cherrypy module: create the API configuration file /etc/salt/master.d/api.conf

The file path is as follows

Create the /etc/salt/master.d/auth.conf authentication file, which uses the pam policy and opens full permissions to the saltapi user;

Create the user and set its password;

After restarting the service, you can see that port 8000 of the API is open

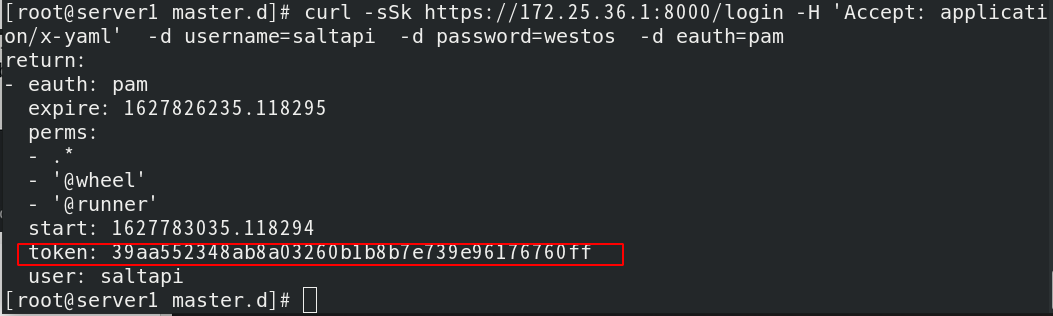

Get authentication token

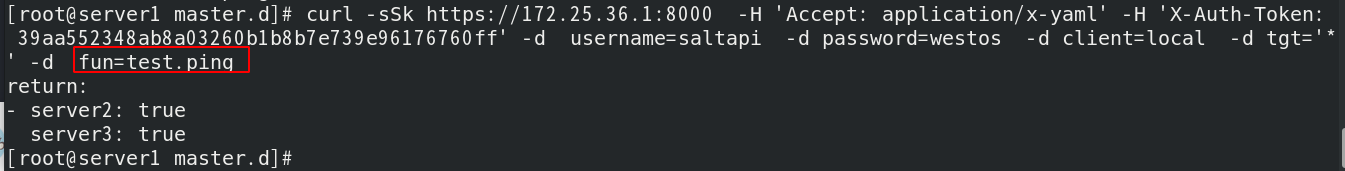

Then test (push task) and the connection is successful

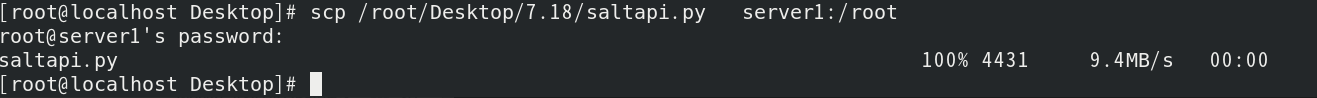

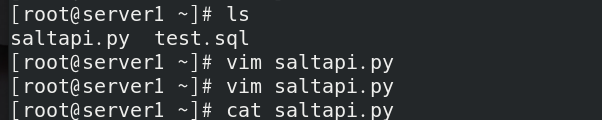

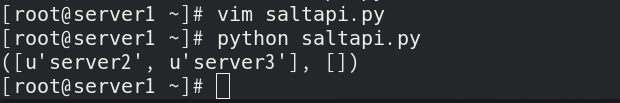
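The two steps above (log in to obtain a token, then push a task with that token) can be sketched with the Python 3 standard library. The sketch only builds the HTTP requests and parses a login response; it does not contact a server. The function names are hypothetical, while the /login path, the eauth, client, tgt and fun fields, and the X-Auth-Token header follow what the script later in this section uses.

```python
import json
import urllib.parse
import urllib.request


def login_request(base_url, username, password, eauth="pam"):
    """Build the POST /login request that exchanges credentials for a token."""
    body = urllib.parse.urlencode(
        {"username": username, "password": password, "eauth": eauth}
    ).encode("utf-8")
    return urllib.request.Request(
        base_url.rstrip("/") + "/login",
        data=body,
        headers={"Accept": "application/json"},
    )


def token_from_response(raw):
    """Extract the token from the JSON body returned by /login."""
    return json.loads(raw)["return"][0]["token"]


def run_request(base_url, token, tgt, fun):
    """Build the POST / request that runs a function on the targeted minions."""
    body = urllib.parse.urlencode(
        {"client": "local", "tgt": tgt, "fun": fun}
    ).encode("utf-8")
    return urllib.request.Request(
        base_url.rstrip("/") + "/",
        data=body,
        headers={"Accept": "application/json", "X-Auth-Token": token},
    )


if __name__ == "__main__":
    req = login_request("https://172.25.36.1:8000", "saltapi", "westos")
    print(req.get_method(), req.full_url)
    # Sending would be urllib.request.urlopen(req, context=...), with an SSL
    # context that accepts the self-signed test certificate.
```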

Tasks can also be pushed directly with a Python script: copy saltapi.py from the physical host to server1

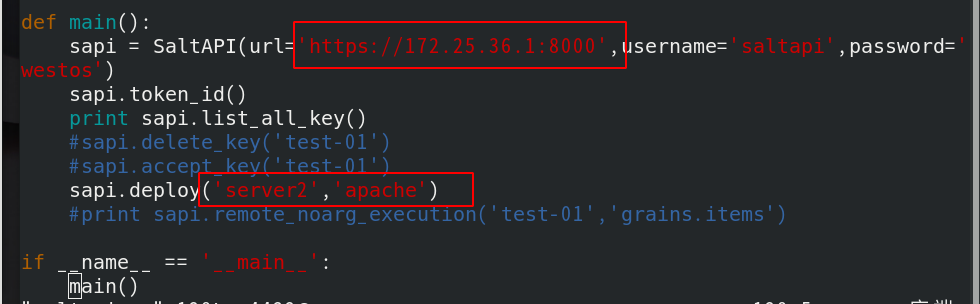

The contents of the file are as follows:

```python
# -*- coding: utf-8 -*-

import urllib2,urllib
import time
import ssl

ssl._create_default_https_context = ssl._create_unverified_context

try:
    import json
except ImportError:
    import simplejson as json

class SaltAPI(object):
    __token_id = ''

    def __init__(self,url,username,password):
        self.__url = url.rstrip('/')
        self.__user = username
        self.__password = password

    def token_id(self):
        ''' user login and get token id '''
        params = {'eauth': 'pam', 'username': self.__user, 'password': self.__password}
        encode = urllib.urlencode(params)
        obj = urllib.unquote(encode)
        content = self.postRequest(obj,prefix='/login')
        try:
            self.__token_id = content['return'][0]['token']
        except KeyError:
            raise KeyError

    def postRequest(self,obj,prefix='/'):
        url = self.__url + prefix
        headers = {'X-Auth-Token' : self.__token_id}
        req = urllib2.Request(url, obj, headers)
        opener = urllib2.urlopen(req)
        content = json.loads(opener.read())
        return content

    def list_all_key(self):
        params = {'client': 'wheel', 'fun': 'key.list_all'}
        obj = urllib.urlencode(params)
        self.token_id()
        content = self.postRequest(obj)
        minions = content['return'][0]['data']['return']['minions']
        minions_pre = content['return'][0]['data']['return']['minions_pre']
        return minions,minions_pre

    def delete_key(self,node_name):
        params = {'client': 'wheel', 'fun': 'key.delete', 'match': node_name}
        obj = urllib.urlencode(params)
        self.token_id()
        content = self.postRequest(obj)
        ret = content['return'][0]['data']['success']
        return ret

    def accept_key(self,node_name):
        params = {'client': 'wheel', 'fun': 'key.accept', 'match': node_name}
        obj = urllib.urlencode(params)
        self.token_id()
        content = self.postRequest(obj)
        ret = content['return'][0]['data']['success']
        return ret

    def remote_noarg_execution(self,tgt,fun):
        ''' Execute commands without parameters '''
        params = {'client': 'local', 'tgt': tgt, 'fun': fun}
        obj = urllib.urlencode(params)
        self.token_id()
        content = self.postRequest(obj)
        ret = content['return'][0][tgt]
        return ret

    def remote_execution(self,tgt,fun,arg):
        ''' Command execution with parameters '''
        params = {'client': 'local', 'tgt': tgt, 'fun': fun, 'arg': arg}
        obj = urllib.urlencode(params)
        self.token_id()
        content = self.postRequest(obj)
        ret = content['return'][0][tgt]
        return ret

    def target_remote_execution(self,tgt,fun,arg):
        ''' Use targeting for remote execution '''
        params = {'client': 'local', 'tgt': tgt, 'fun': fun, 'arg': arg, 'expr_form': 'nodegroup'}
        obj = urllib.urlencode(params)
        self.token_id()
        content = self.postRequest(obj)
        jid = content['return'][0]['jid']
        return jid

    def deploy(self,tgt,arg):
        ''' Module deployment '''
        params = {'client': 'local', 'tgt': tgt, 'fun': 'state.sls', 'arg': arg}
        obj = urllib.urlencode(params)
        self.token_id()
        content = self.postRequest(obj)
        return content

    def async_deploy(self,tgt,arg):
        ''' Asynchronously send a command to connected minions '''
        params = {'client': 'local_async', 'tgt': tgt, 'fun': 'state.sls', 'arg': arg}
        obj = urllib.urlencode(params)
        self.token_id()
        content = self.postRequest(obj)
        jid = content['return'][0]['jid']
        return jid

    def target_deploy(self,tgt,arg):
        ''' Based on the node group forms deployment '''
        params = {'client': 'local_async', 'tgt': tgt, 'fun': 'state.sls', 'arg': arg, 'expr_form': 'nodegroup'}
        obj = urllib.urlencode(params)
        self.token_id()
        content = self.postRequest(obj)
        jid = content['return'][0]['jid']
        return jid

def main():
    sapi = SaltAPI(url='https://172.25.36.1:8000',username='saltapi',password='westos')
    sapi.token_id()
    print sapi.list_all_key()
    #sapi.delete_key('test-01')
    #sapi.accept_key('test-01')
    sapi.deploy('server2','apache')
    #print sapi.remote_noarg_execution('test-01','grains.items')

if __name__ == '__main__':
    main()
```

Modify the following:

When print sapi.list_all_key() is enabled, output is printed during the push; when it is commented out, the service is pushed directly with no prompt;

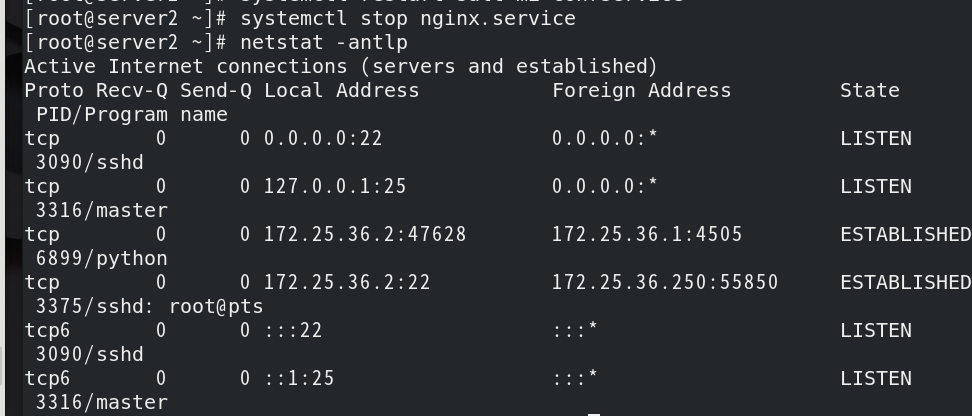

First stop the nginx service on the previous server2 (to avoid port conflicts when deploying the httpd service)

Push the script on server1

View the process on server2;

You can see that the httpd service is turned on