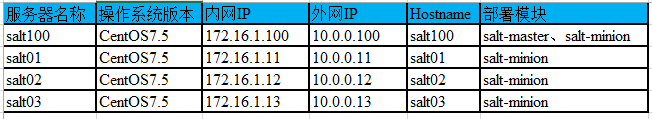

1. Host Planning

Pillar documentation

https://docs.saltstack.com/en/latest/topics/pillar/index.html

Notes

If the master or minion configuration file is modified, the corresponding service must be restarted.

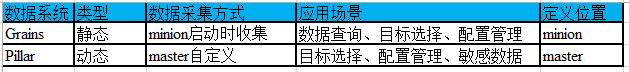

2. Grains VS Pillar

3. Pillar Basic Information

Pillar data is specific data that the master assigns dynamically to particular minions. A minion can only see the data assigned to it, which is why a top.sls is required. This also makes pillar suitable for sensitive data.

Pillar refresh:

```
salt '*' saltutil.sync_all          # works, but not recommended
salt '*' saltutil.sync_pillar       # raises an error on a normal minion; intended for masterless mode
salt '*' saltutil.refresh_modules   # refreshes modules, so it is not recommended for this purpose
salt '*' saltutil.refresh_pillar    # recommended
```

Special attention: suppose you skip `salt '*' saltutil.refresh_pillar` and run `salt '*' pillar.items` directly. The output already looks up to date. A query for a specific key, however, still returns the old value, so you must run the pillar refresh command.

Uses:
1. Target selection
2. Configuration management
3. Confidential (sensitive) data

4. Display the system's built-in pillar

The built-in pillar data (the master configuration) is not displayed by default.

Note: revert this setting after you have looked at the output. It adds a large amount of data, and when that data is mixed with your custom pillar the output becomes hard to read.

4.1. Modify the configuration file and restart the service

```
[root@salt100 ~]# salt 'salt01' pillar.items    # pillar information is not displayed by default
salt01:
    ----------
[root@salt100 ~]# vim /etc/salt/master
………………
# The pillar_opts option adds the master configuration file data to a dict in
# the pillar called "master". This is used to set simple configurations in the
# master config file that can then be used on minions.
#pillar_opts: False
pillar_opts: True
………………
[root@salt100 ~]# systemctl restart salt-master.service    # restart the service after modifying the configuration file
```
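The config comment says `pillar_opts` adds the master configuration to a pillar dict named `master`. Below is a rough Python model of that effect. The helper `build_pillar` and the sample values are hypothetical, and Salt's real pillar compiler does considerably more than this:

```python
from typing import Any, Dict


def build_pillar(custom: Dict[str, Any], master_opts: Dict[str, Any],
                 pillar_opts: bool) -> Dict[str, Any]:
    """Hypothetical model of what pillar_opts changes (not Salt code).

    With pillar_opts enabled, a copy of the master options appears under
    the "master" key next to the custom pillar data; otherwise only the
    custom data is returned.
    """
    pillar = dict(custom)  # copy so the caller's dict is not modified
    if pillar_opts:
        pillar["master"] = dict(master_opts)
    return pillar


if __name__ == "__main__":
    # A few option values copied from the output in section 4.2
    opts = {"publish_port": 4505, "ret_port": 4506, "pillar_opts": True}
    print(build_pillar({}, opts, pillar_opts=False))  # {}
    print(sorted(build_pillar({}, opts, pillar_opts=True)["master"]))
```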

4.2. Display pillar information

```
[root@salt100 ~]# salt 'salt01' pillar.items    # display the system pillar information
salt01:
    ----------
    master:
        ----------
        __cli:
            salt-master
        __role:
            master
        allow_minion_key_revoke:
            True
        archive_jobs:
            False
        auth_events:
            True
        auth_mode:
            1
        auto_accept:
            False
        azurefs_update_interval:
            60
        cache:
            localfs
        cache_sreqs:
            True
        cachedir:
            /var/cache/salt/master
        clean_dynamic_modules:
            True
        cli_summary:
            False
        client_acl_verify:
            True
        cluster_masters:
        cluster_mode:
            False
        con_cache:
            False
        conf_file:
            /etc/salt/master
        config_dir:
            /etc/salt
        cython_enable:
            False
        daemon:
            False
        decrypt_pillar:
        decrypt_pillar_default:
            gpg
        decrypt_pillar_delimiter:
            :
        decrypt_pillar_renderers:
            - gpg
        default_include:
            master.d/*.conf
        default_top:
            base
        discovery:
            False
        django_auth_path:
        django_auth_settings:
        drop_messages_signature_fail:
            False
        dummy_pub:
            False
        eauth_acl_module:
        eauth_tokens:
            localfs
        enable_gpu_grains:
            False
        enable_ssh_minions:
            False
        enforce_mine_cache:
            False
        engines:
        env_order:
        event_match_type:
            startswith
        event_return:
        event_return_blacklist:
        event_return_queue:
            0
        event_return_whitelist:
        ext_job_cache:
        ext_pillar:
        extension_modules:
            /var/cache/salt/master/extmods
        external_auth:
            ----------
        extmod_blacklist:
            ----------
        extmod_whitelist:
            ----------
        failhard:
            False
        file_buffer_size:
            1048576
        file_client:
            local
        file_ignore_glob:
        file_ignore_regex:
        file_recv:
            False
        file_recv_max_size:
            100
        file_roots:
            ----------
            base:
                - /srv/salt
        fileserver_backend:
            - roots
        fileserver_followsymlinks:
            True
        fileserver_ignoresymlinks:
            False
        fileserver_limit_traversal:
            False
        fileserver_verify_config:
            True
        gather_job_timeout:
            10
        git_pillar_base:
            master
        git_pillar_branch:
            master
        git_pillar_env:
        git_pillar_global_lock:
            True
        git_pillar_includes:
            True
        git_pillar_insecure_auth:
            False
        git_pillar_passphrase:
        git_pillar_password:
        git_pillar_privkey:
        git_pillar_pubkey:
        git_pillar_refspecs:
            - +refs/heads/*:refs/remotes/origin/*
            - +refs/tags/*:refs/tags/*
        git_pillar_root:
        git_pillar_ssl_verify:
            True
        git_pillar_user:
        git_pillar_verify_config:
            True
        gitfs_base:
            master
        gitfs_disable_saltenv_mapping:
            False
        gitfs_env_blacklist:
        gitfs_env_whitelist:
        gitfs_global_lock:
            True
        gitfs_insecure_auth:
            False
        gitfs_mountpoint:
        gitfs_passphrase:
        gitfs_password:
        gitfs_privkey:
        gitfs_pubkey:
        gitfs_ref_types:
            - branch
            - tag
            - sha
        gitfs_refspecs:
            - +refs/heads/*:refs/remotes/origin/*
            - +refs/tags/*:refs/tags/*
        gitfs_remotes:
        gitfs_root:
        gitfs_saltenv:
        gitfs_saltenv_blacklist:
        gitfs_saltenv_whitelist:
        gitfs_ssl_verify:
            True
        gitfs_update_interval:
            60
        gitfs_user:
        hash_type:
            sha256
        hgfs_base:
            default
        hgfs_branch_method:
            branches
        hgfs_env_blacklist:
        hgfs_env_whitelist:
        hgfs_mountpoint:
        hgfs_remotes:
        hgfs_root:
        hgfs_saltenv_blacklist:
        hgfs_saltenv_whitelist:
        hgfs_update_interval:
            60
        http_max_body:
            107374182400
        http_request_timeout:
            3600.0
        id:
            salt01
        interface:
            0.0.0.0
        ioflo_console_logdir:
        ioflo_period:
            0.01
        ioflo_realtime:
            True
        ioflo_verbose:
            0
        ipc_mode:
            ipc
        ipc_write_buffer:
            0
        ipv6:
            False
        jinja_env:
            ----------
        jinja_lstrip_blocks:
            False
        jinja_sls_env:
            ----------
        jinja_trim_blocks:
            False
        job_cache:
            True
        job_cache_store_endtime:
            False
        keep_acl_in_token:
            False
        keep_jobs:
            24
        key_cache:
        key_logfile:
            /var/log/salt/key
        key_pass:
            None
        keysize:
            2048
        local:
            True
        lock_saltenv:
            False
        log_datefmt:
            %H:%M:%S
        log_datefmt_console:
            %H:%M:%S
        log_datefmt_logfile:
            %Y-%m-%d %H:%M:%S
        log_file:
            /var/log/salt/master
        log_fmt_console:
            [%(levelname)-8s] %(message)s
        log_fmt_logfile:
            %(asctime)s,%(msecs)03d [%(name)-17s:%(lineno)-4d][%(levelname)-8s][%(process)d] %(message)s
        log_granular_levels:
            ----------
        log_level:
            warning
        log_level_logfile:
            warning
        log_rotate_backup_count:
            0
        log_rotate_max_bytes:
            0
        loop_interval:
            60
        maintenance_floscript:
            /usr/lib/python2.7/site-packages/salt/daemons/flo/maint.flo
        master_floscript:
            /usr/lib/python2.7/site-packages/salt/daemons/flo/master.flo
        master_job_cache:
            local_cache
        master_pubkey_signature:
            master_pubkey_signature
        master_roots:
            ----------
            base:
                - /srv/salt-master
        master_sign_key_name:
            master_sign
        master_sign_pubkey:
            False
        master_stats:
            False
        master_stats_event_iter:
            60
        master_tops:
            ----------
        master_use_pubkey_signature:
            False
        max_event_size:
            1048576
        max_minions:
            0
        max_open_files:
            100000
        memcache_debug:
            False
        memcache_expire_seconds:
            0
        memcache_full_cleanup:
            False
        memcache_max_items:
            1024
        min_extra_mods:
        minion_data_cache:
            True
        minion_data_cache_events:
            True
        minionfs_blacklist:
        minionfs_env:
            base
        minionfs_mountpoint:
        minionfs_update_interval:
            60
        minionfs_whitelist:
        module_dirs:
        nodegroups:
            ----------
        on_demand_ext_pillar:
            - libvirt
            - virtkey
        open_mode:
            False
        optimization_order:
            - 0
            - 1
            - 2
        order_masters:
            False
        outputter_dirs:
        peer:
            ----------
        permissive_acl:
            False
        permissive_pki_access:
            False
        pidfile:
            /var/run/salt-master.pid
        pillar_cache:
            False
        pillar_cache_backend:
            disk
        pillar_cache_ttl:
            3600
        pillar_includes_override_sls:
            False
        pillar_merge_lists:
            False
        pillar_opts:
            True
        pillar_roots:
            ----------
            base:
                - /srv/pillar
                - /srv/spm/pillar
        pillar_safe_render_error:
            True
        pillar_source_merging_strategy:
            smart
        pillar_version:
            2
        pillarenv:
            None
        ping_on_rotate:
            False
        pki_dir:
            /etc/salt/pki/master
        preserve_minion_cache:
            False
        pub_hwm:
            1000
        publish_port:
            4505
        publish_session:
            86400
        publisher_acl:
            ----------
        publisher_acl_blacklist:
            ----------
        python2_bin:
            python2
        python3_bin:
            python3
        queue_dirs:
        raet_alt_port:
            4511
        raet_clear_remote_masters:
            True
        raet_clear_remotes:
            False
        raet_lane_bufcnt:
            100
        raet_main:
            True
        raet_mutable:
            False
        raet_port:
            4506
        raet_road_bufcnt:
            2
        range_server:
            range:80
        reactor:
        reactor_refresh_interval:
            60
        reactor_worker_hwm:
            10000
        reactor_worker_threads:
            10
        regen_thin:
            False
        renderer:
            yaml_jinja
        renderer_blacklist:
        renderer_whitelist:
        require_minion_sign_messages:
            False
        ret_port:
            4506
        root_dir:
            /
        roots_update_interval:
            60
        rotate_aes_key:
            True
        runner_dirs:
        runner_returns:
            True
        s3fs_update_interval:
            60
        salt_cp_chunk_size:
            98304
        saltenv:
            None
        saltversion:
            2018.3.3
        schedule:
            ----------
        search:
        serial:
            msgpack
        show_jid:
            False
        show_timeout:
            True
        sign_pub_messages:
            True
        signing_key_pass:
            None
        sock_dir:
            /var/run/salt/master
        sock_pool_size:
            1
        sqlite_queue_dir:
            /var/cache/salt/master/queues
        ssh_config_file:
            /root/.ssh/config
        ssh_identities_only:
            False
        ssh_list_nodegroups:
            ----------
        ssh_log_file:
            /var/log/salt/ssh
        ssh_passwd:
        ssh_port:
            22
        ssh_scan_ports:
            22
        ssh_scan_timeout:
            0.01
        ssh_sudo:
            False
        ssh_sudo_user:
        ssh_timeout:
            60
        ssh_use_home_key:
            False
        ssh_user:
            root
        ssl:
            None
        state_aggregate:
            False
        state_auto_order:
            True
        state_events:
            False
        state_output:
            full
        state_output_diff:
            False
        state_top:
            salt://top.sls
        state_top_saltenv:
            None
        state_verbose:
            True
        sudo_acl:
            False
        svnfs_branches:
            branches
        svnfs_env_blacklist:
        svnfs_env_whitelist:
        svnfs_mountpoint:
        svnfs_remotes:
        svnfs_root:
        svnfs_saltenv_blacklist:
        svnfs_saltenv_whitelist:
        svnfs_tags:
            tags
        svnfs_trunk:
            trunk
        svnfs_update_interval:
            60
        syndic_dir:
            /var/cache/salt/master/syndics
        syndic_event_forward_timeout:
            0.5
        syndic_failover:
            random
        syndic_forward_all_events:
            False
        syndic_jid_forward_cache_hwm:
            100
        syndic_log_file:
            /var/log/salt/syndic
        syndic_master:
            masterofmasters
        syndic_pidfile:
            /var/run/salt-syndic.pid
        syndic_wait:
            5
        tcp_keepalive:
            True
        tcp_keepalive_cnt:
            -1
        tcp_keepalive_idle:
            300
        tcp_keepalive_intvl:
            -1
        tcp_master_pub_port:
            4512
        tcp_master_publish_pull:
            4514
        tcp_master_pull_port:
            4513
        tcp_master_workers:
            4515
        test:
            False
        thin_extra_mods:
        thorium_interval:
            0.5
        thorium_roots:
            ----------
            base:
                - /srv/thorium
        timeout:
            5
        token_dir:
            /var/cache/salt/master/tokens
        token_expire:
            43200
        token_expire_user_override:
            False
        top_file_merging_strategy:
            merge
        transport:
            zeromq
        unique_jid:
            False
        user:
            root
        utils_dirs:
            - /var/cache/salt/master/extmods/utils
        verify_env:
            True
        winrepo_branch:
            master
        winrepo_cachefile:
            winrepo.p
        winrepo_dir:
            /srv/salt/win/repo
        winrepo_dir_ng:
            /srv/salt/win/repo-ng
        winrepo_insecure_auth:
            False
        winrepo_passphrase:
        winrepo_password:
        winrepo_privkey:
        winrepo_pubkey:
        winrepo_refspecs:
            - +refs/heads/*:refs/remotes/origin/*
            - +refs/tags/*:refs/tags/*
        winrepo_remotes:
            - https://github.com/saltstack/salt-winrepo.git
        winrepo_remotes_ng:
            - https://github.com/saltstack/salt-winrepo-ng.git
        winrepo_ssl_verify:
            True
        winrepo_user:
        worker_floscript:
            /usr/lib/python2.7/site-packages/salt/daemons/flo/worker.flo
        worker_threads:
            5
        zmq_backlog:
            1000
        zmq_filtering:
            False
        zmq_monitor:
            False
```

5. Location of pillar files

```
[root@salt100 ~]# vim /etc/salt/master    # the default path is used, so the configuration file does not need to be modified
# Salt Pillars allow for the building of global data that can be made selectively
# available to different minions based on minion grain filtering. The Salt
# Pillar is laid out in the same fashion as the file server, with environments,
# a top file and sls files. However, pillar data does not need to be in the
# highstate format, and is generally just key/value pairs.
#pillar_roots:
#  base:
#    - /srv/pillar    # pillar file storage directory
#
```
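Inside pillar_roots, a dotted SLS name such as `web_pillar.apache` refers to `web_pillar/apache.sls`. If that file does not exist, it refers to `web_pillar/apache/init.sls`. The Python sketch below shows that lookup and assumes a single root directory. `resolve_sls` is a hypothetical helper, not part of Salt:

```python
import tempfile
from pathlib import Path
from typing import Optional


def resolve_sls(root: Path, sls_name: str) -> Optional[Path]:
    """Hypothetical helper: map a dotted SLS name to a file under root.

    "web_pillar.apache" -> root/web_pillar/apache.sls, falling back to
    root/web_pillar/apache/init.sls. Returns None if neither exists.
    """
    parts = sls_name.split(".")
    candidates = (
        root.joinpath(*parts[:-1], parts[-1] + ".sls"),
        root.joinpath(*parts, "init.sls"),
    )
    for candidate in candidates:
        if candidate.is_file():
            return candidate
    return None


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)  # stands in for /srv/pillar
        (root / "web_pillar").mkdir()
        (root / "web_pillar" / "apache.sls").write_text("apache: httpd\n")
        print(resolve_sls(root, "web_pillar.apache"))   # .../web_pillar/apache.sls
        print(resolve_sls(root, "web_pillar.missing"))  # None
```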

6. Custom Pillar

6.1. Writing the pillar SLS file

A pillar SLS file that branches on a single grain:

```
[root@salt100 web]# pwd    # keep the pillar files in their own directory for easier maintenance
/srv/pillar/web_pillar
[root@salt100 web]# cat apache.sls
{% if grains['os'] == 'CentOS' %}
apache: httpd
{% elif grains['os'] == 'redhat03' %}
apache: apache2
{% endif %}
```
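Conceptually, the Jinja in `apache.sls` is a function from a minion's grains to a small dictionary. Below is a Python equivalent for illustration; `render_apache_pillar` is a hypothetical name. It uses `.get` so that a missing grain falls through to the empty result, which is a choice of this sketch. The point it shows is that a minion matching neither branch gets no `apache` key at all:

```python
from typing import Any, Dict


def render_apache_pillar(grains: Dict[str, Any]) -> Dict[str, str]:
    """Hypothetical Python equivalent of the if/elif in apache.sls."""
    if grains.get("os") == "CentOS":
        return {"apache": "httpd"}
    elif grains.get("os") == "redhat03":
        return {"apache": "apache2"}
    return {}  # no branch matched: the SLS renders to an empty document


if __name__ == "__main__":
    print(render_apache_pillar({"os": "CentOS"}))   # {'apache': 'httpd'}
    print(render_apache_pillar({"os": "Ubuntu"}))   # {}
```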

A pillar SLS file that combines several grains:

The condition also shows operator precedence. `and` binds more tightly than `or`, and the parentheses group each IP/hostname pair.

```
[root@salt100 web]# pwd    # keep the pillar files in their own directory for easier maintenance
/srv/pillar/web_pillar
[root@salt100 pillar]# cat web_pillar/service_appoint.sls    # note the syntax: several grains, grouping with parentheses, and/or
{% if (grains['ip4_interfaces']['eth0'][0] == '172.16.1.11' and grains['host'] == 'salt01')
   or (grains['ip4_interfaces']['eth0'][0] == '172.16.1.12' and grains['host'] == 'salt02')
   or (grains['ip4_interfaces']['eth0'][0] == '172.16.1.13' and grains['host'] == 'salt03')
%}
service_appoint: www
{% elif grains['ip4_interfaces']['eth0'][0] == '172.16.1.100' %}
service_appoint: mariadb
{% endif %}
```
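Jinja evaluates `and` before `or`, just as Python does, so the parentheses in `service_appoint.sls` only make the grouping explicit. The Python sketch below mirrors the if/elif logic; the function names are hypothetical. It then checks all 64 truth combinations to confirm that dropping the parentheses would not change the result:

```python
from itertools import product
from typing import Dict


def service_appoint(ip: str, host: str) -> Dict[str, str]:
    """Hypothetical Python equivalent of service_appoint.sls.

    ip stands for grains['ip4_interfaces']['eth0'][0], host for grains['host'].
    """
    if ((ip == "172.16.1.11" and host == "salt01")
            or (ip == "172.16.1.12" and host == "salt02")
            or (ip == "172.16.1.13" and host == "salt03")):
        return {"service_appoint": "www"}
    elif ip == "172.16.1.100":
        return {"service_appoint": "mariadb"}
    return {}  # no branch matched: the SLS renders to an empty document


def parens_are_redundant() -> bool:
    """Compare the grouped and ungrouped forms for all 64 inputs."""
    for a, b, c, d, e, f in product([False, True], repeat=6):
        grouped = (a and b) or (c and d) or (e and f)
        ungrouped = a and b or c and d or e and f
        if grouped != ungrouped:
            return False
    return True


if __name__ == "__main__":
    print(service_appoint("172.16.1.12", "salt02"))    # {'service_appoint': 'www'}
    print(service_appoint("172.16.1.100", "salt100"))  # {'service_appoint': 'mariadb'}
    print(parens_are_redundant())                      # True
```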

6.2. The pillar top file [top.sls is required]

Pillar data is only delivered to the minions selected in the top file, so a top file (top.sls) is required.

```
[root@salt100 pillar]# pwd
/srv/pillar
[root@salt100 pillar]# cat top.sls
base:
  '*':
    - web_pillar.service_appoint

  # Use wildcards
  'salt0*':
    - web_pillar.apache
  # Target a specific minion
  'salt03':
    - web_pillar.apache
```
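The glob targets in the top file decide which SLS files each minion receives. The minimal Python sketch below makes two assumptions. First, glob matching behaves like `fnmatch` (case-sensitive here). Second, an SLS listed under several matching targets, like `web_pillar.apache` for salt03, only needs to be applied once, since it renders the same data either way. `TOP` and `sls_for_minion` are hypothetical names:

```python
from fnmatch import fnmatchcase
from typing import Dict, List

# The base environment of the pillar top.sls above, as a Python dict
TOP: Dict[str, List[str]] = {
    "*": ["web_pillar.service_appoint"],
    "salt0*": ["web_pillar.apache"],
    "salt03": ["web_pillar.apache"],
}


def sls_for_minion(top: Dict[str, List[str]], minion_id: str) -> List[str]:
    """Collect the SLS names whose glob target matches minion_id.

    Targets are checked in file order; an SLS listed under several
    matching targets is kept only once in this sketch.
    """
    result: List[str] = []
    for target, sls_list in top.items():
        if fnmatchcase(minion_id, target):
            for sls in sls_list:
                if sls not in result:
                    result.append(sls)
    return result


if __name__ == "__main__":
    for minion in ("salt01", "salt03", "salt100"):
        print(minion, sls_for_minion(TOP, minion))
```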

6.3. Refreshing and viewing pillar data

Suppose you run `salt '*' pillar.items` without first running `salt '*' saltutil.refresh_pillar`. The output already looks up to date, because `pillar.items` compiles fresh pillar data on the master. Querying a specific key with `pillar.item`, however, still returns the old value, because it reads the pillar data already loaded in the minion's memory. So always run the refresh command after changing pillar files.

```
[root@salt100 pillar]# salt '*' saltutil.refresh_pillar    # refresh
salt100:
    True
salt01:
    True
salt02:
    True
salt03:
    True
[root@salt100 pillar]# salt '*' pillar.items    # view the pillar data
salt100:
    ----------
    service_appoint:
        mariadb
salt01:
    ----------
    apache:
        apache3
    service_appoint:
        www
salt03:
    ----------
    apache:
        httpd
    service_appoint:
        www
salt02:
    ----------
    apache:
        httpd
    service_appoint:
        www
```
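The refresh rule can be pictured as a fresh compile versus an in-memory copy. The class below is a toy model of that behaviour only. `ToyMinion` and its methods are hypothetical, not Salt APIs, and the master side is reduced to a callable that returns the current data:

```python
from typing import Any, Callable, Dict

Pillar = Dict[str, Any]


class ToyMinion:
    """Toy model of a minion's pillar handling (not Salt code)."""

    def __init__(self, compile_on_master: Callable[[], Pillar]) -> None:
        self._compile = compile_on_master
        self._in_memory = compile_on_master()  # loaded when the minion starts

    def items(self) -> Pillar:
        # like pillar.items: compiles fresh data every time
        return self._compile()

    def item(self, key: str) -> Any:
        # like pillar.item: reads the in-memory copy, which may be stale
        return self._in_memory.get(key, "")

    def refresh_pillar(self) -> bool:
        # like saltutil.refresh_pillar: replace the in-memory copy
        self._in_memory = self._compile()
        return True


if __name__ == "__main__":
    master_data = {"apache": "httpd"}
    minion = ToyMinion(lambda: dict(master_data))
    master_data["apache"] = "apache3"   # edit the SLS on the master
    print(minion.items()["apache"])      # apache3 (fresh)
    print(minion.item("apache"))         # httpd (stale)
    minion.refresh_pillar()
    print(minion.item("apache"))         # apache3
```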

7. Writing Hierarchical (Nested) Data

7.1. Writing the pillar SLS file

```
[root@salt100 pillar]# cat /srv/pillar/web_pillar/user.sls
level1:
  level2:
    {% if grains['os'] == 'CentOS' %}
    my_user:
      - zhangsan01
      - zhangsan02
    {% elif grains['os'] == 'redhat03' %}
    my_user: lisi001
    {% endif %}
```
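Rendered for one minion, `user.sls` becomes a nested dictionary. The Python equivalent below uses a hypothetical function name. It shows that `my_user` is a list on CentOS minions but a plain string on `redhat03` minions, which is why the `:0` index lookup in section 7.4 only works for the former. A minion matching neither branch still gets `level1` and `level2`, with `level2` left empty (YAML null):

```python
from typing import Any, Dict, Optional


def render_user_pillar(grains: Dict[str, Any]) -> Dict[str, Any]:
    """Hypothetical Python equivalent of user.sls (nested two levels)."""
    level2: Optional[Dict[str, Any]]
    os_name = grains.get("os")
    if os_name == "CentOS":
        level2 = {"my_user": ["zhangsan01", "zhangsan02"]}  # a list
    elif os_name == "redhat03":
        level2 = {"my_user": "lisi001"}                      # a plain string
    else:
        level2 = None  # "level2:" with nothing under it is YAML null
    return {"level1": {"level2": level2}}


if __name__ == "__main__":
    print(render_user_pillar({"os": "CentOS"}))
    print(render_user_pillar({"os": "redhat03"}))
```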

7.2. The pillar top file [top.sls is required]

```
[root@salt100 pillar]# pwd
/srv/pillar
[root@salt100 pillar]# cat top.sls
# The following can be used as-is; SLS files support comments
base:
  '*':
    - web_pillar.service_appoint

  # Use wildcards
  'salt0*':
    - web_pillar.apache
    - web_pillar.user    # reference the new SLS
  # Target a specific minion
  'salt03':
    - web_pillar.apache
    - web_pillar.user    # reference the new SLS
```
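When several SLS files are assigned to the same minion, their keys end up in one pillar. For example, salt03 now gets service_appoint, apache and user. The master configuration dump in section 4.2 shows `pillar_source_merging_strategy: smart` and `pillar_merge_lists: False`. The sketch below assumes this amounts to a recursive dictionary merge in which lists are replaced rather than concatenated. `merge_recurse` is a hypothetical helper, not Salt's implementation:

```python
from typing import Any, Dict


def merge_recurse(base: Dict[str, Any], update: Dict[str, Any]) -> Dict[str, Any]:
    """Return a new dict: nested dicts are merged key by key; any other
    value (str, list, None) from `update` replaces the one in `base`."""
    merged = dict(base)
    for key, value in update.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_recurse(merged[key], value)
        else:
            merged[key] = value
    return merged


if __name__ == "__main__":
    # Rendered output of the three SLS files assigned to salt03
    pillar: Dict[str, Any] = {}
    for sls_data in ({"service_appoint": "www"},
                     {"apache": "httpd"},
                     {"level1": {"level2": {"my_user": ["zhangsan01", "zhangsan02"]}}}):
        pillar = merge_recurse(pillar, sls_data)
    print(pillar)
```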

7.3. Refreshing and viewing pillar data

```
[root@salt100 pillar]# salt '*' saltutil.refresh_pillar    # refresh pillar
………………
[root@salt100 pillar]# salt '*' pillar.items    # view all pillar data
salt03:
    ----------
    apache:
        httpd
    level1:
        ----------    # this line marks a nested level
        level2:
            ----------
            my_user:
                - zhangsan01
                - zhangsan02
    service_appoint:
        www
salt02:
    ----------
    apache:
        httpd
    level1:
        ----------
        level2:
            ----------
            my_user:
                - zhangsan01
                - zhangsan02
    service_appoint:
        www
salt01:
    ----------
    apache:
        apache3
    level1:
        ----------
        level2:
            ----------
            my_user:
                lisi001
    service_appoint:
        www
salt100:
    ----------
    service_appoint:
        mariadb
[root@salt100 pillar]# salt '*' pillar.item level1    # view the data under level1
salt03:
    ----------
    level1:
        ----------
        level2:
            ----------
            my_user:
                - zhangsan01
                - zhangsan02
salt02:
    ----------
    level1:
        ----------
        level2:
            ----------
            my_user:
                - zhangsan01
                - zhangsan02
salt01:
    ----------
    level1:
        ----------
        level2:
            ----------
            my_user:
                lisi001
salt100:
    ----------
    level1:
```

7.4. Viewing Nested Levels

```
[root@salt100 pillar]# salt '*' pillar.item level1:level2    # multi-level access
salt01:
    ----------
    level1:level2:
        ----------
        my_user:
            lisi001
salt03:
    ----------
    level1:level2:
        ----------
        my_user:
            - zhangsan01
            - zhangsan02
salt02:
    ----------
    level1:level2:
        ----------
        my_user:
            - zhangsan01
            - zhangsan02
salt100:
    ----------
    level1:level2:
[root@salt100 pillar]# salt '*' pillar.item level1:level2:my_user    # multi-level access
salt01:
    ----------
    level1:level2:my_user:
        lisi001
salt03:
    ----------
    level1:level2:my_user:
        - zhangsan01
        - zhangsan02
salt02:
    ----------
    level1:level2:my_user:
        - zhangsan01
        - zhangsan02
salt100:
    ----------
    level1:level2:my_user:
[root@salt100 web_pillar]# salt '*' pillar.item level1:level2:my_user:0    # take the first element of the list
salt03:
    ----------
    level1:level2:my_user:0:
        zhangsan01
salt01:
    ----------
    level1:level2:my_user:0:
salt02:
    ----------
    level1:level2:my_user:0:
        zhangsan01
salt100:
    ----------
    level1:level2:my_user:0:
```
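`pillar.item` walks the colon-separated path one level at a time. The sketch below is a simplified, hypothetical re-implementation, not Salt's code. It follows the behaviour visible in the output above: dictionary keys and integer list positions are followed. Anything that cannot be indexed yields the default; salt01's string value `lisi001` is one such case. The empty-string default is an assumption based on the blank values printed for salt01 and salt100:

```python
from typing import Any


def traverse(data: Any, key: str, default: Any = "", delimiter: str = ":") -> Any:
    """Hypothetical colon-delimited lookup, e.g. "level1:level2:my_user:0".

    Each segment indexes a dict by key or a list by integer position;
    a missing key, a bad index, or indexing a scalar returns default.
    """
    ptr = data
    for part in key.split(delimiter):
        if isinstance(ptr, dict):
            if part not in ptr:
                return default
            ptr = ptr[part]
        elif isinstance(ptr, list):
            try:
                ptr = ptr[int(part)]
            except (ValueError, IndexError):
                return default
        else:
            return default  # e.g. a string such as "lisi001"
    return ptr


if __name__ == "__main__":
    salt01 = {"level1": {"level2": {"my_user": "lisi001"}}}
    salt03 = {"level1": {"level2": {"my_user": ["zhangsan01", "zhangsan02"]}}}
    print(traverse(salt03, "level1:level2:my_user:0"))        # zhangsan01
    print(repr(traverse(salt01, "level1:level2:my_user:0")))  # ''
```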

8. Using Pillar

8.1. Querying specific pillar data

```
[root@salt100 pillar]# salt 'salt0*' pillar.item apache    # wildcard matching
salt03:
    ----------
    apache:
        httpd
salt02:
    ----------
    apache:
        httpd
salt01:
    ----------
    apache:
        apache3
[root@salt100 pillar]# salt 'salt0*' pillar.item level1:level2:my_user    # multi-level query
salt01:
    ----------
    level1:level2:my_user:
        lisi
salt02:
    ----------
    level1:level2:my_user:
        zhangsan
salt03:
    ----------
    level1:level2:my_user:
        zhangsan
[root@salt100 web_pillar]# salt '*' pillar.item level1:level2:my_user:0    # take the first element of the list
salt03:
    ----------
    level1:level2:my_user:0:
        zhangsan01
salt01:
    ----------
    level1:level2:my_user:0:
salt02:
    ----------
    level1:level2:my_user:0:
        zhangsan01
salt100:
    ----------
    level1:level2:my_user:0:
```

8.2. Targeting minions through pillar

```
[root@salt100 pillar]# salt -I 'apache:httpd' cmd.run 'echo "zhangliang $(date +%Y)"'    # target minions by pillar value
salt02:
    zhangliang 2018
salt03:
    zhangliang 2018
[root@salt100 pillar]# salt -I 'level1:level2:my_user:lisi' cmd.run 'whoami'    # multi-level pillar matching
salt01:
    root
```
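`salt -I` treats the last colon-separated segment as the value to match and everything before it as the pillar path. The hypothetical `pillar_match` below sketches that idea with `fnmatch` globbing. It splits only at the last colon, so values that themselves contain colons (URLs, for example) are out of scope. Salt's own matcher handles more cases than this:

```python
from fnmatch import fnmatchcase
from typing import Any, Dict


def pillar_match(pillar: Dict[str, Any], expr: str, delimiter: str = ":") -> bool:
    """Hypothetical sketch of pillar targeting such as 'apache:httpd'.

    The last segment is a glob pattern; the segments before it are a
    path into the pillar. A scalar must match the pattern; a list
    matches if any member does.
    """
    *path, pattern = expr.split(delimiter)
    ptr: Any = pillar
    for part in path:
        if not isinstance(ptr, dict) or part not in ptr:
            return False
        ptr = ptr[part]
    if isinstance(ptr, list):
        return any(fnmatchcase(str(item), pattern) for item in ptr)
    if isinstance(ptr, dict):
        return False  # matching against dict keys is out of scope here
    return fnmatchcase(str(ptr), pattern)


if __name__ == "__main__":
    salt01 = {"apache": "apache3", "level1": {"level2": {"my_user": "lisi"}}}
    salt02 = {"apache": "httpd", "level1": {"level2": {"my_user": "zhangsan"}}}
    print(pillar_match(salt02, "apache:httpd"))                 # True
    print(pillar_match(salt01, "apache:httpd"))                 # False
    print(pillar_match(salt01, "level1:level2:my_user:lisi"))   # True
```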

9. Using Pillar in the Top File of State SLS

9.1. top.sls

```
[root@salt100 salt]# pwd
/srv/salt
[root@salt100 salt]# cat top.sls
base:
  # Using pillar matching, add the following lines
  'level1:level2:my_user':
    - match: pillar
    - web.apache
```
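In the state top file above, `'level1:level2:my_user'` with `- match: pillar` presumably selects the minions whose pillar contains `my_user` under `level1:level2`. The sketch below assumes exactly that key-existence reading. Check it on your own Salt version first, for example with `salt -I 'level1:level2:my_user' test.ping`. The sketch uses a subset of the pillar data from section 7.3; `PILLARS`, `has_pillar_key` and `states_for` are hypothetical names:

```python
from typing import Any, Dict, List

# A subset of the per-minion pillar data shown in section 7.3
PILLARS: Dict[str, Dict[str, Any]] = {
    "salt01": {"level1": {"level2": {"my_user": "lisi001"}}},
    "salt02": {"level1": {"level2": {"my_user": ["zhangsan01", "zhangsan02"]}}},
    "salt100": {"service_appoint": "mariadb"},
}


def has_pillar_key(pillar: Dict[str, Any], expr: str) -> bool:
    """Assumed semantics: True when every colon-separated segment of
    expr is a key of the dict reached so far."""
    ptr: Any = pillar
    for part in expr.split(":"):
        if not isinstance(ptr, dict) or part not in ptr:
            return False
        ptr = ptr[part]
    return True


def states_for(top_entry: str, states: List[str]) -> Dict[str, List[str]]:
    """Map each matching minion to the states a `match: pillar` entry assigns."""
    return {minion: list(states) for minion, pillar in PILLARS.items()
            if has_pillar_key(pillar, top_entry)}


if __name__ == "__main__":
    print(states_for("level1:level2:my_user", ["web.apache"]))
```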

9.2. state.highstate execution

```
[root@salt100 salt]# salt 'salt01' state.highstate test=True    # dry run (test mode)
[root@salt100 salt]# salt 'salt01' state.highstate              # actual run
```