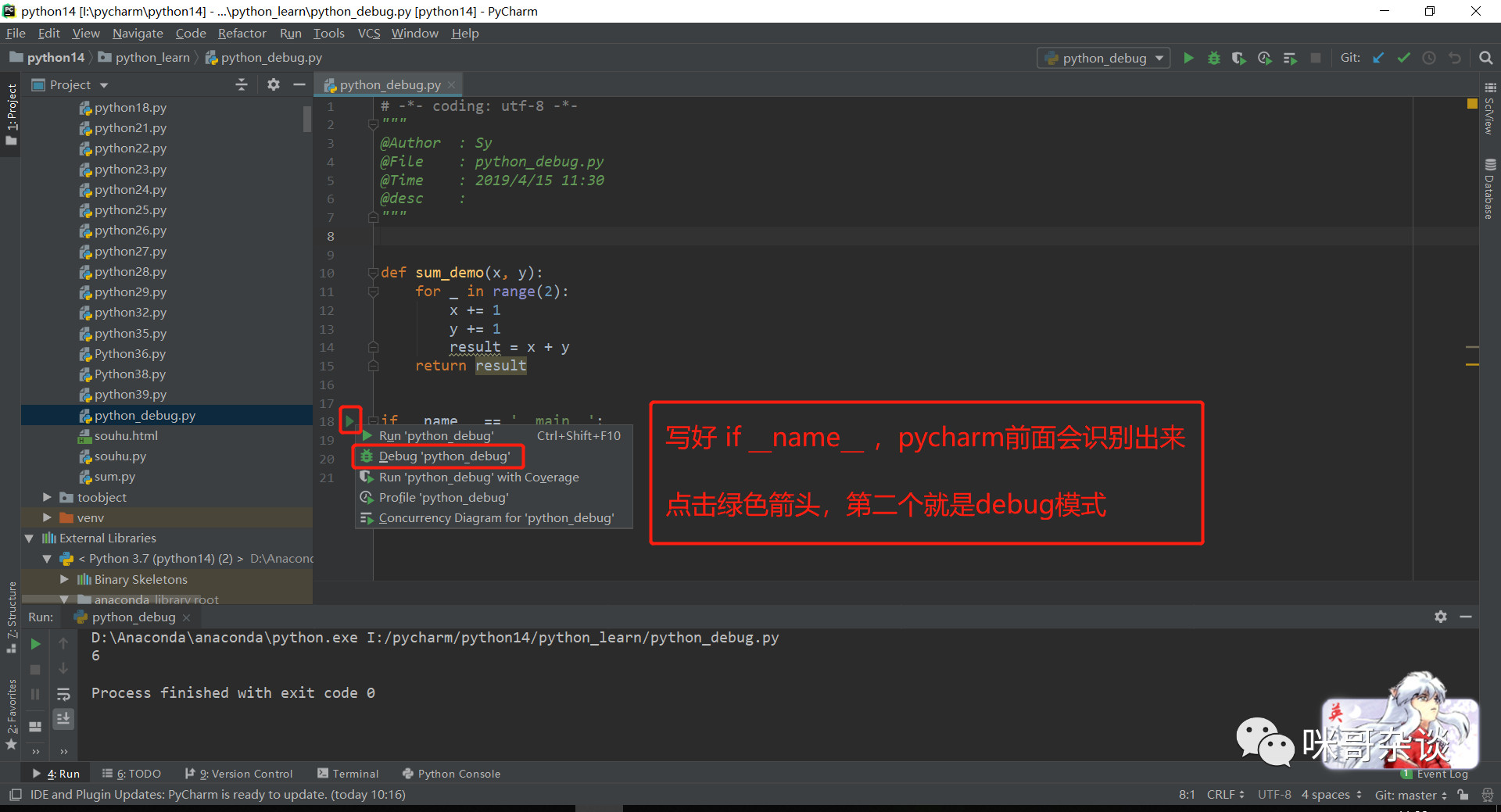

1. Debug the program with breakpoints in PyCharm to understand what each line of code does

How to start debugging:

Run the script in Debug mode from its `if __name__ == '__main__':` entry point (see the figure below, referenced from https://mp.weixin.qq.com/s/YNfoI-KbUg6jLaWUya-21g).

Why set breakpoints: a breakpoint marks a line in the code where the running program should pause. When execution reaches that line, the program is suspended, and you can inspect the values of all variables computed up to that point.

Common shortcut keys:

Step over (F8): executes the current line as a single step. If the line calls a sub-function, the debugger does not enter it; the whole call runs as one step. When the line contains no function call, it behaves the same as Step into. In short, the sub-function still executes, but the debugger steps over it rather than into it.

Step into (F7): executes the current line and, if it calls a function, enters that function and keeps stepping inside it. This can also jump into library source code.

Step into my code (Alt+Shift+F7): like Step into, it enters the functions you call, but only your own code; it does not step into library source code.

Step out (Shift+F8): if you have stepped into a function body and don't want to walk through the rest of it, Step out runs the remainder of the current function and returns to the line that called it.

Resume program (F9): continues running the program until it reaches the next breakpoint (or finishes).

The general workflow: set breakpoints, start debugging, then press F8 to step through line by line. When you reach a function you want to inspect, press F7 to step into it; once you understand it, press Shift+F8 to step out. To skip code you don't care about, set a breakpoint at the next place of interest and press F9.
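To practice this workflow, here is a tiny script (hypothetical, not from the original post; `square` and `main` are made-up names). Set a breakpoint on the `total += square(i)` line, start debugging, and compare F8 (runs `square` as one step), F7 (enters `square`), and Shift+F8 (returns to the loop).

```python
def square(x):
    # Step into (F7) on the call in main() lands here
    result = x * x
    return result


def main():
    total = 0
    for i in range(3):
        # Put a breakpoint here; Step over (F8) runs square(i) as one step
        total += square(i)
    return total


if __name__ == '__main__':
    print(main())  # prints 5 (0 + 1 + 4)
```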

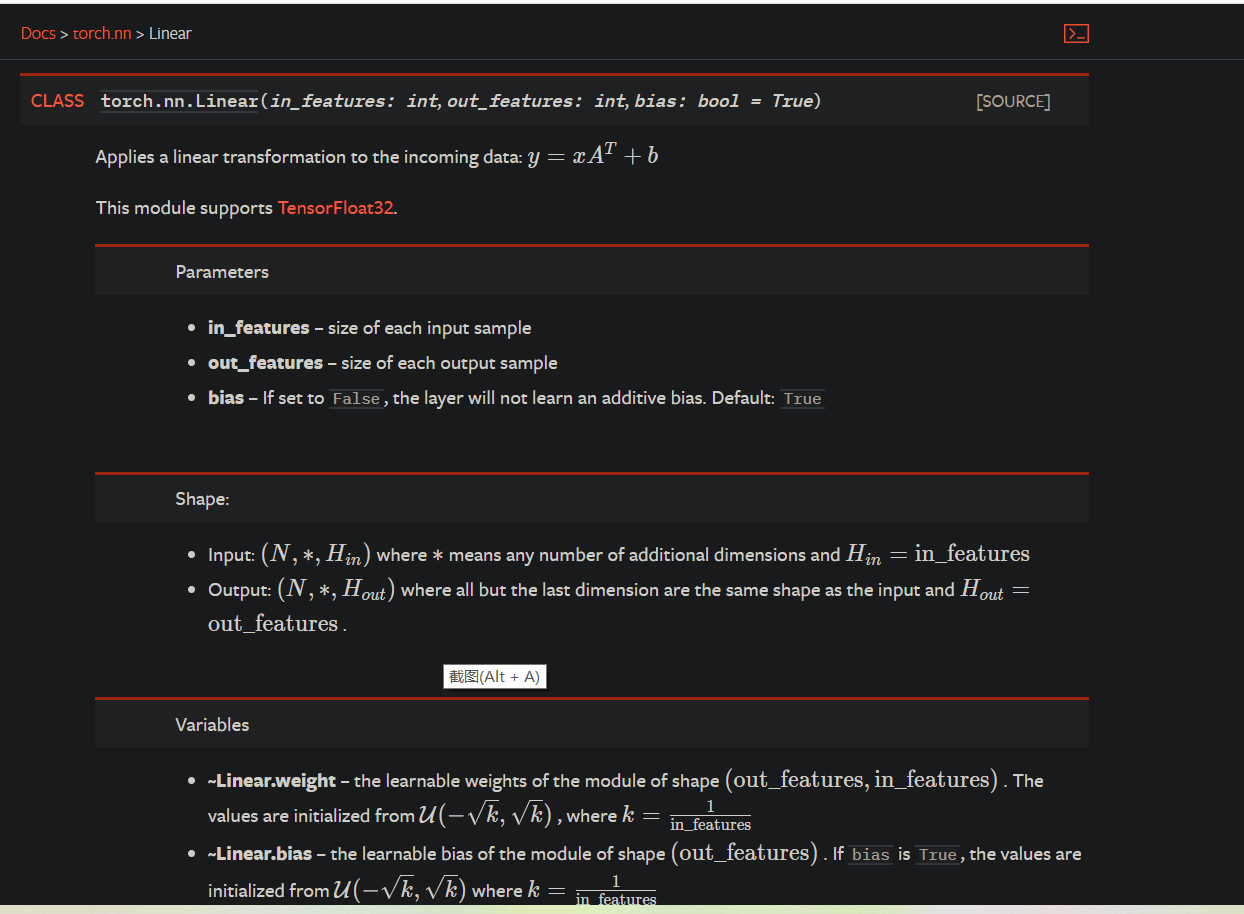

2. Fully connected layer: `nn.Linear` usage (explained clearly in the official documentation)
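A minimal sketch of `nn.Linear`, assuming an input of 3 samples with 5 features each. The layer stores `weight` with shape `(out_features, in_features)` and `bias` with shape `(out_features,)`, and computes `y = x @ weight.T + bias`; the last line checks that by hand.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)  # fixed seed only so reruns give the same numbers

fc = nn.Linear(in_features=5, out_features=2)
print(fc.weight.shape)  # torch.Size([2, 5])
print(fc.bias.shape)    # torch.Size([2])

x = torch.rand(3, 5)    # 3 samples, 5 features each
y = fc(x)
print(y.shape)          # torch.Size([3, 2])

# Same computation written out explicitly
manual = x @ fc.weight.T + fc.bias
print(torch.allclose(y, manual))  # True
```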

3. Using nn.Module

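To build a neural network in PyTorch, you define a class that inherits from `torch.nn.Module`. The general structure is as follows (`helper` is a placeholder name for any extra method you need):

```python
import torch.nn as nn

class Model(nn.Module):
    def __init__(self):
        super().__init__()
        # Define the layers here, e.g. self.fc = nn.Linear(...)
        ...

    def helper(self, x):
        # Optional extra methods (placeholder name)
        ...
        return x

    def forward(self, inputdata):
        # Describe how the input flows through the layers
        ...
        return inputdata
```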

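In essence it is still an ordinary Python class, but inheriting from `nn.Module` gives it some special behavior: layers assigned as attributes are registered as the model's parameters, and calling the instance, as in `test(inputdata)`, runs its `forward` method. A basic example makes this clear: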

```python
import torch
import torch.nn as nn

# The model input is a 3 x 5 matrix (3 samples, 5 features each)
inputdata = torch.rand(3, 5)

class Testnet(nn.Module):
    def __init__(self):
        super().__init__()
        # Fully connected layer: 5 input features -> 2 output features
        self.fc = nn.Linear(5, 2)

    def forward(self, inputdata):
        out = self.fc(inputdata)
        return out

test = Testnet()
out = test(inputdata)  # calling the module runs forward()
print(out)
```

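Output (the numbers differ on every run, since both the input and the layer's initial weights are random):

```
tensor([[-0.7191,  0.6140],
        [-0.1250,  0.7199],
        [-0.0656,  0.5232]], grad_fn=<AddmmBackward>)
```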
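To see those special properties concretely, here is a short sketch that redefines `Testnet` so it runs on its own: the `nn.Linear` attribute shows up as registered parameters, and calling the instance gives the same result as calling `forward()` directly, because `nn.Module.__call__` dispatches to it.

```python
import torch
import torch.nn as nn

class Testnet(nn.Module):
    def __init__(self):
        super().__init__()
        self.fc = nn.Linear(5, 2)

    def forward(self, inputdata):
        return self.fc(inputdata)

test = Testnet()

# Assigning an nn.Linear as an attribute registers its weight and bias
for name, p in test.named_parameters():
    print(name, tuple(p.shape))
# fc.weight (2, 5)
# fc.bias (2,)

x = torch.rand(3, 5)
# test(x) goes through nn.Module.__call__, which runs forward()
print(torch.equal(test(x), test.forward(x)))  # True
```

In practice, call `test(x)` rather than `test.forward(x)`, since `__call__` also runs any hooks registered on the module.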

4. Understanding multidimensional tensors from torch.rand() and torch.randn()

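`torch.randn(2, 3, 4, 5)` creates a 4-dimensional tensor (`torch.randn` samples from the standard normal distribution, `torch.rand` uniformly from [0, 1); both take the shape the same way). Read the sizes from the inside out: the last two, 4 and 5, give the innermost 4 x 5 matrices; 3 is the next layer out; and 2 is the outermost layer. In bracket terms: the outermost level holds 2 blocks (each opening with `[[[`), each block holds 3 matrices (each opening with `[[`), and each matrix holds 4 rows (each opening with `[`) of 5 numbers. The printed result looks like this: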

```
tensor([[[[-0.5819,  1.0541,  1.2122,  0.1487, -1.3239],
          [ 1.1498,  0.1537,  1.3365, -0.5458,  2.4623],
          [-0.2419,  0.9619, -1.7176,  0.6234, -0.1420],
          [ 0.2708,  1.2968,  0.3590,  1.4835, -0.4068]],

         [[-1.5560,  1.2271,  0.1556, -0.7206, -3.6874],
          [-1.2283, -0.4955, -0.0591,  0.7332, -0.3467],
          [-1.0715, -0.8225, -0.3180, -0.9774, -0.6425],
          [ 0.0962, -0.4811, -1.2161,  0.6909, -0.4036]],

         [[ 1.9039,  0.0585,  0.5491, -0.3894,  0.0350],
          [-0.1628,  0.0697, -0.2491,  1.1777,  1.3530],
          [-0.3784, -0.0743, -0.6657, -0.5710,  0.2267],
          [-1.9573,  0.1118,  1.4209,  0.3095, -1.0523]]],


        [[[ 1.1964,  0.8547, -0.7742, -0.5260, -0.1902],
          [-0.2960,  0.7014, -0.1351,  1.3705,  0.9462],
          [-0.4928,  0.3687, -0.8138, -0.3793,  1.2148],
          [ 0.7936,  0.6168, -0.3903,  0.4030, -1.4236]],

         [[-0.5191, -1.3978, -0.7809,  0.1161, -0.5701],
          [ 1.7385, -0.8792, -0.7399,  0.4146, -0.2882],
          [ 1.6423, -0.2982,  0.5043,  0.8092,  1.5948],
          [ 1.6171,  0.2906, -0.2790, -0.4758, -1.4615]],

         [[-0.8722,  0.7420,  0.3168,  0.9529, -0.7665],
          [-0.4354, -0.4272,  0.7883, -2.2822, -0.2489],
          [-0.3527, -0.9323,  0.2115,  0.6318,  0.6811],
          [-0.6773, -0.3727,  0.2425, -1.0979, -0.7501]]]])
```
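A quick way to check this nesting is to index into the tensor level by level: each index peels off the outermost dimension. This is a sketch; the values are random, so only the shapes (and the value range for `torch.rand`) are fixed.

```python
import torch

x = torch.randn(2, 3, 4, 5)   # standard-normal samples
print(x.dim())                # 4
print(x.shape)                # torch.Size([2, 3, 4, 5])
print(x[0].shape)             # torch.Size([3, 4, 5]): one of the 2 outermost blocks
print(x[0, 0].shape)          # torch.Size([4, 5]): one 4 x 5 matrix
print(x[0, 0, 0].shape)       # torch.Size([5]): one row of 5 numbers
print(x.numel())              # 120 = 2 * 3 * 4 * 5

u = torch.rand(2, 3, 4, 5)    # uniform samples, same shape rules
print(bool(((u >= 0) & (u < 1)).all()))  # True: every value lies in [0, 1)
```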

5. Meaning of the torch.nn.Softmax parameter (`dim`)