1, VirtualService (rich routing control)

1.1 URL redirection (redirect and rewrite)

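- Redirect: the proxy does not forward the request. It answers the client with an HTTP redirect response (301 in the experiment below) whose location points at the new address, and the client then requests that address itself.

- Rewrite: the proxy rewrites the request URI before forwarding the request upstream. The client is unaware of the change, and the upstream service sees only the rewritten path.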
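The difference between the two actions can be sketched in a few lines of Python. This is a toy model, not Istio code: `RULES`, `handle`, and the returned tuples are hypothetical names, the rules mirror the `/canary` and `/backend` policies used in the case that follows, and 301 is assumed as the redirect status.

```python
# Toy model of the two actions; handle() and the rule layout are illustrative only.
RULES = [
    {"prefix": "/canary", "action": "rewrite", "uri": "/"},
    {"prefix": "/backend", "action": "redirect", "uri": "/",
     "authority": "backend", "port": 8082},
]

def handle(path):
    """Return what the proxy does with a request path (first matching rule wins)."""
    for rule in RULES:
        if not path.startswith(rule["prefix"]):
            continue
        if rule["action"] == "rewrite":
            # Rewrite: the matched prefix is replaced and the request is forwarded upstream.
            new_path = rule["uri"] + path[len(rule["prefix"]):]
            return ("forward", new_path)
        # Redirect: nothing is forwarded; the client is told where to go next.
        location = "http://{}:{}{}".format(rule["authority"], rule["port"], rule["uri"])
        return ("respond", 301, location)
    return ("forward", path)  # default route: forward unchanged

print(handle("/canary"))   # ('forward', '/')
print(handle("/backend"))  # ('respond', 301, 'http://backend:8082/')
print(handle("/"))         # ('forward', '/')
```

With a rewrite the client receives the upstream response directly; with a redirect it receives only the new location and has to issue a second request.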

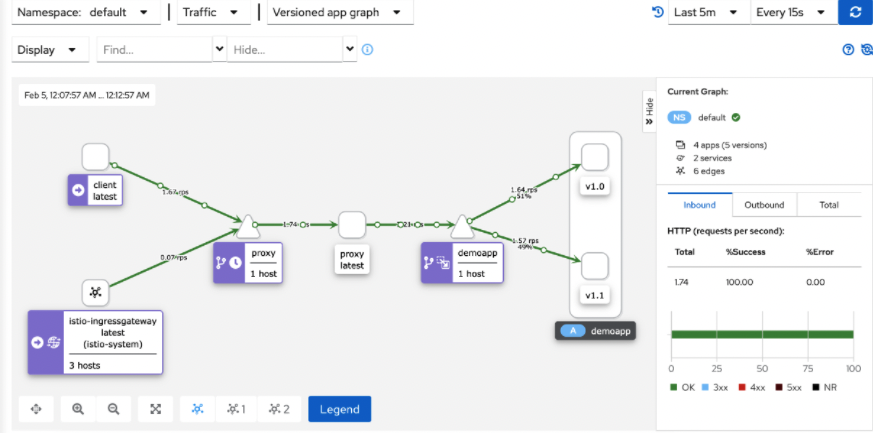

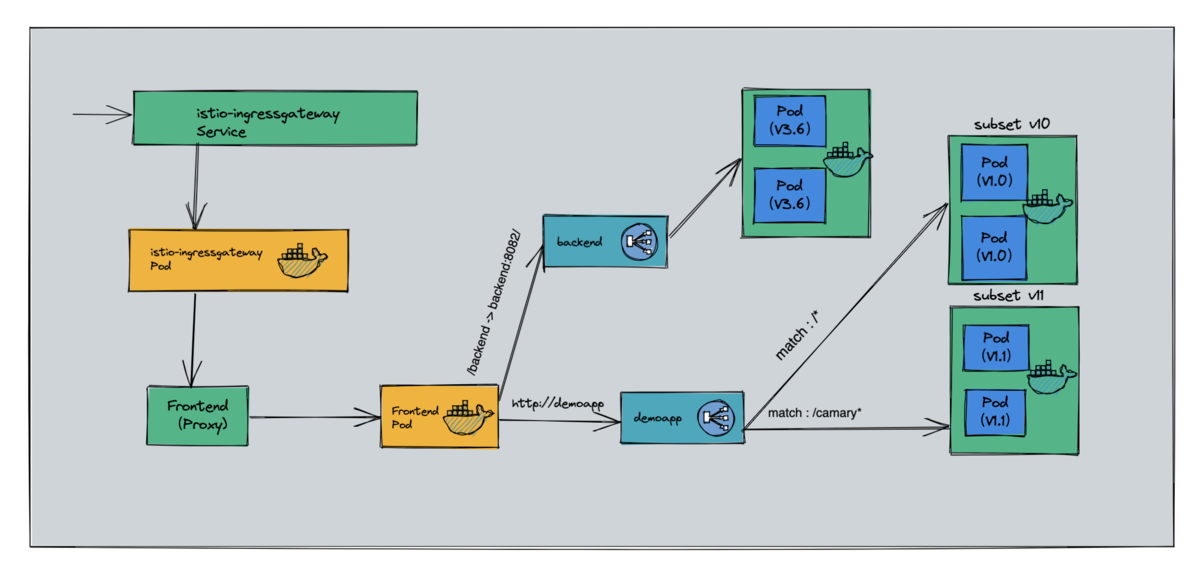

1.1.1. Case illustration

- proxy-gateway -> virtualservices/proxy -> virtualservices/demoapp(/backend) -> backend:8082 (Cluster)

1.1.2 case experiment

1. Add routing policies to the VirtualService

# cat virtualservice-demoapp.yaml

apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: demoapp
spec:
  hosts:
  - demoapp   # Must match the host of the default route generated for the back-end Service (the DOMAINS column of `istioctl proxy-config routes $DEMOAPP`)
  # Layer 7 (HTTP) routing
  http:
  # Rewrite rule: http://demoapp/canary -rewrite-> http://demoapp/
  - name: rewrite
    match:
    - uri:
        prefix: /canary
    rewrite:
      uri: /
    # Route target
    route:
    # Send to the v11 subset of the demoapp cluster
    - destination:
        host: demoapp
        subset: v11
  # Redirect rule: http://demoapp/backend -redirect-> http://backend:8082/
  - name: redirect
    match:
    - uri:
        prefix: "/backend"
    redirect:
      uri: /
      authority: backend   # Host the client is redirected to, so a backend Service must exist
      port: 8082
  - name: default
    # Route target
    route:
    # Send to the v10 subset of the demoapp cluster
    - destination:
        host: demoapp
        subset: v10

2. Deploy the backend service

# cat deploy-backend.yaml

---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: backend
    version: v3.6
  name: backendv36
spec:
  progressDeadlineSeconds: 600
  replicas: 2
  selector:
    matchLabels:
      app: backend
      version: v3.6
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: backend
        version: v3.6
    spec:
      containers:
      - image: registry.cn-wulanchabu.aliyuncs.com/daizhe/gowebserver:v0.1.0
        imagePullPolicy: IfNotPresent
        name: gowebserver
        env:
        - name: "SERVICE_NAME"
          value: "backend"
        - name: "SERVICE_PORT"
          value: "8082"
        - name: "SERVICE_VERSION"
          value: "v3.6"
        ports:
        - containerPort: 8082
          name: web
          protocol: TCP
        resources:
          limits:
            cpu: 50m
---
apiVersion: v1
kind: Service
metadata:
  name: backend
spec:
  ports:
  - name: http-web
    port: 8082
    protocol: TCP
    targetPort: 8082
  selector:
    app: backend
    version: v3.6

# kubectl apply -f deploy-backend.yaml

deployment.apps/backendv36 created

service/backend created

# kubectl get pods

NAME READY STATUS RESTARTS AGE

backendv36-64bd9dd97c-2qtcj 2/2 Running 0 7m47s

backendv36-64bd9dd97c-wmtd9 2/2 Running 0 7m47s

...

# kubectl get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

backend ClusterIP 10.100.168.13 <none> 8082/TCP 8m1s

...

3. View the routing policy on proxy

# istioctl proxy-config routes proxy-6b567ff76f-27c4k | grep -E "demoapp|backend"

# The route listed for backend does not yet show any redirect

8082 backend, backend.default + 1 more... /*

8080 demoapp, demoapp.default + 1 more... /canary* demoapp.default

8080 demoapp, demoapp.default + 1 more... /* demoapp.default

80 demoapp.default.svc.cluster.local /canary* demoapp.default

80 demoapp.default.svc.cluster.local /* demoapp.default

4. Access the frontend proxy from a client inside the cluster (note: mesh traffic scheduling actually takes effect on the egress listener)

# kubectl run client --image=registry.cn-wulanchabu.aliyuncs.com/daizhe/admin-box -it --rm --restart=Never --command -- /bin/sh

If you don't see a command prompt, try pressing enter.

root@client # curl proxy

Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-fdwf4, ServerIP: 10.220.104.187!

- Took 331 milliseconds.

root@client # curl proxy/canary

Proxying value: iKubernetes demoapp v1.1 !! ClientIP: 127.0.0.6, ServerName: demoappv11-7984f579f5-qsw5z, ServerIP: 10.220.104.166!

- Took 40 milliseconds.

# The redirect for /backend is defined on the demoapp VirtualService, so it applies only to requests addressed to demoapp. The client here calls proxy, and proxy has no rule for /backend, so the request is not redirected to backend. A routing rule has to be defined on the service that the client actually requests;

root@client # curl proxy/backend

Proxying value: <!DOCTYPE HTML PUBLIC "-//W3C//DTD HTML 3.2 Final//EN">

<title>404 Not Found</title>

<h1>Not Found</h1>

<p>The requested URL was not found on the server. If you entered the URL manually please check your spelling and try again.</p>

- Took 41 milliseconds.

5. Define the backend redirect policy on the frontend proxy by creating a VirtualService for proxy inside the mesh (client -> frontend proxy -redirect-> backend)

# cat virtualservice-proxy.yaml

apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: proxy
spec:
  hosts:
  - proxy
  http:
  - name: redirect
    match:
    - uri:
        prefix: "/backend"
    redirect:
      uri: /
      authority: backend
      port: 8082
  - name: default
    route:
    - destination:
        host: proxy

# kubectl apply -f virtualservice-proxy.yaml

virtualservice.networking.istio.io/proxy configured

# istioctl proxy-config routes proxy-6b567ff76f-27c4k | grep -E "demoapp|backend"

8082 backend, backend.default + 1 more... /*

8080 demoapp, demoapp.default + 1 more... /canary* demoapp.default

8080 demoapp, demoapp.default + 1 more... /* demoapp.default

80 demoapp.default.svc.cluster.local /canary* demoapp.default

80 demoapp.default.svc.cluster.local /* demoapp.default

80 proxy, proxy.default + 1 more... /backend* proxy.default

6. Test again: access the frontend proxy from the in-cluster client

# kubectl run client --image=registry.cn-wulanchabu.aliyuncs.com/daizhe/admin-box -it --rm --restart=Never --command -- /bin/sh

If you don't see a command prompt, try pressing enter.

root@client # curl proxy

Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-fdwf4, ServerIP: 10.220.104.187!

- Took 331 milliseconds.

root@client # curl proxy/canary

Proxying value: iKubernetes demoapp v1.1 !! ClientIP: 127.0.0.6, ServerName: demoappv11-7984f579f5-qsw5z, ServerIP: 10.220.104.166!

root@client # curl -I proxy/backend

HTTP/1.1 301 Moved Permanently

location: http://backend:8082/

date: Sat, 29 Jan 2022 04:32:08 GMT

server: envoy

transfer-encoding: chunked

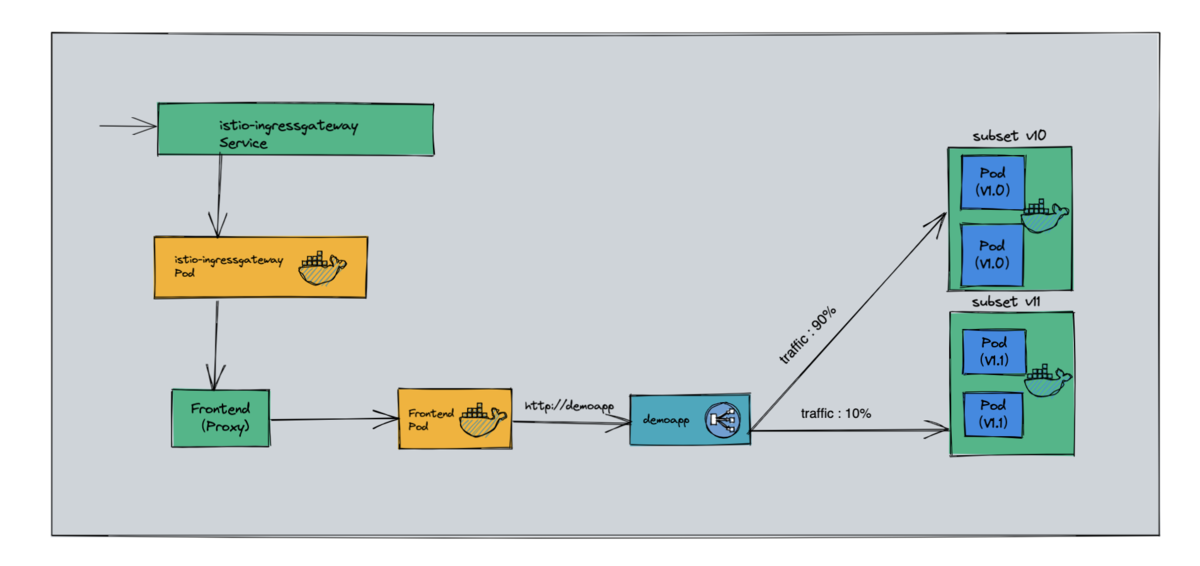

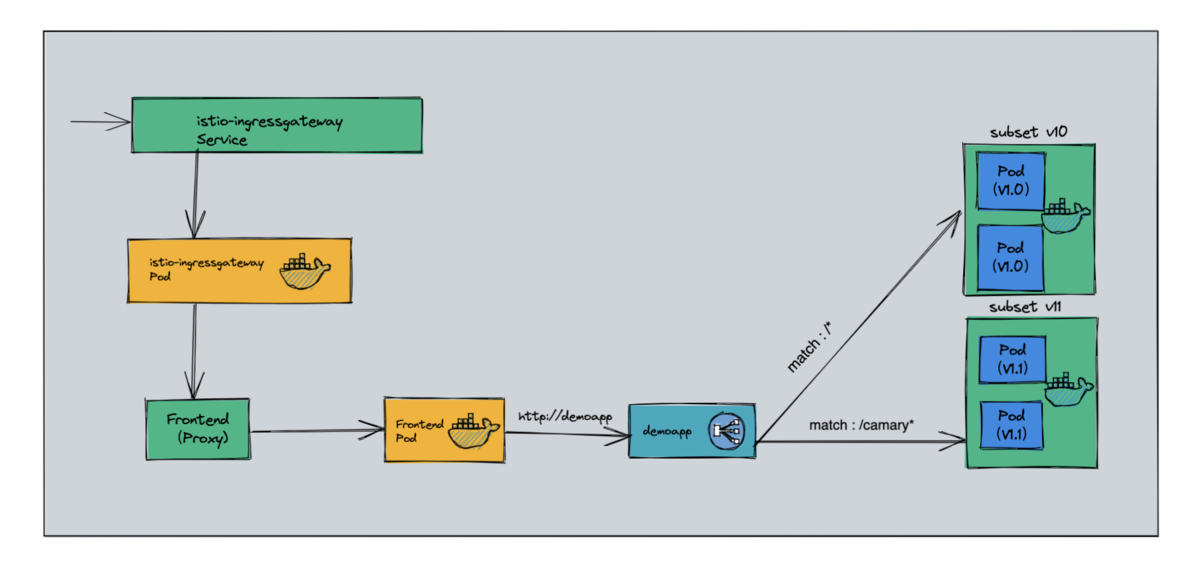

1.2. Traffic splitting (weight-based routing)

1.2.1 case diagram

1.2.2 case experiment

1. Define the VirtualService

# cat virtualservice-demoapp.yaml

apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: demoapp
spec:
  # Advanced configuration for the virtual host generated automatically for demoapp.
  # Must match the DOMAINS column of `istioctl proxy-config routes $DEMOAPP`.
  # Applies to requests addressed to the demoapp service
  hosts:
  - demoapp
  # Layer 7 (HTTP) routing
  http:
  # Routing rules form a list; more than one can be defined
  # Name of the route
  - name: weight-based-routing
    # Route targets: no match condition is set, so all traffic is split between the two subsets by weight
    route:
    - destination:
        host: demoapp   # Send to the v10 subset of the demoapp cluster
        subset: v10
      weight: 90        # Carries 90% of the traffic
    - destination:
        host: demoapp   # Send to the v11 subset of the demoapp cluster
        subset: v11
      weight: 10        # Carries 10% of the traffic

# kubectl apply -f virtualservice-demoapp.yaml

virtualservice.networking.istio.io/demoapp created
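The 90/10 split configured above can be pictured as a weighted random choice made per request. The following is a minimal Python simulation under that assumption; `WEIGHTS` and `pick_subset` are hypothetical names, and the sketch says nothing about how Envoy implements the selection internally.

```python
import random

# Subset weights copied from the VirtualService; pick_subset() is a hypothetical helper.
WEIGHTS = {"v10": 90, "v11": 10}

def pick_subset(rng):
    """Choose a subset with probability proportional to its weight."""
    subsets = list(WEIGHTS)
    return rng.choices(subsets, weights=[WEIGHTS[s] for s in subsets], k=1)[0]

rng = random.Random(0)  # fixed seed so the run is repeatable
counts = {"v10": 0, "v11": 0}
for _ in range(10000):
    counts[pick_subset(rng)] += 1

share_v11 = counts["v11"] / 10000
print(counts, share_v11)
```

A handful of requests can deviate noticeably from 9:1; the ratio only becomes visible once the sample is large.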

2. Check whether the VirtualService has taken effect

# DEMOAPP=$(kubectl get pods -l app=demoapp -o jsonpath='{.items[0].metadata.name}')

# echo $DEMOAPP

demoappv10-5c497c6f7c-24dk4

# istioctl proxy-config routes $DEMOAPP | grep demoapp

80 demoapp.default.svc.cluster.local /* demoapp.default

8080 demoapp, demoapp.default + 1 more... /* demoapp.default

3. Access the frontend proxy from the client (note: mesh traffic scheduling actually takes effect on the egress listener)

# 9:1 ratio

# kubectl run client --image=registry.cn-wulanchabu.aliyuncs.com/daizhe/admin-box -it --rm --restart=Never --command -- /bin/sh

If you don't see a command prompt, try pressing enter.

root@client # curl proxy

Proxying value: iKubernetes demoapp v1.1 !! ClientIP: 127.0.0.6, ServerName: demoappv11-7984f579f5-9bzmv, ServerIP: 10.220.104.177!

- Took 230 milliseconds.

root@client # curl proxy

Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-24dk4, ServerIP: 10.220.104.155!

- Took 38 milliseconds.

root@client # curl proxy

Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-ks5hk, ServerIP: 10.220.104.133!

- Took 22 milliseconds.

root@client # curl proxy

Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-fdwf4, ServerIP: 10.220.104.150!

- Took 22 milliseconds.

root@client # curl proxy

Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-24dk4, ServerIP: 10.220.104.155!

- Took 15 milliseconds.

root@client # curl proxy

Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-ks5hk, ServerIP: 10.220.104.133!

- Took 6 milliseconds.

root@client # curl proxy

Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-fdwf4, ServerIP: 10.220.104.150!

- Took 13 milliseconds.

root@client # curl proxy

Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-24dk4, ServerIP: 10.220.104.155!

- Took 4 milliseconds.

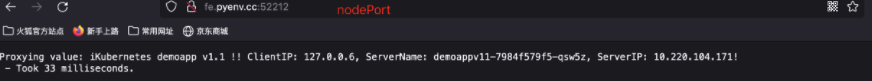

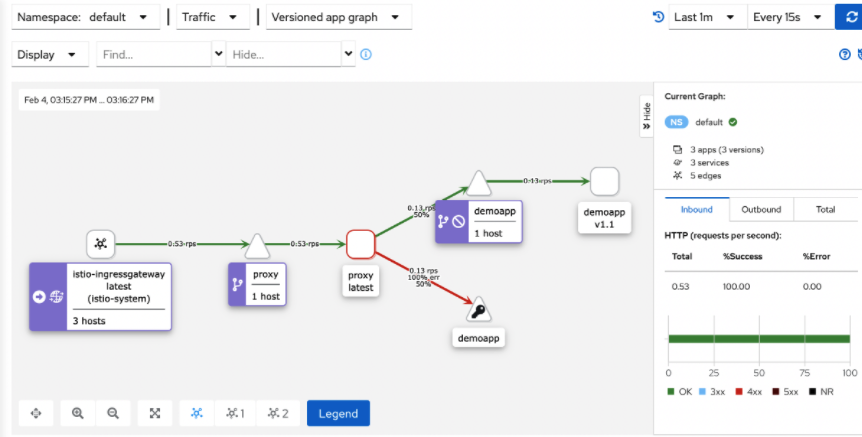

- 4. Access proxy from outside the cluster (9:1)

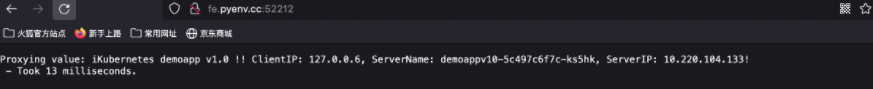

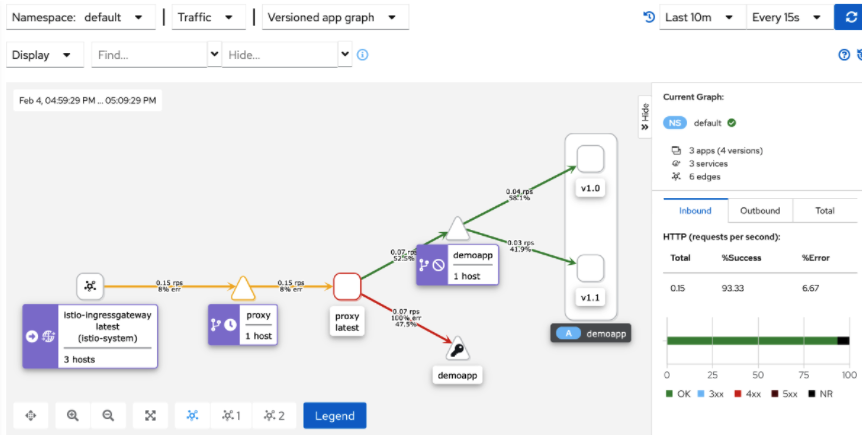

- 5. The Kiali graph renders the traffic in real time (with a large enough sample, the traffic ratio is 9:1)

Cluster internal access

Cluster external access

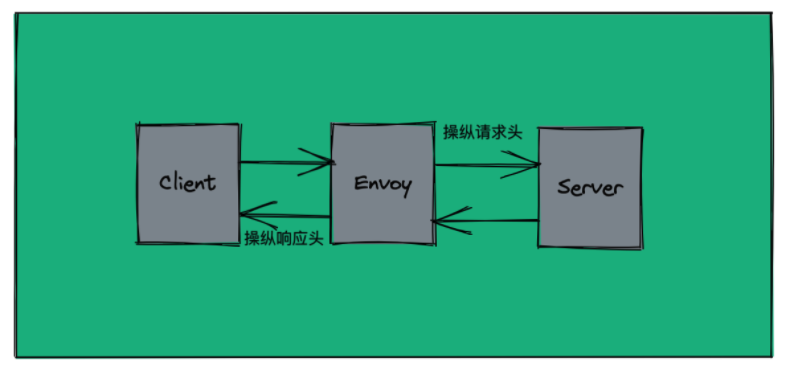

1.3. Header manipulation (request and response headers)

- The headers field gives Istio a way to manipulate HTTP headers, both the request headers and the response headers of an HTTP exchange;

- The headers field supports two embedded fields: request and response;

  - request: manipulates the headers of the request sent to the destination;

  - response: manipulates the headers of the response sent to the client;

- Both fields are of type HeaderOperations, which supports the set, add, and remove fields for manipulating the specified headers:

  - set: overwrite the corresponding header in the request or response with the keys and values of the map;

  - add: append the keys and values of the map to the existing headers;

  - remove: delete the headers named in the list;
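The semantics of set, add, and remove can be illustrated with a small Python helper. This is only a sketch: `apply_header_ops` is a hypothetical function, headers are modeled as a mapping from lowercase name to a list of values, and the order in which the three operations are applied is an assumption of the sketch rather than documented proxy behavior.

```python
def apply_header_ops(headers, set_=None, add=None, remove=None):
    """Apply set/add/remove to a {name: [values]} mapping and return a new mapping."""
    result = {name.lower(): list(values) for name, values in headers.items()}
    for name, value in (set_ or {}).items():
        result[name.lower()] = [value]                      # set: overwrite any existing value
    for name, value in (add or {}).items():
        result.setdefault(name.lower(), []).append(value)   # add: append, keep existing values
    for name in (remove or []):
        result.pop(name.lower(), None)                      # remove: drop the header if present
    return result

request = {"User-Agent": ["curl/7.67.0"], "Accept": ["*/*"]}
print(apply_header_ops(request, set_={"User-Agent": "Chrome"}))
# {'user-agent': ['Chrome'], 'accept': ['*/*']}

response = {"server": ["envoy"]}
print(apply_header_ops(response, add={"x-canary": "true"}, remove=["server"]))
# {'x-canary': ['true']}
```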

1.3.1 case diagram

- Traffic request

- Client <-> SidecarProxy Envoy <-> ServerPod

1.3.2 case experiment

1. Define a VirtualService that manipulates the request and response headers for the demoapp service

# cat virtualservice-demoapp.yaml

apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: demoapp
spec:
  # Hosts: applies to requests addressed to the demoapp service
  hosts:
  - demoapp
  # Layer 7 (HTTP) routing
  http:
  # Routing rules form a list; more than one can be defined
  # Name of the route
  - name: canary
    # Match conditions: if the request carries the header x-canary: true, route it to the v11 subset,
    # set User-Agent: Chrome on the request, and add x-canary: true to the response
    match:
    # Match on a request header
    - headers:
        x-canary:
          exact: "true"
    # Route target
    route:
    - destination:
        host: demoapp
        subset: v11
      # Header manipulation
      headers:
        # Request headers
        request:
          # Overwrite the header value
          set:
            User-Agent: Chrome
        # Response headers
        response:
          # Add a new header
          add:
            x-canary: "true"
  # Default route: requests that do not match the condition above are sent to the v10 subset
  - name: default
    # Header manipulation: defined at the route-rule level, so it applies to every destination of the default route
    headers:
      # Response headers
      response:
        # Add X-Envoy: test to the response sent to the downstream client
        add:
          X-Envoy: test
    # Route target
    route:
    - destination:
        host: demoapp
        subset: v10

# kubectl apply -f virtualservice-demoapp.yaml

virtualservice.networking.istio.io/demoapp configured

2. To verify the header manipulation, send requests from the client directly to demoapp

# Exercise the header match condition: a request carrying x-canary: true is routed to the demoapp v11 subset

root@client /# curl -H "x-canary: true" demoapp:8080

iKubernetes demoapp v1.1 !! ClientIP: 127.0.0.6, ServerName: demoappv11-7984f579f5-msn2w, ServerIP: 10.220.104.159!

root@client /# curl -H "x-canary: true" demoapp:8080

iKubernetes demoapp v1.1 !! ClientIP: 127.0.0.6, ServerName: demoappv11-7984f579f5-9pm74, ServerIP: 10.220.104.164!

# View response header

root@client /# curl -I -H "x-canary: true" demoapp:8080

HTTP/1.1 200 OK

content-type: text/html; charset=utf-8

content-length: 116

server: envoy

date: Fri, 04 Feb 2022 05:49:44 GMT

x-envoy-upstream-service-time: 2

x-canary: true

# Check the User-Agent of the request after manipulation

root@client /# curl -H "x-canary: true" demoapp:8080/user-agent

User-Agent: Chrome

---

# Requests without the header take the default route to the demoapp v10 subset

# The User-Agent is left unchanged

root@client /# curl demoapp:8080/user-agent

User-Agent: curl/7.67.0

# The response sent to the downstream client has the header x-envoy: test added

root@client /# curl -I demoapp:8080/user-agent

HTTP/1.1 200 OK

content-type: text/html; charset=utf-8

content-length: 24

server: envoy

date: Fri, 04 Feb 2022 05:53:09 GMT

x-envoy-upstream-service-time: 18

x-envoy: test

1.4 Fault injection (testing service resilience)

1.4.1 case diagram

1.4.2 case experiment

1. Inject faults into demoapp through the VirtualService

# cat virtualservice-demoapp.yaml

apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: demoapp
spec:
  hosts:
  - demoapp
  http:
  - name: canary
    match:
    - uri:
        prefix: /canary
    rewrite:
      uri: /
    route:
    - destination:
        host: demoapp
        subset: v11
    # Fault injection
    fault:
      # Fault type: abort
      abort:
        # Share of traffic that receives the fault
        percentage:
          # Inject the fault into 20% of the traffic
          value: 20
        # Status code returned to the client for aborted requests
        httpStatus: 555
  - name: default
    route:
    - destination:
        host: demoapp
        subset: v10
    # Fault injection
    fault:
      # Fault type: delay
      delay:
        # Share of traffic that receives the fault
        percentage:
          # Inject a 3s delay into 20% of the traffic
          value: 20
        fixedDelay: 3s

# kubectl apply -f virtualservice-demoapp.yaml

virtualservice.networking.istio.io/demoapp configured
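What the two fault settings mean per request can be approximated with a short Python simulation. It is a sketch under simplifying assumptions: `inject_fault` is a hypothetical helper, each request is sampled independently, and a status of 200 stands in for whatever the real upstream would return.

```python
import random

def inject_fault(rng, abort_pct=0.0, delay_pct=0.0, http_status=555, fixed_delay=3.0):
    """Return (status, added_delay_seconds) for one request under the given fault settings."""
    delay = fixed_delay if rng.random() * 100 < delay_pct else 0.0
    if rng.random() * 100 < abort_pct:
        return http_status, delay   # aborted: the upstream is never contacted
    return 200, delay               # passed through (200 stands in for the real response)

rng = random.Random(1)  # fixed seed so the run is repeatable
canary = [inject_fault(rng, abort_pct=20) for _ in range(10000)]
default = [inject_fault(rng, delay_pct=20) for _ in range(10000)]

aborted = sum(1 for status, _ in canary if status == 555) / len(canary)
delayed = sum(1 for _, d in default if d > 0) / len(default)
print(aborted, delayed)
```

Both fractions settle near 0.2 only over many requests, which is why a short curl loop may show more or fewer faults than the configured 20%.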

2. Access proxy from outside the cluster; requests are routed to the demoapp v10 subset

# Delay fault on the default route

daizhe@daizhedeMacBook-Pro ~ % while true; do curl fe.toptops.top:52212; sleep 0.$RANDOM; done

Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-7fklt, ServerIP: 10.220.104.154!

- Took 6 milliseconds.

Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-ncnn6, ServerIP: 10.220.104.165!

- Took 13 milliseconds.

Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-7fklt, ServerIP: 10.220.104.154!

- Took 3099 milliseconds. # With a large enough sample, about 20% of requests show the injected 3s delay

3. Access the frontend proxy from the in-cluster client; /canary requests are routed to the demoapp v11 subset (note: mesh traffic scheduling actually takes effect on the egress listener)

# Abort fault on the canary route

root@client /# while true; do curl proxy/canary; sleep 0.$RANDOM; done

Proxying value: fault filter abort - Took 1 milliseconds.

Proxying value: iKubernetes demoapp v1.1 !! ClientIP: 127.0.0.6, ServerName: demoappv11-7984f579f5-9pm74, ServerIP: 10.220.104.164!

- Took 3 milliseconds.

Proxying value: iKubernetes demoapp v1.1 !! ClientIP: 127.0.0.6, ServerName: demoappv11-7984f579f5-msn2w, ServerIP: 10.220.104.159!

- Took 4 milliseconds.

Proxying value: fault filter abort - Took 6 milliseconds. # With a large enough sample, about 20% of requests are aborted and the client receives status code 555

Proxying value: fault filter abort - Took 2 milliseconds.

- 4. The Kiali graph renders the traffic in real time (the fault ratio is 20%)

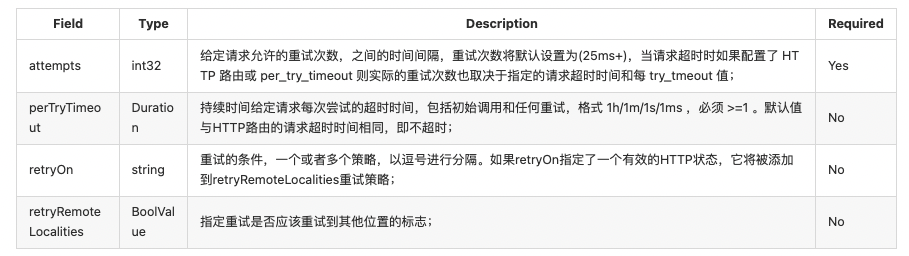

1.5. HTTP retries (timeouts and retries)

- Configuration parameters related to request retries
- HTTP request retry conditions (route.RetryPolicy)
  - Retry conditions (same values as the x-envoy-retry-on header)
    - 5xx: retry when the upstream host returns a 5xx response code or does not respond at all (disconnect/reset/read timeout);
    - gateway-error: similar to the 5xx policy, but retries only on 502, 503, and 504 responses;
    - connect-failure: retry when establishing the TCP connection to the upstream service fails, including connect timeouts;
    - retriable-4xx: retry when the upstream server returns a retriable 4xx response code (currently only 409);
    - refused-stream: retry when the upstream service resets the stream with the REFUSED_STREAM error code;
    - retriable-status-codes: retry when the upstream response code matches one defined in the retry policy or in the x-envoy-retriable-status-codes header;
    - reset: retry when the upstream host does not respond at all (disconnect/reset/read timeout);
    - retriable-headers: retry when the upstream response contains any header matched by the retry policy or by the x-envoy-retriable-header-names header;
    - envoy-ratelimited: retry when the x-envoy-ratelimited header is present in the response;
  - gRPC retry conditions (same values as the x-envoy-retry-grpc-on header)
    - cancelled: retry when the status code in the gRPC response headers is "cancelled";
    - deadline-exceeded: retry when the status code in the gRPC response headers is "deadline-exceeded";
    - internal: retry when the status code in the gRPC response headers is "internal";
    - resource-exhausted: retry when the status code in the gRPC response headers is "resource-exhausted";
    - unavailable: retry when the status code in the gRPC response headers is "unavailable";
  - By default, Envoy performs no retries of any kind unless they are explicitly configured;
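The status-code based conditions in the list above can be expressed as a small decision function. The Python below is a sketch only: `should_retry` is a hypothetical helper, it covers just the conditions that depend on the HTTP status code, and conditions such as connect-failure or reset, which depend on transport events, are deliberately left out.

```python
def should_retry(status, retry_on, retriable_status_codes=()):
    """Decide whether an HTTP status triggers a retry under a comma-separated retryOn value."""
    conditions = {c.strip() for c in retry_on.split(",")}
    if "5xx" in conditions and 500 <= status <= 599:
        return True
    if "gateway-error" in conditions and status in (502, 503, 504):
        return True
    if "retriable-4xx" in conditions and status == 409:
        return True
    if "retriable-status-codes" in conditions and status in retriable_status_codes:
        return True
    return False

print(should_retry(555, "5xx,connect-failure,refused-stream"))      # True
print(should_retry(500, "gateway-error"))                           # False
print(should_retry(503, "gateway-error"))                           # True
print(should_retry(429, "retriable-status-codes", (429,)))          # True
```

The first call shows why a status such as 555 still counts as retriable under the 5xx condition: it falls inside the 500 to 599 range.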

1. Raise the fault injection ratio for demoapp to 50% so that the effect of HTTP retries is easier to observe

# cat virtualservice-demoapp.yaml

apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: demoapp
spec:
  hosts:
  - demoapp
  http:
  - name: canary
    match:
    - uri:
        prefix: /canary
    rewrite:
      uri: /
    route:
    - destination:
        host: demoapp
        subset: v11
    fault:
      abort:
        percentage:
          value: 50
        httpStatus: 555
  - name: default
    route:
    - destination:
        host: demoapp
        subset: v10
    fault:
      delay:
        percentage:
          value: 50
        fixedDelay: 3s

# kubectl apply -f virtualservice-demoapp.yaml

virtualservice.networking.istio.io/demoapp configured

2. External requests enter through the Gateway, but the client that actually calls demoapp is the frontend proxy, so the fault-tolerance settings are added on proxy

# VirtualService for proxy, bound to the Ingress Gateway

# cat virtualservice-proxy.yaml

apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: proxy
spec:
  # Virtual hosts these routes apply to; must match or be covered by the hosts defined in the Gateway
  hosts:
  - "fe.toptops.top"   # Corresponds to gateways/proxy-gateway
  # (Association with the Gateway)
  # gateways binds this VirtualService to the inbound traffic received by the named Ingress Gateway
  gateways:
  - istio-system/proxy-gateway   # These rules apply only on the Ingress Gateway
  #- mesh
  # Layer 7 (HTTP) routing
  http:
  # Name of the route
  - name: default
    # Route target
    route:
    - destination:
        # The proxy cluster exists on the ingress gateway because a Service of the same name exists in the cluster.
        # host is the in-cluster Service name, but traffic is sent to the cluster built from that Service's endpoints,
        # not through the Service itself (layer 7 scheduling no longer goes through the Service)
        host: proxy   # proxy in turn sends requests to demoapp
    # Overall timeout of 1s: if no response arrives within 1s, the client immediately receives a timeout response
    timeout: 1s
    # Retry policy
    retries:
      # Number of retries
      attempts: 5
      # Timeout for each attempt, retries included; an attempt that takes longer than 1s counts as timed out
      perTryTimeout: 1s
      # Retry conditions: retry on 5xx responses from the upstream, on connection failures, and on refused streams
      retryOn: 5xx,connect-failure,refused-stream

# kubectl apply -f virtualservice-proxy.yaml

virtualservice.networking.istio.io/proxy configured
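How timeout, perTryTimeout, and attempts interact is easy to misjudge, so here is a rough arithmetic sketch in Python. It assumes that the overall timeout covers the initial attempt plus all retries and that back-off between retries is negligible; `worst_case_latency` and `tries_that_fit` are hypothetical helpers, not part of any Istio API.

```python
def worst_case_latency(timeout, per_try_timeout, attempts):
    """Upper bound on client-visible latency in seconds (retry back-off ignored)."""
    tries = 1 + attempts                      # the initial attempt plus the retries
    return min(timeout, tries * per_try_timeout)

def tries_that_fit(timeout, per_try_timeout, attempts):
    """How many attempts can run to their per-try timeout before the overall timeout fires."""
    return min(1 + attempts, int(timeout // per_try_timeout))

print(worst_case_latency(1.0, 1.0, 5))   # 1.0
print(tries_that_fit(1.0, 1.0, 5))       # 1
print(tries_that_fit(6.0, 1.0, 5))       # 6
```

Under these assumptions, the values used here (timeout: 1s, perTryTimeout: 1s) leave room for only one attempt when that attempt is slow, so a request hit by the 3s delay times out instead of being retried. Fast failures such as an immediate 5xx can still be retried several times within the 1s budget.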

3. Test access to proxy from outside the cluster

# Requests to proxy from outside the cluster are routed to the demoapp v10 subset. The default route injects a 3s delay into 50% of the traffic; any request that takes longer than 1s is answered with "upstream request timeout";

daizhe@daizhedeMacBook-Pro ~ % while true; do curl fe.toptops.top:52212; sleep 0.$RANDOM; done

upstream request timeoutProxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-qv9ws, ServerIP: 10.220.104.160!

- Took 3 milliseconds.

upstream request timeoutupstream request timeoutupstream request timeoutProxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-ncnn6, ServerIP: 10.220.104.165!

- Took 4 milliseconds.

Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-7fklt, ServerIP: 10.220.104.154!

- Took 32 milliseconds.

Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-7fklt, ServerIP: 10.220.104.154!

- Took 4 milliseconds.

# Requests to /canary from outside the cluster are routed to the demoapp v11 subset. The canary route aborts 50% of the traffic with status code 555, which matches the 5xx condition of the retry policy set on the gateway, so up to 5 retries are allowed;

daizhe@daizhedeMacBook-Pro ~ % while true; do curl fe.toptops.top:52212/canary; sleep 0.$RANDOM; done

Proxying value: fault filter abort - Took 1 milliseconds.

Proxying value: fault filter abort - Took 2 milliseconds.

Proxying value: iKubernetes demoapp v1.1 !! ClientIP: 127.0.0.6, ServerName: demoappv11-7984f579f5-9pm74, ServerIP: 10.220.104.164!

- Took 6 milliseconds.

Proxying value: iKubernetes demoapp v1.1 !! ClientIP: 127.0.0.6, ServerName: demoappv11-7984f579f5-msn2w, ServerIP: 10.220.104.159!

- Took 6 milliseconds.

Proxying value: fault filter abort - Took 1 milliseconds.

Proxying value: fault filter abort - Took 2 milliseconds.

Proxying value: iKubernetes demoapp v1.1 !! ClientIP: 127.0.0.6, ServerName: demoappv11-7984f579f5-msn2w, ServerIP: 10.220.104.159!

- Took 4 milliseconds.

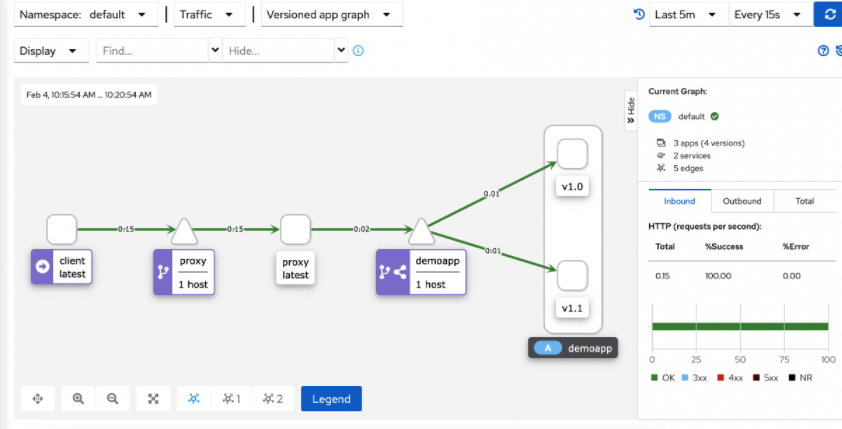

- 4. The Kiali graph renders the traffic in real time

1.6. HTTP traffic mirroring

1. Update the demoapp routing policy to add traffic mirroring

# cat virtualservice-demoapp.yaml

apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: demoapp
spec:
  # Hosts: applies to requests addressed to the demoapp service
  hosts:
  - demoapp
  # Layer 7 (HTTP) routing
  http:
  - name: traffic-mirror
    # Route target: all regular traffic is sent to the demoapp v10 subset
    route:
    - destination:
        host: demoapp
        subset: v10
    # Mirror a copy of that traffic to the demoapp v11 subset
    mirror:
      host: demoapp
      subset: v11

# kubectl apply -f virtualservice-demoapp.yaml

virtualservice.networking.istio.io/demoapp configured
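The behavior of a mirror rule can be sketched as "serve from the primary, copy to the mirror, ignore the mirror's reply". The Python below is a toy model with hypothetical names (`send`, `route_with_mirror`, `mirror_log`); it runs the mirror call inline for simplicity, whereas a real proxy does not wait for the mirrored request, and it assumes the mirrored copy carries a `-shadow` suffix on its host.

```python
def send(subset, request):
    """Stand-in for an upstream call; returns a canned response naming the subset."""
    return "response from {} for {}".format(subset, request["path"])

def route_with_mirror(request, primary="v10", mirror="v11", mirror_log=None):
    """Serve from the primary subset and send a copy to the mirror, ignoring its reply."""
    shadow = dict(request, host=request["host"] + "-shadow")  # mark the copy as mirrored
    try:
        reply = send(mirror, shadow)          # a real proxy does not wait for this call
        if mirror_log is not None:
            mirror_log.append(reply)          # kept only so the sketch can be inspected
    except Exception:
        pass                                  # mirror failures never reach the client
    return send(primary, request)

log = []
print(route_with_mirror({"host": "demoapp", "path": "/"}, mirror_log=log))
# response from v10 for /
print(log)
# ['response from v11 for /']
```

This is why the client output only ever shows v1.0 responses: the v11 replies exist but are discarded, so the mirrored traffic has to be observed from the mesh side.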

2. Access the frontend proxy from the in-cluster client; requests are routed to the demoapp v10 subset and a copy is mirrored to the demoapp v11 subset (note: mesh traffic scheduling actually takes effect on the egress listener)

root@client /# while true; do curl proxy; sleep 0.$RANDOM; done

Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-ncnn6, ServerIP: 10.220.104.165!

- Took 15 milliseconds.

Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-7fklt, ServerIP: 10.220.104.154!

- Took 8 milliseconds.

Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-qv9ws, ServerIP: 10.220.104.160!

- Took 8 milliseconds.

Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-ncnn6, ServerIP: 10.220.104.165!

- Took 3 milliseconds.

Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-ncnn6, ServerIP: 10.220.104.165!

- Took 8 milliseconds.

# Access proxy from outside the cluster

daizhe@daizhedeMacBook-Pro ~ % while true; do curl fe.toptops.top:52212; sleep 0.$RANDOM; done

# The mirrored traffic is not visible in the client output; view it in the Kiali graph below

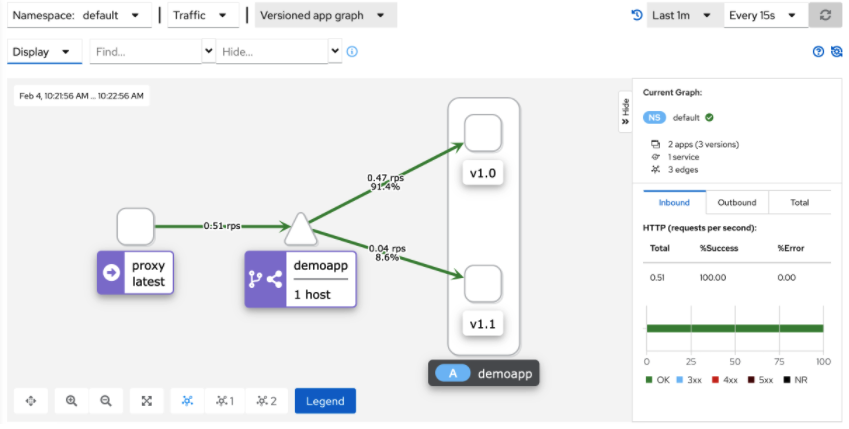

- 3. The Kiali graph renders the traffic in real time