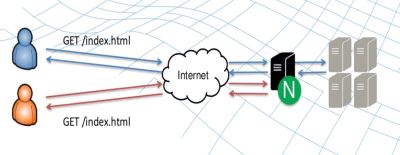

1, Advantages of nginx caching

As shown in the figure, nginx caching takes some of the processing load off the source server.

Many static files (such as css, js, and images) are not updated frequently. nginx uses proxy_cache to store the responses to users' requests in a local directory, so the next identical request can be served straight from the cache file without going back to the source server.

After all, handling IO-intensive workloads is one of nginx's strengths.

2, How to set

Let's start with an example:

    http {
        proxy_connect_timeout 10;
        proxy_read_timeout 180;
        proxy_send_timeout 5;
        proxy_buffer_size 16k;
        proxy_buffers 4 32k;
        proxy_busy_buffers_size 96k;
        proxy_temp_file_write_size 96k;
        proxy_temp_path /tmp/temp_dir;
        proxy_cache_path /tmp/cache levels=1:2 keys_zone=cache_one:100m inactive=1d max_size=10g;

        server {
            listen 80 default_server;
            server_name localhost;
            root /mnt/blog/;

            location / {
            }

            # To cache files by suffix, list the suffixes below.
            location ~ .*\.(gif|jpg|png|css|js)(.*) {
                # "ip address" is a placeholder for the source server's IP
                proxy_pass http://ip address:90;
                proxy_redirect off;
                proxy_set_header Host $host;
                proxy_cache cache_one;
                proxy_cache_valid 200 302 24h;
                proxy_cache_valid 301 30d;
                proxy_cache_valid any 5m;
                expires 90d;
                add_header wall "hey!guys!give me a star.";
            }
        }

        # blog port without nginx cache
        server {
            listen 90;
            server_name localhost;
            root /mnt/blog/;

            location / {
            }
        }
    }

Because I was experimenting on a single server, I used two ports, 80 and 90, to simulate the interaction between two servers.

Port 80 handles ordinary access through the domain name (http://wangxiaokai.vip).

Port 90 handles the resource requests proxied from port 80.

In effect, port 90 is the source server and port 80 is the nginx reverse caching proxy.

Let's talk about configuration items:

2.1 http layer settings

    proxy_connect_timeout 10;
    proxy_read_timeout 180;
    proxy_send_timeout 5;
    proxy_buffer_size 16k;
    proxy_buffers 4 32k;
    proxy_busy_buffers_size 96k;
    proxy_temp_file_write_size 96k;
    proxy_temp_path /tmp/temp_dir;
    proxy_cache_path /tmp/cache levels=1:2 keys_zone=cache_one:100m inactive=1d max_size=10g;

- proxy_connect_timeout: the timeout for establishing a connection with the backend server

- proxy_read_timeout: once the connection succeeds, how long to wait for the backend server's response (the timeout applies between two successive reads, not to the whole response)

- proxy_send_timeout: the timeout for sending the request to the backend server (it applies between two successive writes)

- proxy_buffer_size: the size of the buffer used to read the first part of the backend response, which usually holds the response header

- proxy_buffers: sets the number and size of the buffers for each connection, in the form `number size` (here, 4 buffers of 32k each)

- proxy_busy_buffers_size: when buffering is enabled, limits the total size of the buffers that can be busy sending the response to the client while the response has not yet been read in full

- proxy_temp_file_write_size: limits how much data nginx writes to a temporary file at a time

- proxy_temp_path: the path for storing temporary files received from the backend server

- proxy_cache_path: sets the cache path and other parameters. keys_zone names the shared memory zone (cache_one, 100m) and max_size caps the cache on disk (10g). If cached data is not accessed within the time given by the inactive parameter (currently 1 day), it is removed from the cache
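To make `levels=1:2` more concrete, here is a minimal Python sketch of how a cached response maps to a file under `/tmp/cache`. It assumes nginx's documented behaviour: the file name is the MD5 hash of the cache key, and each level takes characters from the end of that hash. The example above does not set `proxy_cache_key`, so the default key `$scheme$proxy_host$request_uri` would apply. The function names and the sample key are hypothetical, used only for illustration.

```python
import hashlib


def path_for_digest(cache_root: str, digest: str, levels=(1, 2)) -> str:
    """Build the cache file path for an already computed MD5 hex digest.

    Each level takes `width` characters from the end of the digest,
    working backwards, and becomes one subdirectory.
    """
    parts = []
    end = len(digest)
    for width in levels:
        parts.append(digest[end - width:end])
        end -= width
    return "/".join([cache_root.rstrip("/"), *parts, digest])


def cache_file_path(cache_root: str, cache_key: str, levels=(1, 2)) -> str:
    """Hash the cache key with MD5 and map it to a path under cache_root."""
    digest = hashlib.md5(cache_key.encode("utf-8")).hexdigest()
    return path_for_digest(cache_root, digest, levels)


if __name__ == "__main__":
    # Hypothetical key: scheme + proxy host + request URI, concatenated.
    print(cache_file_path("/tmp/cache", "http127.0.0.1:90/css/main.css"))
```

With two levels, every cache file sits two directories deep, which keeps any single directory from collecting too many files.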

2.2 server layer settings

2.2.1 reverse cache proxy server

    server {
        listen 80 default_server;
        server_name localhost;
        root /mnt/blog/;

        location / {
        }

        # To cache files by suffix, list the suffixes below.
        location ~ .*\.(gif|jpg|png|css|js)(.*) {
            # "ip address" is a placeholder for the source server's IP
            proxy_pass http://ip address:90;
            proxy_redirect off;
            proxy_set_header Host $host;
            proxy_cache cache_one;
            proxy_cache_valid 200 302 24h;
            proxy_cache_valid 301 30d;
            proxy_cache_valid any 5m;
            expires 90d;
            add_header wall "hey!guys!give me a star.";
        }
    }

- proxy_pass: when the resource is not found in the nginx cache, the request is forwarded to this address, and the fresh response is fetched and cached

- proxy_redirect: sets the replacement text for the "Location" and "Refresh" response headers of the backend server (`off` disables the rewriting)

- proxy_set_header: redefines or adds request headers sent to the backend server

- proxy_cache: specifies the shared memory zone used for caching, matching the keys_zone set in the http layer

- proxy_cache_valid: sets different cache times for different response status codes

- expires: the cache time on the browser side, sent through the "Expires" and "Cache-Control" response headers
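The three `proxy_cache_valid` lines in the example can be read as a small lookup table. The Python sketch below models only that lookup: it is a simplification, the names are hypothetical, and in real nginx, caching headers sent by the backend (such as "Cache-Control" or "X-Accel-Expires") take priority over `proxy_cache_valid`.

```python
HOUR = 60 * 60
DAY = 24 * HOUR

# Status-specific rules, mirroring the example configuration:
#   proxy_cache_valid 200 302 24h;
#   proxy_cache_valid 301 30d;
CACHE_VALID_RULES = {
    200: 24 * HOUR,
    302: 24 * HOUR,
    301: 30 * DAY,
}

# proxy_cache_valid any 5m;  -> fallback for every other status code
ANY_TTL = 5 * 60


def cache_ttl_seconds(status: int) -> int:
    """Return how long a response with this status would stay valid in the cache."""
    return CACHE_VALID_RULES.get(status, ANY_TTL)


if __name__ == "__main__":
    for status in (200, 301, 302, 404):
        print(status, cache_ttl_seconds(status))
```

Note that because of the `any 5m` rule, error responses such as 404 are also cached, for five minutes.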

Here I set the static resources of pictures, css and js for caching.

When a user enters the domain name http://wangxiaokai.vip, it resolves to an ip:port address, with the port defaulting to 80. The page request is therefore handled by the server listening on port 80.

When a request matches one of the file suffixes above, nginx first looks for the static resource in the cache.

If the resource is found, it is returned directly.

If not, the request is forwarded to the address given by proxy_pass for processing.
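To see which request paths the suffix rule actually catches, the location pattern can be tried out in Python. This is only a sketch: nginx uses PCRE rather than Python's `re` module, but for a pattern this simple the two should behave the same. The function name and the sample paths are hypothetical. nginx matches the pattern against the request path without the query string.

```python
import re

# The same pattern as in the location block of the example configuration.
STATIC_LOCATION = re.compile(r".*\.(gif|jpg|png|css|js)(.*)")


def goes_through_cache_location(uri_path: str) -> bool:
    """Return True if the request path would match the caching location."""
    # A regex location is not anchored, so search() is the closest analogue.
    return STATIC_LOCATION.search(uri_path) is not None


if __name__ == "__main__":
    for path in ("/css/main.css", "/img/logo.png", "/index.html", "/data.json"):
        print(path, goes_through_cache_location(path))
```

Because the pattern ends in `(.*)` instead of `$`, a path such as `/data.json` also matches (".js" followed by "on"). If only exact suffixes should be cached, anchoring the pattern with `$` is one option.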

2.2.2 source server

    server {
        listen 90;
        server_name localhost;
        root /mnt/blog/;

        location / {
        }
    }

Here, requests received on port 90 are processed directly: the resource is read from the server's local directory /mnt/blog and returned in the response.

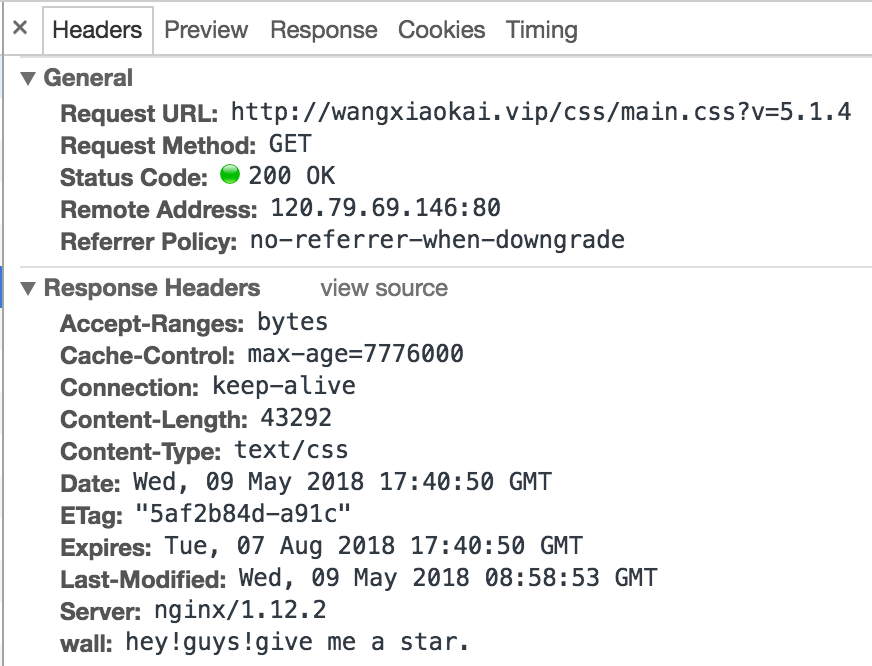

3, How to verify whether the cache is valid

Careful readers will have noticed that I left an Easter egg in the example in part 2: "hey!guys!give me a star.".

add_header sets custom information in the response header.

Therefore, if the cache configuration is in effect, the response headers of a static resource must carry this information.

Visit http://wangxiaokai.vip. The results are as follows:

If the cache succeeds, the HTTP status is 304 (Not Modified).
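The same check can be done from a script instead of the browser. The sketch below uses only Python's standard library. The function names and the resource path in the usage example are hypothetical. Strictly speaking, finding the `wall` header shows that the response passed through the caching location on port 80, which is a slightly weaker statement than "this response came from the cache".

```python
from urllib.request import urlopen

MARKER_HEADER = "wall"
MARKER_VALUE = "hey!guys!give me a star."


def has_marker(headers) -> bool:
    """Return True if the custom header from the example config is present.

    `headers` is any mapping of header names to values. Header names are
    compared case-insensitively, as HTTP requires.
    """
    for name, value in headers.items():
        if name.lower() == MARKER_HEADER and value == MARKER_VALUE:
            return True
    return False


def fetch_headers(url: str, timeout: float = 10.0) -> dict:
    """Fetch a URL and return its response headers as a plain dict."""
    with urlopen(url, timeout=timeout) as response:
        return dict(response.headers.items())


if __name__ == "__main__":
    # Hypothetical static resource path; replace it with a real css/js/image URL.
    headers = fetch_headers("http://wangxiaokai.vip/css/main.css")
    print("marker header present:", has_marker(headers))
```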