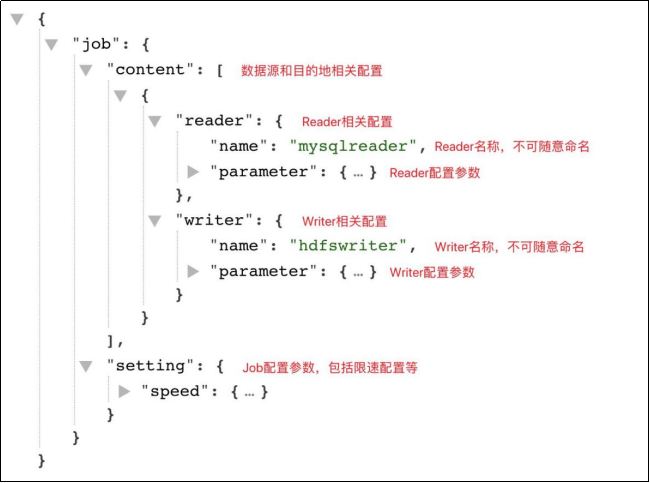

1. DataX template

Method 1: DataX configuration file template

python bin/datax.py -r mysqlreader -w hdfswriter

Method 2: Official Documents https://github.com/alibaba/DataX/blob/master/README.md

2. Synchronize Mysql data to HDFS cases

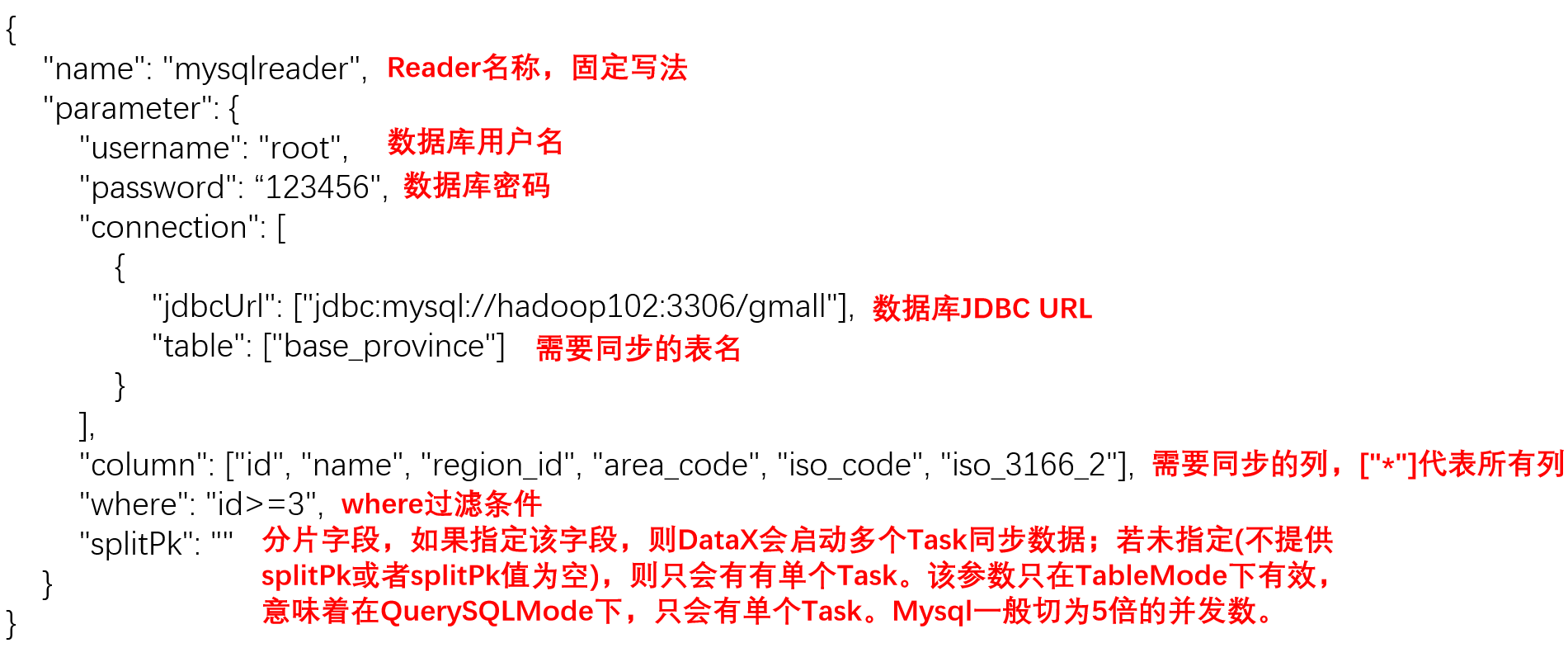

2.1 TableMode of mysqlreader

Use attributes such as table, column, and where to declare the data to be synchronized

mysql_to_hdfs_T.json

{

"job": {

"content": [

{

"reader": {

"name": "mysqlreader",

"parameter": {

"column": [

"id",

"name",

"region_id",

"area_code",

"iso_code",

"iso_3166_2"

],

"where": "id>=3",

"connection": [

{

"jdbcUrl": [

"jdbc:mysql://hadoop102:3306/gmall"

],

"table": [

"base_province"

]

}

],

"password": "123456",

"splitPk": "",

"username": "root"

}

},

"writer": {

"name": "hdfswriter",

"parameter": {

"column": [

{

"name": "id",

"type": "bigint"

},

{

"name": "name",

"type": "string"

},

{

"name": "region_id",

"type": "string"

},

{

"name": "area_code",

"type": "string"

},

{

"name": "iso_code",

"type": "string"

},

{

"name": "iso_3166_2",

"type": "string"

}

],

"compress": "gzip",

"defaultFS": "hdfs://hadoop102:8020",

"fieldDelimiter": "\t",

"fileName": "base_province",

"fileType": "text",

"path": "/base_province",

"writeMode": "append"

}

}

}

],

"setting": {

"speed": {

"channel": 1

}

}

}

}

MysqlReader – TableMode format:

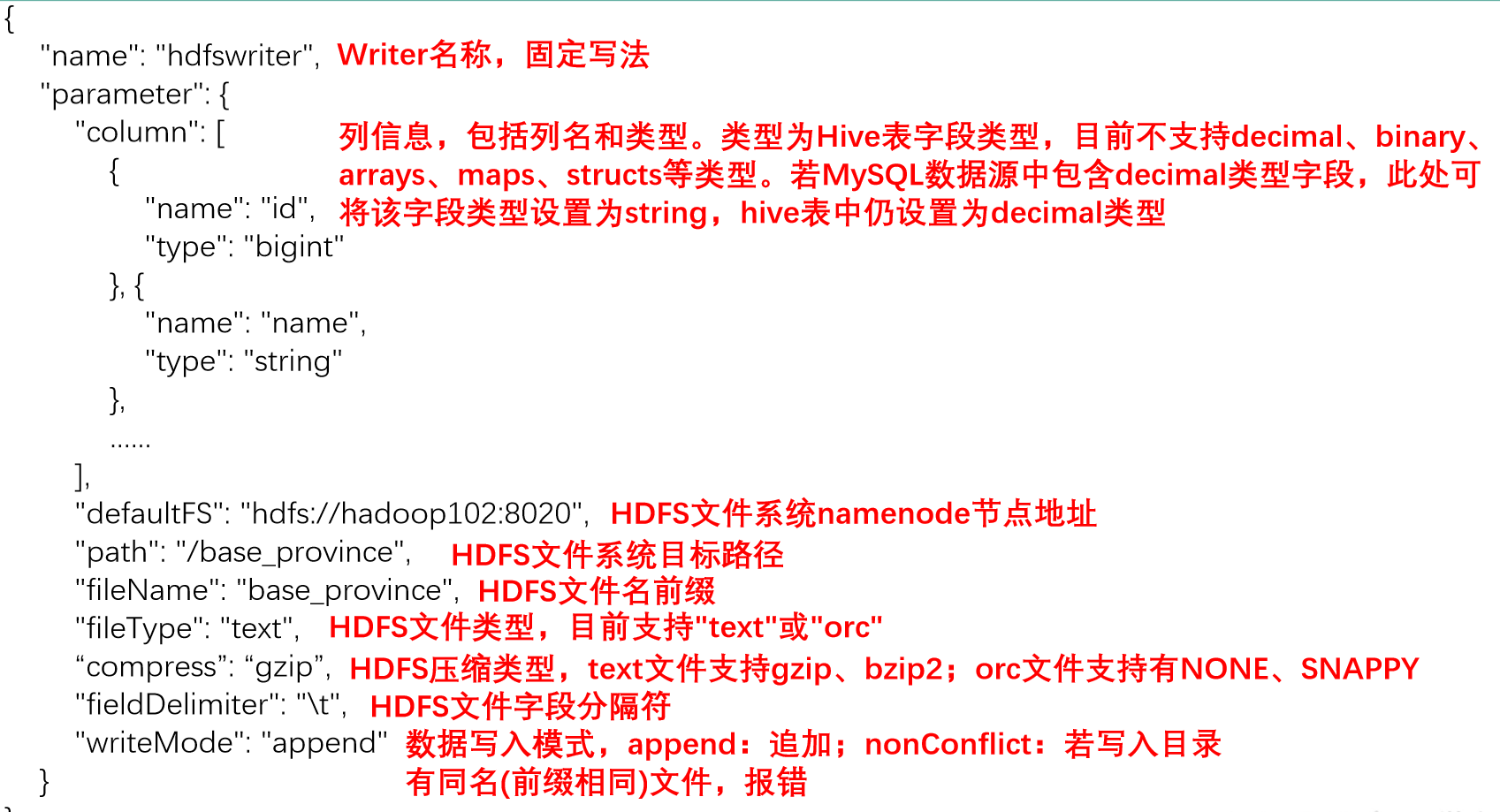

HDFSWriter format:

The HFDS Writer does not provide the nullFormat parameter: that is, the user cannot customize the storage format in which null values are written to the HFDS file. By default, HFDS Writer will store null values as empty strings (''), while Hive's default null value storage format is \ N. Therefore, there will be a problem in importing DataX synchronized files into Hive table later.

Solution

-

Modify the source code of DataX HDFS Writer and add logic to customize the null value storage format https://blog.csdn.net/u010834071/article/details/105506580

-

When creating a table in Hive, specify null value storage format as empty string (')

DROP TABLE IF EXISTS base_province; CREATE EXTERNAL TABLE base_province ( `id` STRING COMMENT 'number', `name` STRING COMMENT 'Province name', `region_id` STRING COMMENT 'region ID', `area_code` STRING COMMENT 'Area code', `iso_code` STRING COMMENT 'Old edition ISO-3166-2 Coding for visualization', `iso_3166_2` STRING COMMENT 'new edition IOS-3166-2 Coding for visualization' ) COMMENT 'Province table' ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' NULL DEFINED AS '' LOCATION '/base_province/';

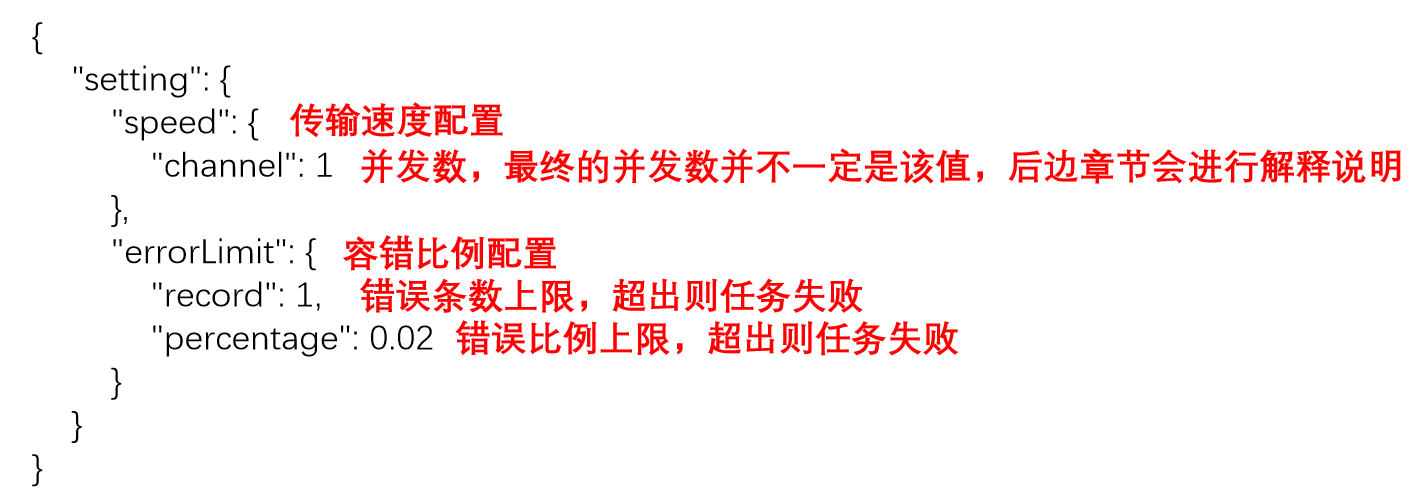

Setting parameter description:

The fault tolerance ratio configuration is set to 0

Submit task test

-

You need to create the specified directory on hdfs first

hadoop fs -mkdir /base_province

You can create a directory by modifying the DataX source code

-

Execute the command to import the data in mysql into hdfs

python bin/datax.py job/mysql_to_hdfs_T.json

-

View hdfs

hadoop fs -cat /base_province/* | zcat

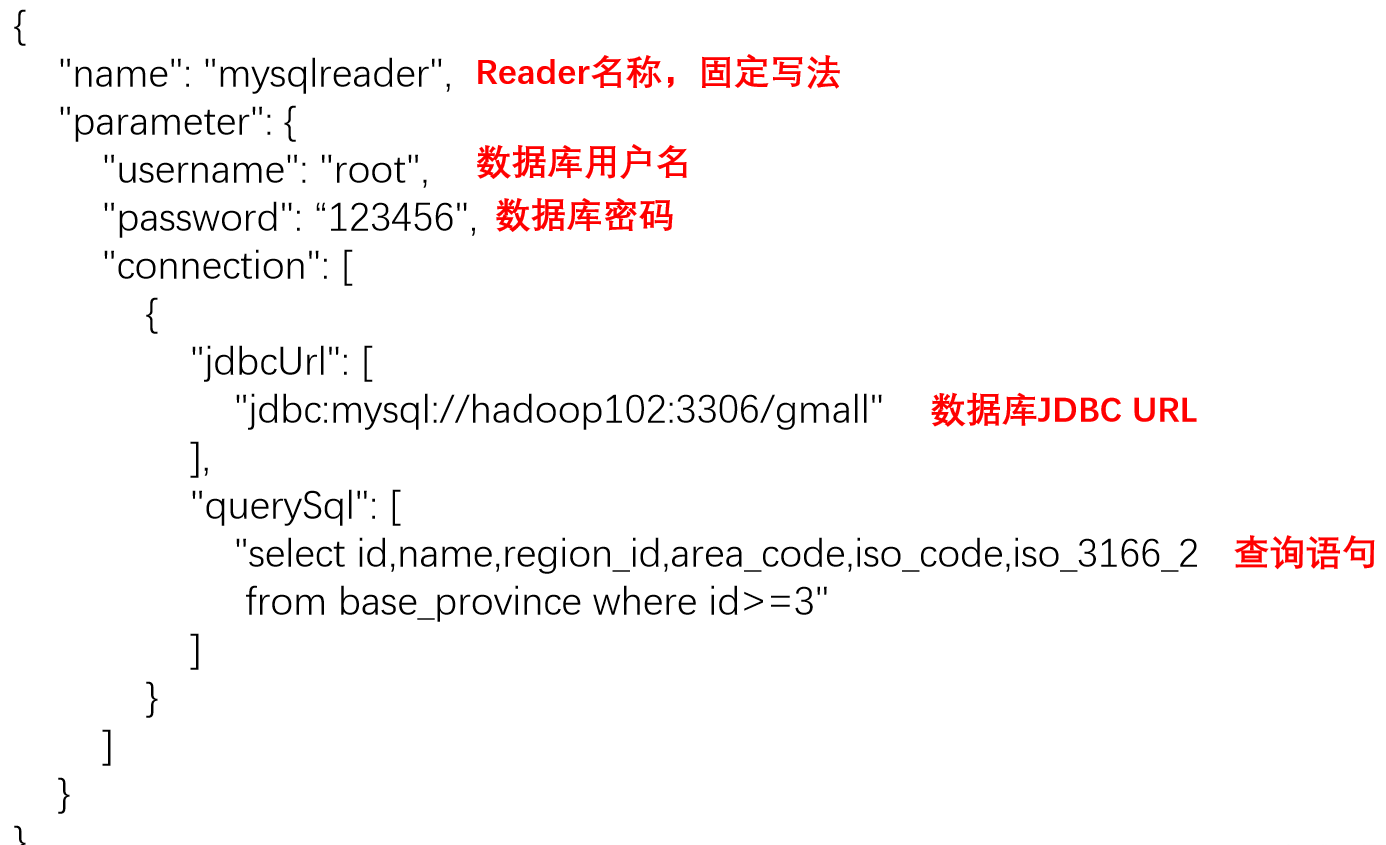

2.2 QuerySQLMode of mysqlreader

Declare the data to be synchronized by using an SQL query statement.

mysql_to_hdfs_sql.json

{

"job": {

"content": [

{

"reader": {

"name": "mysqlreader",

"parameter": {

"connection": [

{

"jdbcUrl": [

"jdbc:mysql://hadoop102:3306/gmall"

],

"querySql": [

"select id,name,region_id,area_code,iso_code,iso_3166_2 from base_province where id>=3"

]

}

],

"password": "123456",

"username": "root"

}

},

"writer": {

"name": "hdfswriter",

"parameter": {

"column": [

{

"name": "id",

"type": "bigint"

},

{

"name": "name",

"type": "string"

},

{

"name": "region_id",

"type": "string"

},

{

"name": "area_code",

"type": "string"

},

{

"name": "iso_code",

"type": "string"

},

{

"name": "iso_3166_2",

"type": "string"

}

],

"compress": "gzip",

"defaultFS": "hdfs://hadoop102:8020",

"fieldDelimiter": "\t",

"fileName": "base_province",

"fileType": "text",

"path": "/base_province",

"writeMode": "append"

}

}

}

],

"setting": {

"speed": {

"channel": 1

}

}

}

}

MysqlReader – QuerySQLMode format:

Submit task test

-

Delete / base_ All files under Province /

hadoop fs -rm -r -f /base_province/*

-

Execute command

python bin/datax.py job/mysql_to_hdfs_sql.json

-

Viewing HDFS files

hadoop fs -cat /base_province/* | zcat

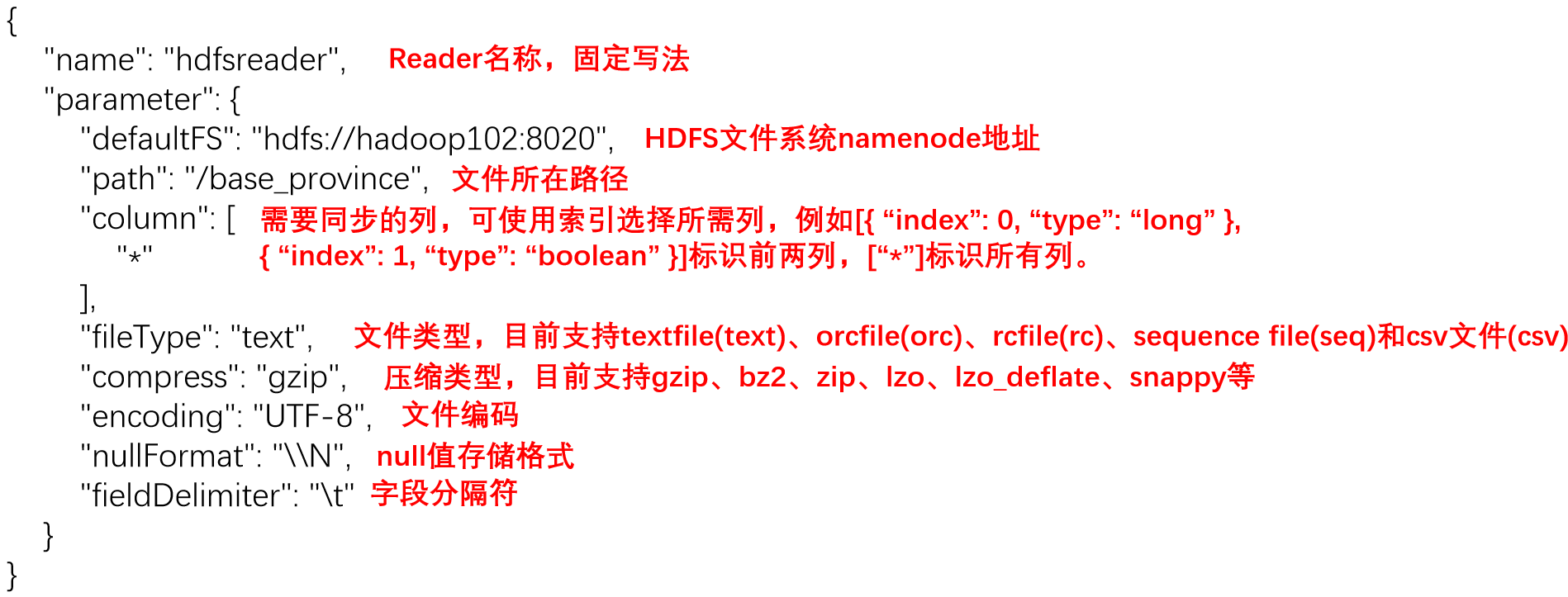

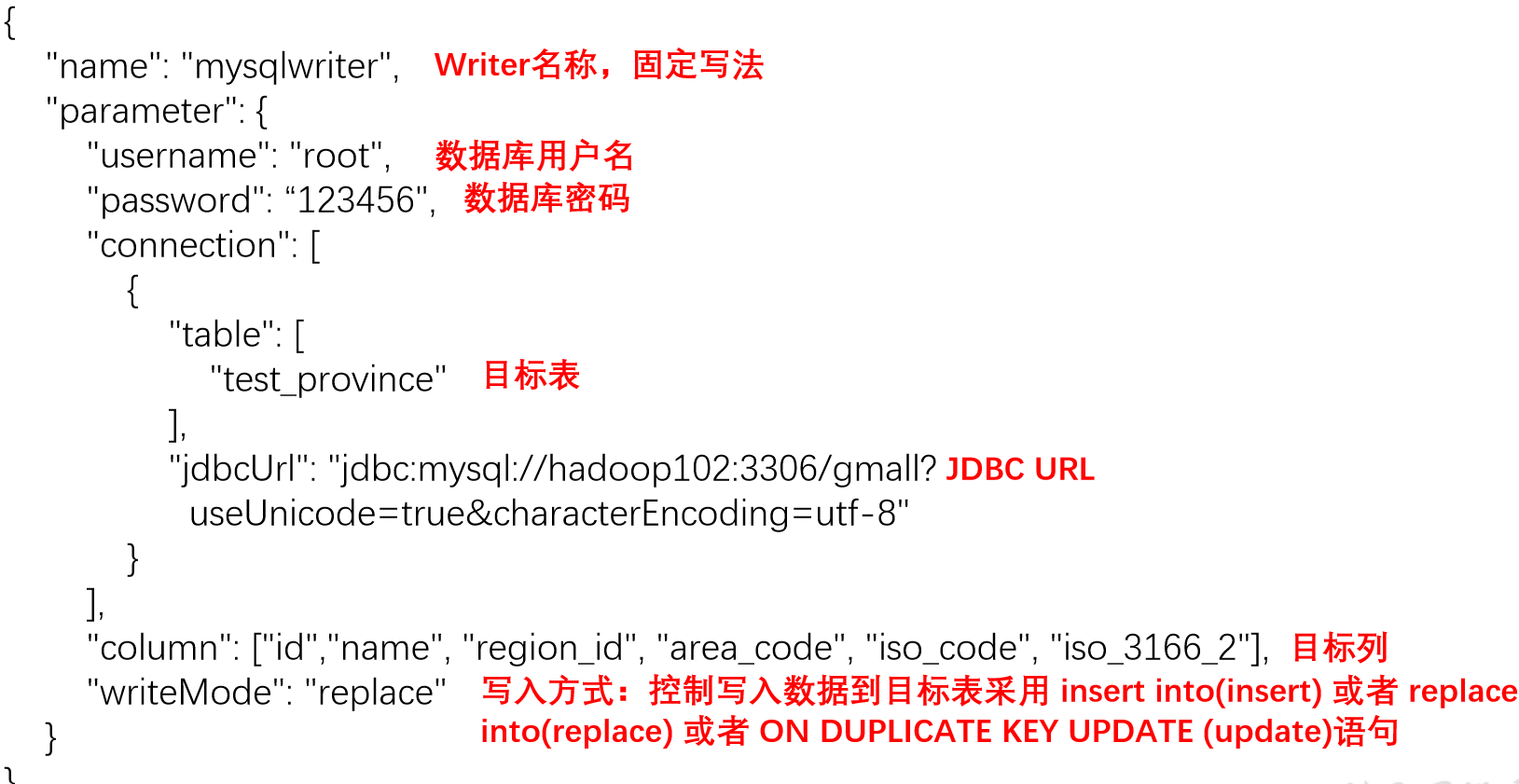

3. Synchronize HDFS data to Mysql case

hdfs_to_mysql.json

{

"job": {

"content": [

{

"reader": {

"name": "hdfsreader",

"parameter": {

"defaultFS": "hdfs://hadoop102:8020",

"path": "/base_province",

"column": [

"*"

],

"fileType": "text",

"compress": "gzip",

"encoding": "UTF-8",

"nullFormat": "\\N",

"fieldDelimiter": "\t",

}

},

"writer": {

"name": "mysqlwriter",

"parameter": {

"username": "root",

"password": "123456",

"connection": [

{

"table": [

"test_province"

],

"jdbcUrl": "jdbc:mysql://hadoop102:3306/gmall?useUnicode=true&characterEncoding=utf-8"

}

],

"column": [

"id",

"name",

"region_id",

"area_code",

"iso_code",

"iso_3166_2"

],

"writeMode": "replace"

}

}

}

],

"setting": {

"speed": {

"channel": 1

}

}

}

}

HDFSReader:

MySQLWriter:

Submit task test

-

Create test in Mysql_ Province table

DROP TABLE IF EXISTS `test_province`; CREATE TABLE `test_province` ( `id` bigint(20) NOT NULL, `name` varchar(20) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL, `region_id` varchar(20) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL, `area_code` varchar(20) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL, `iso_code` varchar(20) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL, `iso_3166_2` varchar(20) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL, PRIMARY KEY (`id`) ) ENGINE = InnoDB CHARACTER SET = utf8 COLLATE = utf8_general_ci ROW_FORMAT = Dynamic;

Tables can be created using the navigate tool

-

Execute command

python bin/datax.py job/test_province.json

-

Refresh and view mysql data

4. DataX parameter transfer case

The offline data synchronization task needs to be executed regularly and repeatedly every day, so the target path on HDFS usually contains a layer of dates to distinguish the daily synchronized data, that is, the target path of daily synchronized data is not fixed, so the value of the path parameter of HDFS Writer in DataX configuration file should be dynamic.

Usage:

Use ${param} reference parameter in JSON configuration file, and use - p"-Dparam=value" to pass in parameter value when submitting task.

test_parameter.json

{

"job": {

"content": [

{

"reader": {

"name": "mysqlreader",

"parameter": {

"connection": [

{

"jdbcUrl": [

"jdbc:mysql://hadoop102:3306/gmall"

],

"querySql": [

"select id,name,region_id,area_code,iso_code,iso_3166_2 from base_province where id>=3"

]

}

],

"password": "123456",

"username": "root"

}

},

"writer": {

"name": "hdfswriter",

"parameter": {

"column": [

{

"name": "id",

"type": "bigint"

},

{

"name": "name",

"type": "string"

},

{

"name": "region_id",

"type": "string"

},

{

"name": "area_code",

"type": "string"

},

{

"name": "iso_code",

"type": "string"

},

{

"name": "iso_3166_2",

"type": "string"

}

],

"compress": "gzip",

"defaultFS": "hdfs://hadoop102:8020",

"fieldDelimiter": "\t",

"fileName": "base_province",

"fileType": "text",

"path": "/base_province/${dt}",

"writeMode": "append"

}

}

}

],

"setting": {

"speed": {

"channel": 1

}

}

}

}

Submit task test

-

Create path on hdfs

hadoop fs -mkdir /base_province/2022-01-11

-

Execute command

python bin/datax.py job/test_parameter.json -p"-Ddt=2020-06-14"

-

View on hdfs