A Simple Hands-On Example with the Disruptor Framework

- https://github.com/LMAX-Exchange/disruptor/wiki/Introduction

- https://github.com/LMAX-Exchange/disruptor/wiki/Getting-Started

- http://lmax-exchange.github.io/disruptor/files/Disruptor-1.0.pdf

Background

The best way to understand what the Disruptor is, is to compare it with something well understood and similar in purpose.

In the case of the Disruptor, this would be Java's BlockingQueue. Like a queue, the purpose of the Disruptor is to move data (such as messages or events) between threads within the same process.

However, the Disruptor provides some key features that distinguish it from a queue. They are:

- Multicast events to consumers, with a consumer dependency graph.

- Pre-allocated memory for events.

- Optionally lock-free.
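The first difference can be illustrated without the Disruptor library at all. The following is a minimal single-threaded sketch in plain Java; the class `MulticastSketch` and its methods are hypothetical names made up for this illustration, and the "consumers" are simulated with plain loops rather than real threads. It contrasts queue semantics, where each element is removed by exactly one consumer, with multicast semantics, where every consumer keeps its own read position and sees every event.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

public class MulticastSketch {

    /** Queue semantics: two consumers polling one queue split the elements between them. */
    static List<List<String>> drainWithQueue(List<String> events) {
        BlockingQueue<String> queue = new ArrayBlockingQueue<>(Math.max(1, events.size()));
        queue.addAll(events);
        List<String> consumerA = new ArrayList<>();
        List<String> consumerB = new ArrayList<>();
        // The simulated consumers take turns; whoever polls an element removes it for good.
        boolean turnA = true;
        String event;
        while ((event = queue.poll()) != null) {
            (turnA ? consumerA : consumerB).add(event);
            turnA = !turnA;
        }
        return List.of(consumerA, consumerB);
    }

    /** Multicast semantics: each consumer walks the shared slots with its own sequence. */
    static List<List<String>> readWithMulticast(List<String> events) {
        String[] slots = events.toArray(new String[0]);
        List<String> consumerA = new ArrayList<>();
        List<String> consumerB = new ArrayList<>();
        for (long seqA = 0; seqA < slots.length; seqA++) {
            consumerA.add(slots[(int) seqA]);
        }
        for (long seqB = 0; seqB < slots.length; seqB++) {
            consumerB.add(slots[(int) seqB]);
        }
        return List.of(consumerA, consumerB);
    }

    public static void main(String[] args) {
        List<String> events = List.of("e0", "e1", "e2", "e3");
        System.out.println("queue:     " + drainWithQueue(events));
        System.out.println("multicast: " + readWithMulticast(events));
    }
}
```

Running `main` prints `[[e0, e2], [e1, e3]]` for the queue and `[[e0, e1, e2, e3], [e0, e1, e2, e3]]` for the multicast case. The real Disruptor achieves the second behavior concurrently, which this sketch does not attempt.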

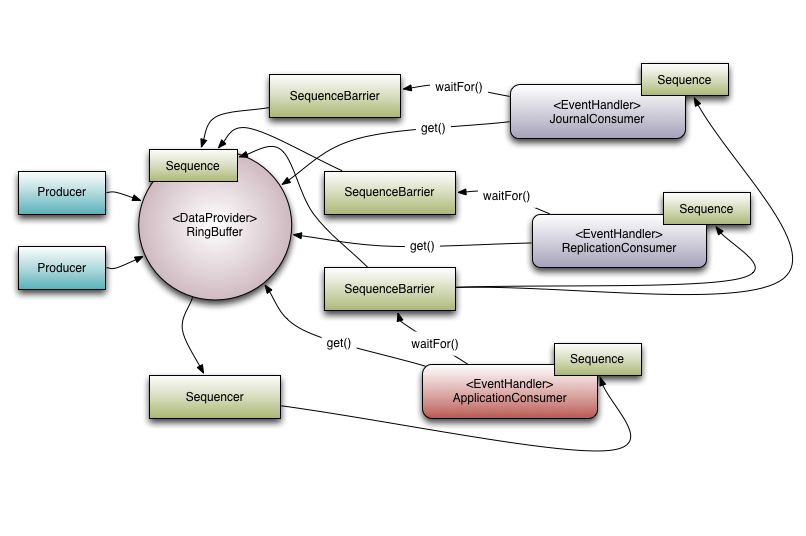

Architecture diagram

Core concepts

Ring Buffer

Sequence

Wait Strategy

EventHandler
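To give the Ring Buffer and Sequence concepts listed above a concrete shape, here is a minimal sketch in plain Java. It is not the Disruptor's implementation: the class `RingSketch` and all of its members are hypothetical, it supports only one producer and one consumer, and it returns `false` or `null` instead of waiting. It shows three ideas: slots are allocated once up front, an ever-increasing sequence is mapped to a slot with a bit mask (which is why the size must be a power of 2), and progress is tracked with atomic counters rather than locks.

```java
import java.util.concurrent.atomic.AtomicLong;

public class RingSketch {

    /** Mutable event slot, allocated once and reused for the lifetime of the ring. */
    static final class Slot {
        long value;
    }

    private final Slot[] slots;
    private final int mask;
    // Highest sequence published by the producer; -1 means nothing has been published.
    private final AtomicLong cursor = new AtomicLong(-1);
    // Highest sequence the consumer has finished reading.
    private final AtomicLong consumed = new AtomicLong(-1);

    RingSketch(int size) {
        if (size < 1 || Integer.bitCount(size) != 1) {
            throw new IllegalArgumentException("size must be a power of 2");
        }
        slots = new Slot[size];
        for (int i = 0; i < size; i++) {
            slots[i] = new Slot(); // pre-allocate every slot
        }
        mask = size - 1;
    }

    /** Maps a sequence to its slot index; equivalent to sequence % size for powers of 2. */
    int indexOf(long sequence) {
        return (int) (sequence & mask);
    }

    /** Single producer: returns false when the ring is full instead of waiting. */
    boolean tryPublish(long value) {
        long next = cursor.get() + 1;
        if (next - consumed.get() > slots.length) {
            return false; // publishing would overwrite a slot that has not been read yet
        }
        slots[indexOf(next)].value = value;
        cursor.set(next); // volatile write: makes the slot contents visible to the consumer
        return true;
    }

    /** Single consumer: returns null when nothing new has been published. */
    Long tryConsume() {
        long next = consumed.get() + 1;
        if (next > cursor.get()) {
            return null;
        }
        long value = slots[indexOf(next)].value;
        consumed.set(next);
        return value;
    }

    public static void main(String[] args) {
        RingSketch ring = new RingSketch(4);
        for (long v = 0; v < 5; v++) {
            System.out.println("publish " + v + " accepted: " + ring.tryPublish(v));
        }
        System.out.println("consumed: " + ring.tryConsume());
        System.out.println("sequence 4 maps to slot " + ring.indexOf(4));
    }
}
```

With a ring of size 4, the fifth publish is rejected until the consumer has advanced, and sequence 4 wraps around to slot 0. What a consumer does while it has nothing to read is the job of the Wait Strategy.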

Simple practice

- Task class

```java
package morning.cat;

import lombok.Data;
import lombok.ToString;

/**
 * Task model
 */
@Data
@ToString
public class Task {

    private Long taskId;

    private String taskName;

    private String taskContent;
}
```

- Task Event Class

```java
package morning.cat;

/**
 * Task event
 */
public class TaskEvent {

    private Task value;

    public void set(Task task) {
        this.value = task;
    }

    public void clear() {
        value = null;
    }

    @Override
    public String toString() {
        // String.valueOf avoids a NullPointerException when the event is empty or has been cleared
        return String.valueOf(value);
    }
}
```

- Event Factory Class

```java
package morning.cat;

import com.lmax.disruptor.EventFactory;

/**
 * Task event factory
 */
public class TaskEventFactory implements EventFactory<TaskEvent> {

    @Override
    public TaskEvent newInstance() {
        return new TaskEvent();
    }
}
```

- Consumer EventHandler

```java
package morning.cat.handle;

import com.lmax.disruptor.EventHandler;
import morning.cat.TaskEvent;

/**
 * Event processor (consumer)
 */
public class TaskEventHandle implements EventHandler<TaskEvent> {

    /**
     * Business logic
     *
     * @param event      the event published to the ring buffer
     * @param sequence   the sequence of the event being processed
     * @param endOfBatch whether this is the last event in a batch from the ring buffer
     * @throws Exception
     */
    @Override
    public void onEvent(TaskEvent event, long sequence, boolean endOfBatch) throws Exception {
        System.out.println("Business process: " + event);
    }
}
```

- Cleanup EventHandler

```java
package morning.cat.handle;

import com.lmax.disruptor.EventHandler;
import morning.cat.TaskEvent;

/**
 * When passing data through the Disruptor, objects may live longer than intended.
 * To avoid this, it may be necessary to clear the event after processing it.
 * If you have a single event handler, clearing the value within that handler is sufficient.
 * If you have a chain of event handlers, you may need a dedicated handler at the end
 * of the chain to clear the object.
 * <p>
 * Clearing objects from the ring buffer
 */
public class CleanTaskEventHandle implements EventHandler<TaskEvent> {

    @Override
    public void onEvent(TaskEvent event, long sequence, boolean endOfBatch) throws Exception {
        // Failing to call clear here will result in the
        // object associated with the event living until
        // it is overwritten once the ring buffer has wrapped
        // around to the beginning.
        event.clear();
    }
}
```

- Producer

```java
package morning.cat.producer;

import com.lmax.disruptor.RingBuffer;
import morning.cat.Task;
import morning.cat.TaskEvent;

/**
 * Publishing using the legacy API (old-style producer)
 */
public class TaskEventProducer {

    private final RingBuffer<TaskEvent> ringBuffer;

    public TaskEventProducer(RingBuffer<TaskEvent> ringBuffer) {
        this.ringBuffer = ringBuffer;
    }

    public void onData(Task bb) {
        long sequence = ringBuffer.next(); // Grab the next sequence
        try {
            // Get the entry in the Disruptor for the sequence
            TaskEvent event = ringBuffer.get(sequence);
            event.set(bb); // Fill with data
        } finally {
            // Always publish a claimed sequence, even if filling the event failed
            ringBuffer.publish(sequence);
        }
    }
}
```

```java
package morning.cat.producer;

import com.lmax.disruptor.EventTranslatorOneArg;
import com.lmax.disruptor.RingBuffer;
import morning.cat.Task;
import morning.cat.TaskEvent;

/**
 * Publishing using translators (the preferred producer style since Disruptor 3.0)
 */
public class TaskEventProducerWithTranslator {

    private final RingBuffer<TaskEvent> ringBuffer;

    public TaskEventProducerWithTranslator(RingBuffer<TaskEvent> ringBuffer) {
        this.ringBuffer = ringBuffer;
    }

    private static final EventTranslatorOneArg<TaskEvent, Task> TRANSLATOR =
            new EventTranslatorOneArg<TaskEvent, Task>() {
                @Override
                public void translateTo(TaskEvent event, long sequence, Task bb) {
                    event.set(bb);
                }
            };

    public void onData(Task bb) {
        // Pass the task as an argument so that the translator does not capture any state.
        ringBuffer.publishEvent(TRANSLATOR, bb);
        // Equivalent, but the lambda captures bb and may allocate a new object per call:
        // ringBuffer.publishEvent((event, sequence) -> event.set(bb));
    }
}
```
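The difference between the two producers above is mostly about who is responsible for the claim, fill, publish sequence. The following plain-Java sketch shows the idea under simplifying assumptions: `PublishSketch` and its members are hypothetical names, it is single-threaded, and it has no protection against wrapping over unread slots. In the legacy style the caller must remember the `try`/`finally`; in the translator style the buffer owns it, so a claimed sequence is always published even when filling the event throws.

```java
import java.util.function.BiConsumer;

public class PublishSketch {

    static final class Event {
        String payload;
    }

    private final Event[] slots = new Event[8];
    private long claimed = -1;   // last sequence handed out to a producer
    private long published = -1; // last sequence made visible to consumers

    PublishSketch() {
        for (int i = 0; i < slots.length; i++) {
            slots[i] = new Event();
        }
    }

    // Legacy-style primitives: the caller must pair every next() with a publish().
    long next() {
        return ++claimed;
    }

    Event get(long sequence) {
        return slots[(int) (sequence & (slots.length - 1))];
    }

    void publish(long sequence) {
        published = sequence;
    }

    long published() {
        return published;
    }

    /** Translator style: claim, fill and publish in one call; publish always runs. */
    <A> void publishEvent(BiConsumer<Event, A> translator, A arg) {
        long sequence = next();
        try {
            translator.accept(get(sequence), arg);
        } finally {
            publish(sequence);
        }
    }

    public static void main(String[] args) {
        PublishSketch ring = new PublishSketch();
        ring.publishEvent((event, text) -> event.payload = text, "hello");
        try {
            ring.publishEvent((event, text) -> {
                throw new IllegalStateException("translation failed");
            }, "boom");
        } catch (IllegalStateException expected) {
            // The sequence was still published, so later events are not blocked behind it.
        }
        System.out.println("published up to sequence " + ring.published());
    }
}
```

In the real library, a sequence that is claimed but never published can leave consumers waiting on it, which is why the legacy producer wraps its work in `try`/`finally`.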

- Main class

```java
package morning.cat.main;

import com.lmax.disruptor.BlockingWaitStrategy;
import com.lmax.disruptor.RingBuffer;
import com.lmax.disruptor.YieldingWaitStrategy;
import com.lmax.disruptor.dsl.Disruptor;
import com.lmax.disruptor.dsl.ProducerType;
import com.lmax.disruptor.util.DaemonThreadFactory;
import morning.cat.Task;
import morning.cat.TaskEvent;
import morning.cat.TaskEventFactory;
import morning.cat.handle.CleanTaskEventHandle;
import morning.cat.handle.TaskEventHandle;
import morning.cat.producer.TaskEventProducer;
import morning.cat.producer.TaskEventProducerWithTranslator;
import morning.cat.utils.DisruptorUtil;

public class TaskMain {

    public static void main(String[] args) throws Exception {

        // The factory for the event
        TaskEventFactory factory = new TaskEventFactory();

        // Specify the size of the ring buffer, must be power of 2.
        int bufferSize = 1024;

        // Construct the Disruptor
        Disruptor<TaskEvent> disruptor = new Disruptor<>(factory, bufferSize, DaemonThreadFactory.INSTANCE);

        // The default wait strategy used by the Disruptor is the BlockingWaitStrategy.
        // Internally, BlockingWaitStrategy uses a typical lock and condition variable to
        // handle thread wake-up. It is the slowest of the available wait strategies, but
        // it is the most conservative with respect to CPU usage and gives the most
        // consistent behavior across the widest variety of deployment options. Knowledge
        // of the deployed system can, however, allow for additional performance.

        // Construct the Disruptor with a SingleProducerSequencer
        // (shown for illustration only; the rest of this example uses the first instance)
        Disruptor<TaskEvent> disruptor2 = new Disruptor<>(
                factory, bufferSize, DaemonThreadFactory.INSTANCE, ProducerType.SINGLE, new YieldingWaitStrategy());

        // SleepingWaitStrategy: like BlockingWaitStrategy, it tries to be conservative
        // with CPU usage. It uses a simple busy-wait loop with a call to
        // LockSupport.parkNanos(1) in the middle of the loop. On a typical Linux system
        // this pauses the thread for about 60 µs. Its advantage is that the producing
        // thread does not need to do anything other than increment the appropriate
        // counter, and does not pay the cost of signalling a condition variable. However,
        // the mean latency of moving events between producer and consumer threads is
        // higher. It works best where low latency is not required but a low impact on
        // the producing thread is desired. A common use case is asynchronous logging.

        // YieldingWaitStrategy: one of the two wait strategies suitable for low-latency
        // systems, where CPU cycles can be burned with the goal of improving latency.
        // It busy-spins waiting for the sequence to increment to the appropriate value,
        // calling Thread.yield() inside the loop to allow other queued threads to run.
        // It is the recommended wait strategy when very high performance is required and
        // the number of event handler threads is less than the total number of logical
        // cores, for example when hyper-threading is enabled.

        // BusySpinWaitStrategy: the highest-performing wait strategy, but the one that
        // puts the highest constraints on the deployment environment. It should be used
        // only if the number of event handler threads is smaller than the number of
        // physical cores on the box, for example when hyper-threading is disabled.

        // Connect the handler
        disruptor.handleEventsWith(new TaskEventHandle())
                // .then(new CleanTaskEventHandle()) // clear objects from the ring buffer
                ;

        // Start the Disruptor, starts all threads running.
        // DisruptorUtil is a helper from this project that is not shown in this article;
        // for a freshly constructed instance, calling disruptor.start() directly is enough.
        // disruptor.start();
        if (!DisruptorUtil.isStarted(disruptor)) {
            disruptor.start();
        }

        // Get the ring buffer from the Disruptor to be used for publishing.
        RingBuffer<TaskEvent> ringBuffer = disruptor.getRingBuffer();

        // Producers
        TaskEventProducer producer = new TaskEventProducer(ringBuffer);
        TaskEventProducerWithTranslator translator = new TaskEventProducerWithTranslator(ringBuffer);

        // Publish two tasks per iteration, forever
        for (long l = 0; true; l++) {
            Task task = new Task();
            task.setTaskId(System.currentTimeMillis());
            task.setTaskName(Thread.currentThread().getName() + task.getTaskId());
            task.setTaskContent("Hello World,this is disruptor");
            producer.onData(task);

            Thread.sleep(100);

            Task task2 = new Task();
            task2.setTaskId(System.currentTimeMillis());
            // Use task2's own id here (the original reused task.getTaskId())
            task2.setTaskName(Thread.currentThread().getName() + task2.getTaskId());
            task2.setTaskContent("Hello World,this is task2");
            translator.onData(task2);
        }
    }
}
```
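The trade-offs between the wait strategies described in the comments above come down to what a consumer does while the sequence it wants is not yet available. The sketch below is a plain-Java illustration under stated assumptions, not the library's code: `WaitSketch`, the `Wait` interface and the three constants are hypothetical, each one reduces a strategy to a single idle action, and the real classes are more elaborate. The lock-and-condition approach of BlockingWaitStrategy is left out to keep the example short.

```java
import java.util.concurrent.atomic.AtomicLong;
import java.util.concurrent.locks.LockSupport;

public class WaitSketch {

    /** What the consumer does between two checks of the producer's cursor. */
    interface Wait {
        void idle();
    }

    // Busy spin: lowest latency, keeps one core fully occupied (Thread.onSpinWait needs Java 9+).
    static final Wait BUSY_SPIN = Thread::onSpinWait;

    // Yielding: still burns CPU, but lets other runnable threads have a turn.
    static final Wait YIELDING = Thread::yield;

    // Sleeping: parks briefly, trading higher latency for low CPU usage.
    static final Wait SLEEPING = () -> LockSupport.parkNanos(1L);

    /** Loops until the cursor has reached the wanted sequence, idling between checks. */
    static long waitFor(long sequence, AtomicLong cursor, Wait wait) {
        long available;
        while ((available = cursor.get()) < sequence) {
            wait.idle();
        }
        return available;
    }

    public static void main(String[] args) throws InterruptedException {
        for (Wait wait : new Wait[] {BUSY_SPIN, YIELDING, SLEEPING}) {
            AtomicLong cursor = new AtomicLong(-1);
            // The producer advances the cursor from 0 to 1000 on its own thread.
            Thread producer = new Thread(() -> {
                for (long s = 0; s <= 1000; s++) {
                    cursor.set(s);
                }
            });
            producer.start();
            long seen = waitFor(1000, cursor, wait);
            producer.join();
            System.out.println("reached sequence " + seen);
        }
    }
}
```

Note that none of these idle actions requires the producer to signal anything; it only advances the counter, which is the advantage the comments attribute to the non-blocking strategies.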