Simulated login

Notes

1. Simulated login: used to crawl information that belongs to a particular user and is only visible once that user is logged in.

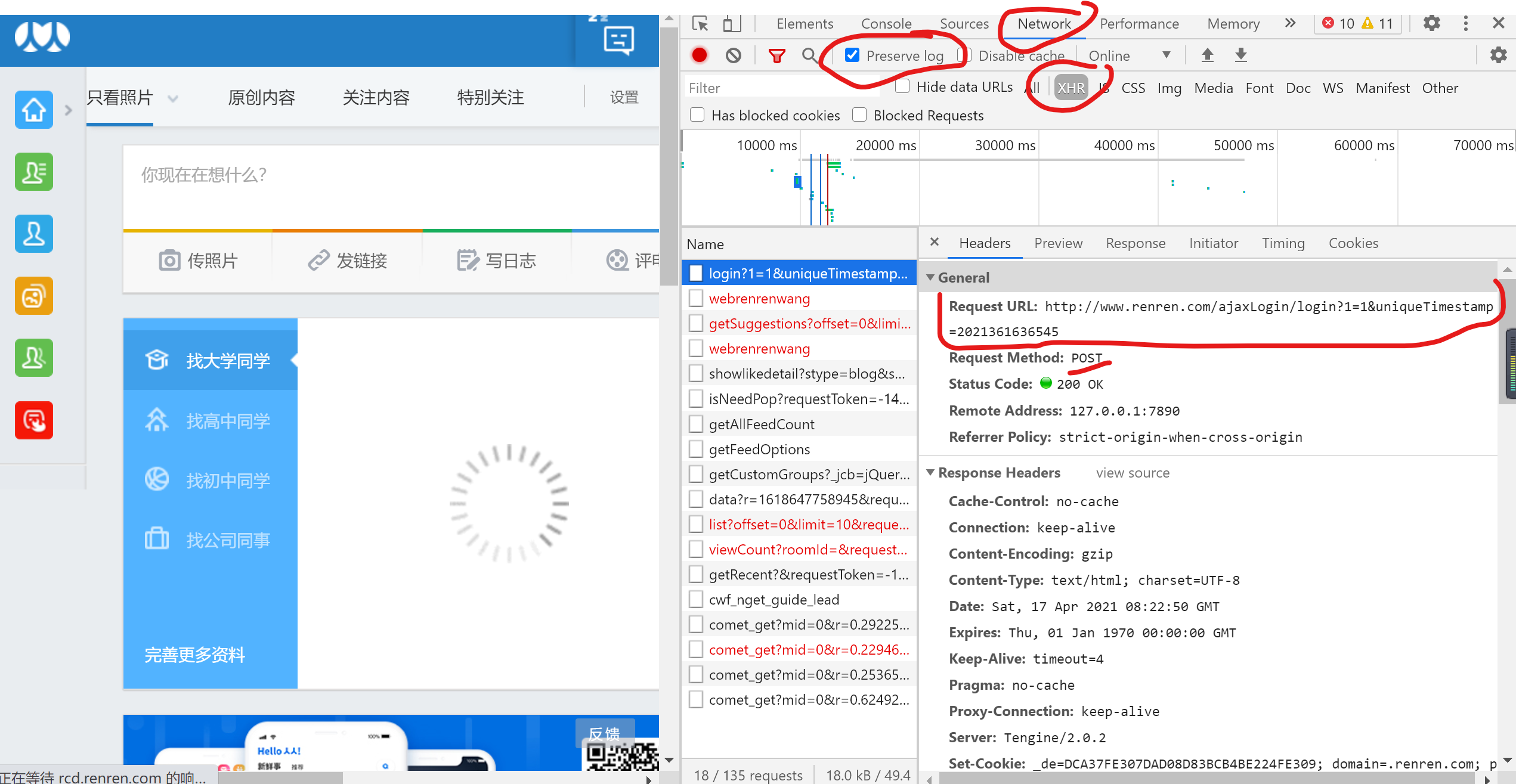

2. Demand: simulated Login of Renren.

-① After clicking the login button, a post request will be initiated

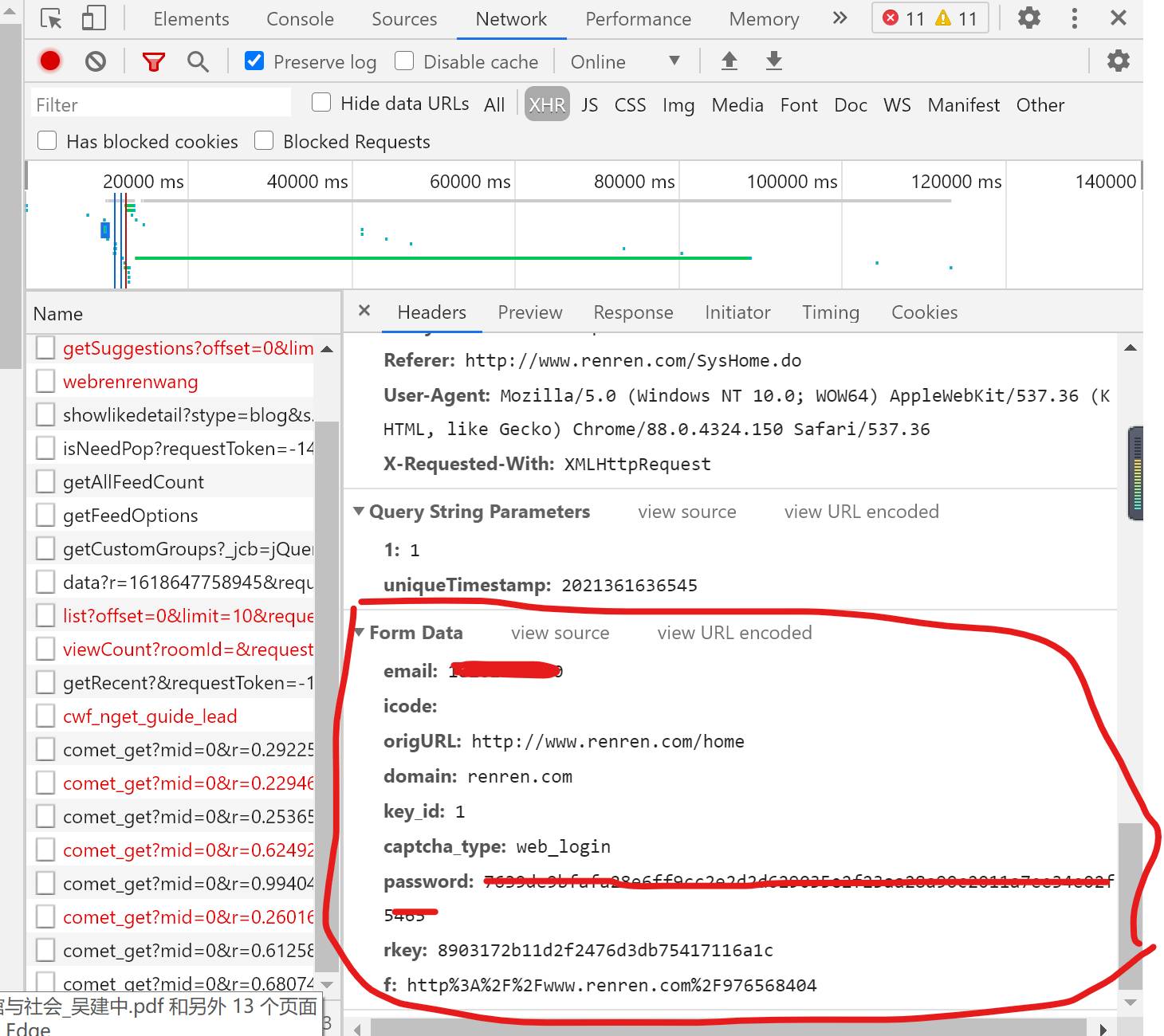

-② The post request will carry the relevant login information (user name, password, verification code...) entered before login

-③ Verification Code: each request will change dynamically
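To make ② concrete: a form login sends its fields as an `application/x-www-form-urlencoded` POST body. The sketch below only *prepares* such a request with `requests` (nothing is sent over the network) so the method, header and body can be inspected. The field names follow the Renren form used in the scripts further down; all values are placeholders, and `icode` stands for the captcha text that changes on every request.

```python
import requests

# Placeholder login fields; "icode" stands for the recognized captcha text.
login_form = {
    "email": "user@example.com",
    "password": "secret",
    "icode": "ab12",
}

# Build (but do not send) the POST request that clicking the login button would trigger.
prepared = requests.Request(
    "POST", "http://www.renren.com/ajaxLogin/login", data=login_form
).prepare()

print(prepared.method)                   # POST
print(prepared.headers["Content-Type"])  # application/x-www-form-urlencoded
print(prepared.body)                     # email=user%40example.com&password=secret&icode=ab12
```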

3. Requirement: crawl the current user's information (the user information displayed on their personal home page).

4. Feature of the HTTP/HTTPS protocols: they are stateless.

5. Why the personal home page data is not returned: when the second request, for the personal home page, is sent, the server does not know that it comes from a client that has already logged in.
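A toy, in-process model of points 4 and 5 (no real server is involved; `handle_login`, `handle_profile`, `SESSIONS` and the `sid` cookie are hypothetical names chosen for illustration): the profile handler can only use the headers of the request in front of it, so a second request counts as "logged in" only if it carries the cookie that the login response set.

```python
import secrets
from http.cookies import SimpleCookie

# Hypothetical server-side session store: session id -> logged-in user.
SESSIONS = {}


def handle_login(form):
    """Toy login handler; returns (status, response_headers)."""
    if form.get("email") == "demo" and form.get("password") == "demo-pass":
        sid = secrets.token_hex(8)
        SESSIONS[sid] = form["email"]
        return 200, {"Set-Cookie": f"sid={sid}; Path=/"}
    return 401, {}


def handle_profile(request_headers):
    """Toy profile handler; it can only use what this one request carries."""
    cookie = SimpleCookie(request_headers.get("Cookie", ""))
    sid = cookie["sid"].value if "sid" in cookie else None
    user = SESSIONS.get(sid)
    if user is None:
        return 302, "redirect to login page"
    return 200, f"profile page of {user}"


status, login_headers = handle_login({"email": "demo", "password": "demo-pass"})

# Second request without the cookie: the server cannot tell it follows a login.
print(handle_profile({}))  # (302, 'redirect to login page')

# Second request that echoes the cookie back, as a browser or requests.Session would.
sid_value = SimpleCookie(login_headers["Set-Cookie"])["sid"].value
print(handle_profile({"Cookie": f"sid={sid_value}"}))  # (200, 'profile page of demo')
```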

6. Cookie: lets the server record the client's state.

- Manual handling: copy the cookie value from a packet-capture tool and put it into headers (not recommended, since it is not general-purpose).

- Automatic handling:

  - Where does the cookie value come from?

    - The server creates it when it handles the simulated-login POST request.

  - Session object:

    - ① Purpose: 1. it can send requests; 2. if a cookie is produced while a request is being sent through it, the cookie is automatically stored in the session object and carried on later requests.

    - ② Create a session object: session = requests.Session()

    - ③ Use the session object to send the simulated-login POST request (the cookie is stored in the session).

    - ④ Use the session object to send the GET request for the personal home page (the cookie is carried along).
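The two approaches in point 6 differ only in where the `Cookie` header comes from. The sketch below builds (without sending) the profile-page GET three ways with `requests`: with a pasted `Cookie` header, through a `Session` that holds a cookie, and bare. Cookie names and values are made-up placeholders, and setting the cookie by hand stands in for the `Set-Cookie` the real login response would deliver.

```python
import requests

PROFILE_URL = "http://www.renren.com/976568404/profile"

# Manual handling: a Cookie header pasted from a packet-capture tool.
# The value is a made-up placeholder, not a real Renren cookie.
copied_cookie = "t=placeholder_token; uid=placeholder_uid"
manual = requests.Request("GET", PROFILE_URL, headers={"Cookie": copied_cookie}).prepare()
print(manual.headers["Cookie"])  # t=placeholder_token; uid=placeholder_uid

# Automatic handling: the session's cookie jar. In the real flow, session.post(login_url, ...)
# fills it from the server's Set-Cookie headers; here a cookie is set by hand as a stand-in.
session = requests.Session()
session.cookies.set("t", "token_from_login_response")
auto = session.prepare_request(requests.Request("GET", PROFILE_URL))
print(auto.headers.get("Cookie"))  # t=token_from_login_response

# The same GET prepared outside the session carries no cookie.
bare = requests.Request("GET", PROFILE_URL).prepare()
print(bare.headers.get("Cookie"))  # None
```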

7. Proxies: used to get around IP-based anti-crawling mechanisms.

① What a proxy is: a proxy server.

② What a proxy does: it gets around the access restrictions placed on your own IP, and it hides your real IP so that it is not directly exposed to attacks.

③ Types of proxy IPs:

- http: for URLs that use the HTTP protocol
- https: for URLs that use the HTTPS protocol

④ Anonymity levels of proxy IPs:

Transparent: the server knows the request went through a proxy and also knows the real IP address behind it.

Anonymous: the server knows a proxy is used, but does not know the real IP address.

Elite (high anonymity): the server does not know a proxy is used, let alone the real IP address.
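Point 7 in code, as a sketch: with `requests`, proxies are passed as a dict keyed by URL scheme (the `proxies=` argument), which matches the http/https split in ③. The proxy addresses below are made up (203.0.113.0/24 is a documentation range), `proxy_for` is a simplified stand-in for the scheme lookup `requests` performs, and `classify_anonymity` is a hypothetical heuristic that maps ④ onto headers a target server might see (`X-Forwarded-For`, `Via`). Real proxies vary, so treat the classifier as a rough illustration, not a reliable test.

```python
from urllib.parse import urlsplit

# Hypothetical proxy addresses (203.0.113.0/24 is reserved for documentation).
PROXIES = {
    "http": "http://203.0.113.10:8080",   # used for http:// URLs
    "https": "http://203.0.113.11:8080",  # used for https:// URLs
}


def proxy_for(url, proxies):
    """Pick a proxy by the URL's scheme (simplified version of the lookup requests does)."""
    return proxies.get(urlsplit(url).scheme)


def classify_anonymity(headers_seen_by_server, real_ip):
    """Rough header-based heuristic for the three anonymity levels (an assumption, not a standard)."""
    h = {k.lower(): v for k, v in headers_seen_by_server.items()}
    forwarded_ips = [ip.strip() for ip in h.get("x-forwarded-for", "").split(",")]
    if real_ip in forwarded_ips:
        return "transparent"  # proxy use and real IP both visible
    if any(name in h for name in ("via", "x-forwarded-for", "forwarded", "proxy-connection")):
        return "anonymous"    # proxy use visible, real IP hidden
    return "elite"            # neither visible


# With requests the dict is passed per call, e.g.:
#   requests.get("http://www.renren.com/", proxies=PROXIES, timeout=10)
print(proxy_for("https://www.renren.com/", PROXIES))                              # http://203.0.113.11:8080
print(classify_anonymity({"X-Forwarded-For": "198.51.100.7"}, "198.51.100.7"))    # transparent
print(classify_anonymity({"Via": "1.1 some-proxy"}, "198.51.100.7"))              # anonymous
print(classify_anonymity({}, "198.51.100.7"))                                     # elite
```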

Practice

Simulated login to Renren

import requests
from lxml import etree
from CodeClass import Chaojiying_Client


def getCodeText(imgPath, codeType):
    # Replace the placeholders with your Chaojiying account name, password and
    # software ID (generated under User center >> Software ID)
    chaojiying = Chaojiying_Client('user name', 'password', 'Software ID')
    # Read the local captcha image as bytes
    with open(imgPath, 'rb') as f:
        im = f.read()
    # codeType is a captcha type from the Chaojiying price page;
    # PostPic returns a dict whose 'pic_str' field holds the recognized text
    return chaojiying.PostPic(im, codeType)['pic_str']


# 1. Fetch the login page and download the captcha image
url = 'http://www.renren.com/SysHome.do'
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:86.0) Gecko/20100101 Firefox/86.0',
}
page_text = requests.get(url=url, headers=headers).text
tree = etree.HTML(page_text)
code_img_src = tree.xpath('//*[@id="verifyPic_login"]/@src')[0]
code_img_data = requests.get(url=code_img_src, headers=headers).content
with open('./code.jpg', 'wb') as fp:
    fp.write(code_img_data)

# 2. Recognize the captcha with the Chaojiying sample client (captcha type 1005)
result = getCodeText('code.jpg', 1005)

# 3. Send the POST request (simulated login)
login_url = 'http://www.renren.com/ajaxLogin/login?1=1&uniqueTimestamp=2021361636545'
data = {
    'email': 'user name',
    'icode': result,
    'origURL': 'http://www.renren.com/home',
    'domain': 'renren.com',
    'key_id': 1,
    'captcha_type': 'web_login',
    'password': '',  # fill in the password value sent by the browser's login request (from a packet capture)
    'rkey': 'd0cf42c2d3d337f9e5d14083f2d52cb2',
    'f': 'http%3A%2F%2Fwww.renren.com%2F976568404%2Fprofile'
}
response = requests.post(url=login_url, headers=headers, data=data)
print(response.status_code)

# 4. Save the login response to disk
login_page_text = response.text
with open('renren.html', 'w', encoding='utf-8') as fp:
    fp.write(login_page_text)
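`Chaojiying_Client.PostPic` returns the service's parsed JSON response as a dict, while the `icode` form field needs only the recognized text. A small helper can pull that text out and fail loudly when recognition did not succeed. The field names `err_no` (0 on success), `err_str` and `pic_str` are assumed from Chaojiying's documented response format, and `extract_captcha_text` is a hypothetical name; the sample dicts are made up.

```python
def extract_captcha_text(result):
    """Return the recognized captcha text from a PostPic() result dict.

    Assumes Chaojiying's response fields: err_no (0 = success), err_str, pic_str.
    """
    if result.get("err_no") != 0:
        raise RuntimeError(f"captcha recognition failed: {result.get('err_str')}")
    return result["pic_str"]


# Made-up sample responses in the assumed format.
ok = {"err_no": 0, "err_str": "OK", "pic_id": "0", "pic_str": "ab12", "md5": ""}
print(extract_captcha_text(ok))  # ab12

bad = {"err_no": -1, "err_str": "example error", "pic_id": "0", "pic_str": "", "md5": ""}
try:
    extract_captcha_text(bad)
except RuntimeError as exc:
    print(exc)  # captcha recognition failed: example error
```

In the script, the helper would wrap the `chaojiying.PostPic(im, codeType)` call inside `getCodeText`, so a failed recognition stops the run before a doomed login POST is sent.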

Crawl the current Renren user's personal profile page

# Coding process:
# 1. Recognize the captcha and get the text in the captcha image
# 2. Send the POST request (handling the request parameters)
# 3. Persist the response data

import requests
from lxml import etree
from CodeClass import Chaojiying_Client


def getCodeText(imgPath, codeType):
    # Replace the placeholders with your Chaojiying account name, password and software ID
    chaojiying = Chaojiying_Client('user name', 'password', 'Software ID')
    with open(imgPath, 'rb') as f:
        im = f.read()
    # PostPic returns a dict whose 'pic_str' field holds the recognized text
    return chaojiying.PostPic(im, codeType)['pic_str']


# Create a session object: it stores cookies set by the server and sends them back
session = requests.Session()

# 1. Fetch the login page and the captcha image through the session, so that any
#    cookie the server ties to the captcha is also sent with the login POST
url = 'http://www.renren.com/SysHome.do'
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:86.0) Gecko/20100101 Firefox/86.0',
}
page_text = session.get(url=url, headers=headers).text
tree = etree.HTML(page_text)
code_img_src = tree.xpath('//*[@id="verifyPic_login"]/@src')[0]
code_img_data = session.get(url=code_img_src, headers=headers).content
with open('./code.jpg', 'wb') as fp:
    fp.write(code_img_data)

# Recognize the captcha with the Chaojiying sample client (captcha type 1005)
result = getCodeText('code.jpg', 1005)

# 2. Send the POST request (simulated login) through the session
login_url = 'http://www.renren.com/ajaxLogin/login?1=1&uniqueTimestamp=2021361636545'
data = {
    'email': 'user name',
    'icode': result,
    'origURL': 'http://www.renren.com/home',
    'domain': 'renren.com',
    'key_id': 1,
    'captcha_type': 'web_login',
    'password': '',  # fill in the password value sent by the browser's login request (from a packet capture)
    'rkey': 'd0cf42c2d3d337f9e5d14083f2d52cb2',
    'f': 'http%3A%2F%2Fwww.renren.com%2F976568404%2Fprofile'
}
response = session.post(url=login_url, headers=headers, data=data)
print(response.status_code)

# 3. Request the current user's personal home page; the session carries the login cookie
detail_url = 'http://www.renren.com/976568404/profile'
# Manual cookie handling (not general-purpose):
# headers = {'Cookie': ''}
detail_page_text = session.get(url=detail_url, headers=headers).text
with open('jj.html', 'w', encoding='utf-8') as fp:
    fp.write(detail_page_text)
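Both scripts pass `headers=headers` on every call. A `requests.Session` also keeps default headers in `session.headers` and merges them into each request it sends, so the User-Agent can be set once. The sketch below prepares the profile-page GET through the session the same way `session.get()` builds it, without sending anything; the cookie value is a placeholder standing in for the one the login response would set.

```python
import requests

session = requests.Session()
# Default headers: merged into every request sent through this session.
session.headers.update({
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:86.0) Gecko/20100101 Firefox/86.0",
})
# Placeholder cookie standing in for the one the login POST response would set.
session.cookies.set("t", "token_from_login_response")

# Prepare (without sending) the profile-page GET as the session would build it.
detail_url = "http://www.renren.com/976568404/profile"
prepared = session.prepare_request(requests.Request("GET", detail_url))

print(prepared.headers["User-Agent"])
print(prepared.headers["Cookie"])  # t=token_from_login_response
```

With the User-Agent stored on the session, the later calls can drop `headers=headers`; per-call headers, when given, are merged on top of the session defaults.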

Appendix

Chaojiying (Super Eagle) client code

Module file name: CodeClass.py

import requests
from hashlib import md5


class Chaojiying_Client(object):

    def __init__(self, username, password, soft_id):
        self.username = username
        password = password.encode('utf8')
        # The API expects the MD5 hex digest of the password, not the plain text
        self.password = md5(password).hexdigest()
        self.soft_id = soft_id
        self.base_params = {
            'user': self.username,
            'pass2': self.password,
            'softid': self.soft_id,
        }
        self.headers = {
            'Connection': 'Keep-Alive',
            'User-Agent': 'Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 5.1; Trident/4.0)',
        }

    def PostPic(self, im, codetype):
        """
        im: image bytes
        codetype: captcha type, see http://www.chaojiying.com/price.html
        """
        params = {
            'codetype': codetype,
        }
        params.update(self.base_params)
        files = {'userfile': ('ccc.jpg', im)}
        r = requests.post('http://upload.chaojiying.net/Upload/Processing.php', data=params, files=files, headers=self.headers)
        return r.json()

    def ReportError(self, im_id):
        """
        im_id: ID of the image whose captcha was recognized incorrectly (the pic_id returned by PostPic)
        """
        params = {
            'id': im_id,
        }
        params.update(self.base_params)
        r = requests.post('http://upload.chaojiying.net/Upload/ReportError.php', data=params, headers=self.headers)
        return r.json()
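What `Chaojiying_Client` puts on the wire can be checked offline: `pass2` is the MD5 hex digest of the password, and `PostPic` sends a multipart/form-data POST with the image in the `userfile` field. The sketch below rebuilds that request with `requests` and only prepares it; the image bytes are fake, and the account values reuse the placeholders from `getCodeText` (with the literal string "password" so the digest is easy to check).

```python
from hashlib import md5

import requests

# pass2 is the MD5 hex digest of the UTF-8 encoded password.
pass2 = md5("password".encode("utf8")).hexdigest()
print(pass2)  # 5f4dcc3b5aa765d61d8327deb882cf99

# Same fields PostPic sends; values are placeholders and the image bytes are fake.
params = {"codetype": 1902, "user": "user name", "pass2": pass2, "softid": "Software ID"}
files = {"userfile": ("ccc.jpg", b"\xff\xd8fake-jpeg-bytes")}

# Prepare (without sending) the upload request.
prepared = requests.Request(
    "POST", "http://upload.chaojiying.net/Upload/Processing.php", data=params, files=files
).prepare()

print(prepared.headers["Content-Type"].split(";")[0])  # multipart/form-data
print(b'name="userfile"' in prepared.body)             # True
```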