Sony Camera API: a collection from the official website

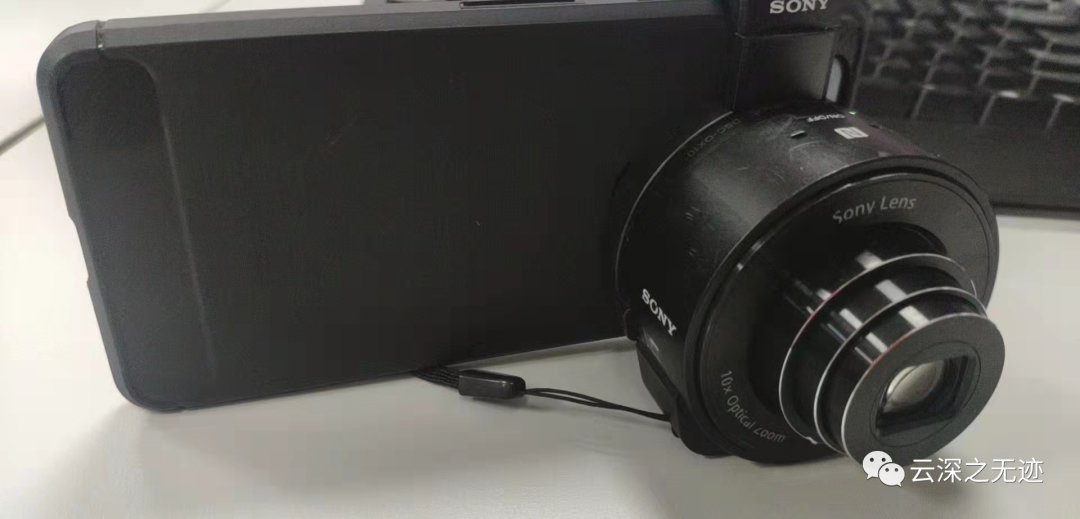

This is the device. It's a beast.

Clip it onto your phone and it's ready.

It connects over WiFi and streams in real time.

Someone even shot Mount Everest with a QX10.

On the API page, the QX10 and QX100 are listed together. I don't know whether code for the two can be mixed, so I'll test it.

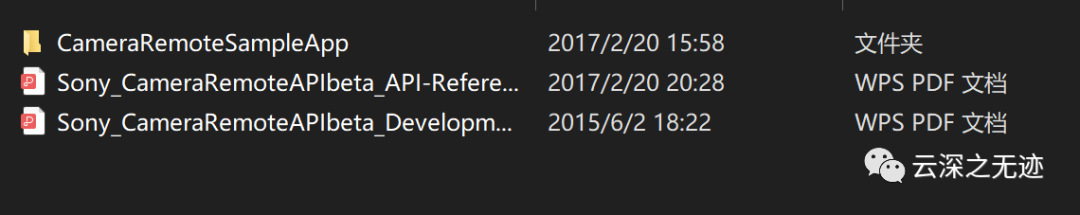

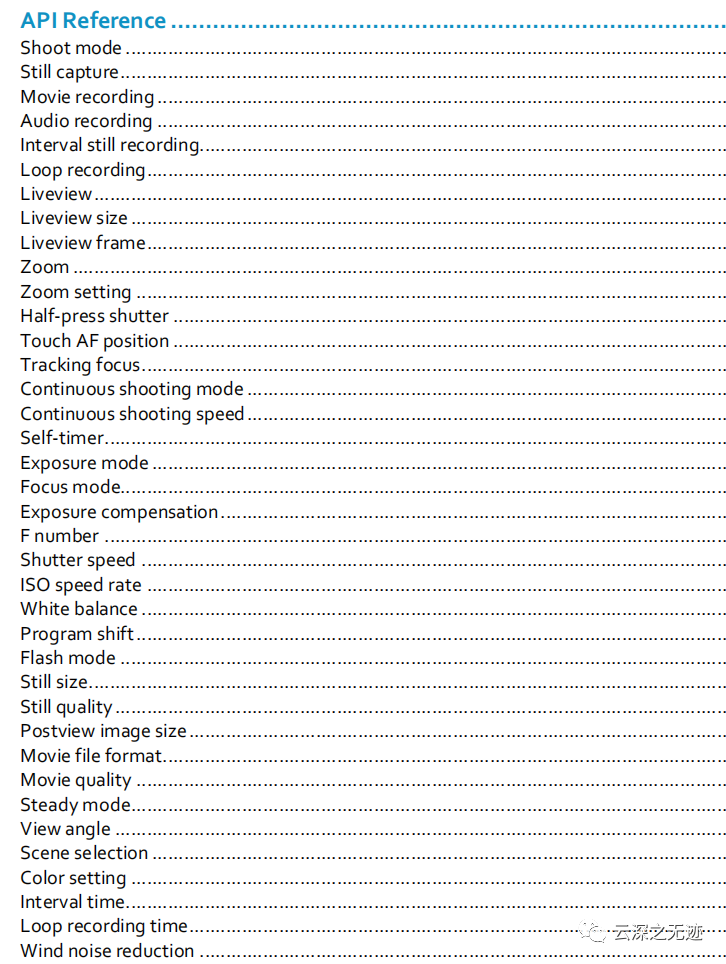

Here is the list of APIs.

There are Android interfaces and, of course, Apple's.

Install the two Python dependencies:

    pip install opencv-python
    pip install requests

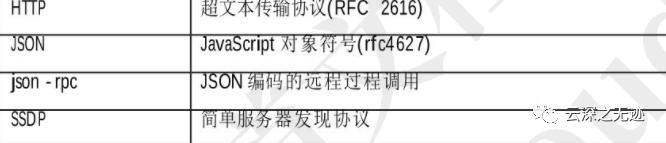

Four protocols are used here. SSDP lets the host discover the camera, HTTP carries the long-lived connection, and JSON carries the control sequence. I like the word "sequence" so much.
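As a rough illustration of the discovery step, the sketch below builds an SSDP M-SEARCH request and pulls the LOCATION header out of a reply. The search target string is, as far as I know, the one Sony uses for its Camera Remote API service; treat it, the helper names and the timeout as assumptions rather than something taken from this post.

```python
import socket

SSDP_ADDR = ("239.255.255.250", 1900)  # standard SSDP multicast group and port
# Assumed search target for Sony's Camera Remote API service.
SONY_ST = "urn:schemas-sony-com:service:ScalarWebAPI:1"


def build_msearch(st=SONY_ST, mx=2):
    """Return the bytes of an SSDP M-SEARCH request for the given search target."""
    lines = [
        "M-SEARCH * HTTP/1.1",
        "HOST: %s:%d" % SSDP_ADDR,
        'MAN: "ssdp:discover"',
        "MX: %d" % mx,
        "ST: %s" % st,
        "", "",  # blank line terminates the request
    ]
    return "\r\n".join(lines).encode("ascii")


def parse_location(reply):
    """Extract the LOCATION header (device description URL) from an SSDP reply."""
    for line in reply.decode("ascii", "replace").split("\r\n"):
        name, _, value = line.partition(":")
        if name.strip().upper() == "LOCATION":
            return value.strip()
    return None


def discover(timeout=3.0):
    """Send one M-SEARCH and return the first LOCATION URL, or None on timeout."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_msearch(), SSDP_ADDR)
        try:
            reply, _addr = sock.recvfrom(4096)
        except socket.timeout:
            return None
    return parse_location(reply)
```

Calling `discover()` while joined to the camera's WiFi network would be the way to try it; nothing is sent until then.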

The WiFi password is printed on the inside:

zP2EbQVr

This code is seriously bizarre....

https://sourceforge.net/projects/sony-desktop-dsc-qx10/

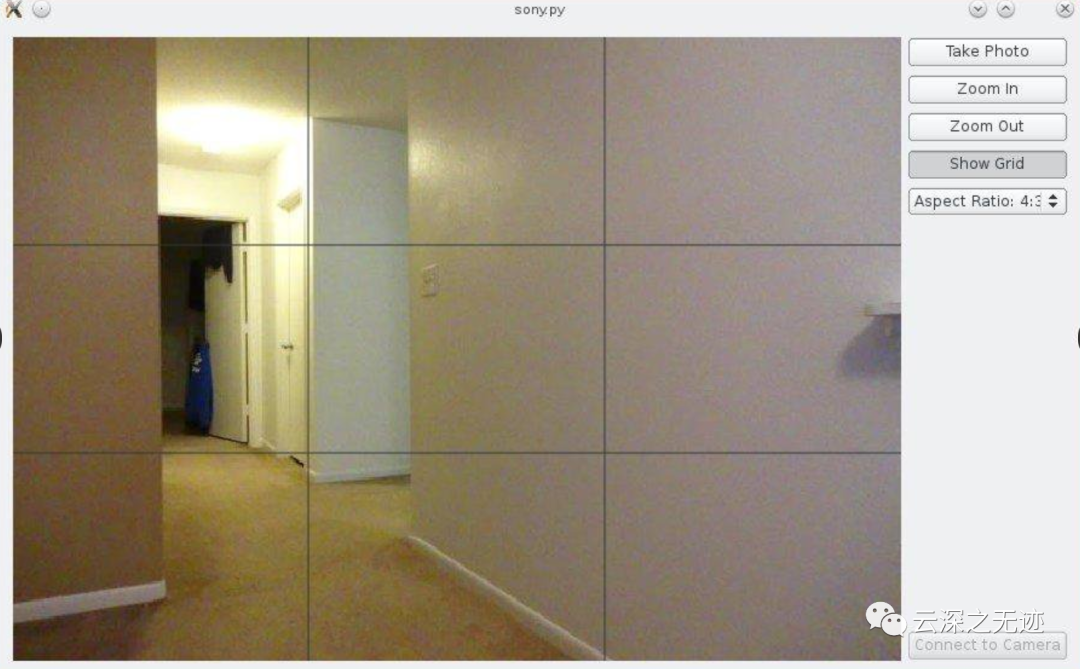

The interface is written in Qt4.

Take a good look at someone else's white camera.

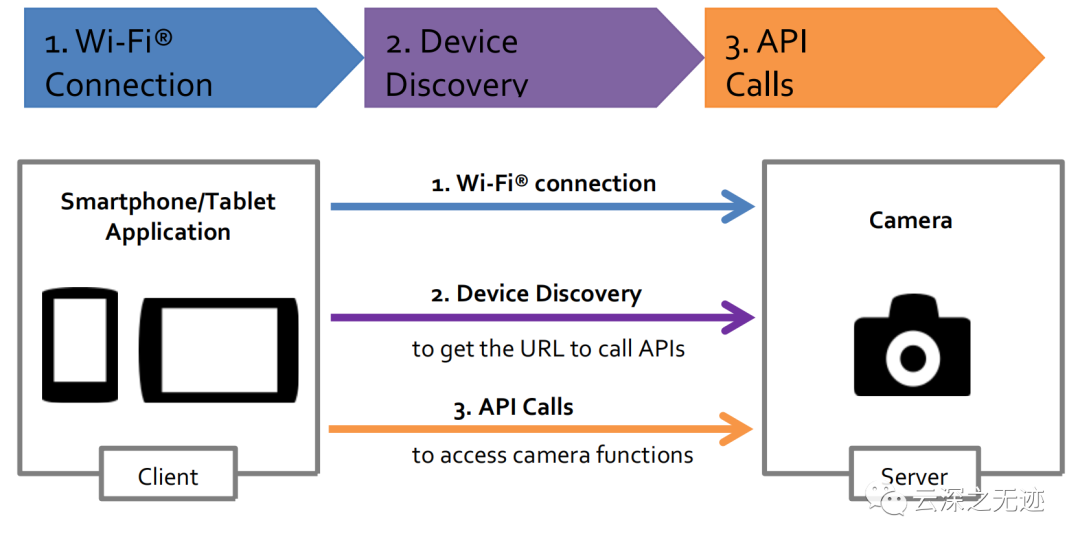

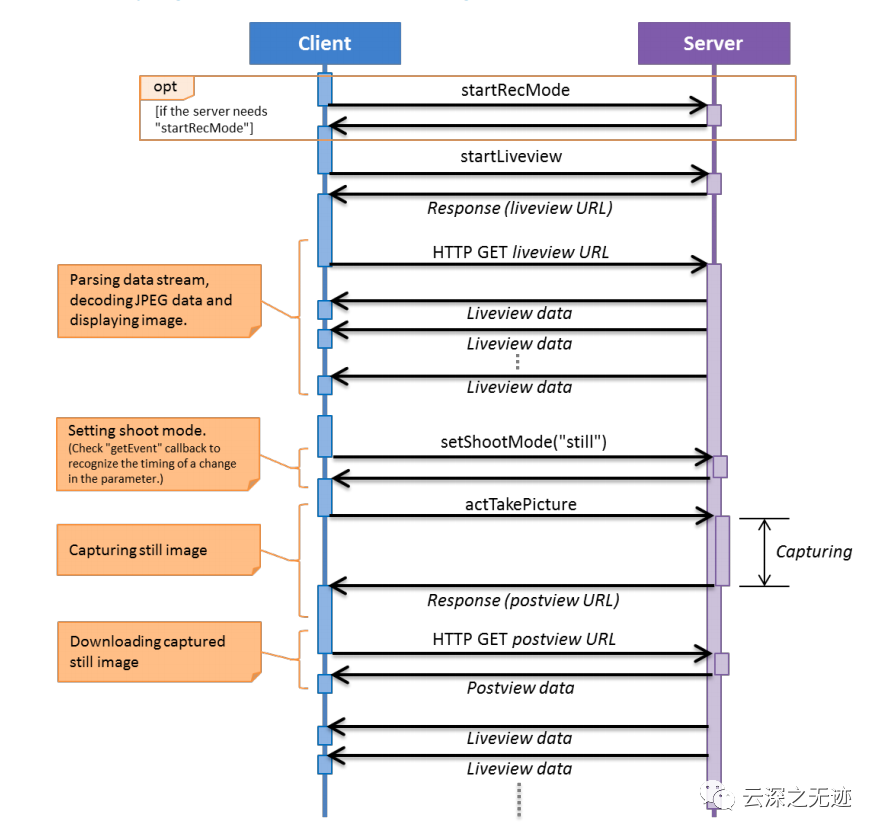

This is the connection diagram of the latest SDK

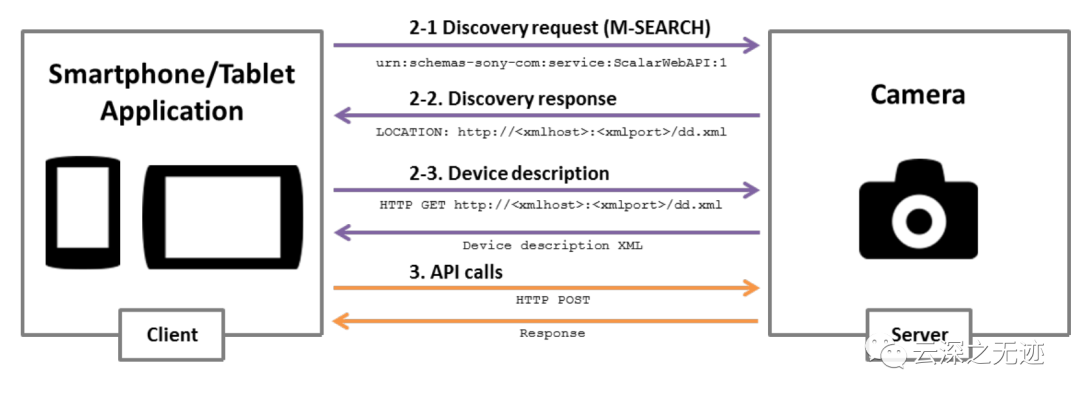

This is the previous connection diagram, which is actually the same

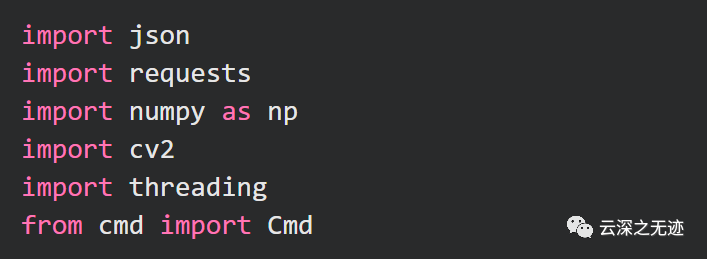

- json encodes the commands to send and decodes the replies

- requests wraps the HTTP protocol

- Numpy provides efficient arrays and is used together with the OpenCV library

- cv2 is the import name of OpenCV

- threading is the multithreading library

- cmd builds the command-line console

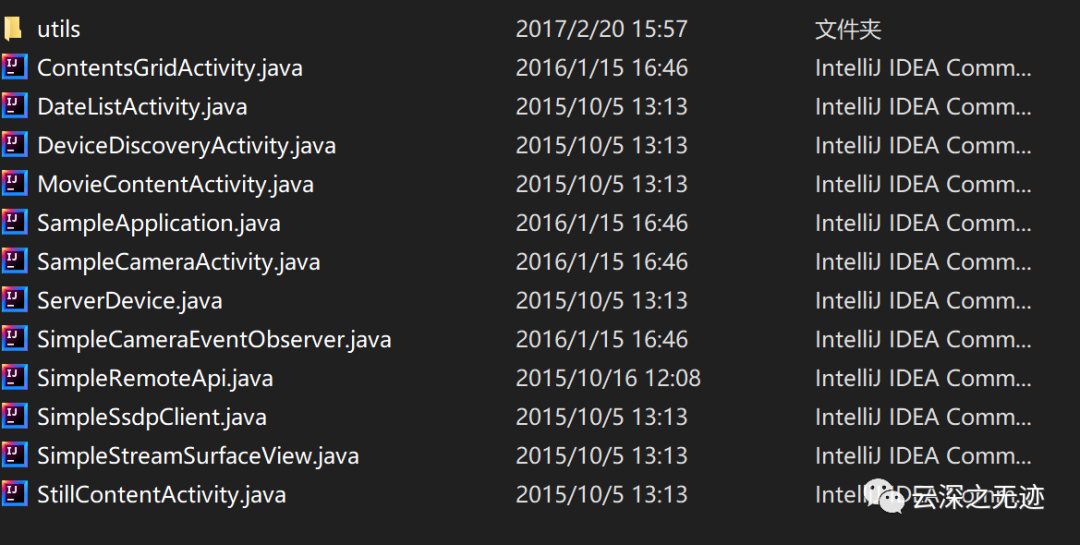

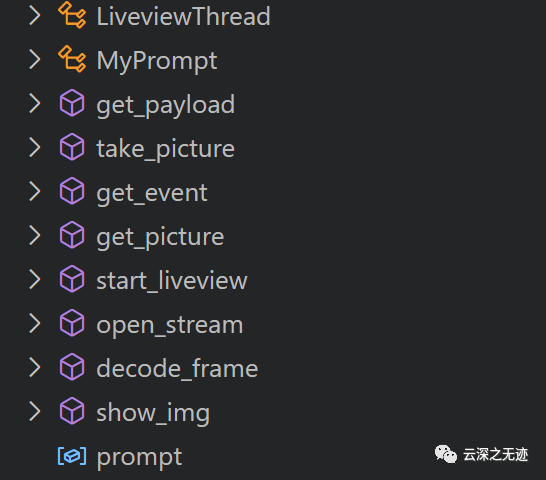

The complete set of functions and classes.

The display work is split out separately.

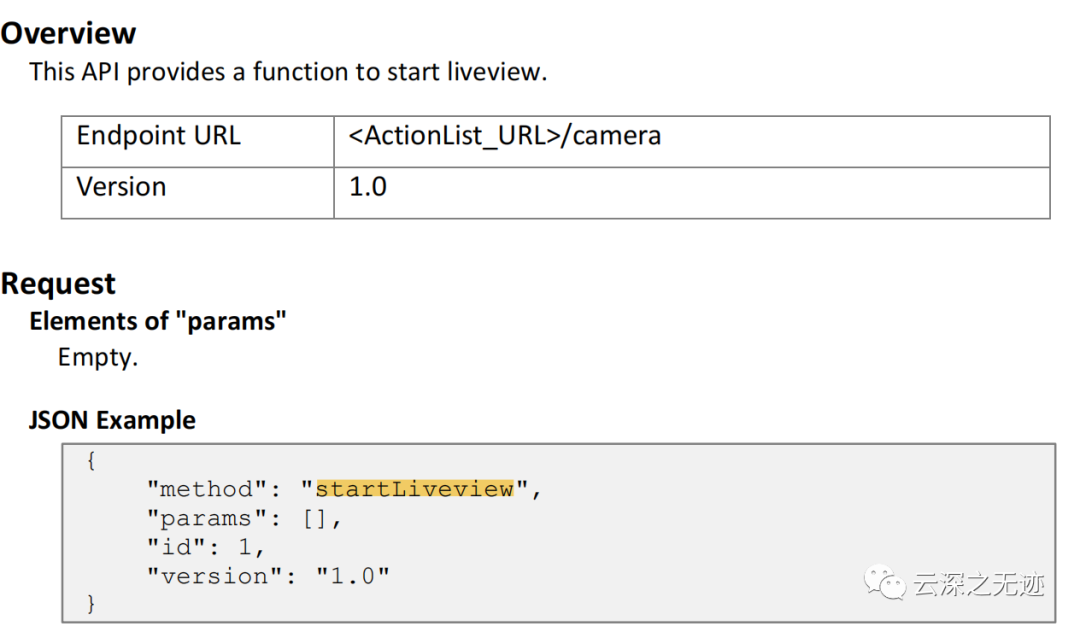

Here are the APIs I'm interested in from the list.

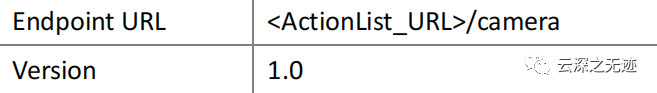

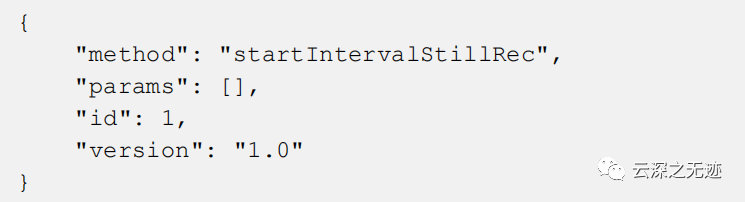

The first method starts the preview screen (liveview):

The method that ends it:

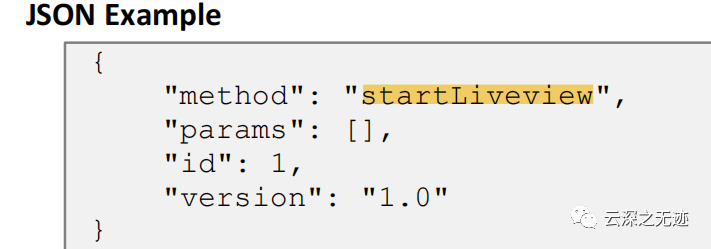

json example

Reply after execution

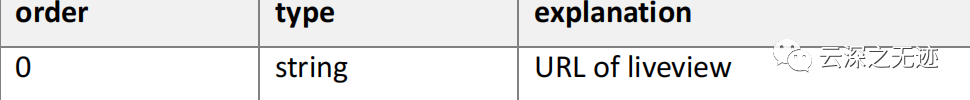

error code

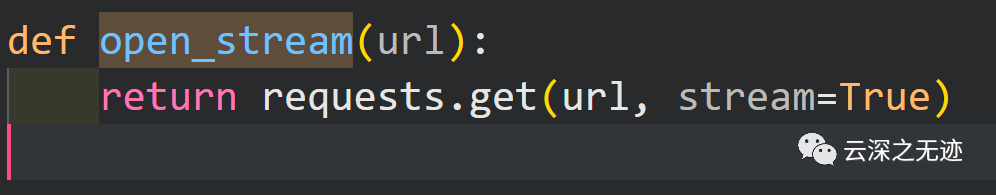

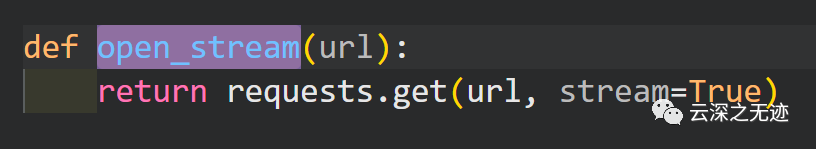
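To make the reply and error shapes concrete, here is a minimal sketch that separates a successful reply from an error reply. It assumes a reply carries either a `result` list or an `error` list of the form `[code, message]`; the `CameraError` class, the `parse_reply` helper and the sample strings are hypothetical and were not captured from a camera.

```python
import json


class CameraError(Exception):
    """Raised when the reply has an "error" member (hypothetical helper)."""

    def __init__(self, code, message):
        super().__init__("camera error %s: %s" % (code, message))
        self.code = code
        self.message = message


def parse_reply(text):
    """Return the "result" list of a reply, or raise CameraError."""
    reply = json.loads(text)
    if "error" in reply:
        code, message = reply["error"][0], reply["error"][1]
        raise CameraError(code, message)
    return reply.get("result", [])


# Sample replies made up for illustration.
ok = '{"result": ["http://10.0.0.1/liveview"], "id": 1}'
bad = '{"error": [1, "Not Available Now"], "id": 1}'

print(parse_reply(ok)[0])
try:
    parse_reply(bad)
except CameraError as exc:
    print(exc.code, exc.message)
```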

Let's first write a method that obtains the video stream; think of it as the fitting on a water pipe.

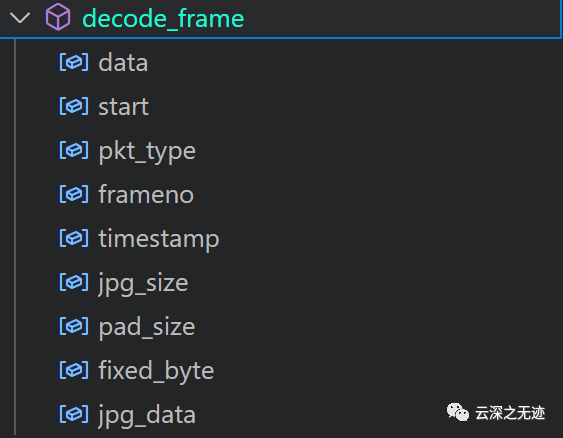

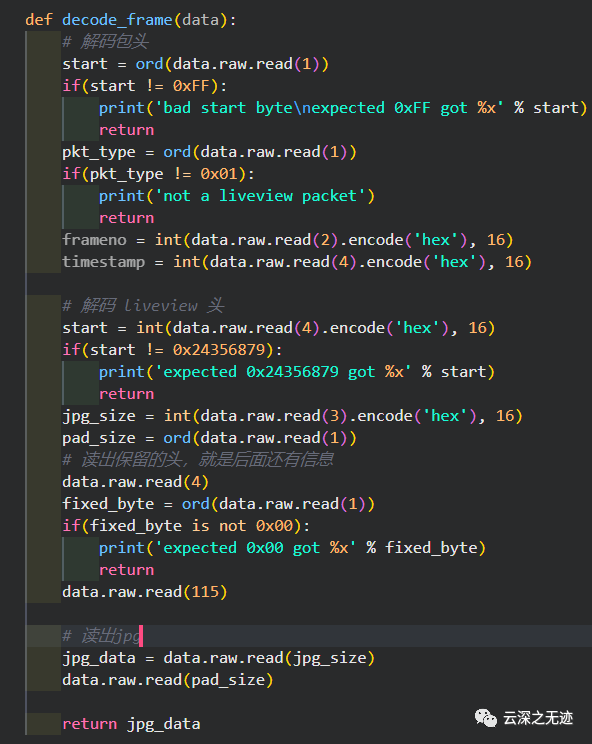

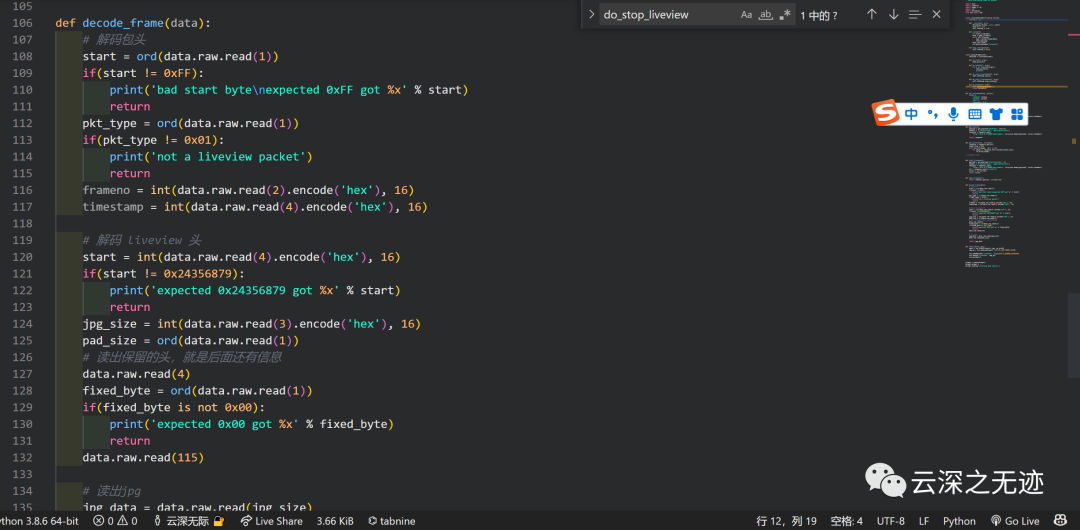

Once we have this data stream, the next step is to decode it.

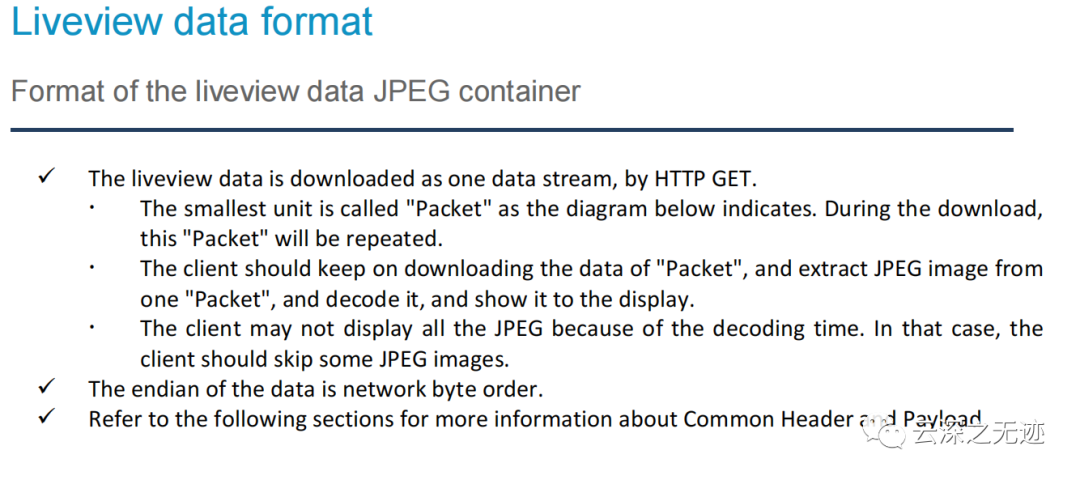

The client downloads the liveview data as a data stream through HTTP GET.

The smallest unit is called a "Packet", as shown in the figure below. This Packet is repeated throughout the download.

- The client should continuously download Packets, and decode and display each one as it is received.
- The client may not be able to display every JPEG because of decoding time. In that case, the client should skip some JPEG images.
- The byte order of the data is network byte order.
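One way to follow the "skip some JPEG images" advice is to keep only the newest frame: the download thread overwrites a single slot and the display code takes whatever is there. This is a sketch of that idea, not something from the Sony document; the `LatestFrame` name and its methods are hypothetical.

```python
import threading


class LatestFrame:
    """Single-slot mailbox: a new frame replaces any frame not yet displayed."""

    def __init__(self):
        self._lock = threading.Lock()
        self._frame = None
        self.dropped = 0  # frames overwritten before anyone displayed them

    def put(self, jpeg_bytes):
        """Called by the download thread for every decoded packet."""
        with self._lock:
            if self._frame is not None:
                self.dropped += 1
            self._frame = jpeg_bytes

    def take(self):
        """Called by the display code; returns the newest frame or None."""
        with self._lock:
            frame, self._frame = self._frame, None
            return frame


slot = LatestFrame()
for jpeg in (b"frame-1", b"frame-2", b"frame-3"):  # downloader runs ahead
    slot.put(jpeg)
print(slot.take(), slot.dropped)
```

The display side never falls behind by more than one frame, at the cost of silently dropping the ones in between.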

Whether or not you follow all of that, the takeaway is that the camera transmits one frame at a time.

Matching the header is only the first step of the long march. You still have to see whether the data part decodes into something.

The Common Header consists of the following 8 bytes.

- Start byte: 1 [B]
  - 0xFF, fixed
- Payload type: 1 [B], indicates the type of the payload
  - 0x01 = liveview images
  - 0x02 = frame information for liveview
- Sequence number: 2 [B]
  - Frame number, a 2-byte integer incremented for each frame
  - This frame number will be repeated.
- Timestamp: 4 [B]
  - 4-byte integer whose meaning depends on the payload type
  - If payload type = 0x01, the timestamp of the Common Header is in milliseconds. The start time may not start from zero, depending on the server.
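The 8-byte layout above maps directly onto a `struct` format string. The following is a minimal sketch of that mapping; `CommonHeader` and `parse_common_header` are hypothetical names, and the sample bytes are hand-made rather than captured from a camera.

```python
import struct
from collections import namedtuple

CommonHeader = namedtuple("CommonHeader", "payload_type sequence timestamp")

# ">" = network (big-endian) byte order: start byte, payload type,
# 2-byte sequence number, 4-byte timestamp = 8 bytes in total.
COMMON_HEADER = struct.Struct(">BBHI")


def parse_common_header(raw):
    """Unpack the 8-byte Common Header, raising ValueError if it looks wrong."""
    if len(raw) != COMMON_HEADER.size:
        raise ValueError("expected %d bytes, got %d" % (COMMON_HEADER.size, len(raw)))
    start, payload_type, sequence, timestamp = COMMON_HEADER.unpack(raw)
    if start != 0xFF:
        raise ValueError("bad start byte 0x%02X" % start)
    return CommonHeader(payload_type, sequence, timestamp)


# Hand-made header: liveview image, frame 2, timestamp 1000 ms.
sample = bytes([0xFF, 0x01, 0x00, 0x02, 0x00, 0x00, 0x03, 0xE8])
print(parse_common_header(sample))
```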

Payload header

The payload header is 128 bytes, laid out as follows.

- Start code: 4 [B]
  - Fixed (0x24, 0x35, 0x68, 0x79)
  - This can be used to detect the payload header.
- Payload data size without padding size: 3 [B]
  - In bytes.
  - If payload type = 0x01, the size is the size of the JPEG in the payload data.
  - If payload type = 0x02, the size is the size of the frame information data in the payload data.
- Padding size: 1 [B]
  - Padding size of the payload data after the JPEG data, in bytes.

If payload type = 0x01, the rest of the header is as follows.

- Reserved: 4 [B]
- Flag: 1 [B]
  - The value is set to 0x00
  - Other values are reserved
- Reserved: 115 [B]
  - All fixed, 0x00

If payload type = 0x02, the rest of the header is as follows.

- Frame information data version: 2 [B]
  - Data version of the frame information data.
  - The upper byte is the major version and the lower byte is the minor version.
  - 0x01, 0x00: version 1.0
  - The client should use the data version to ignore data it does not understand.
- Frame count: 2 [B]
  - Number of frame data entries.
- Single frame data size: 2 [B]
  - Size of a single frame data entry. If the data version is 1.0, the data size is 16 [B].
  - The client can read each frame using the data size.
- Reserved: 114 [B]
  - All fixed, 0x00
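The payload header layout can be turned into a small parser in the same way. This sketch works on the 128 header bytes only and covers the shared fields plus the type-specific ones; `parse_payload_header` and the dictionary keys are hypothetical names, and the sample header is hand-made.

```python
START_CODE = bytes([0x24, 0x35, 0x68, 0x79])
PAYLOAD_HEADER_SIZE = 128


def parse_payload_header(raw, payload_type):
    """Parse the 128-byte payload header into a dict of its documented fields."""
    if len(raw) != PAYLOAD_HEADER_SIZE:
        raise ValueError("payload header must be 128 bytes")
    if raw[:4] != START_CODE:
        raise ValueError("start code not found")
    fields = {
        "data_size": int.from_bytes(raw[4:7], "big"),  # 3-byte size, padding excluded
        "padding_size": raw[7],
    }
    if payload_type == 0x01:
        fields["flag"] = raw[12]  # follows the 4 reserved bytes at offsets 8..11
    elif payload_type == 0x02:
        fields["version"] = (raw[8], raw[9])  # (major, minor)
        fields["frame_count"] = int.from_bytes(raw[10:12], "big")
        fields["frame_size"] = int.from_bytes(raw[12:14], "big")
    return fields


# Hand-made header for a 0x01 packet: 300-byte JPEG followed by 4 padding bytes.
header = START_CODE + (300).to_bytes(3, "big") + bytes([4]) + bytes(120)
print(parse_payload_header(header, 0x01))
```

After the header, a client would read `data_size` bytes of payload and then discard `padding_size` bytes before looking for the next packet.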

That's about it. First check that the header is the right header, then read what follows.

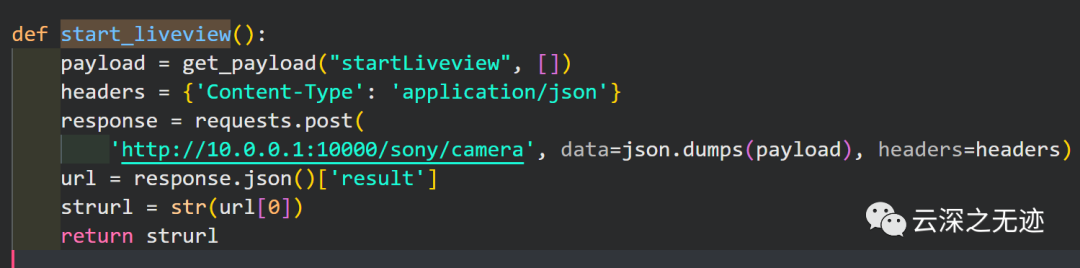

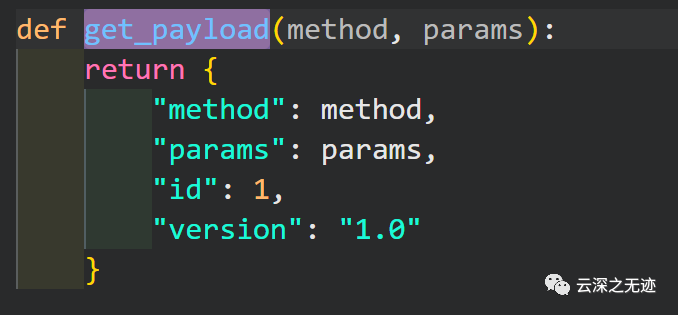

Pass the command that starts the video stream to the get_payload() function, here:

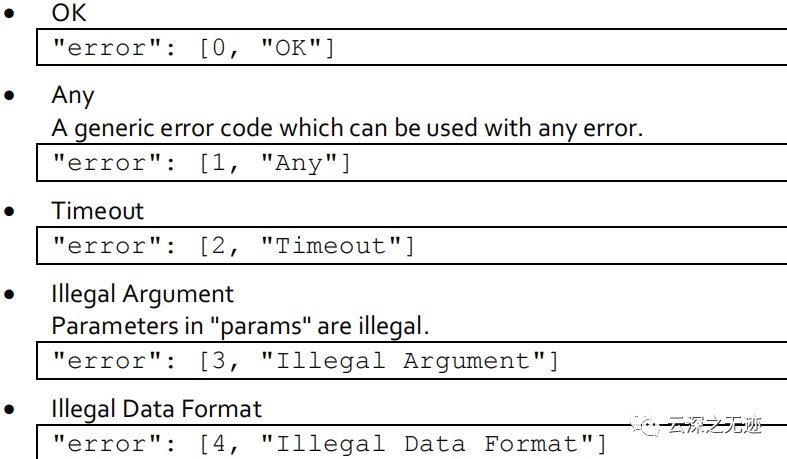

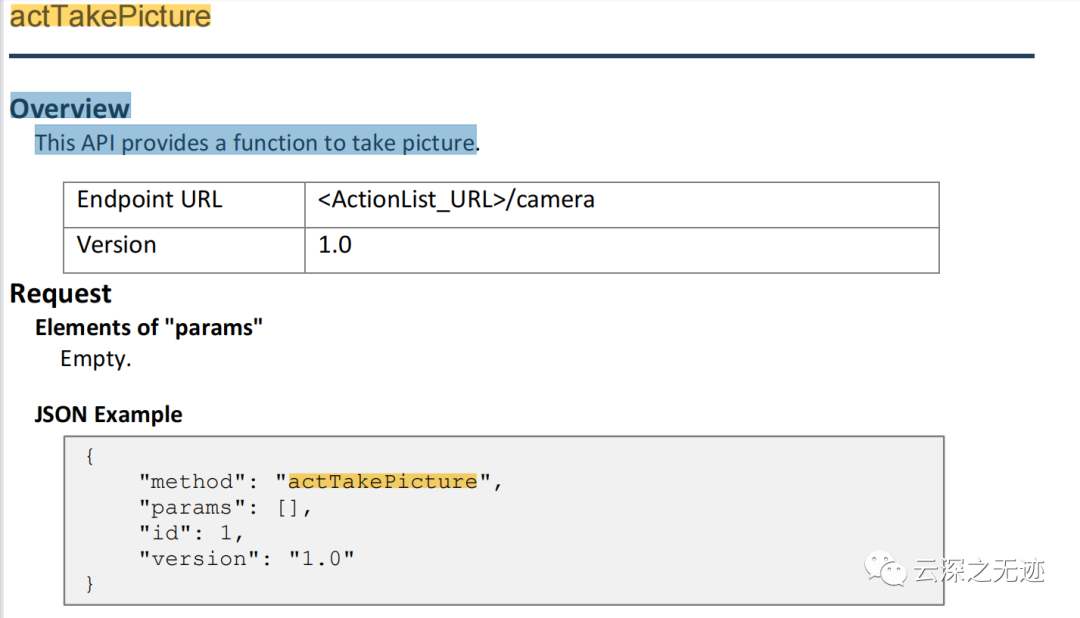

This function packs the request in the same shape as the JSON.

Exactly alike, isn't it?

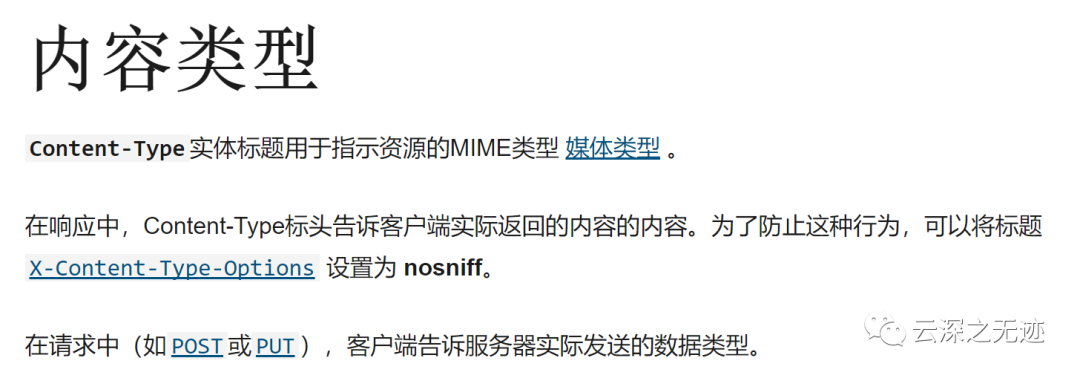

https://developer.mozilla.org/zh-CN/docs/Web/HTTP/Headers/Content-Type

This is where a bit of front-end knowledge comes in.

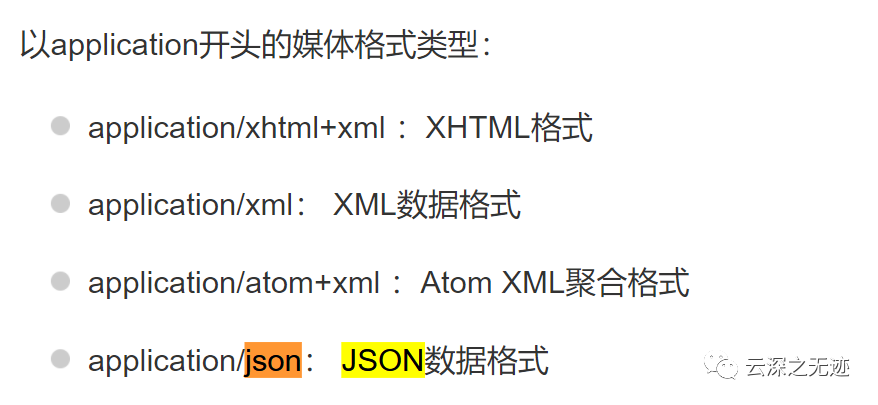

headers = {'Content-Type': 'application/json'}, so it's JSON.

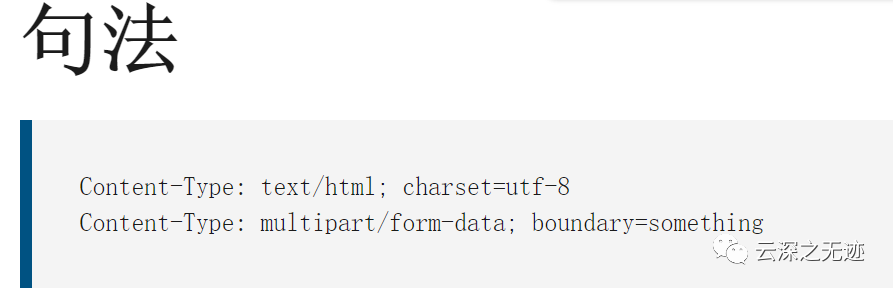

Look at the syntax. There's nothing wrong with it.

This confirms it even more.

This is how we send data out:

https://docs.python-requests.org/zh_CN/latest/user/quickstart.html

The requests documentation is here.
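Putting the pieces together, a request is just a URL, a JSON body and the `Content-Type` header. The sketch below only assembles those three things so they can be inspected without a camera attached. `build_request` is a hypothetical helper, and the endpoint is the address hard-coded in the code later in this post.

```python
import json

CAMERA_ENDPOINT = "http://10.0.0.1:10000/sony/camera"


def build_request(method, params, request_id=1):
    """Return keyword arguments suitable for requests.post(**kwargs)."""
    payload = {
        "method": method,
        "params": params,
        "id": request_id,
        "version": "1.0",
    }
    return {
        "url": CAMERA_ENDPOINT,
        "data": json.dumps(payload),  # the body is sent as a JSON string
        "headers": {"Content-Type": "application/json"},
    }


kwargs = build_request("actTakePicture", [])
print(kwargs["data"])
```

With a camera connected, `requests.post(**kwargs)` would send it.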

The command to take a picture.

The get method fetches data.

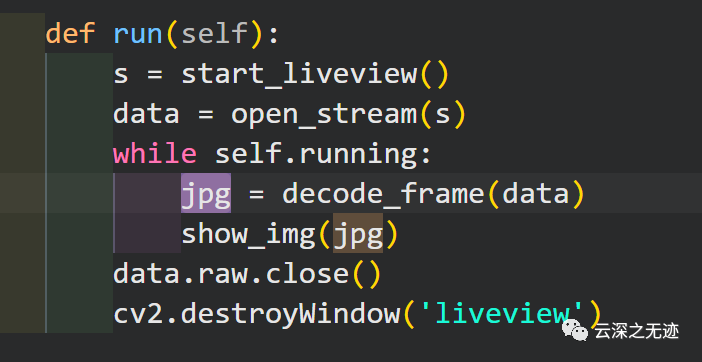

- Start the video stream. This method returns the stream URL as a string (strurl)

- Then open the video stream

- What follows is the decoding work

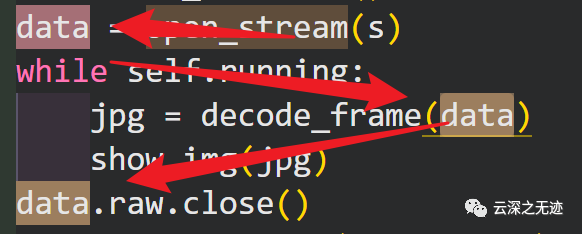

The data fetched from the camera is handed to the decoding method.

The decoding method then passes the video data on to the following method.

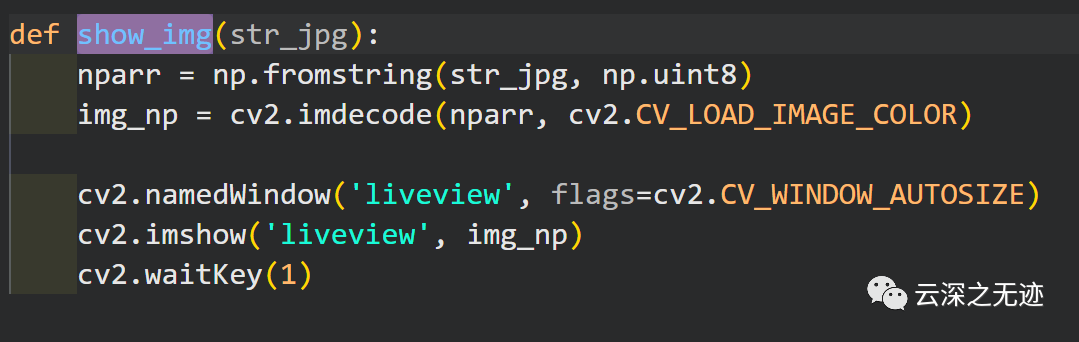

For display, Numpy converts the data into an array and passes it to cv2, which shows it with imshow.

Laid out like this, it is much clearer.

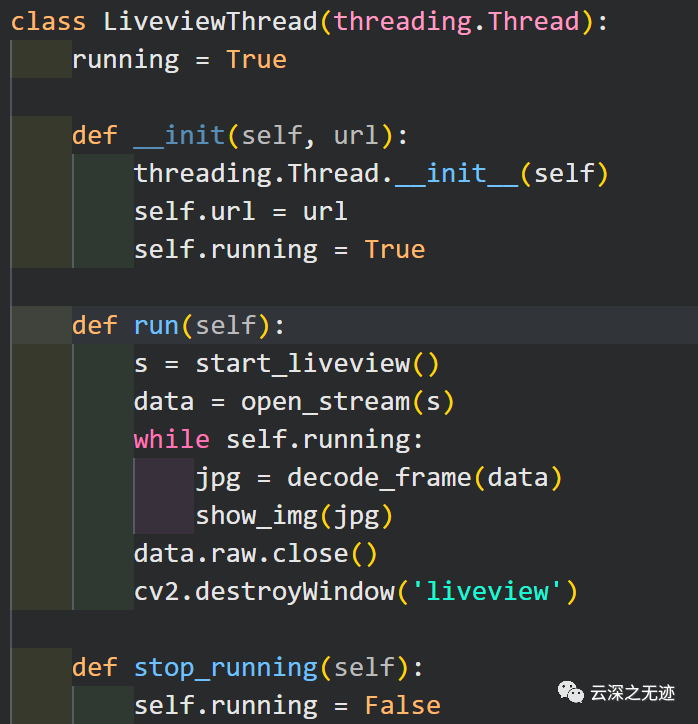

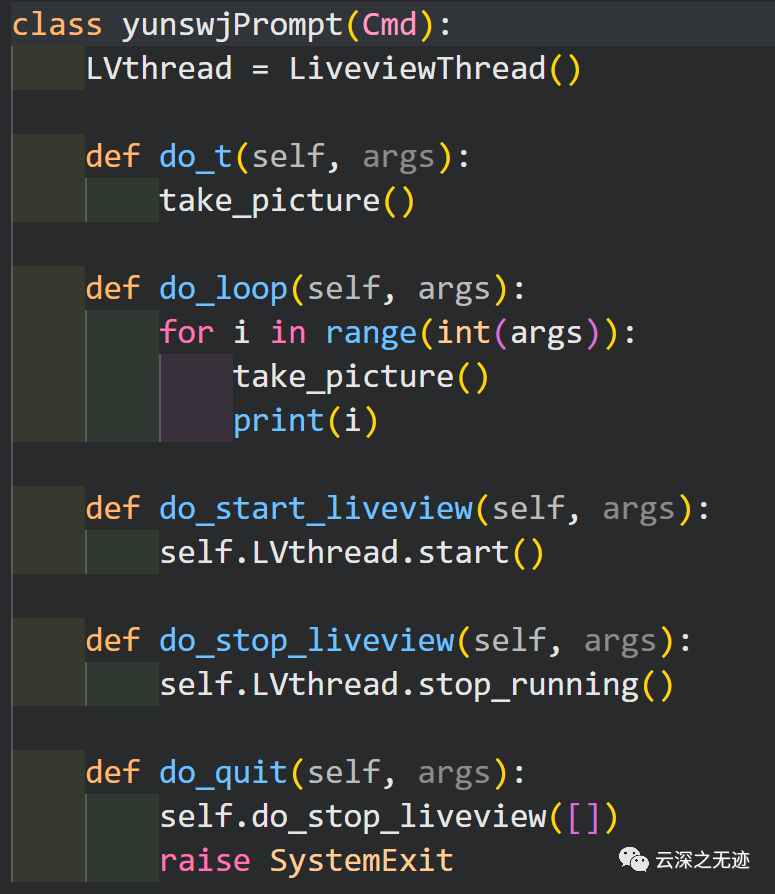

This class encapsulates the camera connection and the data decoding. I then wrote something to manage the state of the data flow, such as taking a picture and stopping the stream.
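For the state management part, a common alternative to a plain boolean flag is `threading.Event`, which also lets the worker sleep until it is told to stop. The sketch below shows that variant with a stand-in `work` callable instead of the real decode-and-display step; `StoppableWorker` is a hypothetical name and this is not the approach used in the code in this post.

```python
import threading
import time


class StoppableWorker(threading.Thread):
    """Calls work() repeatedly until stop() is requested."""

    def __init__(self, work, interval=0.01):
        super().__init__(daemon=True)
        self._work = work
        self._interval = interval
        self._stop_requested = threading.Event()

    def run(self):
        # wait() returns True as soon as the event is set, which ends the loop.
        while not self._stop_requested.wait(self._interval):
            self._work()

    def stop(self):
        """Ask the loop to finish and wait for the thread to exit."""
        self._stop_requested.set()
        self.join()


ticks = []
worker = StoppableWorker(lambda: ticks.append(1))
worker.start()
time.sleep(0.2)  # let the worker run a few iterations
worker.stop()
print("worker alive:", worker.is_alive())
```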

Next, create a new object, which in effect calls the class above.

    """QX10 interfacing code for Python"""

    import json
    import requests
    import numpy as np
    import cv2
    import threading
    from cmd import Cmd

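    class LiveviewThread(threading.Thread):
        """Background thread that fetches, decodes and displays liveview frames."""

        def __init__(self, url=None):
            threading.Thread.__init__(self)
            self.url = url  # optional; if missing, run() asks the camera for it
            self.running = True

        def run(self):
            s = self.url or start_liveview()
            data = open_stream(s)
            while self.running:
                jpg = decode_frame(data)
                if jpg is not None:  # decode_frame returns None on a bad packet
                    show_img(jpg)
            data.raw.close()
            cv2.destroyWindow('liveview')

        def stop_running(self):
            self.running = False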

    class yunswjPrompt(Cmd):
        """Interactive console: every do_<name> method becomes a command."""

        LVthread = LiveviewThread()

        def do_t(self, args):
            take_picture()

        def do_loop(self, args):
            for i in range(int(args)):
                take_picture()
                print(i)

        def do_start_liveview(self, args):
            self.LVthread.start()

        def do_stop_liveview(self, args):
            self.LVthread.stop_running()

        def do_quit(self, args):
            self.do_stop_liveview([])
            raise SystemExit

    def get_payload(method, params):
        """Build the JSON request body for a camera API call."""
        return {
            "method": method,
            "params": params,
            "id": 1,
            "version": "1.0"
        }

    def take_picture():
        """Trigger the shutter and return the URL of the resulting picture."""
        payload = get_payload("actTakePicture", [])
        headers = {'Content-Type': 'application/json'}
        response = requests.post(
            'http://10.0.0.1:10000/sony/camera', data=json.dumps(payload), headers=headers)
        url = response.json()['result']
        strurl = str(url[0][0])
        return strurl

    def get_event():
        payload = get_payload("getEvent", [False])
        headers = {'Content-Type': 'application/json'}
        response = requests.post(
            'http://10.0.0.1:10000/sony/camera', data=json.dumps(payload), headers=headers)
        return response

    def get_picture(url, filename):
        """Download the picture at url and write it to filename."""
        response = requests.get(url)
        chunk_size = 1024
        with open(filename, 'wb') as fd:
            for chunk in response.iter_content(chunk_size):
                fd.write(chunk)

    # LIVEVIEW STUFF

    def start_liveview():
        """Ask the camera to start liveview and return the stream URL."""
        payload = get_payload("startLiveview", [])
        headers = {'Content-Type': 'application/json'}
        response = requests.post(
            'http://10.0.0.1:10000/sony/camera', data=json.dumps(payload), headers=headers)
        url = response.json()['result']
        strurl = str(url[0])
        return strurl

    def open_stream(url):
        """Open the liveview URL as a streaming HTTP GET."""
        return requests.get(url, stream=True)

    def decode_frame(data):
        """Read one packet from the stream and return its JPEG bytes (None on error)."""
        # Decode the 8-byte common header
        start = ord(data.raw.read(1))
        if start != 0xFF:
            print('bad start byte\nexpected 0xFF got %x' % start)
            return
        pkt_type = ord(data.raw.read(1))
        if pkt_type != 0x01:
            print('not a liveview packet')
            return
        # Multi-byte fields are in network (big-endian) byte order
        frameno = int.from_bytes(data.raw.read(2), 'big')
        timestamp = int.from_bytes(data.raw.read(4), 'big')

        # Decode the 128-byte payload header
        start = int.from_bytes(data.raw.read(4), 'big')
        if start != 0x24356879:
            print('expected 0x24356879 got %x' % start)
            return
        jpg_size = int.from_bytes(data.raw.read(3), 'big')
        pad_size = ord(data.raw.read(1))

        # Skip the 4 reserved bytes, then check the flag byte
        data.raw.read(4)
        fixed_byte = ord(data.raw.read(1))
        if fixed_byte != 0x00:
            print('expected 0x00 got %x' % fixed_byte)
            return
        # Skip the remaining 115 reserved bytes
        data.raw.read(115)

        # Read the JPEG, then discard the padding
        jpg_data = data.raw.read(jpg_size)
        data.raw.read(pad_size)
        return jpg_data

    def show_img(str_jpg):
        """Decode JPEG bytes and show them in the 'liveview' window."""
        nparr = np.frombuffer(str_jpg, np.uint8)
        img_np = cv2.imdecode(nparr, cv2.IMREAD_COLOR)
        cv2.namedWindow('liveview', flags=cv2.WINDOW_AUTOSIZE)
        cv2.imshow('liveview', img_np)
        cv2.waitKey(1)

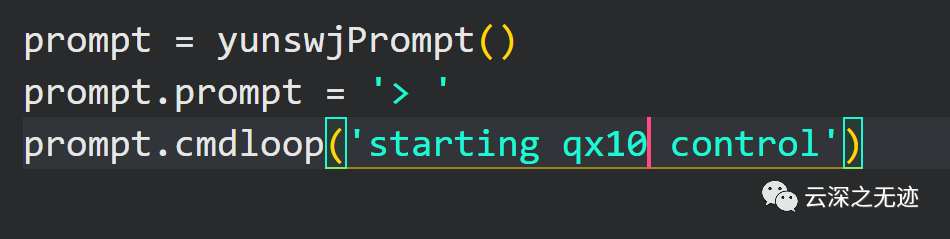

    prompt = yunswjPrompt()
    prompt.prompt = '> '
    prompt.cmdloop('starting qx10 control')

The code is attached.
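For readers who have not used `cmd` before: every method named `do_<word>` becomes a console command, and `onecmd()` runs a single line without entering the interactive loop. Below is a tiny stand-alone sketch in which `DemoPrompt` and its commands are made up and no camera is contacted.

```python
from cmd import Cmd


class DemoPrompt(Cmd):
    """Toy console with the same shape as the camera prompt."""

    prompt = "> "

    def __init__(self):
        super().__init__()
        self.shots = 0

    def do_t(self, args):
        """t: pretend to take one picture."""
        self.shots += 1

    def do_loop(self, args):
        """loop N: pretend to take N pictures."""
        for _ in range(int(args)):
            self.do_t("")

    def do_quit(self, args):
        """quit: leave the console."""
        return True  # a true return value ends cmdloop()


demo = DemoPrompt()
demo.onecmd("t")
demo.onecmd("loop 3")
print(demo.shots)
```

`demo.cmdloop()` would start the interactive prompt. Returning `True` from `do_quit` is an alternative to raising `SystemExit` when the program should continue after the console closes.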

VSCode and PyCharm both always feel lacking in some way.

Something is wrong with the gateway here. I haven't figured it out yet...