
1. Member variables

//Maximum capacity of the hash table array

private static final int MAXIMUM_CAPACITY = 1 << 30;

//Default capacity of the hash table

private static final int DEFAULT_CAPACITY = 16;

static final int MAX_ARRAY_SIZE = Integer.MAX_VALUE - 8;

//Default concurrency level: a historical leftover from the JDK 7 design, kept for compatibility. The concurrencyLevel constructor argument is only used to size the initial table and does not really represent a concurrency level

private static final int DEFAULT_CONCURRENCY_LEVEL = 16;

//Load factor: fixed at 0.75 in the JDK 1.8 ConcurrentHashMap; a loadFactor passed to a constructor only affects the initial table size

private static final float LOAD_FACTOR = 0.75f;

//Treeify threshold: when the linked list in a bucket reaches 8 nodes, the bucket may be converted into a red-black tree

static final int TREEIFY_THRESHOLD = 8;

//Untreeify threshold: a red-black tree bin with this many nodes or fewer is converted back into a linked list

static final int UNTREEIFY_THRESHOLD = 6;

//Works together with TREEIFY_THRESHOLD to decide whether a bucket is treeified: a bucket is only converted into a red-black tree when the table length is at least 64 and its linked list reaches 8 nodes (otherwise the table is resized instead)

static final int MIN_TREEIFY_CAPACITY = 64;

//Minimum stride: the minimum number of buckets a thread claims as one migration task during a resize

private static final int MIN_TRANSFER_STRIDE = 16;

//Number of bits used for the resize stamp stored in sizeCtl

private static int RESIZE_STAMP_BITS = 16;

//The result is 65535, indicating the maximum number of threads for concurrent expansion

private static final int MAX_RESIZERS = (1 << (32 - RESIZE_STAMP_BITS)) - 1;

//Shift used to place the resize stamp in the high 16 bits of sizeCtl

private static final int RESIZE_STAMP_SHIFT = 32 - RESIZE_STAMP_BITS;

//hash == -1 marks a ForwardingNode (fwd node): the bucket has already been migrated to the new table

static final int MOVED = -1; // hash for forwarding nodes

//hash == -2 marks a TreeBin: the bucket has been treeified, and the TreeBin node acts as a proxy for operations on the red-black tree

static final int TREEBIN = -2; // hash for roots of trees

static final int RESERVED = -3; // hash for transient reservations

//0x7fffffff is 31 ones in binary. ANDing a hash with it clears the sign bit, so normal node hashes are always non-negative; negative hashes are reserved for special nodes (MOVED, TREEBIN, RESERVED)

static final int HASH_BITS = 0x7fffffff; // usable bits of normal node hash

//Number of CPUs in the current system

static final int NCPU = Runtime.getRuntime().availableProcessors();

//For serialization compatibility with the JDK 7 (Segment-based) ConcurrentHashMap; not used by the core code

private static final ObjectStreamField[] serialPersistentFields = {

new ObjectStreamField("segments", Segment[].class),

new ObjectStreamField("segmentMask", Integer.TYPE),

new ObjectStreamField("segmentShift", Integer.TYPE)

};

//The hash table; its length is always a power of 2

transient volatile Node<K,V>[] table;

//During a resize, the new table being filled is referenced by nextTable; it is set back to null once the resize completes

private transient volatile Node<K,V>[] nextTable;

//Base counter (like LongAdder's base): increments are added here when there is no contention, or as a fallback while counterCells is locked

private transient volatile long baseCount;

/**

* sizeCtl < 0

* 1. -1: the table is being initialized (a thread is creating the table array), and other threads must wait

* 2. Any other negative value: the table array is being resized. The high 16 bits hold the resize stamp, and the low 16 bits hold (1 + nThreads), where nThreads is the number of threads currently taking part in the resize

*

* sizeCtl = 0: DEFAULT_CAPACITY is used as the size when the table array is created

*

* sizeCtl > 0

*

* 1. If the table is not initialized, it indicates the initialization size

* 2. If the table has been initialized, it indicates the trigger condition (threshold) for the next capacity expansion

*/

private transient volatile int sizeCtl;

/**

*

* Records the progress of a resize: each thread claims its next range of buckets to migrate from transferIndex.

*/

private transient volatile int transferIndex;

/**

* Like LongAdder's cellsBusy: 0 means counterCells is unlocked, 1 means it is locked

*/

private transient volatile int cellsBusy;

/**

* Like the cells array in LongAdder. It is created once there is contention on baseCount;

* each thread then selects its own cell from its hash (probe) value and adds its increment to that cell

* Total = sum(cells) + baseCount

*/

private transient volatile CounterCell[] counterCells;

// Unsafe mechanics

private static final sun.misc.Unsafe U;

/**Indicates the memory offset address of sizeCtl attribute in ConcurrentHashMap*/

private static final long SIZECTL;

/**Indicates the memory offset address of the transferIndex attribute in the ConcurrentHashMap*/

private static final long TRANSFERINDEX;

/**Indicates the memory offset address of baseCount attribute in ConcurrentHashMap*/

private static final long BASECOUNT;

/**Indicates the memory offset address of cellsBusy attribute in ConcurrentHashMap*/

private static final long CELLSBUSY;

/**Memory offset of the value field in CounterCell*/

private static final long CELLVALUE;

/**Represents the offset address of the first element of the array*/

private static final long ABASE;

private static final int ASHIFT;

static {

try {

U = sun.misc.Unsafe.getUnsafe();

Class<?> k = ConcurrentHashMap.class;

SIZECTL = U.objectFieldOffset

(k.getDeclaredField("sizeCtl"));

TRANSFERINDEX = U.objectFieldOffset

(k.getDeclaredField("transferIndex"));

BASECOUNT = U.objectFieldOffset

(k.getDeclaredField("baseCount"));

CELLSBUSY = U.objectFieldOffset

(k.getDeclaredField("cellsBusy"));

Class<?> ck = CounterCell.class;

CELLVALUE = U.objectFieldOffset

(ck.getDeclaredField("value"));

Class<?> ak = Node[].class;

ABASE = U.arrayBaseOffset(ak);

//scale is the number of bytes each element (a reference) occupies in a Node[] array

int scale = U.arrayIndexScale(ak);

//A power of two has a single one bit, so scale & (scale - 1) == 0, e.g. 1 0000 & 0 1111 = 0

if ((scale & (scale - 1)) != 0)

throw new Error("data type scale not a power of two");

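//Integer.numberOfLeadingZeros(x) counts the consecutive zero bits from the top of x's 32-bit binary form down to its highest one bit.

//8 => 1000, numberOfLeadingZeros(8) = 28

//4 => 100, numberOfLeadingZeros(4) = 29

//So ASHIFT = 31 - numberOfLeadingZeros(scale) = log2(scale); with scale = 4, ASHIFT = 31 - 29 = 2

//The offset of element i is then ABASE + ((long) i << ASHIFT), e.g. ABASE + (5 << 2) for index 5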

ASHIFT = 31 - Integer.numberOfLeadingZeros(scale);

} catch (Exception e) {

throw new Error(e);

}

}
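The ABASE/ASHIFT arithmetic can be checked in plain Java. This is a minimal sketch that reproduces it without Unsafe. The class name is hypothetical, and the ABASE value of 16 is an assumed example: the real ABASE and scale come from U.arrayBaseOffset/arrayIndexScale and depend on the JVM (scale is typically 4 with compressed oops and 8 without).

```java
// Hypothetical demo class: mirrors the ABASE/ASHIFT arithmetic without using Unsafe.
final class ArrayOffsetSketch {
    // Same formula as the static initializer: ASHIFT = log2(scale), valid only for powers of two
    static int ashift(int scale) {
        if ((scale & (scale - 1)) != 0)
            throw new IllegalArgumentException("data type scale not a power of two");
        return 31 - Integer.numberOfLeadingZeros(scale);
    }

    // Byte offset of element i: ABASE + (i << ASHIFT), which equals ABASE + i * scale
    static long offset(long abase, int scale, int i) {
        return ((long) i << ashift(scale)) + abase;
    }

    public static void main(String[] args) {
        // abase = 16 is an assumed example value; the real ABASE depends on the JVM
        System.out.println(ashift(4));          // 2
        System.out.println(ashift(8));          // 3
        System.out.println(offset(16, 4, 5));   // 36 = 16 + 5 * 4
    }
}
```

Shifting instead of multiplying gives the same offset; the check against a non-power-of-two scale is what makes the shift valid.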

2. Basic methods

2.1 spread

Computes the hash value.

The high 16 bits are XORed into the low 16 bits, and the sign bit is then cleared so the result is non-negative. Because the bucket index is computed as (n - 1) & hash, which only uses the low bits, spread lets the high bits take part in addressing as well.

static final int spread(int h) {

return (h ^ (h >>> 16)) & HASH_BITS;

}
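The effect of spread is easiest to see with two hash codes that differ only in their high 16 bits. A minimal sketch (class name hypothetical) that copies spread and the (n - 1) & hash index computation for a table of length 16:

```java
// Hypothetical demo class: copies spread() and the bucket index computation.
final class SpreadSketch {
    static final int HASH_BITS = 0x7fffffff; // same constant as in ConcurrentHashMap

    static int spread(int h) {
        return (h ^ (h >>> 16)) & HASH_BITS;
    }

    // Bucket index for a table of length n (n must be a power of two)
    static int index(int hash, int n) {
        return (n - 1) & hash;
    }

    public static void main(String[] args) {
        int n = 16;
        int h1 = 0x00010000, h2 = 0x00020000;   // differ only in the high 16 bits
        System.out.println(index(h1, n) + " " + index(h2, n));                 // 0 0 (collide)
        System.out.println(index(spread(h1), n) + " " + index(spread(h2), n)); // 1 2 (spread apart)
        System.out.println(spread(-1) >= 0);                                   // true (sign bit cleared)
    }
}
```

Without spread both keys land in bucket 0 because the mask only looks at the low 4 bits; after spread their high bits influence the index.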

2.2 tabAt

Gets the head node of bucket i.

This method reads the object stored at the given offset inside tab. It is equivalent to tab[i], so why not just use tab[i]?

The answer is getObjectVolatile: volatile means visibility. Because a volatile write happens-before a subsequent volatile read of the same location, every modification other threads make to the table elements is visible to this read.

Although the table field itself is declared volatile, for an array "volatile applies only to the reference to the array, not to its elements". So if another thread writes an element of the array, the current thread may not see the latest value when it reads that element with a plain tab[i]. For performance reasons, Doug Lea operates on the table elements directly through the Unsafe class.

static final <K,V> Node<K,V> tabAt(Node<K,V>[] tab, int i) {

return (Node<K,V>)U.getObjectVolatile(tab, ((long)i << ASHIFT) + ABASE);

}

2.3 casTabAt

Uses CAS to set the head node of a bucket.

Replaces the node in bucket i using CAS: c is the expected value and v is the new value.

Returns true if the update succeeds and false otherwise.

static final <K,V> boolean casTabAt(Node<K,V>[] tab, int i,

Node<K,V> c, Node<K,V> v) {

return U.compareAndSwapObject(tab, ((long)i << ASHIFT) + ABASE, c, v);

}
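The contract of casTabAt is what makes lock-free insertion into an empty bucket safe: when several threads race to fill the same empty bucket, exactly one CAS from null succeeds and the others must retry. A minimal demonstration, using java.util.concurrent.atomic.AtomicReferenceArray.compareAndSet as a stand-in for casTabAt and plain strings as hypothetical nodes:

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicReferenceArray;

// Hypothetical demo: AtomicReferenceArray stands in for the table plus casTabAt.
final class EmptyBinCasSketch {
    // Returns how many of the racing threads managed to install their node in bucket 0
    static int race(int threads) {
        AtomicReferenceArray<String> tab = new AtomicReferenceArray<>(16);
        AtomicInteger winners = new AtomicInteger();
        Thread[] ts = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            final String node = "node-" + t;
            ts[t] = new Thread(() -> {
                // Like casTabAt(tab, 0, null, node): succeeds only if bucket 0 is still empty
                if (tab.compareAndSet(0, null, node))
                    winners.incrementAndGet();
            });
            ts[t].start();
        }
        for (Thread th : ts) {
            try {
                th.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return winners.get();
    }

    public static void main(String[] args) {
        System.out.println(race(8)); // 1: exactly one CAS from null can succeed
    }
}
```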

2.4 setTabAt

Sets the value at bucket i to v (a volatile write).

static final <K,V> void setTabAt(Node<K,V>[] tab, int i, Node<K,V> v) {

U.putObjectVolatile(tab, ((long)i << ASHIFT) + ABASE, v);

}
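Outside the JDK, sun.misc.Unsafe is not a supported API. As a hedged alternative, the three helpers above can be re-expressed with the public VarHandle API (Java 9+), which offers the same per-element volatile read, CAS and volatile write. This is a sketch, not what the JDK 8 source does; the class name and the simplified Node type are hypothetical.

```java
import java.lang.invoke.MethodHandles;
import java.lang.invoke.VarHandle;

// Hypothetical sketch: tabAt/casTabAt/setTabAt re-expressed with VarHandle (Java 9+).
final class TableAccessSketch {
    // Simplified stand-in for ConcurrentHashMap.Node
    static final class Node {
        final int hash;
        final String key;
        volatile String val;
        Node(int hash, String key, String val) { this.hash = hash; this.key = key; this.val = val; }
    }

    // Gives volatile and CAS access to individual elements of any Node[]
    private static final VarHandle AA = MethodHandles.arrayElementVarHandle(Node[].class);

    static Node tabAt(Node[] tab, int i) {
        return (Node) AA.getVolatile(tab, i);      // volatile read of tab[i]
    }

    static boolean casTabAt(Node[] tab, int i, Node c, Node v) {
        return AA.compareAndSet(tab, i, c, v);     // set tab[i] = v only if it is still c
    }

    static void setTabAt(Node[] tab, int i, Node v) {
        AA.setVolatile(tab, i, v);                 // volatile write of tab[i]
    }

    public static void main(String[] args) {
        Node[] tab = new Node[16];
        Node a = new Node(1, "a", "1");
        System.out.println(casTabAt(tab, 1, null, a)); // true: bucket was empty
        System.out.println(casTabAt(tab, 1, null, a)); // false: bucket is no longer null
        setTabAt(tab, 1, null);
        System.out.println(tabAt(tab, 1) == null);     // true
    }
}
```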

2.5 resizeStamp

Gets the resize stamp.

The resize stamp identifies a resize of table from n to 2n. A thread that wants to help resize the table must first compute the stamp, and it can join the resize only if its stamp matches the one currently stored in sizeCtl.

static final int resizeStamp(int n) {

return Integer.numberOfLeadingZeros(n) | (1 << (RESIZE_STAMP_BITS - 1));

}

Integer.numberOfLeadingZeros(n) returns the number of zero bits that precede the highest one bit in the 32-bit binary representation of n.

For example, 10 in binary is 0000 0000 0000 0000 0000 0000 0000 1010, so the method returns 28.

Now let's derive what resizeStamp computes. If n = 16, numberOfLeadingZeros(16) = 27 (binary 1 1011), and OR-ing in 1 << 15 gives resizeStamp(16) = 32795, which is 1000 0000 0001 1011 in binary.

When the first thread starts the resize, it executes:

U.compareAndSwapInt(this, SIZECTL, sc, (rs << RESIZE_STAMP_SHIFT) + 2)

Shifting rs left by 16 bits moves its binary digits from the low half into the high half: 1000 0000 0001 1011 0000 0000 0000 0000

Adding 2 (binary 10) gives 1000 0000 0001 1011 0000 0000 0000 0010. Because the highest bit is now 1, sizeCtl becomes negative, which is how other threads recognize that a resize is in progress.

The high 16 bits hold the resize stamp, and the low 16 bits record the number of threads resizing in parallel.

What are the benefits of storing it this way?

1. CHM supports concurrent resizing: when the array needs to grow, multiple threads can share the work.

2. Each resize gets a unique stamp. The stamp is computed from n, so every resize generation (each with a different n) has a different stamp.

Why add 2 when the first thread starts the resize?

Because the low 16 bits store 1 + the number of resizing threads: 2 means one thread is resizing, while 1 means no thread is still migrating (and -1 on its own already means "initializing"). sizeCtl is interpreted bit by bit, so what matters is its binary layout rather than its numeric value: sc + 1 simply adds 1 to the low 16 bits when another thread joins, and each thread that finishes subtracts 1.

2.6 tableSizeFor

Returns the smallest power of two that is greater than or equal to c

/**

* Returns a power of two table size for the given desired capacity.

* See Hackers Delight, sec 3.2

* Returns the smallest power of 2 that is >= c

* c=28

* n=27 => 0b 11011

* 11011 | 01101 => 11111

* 11111 | 00111 => 11111

* ....

* => 11111 + 1 =100000 = 32

*/

private static final int tableSizeFor(int c) {

int n = c - 1;

n |= n >>> 1;

n |= n >>> 2;

n |= n >>> 4;

n |= n >>> 8;

n |= n >>> 16;

return (n < 0) ? 1 : (n >= MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : n + 1;

}
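The bit-smearing trick is worth running on a few inputs, including the edge cases. A minimal sketch (class name hypothetical) with tableSizeFor copied verbatim:

```java
// Hypothetical demo class: tableSizeFor copied from ConcurrentHashMap.
final class TableSizeForSketch {
    static final int MAXIMUM_CAPACITY = 1 << 30;

    // Smear the highest one bit of c - 1 into every lower bit, then add 1
    static int tableSizeFor(int c) {
        int n = c - 1;
        n |= n >>> 1;
        n |= n >>> 2;
        n |= n >>> 4;
        n |= n >>> 8;
        n |= n >>> 16;
        return (n < 0) ? 1 : (n >= MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : n + 1;
    }

    public static void main(String[] args) {
        System.out.println(tableSizeFor(28));                // 32
        System.out.println(tableSizeFor(32));                // 32: the "- 1" keeps exact powers of two
        System.out.println(tableSizeFor(33));                // 64
        System.out.println(tableSizeFor(0));                 // 1: n = -1 is negative
        System.out.println(tableSizeFor(Integer.MAX_VALUE)); // 1073741824, clamped to MAXIMUM_CAPACITY
    }
}
```

Subtracting 1 first is what keeps an exact power of two unchanged; without it, tableSizeFor(32) would return 64.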

3. Constructors

public ConcurrentHashMap() {

}

public ConcurrentHashMap(int initialCapacity) {

if (initialCapacity < 0)

throw new IllegalArgumentException();

//If initialCapacity is at least half of MAXIMUM_CAPACITY, use MAXIMUM_CAPACITY; otherwise round initialCapacity * 1.5 + 1 up to a power of 2

int cap = ((initialCapacity >= (MAXIMUM_CAPACITY >>> 1)) ?

MAXIMUM_CAPACITY :

tableSizeFor(initialCapacity + (initialCapacity >>> 1) + 1));

/**

* sizeCtl > 0

* While the table has not been initialized yet, sizeCtl holds the initial capacity

*/

this.sizeCtl = cap;

}

public ConcurrentHashMap(Map<? extends K, ? extends V> m) {

this.sizeCtl = DEFAULT_CAPACITY;

putAll(m);

}

public ConcurrentHashMap(int initialCapacity, float loadFactor) {

this(initialCapacity, loadFactor, 1);

}

public ConcurrentHashMap(int initialCapacity,

float loadFactor, int concurrencyLevel) {

//Parameter validation

if (!(loadFactor > 0.0f) || initialCapacity < 0 || concurrencyLevel <= 0)

throw new IllegalArgumentException();

//If the initial capacity is less than the concurrency level, set the initial capacity to the concurrency level

if (initialCapacity < concurrencyLevel)

initialCapacity = concurrencyLevel;

//16/0.75 +1 = 22

long size = (long)(1.0 + (long)initialCapacity / loadFactor);

// 22 - > 32

int cap = (size >= (long)MAXIMUM_CAPACITY) ?

MAXIMUM_CAPACITY : tableSizeFor((int)size);

/**

* sizeCtl > 0

* While the table has not been initialized yet, sizeCtl holds the initial capacity

*/

this.sizeCtl = cap;

}
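The two sizing paths do not compute the same formula, yet for an initial capacity of 16 both end up at 32. A minimal sketch (class and method names hypothetical) that mirrors the arithmetic of the one-argument and three-argument constructors as listed above:

```java
// Hypothetical demo class: reproduces the sizeCtl arithmetic of the constructors above.
final class InitialCapacitySketch {
    static final int MAXIMUM_CAPACITY = 1 << 30;

    static int tableSizeFor(int c) {
        int n = c - 1;
        n |= n >>> 1; n |= n >>> 2; n |= n >>> 4; n |= n >>> 8; n |= n >>> 16;
        return (n < 0) ? 1 : (n >= MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : n + 1;
    }

    // Mirrors ConcurrentHashMap(int initialCapacity)
    static int oneArgSizeCtl(int initialCapacity) {
        if (initialCapacity < 0) throw new IllegalArgumentException();
        return (initialCapacity >= (MAXIMUM_CAPACITY >>> 1)) ? MAXIMUM_CAPACITY
                : tableSizeFor(initialCapacity + (initialCapacity >>> 1) + 1);
    }

    // Mirrors ConcurrentHashMap(int initialCapacity, float loadFactor, int concurrencyLevel)
    static int threeArgSizeCtl(int initialCapacity, float loadFactor, int concurrencyLevel) {
        if (!(loadFactor > 0.0f) || initialCapacity < 0 || concurrencyLevel <= 0)
            throw new IllegalArgumentException();
        if (initialCapacity < concurrencyLevel)
            initialCapacity = concurrencyLevel;
        long size = (long) (1.0 + (long) initialCapacity / loadFactor);
        return (size >= (long) MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : tableSizeFor((int) size);
    }

    public static void main(String[] args) {
        System.out.println(oneArgSizeCtl(16));             // 32: 16 + 8 + 1 = 25, rounded up to 32
        System.out.println(threeArgSizeCtl(16, 0.75f, 1)); // 32: 16 / 0.75 + 1 = 22, rounded up to 32
        System.out.println(threeArgSizeCtl(4, 0.75f, 16)); // 32: initialCapacity is first raised to 16
    }
}
```

So in this code, new ConcurrentHashMap(16) records an initial capacity of 32, not 16.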

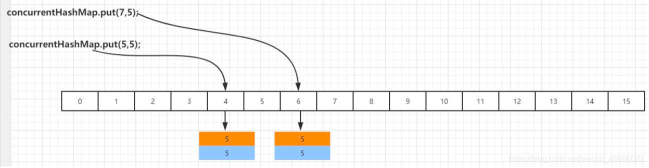

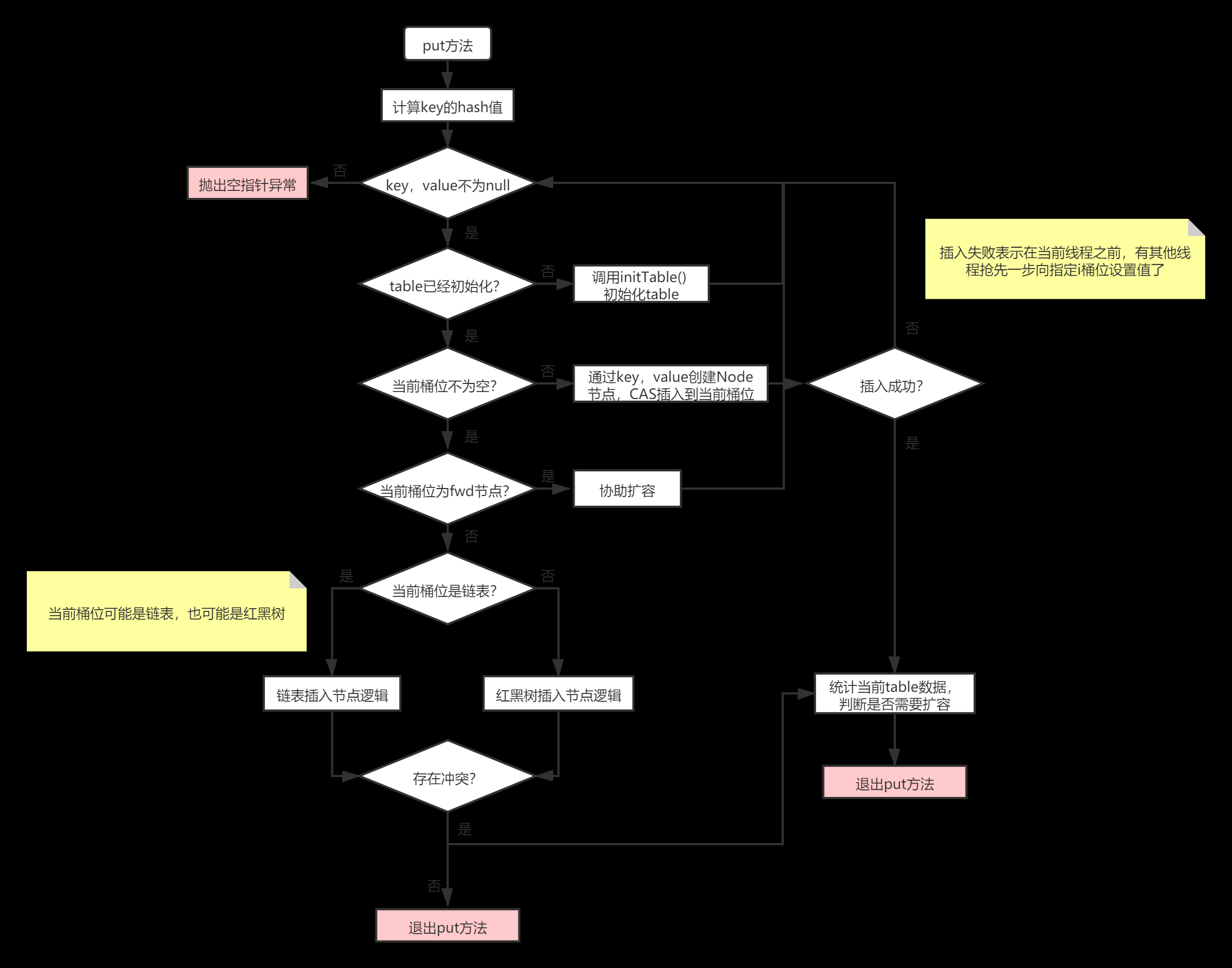

4. put

public V put(K key, V value) {

//onlyIfAbsent = false: if the key already exists, its value is overwritten

return putVal(key, value, false);

}

5. putVal

final V putVal(K key, V value, boolean onlyIfAbsent) {

//Neither the key nor the value may be null

if (key == null || value == null) throw new NullPointerException();

//Through the spread method, the high bit can also participate in the addressing operation.

int hash = spread(key.hashCode());

//binCount: after the k-v pair is wrapped in a Node and inserted into the bucket, records the length of the bucket's linked list (used to decide whether to treeify)

//0 means the bucket was empty and the node was placed directly via CAS

//2 means the bucket may already be a red-black tree

int binCount = 0;

//tab refers to the table of the map object

//spin

for (Node<K,V>[] tab = table;;) {

//f represents the head node of the bucket

//n represents the length of the hash table array

//i indicates the bucket subscript obtained after the key is calculated through addressing

//fh represents the hash value of the bucket head node

Node<K,V> f; int n, i, fh;

//CASE1: true, indicating that the table in the current map has not been initialized

if (tab == null || (n = tab.length) == 0)

//Finally, the current thread will get the latest map.table reference.

tab = initTable();

//CASE2: i is the index of the bucket the key maps to via the addressing algorithm (n - 1) & hash, and tabAt reads the head node f of that bucket

else if ((f = tabAt(tab, i = (n - 1) & hash)) == null) {

//Precondition for entering CASE2: bucket i of the table array is null.

//Use CAS to set bucket i to new Node<K,V>(hash, key, value, null), with null as the expected value

//If the CAS succeeds, the insertion is done, so just break out of the for loop

//If the CAS fails, another thread has already set bucket i before the current thread.

//The current thread then has to spin again and take one of the other branches.

if (casTabAt(tab, i, null,

new Node<K,V>(hash, key, value, null)))

break; // no lock when adding to empty bin

}

//CASE3: precondition: the bucket's head node is not null.

//If the condition is true, the head node of the current bucket is a FWD node, which means the map is being resized

else if ((fh = f.hash) == MOVED)

//After seeing the fwd node, the current thread is obliged to help the map finish the data migration

//Help with the resize

tab = helpTransfer(tab, f);

//CASE4: the current bucket may be a linked list or a red black tree proxy node TreeBin

else {

//If the inserted key already exists, its old value is saved in oldVal and returned to the caller of put

V oldVal = null;

//Lock the bucket's head node with synchronized (f is expected, but not yet guaranteed, to still be the head node)

synchronized (f) {

//Why check again whether the bucket's head node is still the node read earlier?

//Another thread may have replaced the head node before the current thread acquired the lock. In that case the lock is on the wrong object, and none of the following operations may be performed.

if (tabAt(tab, i) == f) {//If true, the locked object is still the head node, so it is safe to proceed

//If the condition is true, it indicates that the current bucket is an ordinary linked list bucket.

if (fh >= 0) {

//1. If the inserted key differs from the keys of all nodes in the list, the new node is appended to the tail of the list, and binCount ends up as the list length

//2. If the inserted key equals the key of a node in the list, the operation may be a replacement, and binCount - 1 is the position of the matching node

binCount = 1;

//Iterate over the bucket's linked list; e is the node processed in each iteration.

for (Node<K,V> e = f;; ++binCount) {

//Key of the current node

K ek;

//Condition 1: e.hash == hash is true, so the current node's hash equals the hash of the node being inserted; a further check is needed

//Condition 2: ((ek = e.key) == key || (ek != null && key.equals(ek)))

// true: the current node's key equals the inserted key, i.e. a conflict

if (e.hash == hash &&

((ek = e.key) == key ||

(ek != null && key.equals(ek)))) {

//Save the current node's value in oldVal

oldVal = e.val;

if (!onlyIfAbsent)

e.val = value;

break;

}

//If the current node's key differs from the inserted key:

//1. Advance e to the next node

//2. If the next node is null, the current node is the tail of the list, and the new node is appended after it.

Node<K,V> pred = e;

if ((e = e.next) == null) {

pred.next = new Node<K,V>(hash, key,

value, null);

break;

}

}

}

//Precondition: this bucket must not be a linked list

//If the condition is true, it indicates that the current bucket is the red black tree proxy node TreeBin

else if (f instanceof TreeBin) {

//p: if the red-black tree contains a node whose key equals the inserted key, putTreeVal returns a reference to that conflicting node (otherwise null)

Node<K,V> p;

//binCount is forced to 2, because values <= 1 have other meanings (binCount is later passed to addCount as check); 2 is also below TREEIFY_THRESHOLD, so no treeification is attempted

binCount = 2;

//Condition true: the key of the inserted node equals the key of a node in the red-black tree, i.e. a conflict

if ((p = ((TreeBin<K,V>)f).putTreeVal(hash, key,

value)) != null) {

//Assign the value of the conflict node to oldVal

oldVal = p.val;

if (!onlyIfAbsent)

p.val = value;

}

}

}

}

//binCount != 0: the bucket was not empty and was handled under the lock; it may be a red-black tree or a linked list

if (binCount != 0) {

//If binCount >= 8, the bucket just processed must be a linked list

if (binCount >= TREEIFY_THRESHOLD)

//Convert the linked list into a red-black tree (treeifyBin resizes instead if the table length is below MIN_TREEIFY_CAPACITY)

treeifyBin(tab, i);

//The key inserted by the current thread conflicted with an existing k-v pair, so the old value v is returned to the caller.

if (oldVal != null)

return oldVal;

break;

}

}

}

//1. Add 1 to the element count of the table

//2. Check whether the resize threshold has been reached and, if so, trigger a resize.

addCount(1L, binCount);

return null;

}
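The core locking pattern of putVal (CAS into an empty bucket, otherwise lock the head node, re-check that it is still the head, then walk the list) can be isolated in a much smaller map. The following is a hypothetical, simplified sketch, not the JDK implementation: capacity is fixed, and there is no resizing, treeification, counting or removal. AtomicReferenceArray stands in for tabAt/casTabAt.

```java
import java.util.concurrent.atomic.AtomicReferenceArray;

// Hypothetical, simplified sketch of the putVal locking pattern (fixed capacity, no resize).
final class MiniCasMap<K, V> {
    static final class Node<K, V> {
        final int hash;
        final K key;
        volatile V val;
        volatile Node<K, V> next;
        Node(int hash, K key, V val, Node<K, V> next) {
            this.hash = hash; this.key = key; this.val = val; this.next = next;
        }
    }

    private final AtomicReferenceArray<Node<K, V>> table;

    MiniCasMap(int capacityPowerOfTwo) {
        table = new AtomicReferenceArray<>(capacityPowerOfTwo);
    }

    static int spread(int h) {
        return (h ^ (h >>> 16)) & 0x7fffffff;
    }

    // Returns the previous value, or null if the key was absent
    V put(K key, V value) {
        if (key == null || value == null) throw new NullPointerException();
        int hash = spread(key.hashCode());
        int i = (table.length() - 1) & hash;
        for (;;) {
            Node<K, V> f = table.get(i);                      // like tabAt
            if (f == null) {
                // Empty bucket: insert without locking; if the CAS loses, spin and retry
                if (table.compareAndSet(i, null, new Node<>(hash, key, value, null)))
                    return null;
                continue;
            }
            synchronized (f) {                                 // lock the head node
                if (table.get(i) != f)
                    continue;                                  // head changed before we got the lock
                for (Node<K, V> e = f;; e = e.next) {
                    if (e.hash == hash && (e.key == key || key.equals(e.key))) {
                        V old = e.val;
                        e.val = value;                         // key exists: overwrite
                        return old;
                    }
                    if (e.next == null) {
                        e.next = new Node<>(hash, key, value, null); // append to the tail
                        return null;
                    }
                }
            }
        }
    }

    V get(K key) {
        int hash = spread(key.hashCode());
        for (Node<K, V> e = table.get((table.length() - 1) & hash); e != null; e = e.next)
            if (e.hash == hash && (e.key == key || key.equals(e.key)))
                return e.val;
        return null;
    }

    // Each thread inserts its own keys; returns how many keys are present afterwards
    static int fillConcurrently(int threads, int keysPerThread) {
        MiniCasMap<Integer, Integer> m = new MiniCasMap<>(64);
        Thread[] ts = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            final int base = t * keysPerThread;
            ts[t] = new Thread(() -> {
                for (int k = base; k < base + keysPerThread; k++) m.put(k, k);
            });
            ts[t].start();
        }
        for (Thread th : ts) {
            try { th.join(); } catch (InterruptedException e) { throw new IllegalStateException(e); }
        }
        int found = 0;
        for (int k = 0; k < threads * keysPerThread; k++)
            if (m.get(k) != null) found++;
        return found;
    }

    public static void main(String[] args) {
        MiniCasMap<String, Integer> m = new MiniCasMap<>(16);
        System.out.println(m.put("a", 1));   // null: new key
        System.out.println(m.put("a", 2));   // 1: old value returned
        System.out.println(m.get("a"));      // 2
    }
}
```

Because this sketch never replaces a head node, the re-check after locking always passes here; in the real map it matters because resizes, removals and treeification can swap the head out.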

6. initTable

Array initialization method. It is relatively simple: it creates an array of the appropriate size.

sizeCtl: a control flag used while the Node array is being initialized or resized. A negative value means initialization or a resize is in progress.

-1 means the table is being initialized

-N means N - 1 threads are resizing. This is the simplified description from the JDK javadoc and should not be taken literally as "n threads, so sizeCtl is -n": as shown in 2.5, during a resize the high 16 bits hold the resize stamp and the low 16 bits hold 1 + the number of resizing threads.

0 means the Node array has not been initialized (DEFAULT_CAPACITY will be used). A positive value is either the initial size or the threshold for the next resize.

/**

* Initializes table, using the size recorded in sizeCtl.

* sizeCtl < 0

* 1. -1: the table is being initialized (a thread is creating the table array), and other threads must wait

* 2. Any other negative value: the table array is being resized. The high 16 bits hold the resize stamp, and the low 16 bits hold (1 + nThreads), where nThreads is the number of threads currently taking part in the resize

*

* sizeCtl = 0: DEFAULT_CAPACITY is used as the size when the table array is created

*

* sizeCtl > 0

*

* 1. If the table has not been initialized, sizeCtl is the initial size

* 2. If the table has been initialized, sizeCtl is the threshold that triggers the next resize

*/

private final Node<K,V>[] initTable() {

//tab reference map.table

//Temporary value of sc sizeCtl

Node<K,V>[] tab; int sc;

//Spin condition: map.table has not been initialized

while ((tab = table) == null || tab.length == 0) {

if ((sc = sizeCtl) < 0)

//Most likely -1: another thread is creating the table, so the current thread does not compete to initialize it and just yields

Thread.yield(); // lost initialization race; just spin

//1. sizeCtl = 0: DEFAULT_CAPACITY is used as the size when creating the table array

//2. If the table is not initialized, sc is the initialization size

//3. If the table has been initialized, sc is the threshold that triggers the next resize. The thread tries to CAS sizeCtl to -1 to win the right to initialize the table

else if (U.compareAndSwapInt(this, SIZECTL, sc, -1)) {

try {

//Why check again? Another thread may already have finished initializing the table; initializing it a second time would lose data.

//If the condition is true, no other thread has initialized the table, and the current thread has the right to do so.

if ((tab = table) == null || tab.length == 0) {

//If sc > 0, use sc as the size of the new table; otherwise use the default of 16

int n = (sc > 0) ? sc : DEFAULT_CAPACITY;

@SuppressWarnings("unchecked")

Node<K,V>[] nt = (Node<K,V>[])new Node<?,?>[n];

//Finally assigned to map.table

table = tab = nt;

//n >>> 2 equals n / 4, so n - (n >>> 2) = 3n / 4 = 0.75 * n

//sc = 0.75 * n is the threshold that triggers the next resize.

sc = n - (n >>> 2);

}

} finally {

//1. If the current thread created map.table, sc is the threshold for the next resize

//2. Otherwise another thread created map.table first, but the current thread set sizeCtl to -1 when it entered the else-if block,

//so it must restore sizeCtl to the value it read on entry.

sizeCtl = sc;

}

break;

}

}

return tab;

}
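The initTable protocol (spin while sizeCtl is negative, CAS it to -1 to claim initialization, double-check, then publish the threshold in finally) can be reproduced with public APIs. This is a hypothetical sketch: AtomicInteger replaces the Unsafe CAS on sizeCtl, and a plain Object[] stands in for the Node table.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical sketch of the initTable protocol; AtomicInteger replaces Unsafe CAS on sizeCtl.
final class LazyTableInit {
    static final int DEFAULT_CAPACITY = 16;

    private volatile Object[] table;
    private final AtomicInteger sizeCtl;

    LazyTableInit(int initialSizeCtl) {                      // 0 means "use DEFAULT_CAPACITY"
        sizeCtl = new AtomicInteger(initialSizeCtl);
    }

    Object[] initTable() {
        Object[] tab;
        int sc;
        while ((tab = table) == null || tab.length == 0) {
            if ((sc = sizeCtl.get()) < 0)
                Thread.yield();                              // another thread is initializing
            else if (sizeCtl.compareAndSet(sc, -1)) {        // won the right to initialize
                try {
                    if ((tab = table) == null || tab.length == 0) { // double check
                        int n = (sc > 0) ? sc : DEFAULT_CAPACITY;
                        table = tab = new Object[n];
                        sc = n - (n >>> 2);                  // next resize threshold: 0.75 * n
                    }
                } finally {
                    sizeCtl.set(sc);                         // publish threshold, or restore old value
                }
                break;
            }
        }
        return tab;
    }

    int sizeCtl() {
        return sizeCtl.get();
    }

    // Several threads race to initialize; returns true if they all saw the same array
    static boolean allSeeSameTable(int threads) {
        LazyTableInit t = new LazyTableInit(0);
        Object[][] seen = new Object[threads][];
        Thread[] ts = new Thread[threads];
        for (int k = 0; k < threads; k++) {
            final int idx = k;
            ts[k] = new Thread(() -> seen[idx] = t.initTable());
            ts[k].start();
        }
        for (Thread th : ts) {
            try { th.join(); } catch (InterruptedException e) { throw new IllegalStateException(e); }
        }
        for (Object[] s : seen)
            if (s != seen[0]) return false;
        return true;
    }

    public static void main(String[] args) {
        LazyTableInit a = new LazyTableInit(32);
        System.out.println(a.initTable().length + " " + a.sizeCtl()); // 32 24
        LazyTableInit b = new LazyTableInit(0);
        System.out.println(b.initTable().length + " " + b.sizeCtl()); // 16 12
        System.out.println(allSeeSameTable(8));                       // true
    }
}
```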

7. addCount

Adds x to the element count of table (using a LongAdder-style counter), then checks whether the table needs a resize or whether the current thread should help with an ongoing resize.

private final void addCount(long x, int check) {

//as stands for LongAdder.cells

//b stands for LongAdder.base

//s indicates the number of elements in the current map.table

CounterCell[] as; long b, s;

//Condition 1: true -> counterCells has been initialized; the current thread should pick a cell by hash and add to it

// false -> the current thread should add to base

//Condition 2: false -> the CAS on base succeeded and the count was added to base; contention is low, so there is no need to create cells

// true -> the CAS on base failed because of contention with other threads; the current thread should try to create cells.

if ((as = counterCells) != null ||

!U.compareAndSwapLong(this, BASECOUNT, b = baseCount, s = b + x)) {

//In which situations can a thread enter this if block?

//1. Condition 1 is true: cells have been initialized, and the current thread should pick a cell by hash and add to it

//2. Condition 2 is true: the CAS on base failed because of contention, and the current thread should try to create cells.

//a indicates the cell hit by hash addressing of the current thread

CounterCell a;

//v indicates the expected value when the current thread writes the cell

long v;

//m represents the length of the current cells array

int m;

//true -> no contention, false -> contention occurred

boolean uncontended = true;

//Condition 1: as == null || (m = as.length - 1) < 0

//true -> the current thread got here after losing the CAS on base, and cells are not initialized; it must call fullAddCount to initialize them or retry (similar to LongAdder.longAccumulate)

//Condition 2: (a = as[ThreadLocalRandom.getProbe() & m]) == null, precondition: cells have been initialized

//true -> the cell hit by the current thread is empty; the thread must enter fullAddCount to create a cell at that position

//Condition 3: !(uncontended = U.compareAndSwapLong(a, CELLVALUE, v = a.value, v + x))

// false (after negation) -> the current thread successfully updated the hit cell with CAS

// true (after negation) -> the CAS on the hit cell failed; the thread must enter fullAddCount to retry or expand the cells array.

if (as == null || (m = as.length - 1) < 0 ||

(a = as[ThreadLocalRandom.getProbe() & m]) == null ||

!(uncontended = U.compareAndSwapLong(a, CELLVALUE, v = a.value, v + x))

) {

fullAddCount(x, uncontended);

//fullAddCount already does a lot of work, so the current thread skips the resize logic and returns directly to the caller.

return;

}

if (check <= 1)

return;

//Gets the current number of elements in the hash table; this is only an estimate

s = sumCount();

}

//check >= 0: this addCount call comes from an insertion (put and similar methods), so check whether a resize is needed

if (check >= 0) {

//tab represents map.table

//nt means map.nextTable

//n represents the length of the map.table array

//sc represents the temporary value of sizeCtl

Node<K,V>[] tab, nt; int n, sc;

/**

* sizeCtl < 0

* 1. -1: the table is being initialized (a thread is creating the table array), and other threads must wait

* 2. Any other negative value: the table array is being resized. The high 16 bits hold the resize stamp, and the low 16 bits hold (1 + nThreads), where nThreads is the number of threads currently taking part in the resize

*

* sizeCtl = 0: DEFAULT_CAPACITY is used as the size when the table array is created

*

* sizeCtl > 0

*

* 1. If the table is not initialized, it indicates the initialization size

* 2. If the table has been initialized, it indicates the trigger condition (threshold) for the next capacity expansion

*/

//spin

//Condition 1: s >= (long)(sc = sizeCtl)

// true -> 1. sizeCtl is negative: a resize is in progress

// 2. sizeCtl is positive, and s has reached the resize threshold

// false -> the table has not reached the resize condition

//Condition 2: (tab = table) != null

// always true here

//Condition 3: (n = tab.length) < MAXIMUM_CAPACITY

// true -> the table is shorter than the maximum length, so it can still be resized.

while (s >= (long)(sc = sizeCtl) && (tab = table) != null &&

(n = tab.length) < MAXIMUM_CAPACITY) {

//Unique stamp of this resize generation

//16 -> 32 resize stamp: 1000 0000 0001 1011

int rs = resizeStamp(n);

//If true, the table is currently being resized

// in principle, the current thread should help with the resize

if (sc < 0) {

//Condition 1: (sc >>> RESIZE_STAMP_SHIFT) != rs

// true -> the stamp held in sizeCtl does not belong to the resize generation the current thread computed

// false -> the stamp matches this resize generation

//Condition 2: a bug in JDK 1.8, reported in the OpenJDK JIRA. As written, rs is not shifted, so the negative sc can never equal rs + 1; the intended check is sc == (rs << 16) + 1

// true -> the resize has finished, and the current thread does not need to participate

// false -> the resize is still in progress, and the current thread can participate

//Condition 3: part of the same JDK 1.8 bug; the intended check is sc == (rs << 16) + MAX_RESIZERS

// true -> the number of threads taking part in the resize has reached the maximum (65535 - 1)

// false -> the current thread can participate

//Condition 4: (nt = nextTable) == null

// true -> this resize has ended

// false -> the resize is in progress

//Condition 5: transferIndex <= 0 -> every bucket range has already been claimed, so there is nothing left to help with

if ((sc >>> RESIZE_STAMP_SHIFT) != rs || sc == rs + 1 ||

sc == rs + MAX_RESIZERS || (nt = nextTable) == null ||

transferIndex <= 0)

break;

//Precondition: the table is being resized, and the current thread has a chance to join.

//If the condition is true, the current thread has joined the resize: the low 16 bits of sc were incremented by 1, meaning one more thread is working

//The CAS can fail because: 1. many threads are trying to modify sizeCtl here, another thread succeeded first, and the expected sc no longer matches the value in memory

// 2. a thread inside transfer also modified sizeCtl.

if (U.compareAndSwapInt(this, SIZECTL, sc, sc + 1))

//Help with the resize; helper threads pass in the existing nextTable

transfer(tab, nt);

}

//1000 0000 0001 1011 0000 0000 0000 0000 +2 => 1000 0000 0001 1011 0000 0000 0000 0010

//If the condition is true, the current thread is the first thread to trigger this resize, and transfer has to do some preparation (such as creating nextTable)

else if (U.compareAndSwapInt(this, SIZECTL, sc,

(rs << RESIZE_STAMP_SHIFT) + 2))

//The thread that triggers the resize passes null for nextTable, so transfer creates it

transfer(tab, null);

s = sumCount();

}

}

}
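The counting half of addCount follows the LongAdder idea: try one CAS on a base counter, and under contention spread the increments over several cells, so that the total is base + sum(cells). A hypothetical, simplified sketch: unlike ConcurrentHashMap, the cells array here is created eagerly with a fixed length and never grows, and System.identityHashCode of the current thread is an assumed stand-in for ThreadLocalRandom.getProbe(), which is not public.

```java
import java.util.concurrent.atomic.AtomicLong;
import java.util.concurrent.atomic.AtomicLongArray;

// Hypothetical, simplified sketch of the addCount counting idea: base counter + striped cells.
final class StripedCounterSketch {
    private final AtomicLong base = new AtomicLong();
    private final AtomicLongArray cells = new AtomicLongArray(8); // length must be a power of two

    void add(long x) {
        long b = base.get();
        if (base.compareAndSet(b, b + x))
            return;                                    // no contention: count on base
        // Contention: pick a cell from a per-thread hash (stand-in for getProbe())
        int probe = System.identityHashCode(Thread.currentThread()) * 0x9E3779B9;
        cells.getAndAdd((probe >>> 16) & (cells.length() - 1), x);
    }

    long sum() {                                       // total = base + sum(cells), like sumCount()
        long s = base.get();
        for (int i = 0; i < cells.length(); i++)
            s += cells.get(i);
        return s;
    }

    static long runConcurrently(int threads, int addsPerThread) {
        StripedCounterSketch c = new StripedCounterSketch();
        Thread[] ts = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            ts[t] = new Thread(() -> {
                for (int k = 0; k < addsPerThread; k++) c.add(1);
            });
            ts[t].start();
        }
        for (Thread th : ts) {
            try { th.join(); } catch (InterruptedException e) { throw new IllegalStateException(e); }
        }
        return c.sum();
    }

    public static void main(String[] args) {
        System.out.println(runConcurrently(4, 10_000)); // 40000
    }
}
```

Each increment lands either on base or on exactly one cell, so the sum is exact once all threads have finished; while updates are still running, sum() (like sumCount()) is only an estimate.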

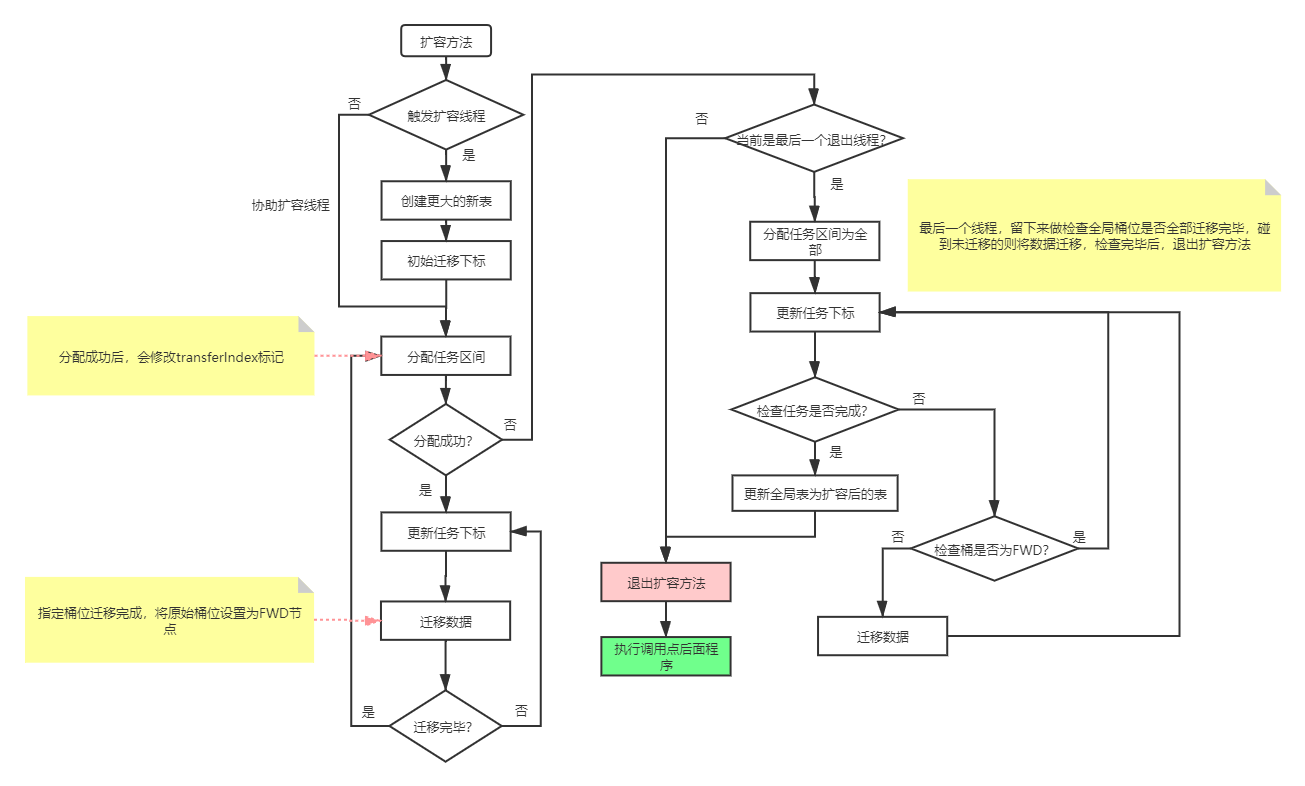

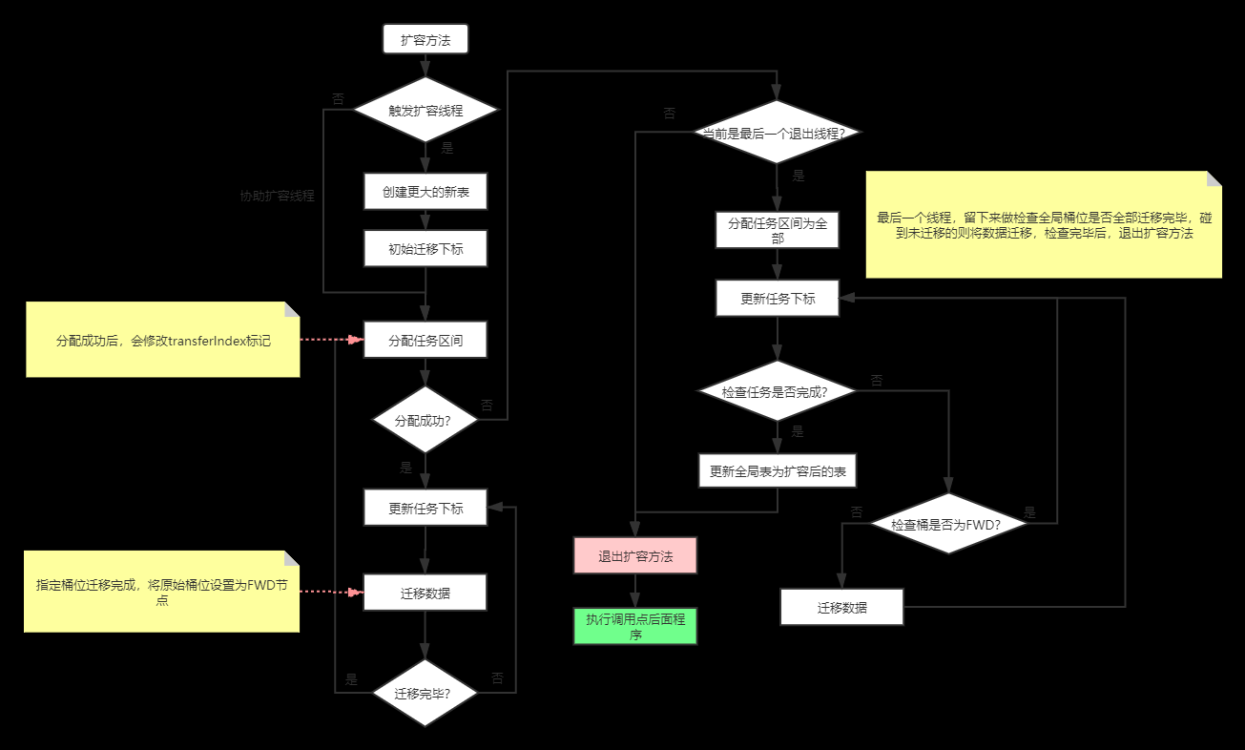

8. transfer

ConcurrentHashMap supports concurrent resizing. It splits the Node array so that each thread processes its own region. Suppose the table array has length 64: on a multi-core machine each thread is by default assigned 16 buckets at a time, and each thread migrates its range in reverse order, from the highest index down.

The for loop spins to process the linked-list elements in each slot. advance starts out true; the thread claims a range by updating transferIndex with CAS and initializes i and bound, where i is the index of the slot currently being processed and bound is the lowest slot of the claimed range. For example, with a table length of 32 the first claimed range is (bound, i) = (16, 31): the thread processes slot 31 first and moves backward from there (with length 64 the first range would be (48, 63)).
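How the table is carved into per-thread ranges can be simulated without any migration. A minimal sketch (class and method names hypothetical): stride uses the stride formula from the JDK 8 transfer method, and claims replays successive CAS claims against transferIndex, which starts at n and counts down to 0.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical demo class: simulates how transfer() hands out bucket ranges.
final class TransferStrideSketch {
    static final int MIN_TRANSFER_STRIDE = 16;

    // Stride formula from JDK 8 transfer(): n / 8 buckets per CPU, but never fewer than 16
    static int stride(int n, int ncpu) {
        int stride = (ncpu > 1) ? (n >>> 3) / ncpu : n;
        return (stride < MIN_TRANSFER_STRIDE) ? MIN_TRANSFER_STRIDE : stride;
    }

    // Each claim is {bound, i}: the claiming thread migrates buckets i, i - 1, ..., bound
    static List<int[]> claims(int n, int ncpu) {
        int s = stride(n, ncpu);
        AtomicInteger transferIndex = new AtomicInteger(n);
        List<int[]> out = new ArrayList<>();
        int nextIndex;
        while ((nextIndex = transferIndex.get()) > 0) {
            int nextBound = (nextIndex > s) ? nextIndex - s : 0;
            if (transferIndex.compareAndSet(nextIndex, nextBound))
                out.add(new int[] { nextBound, nextIndex - 1 });
        }
        return out;
    }

    public static void main(String[] args) {
        for (int[] c : claims(64, 8))
            System.out.println("(bound, i) = (" + c[0] + ", " + c[1] + ")");
        // (48, 63), (32, 47), (16, 31), (0, 15)
    }
}
```

In the real map, different threads make these claims concurrently; the CAS on transferIndex guarantees that no two threads get the same range.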

When a thread finishes its share of the migration, it decrements sizeCtl by 1 with a CAS.

It then checks whether (sc - 2) != resizeStamp(n) << RESIZE_STAMP_SHIFT. If the two are equal, the current thread is the last thread taking part in the resize, so after a final recheck pass over the old table it commits the new table and the whole resize is over. If they are not equal, other threads are still migrating and the current thread simply returns.

Encoding the resize stamp in sizeCtl serves two purposes: it keeps the sizeCtl values of different resize rounds distinct, and it avoids overlapping resizes caused by an ABA problem on sizeCtl.
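The sizeCtl arithmetic can be checked in isolation. The sketch below is a hypothetical standalone class (not part of ConcurrentHashMap) that copies the JDK 8 `resizeStamp` formula and the `RESIZE_STAMP_*` constants, then replays one resize of a 16-slot table: the first thread stores `(rs << 16) + 2`, a helper adds 1, and each exiting thread tests `(sc - 2) == rs << 16` on the value read before its decrement. It uses plain ints instead of CAS, so it illustrates the encoding only, not the concurrency.

```java
// Hypothetical standalone sketch; constants and resizeStamp copied from the JDK 8 source.
class ResizeStampDemo {
    static final int RESIZE_STAMP_BITS = 16;
    static final int RESIZE_STAMP_SHIFT = 32 - RESIZE_STAMP_BITS;

    // Same formula as ConcurrentHashMap.resizeStamp(n) in JDK 8
    static int resizeStamp(int n) {
        return Integer.numberOfLeadingZeros(n) | (1 << (RESIZE_STAMP_BITS - 1));
    }

    // sizeCtl value written by the first thread that starts resizing a table of length n
    static int initialSizeCtl(int n) {
        return (resizeStamp(n) << RESIZE_STAMP_SHIFT) + 2;
    }

    // Check done in transfer() with the value sc read just before the CAS decrement
    static boolean isLastToLeave(int sc, int n) {
        return (sc - 2) == resizeStamp(n) << RESIZE_STAMP_SHIFT;
    }

    public static void main(String[] args) {
        int n = 16;
        System.out.println(Integer.toBinaryString(resizeStamp(n))); // 1000000000011011
        int sc = initialSizeCtl(n);
        System.out.println(Integer.toBinaryString(sc));             // 10000000000110110000000000000010
        System.out.println(sc < 0);                                 // true: a resize is in progress
        sc += 1;                                                    // a helper joins
        System.out.println(isLastToLeave(sc, n));                   // false: two threads still active
        sc -= 1;                                                    // the helper leaves
        System.out.println(isLastToLeave(sc, n));                   // true: the remaining thread is last
    }
}
```

Because the stamp sets bit 15, shifting it left by 16 makes sizeCtl negative for the whole resize, which is how other code paths recognize that a resize is running.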

Expansion diagram

addCount() then checks whether a resize is needed, i.e., whether the total number of key-value pairs after updating baseCount is >= the threshold sizeCtl. If so, there are two cases:

- If a resize is already in progress, the current thread joins it and helps.

- If no resize is in progress, the current thread starts one.

The core of resizing is data migration. In a single-threaded environment migration is simple: the data in the old array is moved to the new array. In a multithreaded environment, however, other threads may be adding elements while the resize is running, and one of them may trigger a resize as well. The first solution that comes to mind is a mutex around the migration. It works, but it is expensive: a mutex blocks every thread that tries to enter the critical section, so the longer the lock holder takes, the longer the competing threads stay blocked and the lower the throughput. It can also lead to deadlock.

ConcurrentHashMap does not lock the whole table during migration. Instead it coordinates the migration with CAS (each bucket is locked only briefly while it is copied), and, most importantly, it lets multiple threads cooperate on the resize.

It treats the Node array as a task queue shared by multiple threads and maintains a pointer (transferIndex) that hands out the range each thread is responsible for. Each thread migrates its range by walking it in reverse. A migrated bucket is replaced with a ForwardingNode, which marks that the bucket has already been migrated. Next, let's analyze the source code.

fwd: a ForwardingNode, a marker node that points to the new table. Threads that run into it react accordingly: migrating threads skip the bucket, readers look the key up in the new table, and writers help with the resize. A non-empty bucket is replaced with fwd only after it has been copied under the bucket's lock (an empty bucket is set to fwd directly with a CAS), so skipping it is safe.

advance: a flag telling the loop whether to move on, i.e., whether the current bucket is done and the thread should go to the next one.

finishing: a flag telling whether the whole resize is over.

private final void transfer(Node<K,V>[] tab, Node<K,V>[] nextTab) {

//n indicates the length of the table array before capacity expansion

//stride is the number of buckets assigned to a thread per task

int n = tab.length, stride;

// stride = (n >>> 3) / NCPU on a multi-core machine (n on a single CPU), but never less than MIN_TRANSFER_STRIDE (16)

if ((stride = (NCPU > 1) ? (n >>> 3) / NCPU : n) < MIN_TRANSFER_STRIDE)

stride = MIN_TRANSFER_STRIDE; // subdivide range

//Condition true: the current thread triggered this resize and must do the setup work

//Condition false: the current thread is helping with a resize that is already running

if (nextTab == null) { // initiating

try {

//Create a table twice the size of the old one

@SuppressWarnings("unchecked")

Node<K,V>[] nt = (Node<K,V>[])new Node<?,?>[n << 1];

nextTab = nt;

} catch (Throwable ex) { // try to cope with OOME

sizeCtl = Integer.MAX_VALUE;

return;

}

//Publish the new table in the nextTable field so that helping threads can find it

nextTable = nextTab;

//Global migration progress marker: one past the highest index not yet handed out (so it counts from 1); ranges are handed out from the end of the table toward index 0

transferIndex = n;

}

//Represents the length of the new array

int nextn = nextTab.length;

//fwd node: once a bucket has been migrated it is set to this node; other read and write threads take a different path when they see it

ForwardingNode<K,V> fwd = new ForwardingNode<K,V>(nextTab);

//advance flag: whether to move on to the next bucket

boolean advance = true;

//finishing flag: whether the whole resize is complete

boolean finishing = false; // to ensure sweep before committing nextTab

//i: index of the bucket the current thread is processing

//bound: lower bound of the range assigned to the current thread

int i = 0, bound = 0;

//spin

for (;;) {

//f: head node of the bucket

//fh: hash of the head node

Node<K,V> f; int fh;

/**

* 1. Assign a task range to the current thread

* 2. Track the current thread's progress (i is the bucket being processed)

* 3. Track the global progress of the map (transferIndex)

*/

while (advance) {

//nextIndex: upper end (exclusive) of the range being claimed

//nextBound: lower end of the range being claimed

int nextIndex, nextBound;

//CASE1:

//Condition 1: --i >= bound

//true: the current thread's range is not finished; --i moves it to the next bucket

//false: the current thread's range is finished, or it has not been assigned one yet

if (--i >= bound || finishing)

advance = false;

//CASE2:

//Precondition: the current thread's range is finished or not yet assigned

//Condition true: every bucket in the table has already been handed out, so there is no range left. Set i to -1; after leaving the loop, the thread runs the exit logic of the migration task

//Condition false: some buckets in the table have not been handed out yet

else if ((nextIndex = transferIndex) <= 0) {

i = -1;

advance = false;

}

//CASE3:

//Preconditions: 1. the current thread needs a new range; 2. some buckets in the table have not been handed out yet

//Condition true: the range was assigned to the current thread

//Condition false: another thread won the CAS; loop and try again

else if (U.compareAndSwapInt

(this, TRANSFERINDEX, nextIndex,

nextBound = (nextIndex > stride ?

nextIndex - stride : 0))) {

bound = nextBound;

i = nextIndex - 1;

advance = false;

}

}

//CASE1:

//Condition 1: i < 0

//true: the current thread has no range left to process

if (i < 0 || i >= n || i + n >= nextn) {

//Variable to hold sizeCtl

int sc;

if (finishing) {

nextTable = null;

table = nextTab;

sizeCtl = (n << 1) - (n >>> 1);

return;

}

//CAS succeeds: the low 16 bits of sizeCtl (the count of resizing threads) were decremented, so the current thread can leave the resize

if (U.compareAndSwapInt(this, SIZECTL, sc = sizeCtl, sc - 1)) {

//1000 0000 0001 1011 0000 0000 0000 0000

//Condition holds: it indicates that the current thread is not the last thread to exit the transfer task

if ((sc - 2) != resizeStamp(n) << RESIZE_STAMP_SHIFT)

//Normal exit

return;

finishing = advance = true;

i = n; // recheck before commit

}

}

//Precondition for CASE2~CASE4: the current thread still has buckets to process

//CASE2:

//Condition true: the bucket is empty, so the fwd node is simply CASed into it

else if ((f = tabAt(tab, i)) == null)

advance = casTabAt(tab, i, null, fwd);

//CASE3:

//Condition true: the bucket has already been migrated, so the current thread skips it and advances to the next bucket

else if ((fh = f.hash) == MOVED)

advance = true; // already processed

//CASE4:

//Precondition: the bucket holds data and its head is not a fwd node, so the data must be migrated

else {

//Lock the head node of the current bucket with synchronized

synchronized (f) {

//Re-check that the head is still f: another writer may have replaced the head before this thread acquired the lock, in which case the wrong object was locked

if (tabAt(tab, i) == f) {

//ln indicates the low linked list reference

//hn indicates the high-order linked list reference

Node<K,V> ln, hn;

//Condition true: the bucket holds a linked list (regular nodes have a non-negative hash)

if (fh >= 0) {

//lastRun: the first node of the trailing run whose nodes all share the same (hash & n) bit

//That tail can be moved to the new table as is, without copying its nodes

int runBit = fh & n;

Node<K,V> lastRun = f;

for (Node<K,V> p = f.next; p != null; p = p.next) {

int b = p.hash & n;

if (b != runBit) {

runBit = b;

lastRun = p;

}

}

//Condition true: the run starting at lastRun belongs to the low list, so ln starts from it

if (runBit == 0) {

ln = lastRun;

hn = null;

}

//Otherwise the run belongs to the high list, so hn starts from it

else {

hn = lastRun;

ln = null;

}

for (Node<K,V> p = f; p != lastRun; p = p.next) {

int ph = p.hash; K pk = p.key; V pv = p.val;

if ((ph & n) == 0)

ln = new Node<K,V>(ph, pk, pv, ln);

else

hn = new Node<K,V>(ph, pk, pv, hn);

}

setTabAt(nextTab, i, ln);

setTabAt(nextTab, i + n, hn);

setTabAt(tab, i, fwd);

advance = true;

}

//Condition true: indicates that the current bucket is the red black tree proxy node TreeBin

else if (f instanceof TreeBin) {

//Convert header node to treeBin reference t

TreeBin<K,V> t = (TreeBin<K,V>)f;

//Low doubly linked list: lo points to its head and loTail to its tail

TreeNode<K,V> lo = null, loTail = null;

//High doubly linked list: hi points to its head and hiTail to its tail

TreeNode<K,V> hi = null, hiTail = null;

//lc indicates the number of low linked list elements

//hc indicates the number of high-order linked list elements

int lc = 0, hc = 0;

//Iterate the two-way linked list in TreeBin, from the beginning node to the end node

for (Node<K,V> e = t.first; e != null; e = e.next) {

// h: hash of the current element

int h = e.hash;

//A new TreeNode built using the current node

TreeNode<K,V> p = new TreeNode<K,V>

(h, e.key, e.val, null, null);

//Condition true: the current node belongs to the low list

if ((h & n) == 0) {

//If the condition is true, it indicates that there is no data in the current low-level linked list

if ((p.prev = loTail) == null)

lo = p;

//It indicates that the low-level linked list already has data. At this time, the current element is appended to the end of the low-level linked list

else

loTail.next = p;

//Move the low-list tail pointer to p

loTail = p;

++lc;

}

//The current node belongs to the high chain node

else {

if ((p.prev = hiTail) == null)

hi = p;

else

hiTail.next = p;

hiTail = p;

++hc;

}

}

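//A half with at most UNTREEIFY_THRESHOLD (6) nodes becomes a plain linked list again; otherwise a new TreeBin is built, or the old TreeBin t is reused when the other half is empty

ln = (lc <= UNTREEIFY_THRESHOLD) ? untreeify(lo) :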

(hc != 0) ? new TreeBin<K,V>(lo) : t;

hn = (hc <= UNTREEIFY_THRESHOLD) ? untreeify(hi) :

(lc != 0) ? new TreeBin<K,V>(hi) : t;

setTabAt(nextTab, i, ln);

setTabAt(nextTab, i + n, hn);

setTabAt(tab, i, fwd);

advance = true;

}

}

}

}

}

}

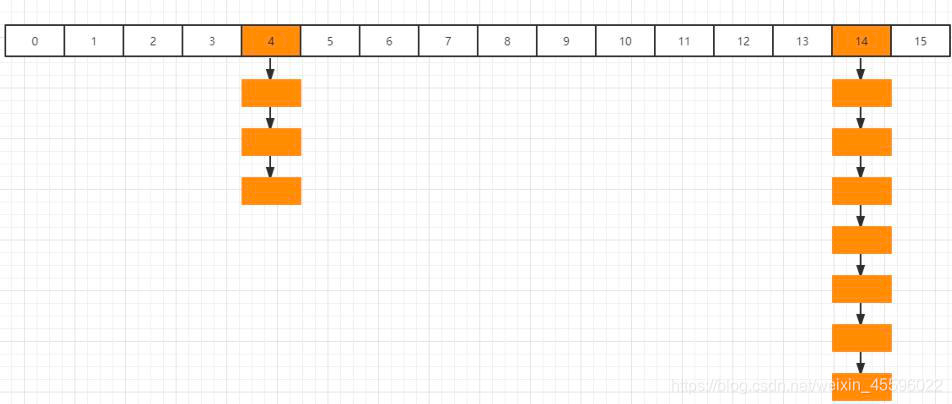

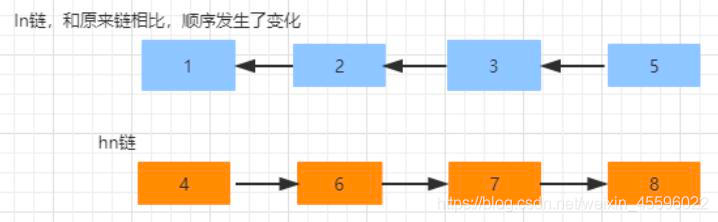
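When the last thread commits the resize, transfer() sets sizeCtl = (n << 1) - (n >>> 1). Since the new length is 2n, this equals 2n - n/2 = 0.75 × 2n, i.e., the fixed load factor applied to the new table, computed with shifts only. A minimal check, written as a hypothetical helper class:

```java
// Hypothetical helper reproducing the sizeCtl value committed at the end of transfer()
class NextThresholdDemo {
    // Threshold stored in sizeCtl after resizing from n to 2n
    static int nextSizeCtl(int n) {
        return (n << 1) - (n >>> 1);
    }

    public static void main(String[] args) {
        for (int n = 16; n <= 256; n <<= 1)
            System.out.println(n + " -> " + (n << 1) + ": threshold " + nextSizeCtl(n)); // e.g. 16 -> 32: threshold 24
    }
}
```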

Linked list migration principle

1) How the high/low split works

When migrating a linked list, ConcurrentHashMap splits it into a low list and a high list. Two questions need to be answered:

1. How are the low and high lists distinguished?

Suppose a bucket holds the following linked list:

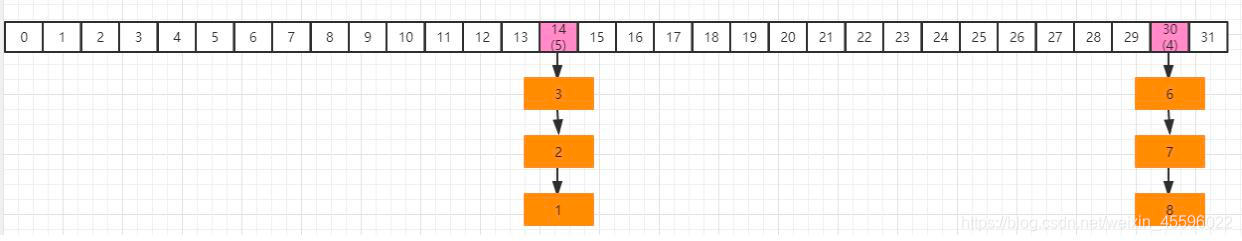

After new nodes are inserted into slot 14, its list has reached 8 elements while the array length is only 16 (below MIN_TREEIFY_CAPACITY = 64), so the map resizes first instead of treeifying, which relieves the long list.

Suppose the current thread is processing slot 14, which holds a linked list. The code first declares two node variables, ln and hn (lowNode and highNode), which collect the nodes whose hash has bit x equal to 0 and not equal to 0, respectively, where x is the bit selected by n (bit 4 when n = 16).

Using fh & n, the elements of the list fall into two classes: class A, whose hash has bit x equal to 0, and class B, whose hash has bit x equal to 1 (why this distinction matters is analyzed later). lastRun records the start of the trailing run that needs no copying. The end goal is that the class A list stays at index 14 of the new table while the class B list moves to index 14 + 16 (the added length) = 30.

If the nodes of the slot-14 list with fh & n == 0 are drawn in blue, suppose the list is classified like this:

for (Node<K,V> p = f.next; p != null; p = p.next) {

int b = p.hash & n;

if (b != runBit) {

runBit = b;

lastRun = p;

}

}

Running the loop above records runBit and lastRun. For the structure shown, runBit ends up as the blue class (0) and lastRun is the 6th node. The nodes before lastRun are then traversed with the following code to build the ln and hn lists:

for (Node<K,V> p = f; p != lastRun; p = p.next) {

int ph = p.hash; K pk = p.key; V pv = p.val;

if ((ph & n) == 0)

ln = new Node<K,V>(ph, pk, pv, ln);

else

hn = new Node<K,V>(ph, pk, pv, hn);

}

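Then setTabAt places the hn list at index i + n of the new table, i.e., 14 + 16 = 30, while the ln list stays at its original index. Finally, the old bucket is set to fwd to mark that the current thread has migrated it. (setTabAt is a volatile write rather than a CAS; that is enough here because the current thread owns bucket i and holds its lock.)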

setTabAt(nextTab, i, ln); setTabAt(nextTab, i + n, hn); setTabAt(tab, i, fwd);

The data distribution after migration is as follows

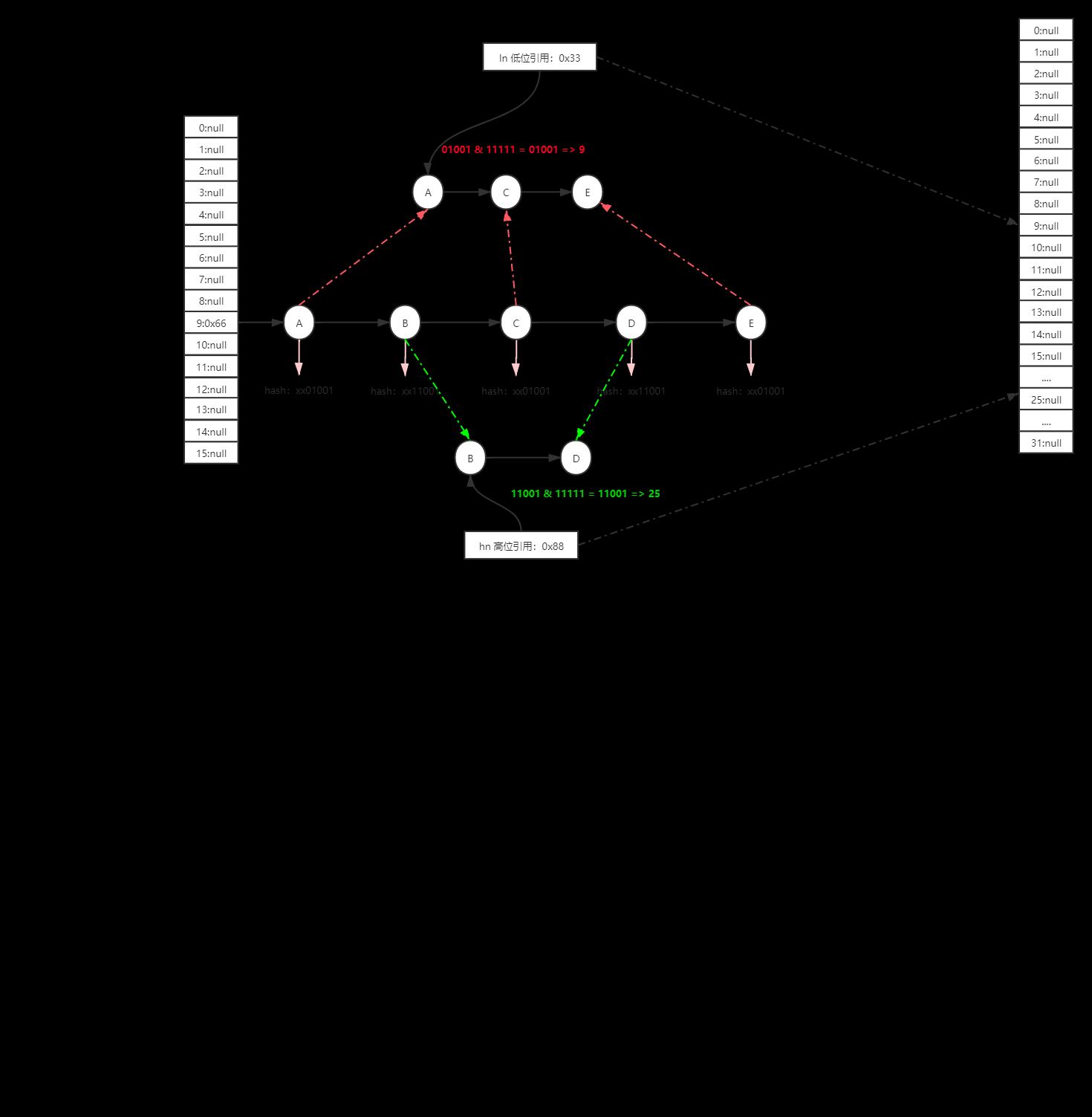
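The two loops can also be run on their own. The sketch below is a hypothetical, single-threaded simplification: its `Node` class keeps only a hash, a `String` key and `next`, and it omits the locking and `setTabAt` calls. It builds a slot-14 list for n = 16 from hashes 14 and 30 (both map to slot 14), splits it with the same lastRun logic, and reports the keys of the ln and hn lists.

```java
// Hypothetical single-threaded sketch of the lastRun split; not the JDK class.
class LastRunSplitDemo {
    static final class Node {
        final int hash;
        final String key;
        final Node next;
        Node(int hash, String key, Node next) { this.hash = hash; this.key = key; this.next = next; }
    }

    // Splits the list headed by f into {ln, hn} for a resize from n to 2n
    static Node[] split(Node f, int n) {
        // Find the trailing run whose nodes all share the same (hash & n) bit
        int runBit = f.hash & n;
        Node lastRun = f;
        for (Node p = f.next; p != null; p = p.next) {
            int b = p.hash & n;
            if (b != runBit) { runBit = b; lastRun = p; }
        }
        // Reuse that run as is
        Node ln = (runBit == 0) ? lastRun : null;
        Node hn = (runBit == 0) ? null : lastRun;
        // Copy the nodes before lastRun, prepending each copy to its list
        for (Node p = f; p != lastRun; p = p.next) {
            if ((p.hash & n) == 0) ln = new Node(p.hash, p.key, ln);
            else hn = new Node(p.hash, p.key, hn);
        }
        return new Node[] {ln, hn};
    }

    // Concatenates the keys of a list, in order
    static String keys(Node head) {
        StringBuilder sb = new StringBuilder();
        for (Node p = head; p != null; p = p.next) sb.append(p.key);
        return sb.toString();
    }

    // Slot-14 list for n = 16: hash 14 stays at index 14, hash 30 moves to 14 + 16 = 30
    static Node sample() {
        Node f = new Node(30, "f", null);
        Node e = new Node(30, "e", f);
        Node d = new Node(14, "d", e);
        Node c = new Node(14, "c", d);
        Node b = new Node(30, "b", c);
        return new Node(14, "a", b);
    }

    public static void main(String[] args) {
        Node[] r = split(sample(), 16);
        System.out.println("ln: " + keys(r[0]) + ", hn: " + keys(r[1])); // ln: dca, hn: bef
    }
}
```

The copied nodes end up in reverse order (d, c, a), while the reused tail e → f keeps its order, so the migrated lists do not necessarily preserve the original ordering.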

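2) Why split into high and low lists?

To understand the purpose of this design, start from how ConcurrentHashMap computes the array index of a key, as in line 1018 of the putVal method:

(f = tabAt(tab, i = (n - 1) & hash)) == null

(n - 1) & hash gives the index in table at which the node is read (& is bitwise AND: 1 & 1 = 1, and every other combination gives 0). Because n is a power of two, doubling the table adds exactly one bit, n, to the mask. A node's new index is therefore either its old index i (when hash & n == 0, the low list) or i + n (when hash & n != 0, the high list), so each list can be split in a single pass.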
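To check numerically the rule that, after the table doubles from n to 2n, a node either keeps its index i or moves to i + n, the hypothetical class below compares `(2n - 1) & hash` with the old index for many random hashes. It uses no ConcurrentHashMap internals, only the index formula from putVal.

```java
import java.util.Random;

// Hypothetical check of the index rule; uses only the (n - 1) & hash formula from putVal.
class IndexAfterResizeDemo {
    // Bucket index in a table whose length n is a power of two
    static int indexFor(int hash, int n) {
        return (n - 1) & hash;
    }

    // After doubling to 2n, the index is unchanged if (hash & n) == 0, otherwise it grows by n
    static boolean holds(int hash, int n) {
        int oldIndex = indexFor(hash, n);
        int newIndex = indexFor(hash, n << 1);
        int expected = (hash & n) == 0 ? oldIndex : oldIndex + n;
        return newIndex == expected;
    }

    public static void main(String[] args) {
        Random rnd = new Random(42);
        boolean ok = true;
        for (int k = 0; k < 100_000; k++) ok &= holds(rnd.nextInt(), 16);
        System.out.println(ok);                                            // true
        System.out.println(indexFor(14, 16) + " -> " + indexFor(14, 32)); // 14 -> 14 (low list)
        System.out.println(indexFor(30, 16) + " -> " + indexFor(30, 32)); // 14 -> 30 (high list)
    }
}
```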

9.helpTransfer

In putVal, if the bucket's head node exists and its hash equals MOVED (-1), the node is a ForwardingNode, which means another thread is resizing the table. The current thread then helps with the resize directly by calling the helpTransfer method.

final Node<K,V>[] helpTransfer(Node<K,V>[] tab, Node<K,V> f) {

//nextTab: fwd.nextTable, which in theory equals map.nextTable

//sc: a copy of map.sizeCtl

Node<K,V>[] nextTab; int sc;

//Condition 1: tab != null, always true here

//Condition 2: (f instanceof ForwardingNode), always true here

//Condition 3: ((ForwardingNode<K,V>) f).nextTable != null, always true here

if (tab != null && (f instanceof ForwardingNode) &&

(nextTab = ((ForwardingNode<K,V>)f).nextTable) != null) {

//Compute the resize stamp from the current table length. For a 16 -> 32 resize: 1000 0000 0001 1011

int rs = resizeStamp(tab.length);

//Condition 1: nextTab == nextTable

//true: the resize is still in progress

//false: 1. the resize has finished and nextTable has been reset to null

// 2. a new resize has started, so the nextTab we read is stale

//Condition 2: table == tab

//true: the resize is in progress and has not finished yet

//false: the resize has finished; the last thread to exit sets table to the new table (nextTab)

//Condition 3: (sc = sizeCtl) < 0

//true: a resize is in progress

//false: sizeCtl is positive, so it now holds the threshold for the next resize and the current resize has finished

while (nextTab == nextTable && table == tab &&

(sc = sizeCtl) < 0) {

//Condition 1: (sc >>> RESIZE_STAMP_SHIFT) != rs

// true -> the stamp in sizeCtl does not belong to this resize round

// false -> the stamp in sizeCtl belongs to this resize round

//Condition 2: a known bug in JDK 1.8, reported in the JDK bug tracker; the intended check is sc == (rs << 16) + 1

// true -> the resize is finishing, so the current thread need not join

// false -> the resize is still in progress, so the current thread can join

//Condition 3: the same JDK 1.8 bug; the intended check is sc == (rs << 16) + MAX_RESIZERS

// true -> the number of threads taking part in the resize has reached the maximum (65535 - 1)

// false -> the current thread can join

//Condition 4: transferIndex <= 0

// true -> every range in the map has already been handed out, so there is no work for the current thread

// false -> there are still ranges to hand out

if ((sc >>> RESIZE_STAMP_SHIFT) != rs || sc == rs + 1 ||

sc == rs + MAX_RESIZERS || transferIndex <= 0)

break;

if (U.compareAndSwapInt(this, SIZECTL, sc, sc + 1)) {

transfer(tab, nextTab);

break;

}

}

return nextTab;

}

return table;

}
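The four break conditions are easier to read with the intended comparisons written out. The hypothetical helper below is a sketch, not JDK 8 code: it applies conditions 2 and 3 to the shifted stamp `rs << 16`, as the comments above say was intended, and it drops the CAS as well as the `nextTab == nextTable` and `table == tab` checks.

```java
// Hypothetical sketch of the helpTransfer join check with the intended comparisons.
class HelpTransferCheckDemo {
    static final int RESIZE_STAMP_BITS = 16;
    static final int RESIZE_STAMP_SHIFT = 32 - RESIZE_STAMP_BITS;
    static final int MAX_RESIZERS = (1 << (32 - RESIZE_STAMP_BITS)) - 1;

    // Same formula as ConcurrentHashMap.resizeStamp(n) in JDK 8
    static int resizeStamp(int n) {
        return Integer.numberOfLeadingZeros(n) | (1 << (RESIZE_STAMP_BITS - 1));
    }

    // Whether a thread that sees sizeCtl == sc may join the resize of a table of length n
    static boolean mayHelp(int sc, int n, int transferIndex) {
        int rs = resizeStamp(n);
        int base = rs << RESIZE_STAMP_SHIFT;
        if ((sc >>> RESIZE_STAMP_SHIFT) != rs) return false; // stamp of another resize round
        if (sc == base + 1) return false;                     // all resizing threads have left
        if (sc == base + MAX_RESIZERS) return false;          // helper limit reached
        return transferIndex > 0;                             // ranges still left to claim
    }

    public static void main(String[] args) {
        int base = resizeStamp(16) << RESIZE_STAMP_SHIFT;
        System.out.println(mayHelp(base + 2, 16, 48)); // true: one thread resizing, work left
        System.out.println(mayHelp(base + 2, 16, 0));  // false: all ranges handed out
        System.out.println(mayHelp(base + 1, 16, 48)); // false: resize finishing
        System.out.println(mayHelp(base + 2, 32, 48)); // false: different resize round
    }
}
```

In the JDK 8 code as written, conditions 2 and 3 compare the negative sc with the small positive rs, so they can never be true; only conditions 1 and 4 actually stop a thread from joining.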

10.get

public V get(Object key) {

//tab reference map.table

//e current element

//p: target node

//n table array length

//eh current element hash

//ek current element key

Node<K,V>[] tab; Node<K,V> e, p; int n, eh; K ek;

//Spread (perturb) the hash so that its high bits also influence the bucket index

int h = spread(key.hashCode());

//Condition 1: (tab = table) != null

//true -> data has been put and the map's table has been initialized

//false -> nothing has been put since the map was created; the table is initialized lazily, only on the first write

//Condition 2: (n = tab.length) > 0, true -> the table has been initialized

//Condition 3: (e = tabAt(tab, (n - 1) & h)) != null

//true -> the bucket the key maps to has data

//false -> the bucket the key maps to is null, so null is returned directly

if ((tab = table) != null && (n = tab.length) > 0 &&

(e = tabAt(tab, (n - 1) & h)) != null) {

//Precondition: there is data in the current bucket

//Compare the hash of the head node with the hash of the key being looked up

//Condition true: the head node's hash equals the key's hash

if ((eh = e.hash) == h) {

//Compare the keys themselves (same reference, or equals)

//Condition true: the head node holds the requested key

if ((ek = e.key) == key || (ek != null && key.equals(ek)))

return e.val;

}

//Condition true (negative hash):

//1. -1 (MOVED): a fwd node; the table is being resized and this bucket has already been migrated, so find() looks in the new table

//2. -2 (TREEBIN): a TreeBin node; the lookup uses the find method provided by TreeBin

else if (eh < 0)

return (p = e.find(h, key)) != null ? p.val : null;

//The current bucket position has formed a linked list

while ((e = e.next) != null) {

if (e.hash == h &&

((ek = e.key) == key || (ek != null && key.equals(ek))))

return e.val;

}

}

return null;

}
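spread() keeps the high-order bits of a hash from being ignored by the (n - 1) & h mask. The sketch below copies the JDK 8 formula, `spread(h) = (h ^ (h >>> 16)) & HASH_BITS` with `HASH_BITS = 0x7fffffff`, into a hypothetical standalone class. It shows two hashes that differ only in their high 16 bits landing in the same bucket without spreading and in different buckets with it, and that a spread hash is never negative, which is why negative hashes can be reserved for MOVED and TREEBIN.

```java
// Hypothetical standalone copy of the JDK 8 spread() formula
class SpreadDemo {
    static final int HASH_BITS = 0x7fffffff; // usable bits of a normal node hash

    static int spread(int h) {
        return (h ^ (h >>> 16)) & HASH_BITS;
    }

    public static void main(String[] args) {
        int n = 16;
        int h1 = 0x10000, h2 = 0x20000; // differ only in the high 16 bits
        System.out.println(((n - 1) & h1) + " " + ((n - 1) & h2));                 // 0 0
        System.out.println(((n - 1) & spread(h1)) + " " + ((n - 1) & spread(h2))); // 1 2
        System.out.println(spread(-1) >= 0);                                      // true: sign bit cleared
    }
}
```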

11.remove

public V remove(Object key) {

return replaceNode(key, null, null);

}

12.replaceNode

final V replaceNode(Object key, V value, Object cv) {

//Compute the spread (perturbed) hash of the key

int hash = spread(key.hashCode());

//spin

for (Node<K,V>[] tab = table;;) {

//f: head node of the bucket

//n: length of the current table array

//i: index of the bucket the hash maps to

//fh: hash of the bucket head node

Node<K,V> f; int n, i, fh;

//CASE1:

//Condition 1: tab == null, true -> map.table has not been initialized yet; false -> it has been initialized

//Condition 2: (n = tab.length) == 0, true -> map.table has not been initialized yet; false -> it has been initialized

//Condition 3: (f = tabAt(tab, i = (n - 1) & hash)) == null, true -> the bucket is empty; break out, and null will be returned

if (tab == null || (n = tab.length) == 0 ||

(f = tabAt(tab, i = (n - 1) & hash)) == null)

break;

//CASE2:

//Precondition for CASE2~CASE3: the current bucket is not null

//Condition true: the table is being resized; since this is a write operation, the current thread helps finish the resize first

else if ((fh = f.hash) == MOVED)

tab = helpTransfer(tab, f);

//CASE3:

//Precondition: the bucket is not null and its head is not a fwd node

//The bucket holds either a linked list or a red-black tree (TreeBin)

else {

//oldVal: the value before replacement or removal

V oldVal = null;

//validated: whether the locked bucket was actually checked (false means the loop must retry)

boolean validated = false;

//Lock the current bucket head node. After locking successfully, it will enter the code block.

synchronized (f) {

//Check that the locked object is still the bucket head, in case another thread replaced it before the current thread acquired the lock.

//The condition holds: the current bucket head node is still f and has not been modified by other threads.

if (tabAt(tab, i) == f) {

//Condition holds: indicates that the bucket location is a linked list or a single node

if (fh >= 0) {

validated = true;

//e represents the current loop processing element

//pred represents the previous node of the current loop node

Node<K,V> e = f, pred = null;

for (;;) {

//Current node key

K ek;

//Condition 1: e.hash == hash, true -> the current node's hash matches the key's hash

//Condition 2: ((ek = e.key) == key || (ek != null && key.equals(ek)))

//Condition true: the current node's key matches the key being looked up.

if (e.hash == hash &&

((ek = e.key) == key ||

(ek != null && key.equals(ek)))) {

//value of current node

V ev = e.val;

//Condition 1: cv == null, true -> no expected value was given, so replace or delete unconditionally

//Condition 2: cv == ev || (ev != null && cv.equals(ev)), true -> the current value matches the expected value cv

if (cv == null || cv == ev ||

(ev != null && cv.equals(ev))) {

//Delete or replace

//Assign the value of the current node to oldVal, which will be used in subsequent returns

oldVal = ev;

//Condition holds: indicates that the current operation is a replacement operation

if (value != null)

//Direct replacement

e.val = value;

//Condition holds: indicates that the current node is not the head node

else if (pred != null)

//The previous node of the current node, pointing to the next node of the current node.

pred.next = e.next;

else

//This indicates that the current node is the head node. You only need to set the bucket bit as the next node of the head node.

setTabAt(tab, i, e.next);

}

break;

}

pred = e;

if ((e = e.next) == null)

break;

}

}

//Condition holds: TreeBin node.

else if (f instanceof TreeBin) {

validated = true;

//Convert to actual type TreeBin t

TreeBin<K,V> t = (TreeBin<K,V>)f;

//r represents the root node of red black tree

//p indicates that a node with the same corresponding key is found in the red black tree

TreeNode<K,V> r, p;

//Condition 1: (r = t.root) != null, always true in theory

//Condition 2: TreeNode.findTreeNode starts at the current node and searches down for the key (including the node itself)

// true -> the node for the key was found and assigned to p

if ((r = t.root) != null &&

(p = r.findTreeNode(hash, key, null)) != null) {

//Save p.val to pv

V pv = p.val;

//Condition 1: cv == null, true -> no expected value was given, so replace or delete unconditionally

//Condition 2: cv == pv || (pv != null && cv.equals(pv)), true -> the expected value cv matches p's current value

if (cv == null || cv == pv ||

(pv != null && cv.equals(pv))) {

//Replace or delete operation

oldVal = pv;

//Condition holds: replace

if (value != null)

p.val = value;

//Delete operation

else if (t.removeTreeNode(p))

//removeTreeNode returns true when the tree has become too small; in that case the bin is converted back to a plain linked list

setTabAt(tab, i, untreeify(t.first));

}

}

}

}

}

//If another thread replaced the bucket head before the current thread locked it, the wrong object was locked: validated stays false and the for loop spins again

if (validated) {

if (oldVal != null) {

//value == null means a delete; oldVal != null means a node was actually removed, so decrement the element counter.

if (value == null)

addCount(-1L, -1);

return oldVal;

}

break;

}

}

}

return null;

}
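For reference, the public ConcurrentHashMap methods below reach replaceNode with different value/cv arguments in JDK 8: remove(key) passes value = null and cv = null, remove(key, value) passes cv = value, replace(key, oldValue, newValue) passes value = newValue and cv = oldValue, and replace(key, value) passes cv = null. This usage sketch exercises each path through the real java.util.concurrent API.

```java
import java.util.concurrent.ConcurrentHashMap;

// Usage sketch: each call below ends up in replaceNode(key, value, cv) in JDK 8.
class ReplaceNodeUsageDemo {
    static ConcurrentHashMap<String, Integer> run() {
        ConcurrentHashMap<String, Integer> map = new ConcurrentHashMap<>();
        map.put("a", 1);
        map.put("b", 2);
        map.put("c", 3);
        Integer removed = map.remove("a");           // value = null, cv = null: unconditional delete
        boolean removedB = map.remove("b", 99);      // cv = 99 does not match 2: nothing removed
        boolean replacedC = map.replace("c", 3, 30); // cv = 3 matches: value replaced with 30
        Integer missing = map.replace("zz", 5);      // key absent: nothing changes
        System.out.println(removed + " " + removedB + " " + replacedC + " " + missing); // 1 false true null
        return map;
    }

    public static void main(String[] args) {
        System.out.println(run().size()); // 2
    }
}
```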

13.TreeBin

13.1 properties

//Red black tree root node

TreeNode<K,V> root;

//Head node of linked list

volatile TreeNode<K,V> first;

//Waiting writer thread (set while readers hold the read lock)

volatile Thread waiter;

/**

* 1. Write lock state (WRITER = 1): exclusive. Because writers lock the bucket head in the hash table, at most one writer enters a TreeBin at a time.

* 2. Read lock state: shared. Multiple threads can enter the TreeBin to read data at the same time, and each reader adds 4 (READER) to lockState.

* 3. Wait state (WAITER = 2, a writer is waiting): when readers in the TreeBin are reading and the writer cannot modify the data, the low 2 bits of lockState are set to 0b10.

*/

volatile int lockState;

// values for lockState

static final int WRITER = 1; // set while holding write lock

static final int WAITER = 2; // set when waiting for write lock

static final int READER = 4; // increment value for setting read lock

13.2 constructor

TreeBin(TreeNode<K,V> b) {

//Set the node hash to -2 (TREEBIN) to mark this node as a TreeBin

super(TREEBIN, null, null, null);

//Use first to refer to the treeNode linked list

this.first = b;

//r root node reference of red black tree

TreeNode<K,V> r = null;

//x represents the current node traversed

for (TreeNode<K,V> x = b, next; x != null; x = next) {

next = (TreeNode<K,V>)x.next;

//Force the left and right subtrees of the currently inserted node to be null

x.left = x.right = null;

//If the condition is true: it indicates that the current red black tree is an empty tree, then set the inserted element as the root node

if (r == null) {

//The parent node of the root node must be null

x.parent = null;

//Change color to black

x.red = false;

//Let r refer to the object pointed to by x.

r = x;

}

else {

//From the second iteration on, the red-black tree already has data, so this branch is taken

//k represents the key of the inserted node

K k = x.key;

//h indicates the hash of the inserted node

int h = x.hash;

//kc indicates the class type of the inserted node key

Class<?> kc = null;

//p represents a temporary node that finds the parent node of the inserted node

TreeNode<K,V> p = r;

for (;;) {

//dir: direction to descend

//-1: the inserted node's hash is less than the hash of the current node p (go left)

//1: the inserted node's hash is greater than the hash of the current node p (go right)

//ph: hash of the temporary node p

int dir, ph;

//Temporary node key

K pk = p.key;

//The hash value of the inserted node is less than the current node

if ((ph = p.hash) > h)

//To insert a node, you may need to insert it into the left child of the current node or continue to find it in the left child tree

dir = -1;

//The hash value of the inserted node is greater than the current node

else if (ph < h)

//To insert a node, you may need to insert it into the right child node of the current node or continue to find it in the right child tree

dir = 1;

//Third case: the hashes are equal, so the order is decided by comparing the keys (compareComparables), falling back to tieBreakOrder

//The resulting dir is never 0: negative means go left, positive means go right

else if ((kc == null &&

(kc = comparableClassFor(k)) == null) ||

(dir = compareComparables(kc, k, pk)) == 0)

dir = tieBreakOrder(k, pk);

//xp: the candidate parent of the inserted node

TreeNode<K,V> xp = p;

//Condition true: p has no child in direction dir, so xp becomes the parent of the inserted node

//Condition false: p has a child in that direction, so p moves to its left or right child and the search continues.

if ((p = (dir <= 0) ? p.left : p.right) == null) {

//Set the parent node of the inserted node as the current node

x.parent = xp;

//If it is smaller than xp, insert it as the left child of xp

if (dir <= 0)

xp.left = x;

//If it is larger than xp, insert it as the right child of xp

else

xp.right = x;

//After inserting a node, the nature of the red black tree may be destroyed, so you need to call the balance method

r = balanceInsertion(r, x);

break;

}

}

}

}

//Assign r to the root reference of the TreeBin object.

this.root = r;

assert checkInvariants(root);

}
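The direction logic in the constructor (hash first, then Comparable keys, then tieBreakOrder) can be tried on real keys. The sketch below is a hypothetical simplification: the `tieBreakOrder` body follows JDK 8, but the Comparable check is reduced to "both keys have the same class and it implements Comparable" instead of the real `comparableClassFor` test. `"Aa"` and `"BB"` are used because their String hash codes are equal (2112).

```java
// Hypothetical sketch of how TreeBin picks a direction; simplified from JDK 8.
class TreeDirDemo {
    // Same logic as tieBreakOrder in JDK 8: class names first, then identity hash codes
    static int tieBreakOrder(Object a, Object b) {
        int d;
        if (a == null || b == null ||
            (d = a.getClass().getName().compareTo(b.getClass().getName())) == 0)
            d = (System.identityHashCode(a) <= System.identityHashCode(b) ? -1 : 1);
        return d;
    }

    // Direction for inserting key k (hash h) relative to node key pk (hash ph): < 0 left, > 0 right
    @SuppressWarnings({"rawtypes", "unchecked"})
    static int dir(int h, Object k, int ph, Object pk) {
        if (ph > h) return -1;
        if (ph < h) return 1;
        // Simplified stand-in for comparableClassFor/compareComparables
        if (k instanceof Comparable && pk != null && k.getClass() == pk.getClass()) {
            int c = ((Comparable) k).compareTo(pk);
            if (c != 0) return c;
        }
        return tieBreakOrder(k, pk);
    }

    public static void main(String[] args) {
        System.out.println("Aa".hashCode() == "BB".hashCode());                 // true
        System.out.println(dir("Aa".hashCode(), "Aa", "BB".hashCode(), "BB")); // -1: "Aa" < "BB"
        Object x = new Object(), y = new Object();
        System.out.println(dir(1, x, 1, y) != 0);                              // true: never 0
    }
}
```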

13.3 putTreeVal

final TreeNode<K,V> putTreeVal(int h, K k, V v) {

Class<?> kc = null;

boolean searched = false;

for (TreeNode<K,V> p = root;;) {

int dir, ph; K pk;

if (p == null) {

first = root = new TreeNode<K,V>(h, k, v, null, null);

break;

}

else if ((ph = p.hash) > h)

dir = -1;

else if (ph < h)

dir = 1;

else if ((pk = p.key) == k || (pk != null && k.equals(pk)))

return p;

else if ((kc == null &&

(kc = comparableClassFor(k)) == null) ||

(dir = compareComparables(kc, k, pk)) == 0) {

if (!searched) {

TreeNode<K,V> q, ch;

searched = true;

if (((ch = p.left) != null &&

(q = ch.findTreeNode(h, k, kc)) != null) ||

((ch = p.right) != null &&

(q = ch.findTreeNode(h, k, kc)) != null))

return q;

}

dir = tieBreakOrder(k, pk);

}

TreeNode<K,V> xp = p;

if ((p = (dir <= 0) ? p.left : p.right) == null) {

//xp: the current node, which becomes the parent of x

//x: the node being inserted

//f: the old head of the linked list

TreeNode<K,V> x, f = first;

first = x = new TreeNode<K,V>(h, k, v, f, xp);

//Condition holds: it indicates that the linked list has data

if (f != null)

//Set the prev reference of the old head node to the new head node.

f.prev = x;

if (dir <= 0)

xp.left = x;

else

xp.right = x;

if (!xp.red)

x.red = true;

else {

//The parent is red, so the new node would create two consecutive red nodes; rebalance under the root lock

lockRoot();

try {

//Balance the red and black trees to meet the specifications again.

root = balanceInsertion(root, x);

} finally {

unlockRoot();

}

}

break;

}

}

assert checkInvariants(root);

return null;

}

13.4 find

final Node<K,V> find(int h, Object k) {

if (k != null) {

//e represents the current node of the loop iteration, and the iteration is the linked list referenced by the first

for (Node<K,V> e = first; e != null; ) {

//s: snapshot of lockState

//ek: key of the current node in the linked list

int s; K ek;

//(WAITER|WRITER) => 0010 | 0001 => 0011

//lockState & 0011 != 0: a writer is waiting for or holding the lock, so fall back to a linear scan of the linked list instead of the tree

if (((s = lockState) & (WAITER|WRITER)) != 0) {

if (e.hash == h &&

((ek = e.key) == k || (ek != null && k.equals(ek))))

return e;

e = e.next;

}

//Precondition: there is no waiting thread or writing thread in the current TreeBin

//If the condition is true, it indicates that the read lock is successfully added

else if (U.compareAndSwapInt(this, LOCKSTATE, s,

s + READER)) {

TreeNode<K,V> r, p;

try {

//Search the red-black tree

p = ((r = root) == null ? null :

r.findTreeNode(h, k, null));

} finally {

//w is the waiting writer thread

Thread w;

//U.getAndAddInt(this, LOCKSTATE, -READER) == (READER|WAITER)

//1. Release this thread's read lock by subtracting READER (4) from lockState; getAndAddInt returns the value before the subtraction

//(READER|WAITER) = 0110 => this was the only reader and a writer is waiting,

//so the current thread is the last reader in the TreeBin

//2. (w = waiter) != null: a writer thread is parked, waiting for all reads to finish

if (U.getAndAddInt(this, LOCKSTATE, -READER) ==

(READER|WAITER) && (w = waiter) != null)

//Wake the waiting writer thread with unpark

LockSupport.unpark(w);

}

return p;

}

}

}

return null;

}
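The read-lock bookkeeping in find() is plain bit arithmetic on lockState. The sketch below models it with AtomicInteger instead of Unsafe; the constant values (WRITER = 1, WAITER = 2, READER = 4) match the JDK 8 TreeBin, but the class and method names are hypothetical, and parking and unparking threads is left out.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical sketch of TreeBin reader bookkeeping using AtomicInteger instead of Unsafe.
public class TreeBinLockStateSketch {
    static final int WRITER = 1; // set while a writer holds the lock
    static final int WAITER = 2; // set while a writer waits for the lock
    static final int READER = 4; // added once per active reader

    final AtomicInteger lockState = new AtomicInteger();

    /** Tries once to take a read lock; false means "scan the linked list instead". */
    boolean tryReadLock() {
        int s = lockState.get();
        if ((s & (WAITER | WRITER)) != 0) return false; // writer active or waiting
        return lockState.compareAndSet(s, s + READER);
    }

    /** Releases a read lock; true means this was the last reader and a writer is waiting. */
    boolean releaseReadLock() {
        // getAndAdd returns the value *before* READER is subtracted
        return lockState.getAndAdd(-READER) == (READER | WAITER);
    }

    public static void main(String[] args) {
        TreeBinLockStateSketch bin = new TreeBinLockStateSketch();
        System.out.println(bin.tryReadLock());     // true, lockState = 4
        bin.lockState.getAndAdd(WAITER);           // simulate a writer starting to wait: 6
        System.out.println(bin.tryReadLock());     // false, new readers back off
        System.out.println(bin.releaseReadLock()); // true, wake the writer; lockState = 2
    }
}
```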

summary

In Java 8, ConcurrentHashMap combines an array, linked lists, and red-black trees, and uses CAS together with synchronized to make concurrent writes thread-safe.

Red-black trees were introduced because a lookup in a linked list takes O(n) time, while a lookup in a red-black tree takes O(log n), so when a bucket holds many nodes a tree is much faster.

In a list bucket the nodes are Node objects forming a linked list. In a tree bucket the nodes are TreeNode objects forming a red-black tree, and the object stored in the table slot is a TreeBin.

When a bucket's linked list grows beyond 8 nodes and the table length is at least 64, the bucket is converted to a TreeBin. TreeBin acts as the root of the bucket and wraps the red-black tree (it holds the tree's root and its lock state). The table array of ConcurrentHashMap therefore stores the TreeBin object rather than a TreeNode.

The table array is lazily initialized: it is created only when the first element is added, so initTable() must guard against several threads initializing it at the same time.
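A minimal sketch of how that race can be resolved, modeled on the shape of JDK 8 initTable(): the thread that CASes sizeCtl to -1 allocates the array, and the others yield until the table becomes visible. It uses AtomicInteger in place of Unsafe, and the class name LazyInitSketch is hypothetical.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical sketch of race-free lazy initialization in the style of JDK 8 initTable().
public class LazyInitSketch {
    static final int DEFAULT_CAPACITY = 16;

    volatile Object[] table;                            // published by a volatile write
    final AtomicInteger sizeCtl = new AtomicInteger(0); // 0: use the default size

    Object[] initTable() {
        Object[] tab;
        while ((tab = table) == null || tab.length == 0) {
            int sc = sizeCtl.get();
            if (sc < 0) {
                Thread.yield();                         // another thread is initializing
            } else if (sizeCtl.compareAndSet(sc, -1)) { // we won the right to initialize
                try {
                    // Re-check: the table may have been created before our CAS
                    if ((tab = table) == null || tab.length == 0) {
                        int n = (sc > 0) ? sc : DEFAULT_CAPACITY;
                        tab = new Object[n];
                        table = tab;
                        sc = n - (n >>> 2);             // next resize threshold: 0.75 * n
                    }
                } finally {
                    sizeCtl.set(sc);                    // release the "initializing" marker
                }
                break;
            }
        }
        return tab;
    }

    public static void main(String[] args) {
        LazyInitSketch m = new LazyInitSketch();
        Object[] t = m.initTable();
        System.out.println(t.length + " " + m.sizeCtl.get()); // 16 12
    }
}
```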

The key field is sizeCtl, which controls table initialization and resizing:

● -1 means the table is being initialized; other threads yield and wait until initialization finishes.

● -N means that N-1 threads are resizing. Strictly speaking, during a resize only the low 16 bits count threads: the high 16 bits hold a resize stamp derived from the table length, and if the low 16 bits equal M, then M-1 threads are resizing.

● A non-negative value has two meanings: before the table is initialized it is the initial table size (0 means use the default, 16); after initialization it is the threshold for the next resize, which by default is three-quarters of the table length.
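The resize encoding of sizeCtl can be checked with a little arithmetic. The sketch below reuses the JDK 8 formula for resizeStamp(n) and the constants RESIZE_STAMP_BITS and RESIZE_STAMP_SHIFT from the member-variable list; activeResizers() is a hypothetical helper. In JDK 8 the first resizing thread sets sizeCtl to (rs << RESIZE_STAMP_SHIFT) + 2 and each helper adds 1.

```java
// Worked example of the sizeCtl resize encoding; activeResizers() is a hypothetical helper.
public class SizeCtlSketch {
    static final int RESIZE_STAMP_BITS = 16;
    static final int RESIZE_STAMP_SHIFT = 32 - RESIZE_STAMP_BITS;

    // Same formula as ConcurrentHashMap.resizeStamp(n) in JDK 8.
    static int resizeStamp(int n) {
        return Integer.numberOfLeadingZeros(n) | (1 << (RESIZE_STAMP_BITS - 1));
    }

    // Low 16 bits are M; M - 1 threads are resizing.
    static int activeResizers(int sizeCtl) {
        return (sizeCtl & 0xFFFF) - 1;
    }

    public static void main(String[] args) {
        int n = 16;
        int rs = resizeStamp(n);                                // 27 | 0x8000 = 32795
        int sc = (rs << RESIZE_STAMP_SHIFT) + 2;                // first resizing thread
        System.out.println(sc < 0);                             // true: the stamp's top bit makes sizeCtl negative
        System.out.println(activeResizers(sc));                 // 1
        sc += 1;                                                // a second thread joins
        System.out.println(activeResizers(sc));                 // 2
        System.out.println((sc >>> RESIZE_STAMP_SHIFT) == rs);  // true: high 16 bits hold the stamp
        System.out.println(n - (n >>> 2));                      // 12: threshold stored after initialization
    }
}
```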

transfer() expands capacity

transfer() moves the data in table into nextTable. The core of a resize is this data migration from the old array to the new one. What sets ConcurrentHashMap apart is that several threads can cooperate on the same resize: the table array is treated as a task queue shared by the threads, a shared index (transferIndex) hands each thread an interval of buckets (at least MIN_TRANSFER_STRIDE buckets wide), and each thread migrates its interval by traversing it from high index to low. Each migrated bucket is replaced with a ForwardingNode (hash MOVED) to signal to other threads that the bucket has already been migrated.
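The interval hand-out can be sketched with a single AtomicInteger standing in for transferIndex. This is a simplified model, not the JDK code: the class StrideClaimSketch is hypothetical, "migrating" a bucket is reduced to incrementing a counter, and the stride rule only mirrors the JDK's lower bound of MIN_TRANSFER_STRIDE.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicIntegerArray;

// Hypothetical sketch of cooperative resizing: threads claim stride-sized intervals
// from a shared transferIndex, top of the table first, and walk each interval downward.
public class StrideClaimSketch {
    static final int MIN_TRANSFER_STRIDE = 16;

    final int n;                        // old table length
    final int stride;                   // buckets per claimed interval
    final AtomicInteger transferIndex;  // upper bound of the next unclaimed interval
    final AtomicIntegerArray migrated;  // how many times each bucket was migrated

    StrideClaimSketch(int n, int stride) {
        this.n = n;
        this.stride = Math.max(stride, MIN_TRANSFER_STRIDE);
        this.transferIndex = new AtomicInteger(n);
        this.migrated = new AtomicIntegerArray(n);
    }

    void work() {
        while (true) {
            int next = transferIndex.get();
            if (next <= 0) return;                              // nothing left to claim
            int bound = Math.max(next - stride, 0);
            if (!transferIndex.compareAndSet(next, bound)) continue; // lost the race, retry
            for (int i = next - 1; i >= bound; i--) {           // reverse traversal of [bound, next)
                migrated.incrementAndGet(i);                    // stand-in for moving bucket i and installing a ForwardingNode
            }
        }
    }

    public static void main(String[] args) throws InterruptedException {
        StrideClaimSketch s = new StrideClaimSketch(256, 16);
        Thread[] ts = new Thread[4];
        for (int t = 0; t < ts.length; t++) { ts[t] = new Thread(s::work); ts[t].start(); }
        for (Thread t : ts) t.join();
        boolean allOnce = true;
        for (int i = 0; i < s.n; i++) allOnce &= s.migrated.get(i) == 1;
        System.out.println(allOnce); // true: every bucket migrated exactly once
    }
}
```

Because each interval is claimed by a successful CAS, no two threads ever own the same bucket, so no lock is needed to divide the work.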

helpTransfer() helps to expand capacity

When a thread adding an element finds that a resize is in progress (the target bucket holds a ForwardingNode), it calls helpTransfer() and joins the resize instead of waiting. This is multi-threaded expansion.

The table's default initial length is 16, and it is allocated when the first element is added. The bucket for an element is chosen from its hash and the table length: because the length is a power of two, (n - 1) & hash gives the same result as taking the (non-negative) hash modulo n. Elements that land in the same bucket are first stored as a linked list. Once a bucket holds more than 8 elements, the table is resized if its length is less than 64; if the length is 64 or more, the bucket's linked list is converted into a red-black tree.
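A short sketch of the index calculation and the resize-or-treeify decision. spread() and the constants follow JDK 8 ConcurrentHashMap; indexFor() and onLongList() are hypothetical helpers, and treating binCount as the count that putVal() compares against TREEIFY_THRESHOLD is a simplification.

```java
// Hypothetical helpers; spread() and the constants mirror JDK 8 ConcurrentHashMap.
public class BucketIndexSketch {
    static final int HASH_BITS = 0x7fffffff;  // usable bits of a normal node hash
    static final int TREEIFY_THRESHOLD = 8;
    static final int MIN_TREEIFY_CAPACITY = 64;

    // Mix the high 16 bits into the low bits and clear the sign bit.
    static int spread(int h) {
        return (h ^ (h >>> 16)) & HASH_BITS;
    }

    // For a power-of-two length n, (n - 1) & hash equals hash % n for a non-negative hash.
    static int indexFor(Object key, int n) {
        return (n - 1) & spread(key.hashCode());
    }

    // binCount: the bucket's node count as compared by putVal() (simplified assumption).
    static String onLongList(int binCount, int tableLength) {
        if (binCount < TREEIFY_THRESHOLD) return "keep list";
        return tableLength < MIN_TREEIFY_CAPACITY ? "resize table" : "treeify bucket";
    }

    public static void main(String[] args) {
        int n = 16;
        System.out.println(indexFor("hello", n) == spread("hello".hashCode()) % n); // true
        System.out.println(onLongList(8, 16)); // resize table
        System.out.println(onLongList(8, 64)); // treeify bucket
        System.out.println(onLongList(5, 64)); // keep list
    }
}
```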

Resizing spreads these nodes out: the elements are copied into the new, larger array, and each element of a bucket is repositioned by testing the bit of its hash that corresponds to the old table length n. If (hash & n) is 0 the element stays at its old index i; otherwise it moves to index i + n. In addition, if a bucket was a tree before the resize but now holds 6 or fewer key-value pairs (UNTREEIFY_THRESHOLD), the tree is converted back into a linked list.
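The low/high split can be verified with a few hashes that all share old bucket 5 in a 16-slot table. The class and method names are hypothetical, and the sketch only computes destinations; the real transfer() also builds the two lists (with a lastRun optimization), so node order inside a bucket is not modeled here.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: split one old bucket into "low" (index i) and "high" (index i + n)
// by testing the bit (hash & n), where n is the old table length.
public class SplitBucketSketch {
    static int newIndex(int hash, int i, int n) {
        return (hash & n) == 0 ? i : i + n;
    }

    public static void main(String[] args) {
        int n = 16;                      // old table length
        int i = 5;                       // old bucket index
        int[] hashes = {5, 21, 37, 53};  // all satisfy hash & (n - 1) == 5
        List<Integer> lo = new ArrayList<>(), hi = new ArrayList<>();
        for (int h : hashes) {
            int j = newIndex(h, i, n);
            // Same result as indexing the doubled table directly
            if (j != (h & (2 * n - 1))) throw new AssertionError();
            (j == i ? lo : hi).add(h);
        }
        System.out.println("lo -> " + i + ": " + lo);       // lo -> 5: [5, 37]
        System.out.println("hi -> " + (i + n) + ": " + hi); // hi -> 21: [21, 53]
    }
}
```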

put()

In JDK 1.8, put() uses CAS spinning to install a node into an empty bucket and the synchronized intrinsic lock (held on the bucket's head node) to make operations inside a non-empty bucket thread-safe. Although contention on the map as a whole can be intense, contention within a single bucket is usually low, so CAS spinning plus synchronized does not hurt ConcurrentHashMap's performance. Why not use explicit ReentrantLock locks? Creating a ReentrantLock instance for every bucket would consume a large amount of memory, whereas CAS spinning with synchronized adds little memory overhead.
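The locking pattern can be shown in a heavily simplified map. This is not the JDK implementation: CasOrLockMap is hypothetical, it uses java.util.concurrent.atomic.AtomicReferenceArray in place of Unsafe, and it has no resizing, treeification, or removal. It only demonstrates "CAS into an empty bucket, otherwise synchronized on the head node".

```java
import java.util.concurrent.atomic.AtomicReferenceArray;

// Hypothetical, simplified map showing the JDK 8 put() pattern:
// CAS into an empty bucket, synchronized on the head node otherwise.
public class CasOrLockMap<K, V> {
    static final class Node<K, V> {
        final int hash;
        final K key;
        volatile V val;
        volatile Node<K, V> next;
        Node(int hash, K key, V val, Node<K, V> next) {
            this.hash = hash; this.key = key; this.val = val; this.next = next;
        }
    }

    private final AtomicReferenceArray<Node<K, V>> table;

    public CasOrLockMap(int powerOfTwoCapacity) {
        table = new AtomicReferenceArray<>(powerOfTwoCapacity);
    }

    static int spread(int h) { return (h ^ (h >>> 16)) & 0x7fffffff; }

    public V put(K key, V value) {
        int hash = spread(key.hashCode());
        int i = (table.length() - 1) & hash;
        for (;;) {
            Node<K, V> f = table.get(i);                 // volatile read, like tabAt()
            if (f == null) {
                // Empty bucket: install the first node with CAS, no lock
                if (table.compareAndSet(i, null, new Node<>(hash, key, value, null)))
                    return null;
                // CAS failed: another thread won the slot; spin and retry
            } else {
                synchronized (f) {                       // lock only this bucket's head
                    if (table.get(i) != f) continue;     // head changed meanwhile; retry
                    for (Node<K, V> e = f;; e = e.next) {
                        if (e.hash == hash && key.equals(e.key)) {
                            V old = e.val;
                            e.val = value;
                            return old;
                        }
                        if (e.next == null) {
                            e.next = new Node<>(hash, key, value, null);
                            return null;
                        }
                    }
                }
            }
        }
    }

    public V get(Object key) {
        int hash = spread(key.hashCode());
        for (Node<K, V> e = table.get((table.length() - 1) & hash); e != null; e = e.next)
            if (e.hash == hash && key.equals(e.key)) return e.val;
        return null;
    }
}
```

Only writers to the same bucket ever block each other, and the lock object is a node that already exists, so no per-bucket lock objects are allocated.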

get()

get() reads array elements through Unsafe's getObjectVolatile(). Why? Although the reference to the Node array (table) is declared volatile, the array elements themselves are not volatile, so plain reads of elements are not safe while other threads modify the array: a reader could see a stale element or one that has not been safely published. get() therefore reads elements with Unsafe.getObjectVolatile(), which requires the element's byte offset within the array. get() computes the bucket index from the hash code ((n - 1) & h), converts it to an offset (((long) i << ASHIFT) + ABASE), and reads the element at that offset.
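A worked example of that offset arithmetic. In JDK 8, ABASE comes from Unsafe.arrayBaseOffset(Node[].class) and ASHIFT is derived from Unsafe.arrayIndexScale(Node[].class); the values below (ABASE = 16, scale = 4) are assumptions, typical of a 64-bit HotSpot JVM with compressed oops, and are not read from a real JVM. The class name is hypothetical.

```java
// Worked example of the element-offset arithmetic used by tabAt() in JDK 8.
// ABASE = 16 and SCALE = 4 are ASSUMED values, not queried from Unsafe.
public class ArrayOffsetSketch {
    static final long ABASE = 16;  // assumed arrayBaseOffset(Node[].class)
    static final int SCALE = 4;    // assumed arrayIndexScale(Node[].class)
    static final int ASHIFT = 31 - Integer.numberOfLeadingZeros(SCALE); // log2(4) = 2

    static long offsetFor(int hash, int n) {
        int i = (n - 1) & hash;               // bucket index, as in get()
        return ((long) i << ASHIFT) + ABASE;  // byte offset passed to getObjectVolatile
    }

    public static void main(String[] args) {
        System.out.println(ASHIFT);             // 2
        System.out.println(offsetFor(37, 16));  // index 5 -> 16 + 5 * 4 = 36
    }
}
```

Shifting by ASHIFT instead of multiplying by the scale works because the JDK requires the scale to be a power of two.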