Contents

1,FastHashCode / identity_hash_value_for

2,get_next_hash

3,inflate / inflate_helper

4,omAlloc / omRelease / omFlush

5,InduceScavenge / deflate_idle_monitors / deflate_monitor / walk_monitor_list

This blog post covers five native methods of java.lang.Object: hashCode, wait, notify, notifyAll and clone. ObjectSynchronizer provides the underlying implementation of the first four. The post explains in detail how all five are implemented.

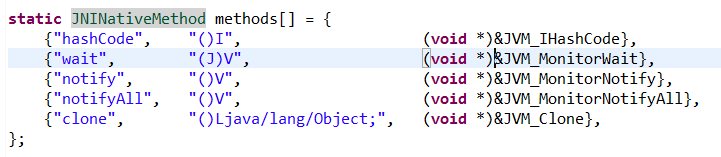

1, Object.c

The native methods of java.lang.Object are registered in jdk\src\share\native\java\lang\Object.c. Its JNINativeMethod array maps each native method to the C function that implements it. For example, hashCode maps to JVM_IHashCode and clone maps to JVM_Clone.

The first four methods are implemented as follows:

```cpp
JVM_ENTRY(jint, JVM_IHashCode(JNIEnv* env, jobject handle))
  JVMWrapper("JVM_IHashCode");
  // Resolve the JNI handle to the underlying oop
  return handle == NULL ? 0 : ObjectSynchronizer::FastHashCode (THREAD, JNIHandles::resolve_non_null(handle)) ;
JVM_END

JVM_ENTRY(void, JVM_MonitorWait(JNIEnv* env, jobject handle, jlong ms))
  JVMWrapper("JVM_MonitorWait");
  Handle obj(THREAD, JNIHandles::resolve_non_null(handle));
  JavaThreadInObjectWaitState jtiows(thread, ms != 0);
  if (JvmtiExport::should_post_monitor_wait()) {
    // Post the JVMTI MonitorWait event
    JvmtiExport::post_monitor_wait((JavaThread *)THREAD, (oop)obj(), ms);
  }
  ObjectSynchronizer::wait(obj, ms, CHECK);
JVM_END

JVM_ENTRY(void, JVM_MonitorNotify(JNIEnv* env, jobject handle))
  JVMWrapper("JVM_MonitorNotify");
  Handle obj(THREAD, JNIHandles::resolve_non_null(handle));
  ObjectSynchronizer::notify(obj, CHECK);
JVM_END

JVM_ENTRY(void, JVM_MonitorNotifyAll(JNIEnv* env, jobject handle))
  JVMWrapper("JVM_MonitorNotifyAll");
  Handle obj(THREAD, JNIHandles::resolve_non_null(handle));
  ObjectSynchronizer::notifyall(obj, CHECK);
JVM_END
```

The JVM_ENTRY and VM_ENTRY_BASE macros used above are defined as follows:

```cpp
// ThreadInVMfromNative switches the thread state from native to VM and checks for a safepoint
#define JVM_ENTRY(result_type, header)                               \
extern "C" {                                                         \
  result_type JNICALL header {                                       \
    JavaThread* thread=JavaThread::thread_from_jni_environment(env); \
    ThreadInVMfromNative __tiv(thread);                              \
    debug_only(VMNativeEntryWrapper __vew;)                          \
    VM_ENTRY_BASE(result_type, header, thread)

// HandleMarkCleaner restores the previous HandleMark when the method returns,
// which releases the Handles allocated temporarily during the call
#define VM_ENTRY_BASE(result_type, header, thread)                   \
  TRACE_CALL(result_type, header)                                    \
  HandleMarkCleaner __hm(thread);                                    \
  Thread* THREAD = thread;                                           \
  os::verify_stack_alignment();                                      \
  /* begin of body */
```

2, JVM_Clone

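JVM_Clone implements Object.clone. At its core, it treats the object's memory as an array of jlongs and copies that array into a newly allocated object. Only the object's own fields are copied. A reference-type field is copied as a pointer value, so the copy refers to the same objects as the original. That is why the JVM's clone performs a shallow copy. The implementation is as follows: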

JVM_ENTRY(jobject, JVM_Clone(JNIEnv* env, jobject handle))

JVMWrapper("JVM_Clone");

//Get object oop to be copied

Handle obj(THREAD, JNIHandles::resolve_non_null(handle));

//Get klass of the object to be copied

const KlassHandle klass (THREAD, obj->klass());

JvmtiVMObjectAllocEventCollector oam;

//Judge whether this class can be clone. Array objects can be clone by default

if (!klass->is_cloneable()) {

ResourceMark rm(THREAD);

//Throw an exception if clone is not allowed

THROW_MSG_0(vmSymbols::java_lang_CloneNotSupportedException(), klass->external_name());

}

// Make shallow object copy

ReferenceType ref_type = REF_NONE;

const int size = obj->size();

oop new_obj_oop = NULL;

if (obj->is_array()) {

//If it's an array

const int length = ((arrayOop)obj())->length();

//Assign a new array of objects

new_obj_oop = CollectedHeap::array_allocate(klass, size, length, CHECK_NULL);

} else {

ref_type = InstanceKlass::cast(klass())->reference_type();

//Both conditions are either true or false, that is, they are either normal Java objects or Reference and its subclasses

assert((ref_type == REF_NONE) ==

!klass->is_subclass_of(SystemDictionary::Reference_klass()),

"invariant");

//Assign a new object

new_obj_oop = CollectedHeap::obj_allocate(klass, size, CHECK_NULL);

}

assert(MinObjAlignmentInBytes >= BytesPerLong, "objects misaligned");

//The bottom layer of atom copying object data is implemented by assembly instruction fildll and fistpll, because at this time, some other thread may be modifying object properties

//The purpose of conversion to jlong array replication is to ensure that the 32-bit system can also copy 8 bytes of data atomically, and the 32-bit system can copy up to 4 bytes of data at the next time

Copy::conjoint_jlongs_atomic((jlong*)obj(), (jlong*)new_obj_oop,

(size_t)align_object_size(size) / HeapWordsPerLong);

//Set object header

new_obj_oop->init_mark();

BarrierSet* bs = Universe::heap()->barrier_set();

assert(bs->has_write_region_opt(), "Barrier set does not have write_region");

//Mark the corresponding area of card table as dirty

bs->write_region(MemRegion((HeapWord*)new_obj_oop, size));

if (ref_type != REF_NONE) {

//If it is a Reference and its subclass, special treatment shall be made to its related properties

fixup_cloned_reference(ref_type, obj(), new_obj_oop);

}

Handle new_obj(THREAD, new_obj_oop);

//If the clone object is a Java Lang invoke membername instance, the class can only be accessed in the invoke package class

if (java_lang_invoke_MemberName::is_instance(new_obj()) &&

java_lang_invoke_MemberName::is_method(new_obj())) {

Method* method = (Method*)java_lang_invoke_MemberName::vmtarget(new_obj());

// MemberName may be unresolved, so doesn't need registration until resolved.

if (method != NULL) {

methodHandle m(THREAD, method);

m->method_holder()->add_member_name(new_obj(), false);

}

}

if (klass->has_finalizer()) {

//If this class overrides the finalize method of the Object

assert(obj->is_instance(), "should be instanceOop");

//Assign an associated Finalizer instance to this instance. The register Finalizer method returns new obj. The Finalizer.register method called at the bottom is void

new_obj_oop = InstanceKlass::register_finalizer(instanceOop(new_obj()), CHECK_NULL);

new_obj = Handle(THREAD, new_obj_oop);

}

//Returns a local JNI reference

return JNIHandles::make_local(env, new_obj());

JVM_END

bool Klass::is_cloneable() const {

return _access_flags.is_cloneable() ||//The array class klass and the common Java class that implements the java.lang.clonable interface are marked as clone

is_subtype_of(SystemDictionary::Cloneable_klass()); //Only for Reference and its subclasses

}

static void fixup_cloned_reference(ReferenceType ref_type, oop src, oop clone) {

#if INCLUDE_ALL_GCS

if (UseG1GC) {

oop referent = java_lang_ref_Reference::referent(clone);

if (referent != NULL) {

//If it's G1 algorithm, it needs to queue non empty referent s

G1SATBCardTableModRefBS::enqueue(referent);

}

}

#endif // INCLUDE_ALL_GCS

if ((java_lang_ref_Reference::next(clone) != NULL) ||

(java_lang_ref_Reference::queue(clone) == java_lang_ref_ReferenceQueue::ENQUEUED_queue())) {

// If the clone is inactive, set its queue to ReferenceQueue.NULL, because the currently replicated instance is not actually added to ReferenceQueue

java_lang_ref_Reference::set_queue(clone, java_lang_ref_ReferenceQueue::NULL_queue());

}

//Set the discovered and next properties to NULL

java_lang_ref_Reference::set_discovered(clone, NULL);

java_lang_ref_Reference::set_next(clone, NULL);

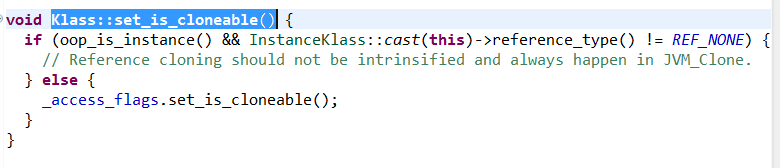

}The implementation of the Klass:: set ﹣ is ﹣ clonable() method corresponding to the Klass:: is ﹣ clonable() method is as follows:

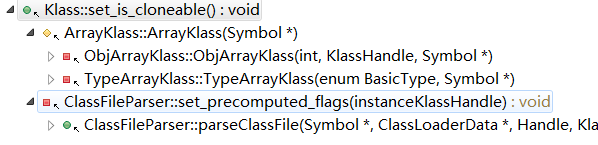

For java.lang.ref.Reference and its subclasses, execution enters the if branch, which does not set the cloneable flag. Ordinary classes, whose ReferenceType is REF_NONE, enter the else branch, which sets it. The call chain of this method is as follows:

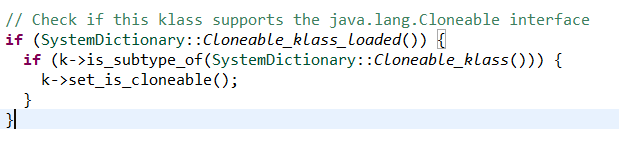

ArrayKlass calls it unconditionally, so all array classes are cloneable by default. ClassFileParser calls it only when a condition holds, as shown in the following figure:

The condition first calls SystemDictionary::Cloneable_klass_loaded() to check whether the Klass of the java.lang.Cloneable interface has been loaded (it is loaded during JVM startup). It then calls is_subtype_of to check whether the class implements Cloneable. If it does, the cloneable bit is set in the class's access flags, marking the class as cloneable.
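The following C++ sketch shows why copying an object as raw 8-byte words produces a shallow copy. It models an object as a plain struct whose size is a multiple of 8 bytes, copies it one word at a time, and then resets the header. This loosely mirrors Copy::conjoint_jlongs_atomic followed by init_mark(). The names ModelObject, Payload and shallow_clone, the field layout, and the header value 1 are assumptions made for illustration. Unlike HotSpot, this copy is not atomic, and no GC barrier is involved.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

// Hypothetical model of a heap object: a header word followed by two fields
struct Payload { int value; };

struct ModelObject {
  std::uint64_t header;  // stands in for the mark word
  std::int64_t id;       // a primitive field
  Payload* ref;          // a reference field
};

// JVM_Clone copies whole 8-byte words, so the model must be a multiple of 8 bytes
static_assert(sizeof(ModelObject) % sizeof(std::uint64_t) == 0,
              "object size must be a multiple of 8 bytes");

constexpr std::uint64_t kInitialHeader = 1;  // assumed "unlocked, no hash" header

ModelObject* shallow_clone(const ModelObject* src) {
  assert(src != nullptr);
  ModelObject* dst = new ModelObject();
  const unsigned char* from = reinterpret_cast<const unsigned char*>(src);
  unsigned char* to = reinterpret_cast<unsigned char*>(dst);
  // Copy one 8-byte word at a time (not atomic in this sketch)
  for (std::size_t off = 0; off < sizeof(ModelObject); off += sizeof(std::uint64_t)) {
    std::uint64_t word;
    std::memcpy(&word, from + off, sizeof word);
    std::memcpy(to + off, &word, sizeof word);
  }
  // Like init_mark(): the copy must not inherit the source's header state
  dst->header = kInitialHeader;
  return dst;
}
```

The copy's ref field holds the same pointer as the source's, so both objects share one Payload. The Java tests in the next section show the same behavior for super.clone().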

3, Shallow copy and deep copy

A shallow copy copies only the fields themselves. A reference-type field in the copy therefore holds the same reference as in the original and points to the same object. A deep copy goes further: it also copies the objects that reference-type fields point to, so the copy's reference-type fields point to new objects. The test cases are as follows:

```java
package jni;

import java.io.Serializable;

import org.junit.Test;

// Serializable is only needed by the serialization-based deep copy (test3)
class TestA implements Cloneable, Serializable {
    @Override
    public TestA clone() throws CloneNotSupportedException {
        return (TestA) super.clone();
    }
}

class TestB implements Cloneable, Serializable {
    @Override
    public TestB clone() throws CloneNotSupportedException {
        return (TestB) super.clone();
    }
}

class TestC implements Cloneable, Serializable {
    public TestA testA;
    public TestB testB;

    public TestC(TestA testA, TestB testB) {
        this.testA = testA;
        this.testB = testB;
    }

    @Override
    public TestC clone() throws CloneNotSupportedException {
        // Use Object's default (shallow) implementation
        return (TestC) super.clone();
    }
}

class TestD implements Cloneable {
    public TestA testA;
    public TestB testB;

    public TestD(TestA testA, TestB testB) {
        this.testA = testA;
        this.testB = testB;
    }

    @Override
    public TestD clone() throws CloneNotSupportedException {
        // Object's default implementation copies every field; the reference fields
        // still point to the original objects
        TestD testD = (TestD) super.clone();
        // Replace each reference-type field with a copy of the object it points to
        testD.testA = testA.clone();
        testD.testB = testB.clone();
        return testD;
    }
}

public class CloneTest {
    @Test
    public void test() throws Exception {
        TestA a = new TestA();
        TestB b = new TestB();
        TestC c = new TestC(a, b);
        TestC c2 = c.clone();
        // Shallow copy: c2 is a new TestC object, so this prints false
        System.out.println(c2 == c);
        // c2's reference-type fields point to the same objects as c's, so both print true
        System.out.println(c2.testA == c.testA);
        System.out.println(c2.testB == c.testB);
    }

    @Test
    public void test2() throws Exception {
        TestA a = new TestA();
        TestB b = new TestB();
        TestD c = new TestD(a, b);
        TestD c2 = c.clone();
        // Deep copy: everything points to different objects, so all three print false
        System.out.println(c2 == c);
        System.out.println(c2.testA == c.testA);
        System.out.println(c2.testB == c.testB);
    }
}
```

This deep copy requires the class of every reference-type field to override Object's clone method. If a superclass of one of these classes has private reference-type fields, that superclass must also override clone to copy them, which is cumbersome. Alternatively, a deep copy can be implemented in the following two ways:

```java
// Two more test methods for CloneTest. They additionally require
//   import java.io.*;
//   import com.alibaba.fastjson.JSON;  // the JSON class is assumed to be Alibaba fastjson
@Test
public void test3() throws Exception {
    TestA a = new TestA();
    TestB b = new TestB();
    TestC c = new TestC(a, b);
    // Deep copy via Java object serialization: TestA, TestB and TestC must all implement
    // Serializable. An in-memory byte array avoids the deadlock risk of driving a
    // PipedInputStream/PipedOutputStream pair from a single thread
    ByteArrayOutputStream buffer = new ByteArrayOutputStream();
    try (ObjectOutputStream out = new ObjectOutputStream(buffer)) {
        out.writeObject(c);
    }
    TestC c2;
    try (ObjectInputStream in = new ObjectInputStream(
            new ByteArrayInputStream(buffer.toByteArray()))) {
        c2 = (TestC) in.readObject();
    }
    // Everything points to new objects, so all three print false
    System.out.println(c2 == c);
    System.out.println(c2.testA == c.testA);
    System.out.println(c2.testB == c.testB);
}

@Test
public void test4() throws Exception {
    TestA a = new TestA();
    TestB b = new TestB();
    TestC c = new TestC(a, b);
    // Deep copy via JSON serialization
    String str = JSON.toJSONString(c);
    TestC c2 = JSON.parseObject(str, TestC.class);
    // Everything points to new objects, so all three print false
    System.out.println(c2 == c);
    System.out.println(c2.testA == c.testA);
    System.out.println(c2.testB == c.testB);
}
```

There are many open-source implementations of both object serialization and JSON serialization. Besides these two approaches there is a third: use reflection to traverse all of an object's fields. A primitive field's value can be copied directly. For a reference-type field, get the class of the referenced object, create a new instance of it through reflection, and process that instance's fields in the same way. This approach is complex and has a large performance cost, so it is usually better to use object serialization directly. JSON serialization is not well suited to classes with complex nested structures.

4, ObjectSynchronizer

ObjectSynchronizer is defined in hotspot\src\share\vm\runtime\synchronizer.hpp. Its static fields are as follows:

- static ObjectMonitor* gBlockList; // global list of all ObjectMonitor blocks. Each element is the first element of an ObjectMonitor array, so every ObjectMonitor instance can be reached through it

- static ObjectMonitor * volatile gFreeList; // global linked list of free ObjectMonitors

- static ObjectMonitor * volatile gOmInUseList; // global linked list of in-use ObjectMonitors, populated only when MonitorInUseLists is true

- static int gOmInUseCount; // number of elements in gOmInUseList

- static volatile intptr_t ListLock = 0; // lock used when operating on gFreeList

- static volatile int MonitorFreeCount = 0; // number of elements in gFreeList

- static volatile int MonitorPopulation = 0; // total number of ObjectMonitors created

This class implements the wait/notify methods of Object, the monitorenter/monitorexit bytecode instructions underlying the synchronized keyword, the JNI locking functions jni_MonitorEnter/jni_MonitorExit, and Unsafe_MonitorEnter of the Unsafe class. This blog post focuses on the methods used by the native methods of Object.

1,FastHashCode / identity_hash_value_for

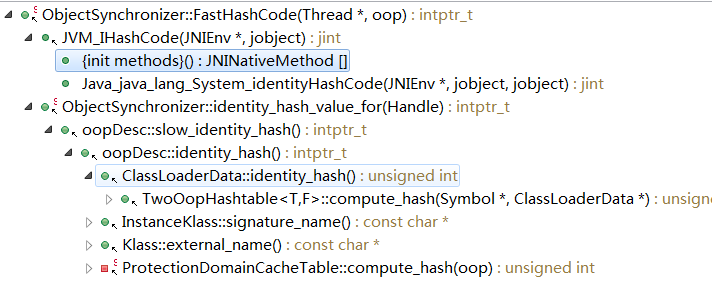

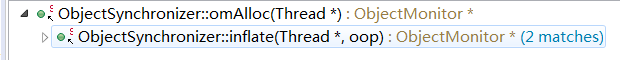

FastHashCode is the underlying implementation of Object.hashCode and System.identityHashCode. The JVM uses identity_hash_value_for internally to obtain an oop's hash code, for example when generating the name of an anonymous class or computing the key when an oop is put into a map. It is also implemented on top of FastHashCode. The call chain of FastHashCode is as follows:

The implementation is as follows:

```cpp
intptr_t ObjectSynchronizer::identity_hash_value_for(Handle obj) {
  return FastHashCode (Thread::current(), obj()) ;
}

intptr_t ObjectSynchronizer::FastHashCode (Thread * Self, oop obj) {
  // UseBiasedLocking indicates whether biased locking is enabled
  if (UseBiasedLocking) {
    if (obj->mark()->has_bias_pattern()) {
      // Box and unbox the raw reference just in case we cause a STW safepoint.
      Handle hobj (Self, obj) ;
      // Relaxing assertion for bug 6320749.
      assert (Universe::verify_in_progress() ||
              !SafepointSynchronize::is_at_safepoint(),
             "biases should not be seen by VM thread here");
      // Revoke the biased lock
      BiasedLocking::revoke_and_rebias(hobj, false, JavaThread::current());
      obj = hobj() ;
      assert(!obj->mark()->has_bias_pattern(), "biases should be revoked by now");
    }
  }

  // hashCode() is a heap mutator ...
  // Relaxing assertion for bug 6320749.
  assert (Universe::verify_in_progress() ||
          !SafepointSynchronize::is_at_safepoint(), "invariant") ;
  assert (Universe::verify_in_progress() ||
          Self->is_Java_thread() , "invariant") ;
  assert (Universe::verify_in_progress() ||
         ((JavaThread *)Self)->thread_state() != _thread_blocked, "invariant") ;

  ObjectMonitor* monitor = NULL;
  markOop temp, test;
  intptr_t hash;
  // If the object is being inflated, wait (spin, yield or block) until inflation completes
  markOop mark = ReadStableMark (obj);

  // object should remain ineligible for biased locking
  assert (!mark->has_bias_pattern(), "invariant") ;

  if (mark->is_neutral()) {
    // The object is unlocked
    hash = mark->hash();              // this is a normal header
    if (hash) {                       // if it has hash, just return it
      // The hash code is already stored in the object header: return it
      return hash;
    }
    // No hash code in the header yet: compute a new one
    hash = get_next_hash(Self, obj);  // allocate a new hash code
    // Build a new header that contains the hash code
    temp = mark->copy_set_hash(hash); // merge the hash code into header
    // Atomically install the new header
    test = (markOop) Atomic::cmpxchg_ptr(temp, obj->mark_addr(), mark);
    if (test == mark) {
      // CAS succeeded
      return hash;
    }
    // CAS failed: another thread modified the object header, so fall through to inflation
  } else if (mark->has_monitor()) {
    // The object has a monitor (heavyweight) lock: get the ObjectMonitor from the header
    monitor = mark->monitor();
    // The ObjectMonitor keeps the object's original header
    temp = monitor->header();
    assert (temp->is_neutral(), "invariant") ;
    hash = temp->hash();
    if (hash) {
      // The original header already contains a hash code: return it
      return hash;
    }
    // No hash code in the original header: fall through
  } else if (Self->is_lock_owned((address)mark->locker())) {
    // The current thread holds a lightweight lock. The header then contains a pointer into
    // the thread's stack, where the object's original (displaced) header is saved;
    // displaced_mark_helper reads that original header
    temp = mark->displaced_mark_helper(); // this is a lightweight monitor owned
    assert (temp->is_neutral(), "invariant") ;
    hash = temp->hash();              // by current thread, check if the displaced
    if (hash) {                       // header contains hash code
      return hash;
    }
  }

  // Inflate the lock to obtain the ObjectMonitor associated with the object
  monitor = ObjectSynchronizer::inflate(Self, obj);
  // Get the object's original header saved in the monitor
  mark = monitor->header();
  assert (mark->is_neutral(), "invariant") ;
  hash = mark->hash();
  if (hash == 0) {
    // No hash code yet: generate one
    hash = get_next_hash(Self, obj);
    // Store the hash code in the saved header
    temp = mark->copy_set_hash(hash); // merge hash code into header
    assert (temp->is_neutral(), "invariant") ;
    test = (markOop) Atomic::cmpxchg_ptr(temp, monitor, mark);
    if (test != mark) {
      // CAS failed: another thread has already installed a hash code, so use that one
      hash = test->hash();
      assert (test->is_neutral(), "invariant") ;
      assert (hash != 0, "Trivial unexpected object/monitor header usage.");
    }
  }
  // We finally get the hash
  return hash;
}

static markOop ReadStableMark (oop obj) {
  markOop mark = obj->mark() ;
  if (!mark->is_being_inflated()) {
    // Not being inflated: return directly. Inflation is a very short intermediate state
    return mark ;       // normal fast-path return
  }

  int its = 0 ;
  for (;;) {
    markOop mark = obj->mark() ;
    if (!mark->is_being_inflated()) {
      // Inflation has finished: return the mark
      return mark ;    // normal fast-path return
    }

    // The object is being inflated; the caller must wait for inflation to complete
    ++its ;
    if (its > 10000 || !os::is_MP()) {
      // More than 10000 iterations, or a single-processor system
      if (its & 1) {
        // On odd iterations, yield the CPU
        os::NakedYield() ;
        TEVENT (Inflate: INFLATING - yield) ;
      } else {
        // Use the object address to pick one of the inflation locks
        int ix = (cast_from_oop<intptr_t>(obj) >> 5) & (NINFLATIONLOCKS-1) ;
        int YieldThenBlock = 0 ;
        assert (ix >= 0 && ix < NINFLATIONLOCKS, "invariant") ;
        assert ((NINFLATIONLOCKS & (NINFLATIONLOCKS-1)) == 0, "invariant") ;
        // Acquire that inflation lock
        Thread::muxAcquire (InflationLocks + ix, "InflationLock") ;
        while (obj->mark() == markOopDesc::INFLATING()) {
          // Still being inflated: yield the first 16 times, then park
          if ((YieldThenBlock++) >= 16) {
            Thread::current()->_ParkEvent->park(1) ;
          } else {
            os::NakedYield() ;
          }
        }
        // Release the inflation lock
        Thread::muxRelease (InflationLocks + ix ) ;
        TEVENT (Inflate: INFLATING - yield/park) ;
      }
    } else {
      // Spin; on some platforms SpinPause() simply returns 0
      SpinPause() ;       // SMP-polite spinning
    }
  }
}

bool is_being_inflated() const { return (value() == 0); }

static markOop INFLATING() { return (markOop) 0; }

// Array of inflation locks; each lock works by atomically updating its array element
#define NINFLATIONLOCKS 256
static volatile intptr_t InflationLocks [NINFLATIONLOCKS] ;

bool is_neutral() const { return (mask_bits(value(), biased_lock_mask_in_place) == unlocked_value); }

bool has_monitor() const {
  return ((value() & monitor_value) != 0);
}

bool Thread::is_lock_owned(address adr) const {
  return on_local_stack(adr);
}

markOop displaced_mark_helper() const {
  assert(has_displaced_mark_helper(), "check");
  // Clear the monitor bit from the header value to get the pointer
  intptr_t ptr = (value() & ~monitor_value);
  // Treat ptr as a markOop* and dereference it: the result is the object's original header
  return *(markOop*)ptr;
}
```

FastHashCode behaves differently depending on whether the object is locked and, if so, by which kind of lock:

- Unlocked: if the object header already contains a hash code, return it; otherwise generate one and install it in the header with a CAS.

- Lightweight lock held by the current thread: the header points to a lock record in the current thread's stack that holds the object's original header. If that original header contains a hash code, return it.

- Monitor (heavyweight) lock: the header points to the ObjectMonitor, which keeps the object's original header. If that header contains a hash code, return it.

In every remaining case (no hash code found under a lock, the CAS failed, or another thread holds the lightweight lock), the lock is inflated to a monitor lock. The hash code is then read from the original header kept by the ObjectMonitor, or generated and stored there.
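The branches of FastHashCode are driven by the low bits of the mark word. The sketch below decodes a mark word value in roughly the order FastHashCode examines it, and reads or sets the hash field. The bit assignments follow my reading of the 64-bit markOop.hpp layout and should be treated as assumptions: lock bits 01 for unlocked, 00 for stack-locked and 10 for inflated, the biased pattern 101, a 31-bit hash starting at bit 8, and a whole-word 0 meaning INFLATING. The names MarkState, classify, hash_of and copy_set_hash are hypothetical; they only loosely mirror the markOopDesc methods.

```cpp
#include <cassert>
#include <cstdint>

using Mark = std::uint64_t;

constexpr Mark kLockMask = 0x3;       // lock bits
constexpr Mark kBiasedMask = 0x7;     // lock bits plus the biased_lock bit
constexpr Mark kUnlocked = 0x1;       // 001: neutral
constexpr Mark kBiasedPattern = 0x5;  // 101: biased
constexpr Mark kMonitorBits = 0x2;    // 10: inflated, header points to a monitor
constexpr unsigned kHashShift = 8;    // assumed 64-bit layout
constexpr Mark kHashMask = (Mark{1} << 31) - 1;  // assumed 31 hash bits

enum class MarkState { Inflating, Biased, Neutral, Inflated, StackLocked, GcMarked };

// Decode a mark word in roughly the order FastHashCode examines it
MarkState classify(Mark m) {
  if (m == 0) return MarkState::Inflating;                  // is_being_inflated()
  if ((m & kBiasedMask) == kBiasedPattern) return MarkState::Biased;
  if ((m & kBiasedMask) == kUnlocked) return MarkState::Neutral;
  if ((m & kLockMask) == kMonitorBits) return MarkState::Inflated;
  if ((m & kLockMask) == 0) return MarkState::StackLocked;  // points to a lock record
  return MarkState::GcMarked;                               // 11: used during GC
}

// Like markOopDesc::hash(): 0 means "no hash yet"
Mark hash_of(Mark m) { return (m >> kHashShift) & kHashMask; }

// Like markOopDesc::copy_set_hash(): return a new header that carries hash h
Mark copy_set_hash(Mark m, Mark h) {
  assert(h != 0 && h <= kHashMask);
  return (m & ~(kHashMask << kHashShift)) | (h << kHashShift);
}
```

Because a hash field of 0 means "no hash yet", the generator that fills it must never return 0. This is why get_next_hash substitutes 0xBAD.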

2,get_next_hash

The get_next_hash method generates a new hash code. Its behavior depends on the hashCode JVM option. In JDK 8 the default value of this option is 5, which uses Marsaglia's xor-shift random number algorithm to generate the hash code. The result has nothing to do with the object's memory address. The implementation is as follows:
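Stripped of the HotSpot types, the default branch (hashCode=5) reduces to the standalone C++ sketch below. The HotSpot source follows it. HashState and next_hash are hypothetical names. The 31-bit mask assumes the hash field of 64-bit HotSpot, and the seeds used in the test are arbitrary rather than the values Thread::Thread() uses.

```cpp
#include <cassert>
#include <cstdint>

// Per-thread xor-shift state, like Thread::_hashStateX .. _hashStateW
struct HashState {
  std::uint32_t x, y, z, w;
};

constexpr std::uint32_t kHashMask = 0x7FFFFFFFu;  // assumed 31-bit hash field

std::uint32_t next_hash(HashState& s) {
  std::uint32_t t = s.x;
  t ^= (t << 11);
  // Shift the state along: x <- y, y <- z, z <- w
  s.x = s.y;
  s.y = s.z;
  s.z = s.w;
  std::uint32_t v = s.w;
  v = (v ^ (v >> 19)) ^ (t ^ (t >> 8));
  s.w = v;  // the new w feeds the next call
  // Keep only the bits that fit in the header; 0 would mean "no hash"
  std::uint32_t value = v & kHashMask;
  if (value == 0) value = 0xBAD;
  assert(value != 0);
  return value;
}
```

Because the state belongs to one thread and advances on every call, an identity hash code generated this way carries no information about the object's address.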

static inline intptr_t get_next_hash(Thread * Self, oop obj) {

intptr_t value = 0 ;

//hashCode is a configuration option, starting with JDK6, with a default value of 5

if (hashCode == 0) {

//Get random number

value = os::random() ;

} else

if (hashCode == 1) {

//Calculated by object address

intptr_t addrBits = cast_from_oop<intptr_t>(obj) >> 3 ;

value = addrBits ^ (addrBits >> 5) ^ GVars.stwRandom ;

} else

if (hashCode == 2) {

value = 1 ; // for sensitivity testing

} else

if (hashCode == 3) {

//Returns a sequence value

value = ++GVars.hcSequence ;

} else

if (hashCode == 4) {

//Return object address directly

value = cast_from_oop<intptr_t>(obj) ;

} else {

// Marsaglia's XOR shift algorithm, default implementation

//_hashStateX is a random number generated when a thread is created,

unsigned t = Self->_hashStateX ;

t ^= (t << 11) ;

//_Hashstatey, hashstatez, fixed value when hashstatew is initialized

//Change the properties of hashStateX, hashStateY, and hashStateZ dynamically by resetting hashStateW

Self->_hashStateX = Self->_hashStateY ;

Self->_hashStateY = Self->_hashStateZ ;

Self->_hashStateZ = Self->_hashStateW ;

unsigned v = Self->_hashStateW ;

v = (v ^ (v >> 19)) ^ (t ^ (t >> 8)) ;

Self->_hashStateW = v ;

value = v ;

}

//Because the hash value is saved in the specific bit of the object header, here and operate to check whether the hash value of the corresponding bit is 0

value &= markOopDesc::hash_mask;

if (value == 0) value = 0xBAD ;

assert (value != markOopDesc::no_hash, "invariant") ;

TEVENT (hashCode: GENERATE) ;

return value;

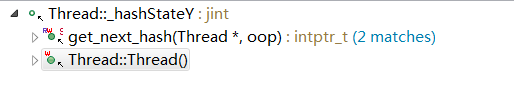

}The call chain of Thread's ﹐ hashStateY property is as follows:

The initialization logic in the Thread::Thread() method is as follows:

The method above is the default way of generating hash codes. In practice, you can override hashCode as needed. Take String's hash code as an example. With database sharding (splitting databases and tables), you usually decide which table a record goes into based on the hash value of a String. In this scenario, the same String must always produce the same hash code, even across different operating systems. Consider the following test case:

```java
public class StringTest {
    public static void main(String[] args) {
        System.out.println("Hello World".hashCode());
    }
}
```

No matter how many times this code runs, on Windows or on Linux, it always prints -862545276. With the default hashCode implementation, the value would not be guaranteed to be the same from one run to the next. So how does String guarantee that equal strings always get the same hash code? Look at the implementation of its hashCode method:

```java
public int hashCode() {
    // hash is a field of String that caches the hash code; it starts at 0
    int h = hash;
    if (h == 0 && value.length > 0) {
        // The hash has not been computed yet and the string is not empty
        char val[] = value;
        // The hash depends only on the characters of the string (h = 31 * h + c for
        // each character c), so equal strings always produce the same hash
        for (int i = 0; i < value.length; i++) {
            h = 31 * h + val[i];
        }
        hash = h;
    }
    return h;
}
```

3,inflate / inflate_helper

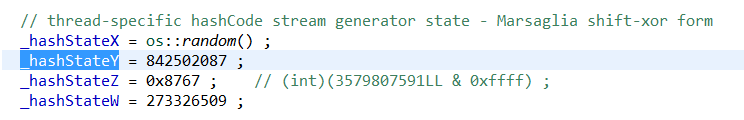

The inflate method inflates the lock on an object (a lightweight lock, or no lock at all) into a heavyweight lock, that is, a monitor lock. inflate_helper is used by other classes inside the JVM and is also implemented on top of inflate. Its call chain is as follows:

The implementation is as follows:

```cpp
ObjectMonitor* ObjectSynchronizer::inflate_helper(oop obj) {
  markOop mark = obj->mark();
  if (mark->has_monitor()) {
    // The object already has a monitor lock: return the ObjectMonitor pointer from its header
    assert(ObjectSynchronizer::verify_objmon_isinpool(mark->monitor()), "monitor is invalid");
    assert(mark->monitor()->header()->is_neutral(), "monitor must record a good object header");
    return mark->monitor();
  }
  // No monitor yet: create one and install it in the object header
  return ObjectSynchronizer::inflate(Thread::current(), obj);
}

ObjectMonitor * ATTR ObjectSynchronizer::inflate (Thread * Self, oop object) {
  assert (Universe::verify_in_progress() ||
          !SafepointSynchronize::is_at_safepoint(), "invariant") ;

  for (;;) {
    const markOop mark = object->mark() ;
    assert (!mark->has_bias_pattern(), "invariant") ;

    // The mark can be in one of the following states:
    // *  Inflated     - the object already has a monitor lock
    // *  Stack-locked - a lightweight lock is held; inflate it
    // *  INFLATING    - another thread is inflating the lock
    // *  Neutral      - unlocked
    // *  BIASED       - should never be seen here: a biased lock is revoked before inflation

    // CASE: inflated
    if (mark->has_monitor()) {
      // Already inflated: return the ObjectMonitor pointer stored in the header
      ObjectMonitor * inf = mark->monitor() ;
      assert (inf->header()->is_neutral(), "invariant");
      assert (inf->object() == object, "invariant") ;
      assert (ObjectSynchronizer::verify_objmon_isinpool(inf), "monitor is invalid");
      return inf ;
    }

    // CASE: inflation in progress - inflating over a stack-lock.
    if (mark == markOopDesc::INFLATING()) {
      TEVENT (Inflate: spin while INFLATING) ;
      // Another thread is inflating this object's lock: wait for it to finish.
      // ReadStableMark spins first, then alternates yielding and blocking on an
      // inflation lock after more than 10000 iterations
      ReadStableMark(object) ;
      continue ;
    }

    // CASE: stack-locked
    if (mark->has_locker()) {
      // Take a free ObjectMonitor from the thread-local or global free list
      ObjectMonitor * m = omAlloc (Self) ;
      // Reset it
      m->Recycle();
      m->_Responsible  = NULL ;
      m->OwnerIsThread = 0 ;
      m->_recursions   = 0 ;
      m->_SpinDuration = ObjectMonitor::Knob_SpinLimit ;   // Consider: maintain by type/class

      // Atomically set the object header to INFLATING
      markOop cmp = (markOop) Atomic::cmpxchg_ptr (markOopDesc::INFLATING(), object->mark_addr(), mark) ;
      if (cmp != mark) {
        // CAS failed: another thread changed the header (for example, it is also
        // inflating the lock). Return m to the thread-local free list and retry
        omRelease (Self, m, true) ;
        continue ;       // Start the next iteration
      }

      // The header points to the lock record in the owner's stack frame, where the
      // object's original header is saved: read it
      markOop dmw = mark->displaced_mark_helper() ;
      assert (dmw->is_neutral(), "invariant") ;

      // Save the original header in the monitor; the owner is recorded as the
      // address of the lock record (hence OwnerIsThread == 0)
      m->set_header(dmw) ;
      m->set_owner(mark->locker());
      m->set_object(object);

      guarantee (object->mark() == markOopDesc::INFLATING(), "invariant") ;
      // Install the monitor in the object header
      object->release_set_mark(markOopDesc::encode(m));

      // Update the inflation counter
      if (ObjectMonitor::_sync_Inflations != NULL) ObjectMonitor::_sync_Inflations->inc() ;
      TEVENT(Inflate: overwrite stacklock) ;
      if (TraceMonitorInflation) {
        if (object->is_instance()) {
          ResourceMark rm;
          tty->print_cr("Inflating object " INTPTR_FORMAT " , mark " INTPTR_FORMAT " , type %s",
            (void *) object, (intptr_t) object->mark(),
            object->klass()->external_name());
        }
      }
      return m ;
    }

    // CASE: neutral
    assert (mark->is_neutral(), "invariant");
    // Allocate a free ObjectMonitor
    ObjectMonitor * m = omAlloc (Self) ;
    // Initialize it
    m->Recycle();
    // Save the original header and set the related fields
    m->set_header(mark);
    m->set_owner(NULL);
    m->set_object(object);
    m->OwnerIsThread = 1 ;
    m->_recursions   = 0 ;
    m->_Responsible  = NULL ;
    m->_SpinDuration = ObjectMonitor::Knob_SpinLimit ;       // consider: keep metastats by type/class

    // Atomically install the monitor in the object header
    if (Atomic::cmpxchg_ptr (markOopDesc::encode(m), object->mark_addr(), mark) != mark) {
      // CAS failed: undo the changes to m
      m->set_object (NULL) ;
      m->set_owner  (NULL) ;
      m->OwnerIsThread = 0 ;
      m->Recycle() ;
      // Return m to the thread-local free list and retry
      omRelease (Self, m, true) ;
      m = NULL ;
      continue ;       // Start the next iteration
    }

    // Header updated successfully: update the inflation counter
    if (ObjectMonitor::_sync_Inflations != NULL) ObjectMonitor::_sync_Inflations->inc() ;
    TEVENT(Inflate: overwrite neutral) ;
    if (TraceMonitorInflation) {
      if (object->is_instance()) {
        ResourceMark rm;
        tty->print_cr("Inflating object " INTPTR_FORMAT " , mark " INTPTR_FORMAT " , type %s",
          (void *) object, (intptr_t) object->mark(),
          object->klass()->external_name());
      }
    }
    return m ;
  }
}

BasicLock* locker() const {
  assert(has_locker(), "check");
  // For a stack-locked object, the header value is the address of the BasicLock
  return (BasicLock*) value();
}
```

inflate handles the object according to its lock state:

- If the object already has a monitor lock, it returns the ObjectMonitor pointer stored in the object header.

- If another thread is inflating the lock, it waits for the inflation to complete by spinning, yielding or parking, and then retries.

- If the object holds a lightweight lock or is unlocked, it allocates an ObjectMonitor from the thread-local free list or the global free list and resets it. It then stores the object's original header and related fields in the monitor, and finally updates the object header atomically. For a lightweight lock, the header is first set to INFLATING with a CAS, and the monitor is stored once it has been filled in. If the update fails because another thread modified the same object's header, the ObjectMonitor is returned to the thread-local free list and the loop starts again. If it succeeds, the ObjectMonitor is returned.
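The CAS protocol in inflate can be modelled with std::atomic. In the C++ sketch below, an object's header is an atomic word. A stack lock is a hypothetical BasicLock whose first word holds the displaced header, and a monitor is tagged by setting bit 1 of its address, as markOopDesc::encode does. This is a simplified single-file model built on those assumptions. Biased locking is ignored, and monitors come from plain new instead of omAlloc. Failed attempts delete the monitor instead of calling omRelease, and waiting on INFLATING is a plain yield instead of ReadStableMark.

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>
#include <thread>

using Word = std::uintptr_t;

constexpr Word kInflating = 0;     // like markOopDesc::INFLATING()
constexpr Word kLockMask = 0x3;
constexpr Word kMonitorBit = 0x2;  // lock bits 10: inflated
constexpr Word kUnlocked = 0x1;    // lock bits 01: neutral

struct BasicLock { Word displaced_header; };  // lives in the owner's stack frame
struct Monitor { Word header = 0; const void* owner = nullptr; };
struct Object { std::atomic<Word> mark{kUnlocked}; };

Word encode(Monitor* m) { return reinterpret_cast<Word>(m) | kMonitorBit; }
Monitor* decode(Word mark) { return reinterpret_cast<Monitor*>(mark & ~kMonitorBit); }

Monitor* inflate(Object& obj) {
  for (;;) {
    Word mark = obj.mark.load(std::memory_order_acquire);
    if ((mark & kLockMask) == kMonitorBit) {
      return decode(mark);  // CASE: inflated
    }
    if (mark == kInflating) {  // CASE: another thread is inflating
      std::this_thread::yield();
      continue;
    }
    Monitor* m = new Monitor();
    if ((mark & kLockMask) == 0) {  // CASE: stack-locked, mark points to a BasicLock
      // Claim the header first, so a concurrent stack-unlock by the owner fails
      if (!obj.mark.compare_exchange_strong(mark, kInflating)) {
        delete m;  // interference: retry
        continue;
      }
      const BasicLock* lock = reinterpret_cast<const BasicLock*>(mark);
      m->header = lock->displaced_header;  // the object's original header
      m->owner = lock;                     // owner recorded as the lock record address
      obj.mark.store(encode(m), std::memory_order_release);
      return m;
    }
    // CASE: neutral
    assert((mark & kLockMask) == kUnlocked);
    m->header = mark;
    if (!obj.mark.compare_exchange_strong(mark, encode(m))) {
      delete m;  // interference: retry
      continue;
    }
    return m;
  }
}
```

The stack-locked case uses the same two steps as HotSpot. The header is first claimed with INFLATING, the displaced header is read only after the claim succeeds, and the encoded monitor is then published with a release store.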

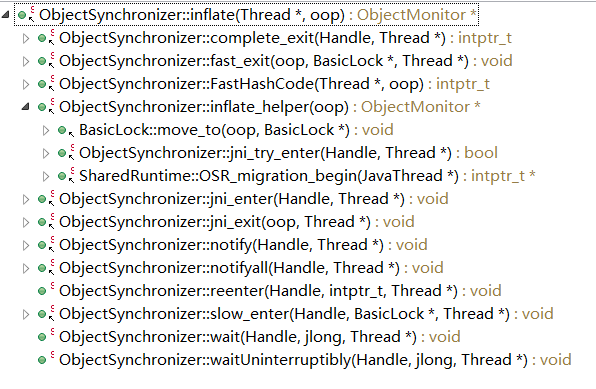
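The final step described above is to initialize a fresh monitor, publish it with a CAS on the header, and retry if another thread wins the race. It can be sketched in standard C++ as below. This is a simplified, hypothetical model. It only covers the neutral (unlocked) case and ignores the in-progress-inflation and stack-locked paths. It assumes HotSpot-style low-bit tagging, where 0b01 marks an unlocked header and 0b10 an inflated one. The names `Object`, `Monitor` and `install_monitor` are illustrative, not HotSpot APIs.

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>

// Hypothetical model of the last step of inflate(): the header word is
// either a neutral mark (low bits 0b01) or a monitor pointer tagged 0b10.
constexpr std::uintptr_t kUnlockedBits = 0x1;
constexpr std::uintptr_t kMonitorBits  = 0x2;
constexpr std::uintptr_t kTagMask      = 0x3;

struct alignas(8) Monitor {          // 8-byte alignment keeps the tag bits free
  std::uintptr_t saved_header = 0;   // displaced neutral header
  const void*    object       = nullptr;
};

struct Object {
  std::atomic<std::uintptr_t> mark{kUnlockedBits};
};

inline bool has_monitor(std::uintptr_t mark) {
  return (mark & kTagMask) == kMonitorBits;
}

inline Monitor* decode(std::uintptr_t mark) {
  return reinterpret_cast<Monitor*>(mark & ~kTagMask);
}

// Installs `fresh` in obj's header, or returns the monitor that another
// thread installed first. used_fresh reports whether `fresh` was consumed.
Monitor* install_monitor(Object& obj, Monitor* fresh, bool& used_fresh) {
  used_fresh = false;
  for (;;) {
    std::uintptr_t mark = obj.mark.load(std::memory_order_acquire);
    if (has_monitor(mark)) {
      return decode(mark);                       // already inflated
    }
    // Neutral case: initialize the monitor before publishing it.
    fresh->saved_header = mark;
    fresh->object = &obj;
    std::uintptr_t encoded =
        reinterpret_cast<std::uintptr_t>(fresh) | kMonitorBits;
    if (obj.mark.compare_exchange_strong(mark, encoded,
                                         std::memory_order_acq_rel)) {
      used_fresh = true;
      return fresh;
    }
    // CAS lost the race: undo the initialization and retry.
    fresh->saved_header = 0;
    fresh->object = nullptr;
  }
}
```

HotSpot returns the losing monitor to the thread-local free list with omRelease and allocates a new one on the next iteration. The sketch instead resets `fresh` and reuses it.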

4,omAlloc / omRelease / omFlush

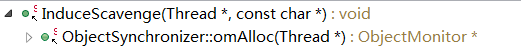

The omAlloc method allocates an ObjectMonitor. It first tries the thread-local ObjectMonitor free list held by the current thread. If that list is empty, it moves up to omFreeProvision ObjectMonitor instances (32 initially) from the global ObjectMonitor free list into the thread's local free list, and then allocates from there. If both the local and the global free lists are empty, it creates 128 ObjectMonitor instances at once and adds them to the global free list. The first instance of each block serves as the block header, so 127 are usable. A thread-local free list reduces the lock contention and consistency problems of always allocating from the global free list, which improves allocation efficiency. The cost is more root scanning work during GC. The call chain is as follows:

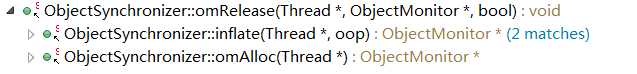
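The three allocation tiers described above are the thread-local list, a refill from the global list, and a new block. Below is a minimal sketch of them, assuming simplified types. `Node` stands in for ObjectMonitor, a std::mutex replaces ListLock, and the block list is a vector of owned arrays. The constants mirror `_BLOCKSIZE` and `MAXPRIVATE` from the listing. MonitorInUseLists, MonitorBound and Recycle() are omitted, and all names are hypothetical.

```cpp
#include <cassert>
#include <memory>
#include <mutex>
#include <utility>
#include <vector>

constexpr int kBlockSize  = 128;   // mirrors _BLOCKSIZE
constexpr int kMaxPrivate = 1024;  // mirrors MAXPRIVATE

struct Node {                      // stands in for ObjectMonitor
  Node* free_next = nullptr;       // FreeNext
};

struct GlobalPool {
  std::mutex lock;                 // stands in for ListLock
  Node* free_list = nullptr;       // gFreeList
  int free_count = 0;              // MonitorFreeCount
  int population = 0;              // MonitorPopulation
  std::vector<std::unique_ptr<Node[]>> blocks;  // stands in for gBlockList
};

struct ThreadCache {
  Node* free_list = nullptr;       // omFreeList
  int free_count = 0;              // omFreeCount
  int provision = 32;              // omFreeProvision
};

Node* om_alloc(ThreadCache& self, GlobalPool& g) {
  for (;;) {
    // 1: take the head of the thread-local free list.
    if (Node* m = self.free_list) {
      self.free_list = m->free_next;
      self.free_count--;
      m->free_next = nullptr;
      return m;
    }
    std::lock_guard<std::mutex> guard(g.lock);   // released at end of iteration
    if (g.free_list != nullptr) {
      // 2: move up to `provision` nodes from the global list to the local one.
      for (int i = self.provision; --i >= 0 && g.free_list != nullptr;) {
        Node* take = g.free_list;
        g.free_list = take->free_next;
        g.free_count--;
        take->free_next = self.free_list;
        self.free_list = take;
        self.free_count++;
      }
      self.provision += 1 + self.provision / 2;  // grow by half plus one
      if (self.provision > kMaxPrivate) self.provision = kMaxPrivate;
      continue;                                  // tier 1 succeeds next time
    }
    // 3: allocate a block; element 0 is reserved as the block header.
    std::unique_ptr<Node[]> block(new Node[kBlockSize]);
    for (int i = 1; i < kBlockSize - 1; i++) block[i].free_next = &block[i + 1];
    block[kBlockSize - 1].free_next = g.free_list;
    g.free_list = &block[1];
    g.population += kBlockSize - 1;
    g.free_count += kBlockSize - 1;
    g.blocks.push_back(std::move(block));
  }
}
```

The refill size grows by half plus one each time (32, 49, 74, ...) until it reaches 1024. A thread that allocates many monitors therefore takes the global lock less and less often.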

omRelease returns an ObjectMonitor to the thread-local ObjectMonitor free list. If MonitorInUseLists is true and the monitor is being released from the per-thread path (fromPerThreadAlloc), it is first removed from the thread's local in-use list omInUseList. The call chain is as follows:

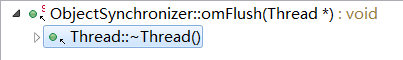
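The list manipulation in omRelease can be sketched as follows. This hypothetical model uses a single `free_next` link for both lists, as the HotSpot code does with FreeNext. It folds the `MonitorInUseLists && fromPerThreadAlloc` condition into one boolean parameter.

```cpp
#include <cassert>

struct Mon {
  Mon* free_next = nullptr;        // FreeNext, used by whichever list holds the node
};

struct ThreadLists {
  Mon* free_list = nullptr;        // omFreeList
  int  free_count = 0;             // omFreeCount
  Mon* in_use_list = nullptr;      // omInUseList
  int  in_use_count = 0;           // omInUseCount
};

// track_in_use models `MonitorInUseLists && fromPerThreadAlloc`.
void om_release(ThreadLists& self, Mon* m, bool track_in_use) {
  if (track_in_use) {
    Mon* prev = nullptr;           // node before mid (curmidinuse)
    for (Mon* mid = self.in_use_list; mid != nullptr;
         prev = mid, mid = mid->free_next) {
      if (mid == m) {
        if (prev == nullptr) self.in_use_list = mid->free_next;  // at the head
        else prev->free_next = mid->free_next;                  // middle or tail
        self.in_use_count--;
        break;
      }
    }
  }
  // Push m onto the front of the thread-local free list.
  m->free_next = self.free_list;
  self.free_list = m;
  self.free_count++;
}
```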

omFlush is called when a thread exits. It returns the elements of the thread's local free list to the global free list gFreeList. It returns the elements of the thread's local in-use list omInUseList to the global in-use list gOmInUseList. Why can't the in-use ObjectMonitors be returned to the global free list? Because they may still be associated with objects and used by other threads. The call chain is as follows:

The implementation of the above method is as follows:

ObjectMonitor * ATTR ObjectSynchronizer::omAlloc (Thread * Self) {

const int MAXPRIVATE = 1024 ;

for (;;) {

ObjectMonitor * m ;

// 1: try to allocate from the thread's local omFreeList.

m = Self->omFreeList ;

if (m != NULL) {

//The thread-local free list is not empty: take its first element

Self->omFreeList = m->FreeNext ;

Self->omFreeCount -- ;

guarantee (m->object() == NULL, "invariant") ;

//MonitorInUseLists indicates whether to record the ObjectMonitor in use. The default value is false

if (MonitorInUseLists) {

//If true, add it to the omInUseList

m->FreeNext = Self->omInUseList;

Self->omInUseList = m;

Self->omInUseCount ++;

// verifyInUse(Self);

} else {

//If false, set FreeNext to NULL

m->FreeNext = NULL;

}

return m ;

}

// 2: try to allocate from the global gFreeList

//Thread local idle list is empty, trying to allocate from global idle list

if (gFreeList != NULL) {

//Acquire ListLock

Thread::muxAcquire (&ListLock, "omAlloc") ;

for (int i = Self->omFreeProvision; --i >= 0 && gFreeList != NULL; ) {

//Take one from the global list

MonitorFreeCount --;

ObjectMonitor * take = gFreeList ;

gFreeList = take->FreeNext ;

guarantee (take->object() == NULL, "invariant") ;

guarantee (!take->is_busy(), "invariant") ;

//Reset the removed ObjectMonitor

take->Recycle() ;

//Add ObjectMonitor to the thread local free list

omRelease (Self, take, false) ;

}

//Release lock

Thread::muxRelease (&ListLock) ;

//Grow omFreeProvision by half plus one, capped at MAXPRIVATE

Self->omFreeProvision += 1 + (Self->omFreeProvision/2) ;

if (Self->omFreeProvision > MAXPRIVATE ) Self->omFreeProvision = MAXPRIVATE ;

TEVENT (omFirst - reprovision) ;

//MonitorBound is the maximum number of ObjectMonitors allowed in use; the default of 0 means no bound

const int mx = MonitorBound ;

if (mx > 0 && (MonitorPopulation-MonitorFreeCount) > mx) {

//Use a VM operation to force the JVM to a safepoint, where deflate_idle_monitors reclaims idle ObjectMonitors

InduceScavenge (Self, "omAlloc") ;

}

//The local free list has been refilled from the global free list; loop again to allocate from it

continue;

}

// 3: allocate a block of new ObjectMonitors

//Both the local free list and the global free list are empty: allocate a new block of ObjectMonitors

//_BLOCKSIZE is an enumeration value, 128 by default

assert (_BLOCKSIZE > 1, "invariant") ;

//Create an array of objectmonitors, i.e. 128 objectmonitors at a time

ObjectMonitor * temp = new ObjectMonitor[_BLOCKSIZE];

if (temp == NULL) {

//Allocation failed: exit the VM with an out-of-memory error

vm_exit_out_of_memory (sizeof (ObjectMonitor[_BLOCKSIZE]), OOM_MALLOC_ERROR,

"Allocate ObjectMonitors");

}

//Link elements 1.._BLOCKSIZE-1 of the array through FreeNext

for (int i = 1; i < _BLOCKSIZE ; i++) {

temp[i].FreeNext = &temp[i+1];

}

//FreeNext of the last ObjectMonitor in the array is set to NULL

temp[_BLOCKSIZE - 1].FreeNext = NULL ;

//Mark the first element with CHAINMARKER: it serves as the block header and is never handed out

temp[0].set_object(CHAINMARKER);

//Get lock

Thread::muxAcquire (&ListLock, "omAlloc [2]") ;

//Increase count

MonitorPopulation += _BLOCKSIZE-1;

MonitorFreeCount += _BLOCKSIZE-1;

//Add the first array element to the gBlockList, which is used to traverse all ObjectMonitor instances, whether they are idle or in use

temp[0].FreeNext = gBlockList;

gBlockList = temp;

//Prepend elements 1.._BLOCKSIZE-1, starting from the second element, to gFreeList

temp[_BLOCKSIZE - 1].FreeNext = gFreeList ;

gFreeList = temp + 1;

//Release lock

Thread::muxRelease (&ListLock) ;

TEVENT (Allocate block of monitors) ;

}

}

void ObjectSynchronizer::omRelease (Thread * Self, ObjectMonitor * m, bool fromPerThreadAlloc) {

guarantee (m->object() == NULL, "invariant") ;

// Remove from omInUseList

//MonitorInUseLists defaults to false

if (MonitorInUseLists && fromPerThreadAlloc) {

ObjectMonitor* curmidinuse = NULL;

//Traverse omInUseList and remove target m if it exists

for (ObjectMonitor* mid = Self->omInUseList; mid != NULL; ) {

if (m == mid) {

if (mid == Self->omInUseList) {

//If m is at the head of the list

Self->omInUseList = mid->FreeNext;

} else if (curmidinuse != NULL) {

//If in the middle of the list

curmidinuse->FreeNext = mid->FreeNext; // maintain the current thread inuselist

}

Self->omInUseCount --;

// verifyInUse(Self);

break;

} else {

//curmidinuse indicates the previous ObjectMonitor of the current mid

curmidinuse = mid;

mid = mid->FreeNext;

}

}

}

//Add m to the idle list of the current thread

m->FreeNext = Self->omFreeList ;

Self->omFreeList = m ;

Self->omFreeCount ++ ;

}

void ObjectSynchronizer::omFlush (Thread * Self) {

ObjectMonitor * List = Self->omFreeList ; // Null-terminated SLL

Self->omFreeList = NULL ;

ObjectMonitor * Tail = NULL ;

int Tally = 0;

if (List != NULL) {

ObjectMonitor * s ;

//Traverse the ObjectMonitor in the local free list to find the last element

for (s = List ; s != NULL ; s = s->FreeNext) {

Tally ++ ;

Tail = s ;

guarantee (s->object() == NULL, "invariant") ;

guarantee (!s->is_busy(), "invariant") ;

s->set_owner (NULL) ; // redundant but good hygiene

TEVENT (omFlush - Move one) ;

}

guarantee (Tail != NULL && List != NULL, "invariant") ;

}

ObjectMonitor * InUseList = Self->omInUseList;

ObjectMonitor * InUseTail = NULL ;

int InUseTally = 0;

if (InUseList != NULL) {

Self->omInUseList = NULL;

ObjectMonitor *curom;

//Traverse the list of ObjectMonitor in use locally to find the last one

for (curom = InUseList; curom != NULL; curom = curom->FreeNext) {

InUseTail = curom;

InUseTally++;

}

// TODO debug

assert(Self->omInUseCount == InUseTally, "inuse count off");

Self->omInUseCount = 0;

guarantee (InUseTail != NULL && InUseList != NULL, "invariant");

}

//Get lock

Thread::muxAcquire (&ListLock, "omFlush") ;

if (Tail != NULL) {

//Return the local free ObjectMonitor linked list to the global free linked list

Tail->FreeNext = gFreeList ;

gFreeList = List ;

MonitorFreeCount += Tally;

}

if (InUseTail != NULL) {

//Return the local in use ObjectMonitor linked list to the global in use linked list

InUseTail->FreeNext = gOmInUseList;

gOmInUseList = InUseList;

gOmInUseCount += InUseTally;

}

//Release lock

Thread::muxRelease (&ListLock) ;

TEVENT (omFlush) ;

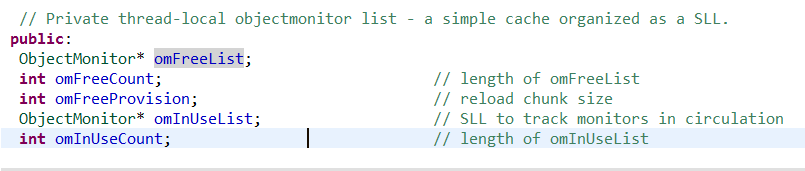

}The related properties of the ObjectMonitor linked list defined in Thread are as follows:

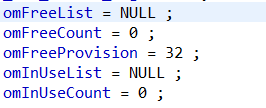

omFreeList is the thread's free list of ObjectMonitors, and omFreeCount is the number of elements in it. omFreeProvision is the maximum number of ObjectMonitors moved from the global free list to the local free list in a single refill. omInUseList is the list of ObjectMonitors in use, and omInUseCount is the number of ObjectMonitors in use. These fields are initialized in the Thread constructor as follows:
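Below is a minimal sketch of these per-thread fields. It assumes JDK 8's initial values: empty lists, zero counts, and omFreeProvision = 32. It also models the splice that omFlush performs at thread exit, where each local list is attached to the front of its global counterpart. The struct and function names are hypothetical, and ListLock is replaced by a std::mutex.

```cpp
#include <cassert>
#include <mutex>

struct Mon {
  Mon* free_next = nullptr;        // FreeNext
};

// Per-thread fields with the assumed initial values.
struct ThreadFields {
  Mon* omFreeList      = nullptr;
  int  omFreeCount     = 0;
  int  omFreeProvision = 32;
  Mon* omInUseList     = nullptr;
  int  omInUseCount    = 0;
};

struct GlobalLists {
  std::mutex lock;                 // stands in for ListLock
  Mon* gFreeList     = nullptr;
  int  freeCount     = 0;          // MonitorFreeCount
  Mon* gOmInUseList  = nullptr;
  int  gOmInUseCount = 0;
};

// Returns the last node of a null-terminated list and counts its nodes.
static Mon* tail_of(Mon* head, int& tally) {
  Mon* tail = nullptr;
  tally = 0;
  for (Mon* s = head; s != nullptr; s = s->free_next) { tail = s; tally++; }
  return tail;
}

// omFlush-style splice: detach both local lists, then attach each one
// to the front of the matching global list under the lock.
void om_flush(ThreadFields& self, GlobalLists& g) {
  Mon* freeList  = self.omFreeList;
  Mon* inUseList = self.omInUseList;
  self.omFreeList  = nullptr;
  self.omInUseList = nullptr;
  int freeTally = 0, inUseTally = 0;
  Mon* freeTail  = tail_of(freeList, freeTally);
  Mon* inUseTail = tail_of(inUseList, inUseTally);
  self.omInUseCount = 0;

  std::lock_guard<std::mutex> guard(g.lock);
  if (freeTail != nullptr) {       // local free list -> global free list
    freeTail->free_next = g.gFreeList;
    g.gFreeList = freeList;
    g.freeCount += freeTally;
  }
  if (inUseTail != nullptr) {      // local in-use list -> global in-use list
    inUseTail->free_next = g.gOmInUseList;
    g.gOmInUseList = inUseList;
    g.gOmInUseCount += inUseTally;
  }
}
```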

5,InduceScavenge / deflate_idle_monitors / deflate_monitor / walk_monitor_list

When the MonitorBound option is set and the number of ObjectMonitors in use exceeds it, InduceScavenge forces the JVM to a safepoint. At the safepoint, the deflate_idle_monitors method cleans up ObjectMonitor instances that were allocated from the local or global free list but are not in use, and returns them to the global free list. The default value of MonitorBound is 0. This means that by default, ObjectMonitors are reclaimed only when GC or another operation that must run at a safepoint is performed. Apart from that, the number of ObjectMonitors keeps growing.

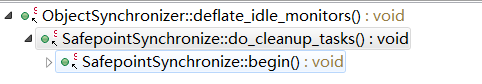
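The trigger condition and the once-only guard can be modeled in a few lines. In this hypothetical sketch, `request_safepoint` only counts calls instead of posting VM_ForceAsyncSafepoint. `after_deflation` stands in for the reset of ForceMonitorScavenge at the end of deflate_idle_monitors. The plain load before the exchange mirrors the listing: it skips the atomic write when a scavenge is already pending.

```cpp
#include <atomic>
#include <cassert>

std::atomic<int> force_monitor_scavenge{0};  // models ForceMonitorScavenge
int safepoint_requests = 0;                  // counts posted requests

void request_safepoint() { safepoint_requests++; }

// Only the caller that flips the flag from 0 to 1 posts a request.
void induce_scavenge() {
  if (force_monitor_scavenge.load() == 0 &&
      force_monitor_scavenge.exchange(1) == 0) {
    request_safepoint();
  }
}

// The MonitorBound check made in omAlloc after a refill.
void maybe_scavenge(int monitor_bound, int population, int free_count) {
  if (monitor_bound > 0 && (population - free_count) > monitor_bound) {
    induce_scavenge();
  }
}

// Models the reset at the end of deflate_idle_monitors.
void after_deflation() { force_monitor_scavenge.store(0); }
```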

The call chain of InduceScavenge is as follows:

Its implementation is as follows:

static void InduceScavenge (Thread * Self, const char * Whence) {

//ForceMonitorScavenge is a static volatile variable, initially 0, which ensures that InduceScavenge posts only one scavenge request at a time

if (ForceMonitorScavenge == 0 && Atomic::xchg (1, &ForceMonitorScavenge) == 0) {

if (ObjectMonitor::Knob_Verbose) {

::printf ("Monitor scavenge - Induced STW @%s (%d)\n", Whence, ForceMonitorScavenge) ;

::fflush(stdout) ;

}

//Execute VM_ForceAsyncSafepoint

VMThread::execute (new VM_ForceAsyncSafepoint()) ;

if (ObjectMonitor::Knob_Verbose) {

::printf ("Monitor scavenge - STW posted @%s (%d)\n", Whence, ForceMonitorScavenge) ;

::fflush(stdout) ;

}

}

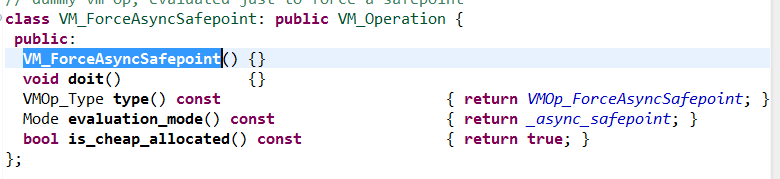

}VM? Forceasyncsafepoint is defined as follows:

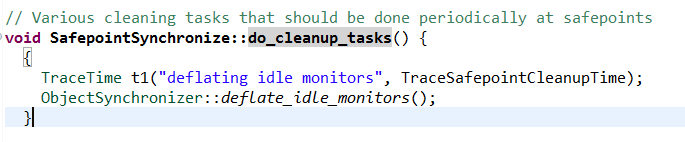

The doit method is empty, and the evaluation_mode method indicates that the operation must be executed at a safepoint. SafepointSynchronize::begin first completes safepoint synchronization, that is, it waits until all threads have stopped. It then executes the do_cleanup_tasks method, which calls deflate_idle_monitors to clean up idle ObjectMonitors, as shown in the following figure:

The call chain of deflate_idle_monitors is as follows:

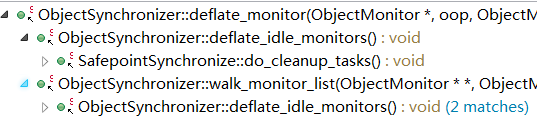

If MonitorInUseLists is true, deflate_idle_monitors relies on walk_monitor_list to traverse the local omInUseList of every thread and the global gOmInUseList. Any ObjectMonitor in these lists that is not in use is moved to a temporary list. If MonitorInUseLists is false, deflate_idle_monitors calls deflate_monitor directly and traverses every ObjectMonitor ever created through gBlockList. An instance whose object is not NULL (it has been allocated) but which is not in use is reclaimed onto the temporary list. walk_monitor_list also calls deflate_monitor for each ObjectMonitor it processes. Finally, the temporary list of reclaimed ObjectMonitor instances is inserted at the front of the global free list gFreeList. The call chain of the deflate_monitor method is as follows:

The implementation of these three methods is as follows:

void ObjectSynchronizer::deflate_idle_monitors() {

assert(SafepointSynchronize::is_at_safepoint(), "must be at safepoint");

int nInuse = 0 ; // currently associated with objects

int nInCirculation = 0 ; // extant

int nScavenged = 0 ; // reclaimed

bool deflated = false;

ObjectMonitor * FreeHead = NULL ; // Local SLL of scavenged monitors

ObjectMonitor * FreeTail = NULL ;

TEVENT (deflate_idle_monitors) ;

//Get lock

Thread::muxAcquire (&ListLock, "scavenge - return") ;

//MonitorInUseLists defaults to false

if (MonitorInUseLists) {

int inUse = 0;

for (JavaThread* cur = Threads::first(); cur != NULL; cur = cur->next()) {

nInCirculation+= cur->omInUseCount;

//Traverse the ObjectMonitors in the thread's omInUseList, reclaim those not in use, and return the number reclaimed

int deflatedcount = walk_monitor_list(cur->omInUseList_addr(), &FreeHead, &FreeTail);

cur->omInUseCount-= deflatedcount;

//nScavenged indicates the cumulative number of objectmonitors recycled

nScavenged += deflatedcount;

//nInuse indicates the cumulative number of objectmonitors in use

nInuse += cur->omInUseCount;

}

// For moribund threads, scan gOmInUseList

if (gOmInUseList) {

nInCirculation += gOmInUseCount;

//Traverse the ObjectMonitor in the global list of gOmInUseList

int deflatedcount = walk_monitor_list((ObjectMonitor **)&gOmInUseList, &FreeHead, &FreeTail);

gOmInUseCount-= deflatedcount;

nScavenged += deflatedcount;

nInuse += gOmInUseCount;

}

} else for (ObjectMonitor* block = gBlockList; block != NULL; block = next(block)) {

//All created objectmonitors are traversed through the gBlockList linked list, where each element is the header element of an ObjectMonitor array

assert(block->object() == CHAINMARKER, "must be a block header");

nInCirculation += _BLOCKSIZE ;

for (int i = 1 ; i < _BLOCKSIZE; i++) {

ObjectMonitor* mid = &block[i];

oop obj = (oop) mid->object();

if (obj == NULL) {

//obj is NULL: the monitor has not been allocated and is still on a local or global free list

guarantee (!mid->is_busy(), "invariant") ;

continue ;

}

//If the ObjectMonitor is not in use, deflate it and append it to the FreeHead/FreeTail list

deflated = deflate_monitor(mid, obj, &FreeHead, &FreeTail);

if (deflated) {

//Deflated: mid is now the tail of the FreeHead list, so terminate the list by clearing FreeNext. It was already unlinked from the free list when it was allocated, so no other list needs updating

mid->FreeNext = NULL ;

nScavenged ++ ;

} else {

nInuse ++;

}

}

}

//Increase idle count

MonitorFreeCount += nScavenged;

// Consider: audit gFreeList to ensure that MonitorFreeCount and list agree.

if (ObjectMonitor::Knob_Verbose) {

::printf ("Deflate: InCirc=%d InUse=%d Scavenged=%d ForceMonitorScavenge=%d : pop=%d free=%d\n",

nInCirculation, nInuse, nScavenged, ForceMonitorScavenge,

MonitorPopulation, MonitorFreeCount) ;

::fflush(stdout) ;

}

ForceMonitorScavenge = 0; // Reset

//If the ObjectMonitor instance is recycled

if (FreeHead != NULL) {

guarantee (FreeTail != NULL && nScavenged > 0, "invariant") ;

assert (FreeTail->FreeNext == NULL, "invariant") ;

//Prepend the FreeHead..FreeTail list to gFreeList

FreeTail->FreeNext = gFreeList ;

gFreeList = FreeHead ;

}

//Release lock

Thread::muxRelease (&ListLock) ;

// Increase count

if (ObjectMonitor::_sync_Deflations != NULL) ObjectMonitor::_sync_Deflations->inc(nScavenged) ;

if (ObjectMonitor::_sync_MonExtant != NULL) ObjectMonitor::_sync_MonExtant ->set_value(nInCirculation);

// Refresh the stop-the-world random value and increment the STW cycle counter

GVars.stwRandom = os::random() ;

GVars.stwCycle ++ ;

}

int ObjectSynchronizer::walk_monitor_list(ObjectMonitor** listheadp,

ObjectMonitor** FreeHeadp, ObjectMonitor** FreeTailp) {

ObjectMonitor* mid;

ObjectMonitor* next;

ObjectMonitor* curmidinuse = NULL;

int deflatedcount = 0;

for (mid = *listheadp; mid != NULL; ) {

oop obj = (oop) mid->object();

bool deflated = false;

if (obj != NULL) {

deflated = deflate_monitor(mid, obj, FreeHeadp, FreeTailp);

}

if (deflated) {

//mid was deflated: unlink it from the list at *listheadp

if (mid == *listheadp) {

*listheadp = mid->FreeNext;

} else if (curmidinuse != NULL) {

curmidinuse->FreeNext = mid->FreeNext; // maintain the current thread inuselist

}

next = mid->FreeNext;

mid->FreeNext = NULL; // This mid is current tail in the FreeHead list

mid = next;

//Increase count

deflatedcount++;

} else {

curmidinuse = mid;

mid = mid->FreeNext;

}

}

return deflatedcount;

}

bool ObjectSynchronizer::deflate_monitor(ObjectMonitor* mid, oop obj,

ObjectMonitor** FreeHeadp, ObjectMonitor** FreeTailp) {

bool deflated;

// Normal case ... The monitor is associated with obj.

guarantee (obj->mark() == markOopDesc::encode(mid), "invariant") ;

guarantee (mid == obj->mark()->monitor(), "invariant");

guarantee (mid->header()->is_neutral(), "invariant");

if (mid->is_busy()) {

//The ObjectMonitor is in use: do not deflate it, return false

if (ClearResponsibleAtSTW) mid->_Responsible = NULL ;

deflated = false;

} else {

//The ObjectMonitor is not in use: deflate it, append it to the FreeHead/FreeTail list, and return true

TEVENT (deflate_idle_monitors - scavenge1) ;

if (TraceMonitorInflation) {

if (obj->is_instance()) {

ResourceMark rm;

tty->print_cr("Deflating object " INTPTR_FORMAT " , mark " INTPTR_FORMAT " , type %s",

(void *) obj, (intptr_t) obj->mark(), obj->klass()->external_name());

}

}

//Restore original object header

obj->release_set_mark(mid->header());

//Reset

mid->clear();

assert (mid->object() == NULL, "invariant") ;

//Append it to the tail of the FreeHead/FreeTail list

if (*FreeHeadp == NULL) *FreeHeadp = mid;

if (*FreeTailp != NULL) {

ObjectMonitor * prevtail = *FreeTailp;

assert(prevtail->FreeNext == NULL, "cleaned up deflated?"); // TODO KK

prevtail->FreeNext = mid;

}

*FreeTailp = mid;

deflated = true;

}

return deflated;

}
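The core of walk_monitor_list and deflate_monitor above is an unlink-and-append pass over a singly linked list, followed by prepending the reclaimed list to the global free list. Below is a simplified, hypothetical model of it. `busy` stands in for is_busy(), and restoring the object header and clearing the monitor are reduced to resetting `object`.

```cpp
#include <cassert>

struct Mon {
  Mon* free_next = nullptr;        // FreeNext
  const void* object = nullptr;    // associated object, or nullptr
  bool busy = false;               // stands in for is_busy()
};

// Roughly deflate_monitor: if mid is idle, append it to FreeHead/FreeTail.
bool deflate(Mon* mid, Mon** free_head, Mon** free_tail) {
  if (mid->busy) return false;
  mid->object = nullptr;           // header restore and clear() elided
  if (*free_head == nullptr) *free_head = mid;
  if (*free_tail != nullptr) (*free_tail)->free_next = mid;
  *free_tail = mid;
  return true;
}

// Roughly walk_monitor_list: unlink every deflated node from *listhead.
int walk_list(Mon** listhead, Mon** free_head, Mon** free_tail) {
  int deflated_count = 0;
  Mon* prev = nullptr;             // last node kept in the in-use list
  for (Mon* mid = *listhead; mid != nullptr;) {
    bool deflated = mid->object != nullptr && deflate(mid, free_head, free_tail);
    if (deflated) {
      Mon* next = mid->free_next;
      if (prev == nullptr) *listhead = next; else prev->free_next = next;
      mid->free_next = nullptr;    // mid is now the tail of the FreeHead list
      mid = next;
      deflated_count++;
    } else {
      prev = mid;
      mid = mid->free_next;
    }
  }
  return deflated_count;
}

// Final step of deflate_idle_monitors: prepend FreeHead..FreeTail to gFreeList.
void prepend_to_global(Mon** g_free_list, Mon* free_head, Mon* free_tail) {
  if (free_head == nullptr) return;
  free_tail->free_next = *g_free_list;
  *g_free_list = free_head;
}
```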

6,wait / notify / notifyall

These three methods are the core implementation of Object's wait, notify and notifyAll methods. The wait method obtains the ObjectMonitor associated with the object, inflating the lock if necessary, and then calls its wait method. notify and notifyAll first check whether the current thread holds a lightweight lock on the object. If it does, they return directly: while only a lightweight lock is held, wait has not been called (wait always inflates the lock), so there is no associated ObjectMonitor instance and no waiting thread. Otherwise they obtain the associated ObjectMonitor instance and call its notify or notifyAll method. Before executing the actual logic, ObjectMonitor's wait, notify and notifyAll check whether the monitor is owned by the current thread, and throw an IllegalMonitorStateException if it is not. If wait is called first and notify or notifyAll later, the object has already been inflated, so no error is reported. The implementation is as follows:

void ObjectSynchronizer::wait(Handle obj, jlong millis, TRAPS) {

//UseBiasedLocking defaults to true

if (UseBiasedLocking) {

//Revoke any biased lock recorded in the object header

BiasedLocking::revoke_and_rebias(obj, false, THREAD);

assert(!obj->mark()->has_bias_pattern(), "biases should be revoked by now");

}

if (millis < 0) {

TEVENT (wait - throw IAX) ;

//A negative timeout is illegal: throw IllegalArgumentException

THROW_MSG(vmSymbols::java_lang_IllegalArgumentException(), "timeout value is negative");

}

//Inflate the lock to obtain the associated ObjectMonitor instance

ObjectMonitor* monitor = ObjectSynchronizer::inflate(THREAD, obj());

DTRACE_MONITOR_WAIT_PROBE(monitor, obj(), THREAD, millis);

//Call its wait method

monitor->wait(millis, true, THREAD);

dtrace_waited_probe(monitor, obj, THREAD);

}

void ObjectSynchronizer::notify(Handle obj, TRAPS) {

if (UseBiasedLocking) {

//Revoke any biased lock recorded in the object header

BiasedLocking::revoke_and_rebias(obj, false, THREAD);

assert(!obj->mark()->has_bias_pattern(), "biases should be revoked by now");

}

markOop mark = obj->mark();

if (mark->has_locker() && THREAD->is_lock_owned((address)mark->locker())) {

//If the current thread holds a lightweight lock on the object, return directly. A lightweight-locked object header contains no ObjectMonitor pointer, so no thread can be waiting on it

return;

}

//Get the ObjectMonitor instance associated with it and call its notify method

ObjectSynchronizer::inflate(THREAD, obj())->notify(THREAD);

}

// NOTE: see comment of notify()

void ObjectSynchronizer::notifyall(Handle obj, TRAPS) {

if (UseBiasedLocking) {

//Revoke any biased lock recorded in the object header

BiasedLocking::revoke_and_rebias(obj, false, THREAD);

assert(!obj->mark()->has_bias_pattern(), "biases should be revoked by now");

}

markOop mark = obj->mark();

if (mark->has_locker() && THREAD->is_lock_owned((address)mark->locker())) {

//Return directly if the current thread holds a lightweight lock on the object

return;

}

//Get the ObjectMonitor instance associated with it and call its notifyAll method

ObjectSynchronizer::inflate(THREAD, obj())->notifyAll(THREAD);

}
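The control flow of notify described above can be modeled as below, including the lightweight-lock shortcut and the ownership check performed inside ObjectMonitor. This is a hypothetical, single-threaded sketch. Lock states are an enum, threads are integer ids, and the ownership failure is a C++ exception standing in for IllegalMonitorStateException. Only the entry of wait is modeled, not the blocking.

```cpp
#include <cassert>
#include <stdexcept>

enum class LockState { Unlocked, Lightweight, Inflated };

// Stands in for java.lang.IllegalMonitorStateException.
struct IllegalMonitorState : std::runtime_error {
  IllegalMonitorState() : std::runtime_error("current thread is not owner") {}
};

struct Obj {
  LockState state = LockState::Unlocked;
  int owner = -1;                  // id of the thread holding the lock, -1 if none
  int waiters = 0;                 // threads in the monitor's wait set
};

// Stand-in for inflate(): afterwards the object has a monitor.
void inflate(Obj& o) { o.state = LockState::Inflated; }

// Entry of wait(): validate, inflate, check ownership, join the wait set
// and release the lock.
void wait_enter(Obj& o, int self, long long millis) {
  if (millis < 0) throw std::invalid_argument("timeout value is negative");
  inflate(o);
  if (o.owner != self) throw IllegalMonitorState();
  o.waiters++;
  o.owner = -1;
}

// notify(): returns the number of waiters woken (0 or 1).
int notify(Obj& o, int self) {
  if (o.state == LockState::Lightweight && o.owner == self) {
    return 0;                      // stack-locked by us: no monitor, no waiters
  }
  inflate(o);
  if (o.owner != self) throw IllegalMonitorState();  // ObjectMonitor's owner check
  if (o.waiters == 0) return 0;
  o.waiters--;                     // one waiter leaves the wait set
  return 1;
}
```

Unlocked objects and objects lightweight-locked by another thread both fall through to inflate, where the owner check fails. This matches the requirement that notify be called while holding the lock.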