SparkStreaming02: Enhanced Version, Running on a Cluster

Code listing: OffsetClusterApp

package com.hpznyf.sparkstreaming.ss64

import org.apache.kafka.common.serialization.StringDeserializer
import org.apache.spark.SparkConf
import org.apache.spark.streaming.kafka010.ConsumerStrategies.Subscribe
import org.apache.spark.streaming.kafka010.LocationStrategies.PreferConsistent
import org.apache.spark.streaming.kafka010.{CanCommitOffsets, HasOffsetRanges, KafkaUtils}
import org.apache.spark.streaming.{Seconds, StreamingContext}

/**
 * Submit with the command below. All spark-submit options (--conf, --packages, --jars)
 * must come BEFORE the application JAR; everything after the JAR is passed to main() as args.
 *
 * spark-submit \
 *   --master local[4] \
 *   --class com.hpznyf.sparkstreaming.ss64.OffsetClusterApp \
 *   --conf spark.serializer=org.apache.spark.serializer.KryoSerializer \
 *   --packages org.apache.spark:spark-streaming-kafka-0-10_2.12:2.4.6 \
 *   --jars /home/hadoop/app/hive/lib/mysql-connector-java-5.1.47.jar \
 *   /home/hadoop/lib/hpznyf-spark-core-1.0-SNAPSHOT.jar \
 *   10 ruoze hadoop003:9092,hadoop004:9093,hadoop005:9094 pkss
 */
object OffsetClusterApp {

  def main(args: Array[String]): Unit = {
    if (args.length != 4) {
      System.err.println(
        """
          |Usage: OffsetClusterApp <batch> <groupid> <brokers> <topic>
          |  <batch>   : batch interval of the streaming job, in seconds
          |  <groupid> : Kafka consumer group id
          |  <brokers> : Kafka cluster address (host:port,host:port,...)
          |  <topic>   : name of the topic to consume
          |""".stripMargin)
      System.exit(1)
    }

    val Array(batch, groupid, brokers, topic) = args

    // App name, master and serializer are supplied by spark-submit when running on the cluster
    val sparkConf = new SparkConf()
    //.setAppName(this.getClass.getCanonicalName)
    //.setMaster("local[3]")
    //.set("spark.serializer", "org.apache.spark.serializer.KryoSerializer")

    val ssc = new StreamingContext(sparkConf, Seconds(batch.toInt))

    val kafkaParams = Map[String, Object](
      "bootstrap.servers" -> brokers,
      "key.deserializer" -> classOf[StringDeserializer],
      "value.deserializer" -> classOf[StringDeserializer],
      "group.id" -> groupid, // a new group id has no committed offsets, so it starts again from "earliest"
      "auto.offset.reset" -> "earliest",
      "enable.auto.commit" -> (false: java.lang.Boolean) // offsets are committed manually below
    )

    val topics = Array(topic)
    val stream = KafkaUtils.createDirectStream[String, String](
      ssc,
      PreferConsistent,
      Subscribe[String, String](topics, kafkaParams)
    )

    stream.foreachRDD(rdd => {
      if (!rdd.isEmpty()) {
        /**
         * Runs on the driver.
         * Offset ranges are only available on the original KafkaRDD returned by
         * createDirectStream, so read them before any transformation: after e.g. a map
         * (which yields a MapPartitionsRDD) the cast to HasOffsetRanges fails.
         */
        val offsetRanges = rdd.asInstanceOf[HasOffsetRanges].offsetRanges
        offsetRanges.foreach(x => {
          println(s"${x.topic}, ${x.partition}, ${x.fromOffset}, ${x.untilOffset}")
        })

        /**
         * Business logic, executed on the executors
         * (on a real cluster, this println output goes to the executor logs).
         */
        rdd.flatMap(_.value().split(",")).map((_, 1)).reduceByKey(_ + _).foreach(println)

        /**
         * Commit the offsets (called on the driver); afterwards they can be viewed on the ke page.
         * commitAsync is asynchronous and not transactional with the output above, so this is
         * at-least-once, not exactly-once: the output must be idempotent.
         */
        stream.asInstanceOf[CanCommitOffsets].commitAsync(offsetRanges)
      } else {
        println("There is no data for this batch")
      }
    })

    // Word count directly on the DStream:
    // stream.map(_.value()).flatMap(_.split(",")).map((_, 1)).reduceByKey(_ + _).print()

    ssc.start()
    ssc.awaitTermination()
  }
}

1. Fixing errors when submitting to the cluster

Submit script:

spark-submit \
  --master local[4] \
  --class com.hpznyf.sparkstreaming.ss64.OffsetClusterApp \
  --conf spark.serializer=org.apache.spark.serializer.KryoSerializer \
  /home/hadoop/lib/hpznyf-spark-core-1.0-SNAPSHOT.jar \
  10 ruoze hadoop003:9092,hadoop004:9093,hadoop005:9094 pkss

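Error: java.lang.NoClassDefFoundError: org/apache/kafka/common/serialization/StringDeserializer. Reason: the code uses KafkaUtils, but the Kafka integration (spark-streaming-kafka-0-10 and the kafka-clients library it depends on) is not on the classpath.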
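Before choosing a fix, it can help to confirm which classes are actually missing. The following is a minimal sketch, not part of the original project. `ClasspathCheck` and `isOnClasspath` are hypothetical names, and the code relies only on the JDK's `Class.forName`. Run it with the same classpath as the job (for example, packaged in the application JAR and started with `spark-submit --class ClasspathCheck` and the same `--packages`/`--jars` options). It then reports whether the Kafka classes are visible.

```scala
object ClasspathCheck {
  // Class loader used for the lookup: the thread's context loader if set, else this object's loader.
  private def loader: ClassLoader =
    Option(Thread.currentThread().getContextClassLoader).getOrElse(getClass.getClassLoader)

  // True if the class can be loaded; initialize = false skips running static initializers.
  def isOnClasspath(className: String): Boolean =
    try {
      Class.forName(className, false, loader)
      true
    } catch {
      // ClassNotFoundException: the class is absent.
      // LinkageError (e.g. NoClassDefFoundError): the class is present,
      // but one of its own dependencies is missing or incompatible.
      case _: ClassNotFoundException | _: LinkageError => false
    }

  def main(args: Array[String]): Unit = {
    val required = Seq(
      "org.apache.kafka.common.serialization.StringDeserializer", // from kafka-clients
      "org.apache.spark.streaming.kafka010.KafkaUtils"             // from spark-streaming-kafka-0-10
    )
    for (name <- required)
      println(s"$name: ${if (isOnClasspath(name)) "found" else "MISSING"}")
  }
}
```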

Solution 1: add the dependency with --packages, which downloads spark-streaming-kafka-0-10 together with its transitive dependencies (including kafka-clients):

spark-submit \
  --master local[4] \
  --class com.hpznyf.sparkstreaming.ss64.OffsetClusterApp \
  --conf spark.serializer=org.apache.spark.serializer.KryoSerializer \
  --packages org.apache.spark:spark-streaming-kafka-0-10_2.12:2.4.6 \
  /home/hadoop/lib/hpznyf-spark-core-1.0-SNAPSHOT.jar \
  10 ruoze hadoop003:9092,hadoop004:9093,hadoop005:9094 pkss

Next error: the MySQL driver is missing. Add it with --jars:

spark-submit \
  --master local[4] \
  --class com.hpznyf.sparkstreaming.ss64.OffsetClusterApp \
  --conf spark.serializer=org.apache.spark.serializer.KryoSerializer \
  --packages org.apache.spark:spark-streaming-kafka-0-10_2.12:2.4.6 \
  --jars /home/hadoop/app/hive/lib/mysql-connector-java-5.1.47.jar \
  /home/hadoop/lib/hpznyf-spark-core-1.0-SNAPSHOT.jar \
  10 ruoze hadoop003:9092,hadoop004:9093,hadoop005:9094 pkss

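It is also possible that a class, such as the serializer class, still cannot be found. If it is the serializer, check the spelling: the class is org.apache.spark.serializer.KryoSerializer. Otherwise, another approach is needed.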

But there is a bigger problem. --packages downloads the artifacts from a remote Maven repository at submit time, so this approach fails if the cluster cannot access the Internet.

2. Fixing the cluster errors with a fat JAR

Thin JAR:

- contains only the project's own code
- any dependency the application needs must be supplied to the server separately

Semi-fat JAR ("fat but not fat"):

- contains the project's own code
- does not contain every dependency declared in the pom
- contains only the dependencies that are not available on the server; this application needs only the MySQL driver and the Spark Streaming Kafka integration

Fat JAR:

- bundles all dependencies

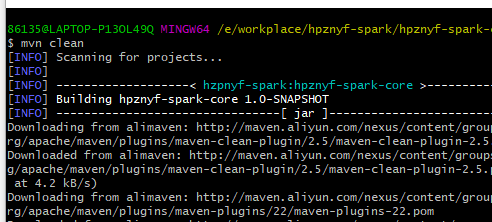

Run the Maven commands in the project directory.

First, clean the target directory:

mvn clean

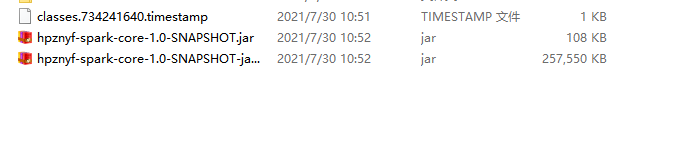

mvn assembly:assembly

Packaging takes a long time, and the resulting JAR is very large. How can it be made smaller?

Exclude the dependencies that do not need to be packaged, i.e. those the cluster already provides, such as Spark itself. In the pom, add the following to each such dependency:

<scope>provided</scope>

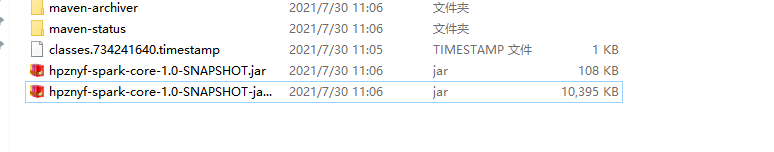

Then rebuild, skipping the tests:

mvn clean

mvn assembly:assembly -DskipTests
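After packaging, you can check the assembled JAR for the classes the job needs. You can also check that it does not contain classes that `provided` scope should have kept out. The sketch below uses `java.util.jar.JarFile`. The object name, the default path and the class lists are assumptions based on the commands in this post (`com.mysql.jdbc.Driver` is the driver class of MySQL Connector/J 5.1).

```scala
import java.util.jar.JarFile

object FatJarCheck {
  // "a.b.C" -> "a/b/C.class", the entry name a class has inside a JAR
  def entryName(className: String): String = className.replace('.', '/') + ".class"

  // Returns the classes from `classNames` that are NOT packaged in the JAR at `jarPath`.
  def missingClasses(jarPath: String, classNames: Seq[String]): List[String] = {
    val jar = new JarFile(jarPath)
    try classNames.filter(name => jar.getJarEntry(entryName(name)) == null).toList
    finally jar.close()
  }

  def main(args: Array[String]): Unit = {
    // Assumed default: the fat JAR submitted with spark-submit in this post
    val jarPath = args.headOption.getOrElse(
      "/home/hadoop/lib/hpznyf-spark-core-1.0-SNAPSHOT-jar-with-dependencies.jar")
    val shouldBePresent = Seq(
      "org.apache.kafka.common.serialization.StringDeserializer",
      "org.apache.spark.streaming.kafka010.KafkaUtils",
      "com.mysql.jdbc.Driver")
    // Assumption: spark-core is one of the dependencies marked <scope>provided</scope>
    val shouldBeAbsent = Seq("org.apache.spark.SparkConf")

    println(s"missing (should be packaged): ${missingClasses(jarPath, shouldBePresent)}")
    val leaked = shouldBeAbsent.diff(missingClasses(jarPath, shouldBeAbsent))
    println(s"packaged (should be provided): $leaked")
  }
}
```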

Now the job can be submitted without --packages or --jars:

spark-submit \
  --master local[4] \
  --class com.hpznyf.sparkstreaming.ss64.OffsetClusterApp \
  /home/hadoop/lib/hpznyf-spark-core-1.0-SNAPSHOT-jar-with-dependencies.jar \
  10 ruoze hadoop003:9092,hadoop004:9093,hadoop005:9094 pkss

To test, start a console producer:

kafka-console-producer.sh --broker-list hadoop003:9092,hadoop004:9093,hadoop005:9094 --topic pkss

Then start the consumer.

Done.

3. Review: Spark Streaming (SS) consuming Kafka

When SS consumes Kafka data, each batch does three things:

- business logic processing
- saving the results
- committing the offsets
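The order of these three steps determines the delivery guarantee. The in-memory simulation below involves no Spark or Kafka, and all its names are hypothetical. It saves the results and then "crashes" before committing. On restart the same records are read again, so a plain append sink ends up with duplicates while a sink keyed by offset does not. This is why OffsetClusterApp, which calls `commitAsync` after its output, is at-least-once and needs idempotent output.

```scala
import scala.collection.mutable

final case class LogRecord(offset: Long, value: String)

// Simulates one Kafka partition consumed batch by batch with manual offset commits.
final class Pipeline(log: Vector[LogRecord]) {
  var committedOffset: Long = 0L                     // next offset to read
  val appendSink = mutable.ArrayBuffer.empty[String] // non-idempotent output: appends every write
  val keyedSink = mutable.Map.empty[Long, String]    // idempotent output: keyed by offset

  def runBatch(crashBeforeCommit: Boolean): Unit = {
    val batch = log.filter(_.offset >= committedOffset)
    // 1) business logic
    val results = batch.map(r => r.offset -> r.value.toUpperCase)
    // 2) save results
    appendSink ++= results.map(_._2)
    keyedSink ++= results
    // 3) commit offsets (skipped when simulating a crash)
    if (!crashBeforeCommit && batch.nonEmpty) committedOffset = batch.last.offset + 1
  }
}

object AtLeastOnceDemo {
  def main(args: Array[String]): Unit = {
    val p = new Pipeline(Vector("a", "b", "c", "d").zipWithIndex.map { case (v, i) => LogRecord(i.toLong, v) })
    p.runBatch(crashBeforeCommit = true)  // results saved, offsets not committed
    p.runBatch(crashBeforeCommit = false) // restart: the same 4 records are processed again
    println(s"append sink: ${p.appendSink.size} rows, keyed sink: ${p.keyedSink.size} rows")
    // prints: append sink: 8 rows, keyed sink: 4 rows
  }
}
```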

The business logic results fall into two cases:

- Aggregation: the result is small, so it can be pulled to the driver and saved there
- Non-aggregation: the data volume is huge and cannot be pulled to the driver
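In the aggregation case, the small result and the batch's offsets can be saved together in one atomic step, so they never disagree. The sketch below models this in plain Scala. An `AtomicReference` holding an immutable snapshot stands in for a transactional store, for example a single transaction in a relational database such as MySQL (an assumption, not shown in the original). `AggregatingStore` and `AggSnapshot` are hypothetical names.

```scala
import java.util.concurrent.atomic.AtomicReference

// Results and offsets live in ONE immutable value, so they are always replaced together.
final case class AggSnapshot(wordCounts: Map[String, Long], nextOffsets: Map[Int, Long])

final class AggregatingStore {
  // Stand-in for a transactional store: one atomic swap plays the role of one "transaction".
  val state = new AtomicReference(AggSnapshot(Map.empty, Map.empty))

  // batch: (partition, offset, value) tuples, as if collected from one micro-batch
  def saveBatch(batch: Seq[(Int, Long, String)]): Unit = {
    // Business logic: word count; the aggregated result is small enough for the driver.
    val counts: Map[String, Long] =
      batch.flatMap(_._3.split(",")).groupBy(identity).map { case (w, ws) => w -> ws.size.toLong }
    // Offset to resume from, per partition = last offset in this batch + 1.
    val untilOffsets: Map[Int, Long] =
      batch.groupBy(_._1).map { case (p, rs) => p -> (rs.map(_._2).max + 1L) }

    state.updateAndGet((old: AggSnapshot) => {
      val merged = counts.foldLeft(old.wordCounts) { case (acc, (w, c)) =>
        acc.updated(w, acc.getOrElse(w, 0L) + c)
      }
      AggSnapshot(merged, old.nextOffsets ++ untilOffsets)
    })
  }
}
```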

So how is the non-aggregation case handled?

A typical non-aggregation job is ETL:

Data -> Kafka -> ETL -> NoSQL (HBase/ES/...)

Pulling the executors' data to the driver to save it is not feasible: the data volume is far too large for the driver to handle.

In this scenario, the executors must do two things:

- write the data to NoSQL (table a)
- write the offsets to NoSQL as well (table b)

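HBase guarantees atomicity only at the row level: a mutation to a single row is atomic, but writes to two different tables (or rows) are not atomic together. The key point is therefore how to store the data and its offset in the same row.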

Table design:

One HBase table with two column families (CFs):

- CF1: O, the CF that stores the data
- CF2: Offset, the CF that stores the offset

rk1: data CF, offset CF
rk2: data CF, offset CF
rk3: data CF, offset CF
...
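This row design can be modeled in plain Scala to show why keeping data and offset in one row matters. The model is an in-memory imitation, not the HBase client API. `RowAtomicTable`, the row-key format `topic-partition-offset` and the lower-cased column family names `o` and `offset` are assumptions for illustration. A single `put` writes both column families of one row in one step, the way HBase applies a single-row `Put` atomically.

```scala
// In-memory model of one table: rowKey -> columnFamily -> qualifier -> value.
// It imitates HBase's per-row atomicity only; it is NOT the HBase client API.
final class RowAtomicTable {
  type Row = Map[String, Map[String, String]]
  private var rows = Map.empty[String, Row]

  // One put may touch several column families of ONE row; it is applied as a single step.
  def put(rowKey: String, cells: Row): Unit = synchronized {
    val old = rows.getOrElse(rowKey, Map.empty[String, Map[String, String]])
    val merged = cells.foldLeft(old) { case (acc, (cf, cols)) =>
      acc.updated(cf, acc.getOrElse(cf, Map.empty[String, String]) ++ cols)
    }
    rows = rows.updated(rowKey, merged)
  }

  def get(rowKey: String): Option[Row] = synchronized(rows.get(rowKey))
}

object RowDesignDemo {
  // Hypothetical row key derived from the source record's position in Kafka.
  def rowKey(topic: String, partition: Int, offset: Long): String = s"$topic-$partition-$offset"

  def main(args: Array[String]): Unit = {
    val table = new RowAtomicTable
    table.put(rowKey("hbaseoffset", 0, 42L), Map(
      "o"      -> Map("value" -> "a,b,c"),                                             // data CF
      "offset" -> Map("topic" -> "hbaseoffset", "partition" -> "0", "offset" -> "42") // offset CF
    ))
    println(table.get(rowKey("hbaseoffset", 0, 42L)))
  }
}
```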

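Scenario 1: how do we ensure that the offset is written successfully whenever the data is written successfully?

Is the offset write guaranteed? Yes. The data and the offset sit in the same row, and HBase applies a single-row Put atomically, so either both are written or neither is.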

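Scenario 2: what if data is processed again (duplicates)?

No problem. The row key is the same, so the row is simply overwritten. HBase stores the rewrite as a newer version of the same cells, and reads return the newest version, so the write is idempotent.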
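Replays are harmless because the row key is derived from the record itself, so a rewrite lands on the same row. The model below imitates HBase cell versions for one column. It is not the HBase API, and `VersionedColumn` and the timestamps are illustrative. Rewriting a row adds a version instead of a new row, a write with an existing timestamp replaces that version, and reads return the newest value. In HBase, the number of retained versions is a per-column-family setting (`VERSIONS`).

```scala
// In-memory model of versioned cells for ONE column: rowKey -> versions (newest first).
final class VersionedColumn(maxVersions: Int) {
  private var cells = Map.empty[String, List[(Long, String)]]

  def put(rowKey: String, timestamp: Long, value: String): Unit = {
    // Same timestamp = same version, so it is replaced rather than duplicated.
    val others = cells.getOrElse(rowKey, Nil).filterNot(_._1 == timestamp)
    cells = cells.updated(rowKey, ((timestamp, value) :: others).sortBy(-_._1).take(maxVersions))
  }

  def latest(rowKey: String): Option[String] = cells.get(rowKey).flatMap(_.headOption).map(_._2)
  def versionCount(rowKey: String): Int = cells.get(rowKey).fold(0)(_.size)
  def rowCount: Int = cells.size
}

object ReplayDemo {
  def main(args: Array[String]): Unit = {
    val col = new VersionedColumn(maxVersions = 3)
    col.put("hbaseoffset-0-42", 1L, "a,b,c") // first run
    col.put("hbaseoffset-0-42", 2L, "a,b,c") // replay after a failure: same row key
    println(s"rows=${col.rowCount}, versions=${col.versionCount("hbaseoffset-0-42")}, " +
      s"latest=${col.latest("hbaseoffset-0-42")}")
  }
}
```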

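What needs to be implemented:

- the executor takes each record's offset together with its data and writes both to HBase
- on startup, the job also needs to read the existing offsets back from HBase, so it can resume from them

For the offset, it is enough to save the offset of the last record in each partition.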
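Recovering the offsets is then a small computation over the values read back from the offset column family. In this plain-Scala sketch, `StoredOffset` and `resumeOffsets` are hypothetical names, and the HBase scan that produces the input is not shown. The stored value is the offset of the last record written for each partition, so consumption resumes at that offset + 1. This matches Kafka's convention that a committed offset is the next record to read.

```scala
// One cell read back from the offset column family: the offset of the last
// record that was written for this topic-partition.
final case class StoredOffset(topic: String, partition: Int, offset: Long)

object OffsetRecovery {
  // For each (topic, partition), take the highest stored offset and resume after it.
  def resumeOffsets(stored: Seq[StoredOffset]): Map[(String, Int), Long] =
    stored
      .groupBy(s => (s.topic, s.partition))
      .map { case (tp, rows) => tp -> (rows.map(_.offset).max + 1L) }

  def main(args: Array[String]): Unit = {
    val stored = Seq(
      StoredOffset("hbaseoffset", 0, 41L),
      StoredOffset("hbaseoffset", 0, 42L),
      StoredOffset("hbaseoffset", 1, 17L))
    resumeOffsets(stored).foreach { case ((t, p), o) => println(s"$t-$p: resume at $o") }
  }
}
```

With the Spark Kafka 0-10 integration, such a map can be re-keyed by `org.apache.kafka.common.TopicPartition` and passed as the third argument of `ConsumerStrategies.Subscribe[K, V](topics, kafkaParams, offsets)`, so the stream starts from the recovered positions.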

4. Implementation: writing non-aggregated data to HBase on the executor side

Add the hbase-client dependency to the pom:

<dependency>
  <groupId>org.apache.hbase</groupId>
  <artifactId>hbase-client</artifactId>
</dependency>

Create the topic, then list the topics to check it:

[hadoop@hadoop003 ~]$ kafka-topics.sh --create --zookeeper hadoop003:2181/kafka --replication-factor 1 --partitions 3 --topic hbaseoffset
[hadoop@hadoop003 ~]$ kafka-topics.sh --list --zookeeper hadoop003:2181/kafka

4.1 Simple code: reading data from the hbaseoffset topic

package com.hpznyf.sparkstreaming.ss64

import org.apache.kafka.common.serialization.StringDeserializer
import org.apache.spark.SparkConf
import org.apache.spark.streaming.{Seconds, StreamingContext}
import org.apache.spark.streaming.kafka010.ConsumerStrategies.Subscribe
import org.apache.spark.streaming.kafka010.{CanCommitOffsets, HasOffsetRanges, KafkaUtils}
import org.apache.spark.streaming.kafka010.LocationStrategies.PreferConsistent

object HBaseOffsetApp {

  def main(args: Array[String]): Unit = {
    val sparkConf = new SparkConf()
      .setAppName(this.getClass.getCanonicalName)
      .setMaster("local[3]")
      .set("spark.serializer", "org.apache.spark.serializer.KryoSerializer")

    val ssc = new StreamingContext(sparkConf, Seconds(5))

    val groupId = "kafka-ss-hbase-offset"
    val kafkaParams = Map[String, Object](
      "bootstrap.servers" -> "hadoop003:9092,hadoop004:9093,hadoop005:9094",
      "key.deserializer" -> classOf[StringDeserializer],
      "value.deserializer" -> classOf[StringDeserializer],
      "group.id" -> groupId, // a new group id has no committed offsets, so it starts again from "earliest"
      "auto.offset.reset" -> "earliest",
      "enable.auto.commit" -> (false: java.lang.Boolean) // offsets are managed manually
    )

    val topics = Array("hbaseoffset")
    val stream = KafkaUtils.createDirectStream[String, String](
      ssc,
      PreferConsistent,
      Subscribe[String, String](topics, kafkaParams)
    )

    stream.foreachRDD(rdd => {
      if (!rdd.isEmpty()) {
        /**
         * Runs on the driver.
         * Offset ranges are only available on the original KafkaRDD returned by
         * createDirectStream, so read them before any transformation: after e.g. a map
         * (which yields a MapPartitionsRDD) the cast to HasOffsetRanges fails.
         */
        val offsetRanges = rdd.asInstanceOf[HasOffsetRanges].offsetRanges
        offsetRanges.foreach(x => {
          println(s"${x.topic}, ${x.partition}, ${x.fromOffset}, ${x.untilOffset}")
        })
      } else {
        println("There is no data for this batch")
      }
    })

    ssc.start()
    ssc.awaitTermination()
  }
}

4.2 Error encountered: JAR conflict

java.lang.NoSuchMethodError: io.netty.buffer.PooledByteBufAllocator

This error is caused by a JAR conflict: two different versions of the same library (here, Netty) end up on the classpath.
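To see which JAR wins, a quick check can print where the conflicting class was loaded from and how many copies of it are on the classpath. This is a hypothetical diagnostic sketch (`JarConflictCheck` is not from the original post) that uses only JDK class-loader APIs. Run with the job's classpath, it shows which Netty JAR supplies `io.netty.buffer.PooledByteBufAllocator`.

```scala
import scala.collection.JavaConverters._

object JarConflictCheck {
  // Where the JVM actually loaded the class from (a JAR URL or a directory).
  // None if the class is not loadable or has no code source (e.g. JDK bootstrap classes).
  def loadedFrom(className: String): Option[String] =
    try {
      val cls = Class.forName(className, false, getClass.getClassLoader)
      Option(cls.getProtectionDomain.getCodeSource).flatMap(cs => Option(cs.getLocation)).map(_.toString)
    } catch {
      case _: ClassNotFoundException | _: LinkageError => None
    }

  // Every classpath entry that contains the class file; more than one entry means
  // several copies (possibly of different versions) of the class are on the classpath.
  def allCopies(className: String): List[String] =
    getClass.getClassLoader.getResources(className.replace('.', '/') + ".class")
      .asScala.map(_.toString).toList

  def main(args: Array[String]): Unit = {
    val name = args.headOption.getOrElse("io.netty.buffer.PooledByteBufAllocator")
    println(s"$name loaded from: ${loadedFrom(name).getOrElse("<not found>")}")
    allCopies(name).foreach(url => println(s"  copy: $url"))
  }
}
```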

How to solve it?