Introduction case:

object SparkSqlTest {

def main(args: Array[String]): Unit = {

//Block redundant logs

Logger.getLogger("org.apache.hadoop").setLevel(Level.WARN)

Logger.getLogger("org.apache.spark").setLevel(Level.WARN)

Logger.getLogger("org.project-spark").setLevel(Level.WARN)

//Building programming portals

val conf: SparkConf = new SparkConf()

conf.setAppName("SparkSqlTest")

.setMaster("local[2]")

val spark: SparkSession = SparkSession.builder().config(conf)

.getOrCreate()

/**

* Note after spark 2.0:

* val sqlContext = new SQLContext(sparkContext)

* val hiveContext = new HiveContext(sparkContext)

* The main constructor is privatized, so only the SparkSession object can be used here

*/

//Create sqlcontext object

val sqlContext: SQLContext = spark.sqlContext

//The load data is DataFrame, and the load here is json data

//Data format: {name:'',age:18}

val perDF: DataFrame = sqlContext.read.json("hdfs://zzy/data/person.json")

//View 2D table structure

perDF.printSchema()

//View data, display 20 records by default

perDF.show()

//Complex queries

perDF.select("name").show() //Specify fields to query

perDF.select(new Column("name"),new Column("age").>(18)).show() //Specify query criteria to query

perDF.select("name","age").where(new Column("age").>(18)).show() //Specify query criteria to query

perDF.select("age").groupBy("age").avg("age") //Aggregation operation

}

}If you don't know much about the introduction case, next step by step introduction:

(1) conversion between RDD/DataSet//DataFrame/list

There are two ways to convert from RDD to DataFrame/DataSet:

note: if the dataFrame corresponds to a java bean, if the dataSet corresponds to a case class

Convert RDD or external collection to dataframe/datasets by reflection

Data preparation:

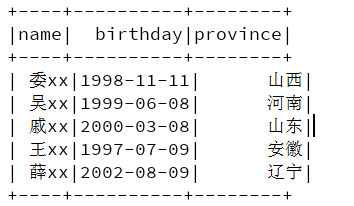

case class Student(name:String, birthday:String, province:String)

val stuList = List(

new Student("committee xx", "1998-11-11", "Shanxi"),

new Student("Wu xx", "1999-06-08", "Henan"),

new Student("Qi xx", "2000-03-08", "Shandong"),

new Student("king xx", "1997-07-09", "Anhui"),

new Student("Xue xx", "2002-08-09", "Liaoning")

)list --> DataFrame:

//Block redundant logs

Logger.getLogger("org.apache.hadoop").setLevel(Level.WARN)

Logger.getLogger("org.apache.spark").setLevel(Level.WARN)

Logger.getLogger("org.project-spark").setLevel(Level.WARN)

//Building programming portals

val conf: SparkConf = new SparkConf()

conf.setAppName("SparkSqlTest")

.setMaster("local[2]")

.set("spark.serializer","org.apache.spark.serializer.KryoSerializer")

.registerKryoClasses(Array(classOf[Student]))

val spark: SparkSession = SparkSession.builder().config(conf)

.getOrCreate()

//Create sqlcontext object

val sqlContext: SQLContext = spark.sqlContext

/**

* list--->DataFrame

* Converting scala collections to java collections

*/

val javaList: util.List[Student] = JavaConversions.seqAsJavaList(stuList)

val stuDF: DataFrame = sqlContext.createDataFrame(javaList,classOf[Student])

val count = stuDF.count()

println(count)RDD --> DataFrame:

//Block redundant logs

Logger.getLogger("org.apache.hadoop").setLevel(Level.WARN)

Logger.getLogger("org.apache.spark").setLevel(Level.WARN)

Logger.getLogger("org.project-spark").setLevel(Level.WARN)

//Building programming portals

val conf: SparkConf = new SparkConf()

conf.setAppName("SparkSqlTest")

.setMaster("local[2]")

.set("spark.serializer","org.apache.spark.serializer.KryoSerializer")

.registerKryoClasses(Array(classOf[Student]))

val spark: SparkSession = SparkSession.builder().config(conf)

.getOrCreate()

//Create sqlcontext object

val sqlContext: SQLContext = spark.sqlContext

//Create sparkContext

val sc: SparkContext = spark.sparkContext

/**

* RDD--->DataFrame

*/

val stuRDD: RDD[Student] = sc.makeRDD(stuList)

val stuDF: DataFrame = sqlContext.createDataFrame(stuRDD,classOf[Student])

val count = stuDF.count()

println(count)list --> DataSet:

//Block redundant logs

Logger.getLogger("org.apache.hadoop").setLevel(Level.WARN)

Logger.getLogger("org.apache.spark").setLevel(Level.WARN)

Logger.getLogger("org.project-spark").setLevel(Level.WARN)

//Building programming portals

val conf: SparkConf = new SparkConf()

conf.setAppName("SparkSqlTest")

.setMaster("local[2]")

.set("spark.serializer","org.apache.spark.serializer.KryoSerializer")

.registerKryoClasses(Array(classOf[Student]))

val spark: SparkSession = SparkSession.builder().config(conf)

.getOrCreate()

//Create sqlcontext object

val sqlContext: SQLContext = spark.sqlContext

//Create sparkContext

val sc: SparkContext = spark.sparkContext

/**

* list--->DataSet

*/

//If you create a Dataset, you must import the following implicit transformation

import spark.implicits._

val stuDF: Dataset[Student] = sqlContext.createDataset(stuList)

stuDF.createTempView("student")

//Use the complete sql statement to query, and use the reflection method. Only Dataset can query, not dataFrame

val sql=

"""

|select * from student

""".stripMargin

spark.sql(sql).show()

RDD --> DataSet:

//Block redundant logs

Logger.getLogger("org.apache.hadoop").setLevel(Level.WARN)

Logger.getLogger("org.apache.spark").setLevel(Level.WARN)

Logger.getLogger("org.project-spark").setLevel(Level.WARN)

//Building programming portals

val conf: SparkConf = new SparkConf()

conf.setAppName("SparkSqlTest")

.setMaster("local[2]")

.set("spark.serializer","org.apache.spark.serializer.KryoSerializer")

.registerKryoClasses(Array(classOf[Student]))

val spark: SparkSession = SparkSession.builder().config(conf)

.getOrCreate()

//Create sqlcontext object

val sqlContext: SQLContext = spark.sqlContext

//Create sparkContext

val sc: SparkContext = spark.sparkContext

/**

* RDD--->DataSet

*/

//If you create a Dataset, you must import the following implicit transformation

import spark.implicits._

val stuRDD: RDD[Student] = sc.makeRDD(stuList)

val stuDF: Dataset[Student] = sqlContext.createDataset(stuRDD)

stuDF.createTempView("student")

//Use the complete sql statement to query, and use the reflection method. Only Dataset can query, not dataFrame

val sql=

"""

|select * from student

""".stripMargin

spark.sql(sql).show()Dynamically translate external collections or RDD S into data frames or dataset s by programming

list --> DataFrame:

//Block redundant logs

Logger.getLogger("org.apache.hadoop").setLevel(Level.WARN)

Logger.getLogger("org.apache.spark").setLevel(Level.WARN)

Logger.getLogger("org.project-spark").setLevel(Level.WARN)

//Building programming portals

val conf: SparkConf = new SparkConf()

conf.setAppName("SparkSqlTest")

.setMaster("local[2]")

.set("spark.serializer","org.apache.spark.serializer.KryoSerializer")

.registerKryoClasses(Array(classOf[Student]))

val spark: SparkSession = SparkSession.builder().config(conf)

.getOrCreate()

//Create sqlcontext object

val sqlContext: SQLContext = spark.sqlContext

//Create sparkContext

val sc: SparkContext = spark.sparkContext

//list-DataFrame

//1. Convert all elements in the list to Row

val RowList: List[Row] = stuList.map(item => {

Row(item.name, item.birthday, item.province)

})

//2. Build metadata

val schema=StructType(List(

StructField("name",DataTypes.StringType),

StructField("birthday",DataTypes.StringType),

StructField("province",DataTypes.StringType)

))

//Convert the collection of scala to java collection

val javaList = JavaConversions.seqAsJavaList(RowList)

val stuDF = spark.createDataFrame(javaList,schema)

stuDF.createTempView("student")

//Use the complete sql statement to query, and use the dynamic programming method. Both Dataset and dataFrame can

val sql=

"""

|select * from student

""".stripMargin

spark.sql(sql).show()RDD--> DataFrame:

//Block redundant logs

Logger.getLogger("org.apache.hadoop").setLevel(Level.WARN)

Logger.getLogger("org.apache.spark").setLevel(Level.WARN)

Logger.getLogger("org.project-spark").setLevel(Level.WARN)

//Building programming portals

val conf: SparkConf = new SparkConf()

conf.setAppName("SparkSqlTest")

.setMaster("local[2]")

.set("spark.serializer","org.apache.spark.serializer.KryoSerializer")

.registerKryoClasses(Array(classOf[Student]))

val spark: SparkSession = SparkSession.builder().config(conf)

.getOrCreate()

//Create sqlcontext object

val sqlContext: SQLContext = spark.sqlContext

//Create sparkContext

val sc: SparkContext = spark.sparkContext

//RDD-DataFrame

//Convert elements in RDD to Row

val RowRDD: RDD[Row] = sc.makeRDD(stuList).map(item => {

Row(item.name, item.birthday, item.province)

})

//2. Build metadata

val schema=StructType(List(

StructField("name",DataTypes.StringType),

StructField("birthday",DataTypes.StringType),

StructField("province",DataTypes.StringType)

))

val stuDF = spark.createDataFrame(RowRDD,schema)

stuDF.createTempView("student")

//Use the complete sql statement to query, and use the dynamic programming method. Both Dataset and dataFrame can

val sql=

"""

|select * from student

""".stripMargin

spark.sql(sql).show()Because building DataFrame is exactly the same as building DataSet, this is not a demonstration.

(2) how to load data with spark SQL

//Block redundant logs

Logger.getLogger("org.apache.hadoop").setLevel(Level.WARN)

Logger.getLogger("org.apache.spark").setLevel(Level.WARN)

Logger.getLogger("org.project-spark").setLevel(Level.WARN)

//Building programming portals

val conf: SparkConf = new SparkConf()

conf.setAppName("SparkSqlTest")

.setMaster("local[2]")

val spark: SparkSession = SparkSession.builder().config(conf)

.getOrCreate()

//Create sqlcontext object

val sqlContext: SQLContext = spark.sqlContext

//Create sparkContext

val sc: SparkContext = spark.sparkContext

//Previous versions loading: parquet files

sqlContext.load("hdfs://zzy/hello.parquet")

//Load json data

sqlContext.read.json("hdfs://zzy/hello.json")

//Load normal file

sqlContext.read.text("hdfs://zzy/hello.txt")

//Loading csv

sqlContext.read.csv("hdfs://zy/hello.csv")

//Reading jdbc data

val url="jdbc:mysql://localhost:3306/hello"

val properties=new Properties()

properties.setProperty("user","root")

properties.setProperty("password","123456")

val tableName="book"

sqlContext.read.jdbc(url,tableName,properties)(3) how to land spark SQL data

//Block redundant logs

Logger.getLogger("org.apache.hadoop").setLevel(Level.WARN)

Logger.getLogger("org.apache.spark").setLevel(Level.WARN)

Logger.getLogger("org.project-spark").setLevel(Level.WARN)

//Building programming portals

val conf: SparkConf = new SparkConf()

conf.setAppName("SparkSqlTest")

.setMaster("local[2]")

val spark: SparkSession = SparkSession.builder().config(conf)

.getOrCreate()

//Create sqlcontext object

val sqlContext: SQLContext = spark.sqlContext

//Create sparkContext

val sc: SparkContext = spark.sparkContext

val testFD: DataFrame = sqlContext.read.text("hdfs://zzy/hello.txt")

//Write to normal file

testFD.write.format("json") //In what format

.mode(SaveMode.Append) //Writing mode

.save("hdfs://zzy/hello.json ") / / file location written

//Write to database

val url="jdbc:mysql://localhost:3306/hello"

val table_name="book"

val prots=new Properties()

prots.put("user","root")

prots.put("password","123456")

testFD.write.mode(SaveMode.Append).jdbc(url,table_name,prots)