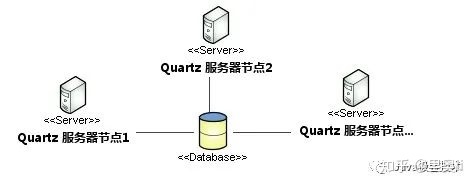

1. Quartz cluster architecture

Quartz is one of the best-known open source task scheduling libraries in the Java ecosystem.

The previous article covered using Quartz in a single application in detail. If you only run a single instance, Quartz may not be the best choice. Spring's @Scheduled, for example, also provides task scheduling, integrates seamlessly with Spring Boot, and is configured with simple annotations. Its drawback is that in a cluster environment every node runs the task, so the task is scheduled repeatedly.

Quartz, by contrast, provides features such as task persistence, cluster deployment, and distributed task scheduling, which is why Quartz-based task scheduling is widely used in system development.

In a cluster, each node is an independent Quartz application, and no node is responsible for centralized management. Instead, the nodes become aware of one another through database tables and use database locks for concurrency control, so that each firing of a task is executed by exactly one node.
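The idea of "several independent nodes, one shared lock" can be sketched in plain Java. This is only an in-memory illustration under stated assumptions: a `ReentrantLock` stands in for the database lock, threads stand in for cluster nodes, and the class and method names (`ClusterLockSketch`, `simulate`) are hypothetical, not part of Quartz.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.locks.ReentrantLock;

public class ClusterLockSketch {

    /**
     * Lets `nodes` threads compete for `firings` due triggers.
     * Returns a map of firing id -> id of the node that claimed it.
     */
    public static Map<Integer, String> simulate(int nodes, int firings) {
        Map<Integer, String> claimedBy = new ConcurrentHashMap<>();
        // Assumption: this lock plays the role of the shared database lock.
        ReentrantLock triggerAccess = new ReentrantLock();
        List<Thread> threads = new ArrayList<>();

        for (int n = 0; n < nodes; n++) {
            String nodeId = "node-" + n;
            Thread t = new Thread(() -> {
                for (int firing = 0; firing < firings; firing++) {
                    triggerAccess.lock();
                    try {
                        // Only the first node that gets the lock claims this firing.
                        if (!claimedBy.containsKey(firing)) {
                            claimedBy.put(firing, nodeId);
                        }
                    } finally {
                        triggerAccess.unlock();
                    }
                }
            });
            threads.add(t);
            t.start();
        }

        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return claimedBy;
    }

    public static void main(String[] args) {
        simulate(3, 5).forEach((firing, node) ->
                System.out.println("firing " + firing + " ran on " + node));
    }
}
```

Which node wins a given firing varies from run to run, but every firing ends up with exactly one owner, which is the property the cluster relies on.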

Note that in a distributed deployment the system clocks of all nodes must be kept consistent.

2. Data table initialization

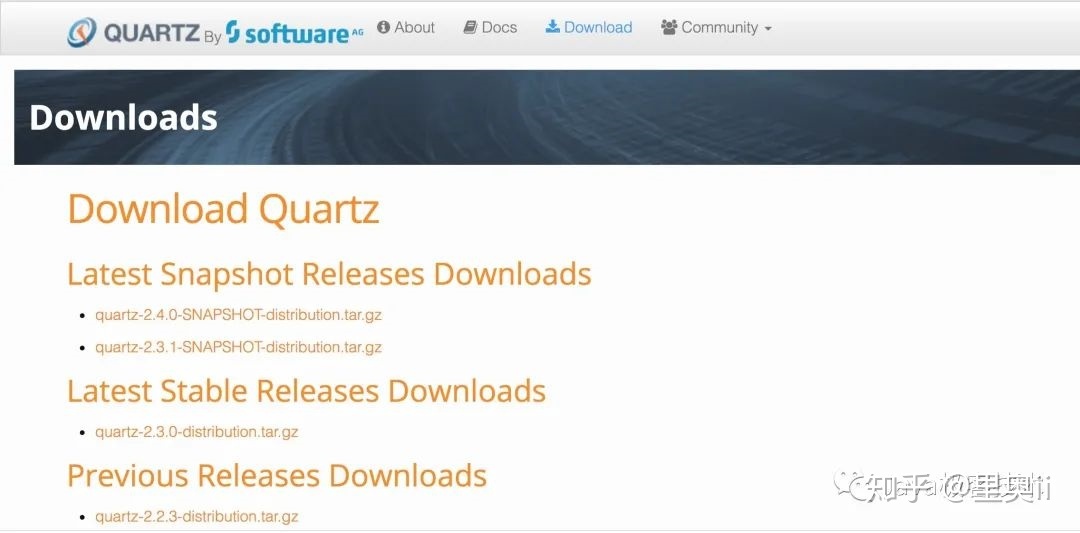

The Quartz project already provides the database table structure. Visit the official Quartz website, find the version you need, and download it.

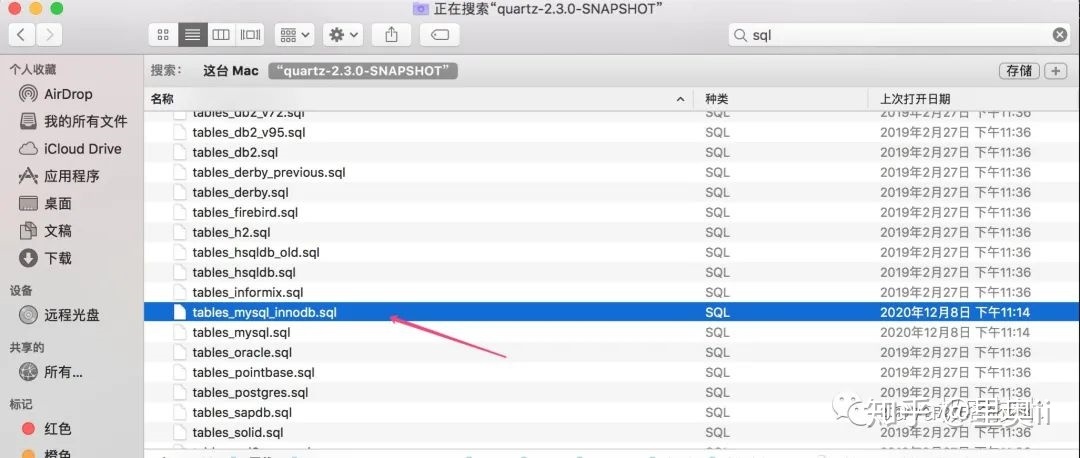

I chose quartz-2.3.0-distribution.tar.gz. After downloading and unpacking it, search the files for sql, select the database script that matches your environment, and run it against the database.

For example, the database I use is mysql-5.7, so I chose the tables_mysql_innodb.sql script, shown below:

DROP TABLE IF EXISTS QRTZ_FIRED_TRIGGERS;

DROP TABLE IF EXISTS QRTZ_PAUSED_TRIGGER_GRPS;

DROP TABLE IF EXISTS QRTZ_SCHEDULER_STATE;

DROP TABLE IF EXISTS QRTZ_LOCKS;

DROP TABLE IF EXISTS QRTZ_SIMPLE_TRIGGERS;

DROP TABLE IF EXISTS QRTZ_SIMPROP_TRIGGERS;

DROP TABLE IF EXISTS QRTZ_CRON_TRIGGERS;

DROP TABLE IF EXISTS QRTZ_BLOB_TRIGGERS;

DROP TABLE IF EXISTS QRTZ_TRIGGERS;

DROP TABLE IF EXISTS QRTZ_JOB_DETAILS;

DROP TABLE IF EXISTS QRTZ_CALENDARS;

CREATE TABLE QRTZ_JOB_DETAILS(

SCHED_NAME VARCHAR(120) NOT NULL,

JOB_NAME VARCHAR(190) NOT NULL,

JOB_GROUP VARCHAR(190) NOT NULL,

DESCRIPTION VARCHAR(250) NULL,

JOB_CLASS_NAME VARCHAR(250) NOT NULL,

IS_DURABLE VARCHAR(1) NOT NULL,

IS_NONCONCURRENT VARCHAR(1) NOT NULL,

IS_UPDATE_DATA VARCHAR(1) NOT NULL,

REQUESTS_RECOVERY VARCHAR(1) NOT NULL,

JOB_DATA BLOB NULL,

PRIMARY KEY (SCHED_NAME,JOB_NAME,JOB_GROUP))

ENGINE=InnoDB;

CREATE TABLE QRTZ_TRIGGERS (

SCHED_NAME VARCHAR(120) NOT NULL,

TRIGGER_NAME VARCHAR(190) NOT NULL,

TRIGGER_GROUP VARCHAR(190) NOT NULL,

JOB_NAME VARCHAR(190) NOT NULL,

JOB_GROUP VARCHAR(190) NOT NULL,

DESCRIPTION VARCHAR(250) NULL,

NEXT_FIRE_TIME BIGINT(13) NULL,

PREV_FIRE_TIME BIGINT(13) NULL,

PRIORITY INTEGER NULL,

TRIGGER_STATE VARCHAR(16) NOT NULL,

TRIGGER_TYPE VARCHAR(8) NOT NULL,

START_TIME BIGINT(13) NOT NULL,

END_TIME BIGINT(13) NULL,

CALENDAR_NAME VARCHAR(190) NULL,

MISFIRE_INSTR SMALLINT(2) NULL,

JOB_DATA BLOB NULL,

PRIMARY KEY (SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP),

FOREIGN KEY (SCHED_NAME,JOB_NAME,JOB_GROUP)

REFERENCES QRTZ_JOB_DETAILS(SCHED_NAME,JOB_NAME,JOB_GROUP))

ENGINE=InnoDB;

CREATE TABLE QRTZ_SIMPLE_TRIGGERS (

SCHED_NAME VARCHAR(120) NOT NULL,

TRIGGER_NAME VARCHAR(190) NOT NULL,

TRIGGER_GROUP VARCHAR(190) NOT NULL,

REPEAT_COUNT BIGINT(7) NOT NULL,

REPEAT_INTERVAL BIGINT(12) NOT NULL,

TIMES_TRIGGERED BIGINT(10) NOT NULL,

PRIMARY KEY (SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP),

FOREIGN KEY (SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP)

REFERENCES QRTZ_TRIGGERS(SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP))

ENGINE=InnoDB;

CREATE TABLE QRTZ_CRON_TRIGGERS (

SCHED_NAME VARCHAR(120) NOT NULL,

TRIGGER_NAME VARCHAR(190) NOT NULL,

TRIGGER_GROUP VARCHAR(190) NOT NULL,

CRON_EXPRESSION VARCHAR(120) NOT NULL,

TIME_ZONE_ID VARCHAR(80),

PRIMARY KEY (SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP),

FOREIGN KEY (SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP)

REFERENCES QRTZ_TRIGGERS(SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP))

ENGINE=InnoDB;

CREATE TABLE QRTZ_SIMPROP_TRIGGERS

(

SCHED_NAME VARCHAR(120) NOT NULL,

TRIGGER_NAME VARCHAR(190) NOT NULL,

TRIGGER_GROUP VARCHAR(190) NOT NULL,

STR_PROP_1 VARCHAR(512) NULL,

STR_PROP_2 VARCHAR(512) NULL,

STR_PROP_3 VARCHAR(512) NULL,

INT_PROP_1 INT NULL,

INT_PROP_2 INT NULL,

LONG_PROP_1 BIGINT NULL,

LONG_PROP_2 BIGINT NULL,

DEC_PROP_1 NUMERIC(13,4) NULL,

DEC_PROP_2 NUMERIC(13,4) NULL,

BOOL_PROP_1 VARCHAR(1) NULL,

BOOL_PROP_2 VARCHAR(1) NULL,

PRIMARY KEY (SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP),

FOREIGN KEY (SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP)

REFERENCES QRTZ_TRIGGERS(SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP))

ENGINE=InnoDB;

CREATE TABLE QRTZ_BLOB_TRIGGERS (

SCHED_NAME VARCHAR(120) NOT NULL,

TRIGGER_NAME VARCHAR(190) NOT NULL,

TRIGGER_GROUP VARCHAR(190) NOT NULL,

BLOB_DATA BLOB NULL,

PRIMARY KEY (SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP),

INDEX (SCHED_NAME,TRIGGER_NAME, TRIGGER_GROUP),

FOREIGN KEY (SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP)

REFERENCES QRTZ_TRIGGERS(SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP))

ENGINE=InnoDB;

CREATE TABLE QRTZ_CALENDARS (

SCHED_NAME VARCHAR(120) NOT NULL,

CALENDAR_NAME VARCHAR(190) NOT NULL,

CALENDAR BLOB NOT NULL,

PRIMARY KEY (SCHED_NAME,CALENDAR_NAME))

ENGINE=InnoDB;

CREATE TABLE QRTZ_PAUSED_TRIGGER_GRPS (

SCHED_NAME VARCHAR(120) NOT NULL,

TRIGGER_GROUP VARCHAR(190) NOT NULL,

PRIMARY KEY (SCHED_NAME,TRIGGER_GROUP))

ENGINE=InnoDB;

CREATE TABLE QRTZ_FIRED_TRIGGERS (

SCHED_NAME VARCHAR(120) NOT NULL,

ENTRY_ID VARCHAR(95) NOT NULL,

TRIGGER_NAME VARCHAR(190) NOT NULL,

TRIGGER_GROUP VARCHAR(190) NOT NULL,

INSTANCE_NAME VARCHAR(190) NOT NULL,

FIRED_TIME BIGINT(13) NOT NULL,

SCHED_TIME BIGINT(13) NOT NULL,

PRIORITY INTEGER NOT NULL,

STATE VARCHAR(16) NOT NULL,

JOB_NAME VARCHAR(190) NULL,

JOB_GROUP VARCHAR(190) NULL,

IS_NONCONCURRENT VARCHAR(1) NULL,

REQUESTS_RECOVERY VARCHAR(1) NULL,

PRIMARY KEY (SCHED_NAME,ENTRY_ID))

ENGINE=InnoDB;

CREATE TABLE QRTZ_SCHEDULER_STATE (

SCHED_NAME VARCHAR(120) NOT NULL,

INSTANCE_NAME VARCHAR(190) NOT NULL,

LAST_CHECKIN_TIME BIGINT(13) NOT NULL,

CHECKIN_INTERVAL BIGINT(13) NOT NULL,

PRIMARY KEY (SCHED_NAME,INSTANCE_NAME))

ENGINE=InnoDB;

CREATE TABLE QRTZ_LOCKS (

SCHED_NAME VARCHAR(120) NOT NULL,

LOCK_NAME VARCHAR(40) NOT NULL,

PRIMARY KEY (SCHED_NAME,LOCK_NAME))

ENGINE=InnoDB;

CREATE INDEX IDX_QRTZ_J_REQ_RECOVERY ON QRTZ_JOB_DETAILS(SCHED_NAME,REQUESTS_RECOVERY);

CREATE INDEX IDX_QRTZ_J_GRP ON QRTZ_JOB_DETAILS(SCHED_NAME,JOB_GROUP);

CREATE INDEX IDX_QRTZ_T_J ON QRTZ_TRIGGERS(SCHED_NAME,JOB_NAME,JOB_GROUP);

CREATE INDEX IDX_QRTZ_T_JG ON QRTZ_TRIGGERS(SCHED_NAME,JOB_GROUP);

CREATE INDEX IDX_QRTZ_T_C ON QRTZ_TRIGGERS(SCHED_NAME,CALENDAR_NAME);

CREATE INDEX IDX_QRTZ_T_G ON QRTZ_TRIGGERS(SCHED_NAME,TRIGGER_GROUP);

CREATE INDEX IDX_QRTZ_T_STATE ON QRTZ_TRIGGERS(SCHED_NAME,TRIGGER_STATE);

CREATE INDEX IDX_QRTZ_T_N_STATE ON QRTZ_TRIGGERS(SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP,TRIGGER_STATE);

CREATE INDEX IDX_QRTZ_T_N_G_STATE ON QRTZ_TRIGGERS(SCHED_NAME,TRIGGER_GROUP,TRIGGER_STATE);

CREATE INDEX IDX_QRTZ_T_NEXT_FIRE_TIME ON QRTZ_TRIGGERS(SCHED_NAME,NEXT_FIRE_TIME);

CREATE INDEX IDX_QRTZ_T_NFT_ST ON QRTZ_TRIGGERS(SCHED_NAME,TRIGGER_STATE,NEXT_FIRE_TIME);

CREATE INDEX IDX_QRTZ_T_NFT_MISFIRE ON QRTZ_TRIGGERS(SCHED_NAME,MISFIRE_INSTR,NEXT_FIRE_TIME);

CREATE INDEX IDX_QRTZ_T_NFT_ST_MISFIRE ON QRTZ_TRIGGERS(SCHED_NAME,MISFIRE_INSTR,NEXT_FIRE_TIME,TRIGGER_STATE);

CREATE INDEX IDX_QRTZ_T_NFT_ST_MISFIRE_GRP ON QRTZ_TRIGGERS(SCHED_NAME,MISFIRE_INSTR,NEXT_FIRE_TIME,TRIGGER_GROUP,TRIGGER_STATE);

CREATE INDEX IDX_QRTZ_FT_TRIG_INST_NAME ON QRTZ_FIRED_TRIGGERS(SCHED_NAME,INSTANCE_NAME);

CREATE INDEX IDX_QRTZ_FT_INST_JOB_REQ_RCVRY ON QRTZ_FIRED_TRIGGERS(SCHED_NAME,INSTANCE_NAME,REQUESTS_RECOVERY);

CREATE INDEX IDX_QRTZ_FT_J_G ON QRTZ_FIRED_TRIGGERS(SCHED_NAME,JOB_NAME,JOB_GROUP);

CREATE INDEX IDX_QRTZ_FT_JG ON QRTZ_FIRED_TRIGGERS(SCHED_NAME,JOB_GROUP);

CREATE INDEX IDX_QRTZ_FT_T_G ON QRTZ_FIRED_TRIGGERS(SCHED_NAME,TRIGGER_NAME,TRIGGER_GROUP);

CREATE INDEX IDX_QRTZ_FT_TG ON QRTZ_FIRED_TRIGGERS(SCHED_NAME,TRIGGER_GROUP);

commit;

Among the tables created above, QRTZ_LOCKS is the row lock table with which the Quartz cluster implements its synchronization mechanism.
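To make the row lock idea concrete, here is a minimal JDBC sketch of taking a lock on a QRTZ_LOCKS row with `SELECT ... FOR UPDATE`. It is an illustration, not Quartz's own code: the class `RowLockSketch` and its methods are hypothetical, and the exact SQL that Quartz issues internally may differ between versions and delegates.

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.ResultSet;
import java.sql.SQLException;

public class RowLockSketch {

    /** Builds the locking query for a given table prefix, for example "QRTZ_". */
    public static String lockSql(String tablePrefix) {
        return "SELECT * FROM " + tablePrefix
                + "LOCKS WHERE SCHED_NAME = ? AND LOCK_NAME = ? FOR UPDATE";
    }

    /**
     * Locks the row identified by (schedName, lockName) inside the caller's transaction.
     * Other transactions asking for the same row block until this one commits or rolls back.
     */
    public static boolean obtainLock(Connection conn, String schedName, String lockName)
            throws SQLException {
        // A row lock only lives as long as the surrounding transaction.
        conn.setAutoCommit(false);
        try (PreparedStatement ps = conn.prepareStatement(lockSql("QRTZ_"))) {
            ps.setString(1, schedName);
            ps.setString(2, lockName);
            try (ResultSet rs = ps.executeQuery()) {
                // true when the lock row exists and is now held by this transaction
                return rs.next();
            }
        }
    }
}
```

A caller would run something like `obtainLock(conn, "SsmScheduler", "TRIGGER_ACCESS")`, do its work on the trigger tables, and then call `conn.commit()` to release the lock. The lock name used here is an assumption for illustration.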

3. Quartz cluster practice

3.1. Create a Spring Boot project and import the Maven dependencies

<!--introduce boot Parent class-->

<parent>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-parent</artifactId>

<version>2.1.0.RELEASE</version>

</parent>

<!--Import related packages-->

<dependencies>

<!--spring boot core-->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter</artifactId>

</dependency>

<!--spring boot test-->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

</dependency>

<!--springmvc web-->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<!--Development environment debugging-->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-devtools</artifactId>

<optional>true</optional>

</dependency>

<!--jpa support-->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-data-jpa</artifactId>

</dependency>

<!--mysql data source-->

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

</dependency>

<!--druid Data connection pool-->

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>druid-spring-boot-starter</artifactId>

<version>1.1.17</version>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-quartz</artifactId>

</dependency>

<!--Alibaba Json Processing package -->

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>1.2.46</version>

</dependency>

</dependencies>

3.2. Create the application.properties configuration file

spring.application.name=springboot-quartz-001

server.port=8080

#Import data source

spring.datasource.url=jdbc:mysql://127.0.0.1:3306/test?serverTimezone=UTC&useUnicode=true&characterEncoding=utf-8&useSSL=true

spring.datasource.username=root

spring.datasource.password=123456

spring.datasource.driver-class-name=com.mysql.cj.jdbc.Driver

3.3. Create the quartz.properties configuration file

#Scheduling configuration

#Scheduler instance name

org.quartz.scheduler.instanceName=SsmScheduler

#Automatically generate the scheduler instance ID

org.quartz.scheduler.instanceId=AUTO

#Whether to wrap job execution in a UserTransaction

org.quartz.scheduler.wrapJobExecutionInUserTransaction=false

#Thread pool configuration

#Implementation class of the thread pool

org.quartz.threadPool.class=org.quartz.simpl.SimpleThreadPool

#Number of threads in the thread pool

org.quartz.threadPool.threadCount=10

#Thread priority

org.quartz.threadPool.threadPriority=5

#Whether pool threads inherit the context class loader of the initializing thread

org.quartz.threadPool.threadsInheritContextClassLoaderOfInitializingThread=true

#Whether to create the pool threads as daemon threads (disabled here)

#org.quartz.threadPool.makeThreadsDaemons=true

#Persistence configuration

#Whether all JobDataMap values are Strings

org.quartz.jobStore.useProperties=true

#Prefix of the data tables, default QRTZ_

org.quartz.jobStore.tablePrefix=QRTZ_

#Misfire threshold in milliseconds: how late a trigger may fire before it counts as misfired

org.quartz.jobStore.misfireThreshold=60000

#Whether to run in cluster mode

org.quartz.jobStore.isClustered=true

#Check-in interval used to detect failed scheduler instances, in milliseconds

org.quartz.jobStore.clusterCheckinInterval=2000

#Store data in the database

org.quartz.jobStore.class=org.quartz.impl.jdbcjobstore.JobStoreTX

#Database delegate class; org.quartz.impl.jdbcjobstore.StdJDBCDelegate works for most databases

org.quartz.jobStore.driverDelegateClass=org.quartz.impl.jdbcjobstore.StdJDBCDelegate

#Data source alias (any name)

org.quartz.jobStore.dataSource=qzDS

#Database connection pool, set it to druid

org.quartz.dataSource.qzDS.connectionProvider.class=com.example.cluster.quartz.config.DruidConnectionProvider

#JDBC driver

org.quartz.dataSource.qzDS.driver=com.mysql.cj.jdbc.Driver

#Database connection

org.quartz.dataSource.qzDS.URL=jdbc:mysql://127.0.0.1:3306/test-quartz?serverTimezone=UTC&useUnicode=true&characterEncoding=utf-8&useSSL=true

#Database user

org.quartz.dataSource.qzDS.user=root

#Database password

org.quartz.dataSource.qzDS.password=123456

#Maximum connections allowed

org.quartz.dataSource.qzDS.maxConnection=5

#Optional validation query

org.quartz.dataSource.qzDS.validationQuery=select 0 from dual
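Because a single wrong key silently turns a cluster back into independent schedulers, it can help to sanity-check the file before deploying. The following is a small sketch that uses only `java.util.Properties`. The class `QuartzClusterConfigCheck` and its rules are assumptions made for illustration; they are a heuristic, not an official Quartz validation.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

public class QuartzClusterConfigCheck {

    /** Parses properties text, such as the contents of quartz.properties. */
    public static Properties parse(String text) {
        Properties p = new Properties();
        try {
            p.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return p;
    }

    /** Returns readable problems; an empty list means the basic cluster keys look consistent. */
    public static List<String> check(Properties p) {
        List<String> problems = new ArrayList<>();

        String clustered = p.getProperty("org.quartz.jobStore.isClustered", "false").trim();
        if (!"true".equalsIgnoreCase(clustered)) {
            problems.add("org.quartz.jobStore.isClustered should be true");
        }

        // Heuristic: AUTO is the simplest way to give every node a unique instance id.
        String instanceId = p.getProperty("org.quartz.scheduler.instanceId", "").trim();
        if (!"AUTO".equals(instanceId)) {
            problems.add("org.quartz.scheduler.instanceId should be AUTO or unique per node");
        }

        String jobStore = p.getProperty("org.quartz.jobStore.class", "").trim();
        if (!jobStore.startsWith("org.quartz.impl.jdbcjobstore.")) {
            problems.add("org.quartz.jobStore.class should be a JDBC job store");
        }

        String ds = p.getProperty("org.quartz.jobStore.dataSource");
        if (ds == null || p.getProperty("org.quartz.dataSource." + ds.trim() + ".URL") == null) {
            problems.add("the data source named by org.quartz.jobStore.dataSource has no URL");
        }
        return problems;
    }
}
```

In a real project the text would come from the classpath resource rather than a string, and all nodes should point at the same database URL.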

3.4. Register the Quartz job factory

@Component

public class QuartzJobFactory extends AdaptableJobFactory {

@Autowired

private AutowireCapableBeanFactory capableBeanFactory;

@Override

protected Object createJobInstance(TriggerFiredBundle bundle) throws Exception {

//Call the method of the parent class

Object jobInstance = super.createJobInstance(bundle);

//Inject

capableBeanFactory.autowireBean(jobInstance);

return jobInstance;

}

}

3.5. Register the scheduler factory

@Configuration

public class QuartzConfig {

@Autowired

private QuartzJobFactory jobFactory;

@Bean

public SchedulerFactoryBean schedulerFactoryBean() throws IOException {

//Get configuration properties

PropertiesFactoryBean propertiesFactoryBean = new PropertiesFactoryBean();

propertiesFactoryBean.setLocation(new ClassPathResource("/quartz.properties"));

//Read the properties from quartz.properties and populate them before the object is initialized

propertiesFactoryBean.afterPropertiesSet();

//Create SchedulerFactoryBean

SchedulerFactoryBean factory = new SchedulerFactoryBean();

factory.setQuartzProperties(propertiesFactoryBean.getObject());

factory.setJobFactory(jobFactory);//Other business objects can be injected into the JOB instance

factory.setApplicationContextSchedulerContextKey("applicationContextKey");

factory.setWaitForJobsToCompleteOnShutdown(true);//When Spring shuts down, wait for all running Quartz jobs to finish before shutting down completely

factory.setOverwriteExistingJobs(false);//Do not overwrite jobs that already exist in the job store

factory.setStartupDelay(10);//Delay starting the scheduler until 10 seconds after the application has initialized

return factory;

}

/**

* Obtain an instance of the Scheduler through the SchedulerFactoryBean

* @return

* @throws IOException

* @throws SchedulerException

*/

@Bean(name = "scheduler")

public Scheduler scheduler() throws IOException, SchedulerException {

Scheduler scheduler = schedulerFactoryBean().getScheduler();

return scheduler;

}

}

3.6. Replace the Quartz connection pool

By default, Quartz uses c3p0 as its connection pool. Because its performance is not stable, it is not recommended, so we replace it with the Druid connection pool. The configuration is as follows:

public class DruidConnectionProvider implements ConnectionProvider {

/**

* Configuration fields. Their names match the keys in quartz.properties (with the prefix removed), and each has a set method, so the Quartz framework injects the values automatically.

* @return

* @throws SQLException

*/

//JDBC Driver

public String driver;

//JDBC connection string

public String URL;

//Database user name

public String user;

//Database user password

public String password;

//Maximum number of database connections

public int maxConnection;

//Validation SQL query, run when a connection is returned to the pool to check that it is still valid.

public String validationQuery;

private boolean validateOnCheckout;

private int idleConnectionValidationSeconds;

public String maxCachedStatementsPerConnection;

private String discardIdleConnectionsSeconds;

public static final int DEFAULT_DB_MAX_CONNECTIONS = 10;

public static final int DEFAULT_DB_MAX_CACHED_STATEMENTS_PER_CONNECTION = 120;

//Druid connection pool

private DruidDataSource datasource;

@Override

public Connection getConnection() throws SQLException {

return datasource.getConnection();

}

@Override

public void shutdown() throws SQLException {

datasource.close();

}

@Override

public void initialize() throws SQLException {

if (this.URL == null) {

throw new SQLException("DBPool could not be created: DB URL cannot be null");

}

if (this.driver == null) {

throw new SQLException("DBPool driver could not be created: DB driver class name cannot be null!");

}

if (this.maxConnection <= 0) {

throw new SQLException("DBPool maxConnections could not be created: Max connections must be greater than zero!");

}

datasource = new DruidDataSource();

try {

datasource.setDriverClassName(this.driver);

} catch (Exception e) {

//Surface the failure instead of swallowing it

throw new SQLException("Problem setting driver class name on datasource: " + e.getMessage(), e);

}

datasource.setUrl(this.URL);

datasource.setUsername(this.user);

datasource.setPassword(this.password);

datasource.setMaxActive(this.maxConnection);

datasource.setMinIdle(1);

datasource.setMaxWait(0);

datasource.setMaxPoolPreparedStatementPerConnectionSize(DEFAULT_DB_MAX_CACHED_STATEMENTS_PER_CONNECTION);

if (this.validationQuery != null) {

datasource.setValidationQuery(this.validationQuery);

if(!this.validateOnCheckout)

datasource.setTestOnReturn(true);

else

datasource.setTestOnBorrow(true);

datasource.setValidationQueryTimeout(this.idleConnectionValidationSeconds);

}

}

public String getDriver() {

return driver;

}

public void setDriver(String driver) {

this.driver = driver;

}

public String getURL() {

return URL;

}

public void setURL(String URL) {

this.URL = URL;

}

public String getUser() {

return user;

}

public void setUser(String user) {

this.user = user;

}

public String getPassword() {

return password;

}

public void setPassword(String password) {

this.password = password;

}

public int getMaxConnection() {

return maxConnection;

}

public void setMaxConnection(int maxConnection) {

this.maxConnection = maxConnection;

}

public String getValidationQuery() {

return validationQuery;

}

public void setValidationQuery(String validationQuery) {

this.validationQuery = validationQuery;

}

public boolean isValidateOnCheckout() {

return validateOnCheckout;

}

public void setValidateOnCheckout(boolean validateOnCheckout) {

this.validateOnCheckout = validateOnCheckout;

}

public int getIdleConnectionValidationSeconds() {

return idleConnectionValidationSeconds;

}

public void setIdleConnectionValidationSeconds(int idleConnectionValidationSeconds) {

this.idleConnectionValidationSeconds = idleConnectionValidationSeconds;

}

public DruidDataSource getDatasource() {

return datasource;

}

public void setDatasource(DruidDataSource datasource) {

this.datasource = datasource;

}

public String getDiscardIdleConnectionsSeconds() {

return discardIdleConnectionsSeconds;

}

public void setDiscardIdleConnectionsSeconds(String discardIdleConnectionsSeconds) {

this.discardIdleConnectionsSeconds = discardIdleConnectionsSeconds;

}

}

After creating the class, you also need to set it in the quartz.properties configuration file:

#Database connection pool, set it to druid

org.quartz.dataSource.qzDS.connectionProvider.class=com.example.cluster.quartz.config.DruidConnectionProvider

If it is already configured, you can skip this step.
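The provider above depends on property keys such as `URL` and `maxConnection` being mapped onto `setURL` and `setMaxConnection`. The sketch below shows how that kind of setter injection can work with plain reflection. It is a simplified illustration, not Quartz's actual implementation: `SetterInjectionSketch`, `DemoProvider`, and `inject` are hypothetical names, and only `String`, `int`, and `boolean` setters are handled.

```java
import java.lang.reflect.Method;
import java.util.Properties;

public class SetterInjectionSketch {

    /** Hypothetical stand-in for a connection provider with setters. */
    public static class DemoProvider {
        private String url;
        private int maxConnection;

        public void setURL(String url) { this.url = url; }
        public void setMaxConnection(int maxConnection) { this.maxConnection = maxConnection; }
        public String getURL() { return url; }
        public int getMaxConnection() { return maxConnection; }
    }

    /** For every key "prefix.name", calls setName(value) on the target if such a setter exists. */
    public static void inject(Object target, Properties props, String prefix) {
        for (String key : props.stringPropertyNames()) {
            if (!key.startsWith(prefix + ".")) {
                continue;
            }
            String name = key.substring(prefix.length() + 1);
            if (name.isEmpty()) {
                continue;
            }
            // "URL" -> "setURL", "maxConnection" -> "setMaxConnection"
            String setter = "set" + Character.toUpperCase(name.charAt(0)) + name.substring(1);
            String raw = props.getProperty(key);
            for (Method m : target.getClass().getMethods()) {
                if (!m.getName().equals(setter) || m.getParameterCount() != 1) {
                    continue;
                }
                Class<?> type = m.getParameterTypes()[0];
                try {
                    if (type == String.class) {
                        m.invoke(target, raw);
                    } else if (type == int.class) {
                        m.invoke(target, Integer.parseInt(raw.trim()));
                    } else if (type == boolean.class) {
                        m.invoke(target, Boolean.parseBoolean(raw.trim()));
                    }
                } catch (ReflectiveOperationException e) {
                    throw new IllegalStateException("Could not call " + setter, e);
                }
            }
        }
    }
}
```

This also suggests why the field in DruidConnectionProvider is spelled `URL` in capitals: the setter name has to line up with the property key.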

3.7. Write the concrete Job

public class TfCommandJob implements Job {

private static final Logger log = LoggerFactory.getLogger(TfCommandJob.class);

@Override

public void execute(JobExecutionContext context) {

try {

System.out.println(context.getScheduler().getSchedulerInstanceId() + "--" + new SimpleDateFormat("yyyy-MM-dd HH:mm:ss").format(new Date()));

} catch (SchedulerException e) {

log.error("Task execution failed",e);

}

}

}

3.8. Write the Quartz service layer interface

public interface QuartzJobService {

/**

* Add a task

* @param clazzName

* @param jobName

* @param groupName

* @param cronExp

* @param param

*/

void addJob(String clazzName, String jobName, String groupName, String cronExp, Map<String, Object> param);

/**

* Suspend task

* @param jobName

* @param groupName

*/

void pauseJob(String jobName, String groupName);

/**

* Resume task

* @param jobName

* @param groupName

*/

void resumeJob(String jobName, String groupName);

/**

* Run a scheduled task immediately

* @param jobName

* @param groupName

*/

void runOnce(String jobName, String groupName);

/**

* Update task

* @param jobName

* @param groupName

* @param cronExp

* @param param

*/

void updateJob(String jobName, String groupName, String cronExp, Map<String, Object> param);

/**

* Delete task

* @param jobName

* @param groupName

*/

void deleteJob(String jobName, String groupName);

/**

* Start all tasks

*/

void startAllJobs();

/**

* Pause all tasks

*/

void pauseAllJobs();

/**

* Restore all tasks

*/

void resumeAllJobs();

/**

* Close all tasks

*/

void shutdownAllJobs();

}

The corresponding implementation class QuartzJobServiceImpl is as follows:

@Service

public class QuartzJobServiceImpl implements QuartzJobService {

private static final Logger log = LoggerFactory.getLogger(QuartzJobServiceImpl.class);

@Autowired

private Scheduler scheduler;

@Override

public void addJob(String clazzName, String jobName, String groupName, String cronExp, Map<String, Object> param) {

try {

// Start the scheduler. It has been started by default during initialization

// scheduler.start();

//Build job information

Class<? extends Job> jobClass = (Class<? extends Job>) Class.forName(clazzName);

JobDetail jobDetail = JobBuilder.newJob(jobClass).withIdentity(jobName, groupName).build();

//Expression scheduling Builder (that is, the time when the task is executed)

CronScheduleBuilder scheduleBuilder = CronScheduleBuilder.cronSchedule(cronExp);

//Build a new trigger according to the new cronExpression expression

CronTrigger trigger = TriggerBuilder.newTrigger().withIdentity(jobName, groupName).withSchedule(scheduleBuilder).build();

//Obtain JobDataMap and write data

if (param != null) {

trigger.getJobDataMap().putAll(param);

}

scheduler.scheduleJob(jobDetail, trigger);

} catch (Exception e) {

log.error("Failed to create task", e);

}

}

@Override

public void pauseJob(String jobName, String groupName) {

try {

scheduler.pauseJob(JobKey.jobKey(jobName, groupName));

} catch (SchedulerException e) {

log.error("Failed to suspend task", e);

}

}

@Override

public void resumeJob(String jobName, String groupName) {

try {

scheduler.resumeJob(JobKey.jobKey(jobName, groupName));

} catch (SchedulerException e) {

log.error("Recovery task failed", e);

}

}

@Override

public void runOnce(String jobName, String groupName) {

try {

scheduler.triggerJob(JobKey.jobKey(jobName, groupName));

} catch (SchedulerException e) {

log.error("Failed to run a scheduled task immediately", e);

}

}

@Override

public void updateJob(String jobName, String groupName, String cronExp, Map<String, Object> param) {

try {

TriggerKey triggerKey = TriggerKey.triggerKey(jobName, groupName);

CronTrigger trigger = (CronTrigger) scheduler.getTrigger(triggerKey);

if (cronExp != null) {

// Expression scheduling builder

CronScheduleBuilder scheduleBuilder = CronScheduleBuilder.cronSchedule(cronExp);

// Rebuild the trigger with the new cronExpression expression

trigger = trigger.getTriggerBuilder().withIdentity(triggerKey).withSchedule(scheduleBuilder).build();

}

//Modify map

if (param != null) {

trigger.getJobDataMap().putAll(param);

}

// Reschedule the job with the new trigger

scheduler.rescheduleJob(triggerKey, trigger);

} catch (Exception e) {

log.error("Update task failed", e);

}

}

@Override

public void deleteJob(String jobName, String groupName) {

try {

//Pause, remove, delete

scheduler.pauseTrigger(TriggerKey.triggerKey(jobName, groupName));

scheduler.unscheduleJob(TriggerKey.triggerKey(jobName, groupName));

scheduler.deleteJob(JobKey.jobKey(jobName, groupName));

} catch (Exception e) {

log.error("Delete task failed", e);

}

}

@Override

public void startAllJobs() {

try {

scheduler.start();

} catch (Exception e) {

log.error("Failed to open all tasks", e);

}

}

@Override

public void pauseAllJobs() {

try {

scheduler.pauseAll();

} catch (Exception e) {

log.error("Failed to pause all tasks", e);

}

}

@Override

public void resumeAllJobs() {

try {

scheduler.resumeAll();

} catch (Exception e) {

log.error("Failed to restore all tasks", e);

}

}

@Override

public void shutdownAllJobs() {

try {

if (!scheduler.isShutdown()) {

// Be careful to close the scheduler container

// The scheduler life cycle has ended and cannot be started by start()

scheduler.shutdown(true);

}

} catch (Exception e) {

log.error("Failed to close all tasks", e);

}

}

}

3.9. Write the controller

- First create a request parameter entity class

public class QuartzConfigDTO implements Serializable {

private static final long serialVersionUID = 1L;

/**

* Task name

*/

private String jobName;

/**

* Task group

*/

private String groupName;

/**

* Task execution class

*/

private String jobClass;

/**

* Task scheduling time expression

*/

private String cronExpression;

/**

* Additional parameters

*/

private Map<String, Object> param;

public String getJobName() {

return jobName;

}

public QuartzConfigDTO setJobName(String jobName) {

this.jobName = jobName;

return this;

}

public String getGroupName() {

return groupName;

}

public QuartzConfigDTO setGroupName(String groupName) {

this.groupName = groupName;

return this;

}

public String getJobClass() {

return jobClass;

}

public QuartzConfigDTO setJobClass(String jobClass) {

this.jobClass = jobClass;

return this;

}

public String getCronExpression() {

return cronExpression;

}

public QuartzConfigDTO setCronExpression(String cronExpression) {

this.cronExpression = cronExpression;

return this;

}

public Map<String, Object> getParam() {

return param;

}

public QuartzConfigDTO setParam(Map<String, Object> param) {

this.param = param;

return this;

}

}

- Write the web service interfaces

@RestController

@RequestMapping("/test")

public class TestController {

private static final Logger log = LoggerFactory.getLogger(TestController.class);

@Autowired

private QuartzJobService quartzJobService;

/**

* Add new task

* @param configDTO

* @return

*/

@RequestMapping("/addJob")

public Object addJob(@RequestBody QuartzConfigDTO configDTO) {

quartzJobService.addJob(configDTO.getJobClass(), configDTO.getJobName(), configDTO.getGroupName(), configDTO.getCronExpression(), configDTO.getParam());

return HttpStatus.OK;

}

/**

* Suspend task

* @param configDTO

* @return

*/

@RequestMapping("/pauseJob")

public Object pauseJob(@RequestBody QuartzConfigDTO configDTO) {

quartzJobService.pauseJob(configDTO.getJobName(), configDTO.getGroupName());

return HttpStatus.OK;

}

/**

* Resume task

* @param configDTO

* @return

*/

@RequestMapping("/resumeJob")

public Object resumeJob(@RequestBody QuartzConfigDTO configDTO) {

quartzJobService.resumeJob(configDTO.getJobName(), configDTO.getGroupName());

return HttpStatus.OK;

}

/**

* Run a scheduled task immediately

* @param configDTO

* @return

*/

@RequestMapping("/runOnce")

public Object runOnce(@RequestBody QuartzConfigDTO configDTO) {

quartzJobService.runOnce(configDTO.getJobName(), configDTO.getGroupName());

return HttpStatus.OK;

}

/**

* Update task

* @param configDTO

* @return

*/

@RequestMapping("/updateJob")

public Object updateJob(@RequestBody QuartzConfigDTO configDTO) {

quartzJobService.updateJob(configDTO.getJobName(), configDTO.getGroupName(), configDTO.getCronExpression(), configDTO.getParam());

return HttpStatus.OK;

}

/**

* Delete task

* @param configDTO

* @return

*/

@RequestMapping("/deleteJob")

public Object deleteJob(@RequestBody QuartzConfigDTO configDTO) {

quartzJobService.deleteJob(configDTO.getJobName(), configDTO.getGroupName());

return HttpStatus.OK;

}

/**

* Start all tasks

* @return

*/

@RequestMapping("/startAllJobs")

public Object startAllJobs() {

quartzJobService.startAllJobs();

return HttpStatus.OK;

}

/**

* Pause all tasks

* @return

*/

@RequestMapping("/pauseAllJobs")

public Object pauseAllJobs() {

quartzJobService.pauseAllJobs();

return HttpStatus.OK;

}

/**

* Restore all tasks

* @return

*/

@RequestMapping("/resumeAllJobs")

public Object resumeAllJobs() {

quartzJobService.resumeAllJobs();

return HttpStatus.OK;

}

/**

* Close all tasks

* @return

*/

@RequestMapping("/shutdownAllJobs")

public Object shutdownAllJobs() {

quartzJobService.shutdownAllJobs();

return HttpStatus.OK;

}

}

3.10. Service interface test

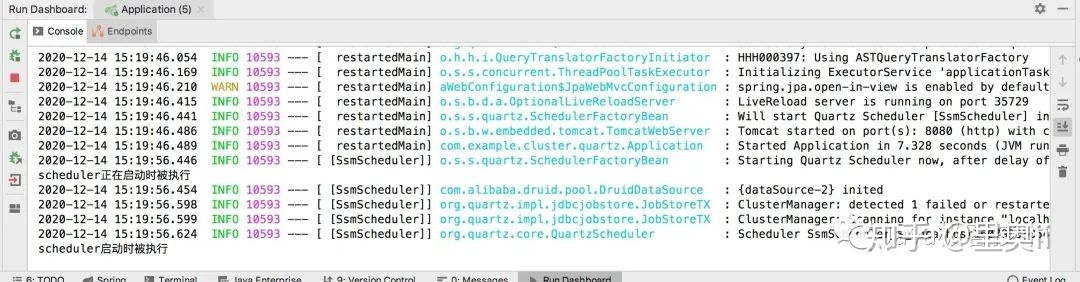

Run the Application class of SpringBoot and start the service!

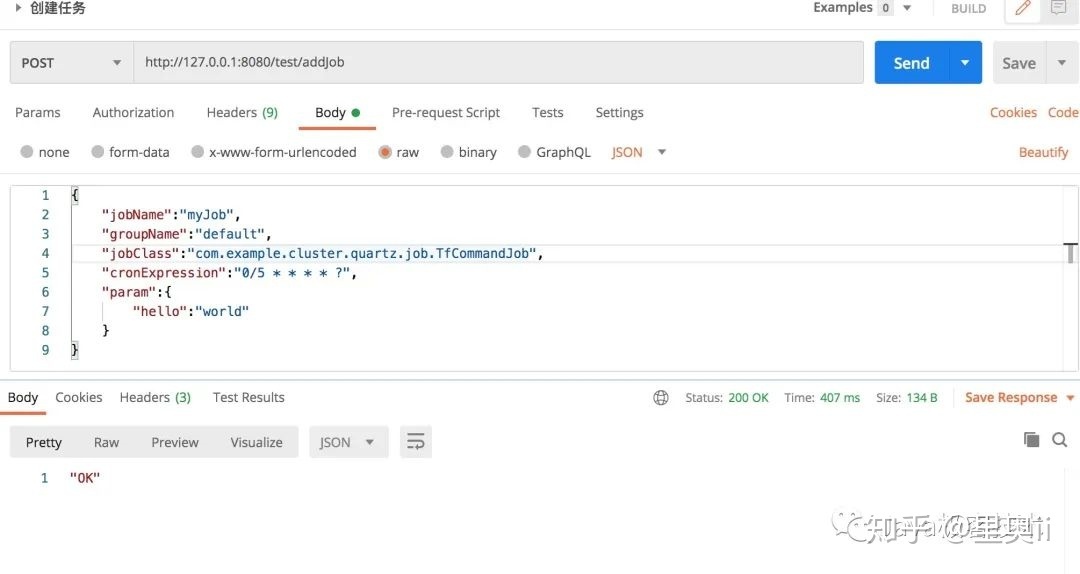

Create a scheduled task that executes every 5 seconds

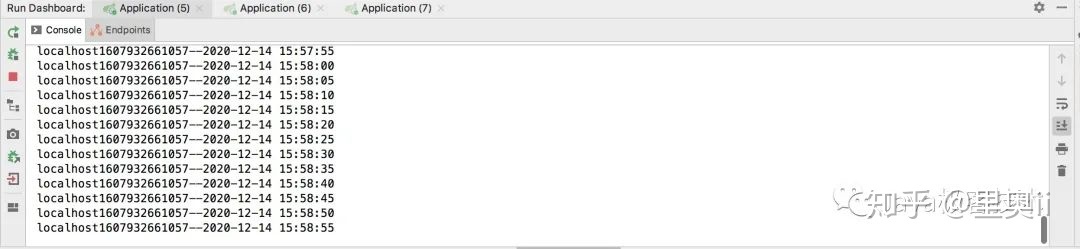
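For readers without the screenshot, the request can be sketched with the JDK's built-in HTTP client (Java 11 or later). The JSON field names follow QuartzConfigDTO above. The job class package `com.example.cluster.quartz.job`, the job and group names, and the base URL are assumptions for illustration; `0/5 * * * * ?` is a Quartz cron expression that fires every 5 seconds.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

public class AddJobRequestSketch {

    /** Builds the JSON body that maps onto QuartzConfigDTO. */
    public static String body(String jobClass, String jobName, String groupName, String cron) {
        return String.format(
                "{\"jobClass\":\"%s\",\"jobName\":\"%s\",\"groupName\":\"%s\",\"cronExpression\":\"%s\"}",
                jobClass, jobName, groupName, cron);
    }

    /** Builds a POST request for the /test/addJob endpoint without sending it. */
    public static HttpRequest request(String baseUrl, String json) {
        return HttpRequest.newBuilder(URI.create(baseUrl + "/test/addJob"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(json))
                .build();
    }

    public static void main(String[] args) throws Exception {
        // Hypothetical values: adjust the class name to the real package of TfCommandJob.
        String json = body("com.example.cluster.quartz.job.TfCommandJob",
                "demoJob", "demoGroup", "0/5 * * * * ?");
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request("http://localhost:8080", json), HttpResponse.BodyHandlers.ofString());
        System.out.println(response.statusCode() + " " + response.body());
    }
}
```

Starting a second instance of the application on another port against the same database is a simple way to observe that each firing is printed by only one instance.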

You can see that the service is running normally!

3.11. Register listeners (optional)

Integrating Quartz listeners in Spring Boot is also straightforward.

- Create task scheduling listener

@Component

public class SimpleSchedulerListener extends SchedulerListenerSupport {

@Override

public void jobScheduled(Trigger trigger) {

System.out.println("Executed when a job is scheduled");

}

@Override

public void jobUnscheduled(TriggerKey triggerKey) {

System.out.println("Executed when a job is unscheduled");

}

@Override

public void triggerFinalized(Trigger trigger) {

System.out.println("Executed when a trigger is finalized and will never fire again");

}

@Override

public void triggerPaused(TriggerKey triggerKey) {

System.out.println(triggerKey + "(A trigger) is executed when suspended");

}

@Override

public void triggersPaused(String triggerGroup) {

System.out.println(triggerGroup + " (a trigger group) was paused");

}

@Override

public void triggerResumed(TriggerKey triggerKey) {

System.out.println(triggerKey + "(A trigger) is executed when it is restored");

}

@Override

public void triggersResumed(String triggerGroup) {

System.out.println(triggerGroup + " (a trigger group) was resumed");

}

@Override

public void jobAdded(JobDetail jobDetail) {

System.out.println("A JobDetail was added dynamically");

}

@Override

public void jobDeleted(JobKey jobKey) {

System.out.println(jobKey + "Executed when deleted");

}

@Override

public void jobPaused(JobKey jobKey) {

System.out.println(jobKey + "Executed when suspended");

}

@Override

public void jobsPaused(String jobGroup) {

System.out.println(jobGroup + "(A group of tasks) is executed when suspended");

}

@Override

public void jobResumed(JobKey jobKey) {

System.out.println(jobKey + "Executed when restored");

}

@Override

public void jobsResumed(String jobGroup) {

System.out.println(jobGroup + "(A set of tasks) is executed when restored");

}

@Override

public void schedulerError(String msg, SchedulerException cause) {

System.out.println("Executed when a scheduler error occurs: " + msg);

cause.printStackTrace();

}

@Override

public void schedulerInStandbyMode() {

System.out.println("Executed when the scheduler is put into standby mode");

}

@Override

public void schedulerStarted() {

System.out.println("Executed after the scheduler has started");

}

@Override

public void schedulerStarting() {

System.out.println("Executed while the scheduler is starting");

}

@Override

public void schedulerShutdown() {

System.out.println("Executed after the scheduler has shut down");

}

@Override

public void schedulerShuttingdown() {

System.out.println("Executed while the scheduler is shutting down");

}

@Override

public void schedulingDataCleared() {

System.out.println("Executed when all scheduler data (jobs, triggers and calendars) has been cleared");

}

}

- Create task trigger listener

@Component

public class SimpleTriggerListener extends TriggerListenerSupport {

/**

* The name of the trigger listener

* @return

*/

@Override

public String getName() {

return "mySimpleTriggerListener";

}

/**

* Called when the trigger fires and its associated job is about to be executed

* @param trigger

* @param context

*/

@Override

public void triggerFired(Trigger trigger, JobExecutionContext context) {

System.out.println("myTriggerListener.triggerFired()");

}

/**

* Called when the trigger has fired and its associated job is about to be executed. The TriggerListener can veto the execution of the job here: if true is returned, this execution of the job is skipped

* @param trigger

* @param context

* @return

*/

@Override

public boolean vetoJobExecution(Trigger trigger, JobExecutionContext context) {

System.out.println("myTriggerListener.vetoJobExecution()");

return false;

}

/**

* Called when the trigger misfires. For example, many triggers are due at the same time but all worker threads in the thread pool are busy,

* so some triggers may time out and miss this round of firing.

* @param trigger

*/

@Override

public void triggerMisfired(Trigger trigger) {

System.out.println("myTriggerListener.triggerMisfired()");

}

/**

* Called when the trigger has fired and its job has finished executing

* @param trigger

* @param context

* @param triggerInstructionCode

*/

@Override

public void triggerComplete(Trigger trigger, JobExecutionContext context, Trigger.CompletedExecutionInstruction triggerInstructionCode) {

System.out.println("myTriggerListener.triggerComplete()");

}

}

- Create task execution listener

@Component

public class SimpleJobListener extends JobListenerSupport {

/**

* The name of the job listener

* @return

*/

@Override

public String getName() {

return "mySimpleJobListener";

}

/**

* Called just before the job is executed

* @param context

*/

@Override

public void jobToBeExecuted(JobExecutionContext context) {

System.out.println("simpleJobListener: about to execute: " + context.getJobDetail().getKey());

}

/**

* Called when the job execution was vetoed by a trigger listener

* @param context

*/

@Override

public void jobExecutionVetoed(JobExecutionContext context) {

System.out.println("simpleJobListener: execution vetoed: " + context.getJobDetail().getKey());

}

/**

* Called after the job has been executed

* @param context

* @param jobException

*/

@Override

public void jobWasExecuted(JobExecutionContext context, JobExecutionException jobException) {

System.out.println("simpleJobListener: finished executing: " + context.getJobDetail().getKey());

}

}

- Finally, register the listeners with the Scheduler

@Autowired

private SimpleSchedulerListener simpleSchedulerListener;

@Autowired

private SimpleJobListener simpleJobListener;

@Autowired

private SimpleTriggerListener simpleTriggerListener;

@Bean(name = "scheduler")

public Scheduler scheduler() throws IOException, SchedulerException {

Scheduler scheduler = schedulerFactoryBean().getScheduler();

// Register the listeners with the scheduler

// Add the SchedulerListener

scheduler.getListenerManager().addSchedulerListener(simpleSchedulerListener);

// Add the JobListener; the matcher restricts it to the job "myJob" in group "myGroup"

scheduler.getListenerManager().addJobListener(simpleJobListener, KeyMatcher.keyEquals(JobKey.jobKey("myJob", "myGroup")));

// Add the TriggerListener globally, for all triggers

scheduler.getListenerManager().addTriggerListener(simpleTriggerListener, EverythingMatcher.allTriggers());

return scheduler;

}

3.12 Project data source (optional)

In the Quartz data source configuration above, we used a custom data source so that Quartz is decoupled from the project's own data source. Some readers may not want to set up a separate database and would rather have Quartz share the project's data source. That configuration is also very simple!

- In the quartz.properties configuration file, remove the org.quartz.jobStore.dataSource configuration

# Comment out the Quartz data source configuration

#org.quartz.jobStore.dataSource=qzDS

- Add the dataSource data source to the QuartzConfig configuration class and inject it into Quartz

@Autowired

private DataSource dataSource;

@Bean

public SchedulerFactoryBean schedulerFactoryBean() throws IOException {

//...

SchedulerFactoryBean factory = new SchedulerFactoryBean();

factory.setQuartzProperties(propertiesFactoryBean.getObject());

// Use the project's data source instead of the custom Quartz data source

factory.setDataSource(dataSource);

//...

return factory;

}

4, Task scheduling test

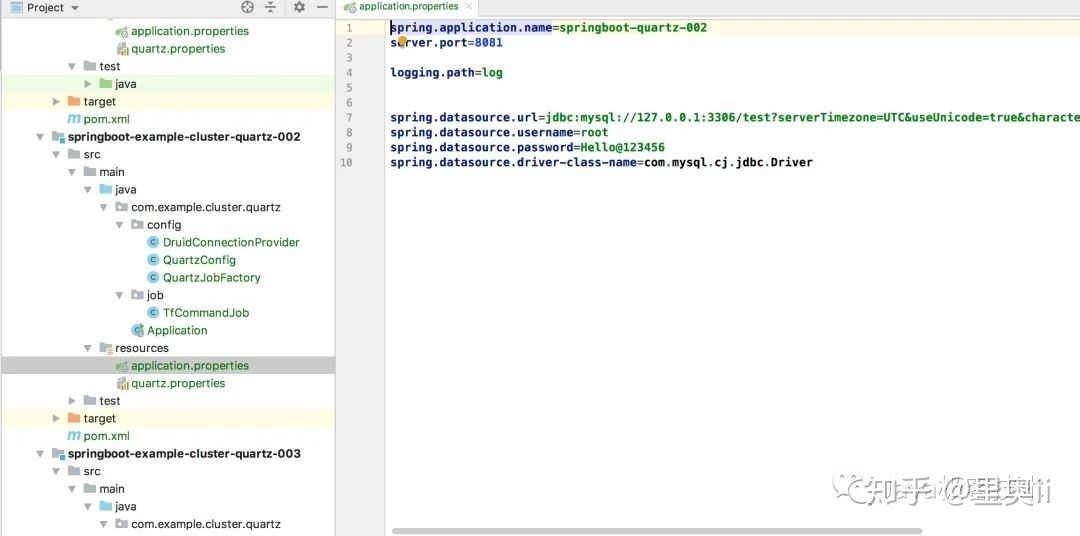

In a real deployment, projects run as a cluster. To stay consistent with the production environment, we will create two more projects and test whether Quartz achieves distributed scheduling in a cluster environment, that is, whether each scheduled task runs on only one machine at a time.

In theory, we only need to copy the newly created project and change the port number to test locally!

Because only one CRUD service is needed, the copies do not need the add, delete, and modify services such as QuartzJobService. We only need to keep QuartzConfig, DruidConnectionProvider, QuartzJobFactory, TfCommandJob, and the quartz.properties configuration identical across the projects!
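Since every copy shares the same quartz.properties, a handful of settings decide whether the copies really form one cluster. The following is a minimal sketch of such a check, not part of the project itself. The property keys are standard Quartz settings, but the class `ClusterConfigCheck`, its methods, and the sample value `clusterQuartz` are hypothetical names chosen for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

public class ClusterConfigCheck {

    /** Lists settings that would keep a single node from joining a cluster. */
    static List<String> problems(Properties p) {
        List<String> found = new ArrayList<>();
        String clustered = p.getProperty("org.quartz.jobStore.isClustered", "false").trim();
        if (!"true".equalsIgnoreCase(clustered)) {
            found.add("org.quartz.jobStore.isClustered should be true");
        }
        String id = p.getProperty("org.quartz.scheduler.instanceId", "").trim();
        if (id.isEmpty()) {
            found.add("org.quartz.scheduler.instanceId should be set, for example to AUTO");
        }
        return found;
    }

    /** Two nodes share a cluster when the scheduler names match and the ids cannot clash. */
    static boolean canCoexist(Properties a, Properties b) {
        String nameA = a.getProperty("org.quartz.scheduler.instanceName", "");
        String nameB = b.getProperty("org.quartz.scheduler.instanceName", "");
        String idA = a.getProperty("org.quartz.scheduler.instanceId", "");
        String idB = b.getProperty("org.quartz.scheduler.instanceId", "");
        // AUTO lets Quartz generate a unique id per node; fixed ids must differ.
        boolean idsUnique = "AUTO".equals(idA) || !idA.equals(idB);
        return !nameA.isEmpty() && nameA.equals(nameB) && idsUnique;
    }

    /** Builds sample settings for one node (values are illustrative only). */
    static Properties node(String instanceId) {
        Properties p = new Properties();
        p.setProperty("org.quartz.scheduler.instanceName", "clusterQuartz");
        p.setProperty("org.quartz.scheduler.instanceId", instanceId);
        p.setProperty("org.quartz.jobStore.isClustered", "true");
        return p;
    }

    public static void main(String[] args) {
        System.out.println(problems(node("AUTO")));                  // no problems found
        System.out.println(canCoexist(node("AUTO"), node("AUTO")));  // copies with AUTO ids
        System.out.println(canCoexist(node("n1"), node("n1")));      // copied fixed id clashes
    }
}
```

With `instanceId` set to `AUTO`, an unchanged copy of the file works on every node; with a fixed id, each copy would need its own value.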

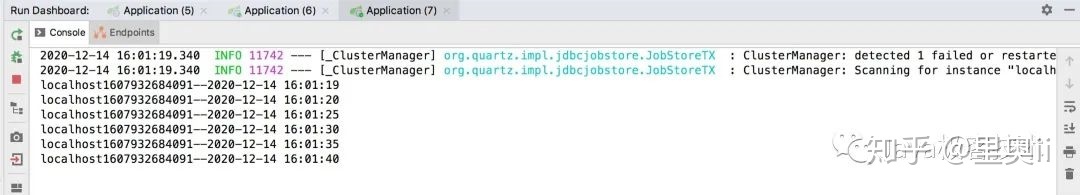

- Start the services quartz-001, quartz-002 and quartz-003 in turn to see the effect

The service started first, quartz-001, loads the scheduled tasks already configured in the database and runs them. The other two services, quartz-002 and quartz-003, do not actively schedule any tasks.

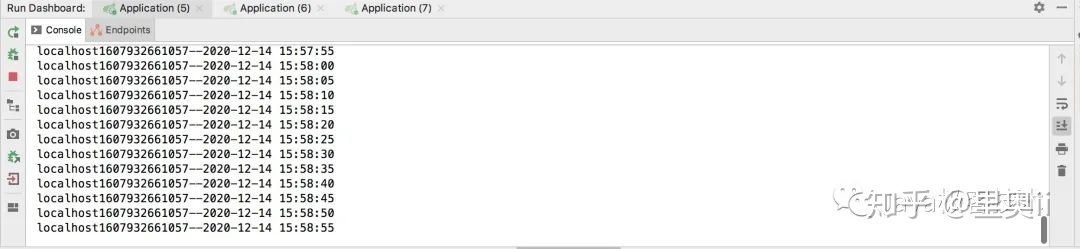

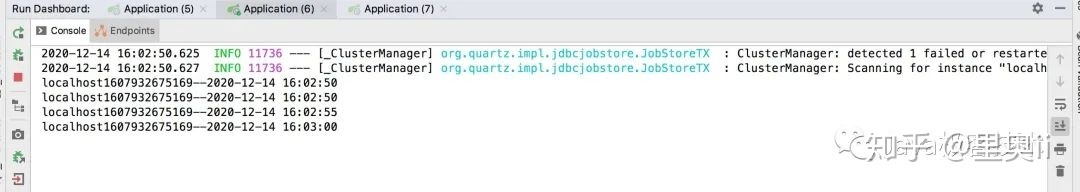

- When we shut down quartz-001, the quartz-002 service takes over task scheduling

- When we then shut down quartz-002, the quartz-003 service takes over task scheduling in the same way

The final result matches what we expected!
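The takeover seen in this test follows from the nodes coordinating through shared database state guarded by a lock. The sketch below is a simplified in-memory model of that idea, not Quartz code: a `ReentrantLock` and a map stand in for the database lock and tables, and `SharedStore`, `Node`, and `fire` are hypothetical names. It only shows that each firing is claimed by exactly one running node and that another node claims the next firing once the previous one is shut down.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.locks.ReentrantLock;

public class ClusterFailoverSketch {

    /** Stand-in for the shared database tables and lock (hypothetical, not a Quartz class). */
    static final class SharedStore {
        private final ReentrantLock lock = new ReentrantLock();
        private final Map<String, String> claimedBy = new HashMap<>();

        /** Returns true only for the first node that claims a given firing. */
        boolean claim(String firingId, String nodeName) {
            lock.lock();
            try {
                return claimedBy.putIfAbsent(firingId, nodeName) == null;
            } finally {
                lock.unlock();
            }
        }
    }

    /** One application instance, such as quartz-001. */
    static final class Node {
        final String name;
        boolean running = true;

        Node(String name) {
            this.name = name;
        }
    }

    /** Every running node tries to claim the firing; returns the nodes that executed it. */
    static List<String> fire(SharedStore store, List<Node> nodes, String firingId) {
        List<String> executedOn = new ArrayList<>();
        for (Node node : nodes) {
            if (node.running && store.claim(firingId, node.name)) {
                executedOn.add(node.name);
            }
        }
        return executedOn;
    }

    public static void main(String[] args) {
        SharedStore store = new SharedStore();
        List<Node> nodes = Arrays.asList(
                new Node("quartz-001"), new Node("quartz-002"), new Node("quartz-003"));

        System.out.println(fire(store, nodes, "firing-1")); // prints [quartz-001]
        nodes.get(0).running = false;                       // shut down quartz-001
        System.out.println(fire(store, nodes, "firing-2")); // prints [quartz-002]
        nodes.get(1).running = false;                       // shut down quartz-002
        System.out.println(fire(store, nodes, "firing-3")); // prints [quartz-003]
    }
}
```

The model is sequential, so the first running node in the list always wins the claim. In a real cluster, which node wins a given firing depends on timing.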

5, Summary

This article focuses on implementing persistent, distributed task scheduling with Spring Boot + Quartz + MySQL. All of the code has been tested.