Preface

Spring Cloud Ribbon is a set of client-side load balancing tools based on Netflix Ribbon.

In short, Ribbon is an open source project released by Netflix. Its main function is to provide client-side software load balancing algorithms and service calls. The Ribbon client component offers a full set of configuration options, such as connection timeouts and retries.

Simply put, you list all the machines behind the load balancer (LB) in a configuration file, and Ribbon automatically connects to them according to a rule (such as simple round robin or random selection). Ribbon also makes it easy to implement a custom load balancing algorithm.

Load balancing

At present, most internet systems use server clusters: the same service is deployed on multiple servers that together provide the service. These can be web application server clusters, database server clusters, distributed cache server clusters, and so on. However, a website exposes a single entry point, such as www.baidu.com. When a user enters www.baidu.com in the browser, the job of load balancing is to distribute that user's requests across the different machines in the cluster.

In practice, a component responsible for load balancing sits in front of the cluster. It acts as the traffic entry point for clients, forwards each client request to a real server for processing, and makes that forwarding transparent to the client.

Software load balancing solves two core problems: which server to choose, and how to forward the request to it. The best-known implementation is LVS (Linux Virtual Server).

Load balancing classification

Broadly speaking, load balancers can be divided into three categories, including DNS load balancing, hardware load balancing and software load balancing.

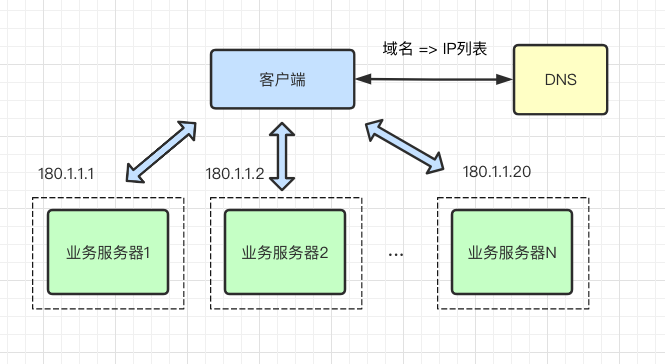

DNS load balancing

DNS load balancing is the most basic and simple approach. DNS resolves one domain name to multiple IPs, and each IP corresponds to a different server instance. This is enough to schedule traffic: no conventional load balancer is involved, yet a simple form of load balancing is achieved.
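To make the "one name, several IPs" idea concrete, here is a minimal Java sketch. `DnsRotation` and its methods are hypothetical names chosen for illustration; `resolveAll` needs network access, while `next` only rotates over a list that has already been resolved. In real DNS load balancing the rotation is usually done by the DNS server, so treat this as an illustration of the mapping rather than a description of any particular resolver.

```java
import java.net.InetAddress;
import java.net.UnknownHostException;
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical helper: shows that one host name can map to several addresses. */
final class DnsRotation {
    private final AtomicInteger cursor = new AtomicInteger();

    /** Returns every IP address published for the host (requires network access). */
    static List<String> resolveAll(String host) throws UnknownHostException {
        List<String> ips = new ArrayList<>();
        for (InetAddress address : InetAddress.getAllByName(host)) {
            ips.add(address.getHostAddress());
        }
        return ips;
    }

    /** Picks the next address in rotation from an already resolved list. */
    String next(List<String> resolvedIps) {
        if (resolvedIps.isEmpty()) {
            throw new IllegalArgumentException("no addresses resolved");
        }
        // floorMod keeps the index non-negative even if the cursor overflows.
        int index = Math.floorMod(cursor.getAndIncrement(), resolvedIps.size());
        return resolvedIps.get(index);
    }
}
```

A caller would resolve the name once, for example `DnsRotation.resolveAll("www.baidu.com")`, and then call `next` for each outgoing request.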

The greatest advantages of DNS load balancing are simple implementation and low cost: there is no load balancing equipment to develop or maintain. It does have some disadvantages:

Failover is slow and server upgrades are inconvenient. There are several layers of cache between DNS and users. Even if a failed server's record is modified or removed promptly, the change still has to pass through the DNS caches of intermediate operators, which may not honor the TTL. As a result, the change takes effect very slowly, and some requests can still reach the old address a day later.

Traffic scheduling is unbalanced and coarse-grained. How evenly DNS distributes traffic depends on the policy each regional operator's local DNS uses to return the IP list; some operators do not rotate through the different IP addresses. In addition, the number of users behind a given operator's local DNS varies, which is another major cause of uneven traffic.

The traffic allocation strategy is too simple and too few algorithms are supported. DNS generally supports only round robin (rr) and does not support scheduling algorithms such as weighting or hashing.

The number of IP addresses that DNS can return is limited. DNS uses UDP messages, and the size of each UDP message is limited by the link MTU, so the number of IP addresses that fit in one message is also limited. Alibaba's DNS system, for example, supports configuring 10 different IP addresses for the same domain name.
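For comparison, the weight-based and hash-based scheduling that plain DNS round robin lacks can be sketched in a few lines of Java. This is only an illustration: `WeightAndHashPicker`, its method names, and the idea of passing a running ticket number are assumptions made for this example, not part of any DNS server or of Ribbon.

```java
import java.util.List;

/** Hypothetical sketch of two strategies that plain DNS round robin does not offer. */
final class WeightAndHashPicker {

    /**
     * Weighted pick: server i owns weights[i] consecutive slots out of the total,
     * and the ticket number (for example a request counter) selects a slot.
     */
    static String pickWeighted(List<String> servers, int[] weights, int ticket) {
        int total = 0;
        for (int weight : weights) {
            total += weight;
        }
        if (total <= 0 || servers.size() != weights.length) {
            throw new IllegalArgumentException("weights must match servers and sum to > 0");
        }
        int slot = Math.floorMod(ticket, total);
        for (int i = 0; i < weights.length; i++) {
            if (slot < weights[i]) {
                return servers.get(i);
            }
            slot -= weights[i];
        }
        throw new IllegalStateException("unreachable when all weights are non-negative");
    }

    /** Hash pick: the same client IP always maps to the same server. */
    static String pickByHash(List<String> servers, String clientIp) {
        return servers.get(Math.floorMod(clientIp.hashCode(), servers.size()));
    }
}
```

With weights `{3, 1}`, three out of every four tickets go to the first server. The hash variant keeps a given client on one server as long as the server list does not change.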

Hardware load balancing

Hardware load balancing implements load balancing with dedicated hardware devices. Two typical hardware load balancers in the industry are F5 and A10.

Such equipment offers strong performance and rich functionality, but it is very expensive. Generally only companies with deep pockets use it. Small and medium-sized companies usually cannot afford it, and because their business volume is not that large, the equipment would also be wasted on them.

Advantages of hardware load balancing:

Full-featured: supports load balancing at all layers and a comprehensive set of load balancing algorithms.

High performance: far outperforms common software load balancers.

High stability: commercial hardware load balancers are rigorously tested and have proven stable in large-scale use.

Security protection: also provides firewall, anti-DDoS, and other security functions, and supports SNAT.

The disadvantages of hardware load balancing are also obvious:

Expensive;

Poor scalability: it cannot be extended or customized;

Commissioning and maintenance are troublesome and require specialists.

Software load balancing

Software load balancing runs load balancing software on ordinary servers. Common options are Nginx, HAProxy, and LVS. They differ as follows:

Nginx: layer 7 load balancing with support for the HTTP and e-mail protocols; it also supports layer 4 load balancing;

HAProxy: supports layer 7 rules and also performs very well. It is the default load balancing software used by OpenStack;

LVS: runs in kernel space and has the highest performance among software load balancers. Strictly speaking, it works at layer 4, so it is more general and applies to all kinds of application services.

Advantages of software load balancing:

Easy to operate: both deployment and maintenance are relatively simple;

Cheap: only the cost of the server is needed, and the software is free;

Flexible: layer 4 and layer 7 load balancing can be selected according to business characteristics to facilitate expansion and customization.

Local and global load balancing

By the geographical scope of its application, load balancing is divided into local load balancing and global load balancing. Local load balancing balances load across a local server group. Global load balancing balances load across server groups that sit in different geographical locations and have different network structures.

Local load balancing effectively addresses excessive data traffic and heavy network load without the expense of purchasing high-end servers. It makes full use of existing equipment and avoids the traffic loss caused by a server being a single point of failure.

It offers flexible and diverse balancing strategies to distribute traffic sensibly across the servers in the cluster. Expanding or upgrading capacity only requires adding a new server to the service group, without changing the existing network structure or stopping the existing service.

Global load balancing is mainly used by sites that have their own servers in multiple regions, so that users around the world can reach the nearest server through a single IP address or domain name and get the fastest access. It can also be used by large companies with scattered subsidiaries and widely distributed sites to allocate resources in a unified, rational way over the corporate intranet.

Ribbon

Characteristics

Ribbon local (client-side) load balancing vs. Nginx server-side load balancing

Nginx performs server-side load balancing. All client requests are sent to Nginx, which then forwards them. In other words, load balancing is implemented on the server side.

Ribbon performs local load balancing. When calling a microservice interface, it fetches the list of registered service instances from the registry and caches it locally in the JVM, so that the instance for the remote (RPC) call is chosen locally.

Centralized LB

An independent LB facility (hardware such as F5, or software such as Nginx) sits between the service consumer and the service provider and forwards access requests to the provider according to some policy.

In-process LB

The LB logic is integrated into the consumer. The consumer learns from the service registry which addresses are available and then selects a suitable server from them.

Ribbon is an in-process LB: it is just a class library integrated into the consumer process, through which the consumer obtains the address of a service provider.
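The in-process idea can be sketched without any framework. In the sketch below, `InProcessBalancer` and the `Supplier` that stands in for the service registry are hypothetical names for illustration, not Ribbon classes. The consumer refreshes its cached address list from the registry and then chooses a server locally, with no extra network hop through a central LB.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Supplier;

/** Hypothetical in-process balancer: the consumer caches the registry's list and picks locally. */
final class InProcessBalancer {
    private final Supplier<List<String>> registry; // stand-in for a real service registry client
    private final AtomicInteger counter = new AtomicInteger();
    private volatile List<String> cached = Collections.emptyList();

    InProcessBalancer(Supplier<List<String>> registry) {
        this.registry = registry;
    }

    /** Pulls the current address list from the registry into the local cache. */
    void refresh() {
        cached = Collections.unmodifiableList(new ArrayList<>(registry.get()));
    }

    /** Chooses an address from the local cache in rotation; returns null if the cache is empty. */
    String choose() {
        List<String> snapshot = cached;
        if (snapshot.isEmpty()) {
            return null;
        }
        return snapshot.get(Math.floorMod(counter.getAndIncrement(), snapshot.size()));
    }
}
```

A real client would call `refresh` periodically so that the cache follows registrations and removals; the sketch leaves that scheduling out.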

In short, Ribbon = load balancing + RestTemplate call.
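Conceptually, "load balancing + call" means the caller addresses a service by its logical name and a concrete instance is substituted before the HTTP request is sent. The plain-Java sketch below illustrates only that substitution step. `ServiceUrlResolver`, the service name `payment-service`, and the request path are made up for this example, and the code is not how Spring's RestTemplate integration is actually implemented.

```java
import java.net.URI;
import java.util.List;

/** Hypothetical sketch: swap a logical service name for a chosen instance address. */
final class ServiceUrlResolver {

    /**
     * Replaces the host part of a logical URI (such as http://payment-service/...)
     * with one "host:port" entry picked in rotation by request number.
     */
    static URI resolve(URI logical, List<String> instances, int requestNumber) {
        if (instances.isEmpty()) {
            throw new IllegalArgumentException("no instances available");
        }
        String chosen = instances.get(Math.floorMod(requestNumber, instances.size()));
        String query = logical.getRawQuery() == null ? "" : "?" + logical.getRawQuery();
        return URI.create(logical.getScheme() + "://" + chosen + logical.getRawPath() + query);
    }
}
```

The resulting URI can then be handed to any HTTP client, which is the "call" half of the equation.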

Load balancing algorithm

com.netflix.loadbalancer.RoundRobinRule: round robin (polling)

com.netflix.loadbalancer.RandomRule: random policy

com.netflix.loadbalancer.RetryRule: retry policy

com.netflix.loadbalancer.WeightedResponseTimeRule: response-time weighting policy

com.netflix.loadbalancer.BestAvailableRule: best-available policy

com.netflix.loadbalancer.AvailabilityFilteringRule: filters out failed instances first, then selects among the instances with lower concurrency
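The last description above (drop failed instances, then prefer low concurrency) can be illustrated with a small standalone sketch. `LeastConcurrentPicker` and its `Instance` type are hypothetical and follow only the one-line description in this list; this is not the code of Ribbon's own rule classes, which track server statistics in their own way.

```java
import java.util.List;

/** Hypothetical sketch: skip failed instances, then pick the one with the fewest active requests. */
final class LeastConcurrentPicker {

    /** Minimal stand-in for a server with health and concurrency information. */
    static final class Instance {
        final String address;
        final boolean failed;
        final int activeRequests;

        Instance(String address, boolean failed, int activeRequests) {
            this.address = address;
            this.failed = failed;
            this.activeRequests = activeRequests;
        }
    }

    /** Returns the healthy instance with the lowest concurrency, or null if none is healthy. */
    static Instance choose(List<Instance> instances) {
        Instance best = null;
        for (Instance candidate : instances) {
            if (candidate.failed) {
                continue; // filter step: ignore instances marked as failed
            }
            if (best == null || candidate.activeRequests < best.activeRequests) {
                best = candidate; // selection step: keep the least busy instance seen so far
            }
        }
        return best;
    }
}
```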

Principle of polling algorithm

Default round-robin algorithm: (number of requests to the REST interface) % (total number of servers in the cluster) = index of the server actually called. The request count restarts from 1 each time the service restarts.

For example:

instances[0] = 127.0.0.1:8002

instances[1] = 127.0.0.1:8001

8001 and 8002 form a cluster of 2 machines, so the cluster size is 2. By the round-robin principle:

When the request count is 1: 1 % 2 = 1, the index is 1, and the service address is 127.0.0.1:8001

When the request count is 2: 2 % 2 = 0, the index is 0, and the service address is 127.0.0.1:8002

When the request count is 3: 3 % 2 = 1, the index is 1, and the service address is 127.0.0.1:8001

When the request count is 4: 4 % 2 = 0, the index is 0, and the service address is 127.0.0.1:8002
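The arithmetic above can be checked with a few lines of Java. `PollingExample` is a hypothetical name used only for this illustration; the instance order is the one given in the example (index 0 is port 8002, index 1 is port 8001).

```java
import java.util.Arrays;
import java.util.List;

/** Hypothetical check of the worked example: request count % cluster size = server index. */
final class PollingExample {
    // Same order as in the example above.
    static final List<String> INSTANCES = Arrays.asList("127.0.0.1:8002", "127.0.0.1:8001");

    /** Returns the address served for the n-th request (n starts at 1). */
    static String serverFor(int requestNumber) {
        return INSTANCES.get(requestNumber % INSTANCES.size());
    }

    public static void main(String[] args) {
        for (int n = 1; n <= 4; n++) {
            System.out.println(n + " -> " + serverFor(n));
        }
        // prints:
        // 1 -> 127.0.0.1:8001
        // 2 -> 127.0.0.1:8002
        // 3 -> 127.0.0.1:8001
        // 4 -> 127.0.0.1:8002
    }
}
```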

Polling algorithm source code:

```java
public interface IRule{

    /*
     * choose one alive server from lb.allServers or
     * lb.upServers according to key
     *
     * @return choosen Server object. NULL is returned if none
     * server is available
     */
    // Focus on this method
    public Server choose(Object key);

    public void setLoadBalancer(ILoadBalancer lb);

    public ILoadBalancer getLoadBalancer();
}
```

```java
package com.netflix.loadbalancer;

import com.netflix.client.config.IClientConfig;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

/**
 * The most well known and basic load balancing strategy, i.e. Round Robin Rule.
 *
 * @author stonse
 * @author Nikos Michalakis <nikos@netflix.com>
 *
 */
public class RoundRobinRule extends AbstractLoadBalancerRule {

    private AtomicInteger nextServerCyclicCounter;
    private static final boolean AVAILABLE_ONLY_SERVERS = true;
    private static final boolean ALL_SERVERS = false;

    private static Logger log = LoggerFactory.getLogger(RoundRobinRule.class);

    public RoundRobinRule() {
        nextServerCyclicCounter = new AtomicInteger(0);
    }

    public RoundRobinRule(ILoadBalancer lb) {
        this();
        setLoadBalancer(lb);
    }

    // Focus on this method.
    public Server choose(ILoadBalancer lb, Object key) {
        if (lb == null) {
            log.warn("no load balancer");
            return null;
        }

        Server server = null;
        int count = 0;
        while (server == null && count++ < 10) {
            List<Server> reachableServers = lb.getReachableServers();
            List<Server> allServers = lb.getAllServers();
            int upCount = reachableServers.size();
            int serverCount = allServers.size();

            if ((upCount == 0) || (serverCount == 0)) {
                log.warn("No up servers available from load balancer: " + lb);
                return null;
            }

            int nextServerIndex = incrementAndGetModulo(serverCount);
            server = allServers.get(nextServerIndex);

            if (server == null) {
                /* Transient. */
                Thread.yield();
                continue;
            }

            if (server.isAlive() && (server.isReadyToServe())) {
                return (server);
            }

            // Next.
            server = null;
        }

        if (count >= 10) {
            log.warn("No available alive servers after 10 tries from load balancer: "
                    + lb);
        }
        return server;
    }

    /**
     * Inspired by the implementation of {@link AtomicInteger#incrementAndGet()}.
     *
     * @param modulo The modulo to bound the value of the counter.
     * @return The next value.
     */
    private int incrementAndGetModulo(int modulo) {
        for (;;) {
            int current = nextServerCyclicCounter.get();
            int next = (current + 1) % modulo; // Remainder method
            if (nextServerCyclicCounter.compareAndSet(current, next))
                return next;
        }
    }

    @Override
    public Server choose(Object key) {
        return choose(getLoadBalancer(), key);
    }

    @Override
    public void initWithNiwsConfig(IClientConfig clientConfig) {
    }
}
```
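The compare-and-set loop in `incrementAndGetModulo` is what keeps the rotation correct when many threads call `choose` at once. The standalone sketch below copies only that loop into a hypothetical class, `CyclicCounterDemo`, and calls it from several threads. The thread counts and the `hitsPerIndex` helper are assumptions made for this demonstration and do not exist in Ribbon.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicIntegerArray;

/** Hypothetical demo of the CAS-based cyclic counter used by the round-robin rule. */
final class CyclicCounterDemo {
    private final AtomicInteger counter = new AtomicInteger(0);

    /** Same loop as in the rule above: retry until this thread wins the compare-and-set. */
    int incrementAndGetModulo(int modulo) {
        for (;;) {
            int current = counter.get();
            int next = (current + 1) % modulo;
            if (counter.compareAndSet(current, next)) {
                return next;
            }
        }
    }

    /** Calls the counter from several threads and counts how often each index was returned. */
    static int[] hitsPerIndex(int threads, int callsPerThread, int modulo) {
        CyclicCounterDemo demo = new CyclicCounterDemo();
        AtomicIntegerArray hits = new AtomicIntegerArray(modulo);
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < callsPerThread; i++) {
                    hits.incrementAndGet(demo.incrementAndGetModulo(modulo));
                }
            });
            workers[t].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        int[] result = new int[modulo];
        for (int i = 0; i < modulo; i++) {
            result[i] = hits.get(i);
        }
        return result;
    }
}
```

Because every successful compare-and-set advances the counter by exactly one step in the cycle, the indices are handed out evenly whenever the total number of calls is a multiple of the modulo. The first call on a fresh counter returns 1, not 0, which matches the worked example where the first request lands on index 1.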

Analysis of Ribbon principle

Reference

cnblogs.com/kingreatwill/p/7991151.html#/cnblog/works/article/7991151