7. Hystrix circuit breaker

7.1 general

7.1.1 problems faced by distributed system

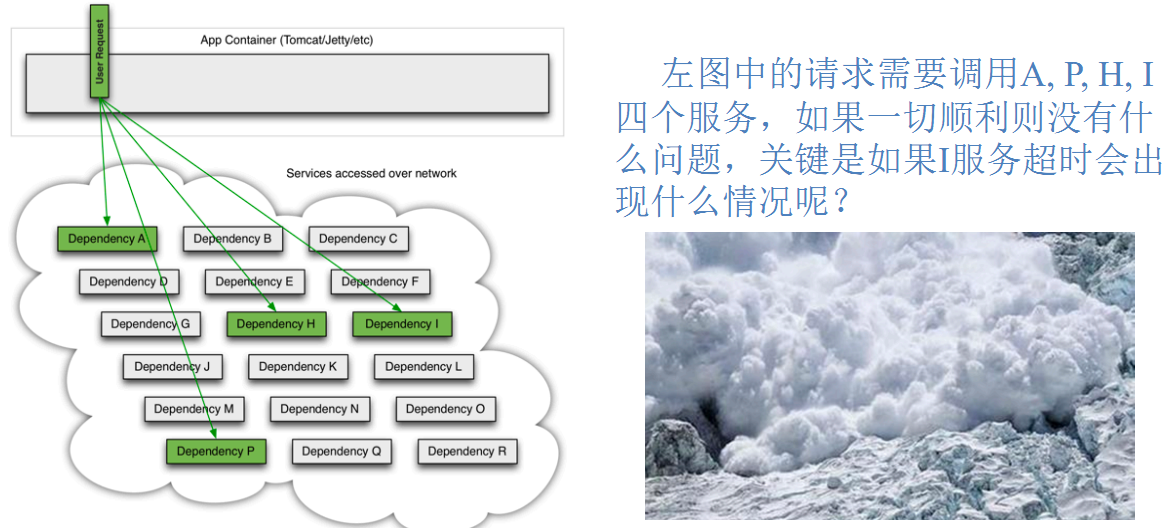

Applications in complex distributed architecture have dozens of dependencies, and each dependency will inevitably fail at some time.

Service avalanche

When microservices call one another, suppose microservice A calls microservices B and C, which in turn call other microservices. This is the so-called "fan out". If one microservice on the fan-out link responds too slowly or is unavailable, calls to microservice A occupy more and more system resources until the system crashes. This is the so-called "avalanche effect".

For high-traffic applications, a single back-end dependency can saturate all resources on all servers within seconds. Worse than outright failure, such a dependency can also increase latency between services and back up queues, threads, and other system resources, causing further cascading failures across the whole system. All of this shows the need to isolate and manage failures and latency so that the failure of a single dependency cannot take down the entire application or system.

In practice, when one instance of a module fails, the module usually keeps receiving traffic, and the faulty module goes on calling other modules. That is how a cascading failure, or avalanche, arises.

7.1.2 what is it

Hystrix is an open-source library for handling latency and fault tolerance in distributed systems. In a distributed system, many calls to dependencies inevitably fail, for example through timeouts or exceptions. Hystrix ensures that when one dependency fails, the service as a whole does not: it prevents cascading failures and improves the resilience of the distributed system.

The "circuit breaker" itself is a kind of switching device. When a service unit fails, the circuit breaker's fault monitoring (similar to a blown fuse) returns an expected, handleable alternative response (fallback) to the caller, rather than making it wait for a long time or throwing an exception the caller cannot handle. This ensures that the caller's threads are not occupied unnecessarily for long periods, which prevents faults from spreading through the distributed system and turning into an avalanche.

7.1.3 what it can do

- service degradation

- Service fuse

- Near real-time monitoring

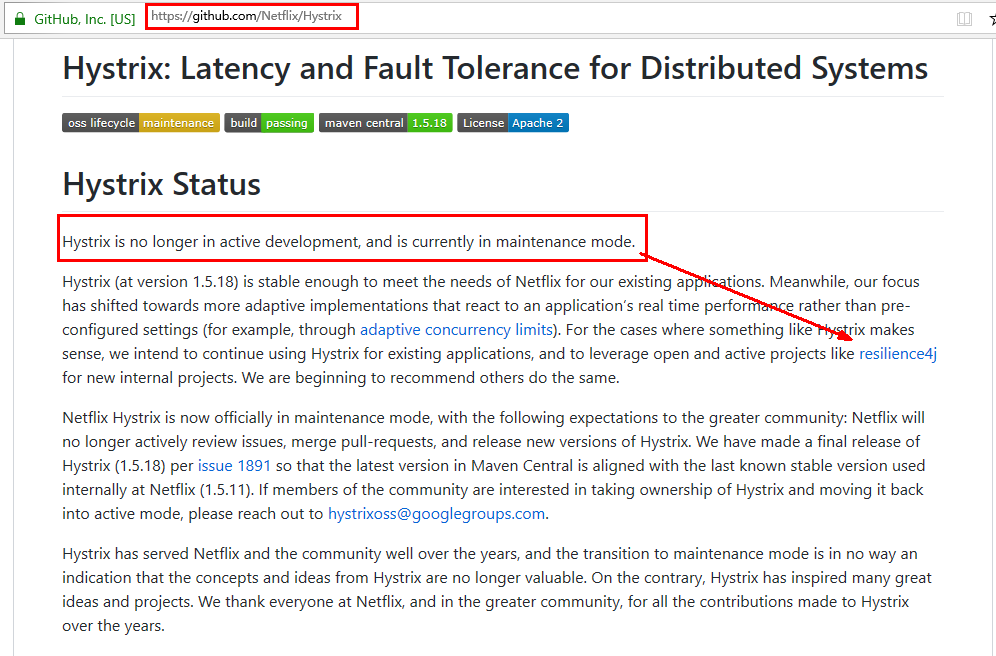

7.1.4 official website information

How to use: https://github.com/Netflix/Hystrix/wiki/How-To-Use

Official announcement: Hystrix is in maintenance mode and is no longer actively developed: https://github.com/Netflix/Hystrix

- Bugs are fixed only passively

- No more merge requests accepted

- No new versions released

7.2 key concepts of hystrix

7.2.1 service degradation

Service degradation means not making the client wait: when the server is busy, immediately return a friendly message (the fallback) such as "The server is busy, please try again later".

Conditions that trigger degradation:

- The program throws an exception

- Timeout

- A tripped circuit breaker triggers service degradation

- A full thread pool or exhausted semaphore also triggers service degradation
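The first two conditions can be illustrated without Hystrix. The following is a minimal plain-JDK sketch, not how Hystrix is implemented: the class `FallbackSketch` and the method `callWithFallback` are hypothetical names chosen for this example. It runs a task on a worker thread and returns a fallback value if the task throws or exceeds its time budget.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

public class FallbackSketch {

    // Runs the task on a worker thread and returns the fallback value if the
    // task throws or does not finish within timeoutMillis.
    static String callWithFallback(Callable<String> task, long timeoutMillis, Supplier<String> fallback) {
        ExecutorService worker = Executors.newSingleThreadExecutor();
        Future<String> future = worker.submit(task);
        try {
            return future.get(timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (Exception e) {
            // ExecutionException (the task threw), TimeoutException, or InterruptedException
            if (e instanceof InterruptedException) {
                Thread.currentThread().interrupt();
            }
            future.cancel(true); // interrupt the task if it is still running
            return fallback.get();
        } finally {
            worker.shutdownNow();
        }
    }

    public static void main(String[] args) {
        Supplier<String> fallback = () -> "The server is busy, please try again later";
        // Normal call: the task's own result is returned
        System.out.println(callWithFallback(() -> "OK", 1000, fallback));
        // Program exception: the fallback answers
        System.out.println(callWithFallback(() -> { throw new IllegalStateException("boom"); }, 1000, fallback));
        // Timeout: the task needs 2 s but only 100 ms are allowed, so the fallback answers
        System.out.println(callWithFallback(() -> { Thread.sleep(2000); return "too late"; }, 100, fallback));
    }
}
```

Creating an executor per call keeps the sketch short; a real service would reuse a bounded pool.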

7.2.2 service fusing

Like an electrical fuse: once access to the service reaches its maximum, further access is denied directly (the power is cut), and the service degradation method is called to return a friendly message.

This is the fuse in action: service degradation -> circuit break -> call link restored

7.2.3 service current limit

Used for flash sales and other high-concurrency operations: requests may not all rush in at once. Everyone queues, and N requests are processed per second in an orderly manner.
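As a rough illustration of "N per second", here is a minimal fixed-window limiter in plain Java. It is a sketch under assumptions: the class name `FixedWindowRateLimiter` and its injectable clock are invented for this example, and it rejects excess requests instead of queueing them. It is not taken from Hystrix or Sentinel.

```java
import java.util.function.LongSupplier;

public class FixedWindowRateLimiter {
    private final int permitsPerSecond;
    private final LongSupplier clockMillis; // injectable so the limiter can be tested without sleeping
    private long windowStart;
    private int usedInWindow;

    FixedWindowRateLimiter(int permitsPerSecond, LongSupplier clockMillis) {
        this.permitsPerSecond = permitsPerSecond;
        this.clockMillis = clockMillis;
        this.windowStart = clockMillis.getAsLong();
    }

    // Admits at most permitsPerSecond calls per one-second window.
    synchronized boolean tryAcquire() {
        long now = clockMillis.getAsLong();
        if (now - windowStart >= 1000) {
            // A new one-second window begins: reset the counter
            windowStart = now;
            usedInWindow = 0;
        }
        if (usedInWindow < permitsPerSecond) {
            usedInWindow++;
            return true;
        }
        return false; // over the limit for this window
    }

    public static void main(String[] args) {
        FixedWindowRateLimiter limiter = new FixedWindowRateLimiter(3, System::currentTimeMillis);
        for (int i = 1; i <= 5; i++) {
            System.out.println("request " + i + (limiter.tryAcquire() ? " admitted" : " rejected"));
        }
    }
}
```

A fixed window allows short bursts at window boundaries; token-bucket or sliding-window limiters smooth that out at the cost of a little more state.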

7.3 hystrix case

7.3.1 build producer payment8001

- Build module cloud-provider-hystrix-payment8001

- POM

<dependencies>

<!--hystrix-->

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-starter-netflix-hystrix</artifactId>

</dependency>

<!--eureka client-->

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-starter-netflix-eureka-client</artifactId>

</dependency>

<!--web-->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-actuator</artifactId>

</dependency>

<dependency><!-- Introduce self defined api General package, you can use Payment payment Entity -->

<groupId>com.rg.springcloud</groupId>

<artifactId>cloud-api-commons</artifactId>

<version>${project.version}</version>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-devtools</artifactId>

<scope>runtime</scope>

<optional>true</optional>

</dependency>

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<optional>true</optional>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

</dependency>

</dependencies>

- YML

server:
  port: 8001

spring:
  application:
    name: cloud-provider-hystrix-payment

eureka:
  client:
    register-with-eureka: true
    fetch-registry: true
    service-url:
      #defaultZone: http://eureka7001.com:7001/eureka
      defaultZone: http://eureka7001.com:7001/eureka,http://eureka7002.com:7002/eureka,http://eureka7004.com:7004/eureka # cluster version

- Main start

@SpringBootApplication

@EnableEurekaClient //After the service is started, it will be automatically registered into eureka service

public class PaymentHystrixMain8001

{

public static void main(String[] args)

{

SpringApplication.run(PaymentHystrixMain8001.class,args);

}

}

- Business class

service

public interface PaymentService {

public String paymentInfo_OK(Integer id);

public String paymentInfo_TimeOut(Integer id);

}

@Service

public class PaymentServiceImpl implements PaymentService {

/**

* Normal access, return to OK

* @param id

* @return

*/

@Override

public String paymentInfo_OK(Integer id) {

return "Thread pool: "+Thread.currentThread().getName()+" paymentInfo_OK,id: "+id+"O(∩_∩)O Ha ha ha~~~";

}

/**

* Timeout access, demotion

* @param id

* @return

*/

public String paymentInfo_TimeOut(Integer id) {

int timeNumber = 3;

try {

TimeUnit.SECONDS.sleep(timeNumber);

}catch (InterruptedException e){

e.printStackTrace();

}

return "Thread pool: " + Thread.currentThread().getName() + " paymentInfo_TimeOUt,id: " + id + "O(∩_∩)O Ha ha ha~~~" + "time consuming(second):" + timeNumber;

}

}

controller

@RestController

@Slf4j

public class PaymentController {

@Resource

private PaymentService paymentService;

@Value("${server.port}")

private String port;

@GetMapping("/payment/hystrix/ok/{id}")

public String paymentInfo_OK(@PathVariable("id") Integer id){

String result = paymentService.paymentInfo_OK(id);

log.info("*****result:"+result);

return result;

}

@GetMapping("/payment/hystrix/timeout/{id}")

public String paymentInfo_TimeOut(@PathVariable("id") Integer id) {

String result = paymentService.paymentInfo_TimeOut(id);

log.info("*****result:"+result);

return result;

}

}

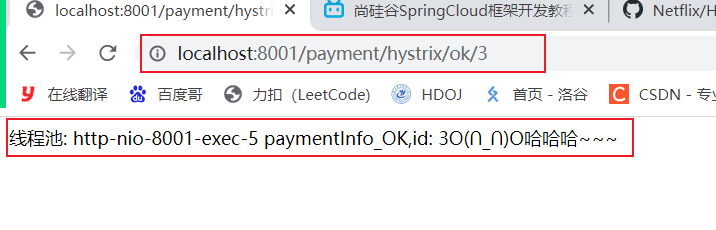

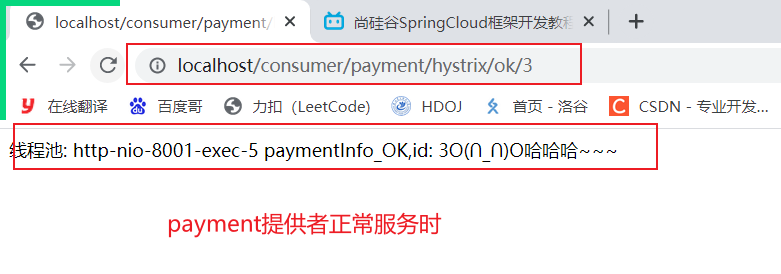

- Master test

1. Start Eureka 7001, 7002 and 7004

2. Start cloud-provider-hystrix-payment8001

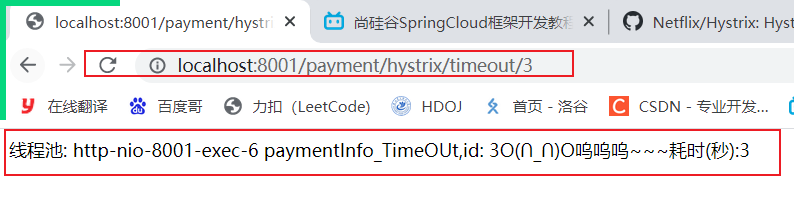

3. Access the normal method: http://localhost:8001/payment/hystrix/ok/31

4. Access the timeout method (each call takes 3 seconds): http://localhost:8001/payment/hystrix/timeout/31

5. Both endpoints above work

6. Starting from this baseline, the following sections go from correct -> error -> degradation and circuit breaking -> recovery

- High concurrency test

The above works when concurrency is low, but not under heavy load:

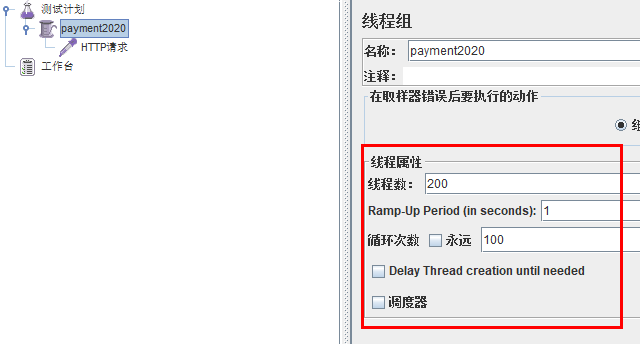

JMeter pressure test

1. Start JMeter with 20000 concurrent requests to overwhelm 8001, all 20000 of them hitting the paymentInfo_TimeOut service

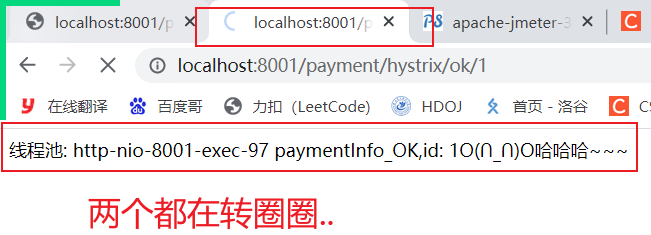

2. Then visit http://localhost:8001/payment/hystrix/ok/31 again

Both endpoints now spin (keep loading).

Reason: Tomcat's default pool of worker threads is fully occupied, so there are no spare threads left to absorb the load and process requests.

JMeter pressure test conclusion: so far only the service provider 8001 has been tested on its own. If the external consumer 80 also calls it at this point, the consumer can only wait, which ends with the consumer side (80) unhappy and the server side (8001) dragged down.
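The starvation described above can be reproduced in miniature with a plain JDK thread pool. This is only a sketch: the fixed pool stands in for Tomcat's worker threads, and the class `SharedPoolStarvation` and method `fastTaskIsStarved` are hypothetical names for this example. Slow tasks occupy every worker, so a fast task submitted afterwards cannot start.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

public class SharedPoolStarvation {

    // Returns true if a fast task is stuck waiting while slow tasks hold every worker thread.
    static boolean fastTaskIsStarved(int poolSize) {
        ExecutorService pool = Executors.newFixedThreadPool(poolSize); // stand-in for the shared worker pool
        CountDownLatch release = new CountDownLatch(1);
        try {
            // Slow "timeout" requests occupy every worker thread
            for (int i = 0; i < poolSize; i++) {
                pool.submit(() -> { release.await(); return "slow done"; });
            }
            // A fast "ok" request arrives afterwards and has to wait in the queue
            Future<String> fast = pool.submit(() -> "ok");
            try {
                fast.get(200, TimeUnit.MILLISECONDS);
                return false; // the fast task got a thread in time
            } catch (TimeoutException e) {
                return true;  // no free thread: the fast task is still waiting
            } catch (InterruptedException | ExecutionException e) {
                throw new IllegalStateException(e);
            }
        } finally {
            release.countDown(); // let the slow tasks finish
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println("fast task starved: " + fastTaskIsStarved(2));
    }
}
```

Giving slow dependencies their own bounded pool, as Hystrix does with thread isolation, keeps them from starving unrelated endpoints.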

7.3.2 building consumer order80

- Build module cloud-consumer-feign-hystrix-order80

- POM

<dependencies>

<!--openfeign-->

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-starter-openfeign</artifactId>

</dependency>

<!--hystrix-->

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-starter-netflix-hystrix</artifactId>

</dependency>

<!--eureka client-->

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-starter-netflix-eureka-client</artifactId>

</dependency>

<!-- Introduce self defined api General package, you can use Payment payment Entity -->

<dependency>

<groupId>com.atguigu.springcloud</groupId>

<artifactId>cloud-api-commons</artifactId>

<version>${project.version}</version>

</dependency>

<!--web-->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-actuator</artifactId>

</dependency>

<!--General foundation general configuration-->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-devtools</artifactId>

<scope>runtime</scope>

<optional>true</optional>

</dependency>

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<optional>true</optional>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

</dependency>

</dependencies>

- YML

server:
  port: 80

spring:
  application:
    name: cloud-feign-hystrix-order-service

eureka:
  client:
    register-with-eureka: true
    service-url:
      defaultZone: http://eureka7001.com:7001/eureka,http://eureka7002.com:7002/eureka,http://eureka7004.com:7004/eureka # cluster version

- Main start

@SpringBootApplication

@EnableFeignClients

public class OrderHystrixMain80

{

public static void main(String[] args)

{

SpringApplication.run(OrderHystrixMain80.class,args);

}

}

- Business class

PaymentHystrixService

@Component

@FeignClient(value = "CLOUD-PROVIDER-HYSTRIX-PAYMENT")

public interface PaymentHystrixService

{

@GetMapping("/payment/hystrix/ok/{id}")

String paymentInfo_OK(@PathVariable("id") Integer id);

@GetMapping("/payment/hystrix/timeout/{id}")

String paymentInfo_TimeOut(@PathVariable("id") Integer id);

}

OrderHystrixController

@RestController

@Slf4j

public class OrderHystrixController

{

@Resource

private PaymentHystrixService paymentHystrixService;

@GetMapping("/consumer/payment/hystrix/ok/{id}")

public String paymentInfo_OK(@PathVariable("id") Integer id)

{

String result = paymentHystrixService.paymentInfo_OK(id);

return result;

}

@GetMapping("/consumer/payment/hystrix/timeout/{id}")

public String paymentInfo_TimeOut(@PathVariable("id") Integer id)

{

String result = paymentHystrixService.paymentInfo_TimeOut(id);

return result;

}

}

- test

Normal test: http://localhost/consumer/payment/hystrix/ok/31

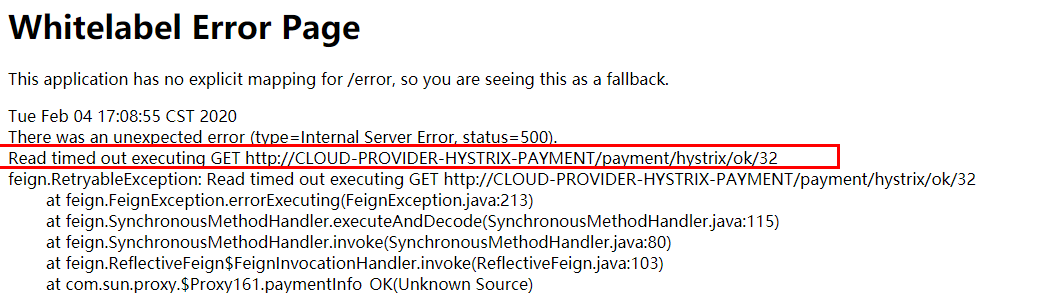

High concurrency test:

1. 20000 threads put load on 8001

2. The consumer microservice 80 then calls the normal OK endpoint on 8001: http://localhost/consumer/payment/hystrix/ok/32

The consumer 80, o(╥﹏╥)o, either spins while waiting or reports a timeout error

7.3.3 fault phenomena and Solutions

On 8001, other endpoints at the same level are dragged down because the worker threads in the Tomcat thread pool are all occupied. When 80 calls 8001 at that moment, the client response is slow and spins.

Conclusion: it is precisely because of such failures and poor performance that degradation, fault tolerance, and rate limiting techniques came about.

How to solve it? Requirements:

- A timeout makes the server slow (spinning) -> do not keep waiting after a timeout

- An error (downtime or a runtime error) -> the error must be answered with a sensible response

Solution:

- The provider (8001) times out: the caller (80) cannot wait forever, so there must be service degradation

- The provider (8001) is down: the caller (80) cannot wait forever, so there must be service degradation

- The provider (8001) is fine, but the caller (80) fails itself or has stricter requirements (it is willing to wait less time than the provider takes): the caller handles the degradation itself

7.4 service degradation

Start with 8001 and look for the problem on its own side:

Set an upper limit on how long its own call may take. Within that limit the call runs normally; beyond it, a fallback method is needed to handle the request as a service degradation.

7.4.1 8001fallback processing

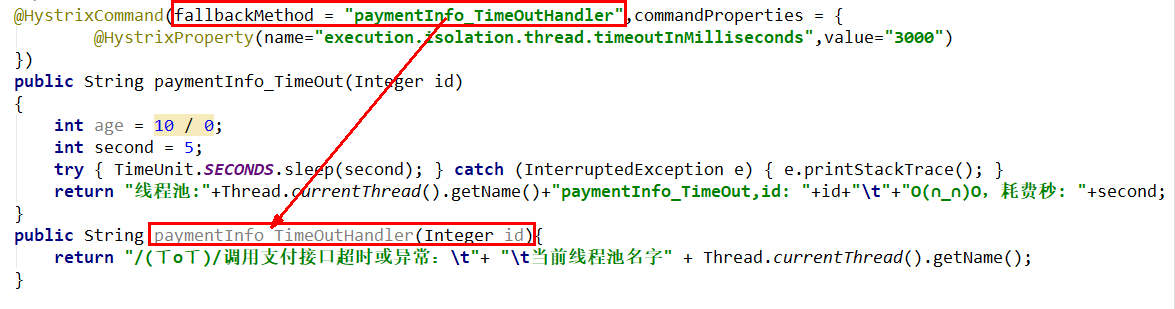

- Enable @HystrixCommand on the business class

@Service

public class PaymentServiceImpl implements PaymentService {

/**

* Normal access, return to OK

* @param id

* @return

*/

@Override

public String paymentInfo_OK(Integer id) {

return "Thread pool: "+Thread.currentThread().getName()+" paymentInfo_OK,id: "+id+"O(∩_∩)O Ha ha ha~~~";

}

/**

* Timeout access

* @param id

* @return

*/

@HystrixCommand(fallbackMethod = "paymentInfo_TimeOutHandler",commandProperties = {

@HystrixProperty(name = "execution.isolation.thread.timeoutInMilliseconds",value = "3000")

})

public String paymentInfo_TimeOut(Integer id) {

int timeNumber = 5;

// int a = 10 / 0;

try {

TimeUnit.SECONDS.sleep(timeNumber);

}catch (InterruptedException e){

e.printStackTrace();

}

return "Thread pool: " + Thread.currentThread().getName() + " paymentInfo_TimeOUt,id: " + id + "O(∩_∩)O Ha ha ha~~~" + "time consuming(second):" + timeNumber;

}

public String paymentInfo_TimeOutHandler(Integer id){

return "Thread pool: " + Thread.currentThread().getName() + " 8001 System busy or running error,Please try again later,id: " + id + "\t"+"o(╥﹏╥)o Woo woo" ;

}

}

How does @HystrixCommand handle a failing call?

Once the service method fails, whether by throwing an exception or by timing out, Hystrix automatically calls the method named in the fallbackMethod attribute of @HystrixCommand, which must be defined in the same class.

The example deliberately creates two kinds of failure:

1. int a = 10 / 0; an arithmetic exception (commented out in the listing)

2. The method is allowed 3 seconds but runs for 5 seconds, which causes a timeout.

In both cases the current service is unavailable, and the degradation handler paymentInfo_TimeOutHandler answers instead.

- Activate on the main startup class: add the new annotation **@EnableCircuitBreaker**

7.4.2 80fallback

The order microservice 80 can protect itself in the same way by following the same pattern for client-side degradation.

- YML

feign:
  hystrix:
    enabled: true

- Add @EnableHystrix to the main startup class

@SpringBootApplication

@EnableFeignClients

@EnableHystrix

public class OrderHystrixMain80

{

public static void main(String[] args)

{

SpringApplication.run(OrderHystrixMain80.class,args);

}

}

- Business class

@RestController

@Slf4j

public class OrderHystrixController {

@Resource

private PaymentHystrixService paymentHystrixService;

@GetMapping("/consumer/payment/hystrix/ok/{id}")

public String paymentInfo_OK(@PathVariable("id") Integer id){

return paymentHystrixService.paymentInfo_OK(id);

}

@GetMapping("/consumer/payment/hystrix/timeout/{id}")

@HystrixCommand(fallbackMethod = "paymentTimeOutFallbackMethod",commandProperties = {

@HystrixProperty(name = "execution.isolation.thread.timeoutInMilliseconds",value = "1500")

})

public String paymentInfo_TimeOut(@PathVariable("id") Integer id) {

// int age = 10 / 0;

return paymentHystrixService.paymentInfo_TimeOut(id);

}

public String paymentTimeOutFallbackMethod(@PathVariable("id") Integer id){

return "I am a consumer 80,The opposite payment system is busy, please 10 s Try again or run your own error. Please check yourself,o(╥﹏╥)o";

}

}

Note: the hot deployment we configured picks up most changes to Java code, but after modifying properties inside @HystrixCommand it is recommended to restart the microservice.

7.4.3 existing problems and Solutions

Existing problems:

- Every business method needs its own fallback method, so the code bloats

- Common fallbacks and method-specific fallbacks are not separated

Solution:

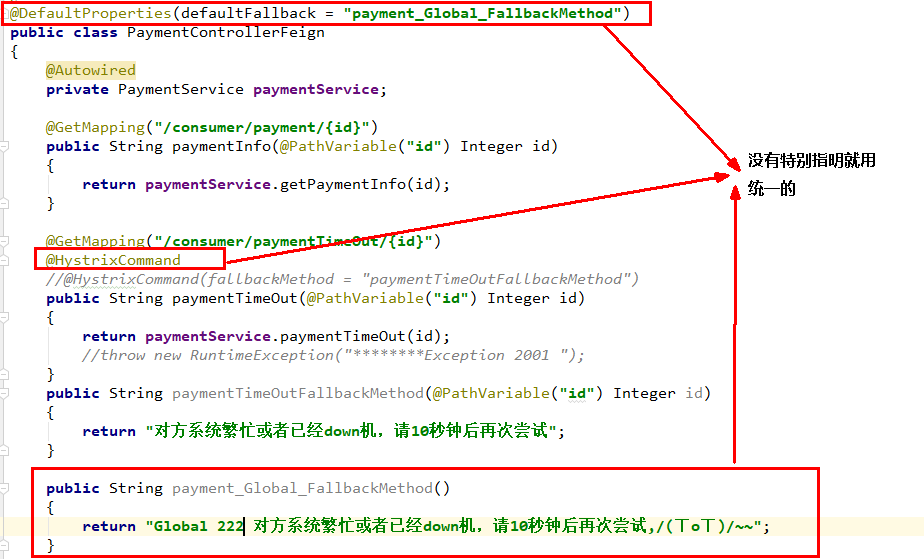

1. Every method is configured with its own fallback, so the code bloats ==> extract the common handling with **@DefaultProperties(defaultFallback = "")**

Explanation:

1:1. Every method has its own service degradation method. Technically this works, but in practice it does not scale.

1:N. Apart from a few important core businesses that keep a dedicated fallback, ordinary businesses can all be routed to one unified handler through @DefaultProperties(defaultFallback = "").

Separating the general fallback from the dedicated ones avoids code bloat and keeps the amount of code reasonable. O(∩_∩)O

controller configuration

@RestController

@Slf4j

@DefaultProperties(defaultFallback = "payment_Global_FallbackMethod")

public class OrderHystrixController {

@Resource

private PaymentHystrixService paymentHystrixService;

@GetMapping("/consumer/payment/hystrix/ok/{id}")

public String paymentInfo_OK(@PathVariable("id") Integer id){

return paymentHystrixService.paymentInfo_OK(id);

}

@GetMapping("/consumer/payment/hystrix/timeout/{id}")

// @HystrixCommand(fallbackMethod = "paymentTimeOutFallbackMethod",commandProperties = {

// @HystrixProperty(name = "execution.isolation.thread.timeoutInMilliseconds",value = "1500")

// })

@HystrixCommand //If the DefaultProperties annotation is added and the specific method name is not written, the unified global fallback is used

public String paymentInfo_TimeOut(@PathVariable("id") Integer id) {

// int age = 10 / 0;

return paymentHystrixService.paymentInfo_TimeOut(id);

}

public String paymentTimeOutFallbackMethod(@PathVariable("id") Integer id){

return "I am a consumer 80,The opposite payment system is busy, please 10 s Try again or run your own error. Please check yourself,o(╥﹏╥)o";

}

public String payment_Global_FallbackMethod(){

return "Global Exception handling information,Please wait for more information,o(╥﹏╥)o";

}

}

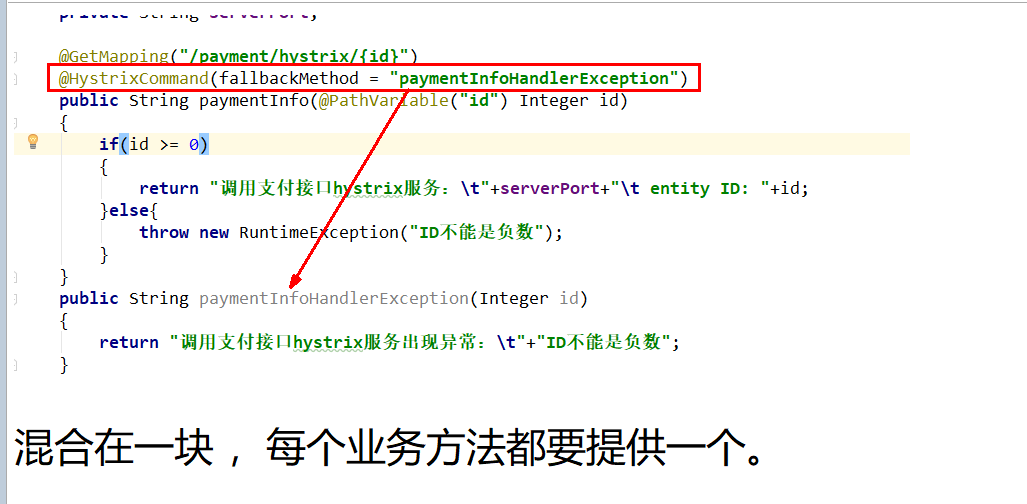

2. Fallback code is mixed with business logic ==> decouple them by separating business logic from service degradation code

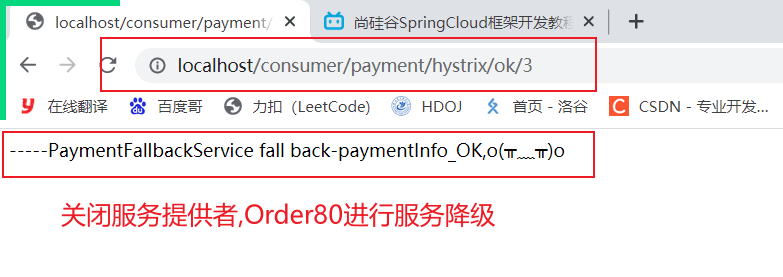

- Demo: service degradation when the client calls the server and the server is down or has been shut down

In this case the degradation is handled entirely on the client (80) and has nothing to do with the server (8001). Decoupling only requires adding a fallback implementation class for the interface defined by the Feign client.

The failures we will face: runtime exceptions, timeouts, and downtime.

- Look again at the business class PaymentController

- Modify cloud-consumer-feign-hystrix-order80

- Based on the existing PaymentHystrixService interface in cloud-consumer-feign-hystrix-order80, create a new class (PaymentFallbackService) that implements the interface and provides fallback handling for each of its methods

@Component

public class PaymentFallbackService implements PaymentHystrixService{

@Override

public String paymentInfo_OK(Integer id) {

return "-----PaymentFallbackService fall back-paymentInfo_OK,o(╥﹏╥)o";

}

@Override

public String paymentInfo_TimeOut(Integer id) {

return "-----PaymentFallbackService fall back-paymentInfo_Timeout,o(╥﹏╥)o";

}

}

- Add the fallback attribute to @FeignClient on PaymentHystrixService

@Component

@FeignClient(value = "CLOUD-PROVIDER-HYSTRIX-PAYMENT",fallback = PaymentFallbackService.class)

public interface PaymentHystrixService {

@GetMapping("/payment/hystrix/ok/{id}")

public String paymentInfo_OK(@PathVariable("id") Integer id);

@GetMapping("/payment/hystrix/timeout/{id}")

public String paymentInfo_TimeOut(@PathVariable("id") Integer id) ;

}
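The idea behind an interface-level fallback class can be sketched in plain Java with a dynamic proxy. This is not how Feign wires its fallback internally; it is a hedged illustration in which `PaymentClient`, `withFallback`, and `callWhenProviderIsDown` are hypothetical names. Any exception thrown by the primary implementation is answered by the same method on the fallback implementation.

```java
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Proxy;

public class InterfaceFallbackSketch {

    // Hypothetical stand-in for the Feign client interface
    interface PaymentClient {
        String paymentInfoOk(int id);
    }

    // Wraps `primary` so that any exception it throws is answered by the same method on `fallback`.
    @SuppressWarnings("unchecked")
    static <T> T withFallback(Class<T> type, T primary, T fallback) {
        return (T) Proxy.newProxyInstance(
                type.getClassLoader(),
                new Class<?>[] {type},
                (proxy, method, args) -> {
                    try {
                        return method.invoke(primary, args);
                    } catch (InvocationTargetException e) {
                        // The primary implementation threw: answer from the fallback instead
                        return method.invoke(fallback, args);
                    }
                });
    }

    // Simulates a call while the provider is down and returns what the caller sees.
    static String callWhenProviderIsDown(int id) {
        PaymentClient provider = i -> { throw new IllegalStateException("provider is down"); };
        PaymentClient fallback = i -> "fallback for paymentInfoOk, id: " + i;
        PaymentClient client = withFallback(PaymentClient.class, provider, fallback);
        return client.paymentInfoOk(id);
    }

    public static void main(String[] args) {
        System.out.println(callWhenProviderIsDown(31));
    }
}
```

Because the fallback implements the same interface, business code keeps calling one type and contains no degradation logic of its own.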

- Test

- Start Eureka 7001, 7002 and 7003 first

- Start PaymentHystrixMain8001

- Normal access test: http://localhost/consumer/payment/hystrix/ok/31

- Deliberately shut down microservice 8001

- Client-side result: the service provider is down, but because service degradation is in place, the client receives a prompt message while the server is unavailable, and the caller is not left hanging

7.5 service fuse

Circuit breaker: in a word, it is like the fuse in your home

7.5.1 what is fusing

Overview of the circuit breaker mechanism

The circuit breaker mechanism is a microservice link protection mechanism against the avalanche effect. When a microservice on the fan-out link becomes unavailable or responds too slowly, the service is degraded: calls to that node are cut off and an error response is returned quickly.

When the node's responses are detected to be normal again, the call link is restored.

In the Spring Cloud framework, the circuit breaker mechanism is implemented through Hystrix. Hystrix monitors the status of calls between microservices. When failed calls reach a certain threshold (by default, at least 20 requests within a 10-second window with 50% or more of them failing), the circuit breaker trips. The annotation for the circuit breaker mechanism is **@HystrixCommand**.

Expert's article (Martin Fowler): https://martinfowler.com/bliki/CircuitBreaker.html

7.5.2 practical operation

- Modify cloud-provider-hystrix-payment8001

- PaymentService

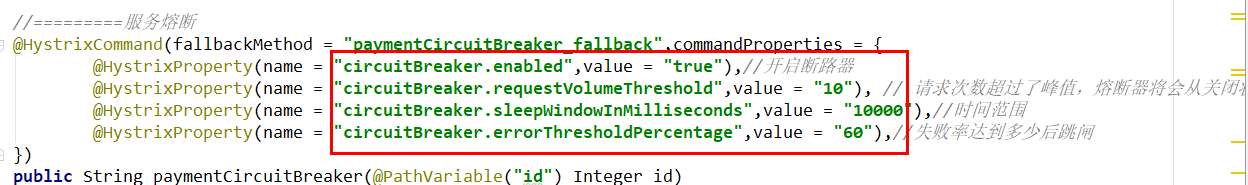

//===Service fuse

@HystrixCommand(fallbackMethod = "paymentCircuitBreaker_fallback",commandProperties = {

@HystrixProperty(name = "circuitBreaker.enabled",value = "true"),// whether the circuit breaker is enabled

@HystrixProperty(name = "circuitBreaker.requestVolumeThreshold",value = "10"),// minimum number of requests in the rolling window before the breaker can trip (default 20)

@HystrixProperty(name = "circuitBreaker.sleepWindowInMilliseconds",value = "10000"),// how long the breaker stays open before a trial request is allowed (default 5000 ms)

@HystrixProperty(name = "circuitBreaker.errorThresholdPercentage",value = "60"),// error percentage at or above which the breaker trips (default 50)

})

public String paymentCircuitBreaker(@PathVariable("id") Integer id){

if(id<0){

throw new RuntimeException("*******id Cannot be negative");

}

String serialNumber = IdUtil.simpleUUID();

return Thread.currentThread().getName()+"\t"+"Call succeeded,Serial number:"+serialNumber;

}

public String paymentCircuitBreaker_fallback(@PathVariable("id") Integer id){

return "id Cannot be negative,Please try again later,o(╥﹏╥)o id:"+id;

}

why configure these parameters

- PaymentController

@GetMapping("/payment/circuit/{id}")

public String paymentCircuitBreaker(@PathVariable("id") Integer id)

{

String result = paymentService.paymentCircuitBreaker(id);

log.info("****result: "+result);

return result;

}

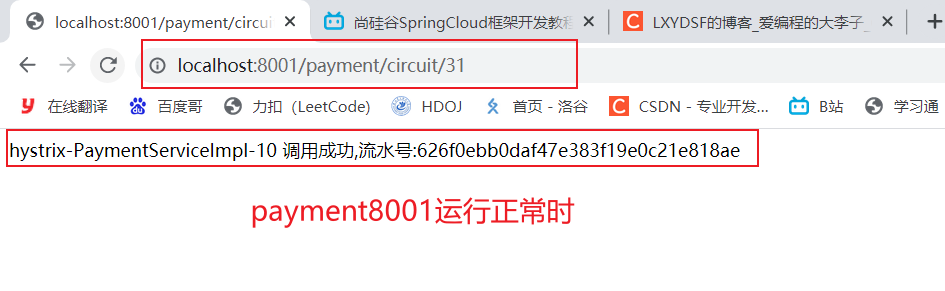

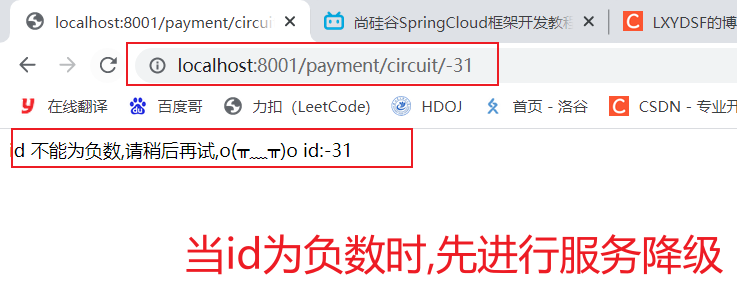

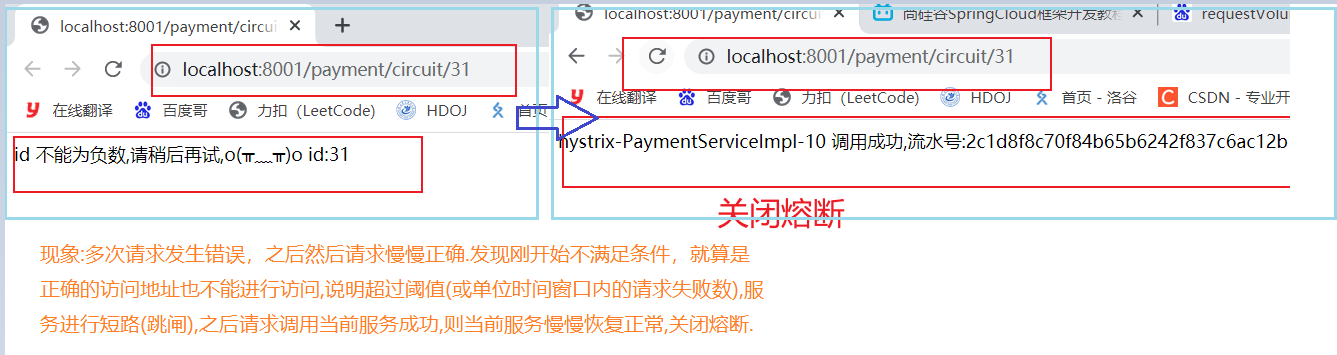

- test

Correct: http://localhost:8001/payment/circuit/31

Error: http://localhost:8001/payment/circuit/-31

Try a mix of correct and incorrect requests.

After many consecutive errors the circuit breaker opens. For a while afterwards even the correct address returns the fallback, because the conditions for closing the breaker are not yet met.

7.5.3 principle (summary)

Expert's conclusion:

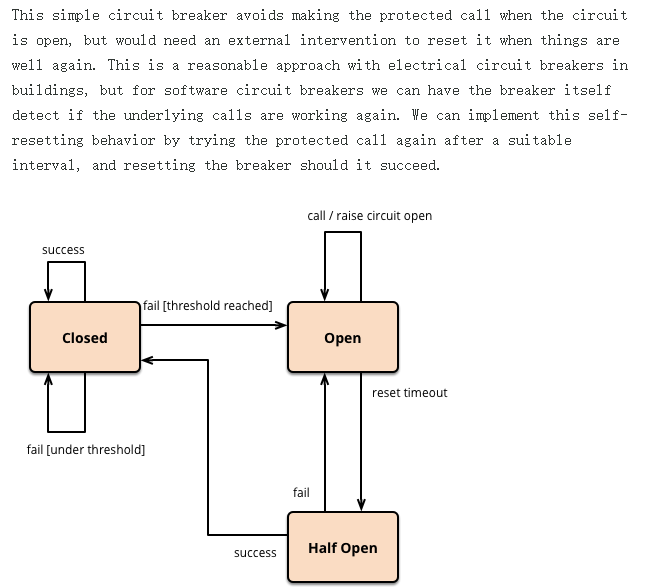

Circuit breaker states:

- Open: requests no longer call the current service. An internal timer, usually set to the MTTR (mean time to repair), starts; when it expires the breaker enters the half-open state

- Closed: the breaker does not interrupt calls to the service

- Half-open: some requests are allowed to call the current service according to the rules. If they succeed, the service is considered recovered and the breaker closes

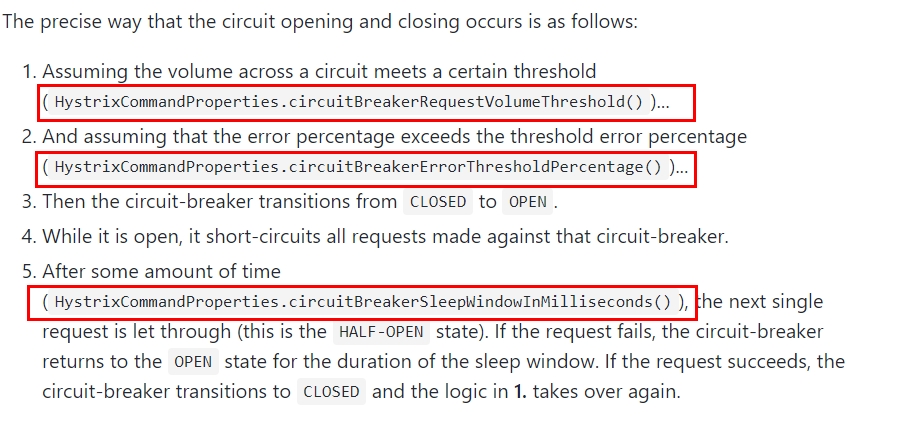

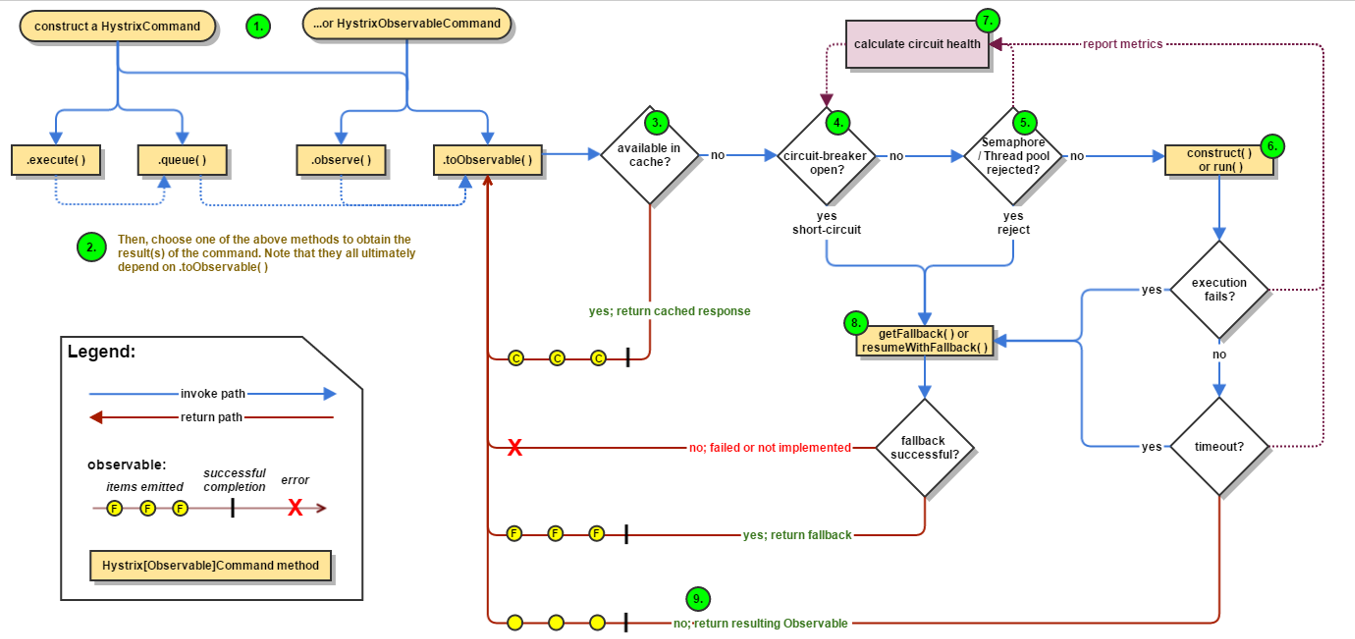

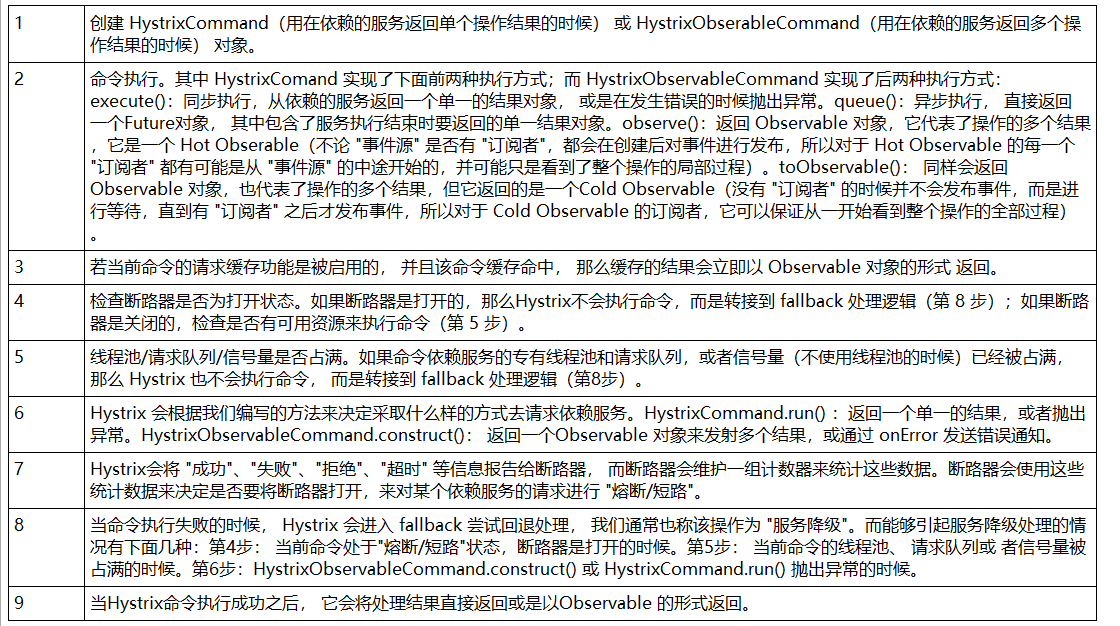

Flow chart of circuit breaker on official website

- Official website steps

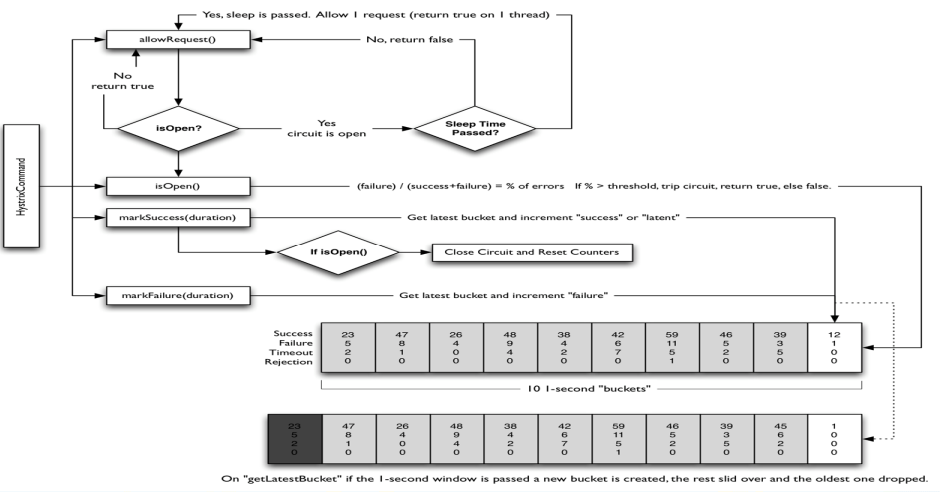

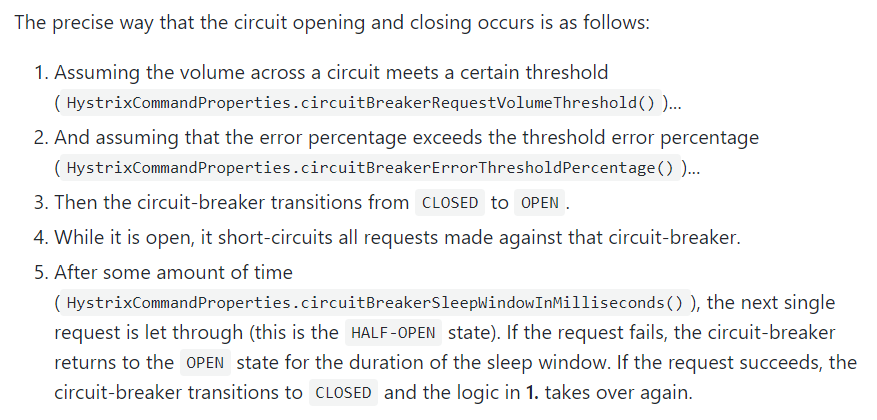

- When does the circuit breaker start to work

Three important parameters related to the circuit breaker: snapshot time window, total request threshold and error percentage threshold.

1: Snapshot time window: the circuit breaker needs to count some request and error data to determine whether to open, and the statistical time range is the snapshot time window, which defaults to the last 10 seconds.

2: Threshold of total requests: within the snapshot time window, the threshold of total requests must be met to be eligible for fusing. The default is 20, which means that if the number of calls of the hystrix command is less than 20 within 10 seconds, even if all requests timeout or fail for other reasons, the circuit breaker will not open.

3: Error percentage threshold: once the total number of requests in the snapshot time window exceeds the request threshold, for example 30 calls, and 15 of those 30 calls end in timeout exceptions, the error percentage reaches 50% and, with the default threshold of 50%, the circuit breaker opens.

- Conditions for opening or closing the circuit breaker

1. A request volume threshold is met (by default, at least 20 requests within 10 seconds)

2. The failure rate reaches a certain level (by default, 50% or more of the requests within 10 seconds fail)

3. When both thresholds are reached, the circuit breaker opens

4. While it is open, no requests are forwarded

5. After a period of time (5 seconds by default) the circuit breaker becomes half-open and lets one request through. If it succeeds, the circuit breaker closes; if it fails, the breaker stays open. Steps 4 and 5 repeat

- After the circuit breaker is opened

1: When another request arrives, the main logic is not called; the degradation fallback is called directly. In this way the circuit breaker detects errors automatically and switches from the main logic to the degraded logic, reducing response latency.

2: How is the original main logic restored?

Hystrix also implements automatic recovery for this.

When the circuit breaker opens and the main logic is cut off, Hystrix starts a sleep time window. Within this window the degraded logic temporarily acts as the main logic.

When the sleep time window expires, the circuit breaker enters the half-open state and lets one request through to the original main logic. If the request returns normally, the circuit breaker closes and the main logic is restored. If the request still fails, the circuit breaker stays open and the sleep time window restarts.
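The open, half-open, and closed transitions described above can be sketched as a small state machine. This is a simplified illustration, not the Hystrix implementation: `SimpleCircuitBreaker` is a hypothetical class, it counts calls since the last reset instead of using a rolling time window, and it takes an injectable clock so the sleep window can be simulated.

```java
import java.util.function.LongSupplier;
import java.util.function.Supplier;

public class SimpleCircuitBreaker {

    enum State { CLOSED, OPEN, HALF_OPEN }

    private final int requestVolumeThreshold;   // minimum calls before the error rate is evaluated
    private final int errorThresholdPercentage; // error rate (%) at which the breaker opens
    private final long sleepWindowMillis;       // time to stay open before a trial call
    private final LongSupplier clockMillis;     // injectable clock (assumption made for testability)

    private State state = State.CLOSED;
    private int total;
    private int failures;
    private long openedAt;

    SimpleCircuitBreaker(int requestVolumeThreshold, int errorThresholdPercentage,
                         long sleepWindowMillis, LongSupplier clockMillis) {
        this.requestVolumeThreshold = requestVolumeThreshold;
        this.errorThresholdPercentage = errorThresholdPercentage;
        this.sleepWindowMillis = sleepWindowMillis;
        this.clockMillis = clockMillis;
    }

    synchronized State state() {
        return state;
    }

    // Calls the main logic unless the breaker is open; failures are answered by the fallback.
    synchronized <T> T call(Supplier<T> mainLogic, Supplier<T> fallback) {
        if (state == State.OPEN) {
            if (clockMillis.getAsLong() - openedAt < sleepWindowMillis) {
                return fallback.get();   // short-circuit: the main logic is not called
            }
            state = State.HALF_OPEN;     // sleep window elapsed: allow one trial call
        }
        try {
            T result = mainLogic.get();
            if (state == State.HALF_OPEN) {
                close();                 // trial call succeeded: restore the main logic
            } else {
                total++;
            }
            return result;
        } catch (RuntimeException e) {
            if (state == State.HALF_OPEN) {
                open();                  // trial call failed: reopen and restart the sleep window
            } else {
                total++;
                failures++;
                if (total >= requestVolumeThreshold
                        && failures * 100 >= errorThresholdPercentage * total) {
                    open();
                }
            }
            return fallback.get();
        }
    }

    private void open() {
        state = State.OPEN;
        openedAt = clockMillis.getAsLong();
    }

    private void close() {
        state = State.CLOSED;
        total = 0;
        failures = 0;
    }

    public static void main(String[] args) {
        long[] now = {0}; // simulated time in milliseconds
        SimpleCircuitBreaker breaker = new SimpleCircuitBreaker(4, 50, 5000, () -> now[0]);
        Supplier<String> failing = () -> { throw new IllegalStateException("id cannot be negative"); };
        Supplier<String> healthy = () -> "call succeeded";
        Supplier<String> fallback = () -> "fallback";

        for (int i = 0; i < 4; i++) {
            breaker.call(failing, fallback);
        }
        System.out.println(breaker.state());                 // state after four failures
        System.out.println(breaker.call(healthy, fallback)); // still inside the sleep window
        now[0] += 5000;                                      // the sleep window passes
        System.out.println(breaker.call(healthy, fallback)); // trial call in half-open state
        System.out.println(breaker.state());
    }
}
```

Holding the lock while the main logic runs serializes calls; that keeps the sketch short but would not be acceptable in a production breaker.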

- All configuration

//========================All

@HystrixCommand(fallbackMethod = "str_fallbackMethod",

groupKey = "strGroupCommand",

commandKey = "strCommand",

threadPoolKey = "strThreadPool",

commandProperties = {

// Set the isolation strategy. THREAD: thread pool isolation. SEMAPHORE: semaphore isolation

@HystrixProperty(name = "execution.isolation.strategy", value = "THREAD"),

// When the isolation strategy is SEMAPHORE, this sets the semaphore size (maximum concurrent requests)

@HystrixProperty(name = "execution.isolation.semaphore.maxConcurrentRequests", value = "10"),

// Timeout for command execution, in milliseconds

@HystrixProperty(name = "execution.isolation.thread.timeoutInMilliseconds", value = "10"),

// Enable timeout

@HystrixProperty(name = "execution.timeout.enabled", value = "true"),

// Is the execution interrupted when it times out

@HystrixProperty(name = "execution.isolation.thread.interruptOnTimeout", value = "true"),

// Is the execution interrupted when it is cancelled

@HystrixProperty(name = "execution.isolation.thread.interruptOnCancel", value = "true"),

// Maximum concurrent number of callback method executions allowed

@HystrixProperty(name = "fallback.isolation.semaphore.maxConcurrentRequests", value = "10"),

// Whether the service degradation is enabled and whether the callback function is executed

@HystrixProperty(name = "fallback.enabled", value = "true"),

// Is the circuit breaker enabled

@HystrixProperty(name = "circuitBreaker.enabled", value = "true"),

// This attribute is used to set the minimum number of requests for circuit breaker fusing in the rolling time window. For example, when the default value is 20,

// If only 19 requests are received within the rolling time window (default 10 seconds), the circuit breaker will not open even if all 19 requests fail.

@HystrixProperty(name = "circuitBreaker.requestVolumeThreshold", value = "20"),

// Error percentage threshold within the rolling time window. When the number of requests exceeds

// circuitBreaker.requestVolumeThreshold and the percentage of failed requests reaches 50,

// the circuit breaker is set to the "open" state; otherwise it stays "closed".

@HystrixProperty(name = "circuitBreaker.errorThresholdPercentage", value = "50"),

// Sleep time window after the circuit breaker opens. When the sleep window ends,

// the circuit breaker is set to "half-open" and a trial request is let through. If it still fails, the breaker returns to "open";

// if it succeeds, the breaker is set to "closed".

@HystrixProperty(name = "circuitBreaker.sleepWindowInMilliseconds", value = "5000"),

// Forced opening of circuit breaker

@HystrixProperty(name = "circuitBreaker.forceOpen", value = "false"),

// Forced closing of circuit breaker

@HystrixProperty(name = "circuitBreaker.forceClosed", value = "false"),

// Rolling time window setting, which is used for the duration of information to be collected when judging the health of the circuit breaker

@HystrixProperty(name = "metrics.rollingStats.timeInMilliseconds", value = "10000"),

// Number of "buckets" the rolling time window is divided into when collecting metrics. The circuit breaker

// splits the window into multiple "buckets" and accumulates the measurements in each. Each "bucket" records the metrics for one slice of time.

// For example, 10 seconds can be divided into 10 "buckets", so timeInMilliseconds must be divisible by numBuckets. Otherwise, an exception will be thrown

@HystrixProperty(name = "metrics.rollingStats.numBuckets", value = "10"),

// This property is used to set whether the delay in command execution is tracked and calculated using percentiles. If set to false, all summary statistics will return - 1.

@HystrixProperty(name = "metrics.rollingPercentile.enabled", value = "false"),

// Duration of the rolling window used for percentile statistics, in milliseconds.

@HystrixProperty(name = "metrics.rollingPercentile.timeInMilliseconds", value = "60000"),

// Number of buckets used in the rolling window for percentile statistics (the window length must be divisible by it).

@HystrixProperty(name = "metrics.rollingPercentile.numBuckets", value = "6"),

// This attribute is used to set the maximum number of executions to keep in each bucket during execution. If the number of executions exceeding the set value occurs within the rolling time window,

// Start rewriting from the original position. For example, set the value to 100 and scroll the window for 10 seconds. If 500 executions occur in a "bucket" within 10 seconds,

// Then only the statistics of the last 100 executions are retained in the "bucket". In addition, increasing the size of this value will increase the consumption of memory and the calculation time required to sort percentiles.

@HystrixProperty(name = "metrics.rollingPercentile.bucketSize", value = "100"),

// Interval, in milliseconds, between health snapshots (success and error percentages) that affect the state of the circuit breaker.

@HystrixProperty(name = "metrics.healthSnapshot.intervalInMilliseconds", value = "500"),

// Enable request cache

@HystrixProperty(name = "requestCache.enabled", value = "true"),

// Whether HystrixCommand executions and events are logged to HystrixRequestLog

@HystrixProperty(name = "requestLog.enabled", value = "true"),

},

threadPoolProperties = {

// Number of core threads in the command execution thread pool, i.e. the maximum concurrency of command execution

@HystrixProperty(name = "coreSize", value = "10"),

// Maximum queue size of the thread pool. When set to -1, the thread pool uses a SynchronousQueue;

// otherwise it uses a LinkedBlockingQueue.

@HystrixProperty(name = "maxQueueSize", value = "-1"),

// Rejection threshold for the queue. With this parameter, requests can be rejected even if the queue has not reached its maximum size.

// It mainly complements LinkedBlockingQueue: the capacity of a LinkedBlockingQueue

// cannot be changed dynamically, but the queue size at which requests are rejected can be adjusted through this property. It does not apply when maxQueueSize is -1.

@HystrixProperty(name = "queueSizeRejectionThreshold", value = "5"),

}

)

public String strConsumer() {

return "hello 2020";

}

public String str_fallbackMethod()

{

return "*****fall back str_fallbackMethod";

}
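
The bucket comments on the metrics.rollingStats properties above can be made concrete with a small counter. The following is only a minimal sketch in plain Java, not Hystrix's own implementation: the class name RollingWindowSketch and its methods are hypothetical, and timestamps are passed in explicitly (as non-negative milliseconds) so the behaviour is easy to follow.

```java
import java.util.Arrays;

/** Illustrative rolling counter (hypothetical class, not part of Hystrix). */
class RollingWindowSketch {
    private final long bucketMillis;   // time slice covered by one bucket
    private final long[] bucketStart;  // start time of the slice each bucket currently holds
    private final int[] counts;        // events counted in each bucket

    RollingWindowSketch(long windowMillis, int numBuckets) {
        // Mirrors the rule in the comments: the window must divide evenly into buckets.
        if (numBuckets <= 0 || windowMillis % numBuckets != 0) {
            throw new IllegalArgumentException("window must be evenly divisible by numBuckets");
        }
        this.bucketMillis = windowMillis / numBuckets;
        this.bucketStart = new long[numBuckets];
        this.counts = new int[numBuckets];
        Arrays.fill(bucketStart, -1L); // -1 marks a bucket that was never used
    }

    /** Counts one event at the given time (non-negative milliseconds). */
    void record(long nowMillis) {
        long sliceStart = nowMillis - (nowMillis % bucketMillis);
        int idx = (int) ((nowMillis / bucketMillis) % counts.length);
        if (bucketStart[idx] != sliceStart) {
            // The bucket still holds an older slice: reuse it for the current one.
            bucketStart[idx] = sliceStart;
            counts[idx] = 0;
        }
        counts[idx]++;
    }

    /** Sums the events of all buckets that still fall inside the window. */
    int sum(long nowMillis) {
        long windowMillis = bucketMillis * counts.length;
        int total = 0;
        for (int i = 0; i < counts.length; i++) {
            if (bucketStart[i] >= 0 && nowMillis - bucketStart[i] < windowMillis) {
                total += counts[i];
            }
        }
        return total;
    }
}
```

With a 10000 ms window and 10 buckets, each bucket covers one second; an event recorded in the first second no longer counts once more than ten seconds have passed, and its bucket is reused for the new slice.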

7.6 service rate limiting

Rate limiting is covered in the later advanced section on Alibaba Sentinel.

7.7 hystrix workflow

https://github.com/Netflix/Hystrix/wiki/How-it-Works

Diagram from the official documentation

Tip: if we do not implement fallback (degradation) logic for a command, or if the fallback logic itself throws an exception, Hystrix still returns an Observable object, but it does not emit any result data. Instead, it terminates the request immediately and passes the exception that caused the command to fail to the caller through the onError() method.

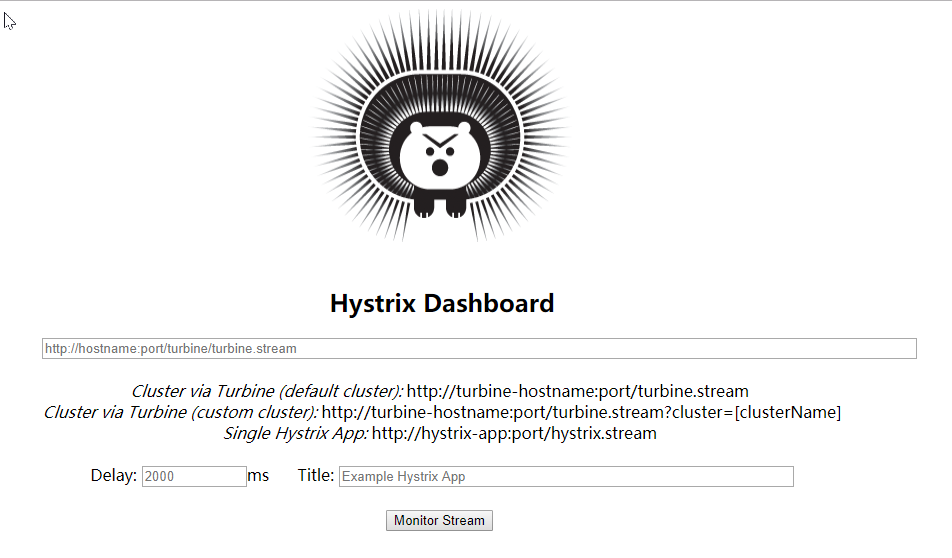
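
The tip above can be illustrated without Hystrix at all. The sketch below is a simplification in plain Java under stated assumptions: FallbackSketch and execute are hypothetical names, a synchronous exception stands in for the onError() notification, and a null fallback stands in for "no degradation logic implemented".

```java
import java.util.function.Supplier;

/** Illustrative fallback handling (hypothetical class, not the Hystrix API). */
class FallbackSketch {

    /**
     * Runs the primary call. On failure it returns the fallback result if a
     * fallback exists and succeeds; otherwise the original failure reaches the caller.
     */
    static <T> T execute(Supplier<T> primary, Supplier<T> fallback) {
        try {
            return primary.get();
        } catch (RuntimeException primaryFailure) {
            if (fallback == null) {
                // No degradation logic: the caller sees the failure of the command.
                throw primaryFailure;
            }
            try {
                return fallback.get();
            } catch (RuntimeException fallbackFailure) {
                // The fallback failed too: still report the original failure,
                // keeping the fallback error attached for diagnosis.
                if (fallbackFailure != primaryFailure) {
                    primaryFailure.addSuppressed(fallbackFailure);
                }
                throw primaryFailure;
            }
        }
    }
}
```

Calling execute with a failing primary and a working fallback returns the fallback value; with no fallback, or with a fallback that throws, the caller receives the exception that made the primary call fail.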

7.8 service monitoring hystrixDashboard

7.8.1 general

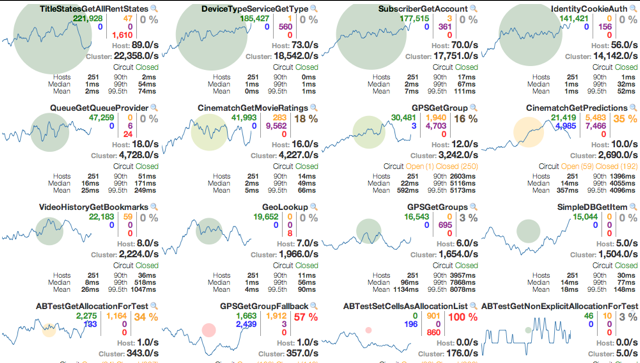

In addition to isolating calls to dependent services, Hystrix also provides near-real-time call monitoring (Hystrix Dashboard). Hystrix continuously records the execution information of all requests made through it and presents it to users as statistical reports and charts, including how many requests were executed, how many succeeded, how many failed, and so on. Netflix implements the monitoring of these metrics through the hystrix-metrics-event-stream project. Spring Cloud also integrates Hystrix Dashboard, turning the monitoring data into a visual interface.

7.8.2 dashboard 9001

- Create a new module cloud-consumer-hystrix-dashboard9001

- POM

<dependencies>

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-starter-netflix-hystrix-dashboard</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-actuator</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-devtools</artifactId>

<scope>runtime</scope>

<optional>true</optional>

</dependency>

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<optional>true</optional>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

</dependency>

</dependencies>

- YML

server:

port: 9001

spring:

application:

name: cloud-order-dashboard

- Main class HystrixDashboardMain9001, with the new annotation @EnableHystrixDashboard

@SpringBootApplication

@EnableHystrixDashboard

public class HystrixDashboardMain9001 {

public static void main(String[] args) {

SpringApplication.run(HystrixDashboardMain9001.class, args);

}

}

- All provider microservices (8001 / 8002 / 8003) need the monitoring dependency configured

<!-- actuator: exposes monitoring information -->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-actuator</artifactId>

</dependency>

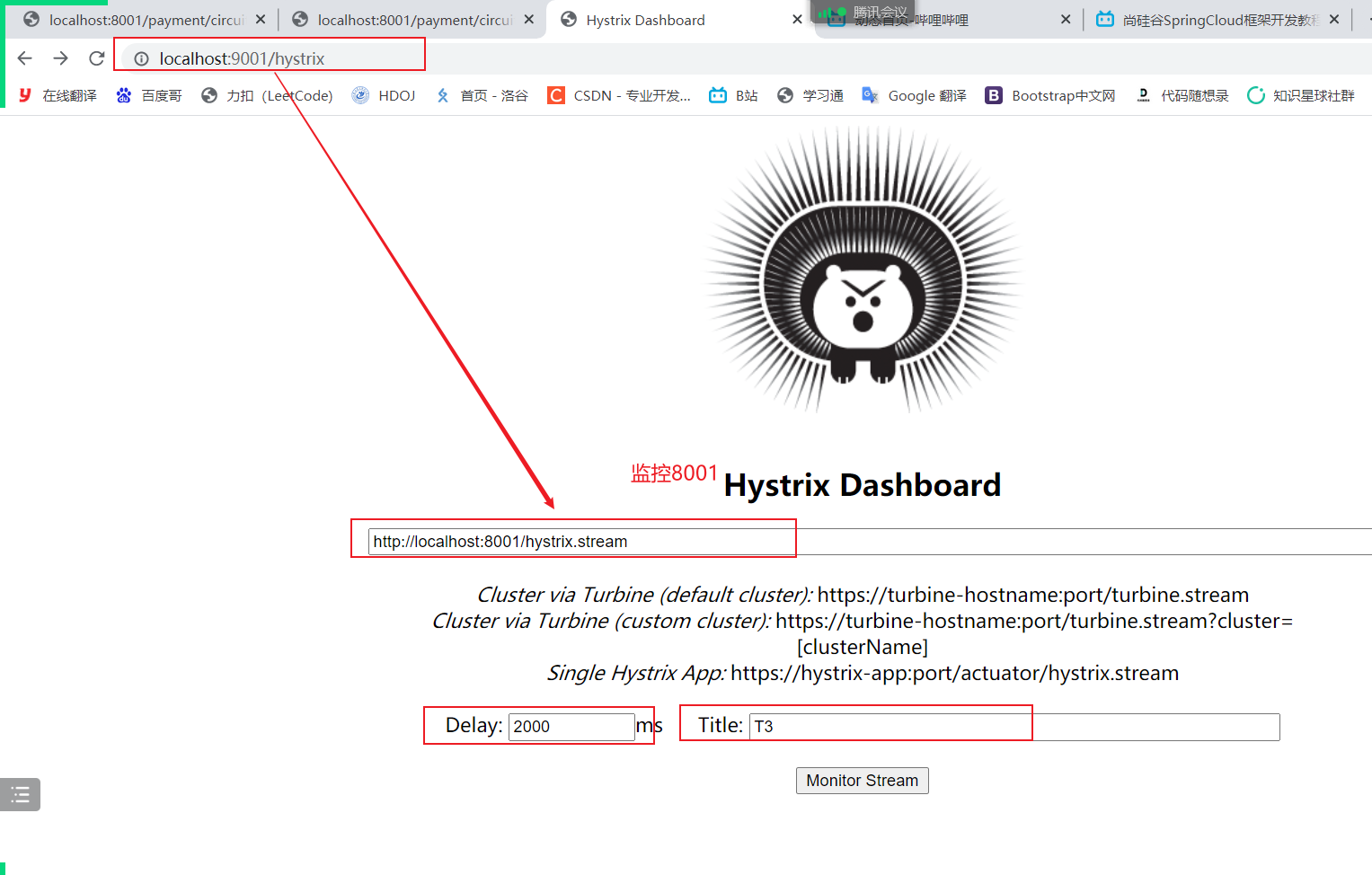

- Start cloud-consumer-hystrix-dashboard9001. This microservice will later monitor microservice 8001

Visit http://localhost:9001/hystrix

7.8.3 circuit breaker demonstration (service monitoring hystrixDashboard)

Modify cloud-provider-hystrix-payment8001. Newer versions of Hystrix require the monitoring path to be specified in the main startup class MainAppHystrix8001; otherwise the dashboard may show "Unable to connect to Command Metric Stream" or a 404 error.

@SpringBootApplication

@EnableEurekaClient // After the service starts, it is automatically registered with Eureka

@EnableCircuitBreaker // Enables the Hystrix circuit breaker mechanism

public class MainAppHystrix8001

{

public static void main(String[] args)

{

SpringApplication.run(MainAppHystrix8001.class,args);

}

/**

* This configuration exists only for service monitoring and has nothing to do with fault tolerance itself. It is needed after the Spring Cloud upgrade:

* Spring Boot no longer maps "/hystrix.stream" by default, so the servlet must be registered

* explicitly with a ServletRegistrationBean in your own project.

*/

@Bean

public ServletRegistrationBean getServlet() {

HystrixMetricsStreamServlet streamServlet = new HystrixMetricsStreamServlet();

ServletRegistrationBean registrationBean = new ServletRegistrationBean(streamServlet);

registrationBean.setLoadOnStartup(1);

registrationBean.addUrlMappings("/hystrix.stream");

registrationBean.setName("HystrixMetricsStreamServlet");

return registrationBean;

}

}

Monitoring test

After starting the three-node Eureka cluster, observe the monitoring window

- Use 9001 to monitor 8001: enter http://localhost:8001/hystrix.stream in the dashboard

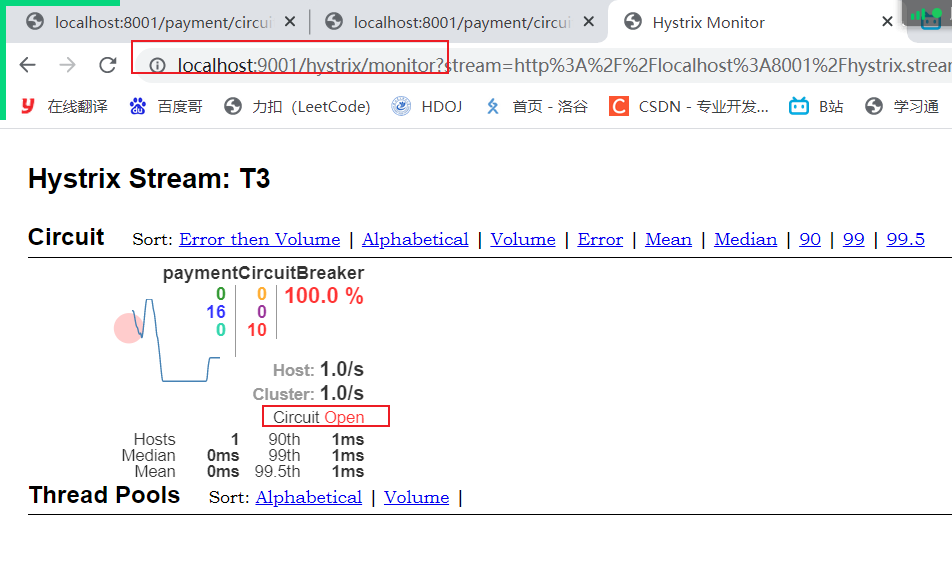

- Test addresses: http://localhost:8001/payment/circuit/31 and http://localhost:8001/payment/circuit/-31. Both tests pass.

- Access the correct address first, then the wrong address, then the correct address again. You will see that the circuit breaker shown in the chart opens and then gradually recovers.

Monitoring result when the correct address is accessed:

Monitoring result when the wrong address is accessed:

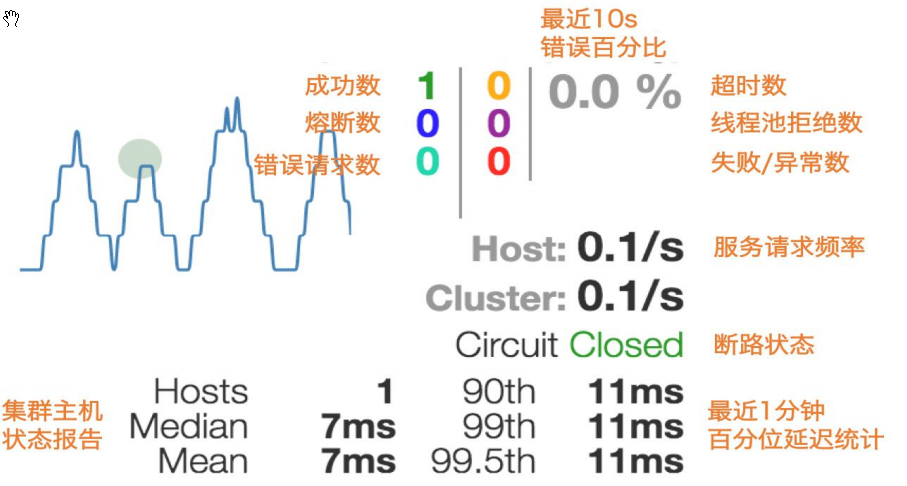

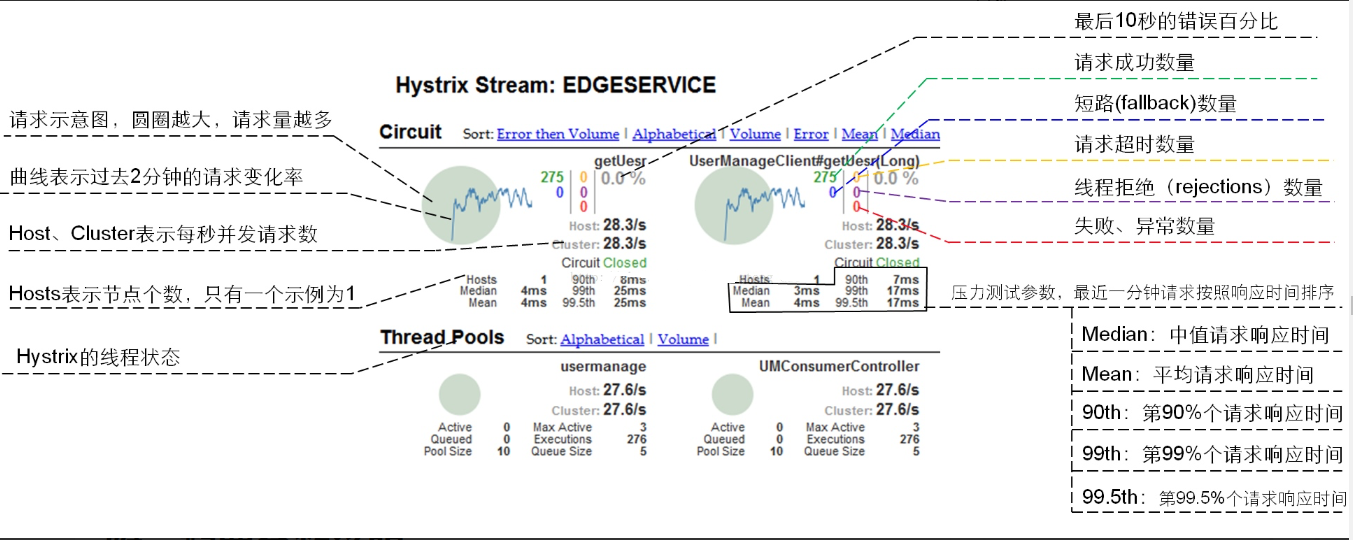
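
The open-then-recover behaviour seen in this test can be sketched as a small state machine. This is only a simplified, single-threaded illustration in plain Java, not Hystrix's implementation: BreakerSketch is a hypothetical class, its constructor parameters are modelled on the Hystrix properties circuitBreaker.requestVolumeThreshold, circuitBreaker.errorThresholdPercentage and circuitBreaker.sleepWindowInMilliseconds, and the counters here never roll off with time as real rolling-window metrics would.

```java
/** Illustrative circuit breaker state machine (hypothetical class, not Hystrix). */
class BreakerSketch {
    enum State { CLOSED, OPEN, HALF_OPEN }

    private final int requestVolumeThreshold;   // minimum calls before the breaker may trip
    private final int errorThresholdPercentage; // error percentage at which it trips
    private final long sleepWindowMillis;       // how long it stays open before a trial call

    private State state = State.CLOSED;
    private int total;
    private int failures;
    private long openedAt;

    BreakerSketch(int requestVolumeThreshold, int errorThresholdPercentage, long sleepWindowMillis) {
        this.requestVolumeThreshold = requestVolumeThreshold;
        this.errorThresholdPercentage = errorThresholdPercentage;
        this.sleepWindowMillis = sleepWindowMillis;
    }

    /** Returns true if a call may go through at the given time. */
    boolean allowRequest(long nowMillis) {
        if (state == State.OPEN && nowMillis - openedAt >= sleepWindowMillis) {
            state = State.HALF_OPEN; // sleep window elapsed: let a trial call through
            return true;
        }
        return state != State.OPEN;
    }

    /** Records the outcome of a call that was allowed through. */
    void record(boolean success, long nowMillis) {
        if (state == State.HALF_OPEN) {
            if (success) {
                close();          // trial call succeeded: recover
            } else {
                open(nowMillis);  // trial call failed: stay open for another sleep window
            }
            return;
        }
        if (state == State.OPEN) {
            return; // short-circuited calls do not change the statistics in this sketch
        }
        total++;
        if (!success) {
            failures++;
        }
        if (total >= requestVolumeThreshold
                && failures * 100 >= errorThresholdPercentage * total) {
            open(nowMillis);
        }
    }

    State state() {
        return state;
    }

    private void open(long nowMillis) {
        state = State.OPEN;
        openedAt = nowMillis;
    }

    private void close() {
        state = State.CLOSED;
        total = 0;
        failures = 0;
    }
}
```

In this sketch, enough failed calls open the breaker, calls are rejected during the sleep window, and one successful trial call afterwards closes it again, which corresponds to the recovery observed after switching back to the correct address.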

How to read the chart

- 7 colors

- 1 circle

Filled circle: it carries two meanings. Its color represents the health of the instance, with health decreasing in the order green, yellow, orange, red.

Besides the color, the size of the filled circle changes with the request traffic of the instance: the higher the traffic, the larger the circle. The filled circles therefore make it easy to spot faulty instances and heavily loaded instances among a large number of instances.

- 1 line

Curve: it records the relative change in traffic over the last 2 minutes and can be used to observe whether traffic is trending up or down.

- Description of the whole chart

- Description of the whole chart, part 2

- Once you can read a single panel, you can read the complex ones