SQOOP installation and use

SQOOP installation

1. Upload and unzip

tar -zxvf sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz -C /usr/local/soft/

2. Modify folder name

mv sqoop-1.4.7.bin__hadoop-2.6.0/ sqoop-1.4.7

3. Modify profile

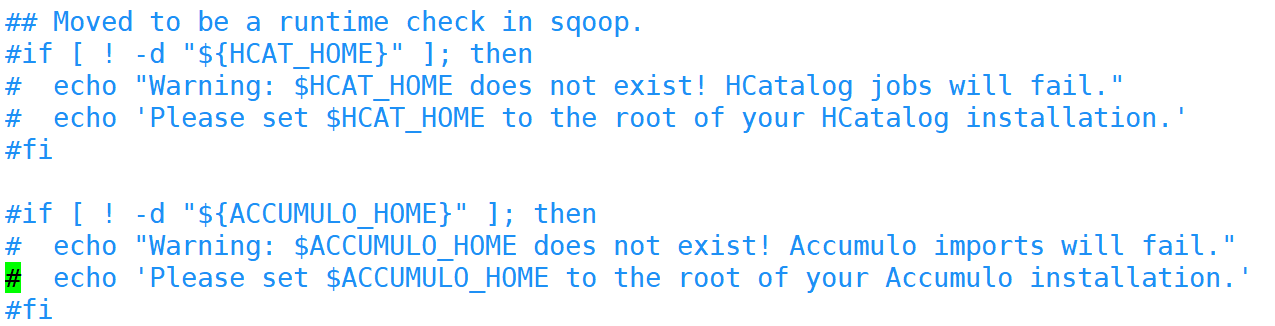

# Switch to the sqoop profile directory cd /usr/local/soft/sqoop-1.4.7/conf # Copy profile and rename cp sqoop-env-template.sh sqoop-env.sh # vim sqoop-env.sh edit the configuration file and add the following export HADOOP_COMMON_HOME=/usr/local/soft/hadoop-2.7.6 export HADOOP_MAPRED_HOME=/usr/local/soft/hadoop-2.7.6/share/hadoop/mapreduce export HBASE_HOME=/usr/local/soft/hbase-1.4.6 export HIVE_HOME=/usr/local/soft/hive-1.2.1 export ZOOCFGDIR=/usr/local/soft/zookeeper-3.4.6/conf export ZOOKEEPER_HOME=/usr/local/soft/zookeeper-3.4.6 # Switch to bin directory cd /usr/local/soft/sqoop-1.4.7/bin # VIM configure sqoop modifies the configuration file and comments out the useless content (just to remove the warning message)

4. Modify environment variables

vim /etc/profile # Add the directory of sqoop to the environment variable

5. Add MySQL connection driver

# Copy MySQL connection driver from HIVE to $SQOOP_HOME/lib cp /usr/local/soft/hive-1.2.1/lib/mysql-connector-java-5.1.49.jar /usr/local/soft/sqoop-1.4.7/lib/

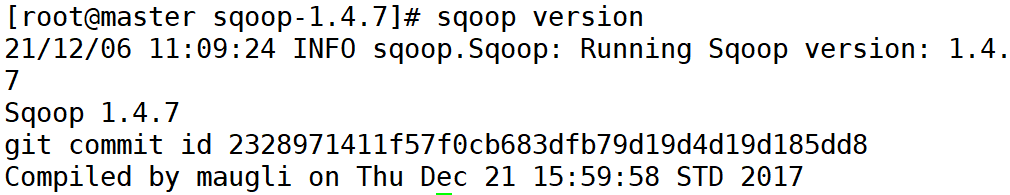

6. Testing

# Print sqoop version sqoop version

# Test MySQL connectivity sqoop list-databases -connect jdbc:mysql://master:3306?useSSL=false -username root -password 123456

Prepare MySQL data

Log in to MySQL database

mysql -u root -p123456;

Create student database

create database student;

Switch databases and import data

# Execute in mysql shell use student; source /root/student.sql; source /root/score.sql;

Another way to import data

# Execute in linux shell mysql -u root -p123456 student</root/student.sql mysql -u root -p123456 student</root/score.sql

Running SQL files using Navicat

You can also import via Navicat

Export MySQL database

mysqldump -u root -p123456 Database name>Any file name.sql

import

Import HDFS, HIVE, HBASE from traditional relational database

MySQLToHDFS

Write a script and save it as MySQLToHDFS.conf

import --connect jdbc:mysql://master:3306/student?useSSL=false --username root --password 123456 --table student --m 2 --split-by age --target-dir /sqoop/data/student1 --fields-terminated-by ','

Execute script

sqoop --options-file MySQLToHDFS.conf

matters needing attention:

1. – m means to specify how many Map tasks to generate. The more, the better, because MySQL Server has limited carrying capacity

2. When the specified number of map tasks is > 1, you need to specify the split key in combination with the -- split by parameter to determine which part of the data each map task reads. It is best to specify numerical columns and primary keys (or evenly distributed columns = > to avoid excessive differences in the amount of data processed by each map task)

3. If the specified split key data is unevenly distributed, it may cause data skew problems

4. It is best to specify numeric type for split keys, and the field types are numeric types such as int and bigint

5. When writing a script, note: for example, for the - username parameter, the parameter value cannot be on the same line as the parameter name

--username root // FALSE // It should be divided into two lines --username root

6. An error InterruptedException will be reported when running. You can ignore the problems inherent in Hadoop 2.7.6

21/01/25 14:32:32 WARN hdfs.DFSClient: Caught exception java.lang.InterruptedException at java.lang.Object.wait(Native Method) at java.lang.Thread.join(Thread.java:1252) at java.lang.Thread.join(Thread.java:1326) at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.closeResponder(DFSOutputStream.java:716) at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.endBlock(DFSOutputStream.java:476) at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:652)

7. In fact, when sqoop reads mysql data, it uses JDBC, so when the amount of data is large, the efficiency is not very high

8. The bottom layer of sqoop imports and exports data through MapReduce. Only Map task is required, not Reduce task

9. Each Map task generates a file

MySQLToHive

First, export MySQL data and find a directory on HDFS for temporary storage. The default is: / user / user name / table name

Then load the data into Hive. After loading, the temporarily stored directory will be deleted

Write a script and save it as MySQLToHIVE.conf file

import --connect jdbc:mysql://master:3306/student?useSSL=false --username root --password 123456 --table score --fields-terminated-by "\t" --lines-terminated-by "\n" --m 3 --split-by student_id --hive-import --hive-overwrite --create-hive-table --hive-database testsqoop --hive-table score --delete-target-dir

Create testsqoop Library in Hive

hive> create database testsqoop;

Add HADOOP_CLASSPATH is added to the environment variable

vim /etc/profile # Add the following export HADOOP_CLASSPATH=$HADOOP_HOME/lib:$HIVE_HOME/lib/* # Reload environment variables source /etc/profile

Put hive-site.xml into SQOOP_HOME/conf/

cp /usr/local/soft/hive-1.2.1/conf/hive-site.xml /usr/local/soft/sqoop-1.4.7/conf/

Execute script

sqoop --options-file MySQLToHIVE.conf

–direct

With this parameter, when exporting MySQL data, you can use the export tool mysqldump provided by Mysql to speed up the export speed and improve the efficiency

You need to distribute / usr/bin/mysqldump on the master to the / usr/bin directories of node1 and node2

scp /usr/bin/mysqldump node1:/usr/bin/ scp /usr/bin/mysqldump node2:/usr/bin/

-e. use of parameters

import --connect jdbc:mysql://master:3306/student --username root --password 123456 --fields-terminated-by "\t" --lines-terminated-by "\n" --m 2 --split-by student_id --e "select * from score where student_id=1500100011 and $CONDITIONS" --target-dir /testQ --hive-import --hive-overwrite --create-hive-table --hive-database testsqoop --hive-table score2

MySQLToHBase

Write a script and save it as MySQLToHBase.conf

import --connect jdbc:mysql://master:3306/student?useSSL=false --username root --password 123456 --table student --hbase-table student --hbase-create-table --hbase-row-key id --m 1 --column-family cf1

Create student table in HBase

create 'student','cf1'

Execute script

sqoop --options-file MySQLToHBase.conf

export

HDFSToMySQL

Write a script and save it as HDFSToMySQL.conf

export --connect jdbc:mysql://master:3306/student?useSSL=false --username root --password 123456 --table student -m 1 --columns id,name,age,gender,clazz --export-dir /sqoop/data/student1/ --fields-terminated-by ','

Clear the data in the MySQL student table first, otherwise it will cause primary key conflict

Execute script

sqoop --options-file HDFSToMySQL.conf

View sqoop help

sqoop help 21/04/26 15:50:36 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6 usage: sqoop COMMAND [ARGS] Available commands: codegen Generate code to interact with database records create-hive-table Import a table definition into Hive eval Evaluate a SQL statement and display the results export Export an HDFS directory to a database table help List available commands import Import a table from a database to HDFS import-all-tables Import tables from a database to HDFS import-mainframe Import datasets from a mainframe server to HDFS job Work with saved jobs list-databases List available databases on a server list-tables List available tables in a database merge Merge results of incremental imports metastore Run a standalone Sqoop metastore version Display version information See 'sqoop help COMMAND' for information on a specific command.

# View detailed help for import sqoop import --help