The data stack is a cloud native one-stop data center PaaS. We have an interesting open source project on github and gitee: FlinkX , FlinkX is a unified data synchronization tool for batch flow based on Flink. It can collect both static data and real-time changing data. It is a data synchronization engine integrating global, heterogeneous and batch flow. Please order us a star if you like! star! star!

github open source project: https://github.com/DTStack/flinkx

gitee open source project: https://gitee.com/dtstack_dev_0/flinkx

MySQL is one of the most popular relational databases at present. With the rapid development of the Internet, MySQL database is widely used in the production systems of e-commerce, finance and many other industries.

In the actual development, operation and maintenance process, we must often encounter the problem of slow SQL. A SQL with poor performance will often bring too much performance overhead, which will lead to the excessive use of the resources of the whole operating system, and even cause session accumulation and online failure.

In the scenario of SQL tuning, a common problem is implicit type conversion. So what is implicit conversion?

In MySQL, when operators are used with operands of different types, type conversion occurs to make operands compatible, and implicit conversion occurs. Implicit conversion often means that the execution efficiency of SQL will be greatly reduced.

Next, the author will combine several common scenarios to let you actually experience what implicit conversion is and how to deal with the situation of implicit conversion. Please read the following cases.

1, Implicit conversion caused by inconsistent transfer data type and field type

A classic scenario is the implicit conversion caused by the inconsistency between the transfer data type and the field type, which is also the most common scenario we usually encounter. See the following example for details:

1) Scenarios to be optimized

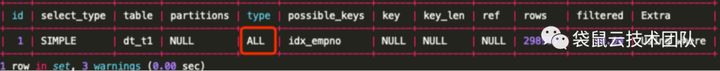

SQL and execution plan are as follows:

select * from dt_t1 where emp_no = 41680;

The table index is as follows:

key idx_empno (`emp_no`)

2) Scene analysis

From the Type part of the execution plan: ALL, full table scanning without idx_empno index. In general, the data Type passed may be inconsistent with the actual field Type. Let's take a look at the specific table structure.

root@localhost mysql.sock 5.7.28-log :[employees] 14:48:10>desc employees;

+------------+---------------+------+-----+---------+-------+

| Field | Type | Null | Key | Default | Extra |

+------------+---------------+------+-----+---------+-------+

| emp_no | varchar(14) | NO | MUL | NULL | |

| birth_date | date | NO | | NULL | |

| first_name | varchar(14) | NO | | NULL | |

| last_name | varchar(16) | NO | | NULL | |

| gender | enum('M','F') | NO | | NULL | |

| hire_date | date | NO | | NULL | |

+------------+---------------+------+-----+---------+-------+

6 rows in set (0.00 sec)

It can be seen in the table structure that the field type is varchar and the transfer field is integer, resulting in implicit conversion and no index.

3) Scene optimization

The SQL can be rewritten simply to avoid implicit conversion, as follows:

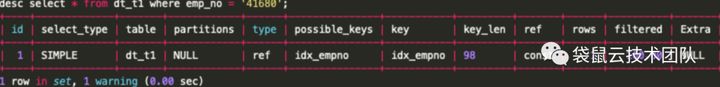

select * from dt_t1 where emp_no='41680';

When the incoming data is a varchar type consistent with the matching field, the index can be used normally. The optimization effect is as follows:

2, Implicit conversion caused by inconsistent associated field types

In addition to the query scenario of constant matching, there will also be implicit conversion when the associated fields of associated queries are inconsistent.

1) Scenarios to be optimized

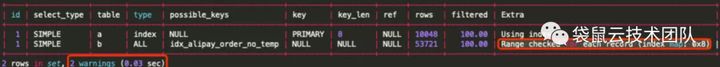

SELECT count(*) from t1 as a JOIN `t2` b on a.`id` = b.`alipay_order_no` ;

2) Scene analysis

From the execution plan, we can see the driven table b, Extra:Range checked for each record (index map: 0x8)

Generally, when we see the Range checked for each record (index map: 0x8), implicit conversion may occur. Let's see how the official document explains it.

Range checked for each record (index map: N) (JSON property: message)MySQL found no good index to use, but found that some of indexes might be used after column values from preceding tables are known. For each row combination in the preceding tables, MySQL checks whether it is possible to use a range or index_merge access method to retrieve rows. This is not very fast, but is faster than performing a join with no index at all. The applicability criteria are as described in Section 8.2.1.2, "Range Optimization", and Section 8.2.1.3, "Index Merge Optimization", with the exception that all column values for the preceding table are known and considered to be constants.

Indexes are numbered beginning with 1, in the same order as shown by SHOW INDEX for the table. The index map value N is a bitmask value that indicates which indexes are candidates. For example, a value of 0x19 (binary 11001) means that indexes 1, 4, and 5 will be considered.

View the structure of the following table:

CREATE TABLE `t2` ( `id` int(11) NOT NULL AUTO_INCREMENT, `alipay_order_no` varchar(45) DEFAULT NULL, xxxx PRIMARY KEY (`id`), KEY `idx_alipay_order_no_temp` (`alipay_order_no`) USING BTREE ) ENGINE=InnoDB AUTO_INCREMENT=2539968 DEFAULT CHARSET=utf8 A total of 1 line of records is returned,Cost 5 ms. CREATE TABLE `t1` ( `id` bigint(20) NOT NULL, xxxxxx PRIMARY KEY (`id`) ) ENGINE=InnoDB DEFAULT CHARSET=utf8 A total of 1 line of records is returned,Cost 5 ms.

We observed from the table structure that the associated field data is of type int and varchar.

When this scenario occurs, how should we optimize it?

Let's go back to the specific execution plan. The drive table is a and the driven table is B; Association condition: a.id = b.alipay_order_no ; When the field ID of table a is a constant, it is passed to b.alipay_ order_ When no, column occurs_ If the type is inconsistent and the index cannot be used, let's make the field type passed by a.id and b.alipay_ order_ If no is consistent, you can use the index?

3) Scene optimization

We can perform explicit type conversion on the associated field of the driven table to make it consistent with the type of the associated field of the driven table. The SQL after rewriting is as follows:

SELECT COUNT(*) FROM `t1` o join `t2` og ON `o`.`def8`= `og`.`group_id` WHERE o.`def1`= 'DG21424956'

2) Scene analysis

From this execution plan, we can see that the second list og contains using join buffer (Block Nested Loop), TYpe=ALL

Generally, in this case: using join buffer (Block Nested Loop), a. the associated field has no index, b. implicit conversion, etc

See the following table for the specific structure:

create table t1(

.....

`group_id` varchar(20) NOT NULL,

PRIMARY KEY (`id`),

KEY `group_id` (`group_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8

create table t2(

.....

`def8` varchar(20) DEFAULT NULL,

PRIMARY KEY (`id`),

KEY `idx_tr_def1` (`def8`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4We can see from the table structure that there are indexes in the associated fields, but the character set is different, t1 utf8, t2 utf8mb4

3) Scene optimization

The idea of SQL rewriting is similar to the above example. We convert the character set of the associated fields of the driving table, as follows:

SELECT COUNT(*) FROM `t1` o left join `t2` og ON CONVERT( o.`def8` USING utf8 ) = `og`.`group_id` WHERE o.`def1`= 'DG21424956

After being converted into a consistent character set, it can be associated through the index

3, Implicit conversion caused by inconsistent verification rules

So, as long as the data types and character sets on both sides of the operator are consistent, will there be no implicit conversion?

The answer is no, because another important attribute of the character set is the verification rules. When the verification rules are inconsistent, there will be implicit conversion behavior. See the following example for details:

1) Scenarios to be optimized

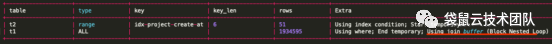

SELECT * FROM `t1` WHERE `uuid` in (SELECT uuid FROM t2 WHERE project_create_at!= "0000-00-00 00:00:00")

The SQL execution plan is as follows:

2) Scene analysis

The table structure of the two tables is as follows:

CREATE TABLE `t1` ( `id` int(11) NOT NULL AUTO_INCREMENT, ` uuid` varchar(128) COLLATE utf8_unicode_ci NOT NULL DEFAULT '' COMMENT 'UUID', xxxxxx PRIMARY KEY (`id`), UNIQUE KEY `uuid_idx` (`uuid`) ) ENGINE=InnoDB AUTO_INCREMENT=2343994 DEFAULT CHARSET=utf8 COLLATE=utf8_unicode_ci CREATE TABLE `t2` ( `id` int(11) NOT NULL AUTO_INCREMENT, `uuid` varchar(128) CHARACTER SET utf8mb4 COLLATE utf8mb4_unicode_ci NOT NULL DEFAULT '' COMMENT 'project uuid', PRIMARY KEY (`id`), ) ENGINE=InnoDB AUTO_INCREMENT=5408 DEFAULT CHARSET=utf8

From the table structure, we can see that t1 table, as the driven table uuid, has a unique index, and the data types and character sets of associated fields are consistent, but the different verification rules make it impossible to use the index in this scenario.

3) Scene optimization

We can rewrite the following to display and define the verification rules of the associated fields of the driving table to make them consistent with the driven table

explain extended select b.* from (select uuid COLLATE utf8_unicode_ci as uuid from t1 where project_create_at != "0000-00-00 00:00:00") a, t2 b where a.uuid = b.uuid +--------------+-----------------------+--------------------+----------------+-----------------------+-------------------+---------------+----------------+-----------------------+ | id | select_type | table | type | key | key_len | ref | rows | Extra | +--------------+-----------------------+--------------------+----------------+-----------------------+-------------------+---------------+----------------+-----------------------+ | 1 | PRIMARY | <derived2> | ALL | | | | 51 | | | 1 | PRIMARY | b | eq_ref | uuid_idx | 386 | a.uuid | 1 | | | 2 | DERIVED | volunteer_patients | range | idx-project-create-at | 6 | | 51 | Using index condition | +--------------+-----------------------+--------------------+----------------+-----------------------+-------------------+---------------+----------------+-----------------------+ A total of 3 lines of records are returned,Cost 4 ms.

It can be seen that the rewritten SQL normally uses the index for Field Association, which achieves our expected effect.

4, Summary

The scenarios of implicit conversion mainly include inconsistent field types, inconsistent associated field types, inconsistent character set types or inconsistent proofreading rules. When there are SQL performance problems caused by implicit conversion, analyze the corresponding scenarios and apply the medicine to the case.

In addition, implicit conversion may lead to inaccurate query result sets, inconsistent character sets, and master-slave synchronization errors. Therefore, we should try to avoid them in practical use.