We are all familiar with the various states of PVs and PVCs, but questions still come up in practice: why did a PV become Failed? How can a newly created PVC bind to an existing PV? Can a previous PV be restored? This article walks through the state changes of PVs and PVCs once more.

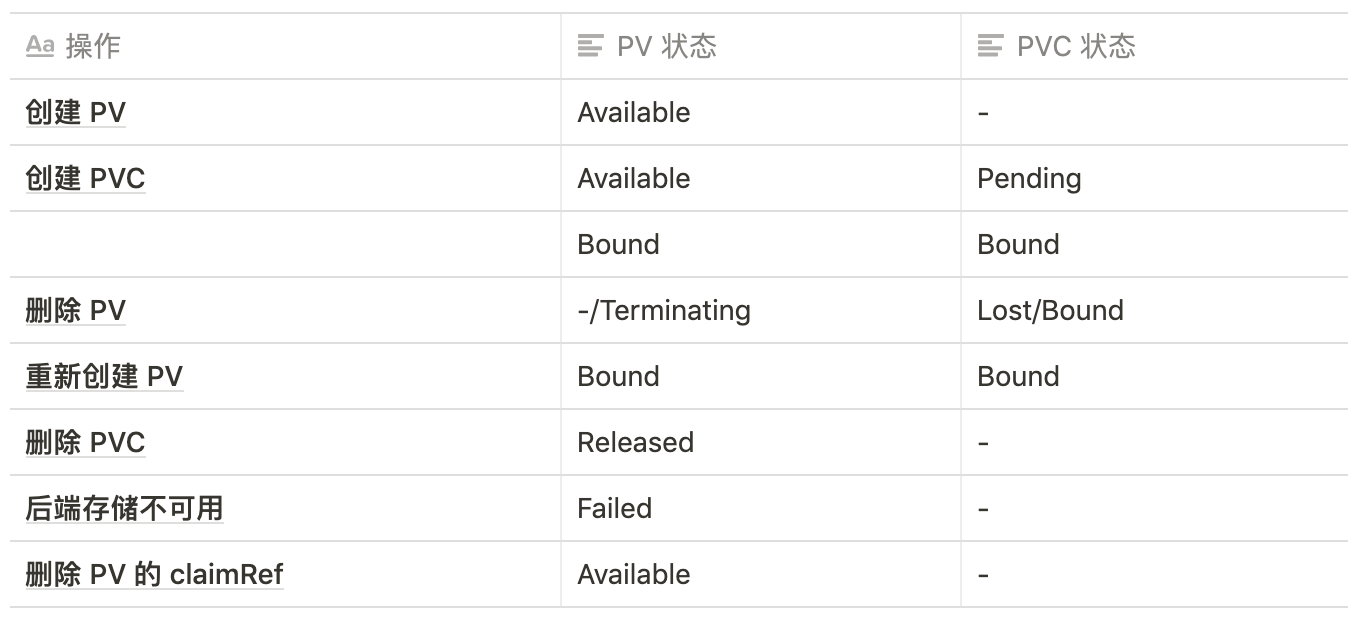

The following table describes how the states of a PV and a PVC change in different situations:

PV, PVC status

Create PV

Under normal circumstances, PV is Available after it is successfully created:

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: nfs-pv
    spec:
      storageClassName: manual
      capacity:
        storage: 1Gi
      accessModes:
        - ReadWriteOnce
      persistentVolumeReclaimPolicy: Retain
      nfs:
        path: /data/k8s      # Specify the mount point for nfs
        server: 10.151.30.1  # Specify nfs service address

Directly create the above PV object, and you can see that the status is Available, indicating that it can be used for PVC binding:

    $ kubectl get pv nfs-pv
    NAME     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
    nfs-pv   1Gi        RWO            Retain           Available           manual                  7s
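The STATUS column printed by `kubectl get pv` normally reflects the PV's `status.phase` field, so a script can read the phase directly. A minimal sketch, assuming `kubectl` is already configured for the cluster; `pv_phase` is a hypothetical helper name, not part of kubectl:

```shell
# Print the phase of a PersistentVolume (Available, Bound, Released or Failed).
# pv_phase is a hypothetical helper name used only for illustration.
pv_phase() {
  kubectl get pv "$1" -o jsonpath='{.status.phase}'
}

# Example (needs access to a cluster):
#   pv_phase nfs-pv
```

Reading a single field with `-o jsonpath` avoids parsing the column layout of the table output, which can differ between kubectl versions.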

New PVC

A newly created PVC is in the Pending state. If a suitable PV exists, the PVC immediately changes from Pending to Bound, and the corresponding PV changes to Bound as well; the PVC and PV are now bound to each other. To observe the Pending state, we can create the PVC first and the PV afterwards.

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: nfs-pvc
    spec:
      storageClassName: manual
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 1Gi

Create the above PVC resource object. It will be in Pending status just after the creation is completed:

    $ kubectl get pvc nfs-pvc
    NAME      STATUS    VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    nfs-pvc   Pending                                      manual         7s

When the PVC finds a suitable PV to bind to, it immediately changes to the Bound state, and the PV changes from Available to Bound as well:

    $ kubectl get pvc nfs-pvc
    NAME      STATUS   VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    nfs-pvc   Bound    nfs-pv   1Gi        RWO            manual         2m8s
    $ kubectl get pv nfs-pv
    NAME     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM             STORAGECLASS   REASON   AGE
    nfs-pv   1Gi        RWO            Retain           Bound    default/nfs-pvc   manual                  23s
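The Pending to Bound transition can also be watched from a script by polling the PVC's `status.phase`. The following is only a sketch under the assumption that `kubectl` can reach the cluster; `wait_for_pvc_phase` and its default 60-second timeout are hypothetical choices made for illustration:

```shell
# Poll a PVC once per second until it reaches the wanted phase
# or the timeout (in seconds, default 60) expires.
# wait_for_pvc_phase is a hypothetical helper, not a kubectl command.
wait_for_pvc_phase() {
  name=$1
  want=$2
  timeout=${3:-60}
  elapsed=0
  phase=""
  while [ "$elapsed" -lt "$timeout" ]; do
    phase=$(kubectl get pvc "$name" -o jsonpath='{.status.phase}')
    if [ "$phase" = "$want" ]; then
      echo "$name is $want"
      return 0
    fi
    sleep 1
    elapsed=$((elapsed + 1))
  done
  echo "timed out waiting for $name to become $want (last phase: $phase)" >&2
  return 1
}

# Example (needs access to a cluster):
#   wait_for_pvc_phase nfs-pvc Bound 30
```

Recent kubectl releases can do something similar with `kubectl wait --for=jsonpath=...`; the explicit loop is used here because it does not depend on the kubectl version.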

Delete PV

Since the PVC and PV are already bound, what happens if we accidentally delete the PV at this point?

    $ kubectl delete pv nfs-pv
    persistentvolume "nfs-pv" deleted
    ^C
    $ kubectl get pv nfs-pv
    NAME     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS        CLAIM             STORAGECLASS   REASON   AGE
    nfs-pv   1Gi        RWO            Retain           Terminating   default/nfs-pvc   manual                  12m
    $ kubectl get pvc nfs-pvc
    NAME      STATUS   VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    nfs-pvc   Bound    nfs-pv   1Gi        RWO            manual         13m

In fact, the delete command hangs: the PV cannot really be deleted while it is bound. Instead, the PV enters the Terminating state while the corresponding PVC stays Bound. In other words, because the PV and PVC are bound together, the PV cannot be deleted first, and its Terminating state has no effect on the PVC. So what should we do at this point?

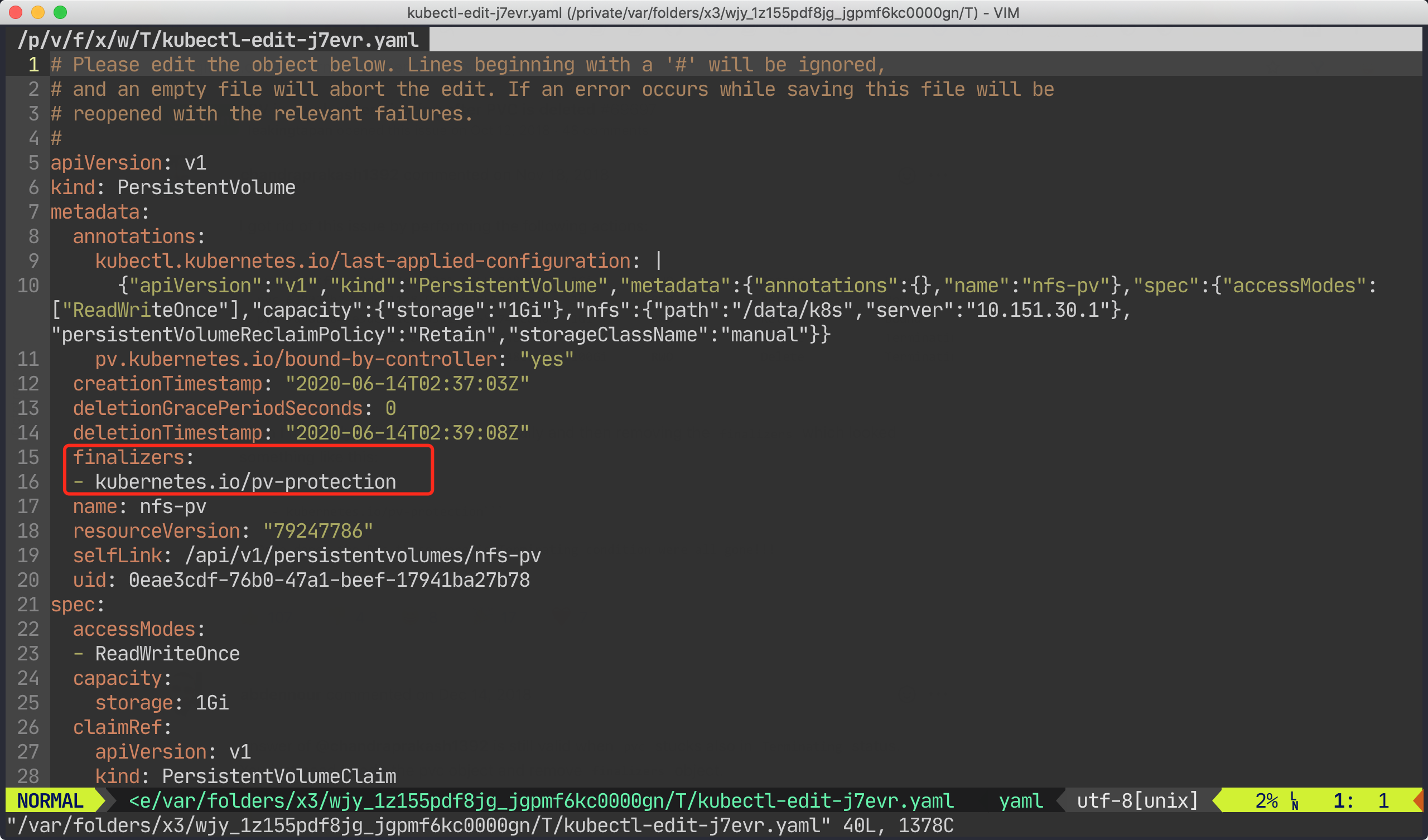

We can forcibly delete PV by editing PV and deleting the finalizers attribute in PV:

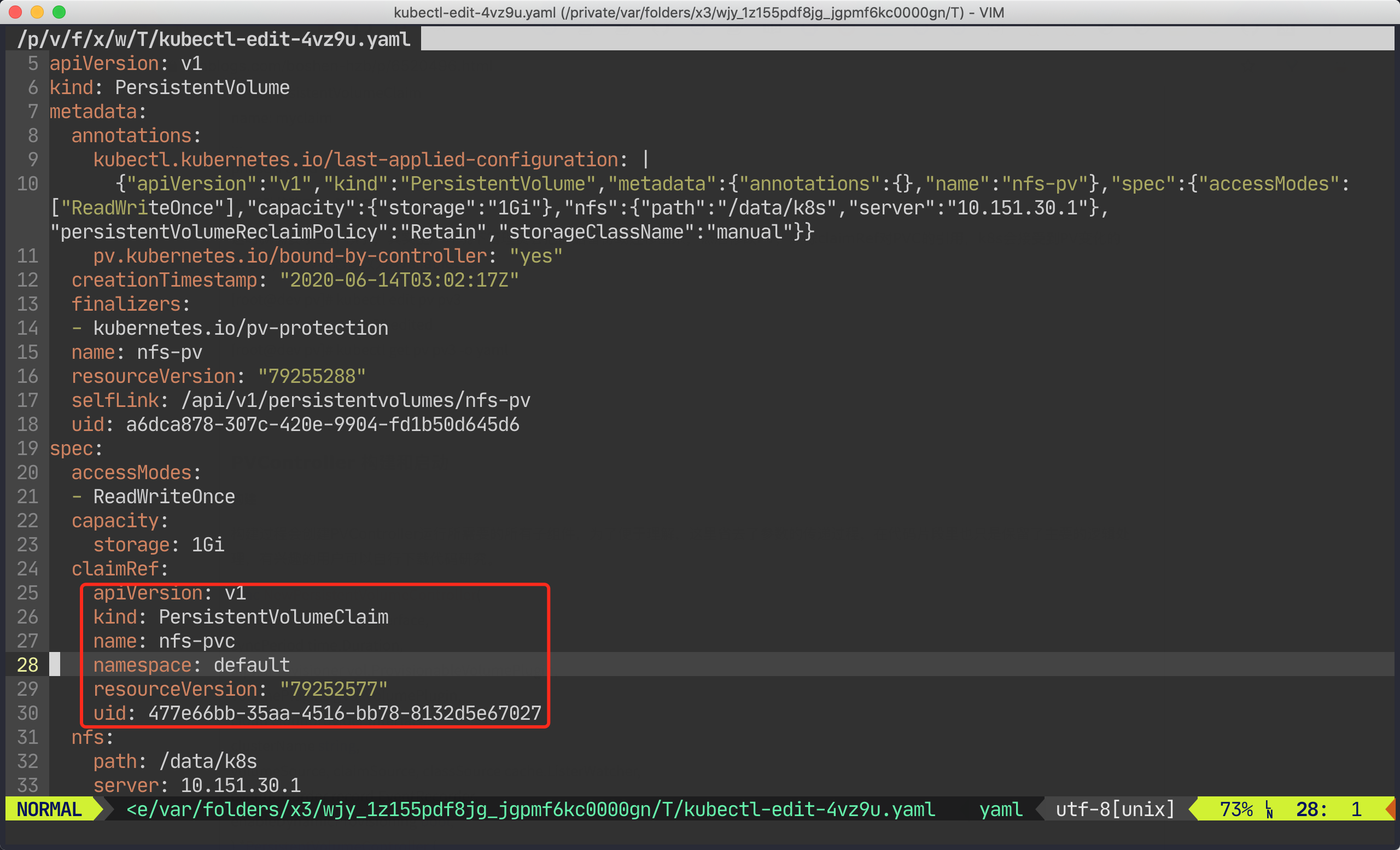

    # Delete the contents of the finalizers attribute as shown below
    $ kubectl edit pv nfs-pv

delete finalizers

After editing, the PV will be deleted and the PVC will be in Lost status:

    $ kubectl get pv nfs-pv
    Error from server (NotFound): persistentvolumes "nfs-pv" not found
    $ kubectl get pvc nfs-pvc
    NAME      STATUS   VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    nfs-pvc   Lost     nfs-pv   0                         manual         23m
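The interactive `kubectl edit` step can also be expressed as a patch, which is easier to repeat or script. This sketch assumes the only thing holding the PV back is its finalizer list (on a typical cluster, `kubernetes.io/pv-protection`); `remove_pv_finalizers` is a hypothetical helper name:

```shell
# Clear the finalizers list of a PV so that a pending deletion can complete.
# remove_pv_finalizers is a hypothetical helper name used for illustration.
# Setting the field to null in a merge patch removes it from the object.
remove_pv_finalizers() {
  kubectl patch pv "$1" --type=merge -p '{"metadata":{"finalizers":null}}'
}

# Example (needs access to a cluster; the PV is deleted once the patch is applied):
#   remove_pv_finalizers nfs-pv
```

As with the manual edit, the PV object disappears as soon as the finalizers are gone, so this should only be run when forcing the deletion is really intended.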

Recreate PV

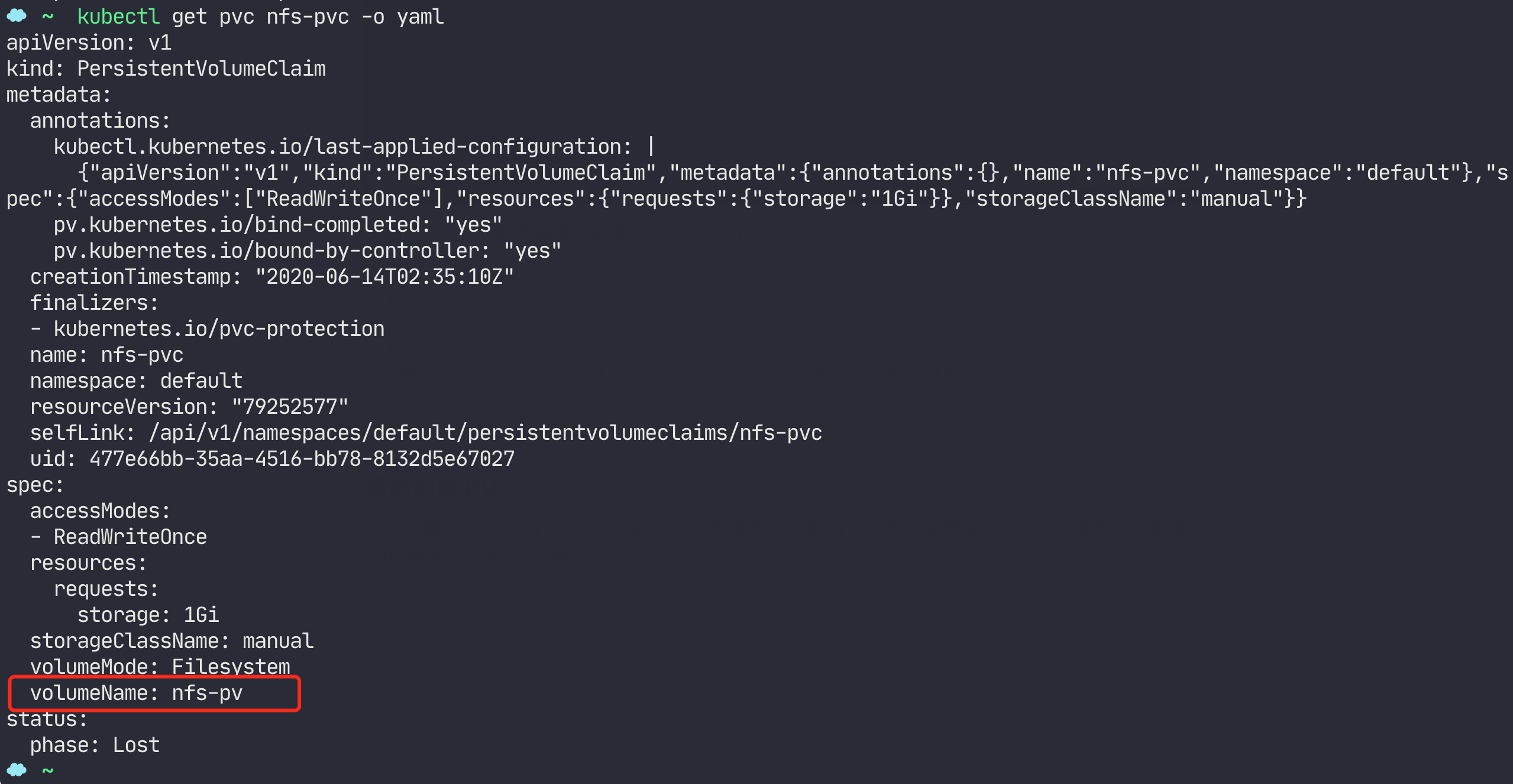

When we see that the PVC is in the Lost state, there is no need to worry. This happens because the previously bound PV no longer exists, while the PVC still holds the binding information for that PV:

pv volumeName
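To confirm this diagnosis, one can read the PV name recorded in the PVC's `spec.volumeName` field and check whether that PV still exists. A minimal sketch, assuming `kubectl` access to the cluster; `check_pvc_volume` and its messages are hypothetical:

```shell
# Report whether the PV referenced by a PVC still exists.
# check_pvc_volume is a hypothetical helper name used for illustration.
check_pvc_volume() {
  # spec.volumeName holds the name of the PV the claim was bound to.
  pv=$(kubectl get pvc "$1" -o jsonpath='{.spec.volumeName}')
  if [ -z "$pv" ]; then
    echo "PVC $1 has no volumeName recorded"
  elif kubectl get pv "$pv" >/dev/null 2>&1; then
    echo "PVC $1 references PV $pv, which exists"
  else
    echo "PVC $1 references PV $pv, which does not exist"
  fi
}

# Example (needs access to a cluster):
#   check_pvc_volume nfs-pvc
```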

Therefore, the fix is simple. You only need to recreate the previous PV:

    # Recreate PV
    $ kubectl apply -f volume.yaml
    persistentvolume/nfs-pv created

Once the PV is created successfully, both the PVC and the PV return to the Bound state:

    $ kubectl get pv nfs-pv
    NAME     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM             STORAGECLASS   REASON   AGE
    nfs-pv   1Gi        RWO            Retain           Bound    default/nfs-pvc   manual                  93s
    # PVC returned to normal Bound state
    $ kubectl get pvc nfs-pvc
    NAME      STATUS   VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    nfs-pvc   Bound    nfs-pv   1Gi        RWO            manual         27m

Delete PVC

The above is the case of deleting PV first. What would happen if we deleted PVC first?

    $ kubectl delete pvc nfs-pvc
    persistentvolumeclaim "nfs-pvc" deleted
    $ kubectl get pv nfs-pv
    NAME     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS     CLAIM             STORAGECLASS   REASON   AGE
    nfs-pv   1Gi        RWO            Retain           Released   default/nfs-pvc   manual                  3m36s

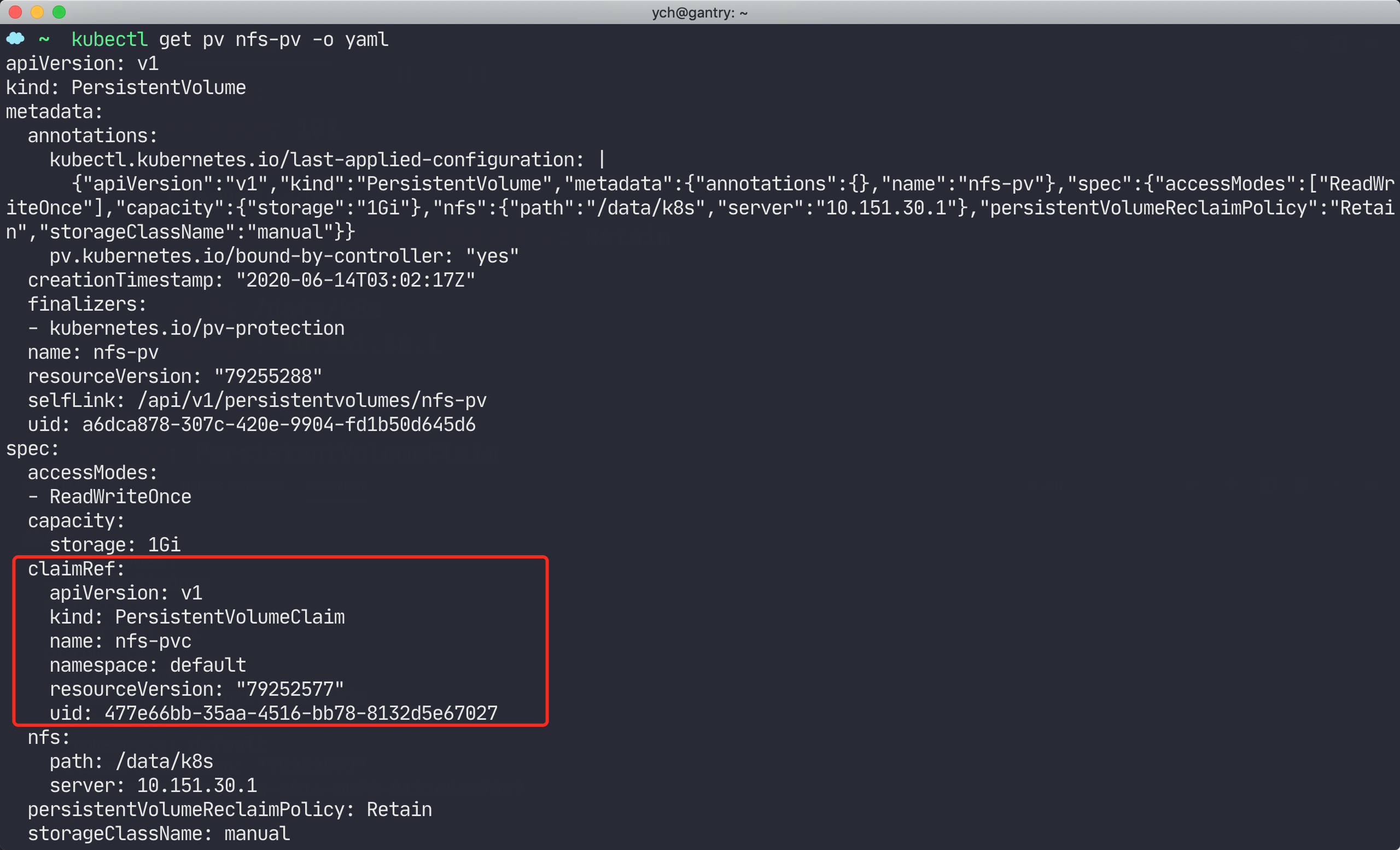

We can see that after the PVC is deleted, the PV becomes Released. However, if we look closely at the CLAIM column, it still retains the binding information of the deleted PVC. You can also export the PV object to see this information in its claimRef attribute:

pv claimRef
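One way to inspect the retained binding without reading the whole object is to print only the `spec.claimRef` fields. This is a sketch, assuming `kubectl` access to the cluster; `pv_claim_ref` is a hypothetical helper name:

```shell
# Print the claim a PV is (or was) bound to, as namespace/name.
# pv_claim_ref is a hypothetical helper name used for illustration.
pv_claim_ref() {
  kubectl get pv "$1" -o jsonpath='{.spec.claimRef.namespace}/{.spec.claimRef.name}'
}

# Example (needs access to a cluster):
#   pv_claim_ref nfs-pv
# The full object, including claimRef, can be exported with:
#   kubectl get pv nfs-pv -o yaml
```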

At this point you might think: the PVC has been deleted and the PV is Released, so if I recreate the earlier PVC, the two can bind again. In fact they cannot, because a PVC can only bind to a PV that is in the Available state.

Manual intervention is needed here. In a real production environment, the administrator backs up or migrates the data first, then edits the PV and deletes the claimRef reference to the PVC. Once the Kubernetes PV controller observes the change, it sets the PV back to the Available state, and an Available PV can of course be bound by another PVC.

Edit the PV directly and delete the content of the claimRef attribute:

    # Delete content in claimRef
    $ kubectl edit pv nfs-pv
    persistentvolume/nfs-pv edited

remove pv claimRef

After the claimRef is removed, the PV returns to the normal Available state, and the recreated PVC can bind to it as usual:

    $ kubectl get pv nfs-pv
    NAME     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
    nfs-pv   1Gi        RWO            Retain           Available           manual                  12m
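Removing the claim reference does not have to go through an interactive editor; a JSON patch achieves the same result and is easier to script. A minimal sketch, assuming the data on the volume has already been backed up or migrated; `clear_pv_claim_ref` is a hypothetical helper name:

```shell
# Remove spec.claimRef from a Released PV so it can become Available again.
# clear_pv_claim_ref is a hypothetical helper name used for illustration.
clear_pv_claim_ref() {
  kubectl patch pv "$1" --type=json -p '[{"op":"remove","path":"/spec/claimRef"}]'
}

# Example (needs access to a cluster):
#   clear_pv_claim_ref nfs-pv
#   kubectl get pv nfs-pv
```

The `remove` operation fails if the PV has no `claimRef`, which acts as a small safeguard against patching a PV that was never bound.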

Newer versions of Kubernetes have also enhanced PV functionality with features such as cloning and snapshots, which are very useful. We will cover these features later.

Original link: https://www.qikqiak.com/post/status-in-pv-pvc/