State & Fault Tolerance

Stateful function s and operators for flow processing can store the calculation state of each Event in the flow calculation process. State computation is the construction of precise operation will not or lack of plate. Flink needs to know the status of the computing node, so as to use checkpoint and savepoint mechanisms to achieve data recovery and fault tolerance. The Queryable State allows the external to query the data state during the Flink operation. When the user uses the state operation Flink to provide the state backend mechanism for storing state information, and the calculation state can be stored in the Java heap and out of the heap, depending on the statebackend mechanism adopted. Configuring statebackend does not affect the processing logic of the application.

State Backends

Flink provides different Sate backend to specify the storage method and location of the State. Depending on your State Backend, a State can be on or off the Java heap. Flink manages the Sate of the application, which means that Flink handles memory management (which can overflow to disk if necessary) to allow the application to remain very large. By default, the configuration file flink-conf.yaml determines the State Backend for all Flink jobs. However, you can override the default State Backend based on each job, as shown below.

var env = StreamExecutionEnvironment.getExecutionEnvironment(); env.setStateBackend(...);

(1) MemoryStateBackend: state data is saved in java heap memory. When checkpoint is executed, snapshot data of state will be saved in memory of jobmanager. Memory based state backend is not recommended in production environment.

(2) FsStateBackend: state data is saved in the memory of taskmanager. When checkpoint is executed, the snapshot data of state will be saved to the configured file system. hdfs and other distributed file systems can be used.

(3) RocksDBStateBackend: rocksdb is slightly different from the above. It will maintain state in the local file system, and state will be written directly to the local rocksdb. At the same time, it needs to configure a remote filesystem uri (usually HDFS), which will copy the local data directly to the filesystem when doing checkpoint. Recover from filesystem to local when fail over. Rocksdb overcomes the memory limitation of state and can be persisted to the remote file system. It is more suitable for production.

Without any configuration, the system uses MemoryStateBackend Sate

Configure RocksDBStateBackend state store

[root@CentOS flink-1.8.0]# vi conf/flink-conf.yaml

#============================================================================== # Fault tolerance and checkpointing #============================================================================== # The backend that will be used to store operator state checkpoints if # checkpointing is enabled. # # Supported backends are 'jobmanager', 'filesystem', 'rocksdb', or the # <class-name-of-factory>. # state.backend: rocksdb # Directory for checkpoints filesystem, when using any of the default bundled # state backends. # state.checkpoints.dir: hdfs:///flink-checkpoints # Default target directory for savepoints, optional. # state.savepoints.dir: hdfs:///flink-savepoints # Flag to enable/disable incremental checkpoints for backends that # support incremental checkpoints (like the RocksDB state backend). # # state.backend.incremental: false

Test savepoint

[root@CentOS flink-1.8.0]# ./bin/flink list -m CentOS:8081 ------------------ Running/Restarting Jobs ------------------- 27.04.2019 13:49:01 : 788af53fa5e8cc6d1d9e6381496727a9 : state tests (RUNNING) -------------------------------------------------------------- [root@CentOS flink-1.8.0]# ./bin/flink cancel -m CentOS:8081 -s 788af53fa5e8cc6d1d9e6381496727a9

-s can be followed by the savepoint address. If it is not specified, the default configuration address will be used.

Checkpointing

Each function and operator in Flink can be stateful. For state fault tolerance, Flink needs to check the state. Checkpoints allow Flink to restore state and location in the flow, providing the same semantics for the application as for trouble free execution. Flink's checkpoint mechanism interacts with persistent storage of flows and states. In general, it requires:

- Persistent data source can replay records in a certain period of time. Examples of such sources are persistent message queues (for example, Apache Kafka, RabbitMQ, Amazon Kinesis, Google PubSub) or file systems (for example, HDFS, S3, GFS, NFS, Ceph,...).

- Persistent storage of state, usually distributed file system (for example, HDFS, S3, GFS, NFS, Ceph,...)

By default, checkpoints are not turned on. To enable checkpoints, call enableCheckpointing (n) on the StreamExecutionEnvironment, where n is the checkpoint interval in milliseconds.

val env = StreamExecutionEnvironment.getExecutionEnvironment env.enableCheckpointing(10000)//Set the system to automatically chekpoint once in 10 seconds and record the status

Other parameters of Checkpoint include:

- Exactly once vs. at least once: you can choose to pass the pattern to the enableCheckpointing (n) method to choose between the two guarantee levels. For most applications, exactly once is preferred. At least once it may be related to some applications with ultra-low latency.

env.enableCheckpointing(10000,CheckpointingMode.EXACTLY_ONCE)

- checkpoint timeout: if it is not completed before the specified time, the checkpoint in progress will be aborted.

env.getCheckpointConfig.setCheckpointTimeout(2000)

- minimum time between checkpoints: to ensure that the flow application has a certain time interval between checkpoints, you can define how long it takes between checkpoints. If this value is set to 5000, for example, it means that the next round of checkpoint should delay at least 5 seconds after the end of the last checkpoint at the beginning of the checkpoint. When this parameter is configured, the priority of this parameter is higher than Checkpoint Interval

env.getCheckpointConfig.setMinPauseBetweenCheckpoints(5000)

- number of concurrent checkpoints: by default, when a program is placed in a place that spends too much time at a checkpoint, it will affect the flow calculation time. Therefore, by default, only one process of flink will do checkpoint. Of course, users can set multiple checkpoint threads to achieve checkpoint overlap, which is more convenient to recover in case of failure, but will reduce the performance of the program Sex cannot be used with minimum time between checkpoints.

env.getCheckpointConfig.setMaxConcurrentCheckpoints(1)

- External checkpoints: by default, checkpoints are not retained and are only used to recover jobs from failures. They are removed when the program is cancelled. Configure the periodic checkpoints to keep. Depending on the configuration, these reserved checkpoints are not automatically cleared when a job fails or is cancelled.

What happens to checkpoint when job cancellation is configured in ExternalizedCheckpointCleanup mode:

Retain? On? Cancellation: keep checkpoints when canceling a job. Note that in this case, the checkpoint state must be manually cleaned up after cancellation.

Delete? On? Cancellation: deletes a checkpoint when the job is cancelled. Checkpoint status is available only if the job fails. (default)

val env = StreamExecutionEnvironment.getExecutionEnvironment

env.enableCheckpointing(5000,CheckpointingMode.EXACTLY_ONCE)

env.getCheckpointConfig.setCheckpointTimeout(2000)

env.getCheckpointConfig.setMinPauseBetweenCheckpoints(1000)

env.getCheckpointConfig.setMaxConcurrentCheckpoints(1)

env.getCheckpointConfig.enableExternalizedCheckpoints(CheckpointConfig.ExternalizedCheckpointCleanup.RETAIN_ON_CANCELLATION)

env.socketTextStream("CentOS",9999)

.flatMap(line => for(i <- line.split("\\W+")) yield (i,1))

.keyBy(0)

.sum(1)

.print()

env.execute("state tests")

Users can perform:

[root@CentOS flink-1.8.0]# ./bin/flink list -m CentOS:8081 Waiting for response... ------------------ Running/Restarting Jobs ------------------- 27.04.2019 16:19:22 : 00abda5f7ac362b854f0e95d9a567bf5 : state tests (RUNNING) -------------------------------------------------------------- No scheduled jobs. [root@CentOS flink-1.8.0]# ./bin/flink cancel -m CentOS:8081 00abda5f7ac362b854f0e95d9a567bf5

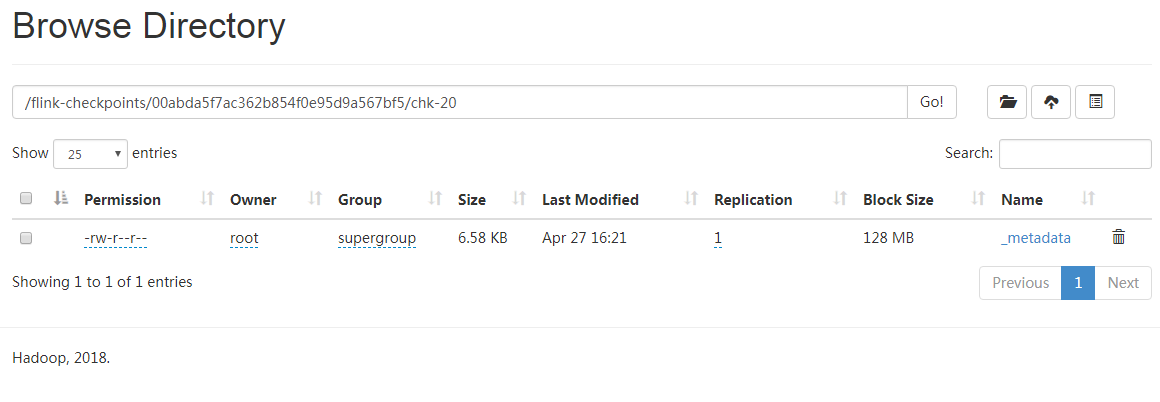

Users can view the checkpoint content in the hdfs directory

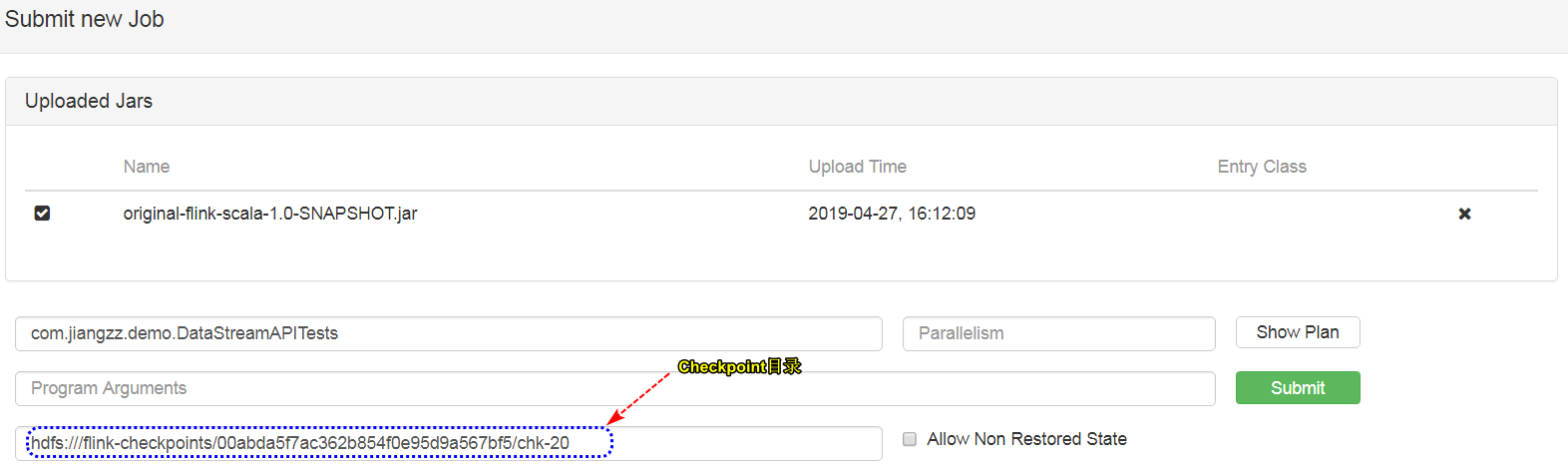

Then restart the task to test whether the status is saved

It will be found that both savepoint and checkpoint can do state recovery, but unlike savepoint, checkpoint is triggered automatically, and the trigger frequency is specified through the program. Savepoint enables the user to display the call manually, so if the user manually calls the savepoint program to exit, the checkpoint data will be automatically deleted, which means that the externalized checkpoint cleanup policy configured for appeal does not work (test version 1.8.0).

State state classification

- Keyed State: for some operations on KeyedStream, based on the key and its corresponding state

- Operator State: for the state of a non keyed state operation, for example, FlinkKafkaConsumer is an Operators state. Each Kafka consumer instance manages the Topic partition and offset information consumed by the instance.

The above two states can store state in the form of managed and raw. The managed state means that the management of state is managed to Flink to determine the data structure ("ValueState", "ListState", etc.). Flink automatically uses checkpoint mechanism to persist the calculation state during operation. All function s of Flink support the managed form. Raw state is to serialize byte persistence state at checkpoint. Flink does not know the storage structure of state. It only uses raw form to store state when Operators are defined. Flink recommends the managed form, because Flink can redistribute when the task parallelism changes, and has better memory management.

Managed Keyed State

State calculation

val env = StreamExecutionEnvironment.createLocalEnvironment()

env.socketTextStream("localhost",9999)

.flatMap(line => for(i <- line.split("\\W+")) yield (i,1))

.keyBy(0)

.map(new StateRichMapFunction())

.print()

env.execute("state tests")

- Valuestate < T >: this state records the value contained in the key. For example, you can use update (T) to update the value or T value() to get the value.

class StateRichMapFunction extends RichMapFunction[(String,Int),(String,Int)]{

var valueState:ValueState[Int] = _

override def map(value: (String, Int)): (String, Int) = {

val v = valueState.value()

var count=0

if(v == null) {

count=value._2

}else{

count = v + value._2

}

valueState.update(count)

(value._1,count)

}

override def open(parameters: Configuration): Unit = {

val context = getRuntimeContext

var vsd=new ValueStateDescriptor[Int]("count",createTypeInformation[Int])

valueState = context.getState(vsd)

}

}

- Liststate < T >: a series of elements can be stored. You can use add(T) or addAll(List) to add elements, iterative get() to get elements, or update(List) to update elements.

class StateRichMapFunction extends RichMapFunction[(String,Int),(String,Int)]{

var listState:ListState[Int] = _

override def map(value: (String, Int)): (String, Int) = {

listState.add(value._2)

(value._1,listState.get().asScala.sum)

}

override def open(parameters: Configuration): Unit = {

val context = getRuntimeContext

var lsd=new ListStateDescriptor[Int]("list",classOf[Int])

listState = context.getListState(lsd)

}

}

- Reducingstate < T >: keep a value to represent the aggregation result of all data. When creating, you need to give ReduceFunction to implement the calculation logic, and call add(T) method to implement the data summary.

class StateRichMapFunction extends RichMapFunction[(String,Int),(String,Int)]{

var reduceState:ReducingState[(String,Int)] = _

override def map(value: (String, Int)): (String, Int) = {

reduceState.add(value)

reduceState.get()

}

override def open(parameters: Configuration): Unit = {

val context = getRuntimeContext

var rsd=new ReducingStateDescriptor[(String,Int)]("reduce",

new ReduceFunction[(String, Int)] {

override def reduce(v1: (String, Int), v2: (String, Int)): (String, Int) = {

(v1._1,v1._2+v2._2)

}

}

,createTypeInformation[(String,Int)])

reduceState = context.getReducingState(rsd)

}

}

- Aggregatingstate < in, out >: reserve a value to represent the office and result of data. When creating an AggregateFunction, you need to implement calculation logic and provide add(IN) method to realize data accumulation.

class StateRichMapFunction extends RichMapFunction[(String,Int),(String,Int)]{

var aggState:AggregatingState[(String,Int),Int] = _

override def map(value: (String, Int)): (String, Int) = {

aggState.add(value)

(value._1,aggState.get())

}

override def open(parameters: Configuration): Unit = {

val context = getRuntimeContext

var asd=new AggregatingStateDescriptor[(String,Int),Int,Int]("agg",

new AggregateFunction[(String,Int),Int,Int] {

override def createAccumulator(): Int = {

0

}

override def add(value: (String, Int), accumulator: Int): Int = {

value._2+accumulator

}

override def getResult(accumulator: Int): Int = {

accumulator

}

override def merge(a: Int, b: Int): Int = {

a+b

}

},createTypeInformation[Int])

aggState = context.getAggregatingState(asd)

}

}

- FoldingState < T, ACC >: keep a value to represent the aggregate result of all data. However, in contrast to ReduceState, the aggregation type and the final type are not required to be consistent. When creating a FoldingState, you need to specify a FoldFunction and call the add(T) method to implement data summary. FoldingState is out of date in Flink version 1.4 and is expected to be abolished in later versions. Users can use AggregatingState replacement.

class StateRichMapFunction extends RichMapFunction[(String,Int),(String,Int)]{

var foldState:FoldingState[Int,Int] = _

override def map(value: (String, Int)): (String, Int) = {

foldState.add(value._2)

(value._1,foldState.get())

}

override def open(parameters: Configuration): Unit = {

val context = getRuntimeContext

val fsd=new FoldingStateDescriptor[Int,Int]("mapstate",0,new FoldFunction[Int,Int]{

override def fold(accumulator: Int, value: Int): Int = {

accumulator+value

}

},createTypeInformation[Int])

foldState = context.getFoldingState(fsd)

}

}

- Mapstate < UK, UV >: store the Mapping of some columns. Use put(UK, UV) and putall (map < UK, UV >) to add element data. You can get a value through get(UK). You can query the data through entries(), keys(), and values().

All the above states provide a clear() method to clear the state value corresponding to the current key. It should be noted that these states can only be used in some interfaces with state, which may not be stored internally, but may reside on disk or other locations.

class StateRichMapFunction extends RichMapFunction[(String,Int),(String,Int)]{

var mapState:MapState[String,Int] = _

override def map(value: (String, Int)): (String, Int) = {

var count=0;

if(mapState.contains(value._1)){

count=mapState.get(value._1)+value._2

}else{

count=value._2

}

(value._1,count)

}

override def open(parameters: Configuration): Unit = {

val context = getRuntimeContext

val msd=new MapStateDescriptor[String,Int]("mapstate",createTypeInformation[String],createTypeInformation[Int])

mapState = context.getMapState(msd)

}

}

State Time-To-Live (TTL)

Time to live (TTL) can be assigned to any type of keyed state. If TTL is configured and the status value has expired, every effort will be made to clear the stored value. All state collection types support TTL for each entry. This means that list elements and map entries expire independently. In order to use state TTL, you must first build the StateTtlConfig configuration object. You can then enable the TTL feature in any StateDescriptor by passing the configuration:

class StateRichMapFunction extends RichMapFunction[(String,Int),(String,Int)]{

var foldState:FoldingState[Int,Int] = _

override def map(value: (String, Int)): (String, Int) = {

foldState.add(value._2)

(value._1,foldState.get())

}

override def open(parameters: Configuration): Unit = {

val context = getRuntimeContext

//Configure TTL expiration time

var ttlConfig = StateTtlConfig

.newBuilder(Time.seconds(5))

.setUpdateType(StateTtlConfig.UpdateType.OnCreateAndWrite)

.setStateVisibility(StateTtlConfig.StateVisibility.NeverReturnExpired)

.build();

val fsd=new FoldingStateDescriptor[Int,Int]("mapstate",0,new FoldFunction[Int,Int]{

override def fold(accumulator: Int, value: Int): Int = {

accumulator+value

}

},createTypeInformation[Int])

fsd.enableTimeToLive(ttlConfig)

foldState = context.getFoldingState(fsd)

}

}

There are several options to consider for configuration: the first parameter of the newBuilder method is required, which is the value of the expiration time. When updating type configuration state TTL refresh (default is OnCreateAndWrite):

- StateTtlConfig.UpdateType.OnCreateAndWrite - create and write access only

- StateTtlConfig.UpdateType.OnReadAndWrite - also read access

Whether the state visibility configuration returns an expiration value (if not cleared) when reading access (NeverReturnExpired by default):

- StateTtlConfig.StateVisibility.NeverReturnExpired - expiration value will never be returned

- StateTtlConfig.StateVisibility.ReturnExpiredIfNotCleanedUp - return if still available

In the case of NeverReturnExpired, expired states are not returned. Another option, ReturnExpiredIfNotCleanedUp, allows you to return the expired State before cleanup.

- Enabling the TTL feature increases the cost of state storage, because the system not only stores the state, but also the timestamp of the last modification.

- Currently, only processing time TTL is supported, which means that the expiration time is managed by the computing node.

- If TTL is not enabled in the previous state, TTL configuration cannot be used when the task is restarted. Otherwise, the system will cause compatibility failure and StateMigrationException.

By default, expiration values are removed only when they are explicitly read out, for example by calling ValueState.value(). This means that by default, if the expired state is not read, it will not be deleted, which may cause the state to grow.

Cleanup in full snapshot

In addition, you can activate cleanup when you take a full State snapshot, which reduces the size of the State. This strategy does not reduce the local State, but does not load expired State data when love loads State. It can be configured in StateTtlConfig:

var ttlConfig = StateTtlConfig

.newBuilder(Time.seconds(1))

.cleanupFullSnapshot()

.build();

Incremental cleanup

Another option is to trigger a gradual cleanup of some state items. The system will iterate the expired entry every time the user accesses the operation state. If the entry is found to be expired, the entry will be deleted

var ttlConfig = StateTtlConfig

.newBuilder(Time.seconds(1))

.cleanupIncrementally()

.build();

At present, only Heap state backend is supported, enabling this policy will increase the delay of record processing.

Cleanup during RocksDB compaction

If you use the RocksDB state backend, another cleanup strategy is to activate Flink specific compression filters. RocksDB periodically runs asynchronous compression to merge state updates and reduce storage. The Flink compression filter uses TTL to check the expiration timestamp of the status entry and exclude the expiration value.

var ttlConfig = StateTtlConfig

.newBuilder(Time.seconds(1))

.cleanupInRocksdbCompactFilter()

.build();

Managed Operator State

With managed Operator State, stateful functions can implement a more general checkpoint edfunction interface, or listcheckpointed < T extensions serializable > interface.

CheckpointedFunction

The checkpoint edfunction interface provides access to non key states with different redistribution schemes. It needs to implement two methods:

void snapshotState(FunctionSnapshotContext context) throws Exception; void initializeState(FunctionInitializationContext context) throws Exception;

When Flink is performing Checkpoint, the system will call the snapshotState() method, and the corresponding initializeState() method will be called when the system initializes the function. Note that this method is called when the function is initialized for the first time or when it is recovered from an earlier Checkpoint state.

List style managed operator state is supported so far. This state requires a list of columns of serializable objects that are independent of each other, so the state can be reallocated when the cluster parallelism changes. Currently, there are two state allocation schemes supported:

- Average allocation: each operator returns a list of status elements. The entire state is logically a concatenation of all lists. In recovery, systematization will divide states equally

- Federated allocation: each operator returns a list of status elements. The entire state is logically a concatenation of all lists. At recovery / reallocation, each operator gets a complete list of state elements.

For example, implement a BufferSink to buffer data

class BufferingSink extends SinkFunction[(String,Int)] with CheckpointedFunction{

var listState:ListState[(String,Int)] = _

var bufferList:ArrayBuffer[(String,Int)] =new ArrayBuffer[(String,Int)]

override def invoke(value: (String, Int)): Unit = {

bufferList += value

if(bufferList.size >=10){

println("Buffer data:"+bufferList.mkString(","))

bufferList.clear()

}

}

override def snapshotState(context: FunctionSnapshotContext): Unit = {

listState.clear()

//Store data in buffer state

listState.addAll(bufferList.asJava)

}

override def initializeState(context: FunctionInitializationContext): Unit = {

val lsd = new ListStateDescriptor[(String,Int)]("buffer list",createTypeInformation[(String,Int)])

listState = context.getOperatorStateStore.getListState(lsd)

//If it's state recovery

if(context.isRestored){

bufferList.clear()

bufferList ++=(listState.get().asScala)

}

}

}

env.socketTextStream("CentOS",9999)

.flatMap(line => for(i <- line.split("\\W+")) yield (i,1))

.keyBy(0)

.sum(1)

.addSink(new BufferingSink)

env.execute("state tests")

View task list

[root@CentOS flink-1.7.2]# ./bin/flink list -m CentOS:8081 ------------------ Running/Restarting Jobs ------------------- 27.04.2019 18:35:35 : af6becbe2c6578d769571db8a1263596 : state tests (RUNNING) -------------------------------------------------------------- [root@CentOS flink-1.7.2]# ./bin/flink cancel af6becbe2c6578d769571db8a1263596 -s hdfs://CentOS:9000/savepoint -m CentOS:8081

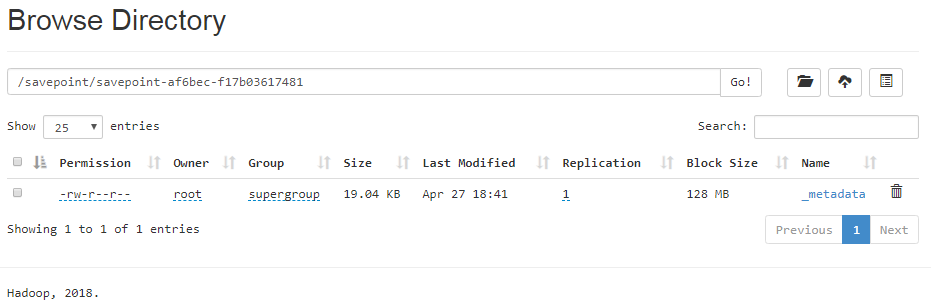

You can click HDFS to view the savepoint status, which stores the data before shutdown.

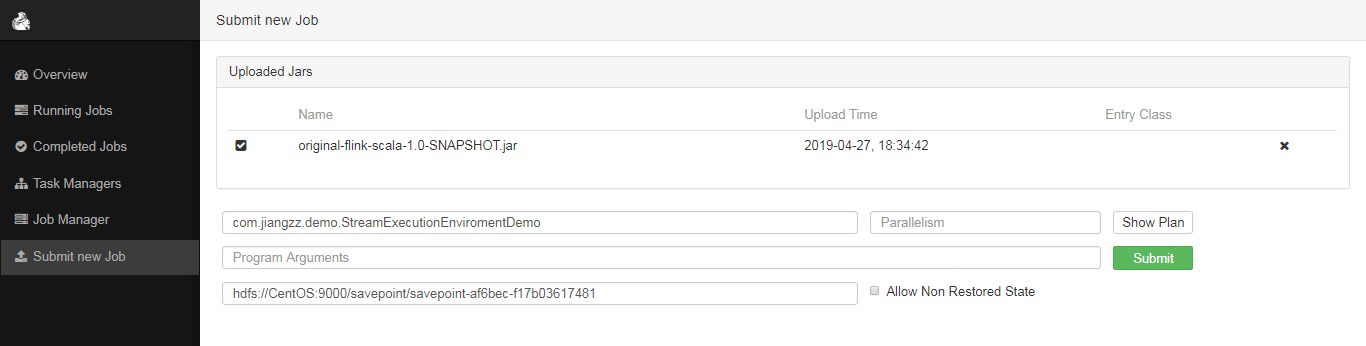

Then the user tries to recover the state before shutdown by using the interface, and observe whether the data can be recovered

ListCheckpointed

The ListCheckpointed interface is a more limited variant of the CheckpointedFunction, which only supports list style states with even split reallocation schemes at recovery time.

List<T> snapshotState(long checkpointId, long timestamp) throws Exception; void restoreState(List<T> state) throws Exception;

On the snapshotState(), the Operator should return a list of objects for the checkpoint, which restoreState must handle at recovery time. If the state is not repartitionable, collection.singletonlist (my \ state) can always be returned in snapshotState(). Stateful sources require more attention than other operators. In order for state and output sets to be updated to atoms (required for precise semantics at the time of failure / recovery), users need to obtain locks from the context of the Source.

import java.{lang, util}

import java.util.Collections

import org.apache.flink.streaming.api.checkpoint.ListCheckpointed

import org.apache.flink.streaming.api.functions.source.{RichParallelSourceFunction, SourceFunction}

import scala.collection.JavaConversions._

class CounterSource extends RichParallelSourceFunction[Long] with ListCheckpointed[java.lang.Long] {

@volatile

private var isRunning = true

private var offset = 0L

//Snapshot data

override def snapshotState(checkpointId: Long, timestamp: Long):

util.List[lang.Long] = {

Collections.singletonList(offset)

}

//Restore snapshot

override def restoreState(state: util.List[java.lang.Long]): Unit = {

for (s <- state) {

offset = s

}

}

//Operational output

override def run(ctx: SourceFunction.SourceContext[Long]): Unit = {

val lock = ctx.getCheckpointLock

while (isRunning) {

// output and state update are atomic

lock.synchronized({

Thread.sleep(1000)

ctx.collect(offset)

offset += 1

})

}

}

override def cancel(): Unit = {

isRunning = false

}

}

import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment

import org.apache.flink.api.scala._

val env =StreamExecutionEnvironment.getExecutionEnvironment

env.addSource(new CounterSource)

.map(i=> i+" offset")

.print()

env.execute("Test case")

Broadcast State

In addition to Keyed State and Operator State, the third State of Flink is broadcast State. Broadcast State is introduced to support such a scenario: broadcast State is an Operator State supported by Flink. Using broadcast State, you can input data records in a Stream of Flink program, and then broadcast these data records to each Task downstream, so that these data records can be shared by all tasks, such as some data records for configuration. In this way, when each Task processes the records in its corresponding Stream, it reads these configurations to meet the actual data processing needs.

Broadcast State API

Generally, we will first create a Keyed or non Keyed Data Stream, then create a Broadcasted Stream, and finally connect (call the connect method) to the Broadcasted Stream through the Data Stream, so as to broadcast the broadcasted state to each Task downstream of the Data Stream. If the Data Stream is Keyed Stream, after connecting to the Broadcasted Stream, the KeyedBroadcastProcessFunction needs to be used to add processing ProcessFunction. The following is the API of KeyedBroadcastProcessFunction, and the code is as follows:

public abstract class KeyedBroadcastProcessFunction<KS, IN1, IN2, OUT> {

public abstract void processElement(IN1 value, ReadOnlyContext ctx, Collector<OUT> out) throws Exception;

public abstract void processBroadcastElement(IN2 value, Context ctx, Collector<OUT> out) throws Exception;

public void onTimer(long timestamp, OnTimerContext ctx, Collector<OUT> out) throws Exception;

}

The meanings of the parameters in the above generics are as follows:

- KS: indicates the type of Key that Flink program depends on when calling keyBy when building Stream from the upstream Source Operator;

- IN1: indicates the type of data record in the non Broadcast Data Stream;

- IN2: indicates the type of data record in the Broadcast Stream;

- OUT: indicates the type of output data record after being processed by processElement() and processBroadcastElement() methods of keyedprocessfunction.

If the Data Stream is a non keyed stream, after connecting to the Broadcasted Stream, the broadcasteprocessfunction needs to be used to add processing ProcessFunction. The following is the API of broadcasteprocessfunction, and the code is as follows:

public abstract class BroadcastProcessFunction<IN1, IN2, OUT> extends BaseBroadcastProcessFunction {

public abstract void processElement(final IN1 value, final ReadOnlyContext ctx, final Collector<OUT> out) throws Exception;

public abstract void processBroadcastElement(final IN2 value, final Context ctx, final Collector<OUT> out) throws Exception;

}

The meanings of the above generic parameters are the same as the last three in the generic type of keyedboardprocessfunction, except that KS generic parameters are not needed without calling the keyBy operation to partition the original Stream.

val env = StreamExecutionEnvironment.createLocalEnvironment()

val stream1 = env.socketTextStream("localhost", 9999)

.flatMap(_.split(" "))

.map((_, 1))

.setParallelism(3)

.keyBy(_._1)

val mapStateDescriptor = new MapStateDescriptor[String,(String,Int)]("user state",BasicTypeInfo.STRING_TYPE_INFO,createTypeInformation[(String,Int)])

val stream2 = env.socketTextStream("localhost", 8888)

.map(line => (line.split(",")(0), line.split(",")(1).toInt))

.broadcast(mapStateDescriptor)

stream1.connect(stream2).process(new KeyedBroadcastProcessFunction[String,(String,Int),(String,Int),String]{

override def processElement(in1: (String, Int), readOnlyContext: KeyedBroadcastProcessFunction[String, (String, Int), (String, Int), String]#ReadOnlyContext, collector: Collector[String]): Unit = {

val mapBroadstate = readOnlyContext.getBroadcastState(mapStateDescriptor)

println("in1:"+in1)

println("---------state---------")

for (i <- mapBroadstate.immutableEntries()){

println(i.getKey+" "+i.getValue)

}

}

override def processBroadcastElement(in2: (String, Int), context: KeyedBroadcastProcessFunction[String, (String, Int), (String, Int), String]#Context, collector: Collector[String]): Unit = {

context.getBroadcastState(mapStateDescriptor).put(in2._1,in2)

}

}).print()

env.execute("Test broadcast status")

More highlights