Spark relies heavily on event listening to implement communication between components on the driver side. This article explains how event listening is implemented in Spark.

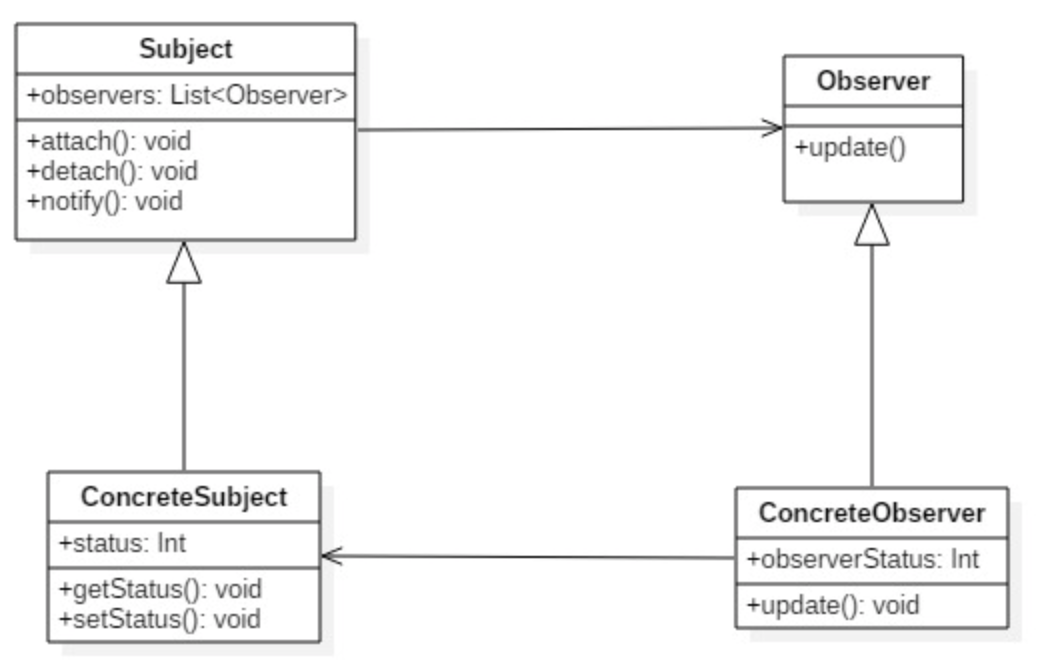

Observer pattern and listeners

The observer pattern is a design pattern that establishes a dependency between objects. When one object's state changes, it immediately notifies the other objects, and they respond accordingly. The object whose state changes is called the observation target (also called the subject), and the notified objects are called observers. Multiple observers can be registered with one subject. The observers are independent of each other, and observers can be added or removed as needed.
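Here is a minimal Scala sketch of the pattern. The class names Subject, Observer, HistoryObserver and MaxObserver are illustrative and are not Spark classes. The subject keeps a list of observers and notifies each of them on every state change:

```scala
import scala.collection.mutable.ListBuffer

// Observer: anything that wants to be told when the subject's state changes
trait Observer[S] {
  def update(newState: S): Unit
}

// Subject (observation target): owns the state and the list of registered observers
class Subject[S](initial: S) {
  private var state: S = initial
  private val observers = ListBuffer.empty[Observer[S]]

  def current: S = state
  def attach(o: Observer[S]): Unit = observers += o
  def detach(o: Observer[S]): Unit = observers -= o

  // A state change immediately notifies every registered observer
  def setState(newState: S): Unit = {
    state = newState
    observers.foreach(_.update(newState))
  }
}

// Two independent observers that react differently to the same change
class HistoryObserver extends Observer[Int] {
  val seen = ListBuffer.empty[Int]
  def update(newState: Int): Unit = seen += newState
}

class MaxObserver extends Observer[Int] {
  var max: Int = Int.MinValue
  def update(newState: Int): Unit = max = math.max(max, newState)
}

val subject = new Subject[Int](0)
val history = new HistoryObserver
val maxSeen = new MaxObserver
subject.attach(history)
subject.attach(maxSeen)
subject.setState(3)
subject.setState(7)
subject.detach(history) // observers can be removed at any time
subject.setState(5)
println(history.seen.toList) // List(3, 7)
println(maxSeen.max)         // 7
```

The two observers know nothing about each other. That independence is what lets observers be added or removed freely.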

Event-driven asynchronous programming

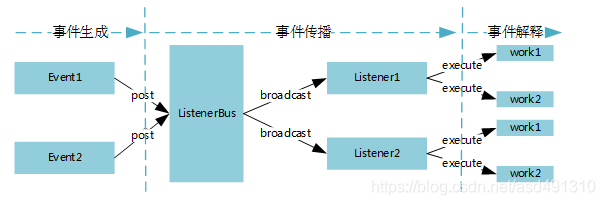

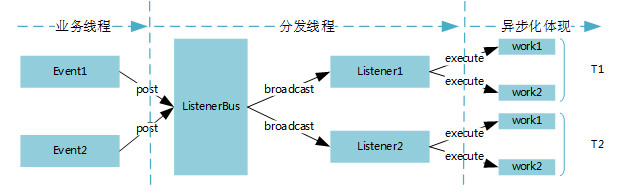

The event framework in Spark core implements an event-based asynchronous programming model. Its biggest advantage is that it lets an application make full use of the available physical resources, squeezing as much out of them as possible and improving processing efficiency. Its disadvantage is just as obvious: the application becomes harder to read. Spark's event-based asynchronous programming framework consists of an event framework and an asynchronous execution thread pool. Events generated by the application are sent to the listener bus, which broadcasts them to all listeners. Each listener decides whether it is interested in an event. If it is, the code block corresponding to that event is executed in the listener's own thread pool. The following figure shows the relationship among Event, listener bus, Listener, and Executor from three perspectives: event generation, event propagation, and event interpretation.
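The flow described above can be sketched as follows. This is a simplified illustration under stated assumptions, not Spark's code:

- The names SimpleEventBus, AsyncListener, JobStart and JobEnd are hypothetical.
- A listener's interest is modeled as a PartialFunction.
- Each listener's exclusive thread pool is modeled as a single-thread ExecutorService.

```scala
import java.util.concurrent.{ConcurrentLinkedQueue, CopyOnWriteArrayList, ExecutorService, Executors, TimeUnit}
import scala.jdk.CollectionConverters._

// Hypothetical event types used only in this sketch
sealed trait Event
final case class JobStart(jobId: Int) extends Event
final case class JobEnd(jobId: Int) extends Event

// A listener declares which events interest it (the partial function's domain) and owns
// an exclusive single-thread executor in which its handling logic runs
abstract class AsyncListener {
  val executor: ExecutorService = Executors.newSingleThreadExecutor()
  def handle: PartialFunction[Event, Unit]
}

class SimpleEventBus {
  private val listeners = new CopyOnWriteArrayList[AsyncListener]()

  def register(l: AsyncListener): Unit = listeners.add(l)

  // Broadcast: every listener is offered the event; for interested listeners the work is
  // handed to their own executor, so post() returns without running any handler itself
  def post(event: Event): Unit =
    listeners.asScala.foreach { l =>
      if (l.handle.isDefinedAt(event)) l.executor.execute(() => l.handle(event))
    }

  // Stop each executor and wait for the handlers it has already accepted to finish
  def shutdown(): Unit =
    listeners.asScala.foreach { l =>
      l.executor.shutdown()
      l.executor.awaitTermination(10, TimeUnit.SECONDS)
    }
}

object BusDemo {
  def run(): List[String] = {
    val log = new ConcurrentLinkedQueue[String]()
    val bus = new SimpleEventBus
    // Interested only in JobStart
    bus.register(new AsyncListener {
      def handle: PartialFunction[Event, Unit] = { case JobStart(id) => log.add(s"start $id") }
    })
    // Interested in every event
    bus.register(new AsyncListener {
      def handle: PartialFunction[Event, Unit] = { case e => log.add(s"saw $e") }
    })
    bus.post(JobStart(1))
    bus.post(JobEnd(1))
    bus.shutdown()
    log.asScala.toList.sorted // handlers ran on different threads, so sort for a stable order
  }
}

val handled = BusDemo.run()
println(handled)
```

Because each listener owns its executor, a slow listener delays only its own events. The posting thread returns as soon as the work has been scheduled.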

Viewed from the perspective of threads, asynchronous processing appears in two stages: event propagation and event interpretation. Making event interpretation asynchronous is what gives us event-based asynchronous programming.

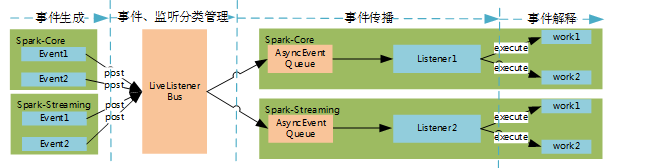

Spark's implementation

Spark core and Spark Streaming manage events by category (divide and conquer): each major category has its own events and its own listener bus.

Event

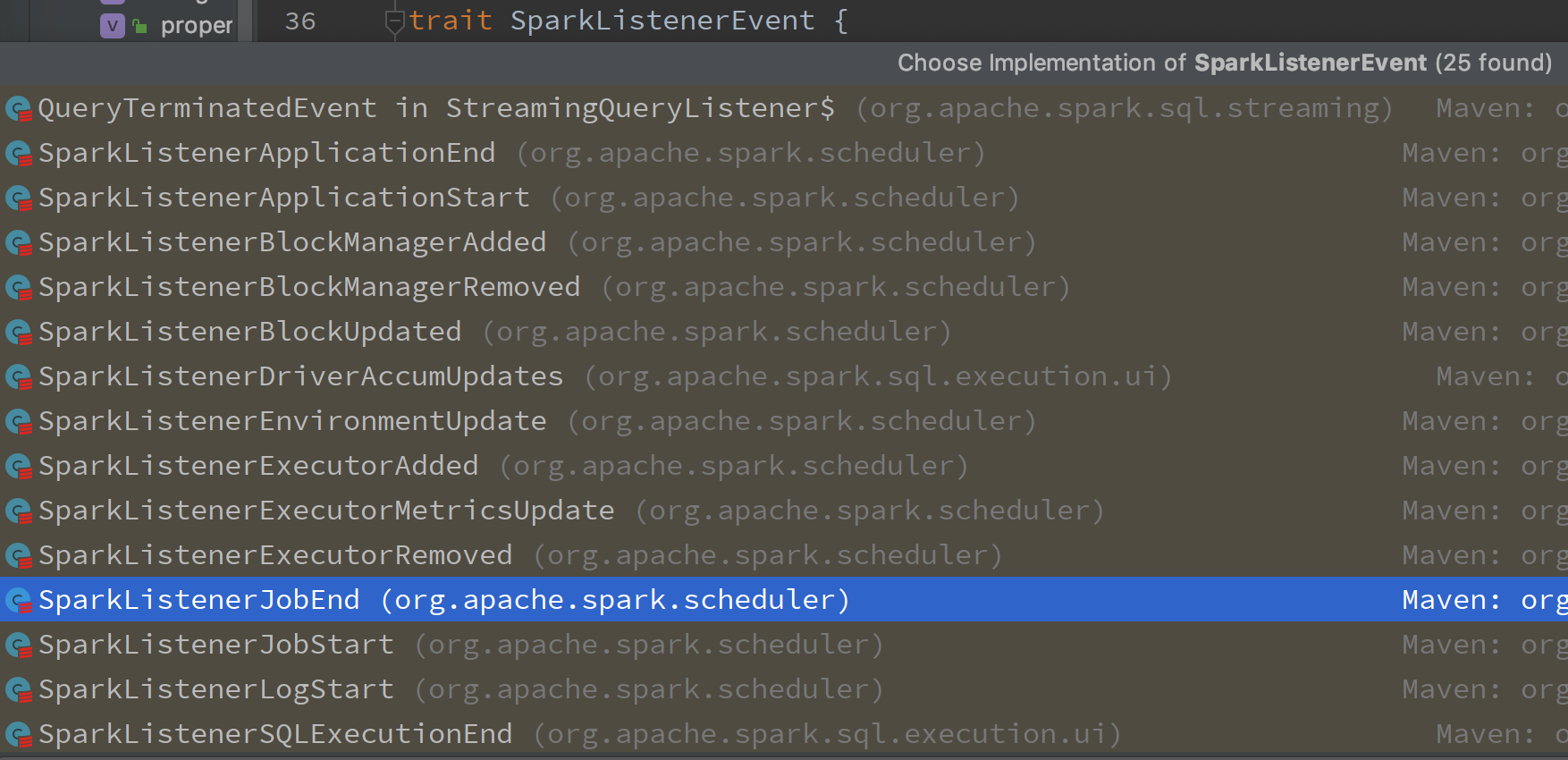

The core event trait of Spark core is SparkListenerEvent, and the core event trait of Spark Streaming is StreamingListenerEvent.
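Below is a simplified sketch of this layout, with one root trait per category. CoreEvent and StreamingEvent stand in for SparkListenerEvent and StreamingListenerEvent. The concrete event classes and their fields are hypothetical and do not reproduce Spark's definitions. The root traits are left unsealed so that new event types can be added elsewhere.

```scala
// Root of the core-event category (stands in for SparkListenerEvent in this sketch)
trait CoreEvent
// Root of the streaming-event category (stands in for StreamingListenerEvent)
trait StreamingEvent

// Concrete events are immutable case classes; the fields are illustrative, not Spark's
final case class TaskStart(stageId: Int, taskId: Long) extends CoreEvent
final case class TaskEnd(stageId: Int, taskId: Long, succeeded: Boolean) extends CoreEvent
final case class BatchCompleted(batchTimeMs: Long) extends StreamingEvent

// A core-category handler only accepts CoreEvent, so passing a streaming event,
// e.g. describeCore(BatchCompleted(0L)), is rejected at compile time
def describeCore(e: CoreEvent): String = e match {
  case TaskStart(stage, task)   => s"task $task started in stage $stage"
  case TaskEnd(stage, task, ok) => s"task $task in stage $stage ended, succeeded=$ok"
  case other                    => s"unhandled core event $other"
}

println(describeCore(TaskStart(0, 42L))) // task 42 started in stage 0
```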

The following figure shows various event entity classes:

What should we pay attention to when defining events? Let us take SparkListenerTaskStart as an example and analyze the characteristics of an event.

- Self-explanatory name: from the name SparkListenerTaskStart alone, we can guess that it is SparkListener's task-start event.

- Trigger condition: the trigger condition of an event must be clear and must describe one specific behavior, and that behavior should have a single owner. The owner that generates SparkListenerTaskStart is DAGScheduler: the event is generated after DAGScheduler generates a BeginEvent.

- Event propagation: events can be propagated point-to-point or by broadcast, chosen according to business needs. The event frameworks of Spark core and Spark Streaming both use broadcast.

- Event interpretation: an event can have one or more interpretations. Because Spark core and Spark Streaming use broadcast, an event can be interpreted by several listeners. In principle, each listener has only one interpretation of a given event. For example, AppStatusListener, EventLoggingListener, and ExecutorAllocationManager each interpret SparkListenerTaskStart in their own way.

When designing an event framework, we can draw on the four points above, according to actual needs, to design the most suitable framework.
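To illustrate one event with several interpretations, the sketch below broadcasts the same task-start event to three listeners that each do something different with it. All names are hypothetical stand-ins that only loosely mirror Spark's roles:

- TaskStartEvent stands in for SparkListenerTaskStart.
- StatusTracker plays the role of AppStatusListener.
- EventRecorder plays the role of EventLoggingListener.
- AllocationTracker plays the role of ExecutorAllocationManager.

```scala
import scala.collection.mutable

// Simplified stand-in for SparkListenerTaskStart; the fields are illustrative
final case class TaskStartEvent(stageId: Int, taskId: Long)

// Every listener gives the same event exactly one interpretation of its own
trait TaskStartListener {
  def onTaskStart(e: TaskStartEvent): Unit
}

// In the role of AppStatusListener: tracks how many tasks have started
class StatusTracker extends TaskStartListener {
  var startedTasks = 0
  def onTaskStart(e: TaskStartEvent): Unit = startedTasks += 1
}

// In the role of EventLoggingListener: turns the event into a log line
class EventRecorder extends TaskStartListener {
  val lines = mutable.ArrayBuffer.empty[String]
  def onTaskStart(e: TaskStartEvent): Unit =
    lines += s"TaskStart stage=${e.stageId} task=${e.taskId}"
}

// In the role of ExecutorAllocationManager: counts started tasks per stage
class AllocationTracker extends TaskStartListener {
  val tasksPerStage = mutable.Map.empty[Int, Int].withDefaultValue(0)
  def onTaskStart(e: TaskStartEvent): Unit = tasksPerStage(e.stageId) += 1
}

val status = new StatusTracker
val recorder = new EventRecorder
val allocation = new AllocationTracker
val listeners: Seq[TaskStartListener] = Seq(status, recorder, allocation)

// Broadcast: each event object is handed to every listener
Seq(TaskStartEvent(0, 1L), TaskStartEvent(0, 2L), TaskStartEvent(1, 3L))
  .foreach(e => listeners.foreach(_.onTaskStart(e)))

println(status.startedTasks)         // 3
println(recorder.lines.head)         // TaskStart stage=0 task=1
println(allocation.tasksPerStage(0)) // 2
```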

Listener

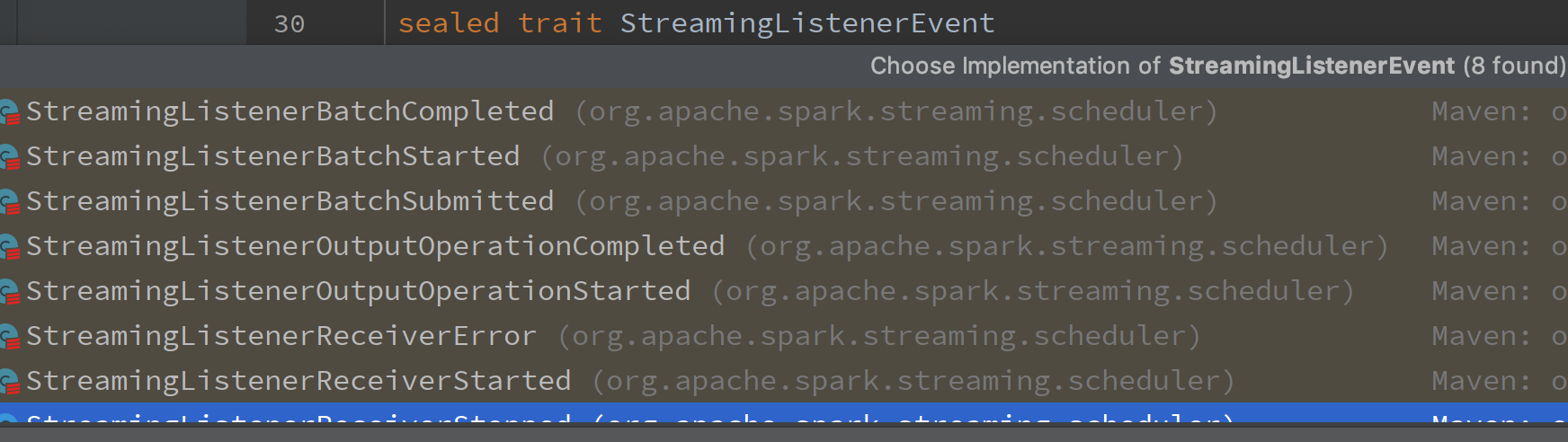

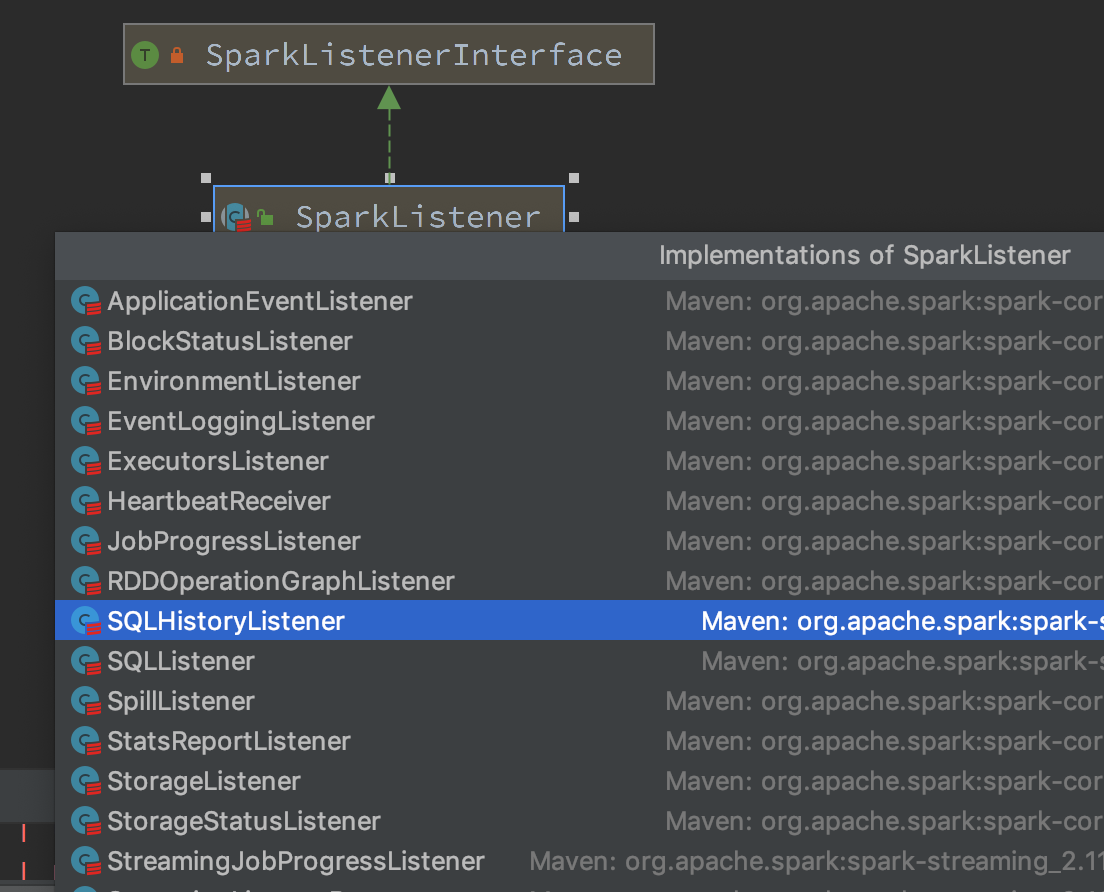

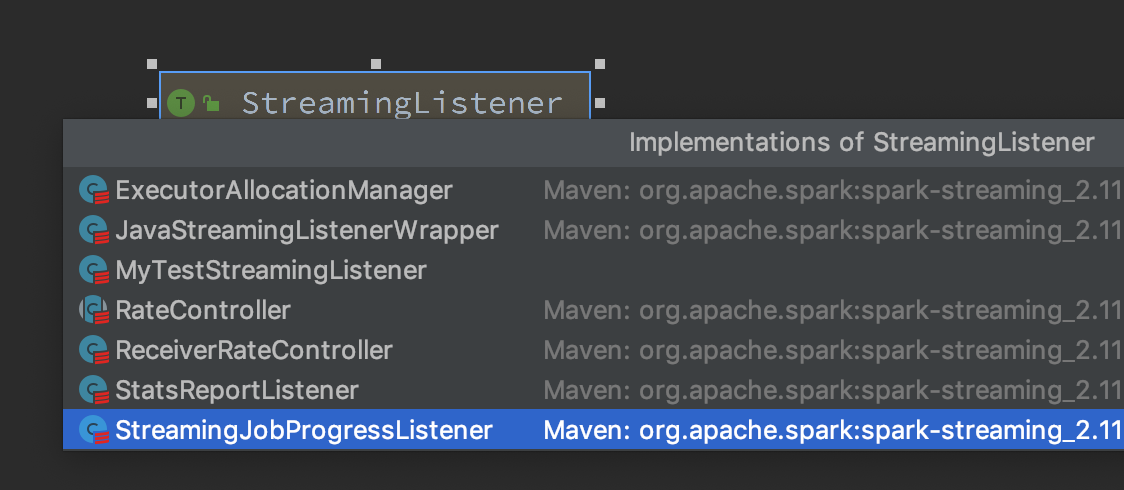

The core listener abstraction of Spark core is SparkListener (an abstract class implementing the SparkListenerInterface trait), and that of Spark Streaming is the StreamingListener trait. Each represents the abstraction of one kind of listener.
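A listener abstraction of this kind typically offers one callback per event type, each with an empty default body, so that a concrete listener overrides only what it needs. A dispatch function then routes each event to the matching callback. The sketch below assumes that shape. BaseListener, doPostEvent and the event classes are simplified names in the spirit of Spark's, not its real signatures.

```scala
// Simplified events (hypothetical, modeled on Spark's naming)
trait ListenerEvent
final case class StageSubmitted(stageId: Int) extends ListenerEvent
final case class TaskStarted(stageId: Int, taskId: Long) extends ListenerEvent
final case class TaskEnded(stageId: Int, taskId: Long) extends ListenerEvent

// Listener abstraction: one callback per event type, all no-ops by default
abstract class BaseListener {
  def onStageSubmitted(e: StageSubmitted): Unit = ()
  def onTaskStart(e: TaskStarted): Unit = ()
  def onTaskEnd(e: TaskEnded): Unit = ()
  def onOtherEvent(e: ListenerEvent): Unit = ()
}

// Routes an event to the callback that matches its type
def doPostEvent(listener: BaseListener, event: ListenerEvent): Unit = event match {
  case e: StageSubmitted => listener.onStageSubmitted(e)
  case e: TaskStarted    => listener.onTaskStart(e)
  case e: TaskEnded      => listener.onTaskEnd(e)
  case other             => listener.onOtherEvent(other)
}

// Only interested in task starts; every other event falls through to the no-op defaults
class TaskStartCounter extends BaseListener {
  var count = 0
  override def onTaskStart(e: TaskStarted): Unit = count += 1
}

val counter = new TaskStartCounter
Seq(StageSubmitted(0), TaskStarted(0, 1L), TaskStarted(0, 2L), TaskEnded(0, 1L))
  .foreach(doPostEvent(counter, _))
println(counter.count) // 2
```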

The following figure shows some listener implementation classes:

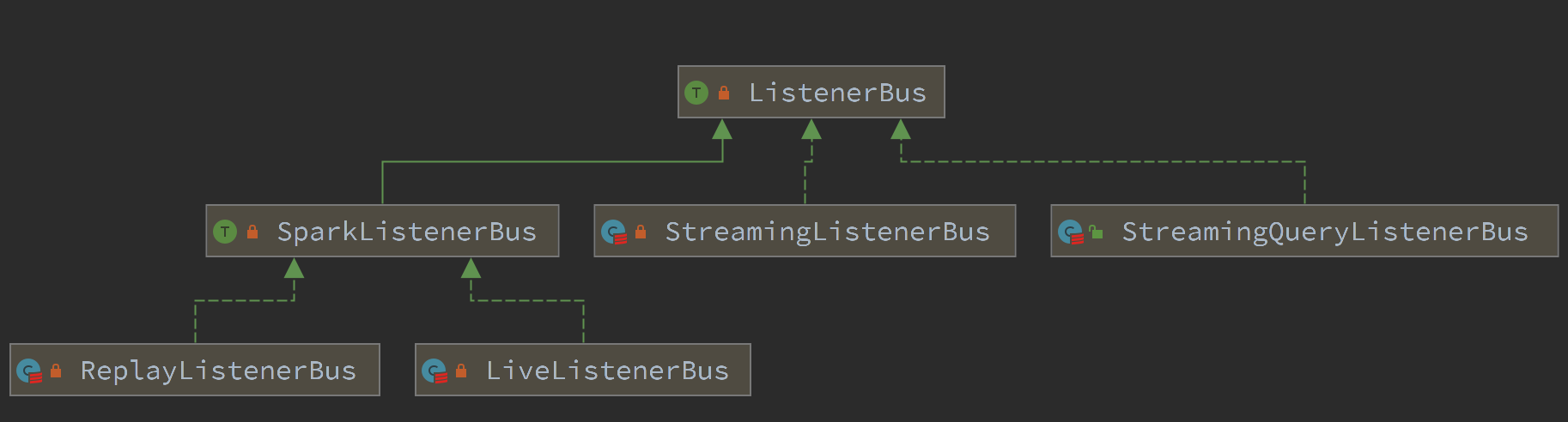

ListenerBus

While a Spark program runs, many driver-side functions depend on transmitting and processing events, and the event bus plays a vital role in this. The event bus uses asynchronous threads to improve the driver's execution efficiency. Listeners register with the ListenerBus object, and event listening is then carried out through that object (similar to the relationship between a computer and its peripheral devices).
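The register-then-broadcast relationship can be sketched with a hypothetical CallbackBus in which listeners are plain functions. Two assumptions shape the sketch:

- The listener list is a CopyOnWriteArrayList, so listeners can be plugged in or unplugged while events are being posted.
- An exception thrown by one listener is caught, so the remaining listeners still receive the event.

```scala
import java.util.concurrent.CopyOnWriteArrayList
import scala.jdk.CollectionConverters._
import scala.util.control.NonFatal

// Hypothetical minimal bus: listeners are plain callbacks here
class CallbackBus[E] {
  // Copy-on-write list: each iteration sees a stable snapshot, so listeners can be
  // added or removed concurrently without a ConcurrentModificationException
  private val listeners = new CopyOnWriteArrayList[E => Unit]()

  def addListener(l: E => Unit): Unit = listeners.add(l)
  def removeListener(l: E => Unit): Unit = listeners.remove(l)

  // Deliver the event to every registered listener; a failing listener is reported and
  // skipped so the others still get the event. Returns the number of failures.
  def postToAll(event: E): Int = {
    var failures = 0
    listeners.asScala.foreach { l =>
      try l(event)
      catch {
        case NonFatal(e) =>
          failures += 1
          println(s"listener failed: ${e.getMessage}")
      }
    }
    failures
  }
}

val received = scala.collection.mutable.ArrayBuffer.empty[String]
val bus = new CallbackBus[String]
val broken: String => Unit = _ => throw new RuntimeException("boom")
val recorder: String => Unit = s => received += s
bus.addListener(broken)
bus.addListener(recorder)
val failures = bus.postToAll("event-1")      // recorder still receives the event
bus.removeListener(broken)                   // unplug a listener at runtime
val failuresAfter = bus.postToAll("event-2")
```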

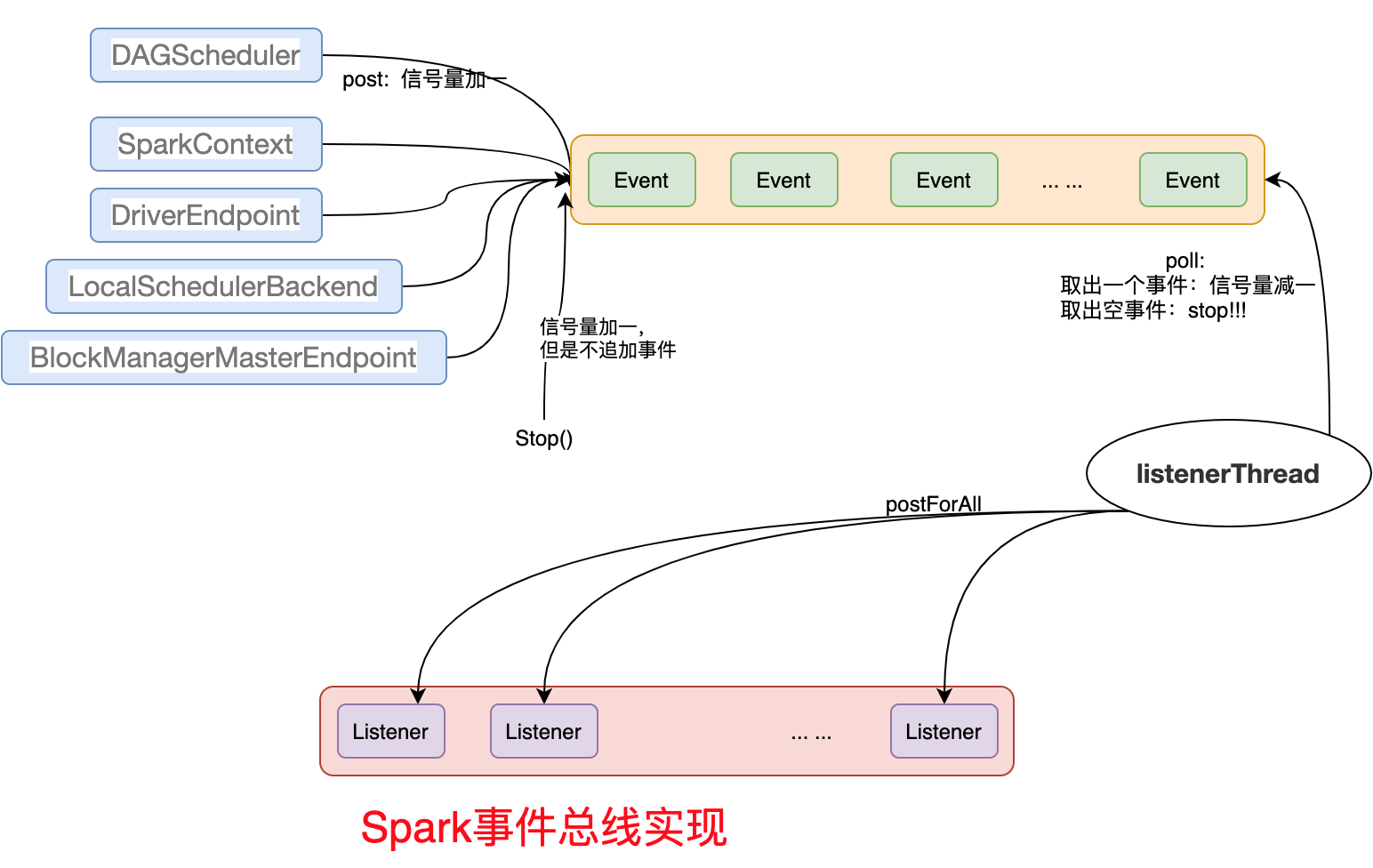

The start method starts a dispatchThread. Its core logic is to keep taking events from the event queue eventQueue. If an event is valid and the LiveListenerBus has not been stopped, the event is delivered to all registered listeners.

Events are added to the event queue by the post method. The dispatch method, run by dispatchThread, is the loop that takes them out again.
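Here is a minimal sketch of a queue that owns its dispatch thread, along the lines described above. DispatchQueue, Payload and PoisonPill are names chosen for this sketch. Stopping is modeled by enqueuing a sentinel item behind the pending events. That is one common way to end such a loop, and it is an assumption here, not a quote of Spark's source.

```scala
import java.util.concurrent.{ConcurrentLinkedQueue, LinkedBlockingQueue}
import java.util.concurrent.atomic.AtomicBoolean
import scala.annotation.tailrec
import scala.jdk.CollectionConverters._

// Items travelling through the queue: real events, or the sentinel that stops the thread
sealed trait QueueItem
final case class Payload(event: String) extends QueueItem
case object PoisonPill extends QueueItem

// Hypothetical simplified queue with its own dispatch thread
class DispatchQueue(name: String, deliver: String => Unit) {
  private val eventQueue = new LinkedBlockingQueue[QueueItem]()
  private val stopped = new AtomicBoolean(false)

  private val dispatchThread = new Thread(s"dispatch-$name") {
    setDaemon(true)
    override def run(): Unit = {
      // take() blocks while the queue is empty; events are delivered in FIFO order
      @tailrec def loop(): Unit = eventQueue.take() match {
        case Payload(e) => deliver(e); loop()
        case PoisonPill => () // sentinel reached: every earlier event has been delivered
      }
      loop()
    }
  }

  def start(): Unit = dispatchThread.start()

  // Producers only enqueue, so posting costs the caller almost nothing
  def post(event: String): Unit = if (!stopped.get) eventQueue.put(Payload(event))

  // The sentinel is queued behind any pending events, then the caller waits for the thread
  def stop(): Unit = if (stopped.compareAndSet(false, true)) {
    eventQueue.put(PoisonPill)
    dispatchThread.join()
  }
}

object DispatchDemo {
  def run(): List[String] = {
    val delivered = new ConcurrentLinkedQueue[String]()
    val q = new DispatchQueue("shared", e => delivered.add(e))
    q.start()
    Seq("a", "b", "c").foreach(q.post)
    q.stop()    // returns only after "a", "b" and "c" have been delivered
    q.post("d") // ignored: the queue has already been stopped
    delivered.asScala.toList
  }
}

val delivered = DispatchDemo.run()
println(delivered)
```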

The listener bus manages all listeners. Spark core and Spark Streaming share the same ListenerBus trait, and in the end the listeners are managed through the AsyncEventQueue class.
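One shared bus abstraction reused by several event categories can be sketched as a generic trait parameterized by a listener type L and an event type E. GenericListenerBus, CoreBus and StreamBus are hypothetical names. The point is that each category supplies only doPostEvent, which maps an event onto its listener's callbacks. Registration and broadcasting are shared.

```scala
import java.util.concurrent.CopyOnWriteArrayList
import scala.jdk.CollectionConverters._

// Generic bus: L is the listener type, E the event type of one category
trait GenericListenerBus[L <: AnyRef, E] {
  private val listeners = new CopyOnWriteArrayList[L]()

  final def addListener(l: L): Unit = listeners.add(l)
  final def removeListener(l: L): Unit = listeners.remove(l)

  // Broadcast to every registered listener
  def postToAll(event: E): Unit = listeners.asScala.foreach(doPostEvent(_, event))

  // Each category decides how an event maps onto its listener's callbacks
  protected def doPostEvent(listener: L, event: E): Unit
}

// Core category
trait CoreEvent
final case class JobStarted(jobId: Int) extends CoreEvent
trait CoreListener { def onJobStart(e: JobStarted): Unit = () }

class CoreBus extends GenericListenerBus[CoreListener, CoreEvent] {
  protected def doPostEvent(listener: CoreListener, event: CoreEvent): Unit = event match {
    case j: JobStarted => listener.onJobStart(j)
    case _             => ()
  }
}

// Streaming category
trait StreamEvent
final case class BatchDone(batchId: Long) extends StreamEvent
trait StreamListener { def onBatchDone(e: BatchDone): Unit = () }

class StreamBus extends GenericListenerBus[StreamListener, StreamEvent] {
  protected def doPostEvent(listener: StreamListener, event: StreamEvent): Unit = event match {
    case b: BatchDone => listener.onBatchDone(b)
    case _            => ()
  }
}

var jobs = List.empty[Int]
var batches = List.empty[Long]
val coreBus = new CoreBus
coreBus.addListener(new CoreListener { override def onJobStart(e: JobStarted): Unit = jobs ::= e.jobId })
val streamBus = new StreamBus
streamBus.addListener(new StreamListener { override def onBatchDone(e: BatchDone): Unit = batches ::= e.batchId })
coreBus.postToAll(JobStarted(1))
streamBus.postToAll(BatchDone(100L))
// coreBus.postToAll(BatchDone(1L)) would not compile: BatchDone is not a CoreEvent
```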

LiveListenerBus:

LiveListenerBus manages all registered listeners. It creates one AsyncEventQueue per listener category and broadcasts events to all listeners. By default, four AsyncEventQueues can be provided: 'shared', 'appStatus', 'executorManagement', and 'eventLog'. At present, Spark core does not expose the category setting to users, so there can be at most these four categories. From a rigorous design standpoint, more categories are not necessarily better. Each additional category adds an AsyncEventQueue instance, and each instance contains its own event-propagation thread, which consumes more system resources.
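The routing that LiveListenerBus performs can be sketched as a map from queue name to listener group. This hypothetical RoutingBus makes three simplifications:

- The four default names are reused as plain strings.
- Each group is created the first time a listener asks for it.
- Delivery is synchronous, to keep the example short. In the design described above, each group would also own its own dispatch thread.

```scala
import scala.collection.mutable

// The four default category names, reused as plain strings in this sketch
object QueueNames {
  val Shared = "shared"
  val AppStatus = "appStatus"
  val ExecutorManagement = "executorManagement"
  val EventLog = "eventLog"
}

// One group of listeners per category (delivered synchronously here, for brevity)
class ListenerGroup(val name: String) {
  val listeners = mutable.ArrayBuffer.empty[String => Unit]
  def post(event: String): Unit = listeners.foreach(_(event))
}

class RoutingBus {
  // Insertion-ordered, so groups are visited in the order they were created
  private val groups = mutable.LinkedHashMap.empty[String, ListenerGroup]

  // The group for a category is created the first time a listener asks for it
  def addToQueue(listener: String => Unit, queue: String): Unit =
    groups.getOrElseUpdate(queue, new ListenerGroup(queue)).listeners += listener

  // Every event is broadcast to every group, and each group passes it to its listeners
  def post(event: String): Unit = groups.values.foreach(_.post(event))

  def queueNames: Seq[String] = groups.keys.toSeq
}

val seen = mutable.ArrayBuffer.empty[String]
val bus = new RoutingBus
bus.addToQueue(e => seen += s"status:$e", QueueNames.AppStatus)
bus.addToQueue(e => seen += s"log:$e", QueueNames.EventLog)
bus.addToQueue(e => seen += s"custom:$e", QueueNames.Shared)
bus.post("TaskStart")
println(seen.toList)
```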

Asynchronous event processing thread listenerThread

```scala
private val listenerThread = new Thread(name) {
  setDaemon(true) // The thread itself is a daemon thread
  override def run(): Unit = Utils.tryOrStopSparkContext(sparkContext) {
    LiveListenerBus.withinListenerThread.withValue(true) {
      while (true) {
        // Block until a permit is available. Each permit stands for one event that has not
        // been processed yet (or for the extra permit released by stop())
        eventLock.acquire()
        self.synchronized {
          processingEvent = true
        }
        try {
          // Take the event. Like remove(), poll() removes the head of the queue,
          // but it returns null instead of throwing when the queue is empty
          val event = eventQueue.poll
          if (event == null) {
            // A permit was acquired but there is no event, so stop() must have been called:
            // leave the while loop and let the daemon thread exit
            if (!stopped.get) {
              throw new IllegalStateException("Polling `null` from eventQueue means" +
                " the listener bus has been stopped. So `stopped` must be true")
            }
            return
          }
          // Call ListenerBus.postToAll(event: E) to deliver the event to every listener
          postToAll(event)
        } finally {
          self.synchronized {
            processingEvent = false
          }
        }
      }
    }
  }
}
```

Core attributes

```scala
private val started = new AtomicBoolean(false)
private val stopped = new AtomicBoolean(false)

// Stores the posted events. The queue is bounded, so offer() fails once it is full
private lazy val eventQueue = new LinkedBlockingQueue[SparkListenerEvent](EVENT_QUEUE_CAPACITY)

// Counts the events that have been produced but not yet consumed. The consumer thread
// blocks on this semaphore instead of spinning on an empty queue
private val eventLock = new Semaphore(0)
```
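To see why a `Semaphore(0)` paired with the queue is enough, here is a small single-threaded demonstration. It replays by hand what post, stop and the listener thread do. It assumes a queue capacity of 2, an arbitrary value chosen only so that a full queue is easy to reach.

```scala
import java.util.concurrent.{LinkedBlockingQueue, Semaphore}

// Invariant being demonstrated: permits == queued events, plus one extra permit after stop
val eventQueue = new LinkedBlockingQueue[String](2) // capacity 2 is an assumption for the demo
val eventLock = new Semaphore(0)

// What post does: enqueue without blocking, and add a permit only if the event was accepted
def post(e: String): Boolean = {
  val added = eventQueue.offer(e) // returns false instead of blocking when the queue is full
  if (added) eventLock.release()
  added
}

val accepted = Seq("a", "b", "c").map(post) // the third offer fails: the queue is full
eventLock.release()                          // what stop does: a permit with no event behind it

// What the consumer loop sees, one acquire() at a time: two events, then null
val polled = (1 to 3).map { _ => eventLock.acquire(); Option(eventQueue.poll()) }
println(accepted) // List(true, true, false)
println(polled)   // Vector(Some(a), Some(b), None)
```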

Core methods

start

LiveListenerBus is started in SparkContext's setupAndStartListenerBus method, which calls the start method of LiveListenerBus.

```scala
def start(): Unit = {
  if (started.compareAndSet(false, true)) {
    listenerThread.start() // Start the consumer thread
  } else {
    throw new IllegalStateException(s"$name already started!")
  }
}
```

stop

stop shuts down LiveListenerBus. It waits for the events already in the queue to be processed, but every event posted after stopping is dropped. Note that stop may block for a long time. The thread calling stop is suspended and is not woken up until all dispatch threads of the AsyncEventQueues (four by default) have exited.

```scala
def stop(): Unit = {
  if (!started.get()) {
    throw new IllegalStateException(s"Attempted to stop $name that has not yet started!")
  }
  if (stopped.compareAndSet(false, true)) {
    // Release a permit without adding an event: once listenerThread has drained the queue,
    // it polls `null` from `eventQueue` and knows that `stop` was called
    eventLock.release()
    // Then wait for the consumer thread to exit
    listenerThread.join()
  } else {
    // Keep quiet
  }
}
```

post

post propagates events by broadcast and is very fast. The calling thread only has to hand the event to the AsyncEventQueue, which then broadcasts it to the corresponding listeners.

```scala
def post(event: SparkListenerEvent): Unit = {
  if (stopped.get) {
    // Drop further events to make `listenerThread` exit ASAP
    logError(s"$name has already stopped! Dropping event $event")
    return
  }
  // Append the event to the tail of the queue.
  // add() and offer() both insert at the tail; when the queue is full, add() throws an
  // exception for the caller to handle, while offer() simply returns false
  val eventAdded = eventQueue.offer(event)
  if (eventAdded) {
    // The event was enqueued: add one permit to the semaphore
    eventLock.release()
  } else {
    // The queue is full: drop the new event and notify subclasses through onDropEvent
    onDropEvent(event)
    droppedEventsCounter.incrementAndGet()
  }

  val droppedEvents = droppedEventsCounter.get
  if (droppedEvents > 0) {
    // Don't log too frequently: while events keep being dropped because the queue has
    // reached EVENT_QUEUE_CAPACITY (for example, a capacity of 1000), log at most once
    // every 60 seconds so the log is not flooded
    if (System.currentTimeMillis() - lastReportTimestamp >= 60 * 1000) {
      if (droppedEventsCounter.compareAndSet(droppedEvents, 0)) {
        val prevLastReportTimestamp = lastReportTimestamp
        lastReportTimestamp = System.currentTimeMillis()
        // Log a warning with the number of events dropped since the last report
        logWarning(s"Dropped $droppedEvents SparkListenerEvents since " +
          new java.util.Date(prevLastReportTimestamp))
      }
    }
  }
}
```
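Putting the attributes and the start, stop and post methods together, here is a self-contained miniature of this design that can actually be run. MiniListenerBus is a hypothetical name. The capacity of 2 is chosen only to make dropping easy to see. Logging and SparkContext integration are left out.

```scala
import java.util.concurrent.{ConcurrentLinkedQueue, LinkedBlockingQueue, Semaphore}
import java.util.concurrent.atomic.{AtomicBoolean, AtomicLong}
import scala.jdk.CollectionConverters._

// Hypothetical stand-alone miniature: a bounded queue, a semaphore whose permits count
// queued events, and one daemon consumer thread
class MiniListenerBus[E <: AnyRef](capacity: Int, deliver: E => Unit) {
  private val started = new AtomicBoolean(false)
  private val stopped = new AtomicBoolean(false)
  private val eventQueue = new LinkedBlockingQueue[E](capacity)
  private val eventLock = new Semaphore(0)
  val droppedEvents = new AtomicLong(0)

  private val listenerThread = new Thread("mini-listener-bus") {
    setDaemon(true)
    override def run(): Unit = {
      var running = true
      while (running) {
        eventLock.acquire()                // one permit per event, plus one from stop()
        val event = eventQueue.poll()
        if (event == null) running = false // a permit without an event: stop() was called
        else deliver(event)
      }
    }
  }

  def start(): Unit =
    if (started.compareAndSet(false, true)) listenerThread.start()
    else throw new IllegalStateException("already started")

  // Events posted before start() are buffered; a full queue drops instead of blocking
  def post(event: E): Unit =
    if (!stopped.get) {
      if (eventQueue.offer(event)) eventLock.release()
      else droppedEvents.incrementAndGet()
    }

  def stop(): Unit = {
    if (!started.get) throw new IllegalStateException("not started")
    if (stopped.compareAndSet(false, true)) {
      eventLock.release()   // lets the consumer drain the queue and then see null
      listenerThread.join() // blocks the caller until the consumer thread has exited
    }
  }
}

object MiniBusDemo {
  def run(): (List[String], Long) = {
    val delivered = new ConcurrentLinkedQueue[String]()
    val bus = new MiniListenerBus[String](2, e => delivered.add(e))
    Seq("a", "b", "c").foreach(bus.post) // buffered before start(); "c" does not fit
    bus.start()
    bus.stop()    // returns once "a" and "b" have been delivered
    bus.post("d") // ignored: the bus is stopped
    (delivered.asScala.toList, bus.droppedEvents.get)
  }
}

val (delivered, dropped) = MiniBusDemo.run()
println(s"delivered=$delivered dropped=$dropped")
```

Posting before start() makes the outcome deterministic: the queue already holds "a" and "b" when the consumer starts, so exactly one event is dropped.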

Complete process

- DAGScheduler, SparkContext, BlockManagerMasterEndpoint, DriverEndpoint, and LocalSchedulerBackend in the figure are the event sources of LiveListenerBus. They submit events to the event queue by calling the post method of LiveListenerBus. Every posted event increases the semaphore's permit count by one.

- listenerThread keeps acquiring permits from the semaphore and then takes events from the event queue. If it gets an event, it calls postToAll to distribute the event to the registered listeners. If it gets null instead, it understands that stop was called on the event bus. stop releases a permit without posting an event, so the acquired permit has no matching event, and the event bus thread stops.