Summary

This post mainly shares the Flink coding techniques I rely on in day-to-day work.

Pay attention to details

Doris table creation

The following fields are required:

created_time datetime

modified_time datetime

is_deleted smallint(6) null comment "delete, 1 yes 0 no",

doris_delete tinyint(4) null default "0" comment "delete mark"

The doris_delete field is the logical (business-level) deletion field. When a record is found to have been physically deleted, this field is set to 1.

alter table uc_student_bak enable feature "BATCH_DELETE"; This statement is the key step: it marks the table as supporting batch delete, so that when a load is submitted with merge type MERGE, Doris deletes the rows where doris_delete = 1.
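As a rough illustration of how the delete marker might be filled in before a row is sent to Doris, the sketch below sets doris_delete from a change type. The class name, the method name, and the "DELETE" change-type string are all hypothetical; the original does not say how physical deletions are detected.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper: not part of the original job code.
final class DeleteMarker {

    /**
     * Returns a copy of the row with doris_delete set to 1 when the
     * (assumed) change type is DELETE, and 0 otherwise.
     */
    static Map<String, Object> withDeleteFlag(Map<String, Object> row, String changeType) {
        Map<String, Object> out = new LinkedHashMap<>(row);
        out.put("doris_delete", "DELETE".equalsIgnoreCase(changeType) ? 1 : 0);
        return out;
    }
}
```

The input map is copied rather than mutated, so the same source row can be reused safely by other operators.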

Custom sink

Extend RichSinkFunction (it is an abstract class rather than an interface). For each kind of sink storage, it is best to have a separate class that extends RichSinkFunction.

The Doris sink code is attached below:

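//Imports assume fastjson, commons-codec and Apache HttpClient 4.x, which match the calls used below

import com.alibaba.fastjson.JSONObject;

import org.apache.commons.codec.binary.Base64;

import org.apache.flink.api.common.accumulators.LongCounter;

import org.apache.flink.configuration.Configuration;

import org.apache.flink.streaming.api.functions.sink.RichSinkFunction;

import org.apache.http.HttpHeaders;

import org.apache.http.client.HttpRequestRetryHandler;

import org.apache.http.client.methods.CloseableHttpResponse;

import org.apache.http.client.methods.HttpPut;

import org.apache.http.entity.StringEntity;

import org.apache.http.impl.client.CloseableHttpClient;

import org.apache.http.impl.client.DefaultRedirectStrategy;

import org.apache.http.impl.client.HttpClientBuilder;

import org.apache.http.impl.client.HttpClients;

import org.apache.http.protocol.HttpContext;

import org.apache.http.util.EntityUtils;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import java.io.IOException;

import java.nio.charset.StandardCharsets;

import java.util.Properties;

import java.util.UUID;

//PropertyUtils and DateTimeUtils are the project's own helper classes (not shown here)

public class DorisSinkFunction extends RichSinkFunction<String> {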

private static final Logger log = LoggerFactory.getLogger(DorisSinkFunction.class);

//Accumulator object

private final LongCounter counter = new LongCounter();

private HttpClientBuilder builder;

private String loadUrl;

private String authorization;

private String username;

private String password;

private String profile;

private String mergeType;

private String dbName;

private String tableName;

public static DorisSinkFunction of(String profile, String mergeType, String dbName, String tableName) {

return new DorisSinkFunction(profile, mergeType, dbName, tableName);

}

private DorisSinkFunction(String profile, String mergeType, String dbName, String tableName) {

this.profile = profile;

this.mergeType = mergeType;

this.dbName = dbName;

this.tableName = tableName;

}

private String basicAuthHeader() {

final String tobeEncode = username + ":" + password;

byte[] encoded = Base64.encodeBase64(tobeEncode.getBytes(StandardCharsets.UTF_8));

return "Basic " + new String(encoded, StandardCharsets.UTF_8);

}

private HttpClientBuilder httpClientBuilder() {

return HttpClients.custom()

//Add retry policy

.setRetryHandler(new HttpRequestRetryHandler() {

@Override

public boolean retryRequest(IOException exception, int executionCount, HttpContext context) {

//Stop retrying once the request has been attempted more than 3 times

if (executionCount > 3) {

return false;

}

try {

Thread.sleep(3000);

} catch (InterruptedException e) {

//Restore the interrupt flag instead of swallowing the interruption

Thread.currentThread().interrupt();

return false;

}

return true;

}

})

//Support redirection

.setRedirectStrategy(new DefaultRedirectStrategy() {

@Override

protected boolean isRedirectable(String method) {

return true;

}

});

}

@Override

public void open(Configuration parameters) throws Exception {

//Get configuration

final Properties props = PropertyUtils.getDorisProps(profile);

this.username = props.getProperty("username");

this.password = props.getProperty("password");

//Initialize authorization

this.authorization = basicAuthHeader();

//Initialize http client builder

this.builder = httpClientBuilder();

//Build doris stream load url

this.loadUrl = String.format("http://%s:%s/api/%s/%s/_stream_load",

props.getProperty("feHost"),

props.getProperty("httpPort"),

dbName, tableName);

//Register accumulator

getRuntimeContext().addAccumulator("counter", counter);

}

@Override

public void close() throws Exception {

log.warn(" write doris http client execute close, dt: {}", DateTimeUtils.getCurrentDt());

}

@Override

public void invoke(String value, Context context) throws Exception {

try (CloseableHttpClient client = this.builder.build()) {

//Create put object

final HttpPut put = new HttpPut(loadUrl);

put.setHeader(HttpHeaders.EXPECT, "100-continue");

put.setHeader(HttpHeaders.AUTHORIZATION, this.authorization);

put.setHeader("strip_outer_array", "true");//This configuration is required for batch inserting json arrays

put.setHeader("format", "json");

put.setHeader("label", UUID.randomUUID().toString().replace("-", ""));

//Add doris batch delete header

if ("MERGE".equalsIgnoreCase(mergeType)) {

put.setHeader("merge_type", "MERGE");

put.setHeader("delete", "doris_delete=1");

}

put.setEntity(new StringEntity(value, "UTF-8"));

//execute

try (final CloseableHttpResponse response = client.execute(put)) {

String loadResult = null;

String loadStatus = null;

if (response.getEntity() != null) {

loadResult = EntityUtils.toString(response.getEntity());

loadStatus = JSONObject.parseObject(loadResult).getString("Status");

}

final int statusCode = response.getStatusLine().getStatusCode();

if (statusCode != 200 || !"Success".equalsIgnoreCase(loadStatus)) {

String msg = String.format(" stream_load_failed, statusCode=%s loadResult=%s", statusCode, loadResult);

log.error(msg);

throw new RuntimeException(msg);

}

} catch (Exception e) {

log.error(" stream_load_to_doris execute error", e);

throw new RuntimeException(" stream_load_to_doris execute error. ", e);

}

} catch (Exception e) {

log.error(" stream_load_to_doris invoke error", e);

throw new RuntimeException(" stream_load_to_doris invoke error. ", e);

}

//Increment the accumulator

counter.add(1);

}

}
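To make the request headers easier to inspect in isolation, here is a small JDK-only sketch that assembles the same header set that invoke() puts on the HttpPut. StreamLoadHeaders and its method names are hypothetical, and java.util.Base64 stands in for the commons-codec call used in the sink.

```java
import java.nio.charset.StandardCharsets;
import java.util.Base64;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.UUID;

// Hypothetical helper mirroring the headers set in DorisSinkFunction.invoke().
final class StreamLoadHeaders {

    /** Builds the HTTP Basic value: "Basic " + base64(username:password). */
    static String basicAuth(String username, String password) {
        String raw = username + ":" + password;
        return "Basic " + Base64.getEncoder().encodeToString(raw.getBytes(StandardCharsets.UTF_8));
    }

    /** Collects the stream load headers; delete headers are added only for MERGE. */
    static Map<String, String> build(String username, String password, String mergeType) {
        Map<String, String> headers = new LinkedHashMap<>();
        headers.put("Expect", "100-continue");
        headers.put("Authorization", basicAuth(username, password));
        headers.put("strip_outer_array", "true");
        headers.put("format", "json");
        // A fresh label per request, as in the sink above
        headers.put("label", UUID.randomUUID().toString().replace("-", ""));
        if ("MERGE".equalsIgnoreCase(mergeType)) {
            headers.put("merge_type", "MERGE");
            headers.put("delete", "doris_delete=1");
        }
        return headers;
    }
}
```

In the job itself the sink is attached in the usual way, for example stream.addSink(DorisSinkFunction.of("prod", "MERGE", "db", "table")), where the argument values are placeholders.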

Custom functions

- For a simple conversion, implement the MapFunction or FlatMapFunction interface. As long as the logic has business meaning, even a basic string conversion is best placed in its own class.

The constructor of a separate class can take whatever parameters it needs. The most common scenario is setting required values in a JSONObject.

The main method of MapFunction is: O map(T value) throws Exception; As the signature shows, it only supports the most basic conversion from one element to one element.

The main method of FlatMapFunction is: void flatMap(T value, Collector<O> out) throws Exception; As the signature shows, it supports converting one input element into zero, one, or many output elements.
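The difference between the two signatures can be sketched without a Flink dependency. The three interfaces below are local stand-ins that only mirror the signatures quoted above (the real ones live in Flink), and AddSourceTag and SplitByComma are hypothetical example classes.

```java
// Local stand-ins mirroring the Flink signatures so the sketch compiles on its own.
interface MapFunction<T, O> { O map(T value) throws Exception; }
interface Collector<T> { void collect(T record); }
interface FlatMapFunction<T, O> { void flatMap(T value, Collector<O> out) throws Exception; }

// One element in, exactly one element out. The tag is passed through the constructor.
final class AddSourceTag implements MapFunction<String, String> {
    private final String tag;

    AddSourceTag(String tag) {
        this.tag = tag;
    }

    @Override
    public String map(String value) {
        return tag + ":" + value.trim();
    }
}

// One element in, zero or more elements out.
final class SplitByComma implements FlatMapFunction<String, String> {
    @Override
    public void flatMap(String value, Collector<String> out) {
        for (String part : value.split(",")) {
            String trimmed = part.trim();
            if (!trimmed.isEmpty()) {
                out.collect(trimmed); // empty fragments are dropped, so output may be shorter
            }
        }
    }
}
```

Keeping each conversion in its own named class, with its parameters passed through the constructor, follows the recommendation above.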

ProcessWindowFunction applies to keyed windows. Its core method, process(KEY key, Context context, Iterable<IN> elements, Collector<OUT> out), takes the key as a parameter, which shows that it is a window function that runs after keyBy.

ProcessAllWindowFunction applies to non-keyed windows. Its core method, process(Context context, Iterable<IN> elements, Collector<OUT> out) throws Exception, has no key parameter, which shows that it works on a global window without keyBy.
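The keyed versus non-keyed distinction can be pictured with plain Java collections. This is only an analogy and does not use the Flink API: WindowShapes is a hypothetical class, and each element is assumed to be a two-item array of [key, value].

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Plain-Java analogy of one window's contents; not Flink code.
final class WindowShapes {

    /** Keyed case: elements are grouped by key first, then processed once per key. */
    static Map<String, Integer> perKeyCounts(List<String[]> window) {
        Map<String, List<String[]>> byKey = new LinkedHashMap<>();
        for (String[] element : window) {
            byKey.computeIfAbsent(element[0], k -> new ArrayList<>()).add(element);
        }
        Map<String, Integer> out = new LinkedHashMap<>();
        // This loop body plays the role of process(key, context, elements, out)
        byKey.forEach((key, elements) -> out.put(key, elements.size()));
        return out;
    }

    /** Non-keyed case: a single call sees every element of the window. */
    static int globalCount(List<String[]> window) {
        return window.size();
    }
}
```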

Streaming task configuration

Use configuration to handle job runtime concerns.

Each real-time job can have a properties file that records its key metadata, and the job runs based on that metadata. A commonly used properties format is as follows:

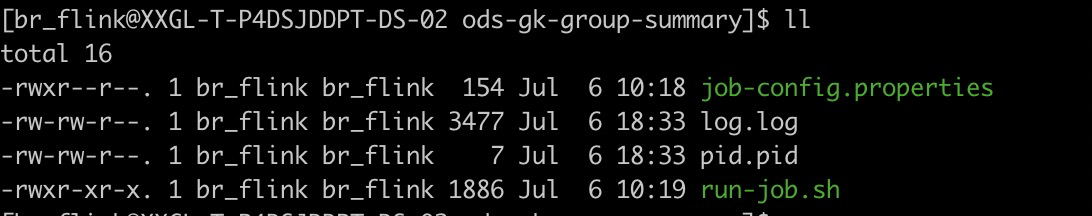

job-config.properties usually lives in the same directory as the run script.

Note: when a job is started from a specified checkpoint (CK), the startFrom setting of the Kafka source is ignored; consumption resumes from the offsets stored in the checkpoint state.

Set env.getCheckpointConfig().enableExternalizedCheckpoints(ExternalizedCheckpointCleanup.RETAIN_ON_CANCELLATION); otherwise the checkpoint is cleared after the job is cancelled.

# Runtime environment: prod = production, test = test

profile=prod

# Job name

jobName=dwd-rt-xx

# Consumer group

groupId=flink_dwd_rt_xx

# Topic to consume

sourceTopic=topic1

# Sink topic

sinkTopic=topic2

# Table to write to; the storage may be one of several types such as TiDB, CK, Doris, HBase, Oracle, MySQL

sinkTable=table1

# Topic to write to

sinkTopic=topic1

# Database types supported by the sink

sink=doris,tidb

# Dimension table join mapping. For example: look up the corresponding field values in the dimension table by b1, merge them into the map (this is the result of the dimension table join), and finally rename the key in the map to b2

xxx_mapping=a, b1->b2
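A job could read such a file with java.util.Properties. The sketch below is one possible reading: JobConfig and its methods are hypothetical, and the interpretation of the mapping value (a bare name keeps its key, while x->y renames x to y) is an assumption based on the comment in the file.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Properties;

// Hypothetical reader for job-config.properties; not the author's code.
final class JobConfig {
    private final Properties props = new Properties();

    static JobConfig parse(String text) {
        JobConfig config = new JobConfig();
        try {
            config.props.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return config;
    }

    String get(String key) {
        return props.getProperty(key);
    }

    /** Splits the comma-separated sink list, e.g. "doris,tidb". */
    List<String> sinks() {
        List<String> out = new ArrayList<>();
        for (String item : props.getProperty("sink", "").split(",")) {
            if (!item.trim().isEmpty()) {
                out.add(item.trim());
            }
        }
        return out;
    }

    /** Parses "a, b1->b2" into {a=a, b1=b2} (assumed meaning: source field to output key). */
    Map<String, String> mapping(String key) {
        Map<String, String> out = new LinkedHashMap<>();
        for (String item : props.getProperty(key, "").split(",")) {
            String trimmed = item.trim();
            if (trimmed.isEmpty()) {
                continue;
            }
            String[] parts = trimmed.split("->");
            String from = parts[0].trim();
            out.put(from, parts.length > 1 ? parts[1].trim() : from);
        }
        return out;
    }
}
```

If a key appears twice in the file, java.util.Properties keeps the last value it reads.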

Once the job is configured as above, the Flink job needs to be run. Without a real-time computing platform, the simplest approach is to set up a bastion host for submitting jobs. The following needs to be prepared on the bastion host:

- Create a tenant dedicated to submitting Flink jobs

- Designate a directory for storing the scripts

- Run the script

The script template is as follows:

run-job.sh

cp stands for starting from the specified checkpoint

sp stands for starting from the specified savepoint

The default branch (no argument) is suitable for the first start.

Shared variables in the script below can be extracted into a separate script, such as common_env.sh.

The run script then includes that shared script with: source ../../common_env.sh

#!/bin/bash

# Path to the flink client

flink_bin=/xxx/flink-1.2.1-yarn/flink-1.12.1/bin/flink

# Checkpoint root path

cp_root_path=hdfs://xxxcluster/flink_realedw/cluster/checkpoints

# YARN queue that real-time jobs are submitted to

queue=xxxqueue

# Job name

app_name="ods-gk-xxx"

# Main class of the real-time job

class_ref=cn.xxx.xxx.OdsGroupSummary

# Path to the jar of the current real-time job

jar_dir=../xxx-1.0-SNAPSHOT-shaded.jar

# Relative path of the configuration file the job depends on; it is parsed inside the job

config_path=job-config.properties

# Default parallelism of real-time tasks

parallelism=1

# grep JobID log.log | cut -d" " -f7 parses the JobID from the Flink startup log

# grep 'yarn application -kill' log.log | cut -d" " -f5 parses the YARN application ID from the startup log

# --config-path $config_path passes the path of the job configuration file

case $1 in

"cancel"){

echo "================ cancel flink job ================"

yarn_app_id=`grep 'yarn application -kill' log.log |cut -d" " -f5`

job_id=`grep JobID log.log | cut -d" " -f7`

echo "LastAppId: $yarn_app_id LastJobID: $job_id"

$flink_bin cancel -m yarn-cluster -yid $yarn_app_id $job_id

};;

"cp"){

echo "================ start from checkpoint ================"

job_id=`grep JobID log.log | cut -d" " -f7`

cp_path=`hadoop fs -ls ${cp_root_path}/${job_id}/ | grep chk- | awk -F" " '{print$8}' |sort -nr |head -1`

echo "LastJobID: $job_id CheckpointPath: $cp_path"

nohup $flink_bin run -d -t yarn-per-job \

-Dyarn.application.queue=$queue \

-Dyarn.application.name=$app_name \

-p $parallelism \

-c $class_ref \

-s $cp_path \

$jar_dir \

--config-path $config_path >./log.log 2>&1 &

};;

"sp"){

echo "================ start from savepoint ================"

echo "SavepointPath: $2"

nohup $flink_bin run -d -t yarn-per-job \

-Dyarn.application.queue=$queue \

-Dyarn.application.name=$app_name \

-p $parallelism \

-c $class_ref \

-s $2 \

$jar_dir \

--config-path $config_path >./log.log 2>&1 &

};;

*){

echo "================ start ================"

nohup $flink_bin run -d -t yarn-per-job \

-Dyarn.application.queue=$queue \

-Dyarn.application.name=$app_name \

-p $parallelism \

-c $class_ref \

$jar_dir \

--config-path $config_path >./log.log 2>&1 &

};;

esac

# Write the PID of the last background process (the flink client started with nohup) to the pid.pid file

echo $! > ./pid.pid
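The two grep and cut pipelines in the script rely on the exact position of a field in a log line, and the checkpoint lookup relies on sort picking the newest chk- directory. The Java sketch below restates both steps so they can be checked in isolation. LaunchLog is a hypothetical class, the sample log lines in the usage note are assumed formats, and the numeric comparison of the chk- suffix is a deliberate choice so that chk-10 ranks above chk-9.

```java
import java.util.List;
import java.util.Optional;

// Hypothetical helper restating the shell parsing steps; not part of the script.
final class LaunchLog {

    /**
     * Rough equivalent of: grep marker | cut -d" " -f fieldIndex (1-based).
     * Only the first matching line is used.
     */
    static Optional<String> field(List<String> lines, String marker, int fieldIndex) {
        for (String line : lines) {
            if (line.contains(marker)) {
                String[] fields = line.split(" ");
                if (fields.length >= fieldIndex) {
                    return Optional.of(fields[fieldIndex - 1]);
                }
            }
        }
        return Optional.empty();
    }

    /** Picks the path whose chk-N suffix has the largest numeric N. */
    static Optional<String> latestCheckpoint(List<String> paths) {
        String best = null;
        long bestId = -1;
        for (String path : paths) {
            int idx = path.lastIndexOf("chk-");
            if (idx < 0) {
                continue;
            }
            try {
                long id = Long.parseLong(path.substring(idx + 4));
                if (id > bestId) {
                    bestId = id;
                    best = path;
                }
            } catch (NumberFormatException ignored) {
                // Not a chk-<number> directory; skip it
            }
        }
        return Optional.ofNullable(best);
    }
}
```

For example, given a line such as "Job has been submitted with JobID 5e20cb6b0f357591171dfcca2eea09de", field(lines, "JobID", 7) returns the job ID, which corresponds to -f7 in the script.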

Hive on HBase

To work with HBase, you can create a Hive external table backed by HBase, and then operate on HBase by operating on that Hive external table.

## hbase DDL

create 'rtdw:dwd_rt_dim_ecproductdb_product_xxx', 'cf'

## hive on hbase DDL

CREATE EXTERNAL TABLE dwd.dwd_rt_dim_ecproductdb_product_xxx_hb (

key String,

id String,

product_id String

)

STORED BY 'org.apache.hadoop.hive.hbase.HBaseStorageHandler'

WITH SERDEPROPERTIES ("hbase.columns.mapping" =

":key,cf:id,cf:product_id")

TBLPROPERTIES ("hbase.table.name" = "rtdw:dwd_rt_dim_ecproductdb_product_xxx");

## hive sql

insert overwrite table dwd.dwd_rt_dim_ecproductdb_product_xxx_hb values('111','222','xxx1'),('222','333','xxx2')

## After the Hive insert above, the newly written rows also show up in HBase

Some useful utilities

TypeUtils.castToString: the TypeUtils class contains many methods that cast an Object to other types.

When assembling SQL, you can refer directly to the java.sql.Types class for type information. All the SQL type constants are defined there.
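As a small illustration of using java.sql.Types when assembling SQL, the sketch below turns a type constant into its name through java.sql.JDBCType, which is part of the JDK. SqlTypeNames and columnDef are hypothetical, and the generated fragment is only a generic "name TYPE" pair, not a complete DDL for any particular database.

```java
import java.sql.JDBCType;
import java.sql.Types;

// Hypothetical helper for assembling column definitions from java.sql.Types constants.
final class SqlTypeNames {

    /** Maps a java.sql.Types constant to its standard name, e.g. Types.VARCHAR to "VARCHAR". */
    static String nameOf(int sqlType) {
        return JDBCType.valueOf(sqlType).getName();
    }

    /** Builds a generic "column TYPE" fragment. */
    static String columnDef(String column, int sqlType) {
        return column + " " + nameOf(sqlType);
    }

    /** Example for the created_time field required by the Doris table above. */
    static String createdTimeColumn() {
        return columnDef("created_time", Types.TIMESTAMP);
    }
}
```

JDBCType.valueOf throws IllegalArgumentException for a value that is not a known type constant, which surfaces a wrong constant early.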