Contents

Introduction to producer consumer model

Implementation of ReentrantLock

Implementation of blocking queue

Lockless caching framework: Disruptor

Note: This article draws on "[Java summary] Producer consumer model" (zhujohnle's column, CSDN blog) and "Java implementation of producer consumer model" (Jianshu).

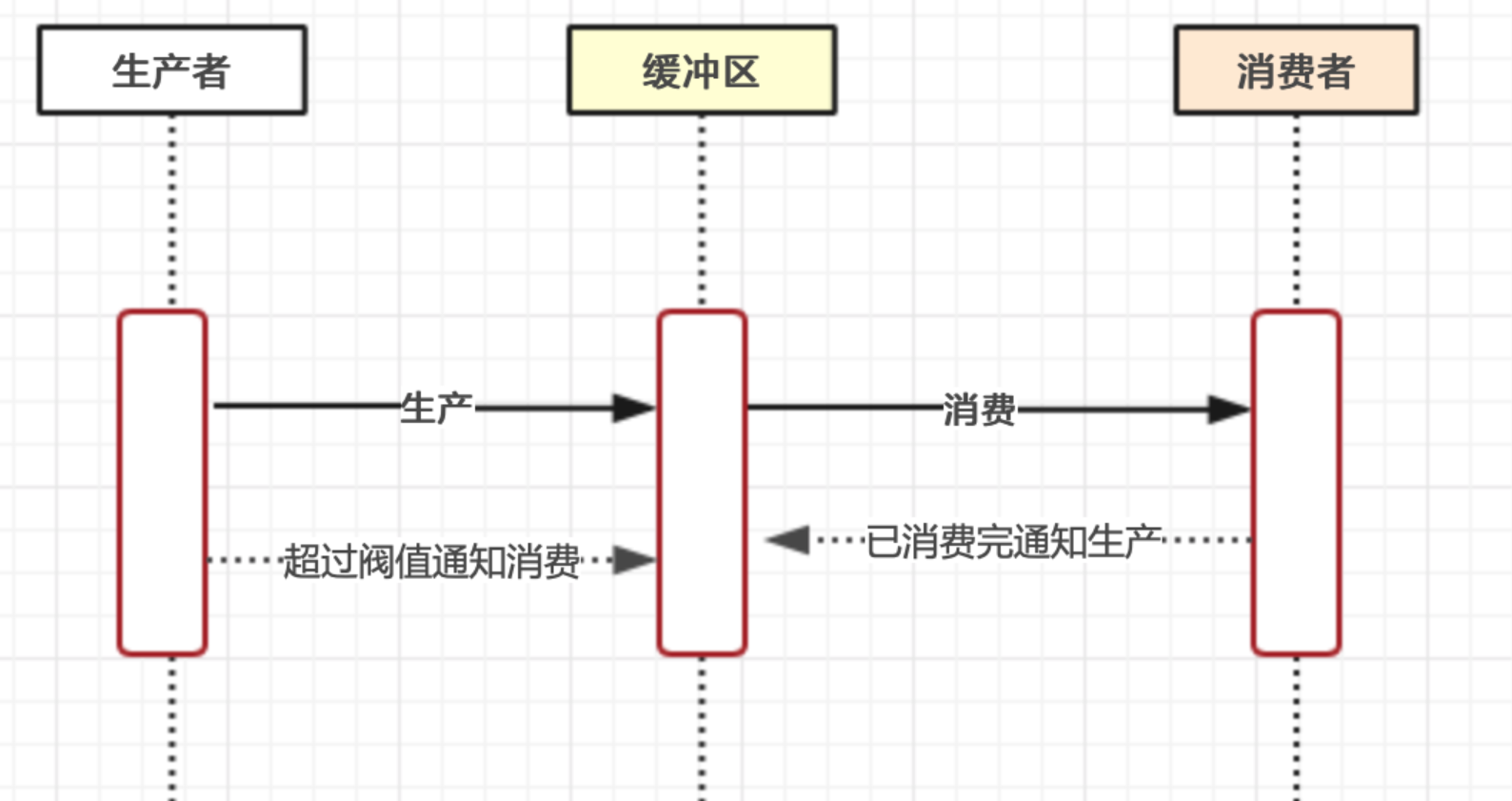

Introduction to producer consumer model

The overall structure of the producer consumer model is shown above. It is a classic thread synchronization problem. Below we implement the model in Java using several thread synchronization mechanisms.

A producer consumer model needs several preconditions to run stably:

The producer must be able to produce continuously.

Consumers must be able to consume continuously.

Production and consumption are bounded by thresholds. For example, when the total produced reaches 100, production must stop and consumers must be notified; when the stock falls to 0, consumption stops and production resumes.

wait / notify scheme

The wait / notify scheme switches execution between threads by using the wait() and notify() methods of Object. The wait(), notify() and notifyAll() methods of Object are implemented by calling native methods; in the end, it is still the scheduler that decides which thread gets the CPU in each time slice.

The following example is relatively simple. It simulates a case where production is faster than consumption. When production reaches the upper threshold, the producer stops and waits. Consumers keep consuming, and when the stock reaches the lower threshold they stop consuming and wake the producer with notifyAll().

public class TestWaitNotifyConsumerAndProducer {

/*Current generated quantity*/

static int currentNum = 0;

/*Maximum generated quantity*/

static int MAX_NUM = 10;

/*Minimum consumption quantity*/

static int MIN_NUM = 0;

/*Lock object used for wait and notify. A dedicated Object avoids sharing an interned String literal with unrelated code*/

private static final Object lock = new Object();

public static void main(String args[]) {

//Create a producer

new Thread(new Producer()).start();

//Create two consumers

new Thread(new Consumer()).start();

new Thread(new Consumer()).start();

}

static class Producer implements Runnable {

public void product() {

while (true) {

try {

Thread.sleep(10);

} catch (InterruptedException e) {

e.printStackTrace();

}

synchronized (lock) {

currentNum++;

System.out.println("Producer now product num:" + currentNum);

lock.notifyAll();

while (currentNum == MAX_NUM) { //re-check after waking up to guard against spurious wakeups

try {

lock.wait();

} catch (InterruptedException e) {

e.printStackTrace();

}

}

}

}

}

@Override

public void run() {

product();

}

}

static class Consumer implements Runnable {

public void consume() {

while (true) {

try {

Thread.sleep(1000);

} catch (InterruptedException e) {

e.printStackTrace();

}

synchronized (lock) {

if (currentNum == MIN_NUM) {

lock.notifyAll();

continue;

}

System.out.println(new StringBuilder(Thread.currentThread().getName())

.append(" Consumer now consumption num:").append(currentNum));

currentNum--;

}

}

}

@Override

public void run() {

consume();

}

}

}

public class ProducerConsumer1 {

class Producer extends Thread {

private String threadName;

private Queue<Goods> queue;

private int maxSize;

public Producer(String threadName, Queue<Goods> queue, int maxSize) {

this.threadName = threadName;

this.queue = queue;

this.maxSize = maxSize;

}

@Override

public void run() {

while (true) {

//Simulate the time-consuming operation in the production process

Goods goods = new Goods();

try {

Thread.sleep(new Random().nextInt(1000));

} catch (InterruptedException e) {

e.printStackTrace();

}

synchronized (queue) {

while (queue.size() == maxSize) {

try {

System.out.println("The queue is full[" + threadName + "]Enter the waiting state");

queue.wait();

} catch (InterruptedException e) {

e.printStackTrace();

}

}

queue.add(goods);

System.out.println("[" + threadName + "]Produced a commodity:[" + goods.toString() + "],Current commodity quantity:" + queue.size());

queue.notifyAll();

}

}

}

}

class Consumer extends Thread {

private String threadName;

private Queue<Goods> queue;

public Consumer(String threadName, Queue<Goods> queue) {

this.threadName = threadName;

this.queue = queue;

}

@Override

public void run() {

while (true) {

Goods goods;

synchronized (queue) {

while (queue.isEmpty()) {

try {

System.out.println("The queue is empty[" + threadName + "]Enter the waiting state");

queue.wait();

} catch (InterruptedException e) {

e.printStackTrace();

}

}

goods = queue.remove();

System.out.println("[" + threadName + "]Consumed a commodity:[" + goods.toString() + "],Current commodity quantity:" + queue.size());

queue.notifyAll();

}

//Simulate the time-consuming operation in the consumption process

try {

Thread.sleep(new Random().nextInt(1000));

} catch (InterruptedException e) {

e.printStackTrace();

}

}

}

}

@Test

public void test() {

int maxSize = 5;

Queue<Goods> queue = new LinkedList<>();

Thread producer1 = new Producer("Producer 1", queue, maxSize);

Thread producer2 = new Producer("Producer 2", queue, maxSize);

Thread producer3 = new Producer("Producer 3", queue, maxSize);

Thread consumer1 = new Consumer("Consumer 1", queue);

Thread consumer2 = new Consumer("Consumer 2", queue);

producer1.start();

producer2.start();

producer3.start();

consumer1.start();

consumer2.start();

while (true) {

}

}

}

1. Make sure the lock object is the queue itself.

2. Keep the production and consumption work outside the synchronized block. Only the two actions of putting in and taking out need to be synchronized.

3. For continuous production and consumption, use while (true) {...} so that the [produce, put in] and [take out, consume] steps repeat indefinitely.

4. Because the queue is guarded by a single synchronized lock, only one put or one take can run at any moment; the two cannot proceed concurrently.
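The points above can be condensed into a small reusable class. The following is only a minimal sketch: the class name `BoundedBuffer` and its `demo` helper are hypothetical, and it locks on the buffer object itself (through synchronized methods) rather than on an external queue. Each waiting condition is checked in a `while` loop so that a thread re-tests the state after every wakeup.

```java
import java.util.ArrayDeque;
import java.util.Queue;

/** Hypothetical bounded buffer guarded by its own monitor. */
final class BoundedBuffer<T> {
    private final Queue<T> items = new ArrayDeque<>();
    private final int capacity;

    BoundedBuffer(int capacity) {
        this.capacity = capacity;
    }

    /** Blocks while the buffer is full, then stores the item. */
    synchronized void put(T item) throws InterruptedException {
        while (items.size() == capacity) {
            wait(); // releases the monitor until another thread calls notifyAll()
        }
        items.add(item);
        notifyAll(); // wake consumers waiting for an element
    }

    /** Blocks while the buffer is empty, then removes the oldest item. */
    synchronized T take() throws InterruptedException {
        while (items.isEmpty()) {
            wait();
        }
        T item = items.remove();
        notifyAll(); // wake producers waiting for free space
        return item;
    }

    /** Runs one producer and one consumer over n items; returns the sum consumed. */
    static int demo(int n) {
        BoundedBuffer<Integer> buffer = new BoundedBuffer<>(2);
        int[] sum = {0};
        Thread producer = new Thread(() -> {
            try {
                for (int i = 1; i <= n; i++) buffer.put(i);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        Thread consumer = new Thread(() -> {
            try {
                for (int i = 0; i < n; i++) sum[0] += buffer.take();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        consumer.start();
        try {
            producer.join();
            consumer.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return sum[0];
    }

    public static void main(String[] args) {
        System.out.println(demo(10)); // sum of 1..10
    }
}
```

The time-consuming production and consumption work would stay outside `put` and `take`, in line with point 2.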

Implementation of ReentrantLock

ReentrantLock is an explicit lock in the java.util.concurrent.locks package. It lets the same thread re-enter a locked section of code (for example, through recursion) and acquire the lock repeatedly. Each acquisition increments a hold counter, so to release the lock the thread must call unlock() once for every lock().
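The hold counter can be observed directly. The sketch below is illustrative only; the class `ReentrantDemo` and its method names are made up for this example. It locks twice on one thread, reads `getHoldCount()`, and also shows that an `unlock()` without a matching `lock()` is rejected.

```java
import java.util.concurrent.locks.ReentrantLock;

final class ReentrantDemo {
    /** Returns the hold count observed after locking twice on the same thread. */
    static int holdCountAfterTwoLocks() {
        ReentrantLock lock = new ReentrantLock();
        lock.lock();
        lock.lock(); // the same thread re-enters; the hold count becomes 2
        try {
            return lock.getHoldCount();
        } finally {
            lock.unlock(); // every lock() needs its own unlock()
            lock.unlock();
        }
    }

    /** Returns true if an unpaired unlock() is rejected. */
    static boolean unpairedUnlockFails() {
        ReentrantLock lock = new ReentrantLock();
        try {
            lock.unlock(); // the current thread does not hold the lock
            return false;
        } catch (IllegalMonitorStateException expected) {
            return true;
        }
    }

    public static void main(String[] args) {
        System.out.println(holdCountAfterTwoLocks());
        System.out.println(unpairedUnlockFails());
    }
}
```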

The following code implements the same production and consumption model described at the beginning, in a different way. Note that lock() and unlock() calls on a reentrant (recursive) lock must be paired. Otherwise, the following exception may be thrown when the Condition's await() or signal() is executed:

java.lang.IllegalMonitorStateException

public class TestReentrantLockConsumerAndProducer {

/*Current generated quantity*/

static int currentNum = 0;

/*Maximum generated quantity*/

static int MAX_NUM = 10;

/*Minimum consumption quantity*/

static int MIN_NUM = 0;

//Create a lock object

private static Lock lock = new ReentrantLock();

//Variable with empty buffer

private static final Condition emptyCondition = lock.newCondition();

//Variable with full buffer

private static final Condition fullCondition = lock.newCondition();

public static void main(String args[]) {

//Create a producer

new Thread(new Producer()).start();

//Create two consumers

new Thread(new Consumer()).start();

new Thread(new Consumer()).start();

}

static class Producer implements Runnable {

public void product() {

while (true) {

try {

Thread.sleep(10);

} catch (InterruptedException e) {

e.printStackTrace();

}

lock.lock();

currentNum++;

System.out.println("Producer now product num:" + currentNum);

if (currentNum == MAX_NUM) {

emptyCondition.signal();

try {

fullCondition.await();

} catch (InterruptedException e) {

e.printStackTrace();

}

}

lock.unlock();

}

}

@Override

public void run() {

product();

}

}

static class Consumer implements Runnable {

public void consume() {

while (true) {

try {

Thread.sleep(1000);

} catch (InterruptedException e) {

e.printStackTrace();

}

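lock.lock();

try {

//Re-check in a loop: the buffer may be empty again by the time this thread wakes up

while (currentNum == MIN_NUM) {

fullCondition.signal();

emptyCondition.await();

}

System.out.println(new StringBuilder(Thread.currentThread().getName())

.append(" Consumer now consumption num:").append(currentNum));

currentNum--;

} catch (InterruptedException e) {

e.printStackTrace();

} finally {

//Always release the lock, including on the path that previously skipped unlock() via continue

lock.unlock();

}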

}

}

@Override

public void run() {

consume();

}

}

}

public class ProducerConsumer2 {

class Producer extends Thread {

private String threadName;

private Queue<Goods> queue;

private Lock lock;

private Condition notFullCondition;

private Condition notEmptyCondition;

private int maxSize;

public Producer(String threadName, Queue<Goods> queue, Lock lock, Condition notFullCondition, Condition notEmptyCondition, int maxSize) {

this.threadName = threadName;

this.queue = queue;

this.lock = lock;

this.notFullCondition = notFullCondition;

this.notEmptyCondition = notEmptyCondition;

this.maxSize = maxSize;

}

@Override

public void run() {

while (true) {

//Simulate the time-consuming operation in the production process

Goods goods = new Goods();

try {

Thread.sleep(new Random().nextInt(100));

} catch (InterruptedException e) {

e.printStackTrace();

}

lock.lock();

try {

while (queue.size() == maxSize) {

try {

System.out.println("The queue is full[" + threadName + "]Enter the waiting state");

notFullCondition.await();

} catch (InterruptedException e) {

e.printStackTrace();

}

}

queue.add(goods);

System.out.println("[" + threadName + "]Produced a commodity:[" + goods.toString() + "],Current commodity quantity:" + queue.size());

notEmptyCondition.signalAll();

} finally {

lock.unlock();

}

}

}

}

class Consumer extends Thread {

private String threadName;

private Queue<Goods> queue;

private Lock lock;

private Condition notFullCondition;

private Condition notEmptyCondition;

public Consumer(String threadName, Queue<Goods> queue, Lock lock, Condition notFullCondition, Condition notEmptyCondition) {

this.threadName = threadName;

this.queue = queue;

this.lock = lock;

this.notFullCondition = notFullCondition;

this.notEmptyCondition = notEmptyCondition;

}

@Override

public void run() {

while (true) {

Goods goods;

lock.lock();

try {

while (queue.isEmpty()) {

try {

System.out.println("The queue is empty[" + threadName + "]Enter the waiting state");

notEmptyCondition.await();

} catch (InterruptedException e) {

e.printStackTrace();

}

}

goods = queue.remove();

System.out.println("[" + threadName + "]Consumed a commodity:[" + goods.toString() + "],Current commodity quantity:" + queue.size());

notFullCondition.signalAll();

} finally {

lock.unlock();

}

//Simulate the time-consuming operation in the consumption process

try {

Thread.sleep(new Random().nextInt(100));

} catch (InterruptedException e) {

e.printStackTrace();

}

}

}

}

@Test

public void test() {

int maxSize = 5;

Queue<Goods> queue = new LinkedList<>();

Lock lock = new ReentrantLock();

Condition notEmptyCondition = lock.newCondition();

Condition notFullCondition = lock.newCondition();

Thread producer1 = new ProducerConsumer2.Producer("Producer 1", queue, lock, notFullCondition, notEmptyCondition, maxSize);

Thread producer2 = new ProducerConsumer2.Producer("Producer 2", queue, lock, notFullCondition, notEmptyCondition, maxSize);

Thread producer3 = new ProducerConsumer2.Producer("Producer 3", queue, lock, notFullCondition, notEmptyCondition, maxSize);

Thread consumer1 = new ProducerConsumer2.Consumer("Consumer 1", queue, lock, notFullCondition, notEmptyCondition);

Thread consumer2 = new ProducerConsumer2.Consumer("Consumer 2", queue, lock, notFullCondition, notEmptyCondition);

Thread consumer3 = new ProducerConsumer2.Consumer("Consumer 3", queue, lock, notFullCondition, notEmptyCondition);

producer1.start();

producer2.start();

producer3.start();

consumer1.start();

consumer2.start();

consumer3.start();

while (true) {

}

}

}

Points to note:

Putting and taking use the same lock, so at any moment only one of them can run; they cannot proceed concurrently. As with the wait() / notify() implementation, this approach therefore does not make full use of the buffer (the queue in this example). To allow putting and taking at the same time, use two reentrant locks, one guarding puts and one guarding takes. See LinkedBlockingQueue for a concrete implementation.

Implementation of blocking queue

A blocking queue is essentially built on a reentrant lock, wrapped together with a queue data structure.

Here we use LinkedBlockingDeque, a double-ended blocking queue, although any blocking queue would do. Its main benefit is that a put automatically blocks and waits when the queue has reached its maximum capacity.

Several key methods are used here: peek, put, peekLast and take. Let's look at the relevant source code without interpreting it in too much detail. We can see that the blocking queue is also implemented with a reentrant lock, which is acquired and released around each put and take operation.

| Operation | Throws exception | Returns special value | Blocks | Times out |
|---|---|---|---|---|
| Insert | add(e) | offer(e) | put(e) | offer(e, time, unit) |
| Remove | remove() | poll() | take() | poll(time, unit) |
| Examine | element() | peek() | Not applicable | Not applicable |

Therefore, put() and take() are the two blocking methods to choose here.
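The non-blocking columns of the table can be checked with a queue of capacity 1. This is a small sketch; the class `QueueModesDemo` and its method names are hypothetical, and ArrayBlockingQueue is used only because it is easy to fill.

```java
import java.util.NoSuchElementException;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.TimeUnit;

final class QueueModesDemo {
    /** Describes what each insert method does when the queue is already full. */
    static String insertIntoFull() {
        BlockingQueue<Integer> q = new ArrayBlockingQueue<>(1);
        q.add(1); // the queue is now full
        StringBuilder out = new StringBuilder();
        try {
            q.add(2);
            out.append("add:ok");
        } catch (IllegalStateException e) {
            out.append("add:exception");
        }
        out.append(" offer:" + q.offer(2));
        try {
            out.append(" timedOffer:" + q.offer(2, 10, TimeUnit.MILLISECONDS));
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return out.toString();
    }

    /** Describes what each remove method does when the queue is empty. */
    static String removeFromEmpty() {
        BlockingQueue<Integer> q = new ArrayBlockingQueue<>(1);
        StringBuilder out = new StringBuilder();
        try {
            q.remove();
            out.append("remove:ok");
        } catch (NoSuchElementException e) {
            out.append("remove:exception");
        }
        out.append(" poll:" + q.poll());
        try {
            out.append(" timedPoll:" + q.poll(10, TimeUnit.MILLISECONDS));
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return out.toString();
    }

    public static void main(String[] args) {
        System.out.println(insertIntoFull());
        System.out.println(removeFromEmpty());
    }
}
```

In the same situations put(e) and take() would simply wait, which is why they suit a producer consumer loop.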

/** Maximum number of items in the deque */

private final int capacity;

/** Main lock guarding all access */

final ReentrantLock lock = new ReentrantLock();

/** Condition for waiting takes */

private final Condition notEmpty = lock.newCondition();

/** Condition for waiting puts */

private final Condition notFull = lock.newCondition();

public E peekFirst() {

final ReentrantLock lock = this.lock;

lock.lock();

try {

return (first == null) ? null : first.item;

} finally {

lock.unlock();

}

}

public E peekLast() {

final ReentrantLock lock = this.lock;

lock.lock();

try {

return (last == null) ? null : last.item;

} finally {

lock.unlock();

}

}

//If the queue is empty, the caller blocks until an element becomes available

public E takeFirst() throws InterruptedException {

final ReentrantLock lock = this.lock;

lock.lock();

try {

E x;

while ( (x = unlinkFirst()) == null)

notEmpty.await();

return x;

} finally {

lock.unlock();

}

}

/**

* Removes and returns first element, or null if empty.

* This performs the actual removal. Once the node is removed, notFull.signal() wakes a thread that is blocked waiting on notFull

*/

private E unlinkFirst() {

// assert lock.isHeldByCurrentThread();

Node<E> f = first;

if (f == null)

return null;

Node<E> n = f.next;

E item = f.item;

f.item = null;

f.next = f; // help GC

first = n;

if (n == null)

last = null;

else

n.prev = null;

--count;

notFull.signal();

return item;

}

/**

* Links node as last element, or returns false if full.

* When the addition succeeds, notEmpty.signal() wakes a thread that is blocked waiting on notEmpty

*/

private boolean linkLast(Node<E> node) {

// assert lock.isHeldByCurrentThread();

if (count >= capacity)

return false;

Node<E> l = last;

node.prev = l;

last = node;

if (first == null)

first = node;

else

l.next = node;

++count;

notEmpty.signal();

return true;

}

public class TestBlockQueueConsumerAndProducer {

/*Maximum generated quantity*/

static int MAX_NUM = 10;

private static LinkedBlockingDeque<Integer> mBlockQueue = new LinkedBlockingDeque<>(MAX_NUM);

public static void main(String args[]) {

//Create a producer

new Thread(new Producer()).start();

//Create two consumers

new Thread(new Consumer()).start();


new Thread(new Consumer()).start();

}

static class Producer implements Runnable {

public void product() {

while (true) {

if (mBlockQueue.peek() == null) {

try {

Thread.sleep(1000);

} catch (InterruptedException e) {

e.printStackTrace();

}

for (int i = 1; i <= 10; i++) {

try {

mBlockQueue.put(i);

System.out.println("Producer now product num:" + i);

} catch (InterruptedException e) {

e.printStackTrace();

}

}

}

}

}

@Override

public void run() {

product();

}

}

static class Consumer implements Runnable {

public void consume() {

while (true) {

try {

Thread.sleep(500);

} catch (InterruptedException e) {

e.printStackTrace();

}

Integer mLast = (Integer) mBlockQueue.peekLast();

if (mLast != null && mLast == MAX_NUM) {

try {

int num = (Integer) mBlockQueue.take();

System.out.println(new StringBuilder(Thread.currentThread().getName())

.append(" Consumer now consumption num:").append(num));

} catch (InterruptedException e) {

e.printStackTrace();

}

}

}

}

@Override

public void run() {

consume();

}

}

}

public class ProducerConsumer3 {

class Producer extends Thread {

private String threadName;

private BlockingQueue<Goods> queue;

public Producer(String threadName, BlockingQueue<Goods> queue) {

this.threadName = threadName;

this.queue = queue;

}

@Override

public void run() {

while (true){

Goods goods = new Goods();

try {

//Simulate the time-consuming operation in the production process

Thread.sleep(new Random().nextInt(100));

queue.put(goods);

System.out.println("[" + threadName + "]Produced a commodity:[" + goods.toString() + "],Current commodity quantity:" + queue.size());

} catch (InterruptedException e) {

e.printStackTrace();

}

}

}

}

class Consumer extends Thread {

private String threadName;

private BlockingQueue<Goods> queue;

public Consumer(String threadName, BlockingQueue<Goods> queue) {

this.threadName = threadName;

this.queue = queue;

}

@Override

public void run() {

while (true){

try {

Goods goods = queue.take();

System.out.println("[" + threadName + "]Consumed a commodity:[" + goods.toString() + "],Current commodity quantity:" + queue.size());

//Simulate the time-consuming operation in the consumption process

Thread.sleep(new Random().nextInt(100));

} catch (InterruptedException e) {

e.printStackTrace();

}

}

}

}

@Test

public void test() {

int maxSize = 5;

BlockingQueue<Goods> queue = new LinkedBlockingQueue<>(maxSize);

Thread producer1 = new ProducerConsumer3.Producer("Producer 1", queue);

Thread producer2 = new ProducerConsumer3.Producer("Producer 2", queue);

Thread producer3 = new ProducerConsumer3.Producer("Producer 3", queue);

Thread consumer1 = new ProducerConsumer3.Consumer("Consumer 1", queue);

Thread consumer2 = new ProducerConsumer3.Consumer("Consumer 2", queue);

producer1.start();

producer2.start();

producer3.start();

consumer1.start();

consumer2.start();

while (true) {

}

}

}

If LinkedBlockingQueue is used as the queue, items can be put in and taken out at the same time, because LinkedBlockingQueue uses two reentrant locks internally, one for taking and one for putting.

If ArrayBlockingQueue is used as the queue, only one put or one take can run at a time, because ArrayBlockingQueue uses a single reentrant lock internally to guard all concurrent modifications.
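To make the two-lock idea concrete, here is a simplified sketch in the style of LinkedBlockingQueue. It is not the JDK source: the class name `TwoLockQueue` and the `demo` helper are hypothetical, and features such as timeouts and iteration are left out. Producers contend only on `putLock`, consumers only on `takeLock`, and an atomic counter links the two sides.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

final class TwoLockQueue<E> {
    private static final class Node<E> {
        E item;
        Node<E> next;
        Node(E item) { this.item = item; }
    }

    private final int capacity;
    private final AtomicInteger count = new AtomicInteger();
    private Node<E> head = new Node<>(null); // dummy node; head.next is the first element
    private Node<E> tail = head;
    private final ReentrantLock putLock = new ReentrantLock();
    private final Condition notFull = putLock.newCondition();
    private final ReentrantLock takeLock = new ReentrantLock();
    private final Condition notEmpty = takeLock.newCondition();

    TwoLockQueue(int capacity) { this.capacity = capacity; }

    void put(E e) throws InterruptedException {
        int before;
        putLock.lockInterruptibly();
        try {
            while (count.get() == capacity) notFull.await();
            tail = tail.next = new Node<>(e);
            before = count.getAndIncrement();
            if (before + 1 < capacity) notFull.signal(); // room left for another producer
        } finally {
            putLock.unlock();
        }
        if (before == 0) signal(takeLock, notEmpty); // queue was empty: wake a consumer
    }

    E take() throws InterruptedException {
        E item;
        int before;
        takeLock.lockInterruptibly();
        try {
            while (count.get() == 0) notEmpty.await();
            Node<E> first = head.next;
            head = first; // the removed node becomes the new dummy
            item = first.item;
            first.item = null;
            before = count.getAndDecrement();
            if (before > 1) notEmpty.signal(); // elements left for another consumer
        } finally {
            takeLock.unlock();
        }
        if (before == capacity) signal(putLock, notFull); // queue was full: wake a producer
        return item;
    }

    int size() { return count.get(); }

    /** A condition may only be signalled while holding the lock that owns it. */
    private static void signal(ReentrantLock lock, Condition condition) {
        lock.lock();
        try {
            condition.signal();
        } finally {
            lock.unlock();
        }
    }

    /** Single-threaded smoke test: two puts, two takes, then the remaining size. */
    static String demo() {
        TwoLockQueue<String> q = new TwoLockQueue<>(2);
        try {
            q.put("a");
            q.put("b");
            return q.take() + q.take() + q.size();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return "interrupted";
        }
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```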

Implementation using Semaphore

This section assumes familiarity with Semaphore, especially the release() method: a Semaphore does not require acquire() to be called before release().
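That property of release() is easy to verify. In this short sketch (the class `SemaphoreReleaseDemo` and its method names are hypothetical), a semaphore that starts with zero permits gains one through a bare release().

```java
import java.util.concurrent.Semaphore;

final class SemaphoreReleaseDemo {
    /** Returns the permit count after release() is called with no prior acquire(). */
    static int permitsAfterBareRelease() {
        Semaphore semaphore = new Semaphore(0);
        semaphore.release(); // adds a permit; no acquire() is needed first
        return semaphore.availablePermits();
    }

    /** Returns true if tryAcquire() fails before the release and succeeds after it. */
    static boolean acquireOnlyAfterRelease() {
        Semaphore semaphore = new Semaphore(0);
        boolean before = semaphore.tryAcquire();
        semaphore.release();
        boolean after = semaphore.tryAcquire();
        return !before && after;
    }

    public static void main(String[] args) {
        System.out.println(permitsAfterBareRelease());
        System.out.println(acquireOnlyAfterRelease());
    }
}
```

This is what lets a producer hand a permit to consumers through a semaphore it never acquired.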

public class ProducerConsumer4 {

class Producer extends Thread {

private String threadName;

private Queue<Goods> queue;

private Semaphore queueSizeSemaphore;

private Semaphore concurrentWriteSemaphore;

private Semaphore notEmptySemaphore;

public Producer(String threadName, Queue<Goods> queue, Semaphore concurrentWriteSemaphore, Semaphore queueSizeSemaphore, Semaphore notEmptySemaphore) {

this.threadName = threadName;

this.queue = queue;

this.concurrentWriteSemaphore = concurrentWriteSemaphore;

this.queueSizeSemaphore = queueSizeSemaphore;

this.notEmptySemaphore = notEmptySemaphore;

}

@Override

public void run() {

while (true) {

//Simulate the time-consuming operation in the production process

Goods goods = new Goods();

try {

Thread.sleep(new Random().nextInt(100));

} catch (InterruptedException e) {

e.printStackTrace();

}

try {

queueSizeSemaphore.acquire();//Gets the semaphore whose queue is not full

concurrentWriteSemaphore.acquire();//Get read / write semaphore

queue.add(goods);

System.out.println("[" + threadName + "]Produced a commodity:[" + goods.toString() + "],Current commodity quantity:" + queue.size());

} catch (InterruptedException e) {

e.printStackTrace();

}finally {

concurrentWriteSemaphore.release();

notEmptySemaphore.release();

}

}

}

}

class Consumer extends Thread {

private String threadName;

private Queue<Goods> queue;

private Semaphore queueSizeSemaphore;

private Semaphore concurrentWriteSemaphore;

private Semaphore notEmptySemaphore;

public Consumer(String threadName, Queue<Goods> queue, Semaphore concurrentWriteSemaphore, Semaphore queueSizeSemaphore, Semaphore notEmptySemaphore) {

this.threadName = threadName;

this.queue = queue;

this.concurrentWriteSemaphore = concurrentWriteSemaphore;

this.queueSizeSemaphore = queueSizeSemaphore;

this.notEmptySemaphore = notEmptySemaphore;

}

@Override

public void run() {

while (true) {

Goods goods;

try {

notEmptySemaphore.acquire();

concurrentWriteSemaphore.acquire();

goods = queue.remove();

System.out.println("[" + threadName + "]Consumed a commodity:[" + goods.toString() + "],Current commodity quantity:" + queue.size());

} catch (InterruptedException e) {

e.printStackTrace();

}finally {

concurrentWriteSemaphore.release();

queueSizeSemaphore.release();

}

//Simulate the time-consuming operation in the consumption process

try {

Thread.sleep(new Random().nextInt(100));

} catch (InterruptedException e) {

e.printStackTrace();

}

}

}

}

@Test

public void test() {

int maxSize = 5;

Queue<Goods> queue = new LinkedList<>();

Semaphore concurrentWriteSemaphore = new Semaphore(1);

Semaphore notEmptySemaphore = new Semaphore(0);

Semaphore queueSizeSemaphore = new Semaphore(maxSize);

Thread producer1 = new ProducerConsumer4.Producer("Producer 1", queue, concurrentWriteSemaphore, queueSizeSemaphore, notEmptySemaphore);

Thread producer2 = new ProducerConsumer4.Producer("Producer 2", queue, concurrentWriteSemaphore, queueSizeSemaphore, notEmptySemaphore);

Thread producer3 = new ProducerConsumer4.Producer("Producer 3", queue, concurrentWriteSemaphore, queueSizeSemaphore, notEmptySemaphore);

Thread consumer1 = new ProducerConsumer4.Consumer("Consumer 1", queue, concurrentWriteSemaphore, queueSizeSemaphore, notEmptySemaphore);

Thread consumer2 = new ProducerConsumer4.Consumer("Consumer 2", queue, concurrentWriteSemaphore, queueSizeSemaphore, notEmptySemaphore);

Thread consumer3 = new ProducerConsumer4.Consumer("Consumer 3", queue, concurrentWriteSemaphore, queueSizeSemaphore, notEmptySemaphore);

producer1.start();

producer2.start();

producer3.start();

consumer1.start();

consumer2.start();

consumer3.start();

while (true) {

}

}

}

1. Understand the meaning of the three semaphores in the code.

queueSizeSemaphore: its permit count is the number of additional elements that can still be put into the queue. The initial permit count is the size of the warehouse, maxSize. Each time a producer puts in an item, it calls acquire() and the count drops by 1, because the queue has one more element and one less free slot. Each time a consumer takes out an item, it calls release() and the count rises by 1, because the queue has one less element and a slot is returned.

notEmptySemaphore: its permit count is the number of elements that can be taken out of the queue. The initial permit count is 0. Each time a producer puts in an item, it calls release() and the count rises by 1, because an element was added to the queue. Each time a consumer takes out an item, it calls acquire() and the count drops by 1, because an element was removed from the queue.

concurrentWriteSemaphore: acts as a write lock. A thread must acquire its permit before putting in or taking out an item, and release it afterwards.

2. In this implementation, concurrentWriteSemaphore controls concurrent writes to the queue, so only one operation, a put or a take, can run on the queue at a time. If concurrentWriteSemaphore were initialized with 2 or more permits, several producers could put or several consumers could take at the same time. The LinkedList used here does not allow concurrent modification, so the queue could overflow or be read while empty. concurrentWriteSemaphore must therefore be initialized to 1, which leads to low performance similar to the wait() / notify() approach.

Lockless caching framework: Disruptor

BlockingQueue makes it convenient to synchronize producer and consumer threads, but under high concurrency its performance is not particularly good. (ConcurrentLinkedQueue is a high-performance queue, but it does not implement the BlockingQueue interface, that is, it does not support blocking operations.)

Disruptor is an efficient lock-free buffer queue developed by LMAX. It implements a ring queue without locks, which makes it well suited to the producer consumer pattern, for example for publishing events and messages.

1. Discard the lock mechanism and use CAS instead.

The Disruptor paper describes an experiment. The test program calls a function that increments a 64-bit counter 500 million times. With a single thread and no lock, the program takes 300 ms. With a lock added (still a single thread with no contention, only the cost of locking), it takes 10,000 ms, more than 30 times slower. More surprisingly, with a second thread added (which intuitively should be twice as fast as the single locked thread), it takes 224,000 ms.

The cost of locks has therefore been shown to be very high. Reducing the use of locks and the granularity of locking is another idea that Disruptor offers.
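For comparison with a locked counter, a CAS-based increment can be written as a retry loop over `AtomicLong.compareAndSet`. This is only an illustration of the technique, not code from Disruptor; the class `CasCounter` and its `demo` helper are hypothetical, and no timing claims are made for it.

```java
import java.util.concurrent.atomic.AtomicLong;

final class CasCounter {
    private final AtomicLong value = new AtomicLong();

    /** Increments without a lock: read, compute, then retry if another thread won the race. */
    long increment() {
        while (true) {
            long current = value.get();
            long next = current + 1;
            if (value.compareAndSet(current, next)) {
                return next;
            }
            // CAS failed: another thread changed the value first, so loop and try again
        }
    }

    long get() {
        return value.get();
    }

    /** Increments from several threads and returns the final count. */
    static long demo(int threads, int perThread) {
        CasCounter counter = new CasCounter();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) counter.increment();
            });
            workers[i].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println(demo(4, 10_000));
    }
}
```

No thread ever blocks here; a thread that loses the race simply repeats its attempt.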

2. Cache line padding is introduced to solve false sharing.

False sharing is a problem that arises with the L1, L2 and L3 caches when there are multiple CPUs. Caches generally read data in units of cache lines, as shown in the figure. When two CPUs both load X and Y into their L1 caches because the two variables sit on the same cache line, a write by one CPU invalidates that line in the other. The CPUs therefore compete for X and Y when writing, which behaves like a lock and reduces efficiency.

How is this solved? With the same method as memory alignment: pad the data so that it occupies a full cache line (usually 64 bytes). Memory alignment and cache line padding against false sharing are the same idea. This is why the Disruptor source contains an inner class like the following, whose extra fields exist only to fill the cache line.

private static class Padding

{

/** Set to -1 as sequence starting point */

public long nextValue = Sequence.INITIAL_VALUE, cachedValue = Sequence.INITIAL_VALUE, p2, p3, p4, p5, p6, p7;

}
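A common way to apply the same idea to a single hot field is to surround it with unused long fields through a small class hierarchy. The sketch below is hypothetical (all class names are invented for illustration) and assumes a 64-byte cache line and a JVM that lays out superclass fields before subclass fields; the actual layout is JVM-dependent, so treat it as a demonstration of the idea rather than a guarantee.

```java
/** Hypothetical counter whose hot field is padded on both sides. */
final class PaddedCounter extends RightPadding {
    long get() {
        return value;
    }

    void set(long newValue) {
        value = newValue;
    }

    public static void main(String[] args) {
        PaddedCounter counter = new PaddedCounter();
        counter.set(42L);
        System.out.println(counter.get());
    }
}

/** Seven unused longs (56 bytes) placed before the hot field. */
class LeftPadding {
    protected long p1, p2, p3, p4, p5, p6, p7;
}

/** The only field that is actually read and written. */
class CounterValue extends LeftPadding {
    protected volatile long value;
}

/** Seven unused longs (56 bytes) placed after the hot field. */
class RightPadding extends CounterValue {
    protected long p9, p10, p11, p12, p13, p14, p15;
}
```

With padding on both sides, no other frequently written field is expected to share a cache line with `value`, at the cost of extra memory per instance.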

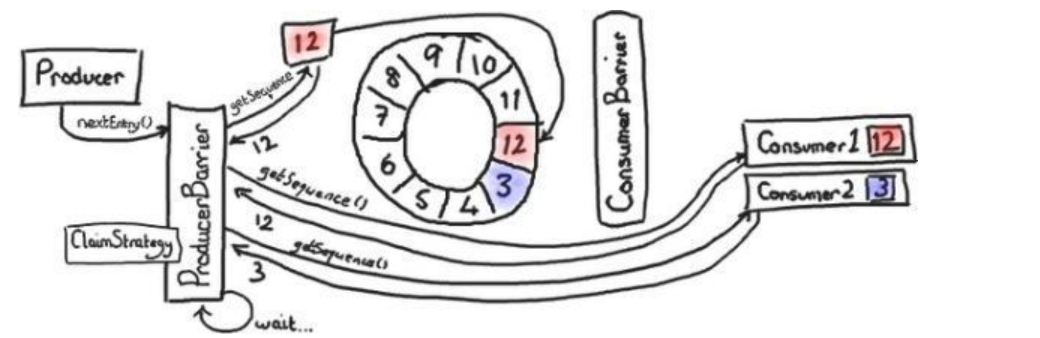

3. Use a dedicated data structure, RingBuffer, in place of a queue. This data structure is what makes it possible to abandon locks and use CAS instead.

Imagine you had to write a producer and consumer program. How would you do it?

Most people would use a queue. Put simply, the queue acts as a buffer between producers and consumers, which indirectly evens out their speeds and makes asynchronous programming possible.

Asynchronous programming is probably familiar to everyone. Java's graphical user interface is a typical example. A single thread acts as the consumer that processes interface events, while the producers that update the interface may be many threads. Thanks to the producer consumer model, the interface events generated by producers are stored in a queue and applied by the built-in thread in one place. This keeps programs from corrupting the drawing process, which improves code quality and reduces complexity. Incidentally, in .NET, updating the interface from other threads also causes many strange problems, so delegates likewise use an event queue to keep the consumer on a single thread.

But we digress. In short, we would use a queue, and that raises two problems. First, if the producer is too fast, the queue keeps growing and after a while occupies too much memory. Second, looking at queue implementations shows that the queue must be locked on every operation to stay correct under access from multiple threads, and as mentioned earlier, locking can make things orders of magnitude slower.

The design of the Disruptor framework takes us out of this mindset. Must we use a queue that keeps growing? Must we lock the queue on access? The answer is no.

A RingBuffer is simply a ring queue. Let's see how it solves these two problems.

1. A ring queue is connected end to end, so its size is bounded. RingBuffer assumes that producers and consumers advance at roughly the same pace, with neither side much faster than the other. The slots can then be reused, which avoids the cost of creating objects. Because the RingBuffer has a fixed size, it is stored in an array, and because the space is allocated in advance, locating and reading an entry is very fast. This naturally solves the first problem. But what if, in practice, producers are faster than consumers (or vice versa), so that at some point head and tail meet? We will explain this later.

2. Making an ordinary queue safe in a multi-threaded environment requires locking the whole queue, which brings a huge overhead. Let's look at how RingBuffer avoids locks (for simplicity, consider one producer and one consumer). The example follows a diagram borrowed from another author. Suppose a consumer is currently at position 12 and the producer is at position 3. What does the producer do next? It tries to read 4 and finds no consumer at the next position, so the slot can be claimed safely; it changes it to 14, publishes the product to 14, and notifies a blocked consumer to become active. This remains safe up to 11 (assuming the producer is the faster side). When the producer tries to access 12, it finds that it cannot continue, so it hands over control. When the consumer starts moving, it calls the barrier's waitFor method. waitFor sees that the nearest safe node ahead has reached 20 (21 is the producer), so it returns 20 directly. The consumer can now consume all products from 13 to 20 without a lock. As you can imagine, this is many times faster than synchronized.
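The one-producer, one-consumer case can be sketched with two sequence counters and a fixed array. This is a simplified illustration, not Disruptor's RingBuffer: the class `SpscRingBuffer` is hypothetical, it returns `false` or `null` instead of waiting, and it is only safe with exactly one producer thread and one consumer thread. Because the size is a power of 2, a sequence is mapped to a slot with a bit mask instead of a modulo.

```java
import java.util.concurrent.atomic.AtomicLong;

/** Hypothetical single-producer, single-consumer ring buffer. */
final class SpscRingBuffer<T> {
    private final Object[] slots;
    private final int mask;
    private final AtomicLong published = new AtomicLong(-1); // last sequence written
    private final AtomicLong consumed = new AtomicLong(-1);  // last sequence read

    SpscRingBuffer(int size) {
        if (size <= 0 || Integer.bitCount(size) != 1) {
            throw new IllegalArgumentException("size must be a power of 2");
        }
        slots = new Object[size];
        mask = size - 1;
    }

    /** Producer side: refuses to overwrite a slot the consumer has not read yet. */
    boolean offer(T item) {
        long next = published.get() + 1;
        if (next - consumed.get() > slots.length) {
            return false; // the producer would lap the consumer
        }
        slots[(int) (next & mask)] = item;
        published.set(next); // volatile write makes the slot visible to the consumer
        return true;
    }

    /** Consumer side: returns null when nothing new has been published. */
    @SuppressWarnings("unchecked")
    T poll() {
        long next = consumed.get() + 1;
        if (next > published.get()) {
            return null;
        }
        T item = (T) slots[(int) (next & mask)];
        consumed.set(next); // frees the slot for reuse by the producer
        return item;
    }

    public static void main(String[] args) {
        SpscRingBuffer<String> buffer = new SpscRingBuffer<>(2);
        buffer.offer("a");
        buffer.offer("b");
        System.out.println(buffer.offer("c")); // buffer is full, so this offer is refused
        System.out.println(buffer.poll());
    }
}
```

Each side writes only its own counter and reads the other's, which is why no lock is needed in this restricted case; supporting several producers is where CAS on the sequence comes in.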

producer

package cn.lonecloud.procum.disruptor;

import cn.lonecloud.procum.Data;

import com.lmax.disruptor.RingBuffer;

import java.nio.ByteBuffer;

/**

* @author lonecloud

* @version v1.0

* @date 3:02 PM may 7, 2018

*/

public class Producer {

//queue

private final RingBuffer<Data> dataRingBuffer;

public Producer(RingBuffer<Data> dataRingBuffer) {

this.dataRingBuffer = dataRingBuffer;

}

/**

* insert data

* @param s

*/

public void pushData(String s) {

//Get next location

long next = dataRingBuffer.next();

try {

//Get container

Data data = dataRingBuffer.get(next);

//Set data

data.setData(s);

} finally {

//insert

dataRingBuffer.publish(next);

}

}

}

consumer

package cn.lonecloud.procum.disruptor;

import cn.lonecloud.procum.Data;

import com.lmax.disruptor.WorkHandler;

/**

* @author lonecloud

* @version v1.0

* @date 3:01 PM may 7, 2018

*/

public class Customer implements WorkHandler<Data> {

@Override

public void onEvent(Data data) throws Exception {

System.out.println(Thread.currentThread().getName()+"---"+data.getData());

}

}

Data factory

package cn.lonecloud.procum.disruptor;

import cn.lonecloud.procum.Data;

import com.lmax.disruptor.EventFactory;

/**

* @author lonecloud

* @version v1.0

* @date 3:02 PM may 7, 2018

*/

public class DataFactory implements EventFactory<Data> {

@Override

public Data newInstance() {

return new Data();

}

}

Main function

package cn.lonecloud.procum.disruptor;

import cn.lonecloud.procum.Data;

import com.lmax.disruptor.RingBuffer;

import com.lmax.disruptor.dsl.Disruptor;

import java.util.UUID;

import java.util.concurrent.ExecutorService;

import java.util.concurrent.Executors;

/**

* @author lonecloud

* @version v1.0

* @date 3:09 PM may 7, 2018

*/

public class Main {

public static void main(String[] args) throws InterruptedException {

//Create thread pool

ExecutorService service = Executors.newCachedThreadPool();

//Create data factory

DataFactory dataFactory = new DataFactory();

//The buffer size must be a power of 2, otherwise an exception is thrown

int buffersize = 1024;

Disruptor<Data> dataDisruptor = new Disruptor<Data>(dataFactory, buffersize,

service);

//Create consumer thread

dataDisruptor.handleEventsWithWorkerPool(

new Customer(),

new Customer(),

new Customer(),

new Customer(),

new Customer(),

new Customer(),

new Customer()

);

//start-up

dataDisruptor.start();

//Get its queue

RingBuffer<Data> ringBuffer = dataDisruptor.getRingBuffer();

for (int i = 0; i < 100; i++) {

//Create producer

Producer producer = new Producer(ringBuffer);

//Set content

producer.pushData(UUID.randomUUID().toString());

//Thread.sleep(1000);

}

}

}

Disruptor provides several wait strategies for consumers:

1. BlockingWaitStrategy: a blocking strategy. It saves the most CPU but has the worst performance under high concurrency.

2. SleepingWaitStrategy: waits in a loop, spinning first and then sleeping briefly. It adds latency to data processing but has little impact on the producer thread. Typical scenario: asynchronous logging.

3. YieldingWaitStrategy: intended for low-latency scenarios. The number of consumer threads should be lower than the number of logical CPUs.

4. BusySpinWaitStrategy: the consumer thread spins in a tight loop, constantly monitoring the buffer for changes.
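The difference between the non-blocking strategies comes down to what a consumer does while the sequence it wants has not been published yet. The sketch below uses only the JDK to illustrate that idea. The `Waiter` interface, the three constants and the spin thresholds are all hypothetical and are not Disruptor's classes; `Thread.onSpinWait()` requires Java 9 or later.

```java
import java.util.concurrent.atomic.AtomicLong;
import java.util.concurrent.locks.LockSupport;

final class WaitSketch {
    /** Hypothetical stand-in for a wait strategy; not the Disruptor interface. */
    interface Waiter {
        void idle(int spins);
    }

    /** Never gives up the CPU: lowest latency, one core fully occupied. */
    static final Waiter BUSY_SPIN = spins -> Thread.onSpinWait();

    /** Spins briefly, then yields the CPU to other runnable threads. */
    static final Waiter YIELDING = spins -> {
        if (spins < 100) Thread.onSpinWait();
        else Thread.yield();
    };

    /** Spins, then yields, then parks for a short time: cheapest on CPU, highest latency. */
    static final Waiter SLEEPING = spins -> {
        if (spins < 100) Thread.onSpinWait();
        else if (spins < 200) Thread.yield();
        else LockSupport.parkNanos(100_000L);
    };

    /** Waits until the cursor reaches the wanted sequence and returns the value seen. */
    static long waitFor(long wanted, AtomicLong cursor, Waiter waiter) {
        int spins = 0;
        long seen;
        while ((seen = cursor.get()) < wanted) {
            waiter.idle(spins++);
        }
        return seen;
    }

    /** Publishes sequences 0..5 from another thread while this thread waits for 5. */
    static long demo(Waiter waiter) {
        AtomicLong cursor = new AtomicLong(-1);
        Thread producer = new Thread(() -> {
            for (long i = 0; i <= 5; i++) cursor.set(i);
        });
        producer.start();
        long seen = waitFor(5, cursor, waiter);
        try {
            producer.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return seen;
    }

    public static void main(String[] args) {
        System.out.println(demo(BUSY_SPIN));
        System.out.println(demo(YIELDING));
        System.out.println(demo(SLEEPING));
    }
}
```

A blocking strategy is not shown here because it needs the producer to signal a lock condition on every publish, which is where its extra cost under high concurrency comes from.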