This article summarizes some key points about multithreading and high concurrency in Java concurrent programming, for your reference. I hope it helps with your study!

1. JMM atomic data operations

- Read: reads a variable's value from main memory

- Load: writes the value obtained by read into the thread's working memory

- Use: reads the value from working memory for computation

- Assign: assigns the computed value back to the variable in working memory

- Store: transfers the value from working memory toward main memory

- Write: assigns the value passed along by store to the variable in main memory

- Lock: locks a main-memory variable and marks it as exclusively owned by one thread

- Unlock: unlocks a main-memory variable; after unlocking, other threads can lock it
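The operations above can be illustrated with a toy model. The sketch below is only a single-threaded simulation and not how the JVM is implemented: the class `JmmToyModel`, the `WorkingMemory` type and the `inTransit` field are hypothetical names introduced for illustration, and lock/unlock are left out. It shows how two "threads" that both read and load `num` before either one writes it back end up losing one update.

```java
import java.util.HashMap;
import java.util.Map;

public class JmmToyModel {

    // Shared "main memory": variable name -> value (toy model only).
    static final Map<String, Integer> mainMemory = new HashMap<>();

    // One thread's private working memory in this toy model.
    static final class WorkingMemory {
        private final Map<String, Integer> copies = new HashMap<>();
        private Integer inTransit; // value travelling between the two memories

        void read(String name)          { inTransit = mainMemory.get(name); } // read
        void load(String name)          { copies.put(name, inTransit); }      // load
        int  use(String name)           { return copies.get(name); }          // use
        void assign(String name, int v) { copies.put(name, v); }              // assign
        void store(String name)         { inTransit = copies.get(name); }     // store
        void write(String name)         { mainMemory.put(name, inTransit); }  // write
    }

    // Interleaves two simulated threads that each perform num = num + 1.
    static int lostUpdate() {
        mainMemory.put("num", 0);
        WorkingMemory t1 = new WorkingMemory();
        WorkingMemory t2 = new WorkingMemory();

        // Both threads copy num = 0 before either one writes back.
        t1.read("num"); t1.load("num");
        t2.read("num"); t2.load("num");

        // Each thread increments its own private copy.
        t1.assign("num", t1.use("num") + 1);
        t2.assign("num", t2.use("num") + 1);

        // Both write back the same value, so one increment is lost.
        t1.store("num"); t1.write("num");
        t2.store("num"); t2.write("num");

        return mainMemory.get("num");
    }

    public static void main(String[] args) {
        System.out.println(lostUpdate()); // 1, although two increments ran
    }
}
```

The interleaving is fixed by hand here, so the result is deterministic; in a real program the scheduler decides the order.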

2. The volatile keyword

(1) Start two threads

```java
public class VolatileDemo {

    // Not volatile: the spinning thread may never observe the update.
    private static boolean flag = false;

    public static void main(String[] args) throws InterruptedException {
        // Thread 1 spins until it sees flag == true.
        new Thread(() -> {
            while (!flag) {
            }
            System.out.println("Exited the while loop");
        }).start();

        Thread.sleep(2000);

        // Thread 2 changes the flag.
        new Thread(() -> changeFlag()).start();
    }

    private static void changeFlag() {
        System.out.println("Before changing flag");
        flag = true;
        System.out.println("After changing flag");
    }
}
```

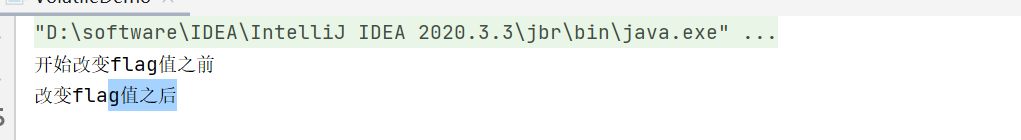

Without volatile, the first thread typically never sees the update: its while condition stays true and the loop does not exit.

(2) After adding volatile to the variable flag

```java
public class VolatileDemo {

    // volatile: a write by one thread becomes visible to the others.
    private static volatile boolean flag = false;

    public static void main(String[] args) throws InterruptedException {
        // Thread 1 spins until it sees flag == true.
        new Thread(() -> {
            while (!flag) {
            }
            System.out.println("Exited the while loop");
        }).start();

        Thread.sleep(2000);

        // Thread 2 changes the flag.
        new Thread(() -> changeFlag()).start();
    }

    private static void changeFlag() {
        System.out.println("Before changing flag");
        flag = true;
        System.out.println("After changing flag");
    }
}
```

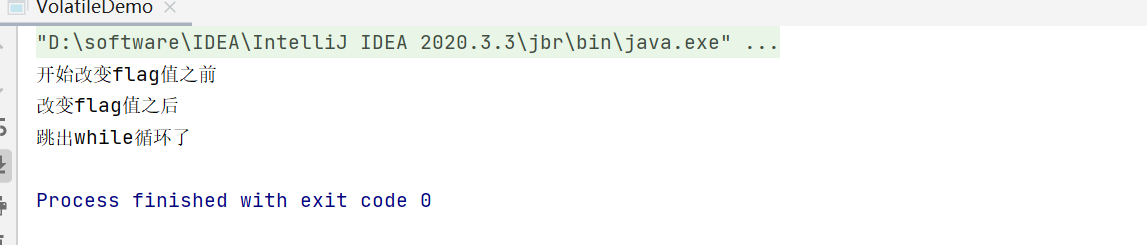

With volatile, the first thread sees flag become true, the while loop exits, and the message is printed.

(3) Principle explanation:

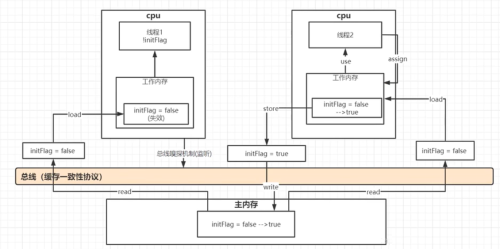

When thread 1 starts, it reads flag from main memory with read, loads it into its working memory with load, and passes it to the executing code with use. Thread 2 reads the variable in the same way and then changes the value with assign, so the copy in thread 2's working memory holds flag = true. It then sends the value over the bus with store, and write puts it into main memory. Because each thread works on its own copy of the main-memory value and the working memories do not communicate with each other, thread 1 still sees flag = false and keeps looping.

After volatile is added, the cache coherence protocol (MESI) comes into play. Through the bus snooping mechanism, the CPU detects that the data has changed and marks the copy in its cache as invalid. Thread 1 then discards the flag value in its working memory and re-reads it from main memory, at which point the loop exits.

At the bottom layer, a write to a volatile variable is implemented with the lock prefix in assembly language. When the variable is modified, the new value is written back to main memory immediately, and instruction reordering around the access is prevented.
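Visibility and the reordering guarantee can be seen together in a small publication example. The sketch below is a minimal illustration with hypothetical names (`VolatilePublishDemo`, `data`, `ready`, `readAfterPublish`): an ordinary field is written before a volatile flag, and the reader, once it sees the flag, is guaranteed by the happens-before rule for volatile to also see the ordinary field.

```java
public class VolatilePublishDemo {

    private static int data = 0;                   // ordinary field
    private static volatile boolean ready = false; // volatile flag

    // Starts a reader that waits for ready, then returns what it saw in data.
    static int readAfterPublish() {
        data = 0;
        ready = false;
        int[] seen = new int[1];

        Thread reader = new Thread(() -> {
            while (!ready) {
                // spin until the volatile write becomes visible
            }
            seen[0] = data; // ordered after the volatile read of ready
        });
        reader.start();

        data = 42;    // ordinary write, placed before the volatile write
        ready = true; // volatile write publishes data as well

        try {
            reader.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return seen[0];
    }

    public static void main(String[] args) {
        System.out.println(readAfterPublish()); // 42
    }
}
```

If `ready` were not volatile, the reader could spin forever or, in principle, observe `ready == true` while still reading a stale `data`.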

3. Three characteristics of concurrent programming

Visibility, ordering, atomicity

4. Double-checked locking for the singleton pattern

```java
public class DoubleCheckLockSingleton {

    // volatile prevents other threads from seeing a partly constructed instance.
    private static volatile DoubleCheckLockSingleton instance = null;

    // Private constructor: instances can only be created through getInstance().
    private DoubleCheckLockSingleton() {}

    public static DoubleCheckLockSingleton getInstance() {
        if (null == instance) {                              // first check, no lock
            synchronized (DoubleCheckLockSingleton.class) {
                if (null == instance) {                      // second check, under the lock
                    instance = new DoubleCheckLockSingleton();
                }
            }
        }
        return instance;
    }

    public static void main(String[] args) {
        System.out.println(DoubleCheckLockSingleton.getInstance());
    }
}
```

When a thread calls getInstance, it first checks whether the instance is null. If it is, the thread locks on the class object; without the lock, multiple threads could each create an instance. Inside the lock it checks for null again, because another thread may have created the instance while this thread was waiting for the lock. Creating the object with new allocates memory, sets the object's fields to their default (zero) values, and then performs the initializing assignments.

To optimize execution, the CPU may reorder instructions as long as the as-if-serial and happens-before rules are respected. As-if-serial means that instructions within a thread may be reordered only if the reordering does not change that thread's result.

Happens-before specifies ordering guarantees between threads. For example, an unlock of a lock must happen before the next lock of the same lock.

Therefore, to prevent the new operation from being reordered so that the reference is assigned before the object has been initialized, the field must be declared volatile. With volatile, a memory barrier is inserted around the write, the CPU does not reorder instructions across it, and at the bottom layer this is again the lock prefix. Memory barriers are divided into LoadLoad, StoreStore, LoadStore and StoreLoad. The bottom layer is written in C++ code, and the C++ code calls assembly language.
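One way to convince yourself that the double check works is to call getInstance from many threads at once and count the distinct objects returned. The sketch below is a self-contained check under stated assumptions: `SingletonCheck`, `LazySingleton` and `distinctInstances` are hypothetical names, and the nested class simply repeats the double-checked locking pattern from above so that the example compiles on its own.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CountDownLatch;

public class SingletonCheck {

    // Same double-checked locking pattern as above, repeated for self-containment.
    static final class LazySingleton {
        private static volatile LazySingleton instance;

        private LazySingleton() {}

        static LazySingleton getInstance() {
            if (instance == null) {
                synchronized (LazySingleton.class) {
                    if (instance == null) {
                        instance = new LazySingleton();
                    }
                }
            }
            return instance;
        }
    }

    // Releases all threads at once and counts the distinct instances they obtained.
    static int distinctInstances(int threads) {
        Set<LazySingleton> seen = ConcurrentHashMap.newKeySet();
        CountDownLatch start = new CountDownLatch(1);
        Thread[] workers = new Thread[threads];

        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                try {
                    start.await(); // wait so that all threads race together
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return;
                }
                seen.add(LazySingleton.getInstance());
            });
            workers[i].start();
        }

        start.countDown();
        for (Thread t : workers) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return seen.size();
    }

    public static void main(String[] args) {
        System.out.println(distinctInstances(16)); // 1
    }
}
```

A test like this cannot prove the absence of reordering bugs, since those depend on the JIT and the hardware; it only checks that no second instance is created.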

5. The synchronized keyword

(1) Before adding synchronized

```java
package com.qingyun;

/**
 * Synchronized keyword
 */
public class SynchronizedDemo {

    public static void main(String[] args) throws InterruptedException {
        Num num = new Num();

        // Thread t1 increments 100000 times...
        Thread t1 = new Thread(() -> {
            for (int i = 0; i < 100000; i++) {
                num.incrent();
            }
        });
        t1.start();

        // ...while the main thread increments 100000 times as well.
        for (int i = 0; i < 100000; i++) {
            num.incrent();
        }

        t1.join();
        System.out.println(num.getNum());
    }
}
```

```java
package com.qingyun;

public class Num {

    public int num = 0;

    public void incrent() {
        num++;
    }

    public int getNum() {
        return num;
    }
}
```

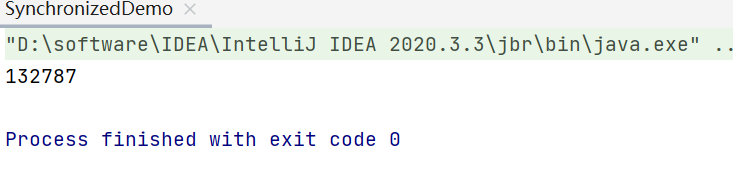

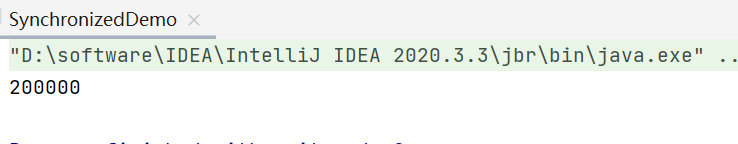

The output is not the expected 200000. Thread t1 and the for loop in the main thread call incrent() at the same time, and num++ is not atomic (it is a read, an add and a write), so some updates are lost.

(2) With synchronized

```java
public synchronized void incrent() {
    num++;
}

// or

public void incrent() {
    synchronized (this) {
        num++;
    }
}
```

Now the output is the expected 200000. Before JDK 1.6, the lock behind the synchronized keyword was a heavyweight, mutually exclusive, pessimistic lock: threads that fail to acquire it are placed in a queue and wait to execute.
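For a version that can be pasted into a single file and run, here is a minimal sketch. The names `SyncCounterDemo`, `Counter` and `runTwoThreads` are hypothetical; the counter uses a synchronized method exactly as described above.

```java
public class SyncCounterDemo {

    static final class Counter {
        private int num = 0;

        // Only one thread at a time can execute this method on a given Counter.
        synchronized void increment() {
            num++;
        }

        synchronized int get() {
            return num;
        }
    }

    // Runs two threads that each increment the shared counter perThread times.
    static int runTwoThreads(int perThread) {
        Counter counter = new Counter();
        Runnable work = () -> {
            for (int i = 0; i < perThread; i++) {
                counter.increment();
            }
        };

        Thread t1 = new Thread(work);
        Thread t2 = new Thread(work);
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println(runTwoThreads(100000)); // 200000
    }
}
```

Removing `synchronized` from `increment()` brings back the lost updates described earlier.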

6. AtomicInteger atomic operations

(1) An atomic add-1 can be implemented with AtomicInteger. Because threads are not blocked, it often performs better than synchronized.

```java
import java.util.concurrent.atomic.AtomicInteger;

public class Num {

    // public int num = 0;
    AtomicInteger atomicInteger = new AtomicInteger();

    public void incrent() {
        atomicInteger.incrementAndGet(); // atomically add 1
    }

    public int getNum() {
        return atomicInteger.get();
    }
}
```

In the AtomicInteger source code, the value field is declared volatile. As described above, volatile is implemented with the lock prefix at the bottom layer, so every thread sees the latest value, which the concurrent updates rely on.

private volatile int value;

(2) What the atomicInteger.incrementAndGet() method does, conceptually: it reads the current value, adds 1 to get the new value, then compares the old value with the value currently held by atomicInteger and sets the new value only if they are equal. Because another thread may have changed the value in between, the comparison can fail, so the steps run in a while loop that exits once the compare-and-set succeeds.

```java
while (true) {
    int oldValue = atomicInteger.get();
    int newValue = oldValue + 1;
    if (atomicInteger.compareAndSet(oldValue, newValue)) {
        break;
    }
}
```

(3) atomicInteger.compareAndSet sets the new value only if the comparison succeeds. This is CAS (compare-and-swap), also described as lock-free, an optimistic lock, or a lightweight lock. synchronized involves thread blocking, context switching and operating system scheduling, which is time-consuming. CAS instead keeps comparing in a loop, which is usually more efficient.
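The retry loop can be wrapped in a method and exercised from two threads. This is a minimal sketch with hypothetical names (`CasLoopDemo`, `casIncrement`, `runTwoThreads`); it hand-writes the loop for illustration and is not the JDK's actual implementation of incrementAndGet.

```java
import java.util.concurrent.atomic.AtomicInteger;

public class CasLoopDemo {

    // Hand-written CAS retry loop: returns the value this call installed.
    static int casIncrement(AtomicInteger counter) {
        while (true) {
            int oldValue = counter.get();
            int newValue = oldValue + 1;
            // Succeeds only if no other thread changed the value in between.
            if (counter.compareAndSet(oldValue, newValue)) {
                return newValue;
            }
            // Otherwise another thread won the race: re-read and retry.
        }
    }

    // Runs two threads that each call casIncrement perThread times.
    static int runTwoThreads(int perThread) {
        AtomicInteger counter = new AtomicInteger();
        Runnable work = () -> {
            for (int i = 0; i < perThread; i++) {
                casIncrement(counter);
            }
        };

        Thread t1 = new Thread(work);
        Thread t2 = new Thread(work);
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println(runTwoThreads(100000)); // 200000
    }
}
```

No thread ever blocks here; a thread that loses the race simply goes around the loop again.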

(4) At the bottom layer, compareAndSetInt is declared native and implemented in C++ code, which guarantees atomicity. In assembly language it becomes a lock cmpxchg instruction, which locks the cache line.

(5) ABA problem: thread 1 reads a variable. Thread 2 runs faster: it also reads the variable, changes its value, and then quickly changes it back to the original value. The variable has therefore been modified in between. When thread 1 then executes compareAndSet, the value looks unchanged and the CAS succeeds, even though the variable was modified in the meantime. This is the ABA problem.

(6) To solve the ABA problem, add a version number to the variable and increase it by 1 every time the variable is modified. The JDK provides AtomicStampedReference for this. Even if another thread modifies the variable and then restores the original value, the version number no longer matches, so the CAS fails.
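The difference between a plain CAS and a versioned CAS can be shown in a few lines. The sketch below replays the A -> B -> A sequence on a single thread for determinism; `AbaDemo`, `plainCasSucceeds` and `stampedCasSucceeds` are hypothetical names, while AtomicReference and AtomicStampedReference are the real JDK classes. String literals are used because both classes compare references with ==.

```java
import java.util.concurrent.atomic.AtomicReference;
import java.util.concurrent.atomic.AtomicStampedReference;

public class AbaDemo {

    // Plain CAS: the A -> B -> A change goes unnoticed.
    static boolean plainCasSucceeds() {
        AtomicReference<String> ref = new AtomicReference<>("A");
        String seen = ref.get();            // "thread 1" reads A

        ref.compareAndSet("A", "B");        // "thread 2" changes A -> B
        ref.compareAndSet("B", "A");        // ...and back to A

        return ref.compareAndSet(seen, "C"); // thread 1's CAS still succeeds
    }

    // Stamped CAS: the version number exposes the intermediate change.
    static boolean stampedCasSucceeds() {
        AtomicStampedReference<String> ref = new AtomicStampedReference<>("A", 0);
        String seenValue = ref.getReference(); // "thread 1" reads value A
        int seenStamp = ref.getStamp();        // ...and version 0

        // "thread 2" changes A -> B -> A, bumping the version each time
        ref.compareAndSet("A", "B", ref.getStamp(), ref.getStamp() + 1);
        ref.compareAndSet("B", "A", ref.getStamp(), ref.getStamp() + 1);

        // thread 1 retries with the stale version 0; the current version is 2
        return ref.compareAndSet(seenValue, "C", seenStamp, seenStamp + 1);
    }

    public static void main(String[] args) {
        System.out.println(plainCasSucceeds());   // true
        System.out.println(stampedCasSucceeds()); // false
    }
}
```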

7. Lock optimization

(1) A heavyweight lock puts waiting threads in a queue. It locks the monitor, and the resulting context switches consume resources. A lightweight lock spins instead, so if too many threads compete for it, the spinning consumes CPU.

(2) Since JDK 1.6, a lock is upgraded step by step: no lock -> biased lock (records the thread ID) -> lightweight lock (spinning, then inflation) -> heavyweight lock (waiting threads are queued).

(3) When an object is created, it is in the no-lock state. When one thread starts and locks the object, a biased lock is used, and the bias is not released after the synchronized code finishes. When a second thread starts and the two threads compete for the object, the biased lock is immediately upgraded to a lightweight lock. When further threads compete for the object's lock, the lightweight lock is upgraded to a heavyweight lock.

(4) Segmented CAS: at the bottom layer, a base field records the value. When multiple threads contend on it, the value is spread over an array of cells. Each cell handles the CAS updates of one or more threads, which avoids threads spinning idly on a single variable. This is still a lightweight lock. When the value is read, the bottom layer returns the sum of base and all the cells.

```java
import java.util.concurrent.atomic.LongAdder;

public class Num {

    LongAdder longAdder = new LongAdder();

    public void incrent() {
        longAdder.increment();
    }

    public long getNum() {
        return longAdder.longValue();
    }
}
```
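To see the segmented idea in isolation, here is a deliberately simplified sketch. It is not LongAdder's actual implementation (which creates cells lazily, pads them against false sharing and picks them by a per-thread probe value); `StripedCounterSketch`, its fixed-size cell array and the thread-ID based cell choice are assumptions made for illustration.

```java
import java.util.concurrent.atomic.AtomicLong;

public class StripedCounterSketch {

    private final AtomicLong base = new AtomicLong();
    private final AtomicLong[] cells;

    StripedCounterSketch(int cellCount) {
        cells = new AtomicLong[cellCount];
        for (int i = 0; i < cellCount; i++) {
            cells[i] = new AtomicLong();
        }
    }

    void increment() {
        long b = base.get();
        // Fast path: a single CAS attempt on base.
        if (base.compareAndSet(b, b + 1)) {
            return;
        }
        // Contended: update the cell chosen for this thread instead of spinning on base.
        int index = (int) (Thread.currentThread().getId() % cells.length);
        cells[index].incrementAndGet();
    }

    // The total is base plus the contents of all cells.
    long sum() {
        long total = base.get();
        for (AtomicLong cell : cells) {
            total += cell.get();
        }
        return total;
    }

    // Runs the given number of threads, each incrementing perThread times.
    static long runThreads(int threads, int perThread) {
        StripedCounterSketch counter = new StripedCounterSketch(8);
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    counter.increment();
                }
            });
            workers[i].start();
        }
        for (Thread t : workers) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return counter.sum();
    }

    public static void main(String[] args) {
        System.out.println(runThreads(4, 50000)); // 200000
    }
}
```

Every increment lands in exactly one place, either base or one cell, so the sum taken after all threads have finished is exact.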

In the LongAdder source code, longValue() simply returns sum(), which adds base and every non-null cell:

```java
public long longValue() {
    return sum();
}

public long sum() {
    Cell[] as = cells; Cell a;
    long sum = base;
    if (as != null) {
        for (int i = 0; i < as.length; ++i) {
            if ((a = as[i]) != null)
                sum += a.value;
        }
    }
    return sum;
}
```