1. Introduction to the Twitter Text Emotion Classification Contest

Practice competition URL: https://www.heywhale.com/home/activity/detail/611cbe90ba12a0001753d1e9/content

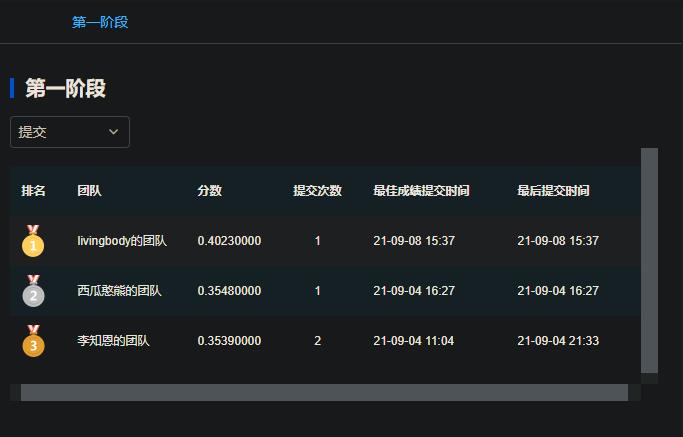

This exercise has 13 emotion categories, so scores tend to be low.

Tweets have several distinctive features. First, unlike Facebook posts, tweets are text-based and can be downloaded through the Twitter interface after registering, which makes them easy to use as a corpus for natural language processing. Second, Twitter limits each tweet to 140 characters. In practice, tweets vary in length and are usually short; some are a single sentence or even a single phrase, which makes emotion classification and labeling difficult. Moreover, tweets are often written casually, so they carry more emotional content, are more colloquial, and are full of abbreviations, slang, and internet expressions such as emoticons and newly coined words. As a result, they differ greatly from formal text, and emotion classification methods designed for formal text perform poorly on tweets.
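As an illustration of how such informal text might be normalized, here is a minimal sketch. It is not part of the pipeline used in this exercise; the helper name `normalize_tweet` and the placeholder tokens `<user>` and `<url>` are assumptions chosen for the example.

```python
import re

# Hypothetical helper, not part of the original pipeline.
URL_RE = re.compile(r"https?://\S+")
MENTION_RE = re.compile(r"@\w+")
REPEAT_RE = re.compile(r"(.)\1{2,}")  # any character repeated three or more times


def normalize_tweet(text):
    """Replace URLs and mentions with placeholders and shorten long character runs."""
    text = URL_RE.sub("<url>", text)
    text = MENTION_RE.sub("<user>", text)
    # Collapse runs such as "Hmmm" to two characters ("Hmm"); this also shortens "..." to ".."
    text = REPEAT_RE.sub(r"\1\1", text)
    # Normalize whitespace
    return " ".join(text.split())


print(normalize_tweet("@charviray Charlene my love. I miss you"))
print(normalize_tweet("Hmmm. http://www.djhero.com/ is down"))
```

Whether this kind of normalization helps depends on the model: a pre-trained tokenizer may already handle mentions and URLs reasonably, so it is worth validating on the dev set before adopting it.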

Public sentiment plays an increasingly important role in many fields, including movie reviews, consumer confidence, political elections, and stock trend prediction. Sentiment analysis of public media content is the foundation for analyzing public sentiment.

2. Basic Data Situation

The dataset is derived from a public dataset of tweets posted by Twitter users, with some fields adjusted. Treat the field descriptions provided in this exercise as authoritative.

The field information is referenced below:

- tweet_id (string): unique ID of the tweet, such as test_0, train_1024

- content (string): tweet text

- label (int): emotion category of the tweet, 13 emotions in total

The training set train.csv contains 30,000 rows with the columns tweet_id, content, label. The test set test.csv contains 10,000 rows with the columns tweet_id, content.

!head /home/mw/input/Twitter4903/train.csv

tweet_id,content,label

tweet_0,@tiffanylue i know i was listenin to bad habit earlier and i started freakin at his part =[,0

tweet_1,Layin n bed with a headache ughhhh...waitin on your call...,1

tweet_2,Funeral ceremony...gloomy friday...,1

tweet_3,wants to hang out with friends SOON!,2

tweet_4,"@dannycastillo We want to trade with someone who has Houston tickets, but no one will.",3

tweet_5,"I should be sleep, but im not! thinking about an old friend who I want. but he's married now. damn, & he wants me 2! scandalous!",1

tweet_6,Hmmm. http://www.djhero.com/ is down,4

tweet_7,@charviray Charlene my love. I miss you,1

tweet_8,cant fall asleep,3
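Some rows quote the content field because the tweet itself contains commas. A minimal sketch, using only Python's standard csv module on three rows copied from the output above, shows that such rows still parse into three fields. The variable names are illustrative.

```python
import csv
import io
from collections import Counter

# Three rows copied from the train.csv preview; the second one has a quoted field
sample = '''tweet_id,content,label
tweet_1,Layin n bed with a headache ughhhh...waitin on your call...,1
tweet_4,"@dannycastillo We want to trade with someone who has Houston tickets, but no one will.",3
tweet_7,@charviray Charlene my love. I miss you,1
'''

rows = list(csv.DictReader(io.StringIO(sample)))

# Count how many examples carry each label
label_counts = Counter(int(row["label"]) for row in rows)

print(rows[1]["content"])
print(label_counts)
```

The same counting step, applied to the full training set, is a quick way to see how unevenly the 13 labels are distributed.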

!head /home/mw/input/Twitter4903/test.csv

tweet_id,content

tweet_0,Re-pinging @ghostridah14: why didn't you go to prom? BC my bf didn't like my friends

tweet_1,@kelcouch I'm sorry at least it's Friday?

tweet_2,The storm is here and the electricity is gone

tweet_3,So sleepy again and it's not even that late. I fail once again.

tweet_4,"Wondering why I'm awake at 7am,writing a new song,plotting my evil secret plots muahahaha...oh damn it,not secret anymore"

tweet_5,I ate Something I don't know what it is... Why do I keep Telling things about food

tweet_6,so tired and i think i'm definitely going to get an ear infection. going to bed "early" for once.

tweet_7,It is so annoying when she starts typing on her computer in the middle of the night!

tweet_8,Screw you @davidbrussee! I only have 3 weeks...

!head /home/mw/input/Twitter4903/submission.csv

tweet_id,label

tweet_0,0

tweet_1,0

tweet_2,0

tweet_3,0

tweet_4,0

tweet_5,0

tweet_6,0

tweet_7,0

tweet_8,0

3. Data Set Definition

1. Environmental preparation

# Environment preparation (a GPU environment is recommended for speed: pip install paddlepaddle-gpu)
!pip install paddlepaddle
!pip install -U paddlenlp

2. Get the maximum length of a sentence

# Load the data with pandas and check the maximum text length

import pandas as pd

train = pd.read_csv('/home/mw/input/Twitter4903/train.csv')

test = pd.read_csv('/home/mw/input/Twitter4903/test.csv')

sub = pd.read_csv('/home/mw/input/Twitter4903/submission.csv')

print('Maximum Content Length %d'%(max(train['content'].str.len())))

Maximum Content Length 166
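The maximum alone can be dominated by a few outliers, so it can help to look at the spread of lengths before fixing max_seq_length. The following is a rough sketch using only the standard library. The sample texts are taken from the data preview, and the helper name `length_summary` is an assumption for illustration. Note that these are character counts, whereas max_seq_length is measured in tokens.

```python
import statistics


def length_summary(texts):
    """Return the minimum, median, and maximum character length of the texts."""
    lengths = sorted(len(t) for t in texts)
    return {
        "min": lengths[0],
        "median": statistics.median(lengths),
        "max": lengths[-1],
    }


samples = [
    "cant fall asleep",
    "Funeral ceremony...gloomy friday...",
    "wants to hang out with friends SOON!",
]
summary = length_summary(samples)
print(summary)
```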

3. Define datasets

# Define the read function
def read(pd_data):
    for index, item in pd_data.iterrows():
        yield {'text': item['content'], 'label': item['label'], 'qid': item['tweet_id'].strip('tweet_')}
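One caveat about the read function: str.strip('tweet_') removes any leading and trailing characters that belong to the set {t, w, e, _}, not the literal prefix. It works for IDs such as tweet_1024 because digits are not in that set, but it would mangle other prefixes. A hedged alternative, using the hypothetical helper name `extract_qid`, splits on the last underscore instead.

```python
def extract_qid(tweet_id):
    """Return the part after the last underscore, e.g. 'tweet_1024' -> '1024'."""
    return tweet_id.rsplit("_", 1)[-1]


# strip() works here only because digits are not in the stripped character set
print("tweet_1024".strip("tweet_"))
# With a different prefix, stripping stops at 's' and leaves part of the prefix behind
print("test_0".strip("tweet_"))
# Splitting on the underscore does not depend on the prefix
print(extract_qid("test_0"))
```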

# Split into training and validation sets
from paddle.io import Dataset, Subset
from paddlenlp.datasets import MapDataset
from paddlenlp.datasets import load_dataset

dataset = load_dataset(read, pd_data=train, lazy=False)
dev_ds = Subset(dataset=dataset, indices=[i for i in range(len(dataset)) if i % 5 == 1])
train_ds = Subset(dataset=dataset, indices=[i for i in range(len(dataset)) if i % 5 != 1])

# View training set

for i in range(5):
    print(train_ds[i])

{'text': '@tiffanylue i know i was listenin to bad habit earlier and i started freakin at his part =[', 'label': 0, 'qid': '0'}

{'text': 'Funeral ceremony...gloomy friday...', 'label': 1, 'qid': '2'}

{'text': 'wants to hang out with friends SOON!', 'label': 2, 'qid': '3'}

{'text': '@dannycastillo We want to trade with someone who has Houston tickets, but no one will.', 'label': 3, 'qid': '4'}

{'text': "I should be sleep, but im not! thinking about an old friend who I want. but he's married now. damn, & he wants me 2! scandalous!", 'label': 1, 'qid': '5'}

# Convert to MapDataset type
train_ds = MapDataset(train_ds)
dev_ds = MapDataset(dev_ds)
print(len(train_ds))
print(len(dev_ds))

24000 6000
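The split rule keeps every example whose index modulo 5 equals 1 for validation, which gives an 80/20 split. The arithmetic can be checked without Paddle. The sketch below works on plain index lists and assumes 30,000 rows in total (24,000 + 6,000, matching the sizes printed above).

```python
n = 30000  # total number of training rows (24000 + 6000)

# Same index rule as the Subset calls: indices with i % 5 == 1 go to validation
dev_idx = [i for i in range(n) if i % 5 == 1]
train_idx = [i for i in range(n) if i % 5 != 1]

print(len(train_idx), len(dev_idx))
print(train_idx[:5])
```

The first five training indices skip index 1, which is consistent with the qid values 0, 2, 3, 4, 5 shown in the training set preview.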

4. Model Selection

In recent years, many studies have shown that pre-trained models (PTMs) trained on large-scale corpora can learn general-purpose language representations, which benefit downstream NLP tasks and avoid training models from scratch. With growing computing power, the emergence of deep models (such as the Transformer), and improved training techniques, PTMs have continued to develop, evolving from shallow to deep architectures.

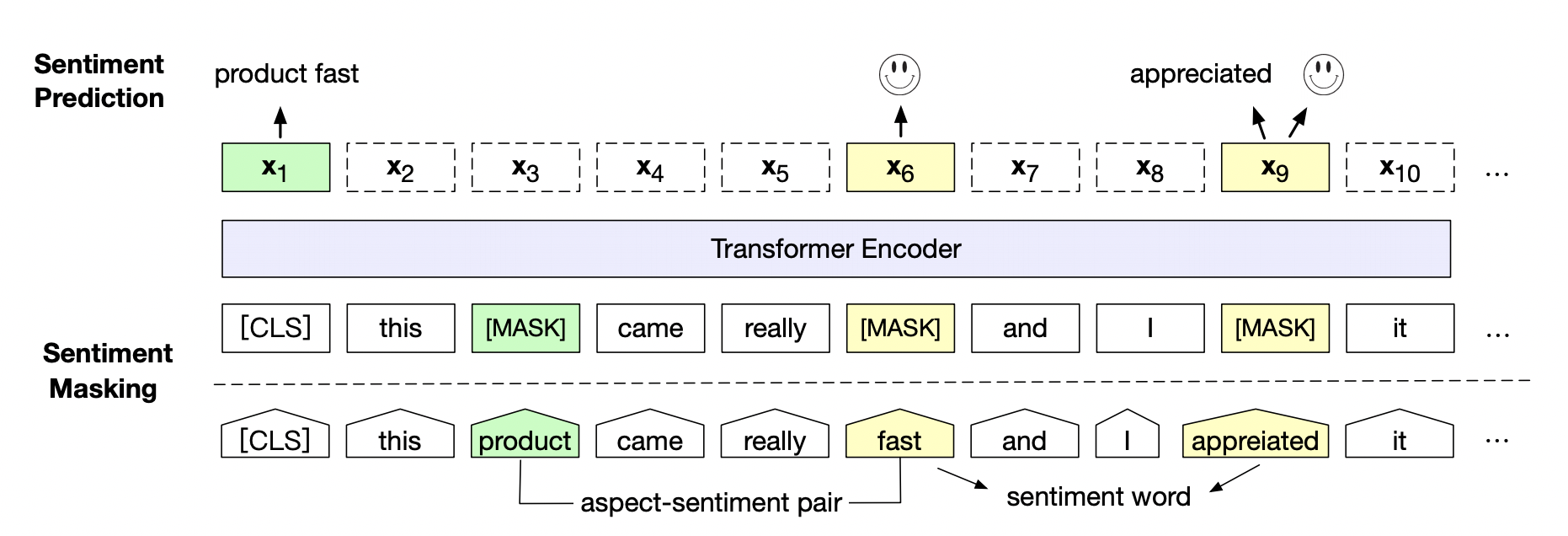

SKEP (Sentiment Knowledge Enhanced Pre-training for Sentiment Analysis) is a sentiment-knowledge-enhanced pre-training model that surpassed the previous state of the art on 14 typical Chinese and English sentiment analysis tasks; the work was accepted at ACL 2020. SKEP is a sentiment pre-training algorithm proposed by the Baidu research team. It uses unsupervised methods to automatically mine sentiment knowledge and then uses that knowledge to build pre-training objectives, so that the model learns to understand sentiment semantics. SKEP provides a unified and powerful sentiment representation for a wide range of sentiment analysis tasks.

Paper: https://arxiv.org/abs/2005.05635

The Baidu research team further validated the SKEP sentiment pre-training model on three typical sentiment analysis tasks: sentence-level sentiment classification, aspect-level sentiment classification, and opinion role labeling.

For the detailed experimental results, see: https://github.com/baidu/Senta#skep

PaddleNLP has implemented the SKEP pre-training model, which can be loaded in a single line of code.

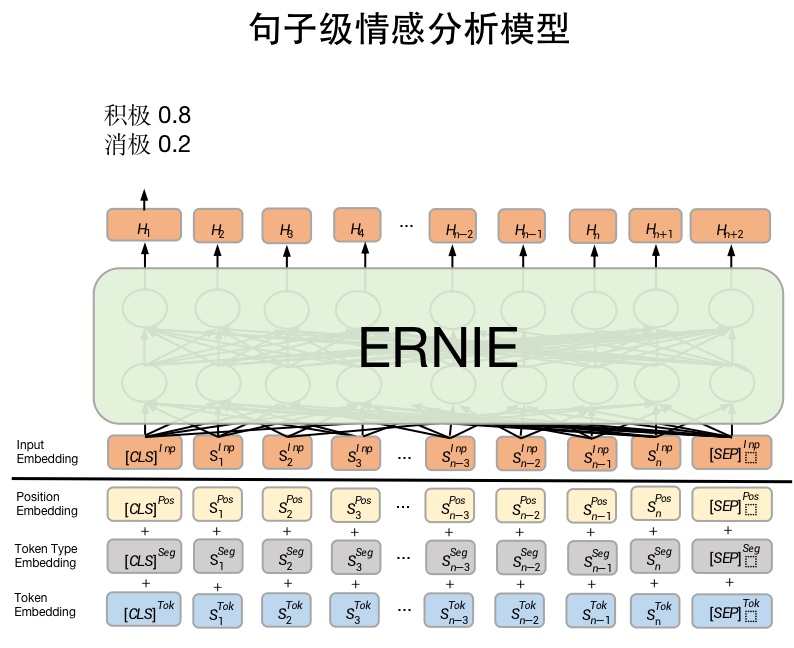

The sentence-level sentiment analysis model is SkepForSequenceClassification, a general-purpose model for fine-tuning SKEP on text classification. It first extracts the semantic features of a sentence with SKEP and then classifies them.

!pip install regex

Looking in indexes: https://mirror.baidu.com/pypi/simple/ Requirement already satisfied: regex in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (2021.8.28)

1. SKEP Model Loading

SkepForSequenceClassification can be used for sentence-level and target-level sentiment analysis tasks. It obtains a representation of the input text from the pre-trained SKEP model and then classifies that representation.

- pretrained_model_name_or_path: Model name. Supports "skep_ernie_1.0_large_ch" and "skep_ernie_2.0_large_en".

  - "skep_ernie_1.0_large_ch": the Chinese pre-trained model, obtained by continuing SKEP pre-training from ernie_1.0_large_ch on a large amount of Chinese data;

  - "skep_ernie_2.0_large_en": the English pre-trained model, obtained by continuing SKEP pre-training from ernie_2.0_large_en on a large amount of English data;

- num_classes: Number of dataset classification classes.

For more details on the SKEP model implementation, see: https://github.com/PaddlePaddle/PaddleNLP/tree/develop/paddlenlp/transformers/skep

from paddlenlp.transformers import SkepForSequenceClassification, SkepTokenizer

# Specify the model name to load the model in one step
model = SkepForSequenceClassification.from_pretrained(pretrained_model_name_or_path="skep_ernie_2.0_large_en", num_classes=13)
# Likewise, load the matching tokenizer by model name; it processes text data, e.g. splitting text into tokens and mapping them to token ids
tokenizer = SkepTokenizer.from_pretrained(pretrained_model_name_or_path="skep_ernie_2.0_large_en")

[2021-09-16 10:11:58,665] [ INFO] - Already cached /home/aistudio/.paddlenlp/models/skep_ernie_2.0_large_en/skep_ernie_2.0_large_en.pdparams

[2021-09-16 10:12:10,133] [ INFO] - Found /home/aistudio/.paddlenlp/models/skep_ernie_2.0_large_en/skep_ernie_2.0_large_en.vocab.txt

2. Introducing VisualDL

from visualdl import LogWriter

writer = LogWriter("./log")

3. Data Processing

The SKEP model processes text at word granularity, and the SkepTokenizer built into PaddleNLP handles this processing in one step.

def convert_example(example,
                    tokenizer,
                    max_seq_length=512,
                    is_test=False):
    # Convert the raw example into a model-readable format; encoded_inputs is a dict that contains fields such as input_ids and token_type_ids
    encoded_inputs = tokenizer(
        text=example["text"], max_seq_len=max_seq_length)

    # input_ids: the vocabulary ids of the tokens the text is split into
    input_ids = encoded_inputs["input_ids"]
    # token_type_ids: whether the current token belongs to sentence 1 or sentence 2, i.e. the segment ids shown in the figure above
    token_type_ids = encoded_inputs["token_type_ids"]

    if not is_test:
        # label: emotion category
        label = np.array([example["label"]], dtype="int64")
        return input_ids, token_type_ids, label
    else:
        # qid: the index of each example
        qid = np.array([example["qid"]], dtype="int64")
        return input_ids, token_type_ids, qid

def create_dataloader(dataset,
                      trans_fn=None,
                      mode='train',
                      batch_size=1,
                      batchify_fn=None):
    if trans_fn:
        dataset = dataset.map(trans_fn)

    # Shuffle only during training
    shuffle = mode == 'train'
    if mode == "train":
        sampler = paddle.io.DistributedBatchSampler(
            dataset=dataset, batch_size=batch_size, shuffle=shuffle)
    else:
        sampler = paddle.io.BatchSampler(
            dataset=dataset, batch_size=batch_size, shuffle=shuffle)
    dataloader = paddle.io.DataLoader(
        dataset, batch_sampler=sampler, collate_fn=batchify_fn)
    return dataloader

4. Definition of evaluation function

import numpy as np
import paddle

@paddle.no_grad()
def evaluate(model, criterion, metric, data_loader):
    # Switch to evaluation mode and start from a clean metric state
    model.eval()
    metric.reset()
    losses = []
    for batch in data_loader:
        input_ids, token_type_ids, labels = batch
        logits = model(input_ids, token_type_ids)
        loss = criterion(logits, labels)
        losses.append(loss.numpy())
        correct = metric.compute(logits, labels)
        metric.update(correct)
    accu = metric.accumulate()
    # print("eval loss: %.5f, accu: %.5f" % (np.mean(losses), accu))
    # Restore training mode and clear the metric before returning
    model.train()
    metric.reset()
    return np.mean(losses), accu
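For intuition, the accuracy returned by the evaluation function is the fraction of examples whose highest-scoring class matches the label. A minimal pure-Python sketch of that computation follows. It is not how paddle.metric.Accuracy is implemented internally, and the function name `top1_accuracy` and the toy logits are made up for illustration.

```python
def top1_accuracy(logits, labels):
    """Fraction of examples where the arg-max class equals the label."""
    correct = 0
    for scores, label in zip(logits, labels):
        # Index of the largest score is the predicted class
        predicted = max(range(len(scores)), key=scores.__getitem__)
        correct += int(predicted == label)
    return correct / len(labels)


# Toy example with 3 classes and 4 examples
toy_logits = [[0.1, 2.0, -1.0], [1.5, 0.2, 0.3], [0.0, 0.1, 0.9], [0.4, 0.3, 0.2]]
toy_labels = [1, 0, 1, 0]
toy_accuracy = top1_accuracy(toy_logits, toy_labels)
print(toy_accuracy)
```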

5. Definition of hyperparameters

Once you have defined the loss function, optimizer, and evaluation criteria, you can begin training.

Recommended hyperparameter settings:

- batch_size = 100

- max_seq_length = 166

- learning_rate = 4e-5

- epochs = 32

- warmup_proportion = 0.1

- weight_decay = 0.01

The batch_size and max_seq_length values can be adjusted to fit the GPU memory available at runtime.
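With these settings the schedule can be worked out by hand: 24,000 training examples at batch size 100 give 240 steps per epoch, so 32 epochs are 7,680 steps, of which the first 10% (768) are warmup. The sketch below computes these numbers together with a linear warmup followed by linear decay. It is a plain-Python approximation of this kind of schedule written for illustration, not PaddleNLP's implementation, and `lr_at` is a hypothetical helper.

```python
import math

train_size = 24000
batch_size = 100
epochs = 32
learning_rate = 4e-5
warmup_proportion = 0.1

steps_per_epoch = math.ceil(train_size / batch_size)
num_training_steps = steps_per_epoch * epochs
warmup_steps = int(num_training_steps * warmup_proportion)


def lr_at(step):
    """Learning rate at a given step: linear warmup, then linear decay to zero."""
    if step < warmup_steps:
        return learning_rate * step / warmup_steps
    remaining = num_training_steps - step
    return learning_rate * max(0.0, remaining / (num_training_steps - warmup_steps))


print(steps_per_epoch, num_training_steps, warmup_steps)
for step in (0, 384, 768, 4224, 7680):
    print(step, lr_at(step))
```

Changing batch_size changes steps_per_epoch and therefore both the total number of steps and the length of the warmup phase.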

import os

from functools import partial

import numpy as np

import paddle

import paddle.nn.functional as F

from paddlenlp.data import Stack, Tuple, Pad

# Batch data size

batch_size = 100

# Maximum text sequence length 166

max_seq_length = 166

# Define the maximum learning rate during training

learning_rate = 4e-5

# Training Rounds

epochs = 32

# Learning rate warmup proportion

warmup_proportion = 0.1

# Weight decay factor, similar to model regularization strategy, to avoid model overfitting

weight_decay = 0.01

# Convert the data into a model-readable format
trans_func = partial(
    convert_example,
    tokenizer=tokenizer,
    max_seq_length=max_seq_length)

# Assemble examples into batches:
# pad each text sequence to the maximum length in the batch,
# and stack the labels of the examples together
batchify_fn = lambda samples, fn=Tuple(
    Pad(axis=0, pad_val=tokenizer.pad_token_id),  # input_ids
    Pad(axis=0, pad_val=tokenizer.pad_token_type_id),  # token_type_ids
    Stack()  # labels
): [data for data in fn(samples)]

train_data_loader = create_dataloader(
    train_ds,
    mode='train',
    batch_size=batch_size,
    batchify_fn=batchify_fn,
    trans_fn=trans_func)
dev_data_loader = create_dataloader(
    dev_ds,
    mode='dev',
    batch_size=batch_size,
    batchify_fn=batchify_fn,
    trans_fn=trans_func)
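To make the batching step concrete: padding brings every sequence in a batch to the length of the longest one, and stacking collects the labels. The following is a minimal pure-Python sketch of that behavior, not the paddlenlp.data implementation. The function name `pad_batch`, the pad id 0, and the toy token ids are assumptions.

```python
def pad_batch(samples, pad_id=0):
    """Pad token id lists to the longest sequence in the batch and collect labels."""
    max_len = max(len(ids) for ids, _ in samples)
    input_ids = [ids + [pad_id] * (max_len - len(ids)) for ids, _ in samples]
    labels = [[label] for _, label in samples]
    return input_ids, labels


# Two toy examples of different lengths: (token ids, label)
toy_batch = [([101, 7, 8, 102], 1), ([101, 9, 102], 3)]
padded_ids, stacked_labels = pad_batch(toy_batch)
print(padded_ids)
print(stacked_labels)
```

Because padding is done per batch rather than to a global maximum, batches of short tweets use less memory than max_seq_length would suggest.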

# Define the learning rate schedule, optimizer, loss, and metric
from paddlenlp.transformers import LinearDecayWithWarmup
import time

num_training_steps = len(train_data_loader) * epochs
lr_scheduler = LinearDecayWithWarmup(learning_rate, num_training_steps, warmup_proportion)

# Apply weight decay to all parameters except bias and norm parameters
decay_params = [
    p.name for n, p in model.named_parameters()
    if not any(nd in n for nd in ["bias", "norm"])
]

# AdamW optimizer
optimizer = paddle.optimizer.AdamW(
    learning_rate=lr_scheduler,
    parameters=model.parameters(),
    weight_decay=weight_decay,
    apply_decay_param_fun=lambda x: x in decay_params)

criterion = paddle.nn.loss.CrossEntropyLoss()  # Cross-entropy loss function
metric = paddle.metric.Accuracy()  # Accuracy metric
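A quick sanity check on the loss: for a model that assigns equal probability to all 13 classes, the cross-entropy is ln(13), roughly 2.565, and the expected accuracy is 1/13, roughly 0.077. Early training values near these numbers indicate that the classifier is starting from chance level. The sketch computes cross-entropy from raw logits in plain Python; `cross_entropy` here is an illustrative helper, not the Paddle implementation.

```python
import math


def cross_entropy(logits, label):
    """Cross-entropy of one example, computed from raw logits."""
    # Log-sum-exp with the maximum subtracted for numerical stability
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum - logits[label]


num_classes = 13
# Equal logits correspond to a uniform prediction over the classes
uniform_loss = cross_entropy([0.0] * num_classes, label=0)
chance_accuracy = 1 / num_classes
print(round(uniform_loss, 3), round(chance_accuracy, 3))
```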

5. Training

Train the model and save the best checkpoint.

# Start training
global_step = 0
best_val_acc = 0
tic_train = time.time()
save_dir = "best_checkpoint"

for epoch in range(1, epochs + 1):
    for step, batch in enumerate(train_data_loader, start=1):
        input_ids, token_type_ids, labels = batch
        # Feed the data to the model
        logits = model(input_ids, token_type_ids)
        # Compute the loss
        loss = criterion(logits, labels)
        # Predicted class probabilities
        probs = F.softmax(logits, axis=1)
        # Update the running training accuracy
        correct = metric.compute(probs, labels)
        metric.update(correct)
        acc = metric.accumulate()

        global_step += 1
        if global_step % 10 == 0:
            print(
                "global step %d, epoch: %d, batch: %d, loss: %.5f, accu: %.5f, speed: %.2f step/s"
                % (global_step, epoch, step, loss, acc,
                   10 / (time.time() - tic_train)))
            tic_train = time.time()

        # Backpropagate and update the parameters
        loss.backward()
        optimizer.step()
        lr_scheduler.step()
        optimizer.clear_grad()

        # NOTE: the original condition ended in a dangling `and`; its second clause
        # is not recoverable, so only the step check is kept here.
        if global_step % 100 == 0:
            # Evaluate the current model on the dev set
            eval_loss, eval_accu = evaluate(model, criterion, metric, dev_data_loader)
            print("eval on dev loss: {:.8}, accu: {:.8}".format(eval_loss, eval_accu))

            # Log eval metrics to VisualDL
            writer.add_scalar(tag="eval/loss", step=global_step, value=eval_loss)
            writer.add_scalar(tag="eval/acc", step=global_step, value=eval_accu)
            # Log train metrics to VisualDL
            writer.add_scalar(tag="train/loss", step=global_step, value=loss)
            writer.add_scalar(tag="train/acc", step=global_step, value=acc)

            # Save the model whenever dev accuracy improves
            if eval_accu > best_val_acc:
                os.makedirs(save_dir, exist_ok=True)
                best_val_acc = eval_accu
                print(f"Model saved at step {global_step}, best eval accuracy is {best_val_acc:.8f}!")
                save_param_path = os.path.join(save_dir, 'best_model.pdparams')
                paddle.save(model.state_dict(), save_param_path)
                with open(os.path.join(save_dir, 'best_model.txt'), 'w', encoding='utf-8') as fh:
                    fh.write(f"Model saved at step {global_step}, best eval accuracy is {best_val_acc:.8f}!")

global step 10, epoch: 1, batch: 10, loss: 2.64415, accu: 0.08400, speed: 0.96 step/s

global step 20, epoch: 1, batch: 20, loss: 2.48083, accu: 0.09050, speed: 0.98 step/s

global step 30, epoch: 1, batch: 30, loss: 2.36845, accu: 0.10933, speed: 0.98 step/s

global step 40, epoch: 1, batch: 40, loss: 2.24933, accu: 0.13750, speed: 1.00 step/s

global step 50, epoch: 1, batch: 50, loss: 2.14947, accu: 0.15380, speed: 0.97 step/s

global step 60, epoch: 1, batch: 60, loss: 2.03459, accu: 0.17100, speed: 0.96 step/s

global step 70, epoch: 1, batch: 70, loss: 2.23222, accu: 0.18414, speed: 1.01 step/s

global step 80, epoch: 1, batch: 80, loss: 2.02445, accu: 0.19787, speed: 0.98 step/s

global step 90, epoch: 1, batch: 90, loss: 1.99580, accu: 0.21122, speed: 0.99 step/s

global step 100, epoch: 1, batch: 100, loss: 1.85458, accu: 0.22500, speed: 0.99 step/s

global step 110, epoch: 1, batch: 110, loss: 2.05261, accu: 0.23627, speed: 0.90 step/s

global step 120, epoch: 1, batch: 120, loss: 1.84400, accu: 0.24592, speed: 0.99 step/s

global step 130, epoch: 1, batch: 130, loss: 1.92655, accu: 0.25562, speed: 0.92 step/s

global step 140, epoch: 1, batch: 140, loss: 1.99768, accu: 0.26236, speed: 0.98 step/s

global step 150, epoch: 1, batch: 150, loss: 1.85960, accu: 0.26867, speed: 0.92 step/s

global step 160, epoch: 1, batch: 160, loss: 1.83365, accu: 0.27381, speed: 1.00 step/s

global step 170, epoch: 1, batch: 170, loss: 1.96721, accu: 0.27912, speed: 1.00 step/s

global step 180, epoch: 1, batch: 180, loss: 1.90745, accu: 0.28483, speed: 0.97 step/s

global step 190, epoch: 1, batch: 190, loss: 1.84279, accu: 0.28989, speed: 1.01 step/s

global step 200, epoch: 1, batch: 200, loss: 1.86470, accu: 0.29455, speed: 1.01 step/s

global step 210, epoch: 1, batch: 210, loss: 1.93000, accu: 0.29814, speed: 1.01 step/s

global step 220, epoch: 1, batch: 220, loss: 1.73139, accu: 0.30259, speed: 0.99 step/s

global step 230, epoch: 1, batch: 230, loss: 1.64350, accu: 0.30657, speed: 0.97 step/s

global step 240, epoch: 1, batch: 240, loss: 1.85946, accu: 0.30896, speed: 0.98 step/s

global step 250, epoch: 2, batch: 10, loss: 1.59776, accu: 0.31360, speed: 0.97 step/s

global step 260, epoch: 2, batch: 20, loss: 1.68481, accu: 0.31696, speed: 0.97 step/s

global step 270, epoch: 2, batch: 30, loss: 1.57796, accu: 0.32022, speed: 1.01 step/s

global step 280, epoch: 2, batch: 40, loss: 1.62955, accu: 0.32379, speed: 0.97 step/s

global step 290, epoch: 2, batch: 50, loss: 1.71856, accu: 0.32717, speed: 0.96 step/s

global step 300, epoch: 2, batch: 60, loss: 1.83410, accu: 0.33013, speed: 1.00 step/s

global step 310, epoch: 2, batch: 70, loss: 1.71729, accu: 0.33274, speed: 0.95 step/s

global step 320, epoch: 2, batch: 80, loss: 1.87017, accu: 0.33466, speed: 0.94 step/s

global step 330, epoch: 2, batch: 90, loss: 1.63287, accu: 0.33624, speed: 0.98 step/s

global step 340, epoch: 2, batch: 100, loss: 1.63449, accu: 0.33812, speed: 1.01 step/s

global step 350, epoch: 2, batch: 110, loss: 1.88042, accu: 0.33986, speed: 0.86 step/s

global step 360, epoch: 2, batch: 120, loss: 1.86673, accu: 0.34186, speed: 0.96 step/s

global step 370, epoch: 2, batch: 130, loss: 1.79094, accu: 0.34368, speed: 0.95 step/s

global step 380, epoch: 2, batch: 140, loss: 1.90380, accu: 0.34532, speed: 1.00 step/s

global step 390, epoch: 2, batch: 150, loss: 2.00253, accu: 0.34741, speed: 0.99 step/s

global step 400, epoch: 2, batch: 160, loss: 1.78489, accu: 0.34863, speed: 0.95 step/s

global step 410, epoch: 2, batch: 170, loss: 1.81632, accu: 0.35005, speed: 1.01 step/s

global step 420, epoch: 2, batch: 180, loss: 1.87907, accu: 0.35057, speed: 0.86 step/s

global step 430, epoch: 2, batch: 190, loss: 1.70331, accu: 0.35184, speed: 0.95 step/s

global step 440, epoch: 2, batch: 200, loss: 1.73915, accu: 0.35343, speed: 0.89 step/s

global step 450, epoch: 2, batch: 210, loss: 1.82473, accu: 0.35509, speed: 0.98 step/s

global step 460, epoch: 2, batch: 220, loss: 1.67623, accu: 0.35635, speed: 0.99 step/s

global step 470, epoch: 2, batch: 230, loss: 1.66429, accu: 0.35698, speed: 0.97 step/s

global step 480, epoch: 2, batch: 240, loss: 2.00171, accu: 0.35852, speed: 1.00 step/s

global step 490, epoch: 3, batch: 10, loss: 1.46232, accu: 0.36037, speed: 0.95 step/s

global step 500, epoch: 3, batch: 20, loss: 1.56414, accu: 0.36330, speed: 1.01 step/s

global step 510, epoch: 3, batch: 30, loss: 1.82421, accu: 0.36506, speed: 0.98 step/s

global step 520, epoch: 3, batch: 40, loss: 1.57123, accu: 0.36715, speed: 0.94 step/s

global step 530, epoch: 3, batch: 50, loss: 1.47920, accu: 0.36958, speed: 1.00 step/s

global step 540, epoch: 3, batch: 60, loss: 1.62726, accu: 0.37078, speed: 0.99 step/s

global step 550, epoch: 3, batch: 70, loss: 1.50935, accu: 0.37296, speed: 0.92 step/s

global step 560, epoch: 3, batch: 80, loss: 1.63874, accu: 0.37498, speed: 0.98 step/s

global step 570, epoch: 3, batch: 90, loss: 1.50575, accu: 0.37649, speed: 1.02 step/s

global step 580, epoch: 3, batch: 100, loss: 1.90150, accu: 0.37738, speed: 0.95 step/s

global step 590, epoch: 3, batch: 110, loss: 1.65904, accu: 0.37903, speed: 0.96 step/s

global step 600, epoch: 3, batch: 120, loss: 1.63923, accu: 0.37992, speed: 0.99 step/s

global step 610, epoch: 3, batch: 130, loss: 1.48320, accu: 0.38195, speed: 0.99 step/s

global step 620, epoch: 3, batch: 140, loss: 1.76689, accu: 0.38279, speed: 0.92 step/s

global step 630, epoch: 3, batch: 150, loss: 1.73281, accu: 0.38379, speed: 1.00 step/s

global step 640, epoch: 3, batch: 160, loss: 1.43865, accu: 0.38480, speed: 0.98 step/s

global step 650, epoch: 3, batch: 170, loss: 1.63121, accu: 0.38577, speed: 1.01 step/s

global step 660, epoch: 3, batch: 180, loss: 1.62170, accu: 0.38667, speed: 0.95 step/s

global step 670, epoch: 3, batch: 190, loss: 1.60727, accu: 0.38755, speed: 0.99 step/s

global step 680, epoch: 3, batch: 200, loss: 1.55675, accu: 0.38831, speed: 1.01 step/s

global step 690, epoch: 3, batch: 210, loss: 1.65629, accu: 0.38913, speed: 1.03 step/s

global step 700, epoch: 3, batch: 220, loss: 1.66252, accu: 0.38986, speed: 0.97 step/s

global step 710, epoch: 3, batch: 230, loss: 1.69281, accu: 0.39049, speed: 0.95 step/s

global step 730, epoch: 4, batch: 10, loss: 1.11771, accu: 0.39342, speed: 0.92 step/s

global step 740, epoch: 4, batch: 20, loss: 1.45022, accu: 0.39574, speed: 1.00 step/s

global step 750, epoch: 4, batch: 30, loss: 1.26379, accu: 0.39795, speed: 0.97 step/s

global step 760, epoch: 4, batch: 40, loss: 1.36250, accu: 0.39983, speed: 0.97 step/s

global step 770, epoch: 4, batch: 50, loss: 1.60295, accu: 0.40148, speed: 0.93 step/s

global step 780, epoch: 4, batch: 60, loss: 1.21330, accu: 0.40344, speed: 0.92 step/s

global step 790, epoch: 4, batch: 70, loss: 1.51777, accu: 0.40513, speed: 0.98 step/s

global step 800, epoch: 4, batch: 80, loss: 1.23135, accu: 0.40697, speed: 1.02 step/s

global step 810, epoch: 4, batch: 90, loss: 1.46843, accu: 0.40854, speed: 0.92 step/s

global step 820, epoch: 4, batch: 100, loss: 1.34306, accu: 0.41028, speed: 1.01 step/s

global step 830, epoch: 4, batch: 110, loss: 1.33455, accu: 0.41189, speed: 0.99 step/s

global step 840, epoch: 4, batch: 120, loss: 1.59838, accu: 0.41326, speed: 1.00 step/s

global step 850, epoch: 4, batch: 130, loss: 1.30507, accu: 0.41486, speed: 1.02 step/s

global step 860, epoch: 4, batch: 140, loss: 1.36544, accu: 0.41640, speed: 0.99 step/s

global step 870, epoch: 4, batch: 150, loss: 1.44319, accu: 0.41760, speed: 0.97 step/s

global step 880, epoch: 4, batch: 160, loss: 1.46359, accu: 0.41899, speed: 1.02 step/s

global step 890, epoch: 4, batch: 170, loss: 1.50019, accu: 0.42052, speed: 0.99 step/s

global step 900, epoch: 4, batch: 180, loss: 1.30336, accu: 0.42167, speed: 0.98 step/s

global step 910, epoch: 4, batch: 190, loss: 1.25850, accu: 0.42281, speed: 0.97 step/s

global step 920, epoch: 4, batch: 200, loss: 1.41024, accu: 0.42375, speed: 1.01 step/s

global step 930, epoch: 4, batch: 210, loss: 1.41329, accu: 0.42478, speed: 0.98 step/s

global step 940, epoch: 4, batch: 220, loss: 1.67653, accu: 0.42569, speed: 1.01 step/s

global step 950, epoch: 4, batch: 230, loss: 1.50050, accu: 0.42694, speed: 1.01 step/s

global step 960, epoch: 4, batch: 240, loss: 1.41239, accu: 0.42789, speed: 0.96 step/s

global step 970, epoch: 5, batch: 10, loss: 0.95649, accu: 0.43040, speed: 0.85 step/s

global step 980, epoch: 5, batch: 20, loss: 0.96014, accu: 0.43315, speed: 0.96 step/s

global step 990, epoch: 5, batch: 30, loss: 1.14329, accu: 0.43565, speed: 1.03 step/s

global step 1000, epoch: 5, batch: 40, loss: 0.99162, accu: 0.43807, speed: 1.00 step/s

global step 1010, epoch: 5, batch: 50, loss: 1.00752, accu: 0.44064, speed: 0.98 step/s

global step 1020, epoch: 5, batch: 60, loss: 0.84584, accu: 0.44330, speed: 0.97 step/s

global step 1030, epoch: 5, batch: 70, loss: 0.90016, accu: 0.44553, speed: 0.96 step/s

global step 1040, epoch: 5, batch: 80, loss: 1.16934, accu: 0.44787, speed: 1.00 step/s

global step 1050, epoch: 5, batch: 90, loss: 1.27199, accu: 0.45010, speed: 0.97 step/s

global step 1060, epoch: 5, batch: 100, loss: 1.05648, accu: 0.45210, speed: 1.03 step/s

global step 1070, epoch: 5, batch: 110, loss: 1.12440, accu: 0.45396, speed: 0.99 step/s

global step 1080, epoch: 5, batch: 120, loss: 1.01042, accu: 0.45562, speed: 1.00 step/s

global step 1090, epoch: 5, batch: 130, loss: 1.02570, accu: 0.45746, speed: 1.00 step/s

global step 1100, epoch: 5, batch: 140, loss: 1.18459, accu: 0.45929, speed: 1.01 step/s

global step 1110, epoch: 5, batch: 150, loss: 1.08677, accu: 0.46113, speed: 0.96 step/s

global step 1120, epoch: 5, batch: 160, loss: 1.08068, accu: 0.46296, speed: 0.94 step/s

global step 1130, epoch: 5, batch: 170, loss: 1.11996, accu: 0.46470, speed: 0.93 step/s

global step 1140, epoch: 5, batch: 180, loss: 0.98735, accu: 0.46618, speed: 0.96 step/s

global step 1150, epoch: 5, batch: 190, loss: 0.83020, accu: 0.46777, speed: 0.97 step/s

global step 1160, epoch: 5, batch: 200, loss: 1.02403, accu: 0.46910, speed: 1.01 step/s

global step 1170, epoch: 5, batch: 210, loss: 1.10786, accu: 0.47059, speed: 1.03 step/s

global step 1180, epoch: 5, batch: 220, loss: 1.17426, accu: 0.47188, speed: 1.01 step/s

global step 1190, epoch: 5, batch: 230, loss: 0.99592, accu: 0.47337, speed: 1.01 step/s

global step 1200, epoch: 5, batch: 240, loss: 1.22779, accu: 0.47463, speed: 0.97 step/s

global step 1210, epoch: 6, batch: 10, loss: 0.79520, accu: 0.47740, speed: 0.98 step/s

global step 1220, epoch: 6, batch: 20, loss: 0.62312, accu: 0.48019, speed: 0.92 step/s

global step 1230, epoch: 6, batch: 30, loss: 0.72736, accu: 0.48255, speed: 1.00 step/s

global step 1240, epoch: 6, batch: 40, loss: 0.71147, accu: 0.48499, speed: 0.96 step/s

global step 1250, epoch: 6, batch: 50, loss: 0.95492, accu: 0.48750, speed: 0.92 step/s

global step 1260, epoch: 6, batch: 60, loss: 0.70950, accu: 0.48984, speed: 1.00 step/s

global step 1270, epoch: 6, batch: 70, loss: 0.54798, accu: 0.49219, speed: 0.97 step/s

global step 1280, epoch: 6, batch: 80, loss: 0.88758, accu: 0.49453, speed: 1.00 step/s

global step 1290, epoch: 6, batch: 90, loss: 0.57468, accu: 0.49690, speed: 0.97 step/s

global step 1300, epoch: 6, batch: 100, loss: 0.74073, accu: 0.49918, speed: 0.99 step/s

global step 1310, epoch: 6, batch: 110, loss: 0.68409, accu: 0.50125, speed: 0.97 step/s

global step 1320, epoch: 6, batch: 120, loss: 0.60330, accu: 0.50342, speed: 0.98 step/s

global step 1330, epoch: 6, batch: 130, loss: 0.60949, accu: 0.50525, speed: 0.98 step/s

global step 1340, epoch: 6, batch: 140, loss: 0.80353, accu: 0.50725, speed: 1.02 step/s

global step 1350, epoch: 6, batch: 150, loss: 0.59309, accu: 0.50919, speed: 0.98 step/s

global step 1360, epoch: 6, batch: 160, loss: 0.80102, accu: 0.51124, speed: 1.02 step/s

global step 1370, epoch: 6, batch: 170, loss: 0.91419, accu: 0.51322, speed: 0.98 step/s

global step 1380, epoch: 6, batch: 180, loss: 0.80532, accu: 0.51507, speed: 0.95 step/s

global step 1390, epoch: 6, batch: 190, loss: 0.82733, accu: 0.51694, speed: 0.98 step/s

global step 1400, epoch: 6, batch: 200, loss: 0.73622, accu: 0.51882, speed: 1.00 step/s

global step 1410, epoch: 6, batch: 210, loss: 0.69222, accu: 0.52035, speed: 0.99 step/s

global step 1420, epoch: 6, batch: 220, loss: 0.64658, accu: 0.52210, speed: 1.01 step/s

global step 1430, epoch: 6, batch: 230, loss: 0.60616, accu: 0.52357, speed: 0.93 step/s

global step 1440, epoch: 6, batch: 240, loss: 0.79926, accu: 0.52510, speed: 1.00 step/s

global step 1450, epoch: 7, batch: 10, loss: 0.32951, accu: 0.52747, speed: 0.99 step/s

global step 1460, epoch: 7, batch: 20, loss: 0.51243, accu: 0.52978, speed: 0.99 step/s

global step 1470, epoch: 7, batch: 30, loss: 0.29857, accu: 0.53229, speed: 1.01 step/s

global step 1480, epoch: 7, batch: 40, loss: 0.36025, accu: 0.53460, speed: 0.98 step/s

global step 1490, epoch: 7, batch: 50, loss: 0.32897, accu: 0.53702, speed: 0.99 step/s

global step 1500, epoch: 7, batch: 60, loss: 0.50516, accu: 0.53922, speed: 0.94 step/s

global step 1510, epoch: 7, batch: 70, loss: 0.47188, accu: 0.54139, speed: 1.02 step/s

global step 1520, epoch: 7, batch: 80, loss: 0.34037, accu: 0.54367, speed: 0.99 step/s

global step 1530, epoch: 7, batch: 90, loss: 0.46746, accu: 0.54590, speed: 1.00 step/s

global step 1540, epoch: 7, batch: 100, loss: 0.39657, accu: 0.54804, speed: 0.98 step/s

global step 1550, epoch: 7, batch: 110, loss: 0.26162, accu: 0.55014, speed: 0.87 step/s

global step 1560, epoch: 7, batch: 120, loss: 0.39623, accu: 0.55212, speed: 0.97 step/s

global step 1570, epoch: 7, batch: 130, loss: 0.35755, accu: 0.55408, speed: 0.95 step/s

global step 1580, epoch: 7, batch: 140, loss: 0.55955, accu: 0.55597, speed: 0.94 step/s

global step 1590, epoch: 7, batch: 150, loss: 0.42387, accu: 0.55794, speed: 0.93 step/s

global step 1600, epoch: 7, batch: 160, loss: 0.61937, accu: 0.55976, speed: 0.92 step/s

global step 1610, epoch: 7, batch: 170, loss: 0.49945, accu: 0.56151, speed: 0.93 step/s

global step 1620, epoch: 7, batch: 180, loss: 0.43885, accu: 0.56331, speed: 0.92 step/s

global step 1630, epoch: 7, batch: 190, loss: 0.46327, accu: 0.56512, speed: 0.95 step/s

global step 1640, epoch: 7, batch: 200, loss: 0.28069, accu: 0.56698, speed: 0.99 step/s

global step 1650, epoch: 7, batch: 210, loss: 0.48011, accu: 0.56877, speed: 0.95 step/s

global step 1660, epoch: 7, batch: 220, loss: 0.80093, accu: 0.57042, speed: 0.98 step/s

global step 1670, epoch: 7, batch: 230, loss: 0.47589, accu: 0.57219, speed: 1.03 step/s

global step 1680, epoch: 7, batch: 240, loss: 0.47784, accu: 0.57394, speed: 0.98 step/s

global step 1690, epoch: 8, batch: 10, loss: 0.26370, accu: 0.57604, speed: 0.98 step/s

global step 1700, epoch: 8, batch: 20, loss: 0.20907, accu: 0.57805, speed: 0.99 step/s

global step 1710, epoch: 8, batch: 30, loss: 0.14743, accu: 0.58009, speed: 0.95 step/s

global step 1720, epoch: 8, batch: 40, loss: 0.24629, accu: 0.58206, speed: 0.96 step/s

global step 1730, epoch: 8, batch: 50, loss: 0.17558, accu: 0.58400, speed: 0.95 step/s

global step 1740, epoch: 8, batch: 60, loss: 0.21151, accu: 0.58598, speed: 1.01 step/s

global step 1750, epoch: 8, batch: 70, loss: 0.18187, accu: 0.58795, speed: 1.01 step/s

global step 1760, epoch: 8, batch: 80, loss: 0.42727, accu: 0.58976, speed: 0.96 step/s

global step 1770, epoch: 8, batch: 90, loss: 0.21492, accu: 0.59163, speed: 1.00 step/s

global step 1780, epoch: 8, batch: 100, loss: 0.17096, accu: 0.59342, speed: 0.91 step/s

global step 1790, epoch: 8, batch: 110, loss: 0.31054, accu: 0.59527, speed: 0.99 step/s

global step 1800, epoch: 8, batch: 120, loss: 0.25554, accu: 0.59717, speed: 0.93 step/s

global step 1810, epoch: 8, batch: 130, loss: 0.26881, accu: 0.59887, speed: 1.00 step/s

global step 1820, epoch: 8, batch: 140, loss: 0.17372, accu: 0.60064, speed: 0.93 step/s

global step 1830, epoch: 8, batch: 150, loss: 0.32673, accu: 0.60229, speed: 1.01 step/s

global step 1840, epoch: 8, batch: 160, loss: 0.20629, accu: 0.60397, speed: 0.98 step/s

global step 1850, epoch: 8, batch: 170, loss: 0.23317, accu: 0.60565, speed: 1.01 step/s

global step 1860, epoch: 8, batch: 180, loss: 0.24937, accu: 0.60724, speed: 0.98 step/s

global step 1870, epoch: 8, batch: 190, loss: 0.39971, accu: 0.60885, speed: 0.99 step/s

global step 1880, epoch: 8, batch: 200, loss: 0.33303, accu: 0.61040, speed: 0.99 step/s

global step 1890, epoch: 8, batch: 210, loss: 0.29457, accu: 0.61194, speed: 0.96 step/s

global step 1900, epoch: 8, batch: 220, loss: 0.31219, accu: 0.61350, speed: 1.00 step/s

global step 1910, epoch: 8, batch: 230, loss: 0.28200, accu: 0.61503, speed: 0.92 step/s

global step 1920, epoch: 8, batch: 240, loss: 0.25267, accu: 0.61657, speed: 1.03 step/s

global step 1930, epoch: 9, batch: 10, loss: 0.16737, accu: 0.61829, speed: 0.94 step/s

global step 1940, epoch: 9, batch: 20, loss: 0.22459, accu: 0.61994, speed: 1.02 step/s

global step 1950, epoch: 9, batch: 30, loss: 0.11283, accu: 0.62169, speed: 0.90 step/s

global step 1960, epoch: 9, batch: 40, loss: 0.08618, accu: 0.62340, speed: 0.99 step/s

global step 1970, epoch: 9, batch: 50, loss: 0.19492, accu: 0.62506, speed: 0.97 step/s

global step 1980, epoch: 9, batch: 60, loss: 0.15329, accu: 0.62660, speed: 0.96 step/s

global step 1990, epoch: 9, batch: 70, loss: 0.13334, accu: 0.62825, speed: 0.95 step/s

global step 2000, epoch: 9, batch: 80, loss: 0.15485, accu: 0.62986, speed: 1.01 step/s

global step 2010, epoch: 9, batch: 90, loss: 0.07164, accu: 0.63150, speed: 1.02 step/s

global step 2020, epoch: 9, batch: 100, loss: 0.22111, accu: 0.63304, speed: 0.99 step/s

global step 2030, epoch: 9, batch: 110, loss: 0.17983, accu: 0.63458, speed: 1.01 step/s

global step 2040, epoch: 9, batch: 120, loss: 0.12174, accu: 0.63603, speed: 0.98 step/s

global step 2050, epoch: 9, batch: 130, loss: 0.13381, accu: 0.63751, speed: 0.97 step/s

global step 2060, epoch: 9, batch: 140, loss: 0.09572, accu: 0.63903, speed: 1.02 step/s

global step 2070, epoch: 9, batch: 150, loss: 0.18560, accu: 0.64051, speed: 0.96 step/s

global step 2080, epoch: 9, batch: 160, loss: 0.16867, accu: 0.64200, speed: 1.01 step/s

global step 2090, epoch: 9, batch: 170, loss: 0.20489, accu: 0.64343, speed: 0.96 step/s

global step 2100, epoch: 9, batch: 180, loss: 0.20788, accu: 0.64486, speed: 0.98 step/s

global step 2110, epoch: 9, batch: 190, loss: 0.11892, accu: 0.64627, speed: 1.02 step/s

global step 2120, epoch: 9, batch: 200, loss: 0.17778, accu: 0.64759, speed: 1.00 step/s

global step 2160, epoch: 9, batch: 240, loss: 0.29260, accu: 0.65298, speed: 0.98 step/s

global step 2170, epoch: 10, batch: 10, loss: 0.10163, accu: 0.65444, speed: 0.98 step/s

global step 2180, epoch: 10, batch: 20, loss: 0.12620, accu: 0.65585, speed: 1.01 step/s

global step 2190, epoch: 10, batch: 30, loss: 0.13914, accu: 0.65722, speed: 0.95 step/s

global step 2210, epoch: 10, batch: 50, loss: 0.05152, accu: 0.66004, speed: 1.03 step/s

global step 2220, epoch: 10, batch: 60, loss: 0.20240, accu: 0.66141, speed: 1.00 step/s

global step 2230, epoch: 10, batch: 70, loss: 0.12380, accu: 0.66274, speed: 1.01 step/s

global step 2240, epoch: 10, batch: 80, loss: 0.13620, accu: 0.66406, speed: 0.99 step/s

global step 2250, epoch: 10, batch: 90, loss: 0.10355, accu: 0.66534, speed: 0.95 step/s

global step 2260, epoch: 10, batch: 100, loss: 0.09715, accu: 0.66665, speed: 1.01 step/s

global step 2270, epoch: 10, batch: 110, loss: 0.15655, accu: 0.66797, speed: 0.99 step/s

global step 2280, epoch: 10, batch: 120, loss: 0.18626, accu: 0.66927, speed: 0.99 step/s

global step 2290, epoch: 10, batch: 130, loss: 0.06124, accu: 0.67058, speed: 0.97 step/s

global step 2300, epoch: 10, batch: 140, loss: 0.13277, accu: 0.67189, speed: 1.00 step/s

global step 2310, epoch: 10, batch: 150, loss: 0.09802, accu: 0.67314, speed: 0.94 step/s

global step 2320, epoch: 10, batch: 160, loss: 0.19472, accu: 0.67438, speed: 0.98 step/s

global step 2330, epoch: 10, batch: 170, loss: 0.13796, accu: 0.67556, speed: 0.95 step/s

global step 2340, epoch: 10, batch: 180, loss: 0.13404, accu: 0.67675, speed: 0.97 step/s

global step 2350, epoch: 10, batch: 190, loss: 0.19633, accu: 0.67793, speed: 1.04 step/s

global step 2360, epoch: 10, batch: 200, loss: 0.08400, accu: 0.67908, speed: 1.03 step/s

global step 2370, epoch: 10, batch: 210, loss: 0.15028, accu: 0.68023, speed: 0.95 step/s

global step 2380, epoch: 10, batch: 220, loss: 0.12565, accu: 0.68137, speed: 0.98 step/s

global step 2390, epoch: 10, batch: 230, loss: 0.16214, accu: 0.68250, speed: 1.00 step/s

global step 2400, epoch: 10, batch: 240, loss: 0.15614, accu: 0.68362, speed: 0.99 step/s

global step 2410, epoch: 11, batch: 10, loss: 0.09459, accu: 0.68480, speed: 0.98 step/s

global step 2420, epoch: 11, batch: 20, loss: 0.05925, accu: 0.68595, speed: 1.00 step/s

global step 2430, epoch: 11, batch: 30, loss: 0.16846, accu: 0.68710, speed: 0.92 step/s

global step 2440, epoch: 11, batch: 40, loss: 0.17300, accu: 0.68822, speed: 0.98 step/s

global step 2450, epoch: 11, batch: 50, loss: 0.09830, accu: 0.68938, speed: 0.93 step/s

global step 2460, epoch: 11, batch: 60, loss: 0.19317, accu: 0.69054, speed: 0.97 step/s

global step 2470, epoch: 11, batch: 70, loss: 0.11055, accu: 0.69166, speed: 0.92 step/s

global step 2480, epoch: 11, batch: 80, loss: 0.01810, accu: 0.69277, speed: 0.98 step/s

global step 2490, epoch: 11, batch: 90, loss: 0.04222, accu: 0.69392, speed: 0.98 step/s

global step 2500, epoch: 11, batch: 100, loss: 0.06748, accu: 0.69502, speed: 0.98 step/s

global step 2510, epoch: 11, batch: 110, loss: 0.12367, accu: 0.69609, speed: 0.98 step/s

global step 2520, epoch: 11, batch: 120, loss: 0.10427, accu: 0.69718, speed: 0.97 step/s

global step 2530, epoch: 11, batch: 130, loss: 0.09972, accu: 0.69821, speed: 0.99 step/s

global step 2540, epoch: 11, batch: 140, loss: 0.06466, accu: 0.69928, speed: 0.95 step/s

global step 2550, epoch: 11, batch: 150, loss: 0.13024, accu: 0.70035, speed: 0.97 step/s

global step 2560, epoch: 11, batch: 160, loss: 0.05148, accu: 0.70139, speed: 0.95 step/s

global step 2570, epoch: 11, batch: 170, loss: 0.10767, accu: 0.70244, speed: 0.99 step/s

global step 2580, epoch: 11, batch: 180, loss: 0.04264, accu: 0.70349, speed: 1.00 step/s

global step 2590, epoch: 11, batch: 190, loss: 0.06067, accu: 0.70454, speed: 0.96 step/s

global step 2600, epoch: 11, batch: 200, loss: 0.15608, accu: 0.70556, speed: 0.99 step/s

global step 2610, epoch: 11, batch: 210, loss: 0.15551, accu: 0.70656, speed: 0.93 step/s

global step 2620, epoch: 11, batch: 220, loss: 0.15605, accu: 0.70751, speed: 0.97 step/s

global step 2630, epoch: 11, batch: 230, loss: 0.16408, accu: 0.70848, speed: 1.00 step/s

global step 2640, epoch: 11, batch: 240, loss: 0.17679, accu: 0.70943, speed: 1.03 step/s

global step 2650, epoch: 12, batch: 10, loss: 0.03879, accu: 0.71043, speed: 0.89 step/s

global step 2660, epoch: 12, batch: 20, loss: 0.05025, accu: 0.71142, speed: 0.98 step/s

global step 2670, epoch: 12, batch: 30, loss: 0.05381, accu: 0.71241, speed: 0.97 step/s

global step 2680, epoch: 12, batch: 40, loss: 0.17475, accu: 0.71340, speed: 0.95 step/s

global step 2690, epoch: 12, batch: 50, loss: 0.11156, accu: 0.71438, speed: 0.97 step/s

global step 2700, epoch: 12, batch: 60, loss: 0.17223, accu: 0.71533, speed: 0.92 step/s

global step 2710, epoch: 12, batch: 70, loss: 0.03778, accu: 0.71630, speed: 1.01 step/s

global step 2720, epoch: 12, batch: 80, loss: 0.05190, accu: 0.71726, speed: 1.01 step/s

global step 2730, epoch: 12, batch: 90, loss: 0.05545, accu: 0.71823, speed: 0.91 step/s

global step 2740, epoch: 12, batch: 100, loss: 0.07203, accu: 0.71915, speed: 1.00 step/s

global step 2750, epoch: 12, batch: 110, loss: 0.05418, accu: 0.72008, speed: 0.97 step/s

global step 2760, epoch: 12, batch: 120, loss: 0.06207, accu: 0.72099, speed: 1.03 step/s

global step 2770, epoch: 12, batch: 130, loss: 0.05777, accu: 0.72192, speed: 1.00 step/s

global step 2780, epoch: 12, batch: 140, loss: 0.03805, accu: 0.72282, speed: 0.98 step/s

global step 2790, epoch: 12, batch: 150, loss: 0.08774, accu: 0.72373, speed: 1.00 step/s

global step 2800, epoch: 12, batch: 160, loss: 0.13304, accu: 0.72462, speed: 0.98 step/s

global step 2810, epoch: 12, batch: 170, loss: 0.06018, accu: 0.72549, speed: 0.97 step/s

global step 2820, epoch: 12, batch: 180, loss: 0.10196, accu: 0.72634, speed: 0.99 step/s

global step 2830, epoch: 12, batch: 190, loss: 0.02596, accu: 0.72720, speed: 1.01 step/s

global step 2840, epoch: 12, batch: 200, loss: 0.05425, accu: 0.72805, speed: 0.98 step/s

global step 2850, epoch: 12, batch: 210, loss: 0.15891, accu: 0.72890, speed: 0.98 step/s

global step 2860, epoch: 12, batch: 220, loss: 0.03764, accu: 0.72976, speed: 0.98 step/s

global step 2870, epoch: 12, batch: 230, loss: 0.19953, accu: 0.73057, speed: 0.96 step/s

global step 2880, epoch: 12, batch: 240, loss: 0.08136, accu: 0.73144, speed: 0.97 step/s

global step 2890, epoch: 13, batch: 10, loss: 0.08326, accu: 0.73229, speed: 0.92 step/s

global step 2900, epoch: 13, batch: 20, loss: 0.04907, accu: 0.73313, speed: 1.02 step/s

global step 2910, epoch: 13, batch: 30, loss: 0.09300, accu: 0.73396, speed: 0.99 step/s

global step 2920, epoch: 13, batch: 40, loss: 0.07260, accu: 0.73482, speed: 0.97 step/s

global step 2930, epoch: 13, batch: 50, loss: 0.07982, accu: 0.73566, speed: 0.92 step/s

global step 2950, epoch: 13, batch: 70, loss: 0.05648, accu: 0.73729, speed: 0.96 step/s

global step 2960, epoch: 13, batch: 80, loss: 0.04701, accu: 0.73809, speed: 0.94 step/s

global step 2970, epoch: 13, batch: 90, loss: 0.04051, accu: 0.73889, speed: 0.99 step/s

global step 2980, epoch: 13, batch: 100, loss: 0.01216, accu: 0.73969, speed: 1.01 step/s

global step 2990, epoch: 13, batch: 110, loss: 0.05054, accu: 0.74048, speed: 1.01 step/s

global step 3000, epoch: 13, batch: 120, loss: 0.06083, accu: 0.74127, speed: 0.98 step/s

global step 3010, epoch: 13, batch: 130, loss: 0.06594, accu: 0.74203, speed: 1.00 step/s

global step 3020, epoch: 13, batch: 140, loss: 0.01730, accu: 0.74282, speed: 1.01 step/s

global step 3030, epoch: 13, batch: 150, loss: 0.01625, accu: 0.74360, speed: 0.96 step/s

global step 3040, epoch: 13, batch: 160, loss: 0.03407, accu: 0.74437, speed: 0.95 step/s

global step 3050, epoch: 13, batch: 170, loss: 0.01999, accu: 0.74512, speed: 0.94 step/s

global step 3060, epoch: 13, batch: 180, loss: 0.07507, accu: 0.74589, speed: 0.99 step/s

global step 3070, epoch: 13, batch: 190, loss: 0.05114, accu: 0.74664, speed: 1.00 step/s

global step 3080, epoch: 13, batch: 200, loss: 0.02621, accu: 0.74739, speed: 1.00 step/s

global step 3090, epoch: 13, batch: 210, loss: 0.08471, accu: 0.74814, speed: 1.00 step/s

global step 3100, epoch: 13, batch: 220, loss: 0.08308, accu: 0.74889, speed: 1.00 step/s

global step 3110, epoch: 13, batch: 230, loss: 0.05290, accu: 0.74965, speed: 1.01 step/s

global step 3120, epoch: 13, batch: 240, loss: 0.10682, accu: 0.75038, speed: 1.00 step/s

global step 3130, epoch: 14, batch: 10, loss: 0.04611, accu: 0.75112, speed: 0.98 step/s

global step 3140, epoch: 14, batch: 20, loss: 0.10133, accu: 0.75187, speed: 0.99 step/s

global step 3150, epoch: 14, batch: 30, loss: 0.06149, accu: 0.75260, speed: 1.01 step/s

global step 3160, epoch: 14, batch: 40, loss: 0.03526, accu: 0.75334, speed: 1.01 step/s

global step 3170, epoch: 14, batch: 50, loss: 0.05824, accu: 0.75409, speed: 0.92 step/s

global step 3180, epoch: 14, batch: 60, loss: 0.05532, accu: 0.75484, speed: 1.00 step/s

global step 3190, epoch: 14, batch: 70, loss: 0.01612, accu: 0.75556, speed: 0.99 step/s

global step 3200, epoch: 14, batch: 80, loss: 0.00389, accu: 0.75626, speed: 0.92 step/s

global step 3210, epoch: 14, batch: 90, loss: 0.03826, accu: 0.75697, speed: 0.95 step/s

global step 3220, epoch: 14, batch: 100, loss: 0.07837, accu: 0.75764, speed: 1.02 step/s

global step 3230, epoch: 14, batch: 110, loss: 0.03807, accu: 0.75833, speed: 0.97 step/s

global step 3240, epoch: 14, batch: 120, loss: 0.11972, accu: 0.75897, speed: 0.92 step/s

global step 3250, epoch: 14, batch: 130, loss: 0.09694, accu: 0.75964, speed: 0.97 step/s

global step 3260, epoch: 14, batch: 140, loss: 0.05271, accu: 0.76031, speed: 0.96 step/s

global step 3270, epoch: 14, batch: 150, loss: 0.08641, accu: 0.76098, speed: 0.98 step/s

global step 3280, epoch: 14, batch: 160, loss: 0.07319, accu: 0.76164, speed: 0.92 step/s

global step 3290, epoch: 14, batch: 170, loss: 0.01758, accu: 0.76230, speed: 0.83 step/s

global step 3300, epoch: 14, batch: 180, loss: 0.04453, accu: 0.76296, speed: 0.93 step/s

global step 3310, epoch: 14, batch: 190, loss: 0.03207, accu: 0.76360, speed: 0.99 step/s

global step 3320, epoch: 14, batch: 200, loss: 0.08266, accu: 0.76424, speed: 0.98 step/s

global step 3330, epoch: 14, batch: 210, loss: 0.10719, accu: 0.76489, speed: 1.01 step/s

global step 3340, epoch: 14, batch: 220, loss: 0.02822, accu: 0.76556, speed: 0.96 step/s

global step 3350, epoch: 14, batch: 230, loss: 0.04420, accu: 0.76619, speed: 0.99 step/s

global step 3360, epoch: 14, batch: 240, loss: 0.07753, accu: 0.76681, speed: 0.74 step/s

global step 3370, epoch: 15, batch: 10, loss: 0.01795, accu: 0.76746, speed: 0.72 step/s

global step 3380, epoch: 15, batch: 20, loss: 0.11499, accu: 0.76811, speed: 0.74 step/s

global step 3390, epoch: 15, batch: 30, loss: 0.00584, accu: 0.76874, speed: 0.98 step/s

global step 3400, epoch: 15, batch: 40, loss: 0.03364, accu: 0.76941, speed: 0.91 step/s

global step 3410, epoch: 15, batch: 50, loss: 0.03413, accu: 0.77005, speed: 0.97 step/s

global step 3420, epoch: 15, batch: 60, loss: 0.20039, accu: 0.77066, speed: 1.00 step/s

global step 3430, epoch: 15, batch: 70, loss: 0.07094, accu: 0.77130, speed: 0.96 step/s

global step 3440, epoch: 15, batch: 80, loss: 0.02087, accu: 0.77191, speed: 0.93 step/s

global step 3450, epoch: 15, batch: 90, loss: 0.01463, accu: 0.77253, speed: 0.98 step/s

global step 3460, epoch: 15, batch: 100, loss: 0.11981, accu: 0.77315, speed: 1.00 step/s

global step 3470, epoch: 15, batch: 110, loss: 0.09386, accu: 0.77375, speed: 0.93 step/s

global step 3480, epoch: 15, batch: 120, loss: 0.04492, accu: 0.77434, speed: 0.97 step/s

global step 3490, epoch: 15, batch: 130, loss: 0.02024, accu: 0.77493, speed: 1.01 step/s

global step 3500, epoch: 15, batch: 140, loss: 0.12838, accu: 0.77549, speed: 1.03 step/s

global step 3510, epoch: 15, batch: 150, loss: 0.04906, accu: 0.77607, speed: 1.01 step/s

global step 3520, epoch: 15, batch: 160, loss: 0.04938, accu: 0.77663, speed: 0.99 step/s

global step 3530, epoch: 15, batch: 170, loss: 0.03127, accu: 0.77722, speed: 0.94 step/s

global step 3540, epoch: 15, batch: 180, loss: 0.01037, accu: 0.77781, speed: 0.98 step/s

global step 3550, epoch: 15, batch: 190, loss: 0.01388, accu: 0.77840, speed: 0.92 step/s

global step 3560, epoch: 15, batch: 200, loss: 0.07802, accu: 0.77897, speed: 1.00 step/s

global step 3570, epoch: 15, batch: 210, loss: 0.05052, accu: 0.77956, speed: 0.98 step/s

global step 3580, epoch: 15, batch: 220, loss: 0.04648, accu: 0.78012, speed: 0.91 step/s

global step 3590, epoch: 15, batch: 230, loss: 0.04214, accu: 0.78070, speed: 0.97 step/s

global step 3600, epoch: 15, batch: 240, loss: 0.06827, accu: 0.78125, speed: 0.98 step/s

eval on dev loss: 4.2006474, accu: 0.34116667

Save model in 3600 steps, best eval Accuracy is 0.34116667!

global step 3610, epoch: 16, batch: 10, loss: 0.05446, accu: 0.98700, speed: 0.31 step/s

global step 3620, epoch: 16, batch: 20, loss: 0.06428, accu: 0.98850, speed: 1.02 step/s

global step 3630, epoch: 16, batch: 30, loss: 0.02755, accu: 0.99067, speed: 0.98 step/s

global step 3640, epoch: 16, batch: 40, loss: 0.01581, accu: 0.99025, speed: 0.99 step/s

global step 3650, epoch: 16, batch: 50, loss: 0.03213, accu: 0.98940, speed: 0.99 step/s

global step 3660, epoch: 16, batch: 60, loss: 0.01965, accu: 0.98950, speed: 0.99 step/s

global step 3670, epoch: 16, batch: 70, loss: 0.01155, accu: 0.99057, speed: 0.99 step/s

global step 3680, epoch: 16, batch: 80, loss: 0.03869, accu: 0.98975, speed: 0.96 step/s

global step 3690, epoch: 16, batch: 90, loss: 0.05119, accu: 0.98933, speed: 1.00 step/s

global step 3700, epoch: 16, batch: 100, loss: 0.00744, accu: 0.98990, speed: 0.99 step/s

eval on dev loss: 4.4295754, accu: 0.33633333

global step 3710, epoch: 16, batch: 110, loss: 0.03117, accu: 0.98600, speed: 0.35 step/s

global step 3720, epoch: 16, batch: 120, loss: 0.03541, accu: 0.98450, speed: 0.95 step/s

global step 3730, epoch: 16, batch: 130, loss: 0.04614, accu: 0.98367, speed: 0.99 step/s

global step 3740, epoch: 16, batch: 140, loss: 0.02205, accu: 0.98275, speed: 0.99 step/s

global step 3750, epoch: 16, batch: 150, loss: 0.00965, accu: 0.98380, speed: 1.00 step/s

global step 3760, epoch: 16, batch: 160, loss: 0.01183, accu: 0.98483, speed: 0.97 step/s

global step 3770, epoch: 16, batch: 170, loss: 0.02469, accu: 0.98514, speed: 0.99 step/s

global step 3780, epoch: 16, batch: 180, loss: 0.06996, accu: 0.98500, speed: 0.99 step/s

global step 3790, epoch: 16, batch: 190, loss: 0.03074, accu: 0.98467, speed: 1.00 step/s

global step 3800, epoch: 16, batch: 200, loss: 0.03565, accu: 0.98500, speed: 0.93 step/s

eval on dev loss: 4.2906199, accu: 0.34216667

Model saved in 3800 steps, best eval Accuracy is 0.34216667!

global step 3810, epoch: 16, batch: 210, loss: 0.04649, accu: 0.98500, speed: 0.30 step/s

global step 3820, epoch: 16, batch: 220, loss: 0.08561, accu: 0.98350, speed: 0.95 step/s

global step 3830, epoch: 16, batch: 230, loss: 0.03310, accu: 0.98533, speed: 0.91 step/s

global step 3840, epoch: 16, batch: 240, loss: 0.08985, accu: 0.98525, speed: 0.96 step/s

global step 3850, epoch: 17, batch: 10, loss: 0.02764, accu: 0.98680, speed: 0.97 step/s

global step 3860, epoch: 17, batch: 20, loss: 0.04410, accu: 0.98783, speed: 0.98 step/s

global step 3870, epoch: 17, batch: 30, loss: 0.06611, accu: 0.98729, speed: 1.01 step/s

global step 3880, epoch: 17, batch: 40, loss: 0.03119, accu: 0.98725, speed: 0.94 step/s

global step 3890, epoch: 17, batch: 50, loss: 0.05714, accu: 0.98722, speed: 0.93 step/s

global step 3900, epoch: 17, batch: 60, loss: 0.01392, accu: 0.98720, speed: 0.93 step/s

eval on dev loss: 4.3350282, accu: 0.33266667

global step 3910, epoch: 17, batch: 70, loss: 0.00464, accu: 0.99400, speed: 0.34 step/s

global step 3920, epoch: 17, batch: 80, loss: 0.00904, accu: 0.99400, speed: 1.00 step/s

global step 3930, epoch: 17, batch: 90, loss: 0.17276, accu: 0.99167, speed: 0.95 step/s

global step 3940, epoch: 17, batch: 100, loss: 0.04980, accu: 0.99275, speed: 1.01 step/s

global step 3950, epoch: 17, batch: 110, loss: 0.00733, accu: 0.99220, speed: 1.00 step/s

global step 3960, epoch: 17, batch: 120, loss: 0.04312, accu: 0.99267, speed: 0.94 step/s

global step 3970, epoch: 17, batch: 130, loss: 0.03863, accu: 0.99229, speed: 0.94 step/s

global step 3980, epoch: 17, batch: 140, loss: 0.00217, accu: 0.99213, speed: 0.97 step/s

global step 3990, epoch: 17, batch: 150, loss: 0.03819, accu: 0.99167, speed: 0.96 step/s

global step 4000, epoch: 17, batch: 160, loss: 0.02629, accu: 0.99120, speed: 0.92 step/s

eval on dev loss: 4.3951426, accu: 0.32183333

global step 4010, epoch: 17, batch: 170, loss: 0.01393, accu: 0.98700, speed: 0.34 step/s

global step 4020, epoch: 17, batch: 180, loss: 0.03148, accu: 0.98800, speed: 0.98 step/s

global step 4030, epoch: 17, batch: 190, loss: 0.02380, accu: 0.98833, speed: 0.97 step/s

global step 4040, epoch: 17, batch: 200, loss: 0.00428, accu: 0.98825, speed: 0.99 step/s

global step 4050, epoch: 17, batch: 210, loss: 0.04218, accu: 0.98820, speed: 0.96 step/s

global step 4060, epoch: 17, batch: 220, loss: 0.00900, accu: 0.98817, speed: 0.92 step/s

global step 4070, epoch: 17, batch: 230, loss: 0.13526, accu: 0.98700, speed: 0.97 step/s

global step 4080, epoch: 17, batch: 240, loss: 0.12859, accu: 0.98687, speed: 0.99 step/s

global step 4090, epoch: 18, batch: 10, loss: 0.05099, accu: 0.98711, speed: 0.96 step/s

global step 4100, epoch: 18, batch: 20, loss: 0.00881, accu: 0.98710, speed: 1.00 step/s

eval on dev loss: 4.4507546, accu: 0.32516667

global step 4110, epoch: 18, batch: 30, loss: 0.05934, accu: 0.98800, speed: 0.34 step/s

global step 4120, epoch: 18, batch: 40, loss: 0.00273, accu: 0.98950, speed: 0.97 step/s

global step 4130, epoch: 18, batch: 50, loss: 0.02397, accu: 0.98933, speed: 0.91 step/s

global step 4140, epoch: 18, batch: 60, loss: 0.00946, accu: 0.99050, speed: 0.98 step/s

global step 4150, epoch: 18, batch: 70, loss: 0.02849, accu: 0.99160, speed: 1.03 step/s

global step 4160, epoch: 18, batch: 80, loss: 0.00931, accu: 0.99167, speed: 1.00 step/s

global step 4170, epoch: 18, batch: 90, loss: 0.03216, accu: 0.99214, speed: 1.01 step/s

global step 4180, epoch: 18, batch: 100, loss: 0.00480, accu: 0.99150, speed: 0.97 step/s

global step 4190, epoch: 18, batch: 110, loss: 0.02336, accu: 0.99100, speed: 0.98 step/s

global step 4200, epoch: 18, batch: 120, loss: 0.00858, accu: 0.99110, speed: 0.94 step/s

eval on dev loss: 4.4317684, accu: 0.34533333

Model saved in 4200 steps, best eval Accuracy is 0.34533333!

global step 4210, epoch: 18, batch: 130, loss: 0.06743, accu: 0.99000, speed: 0.30 step/s

---------------------------------------------------------------------------

KeyboardInterrupt Traceback (most recent call last)

<ipython-input-18-bbe0ef9babd6> in <module>

10 logits = model(input_ids, token_type_ids)

11 # Calculate loss function value

---> 12 loss = criterion(logits, labels)

13 # Prediction Classification Probability Value

14 probs = F.softmax(logits, axis=1)

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/dygraph/layers.py in __call__(self, *inputs, **kwargs)

900 self._built = True

901

--> 902 outputs = self.forward(*inputs, **kwargs)

903

904 for forward_post_hook in self._forward_post_hooks.values():

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/nn/layer/loss.py in forward(self, input, label)

403 axis=self.axis,

404 use_softmax=self.use_softmax,

--> 405 name=self.name)

406

407 return ret

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/nn/functional/loss.py in cross_entropy(input, label, weight, ignore_index, reduction, soft_label, axis, use_softmax, name)

1390 input, label, 'soft_label', soft_label, 'ignore_index',

1391 ignore_index, 'numeric_stable_mode', True, 'axis', axis,

-> 1392 'use_softmax', use_softmax)

1393

1394 if weight is not None:

KeyboardInterrupt:
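The evaluation lines in the log show a checkpoint being written only when dev accuracy beats the previous best. Below is a minimal sketch of that bookkeeping, replaying the dev accuracies printed in the portion of the log shown here. `track_best` is a hypothetical helper written for illustration, not part of the training script, and it only records the step where the real script would save the model.

```python
# Hypothetical helper: replay (step, dev_accuracy) pairs and report
# the steps at which a "save the best model" rule would fire.
def track_best(evals):
    best_acc = float("-inf")
    saved_steps = []
    for step, acc in evals:
        if acc > best_acc:  # strictly better than every earlier eval
            best_acc = acc
            saved_steps.append(step)  # the real script would save the model here
    return best_acc, saved_steps

# Dev accuracies copied from the log above.
dev_evals = [
    (3600, 0.34116667),
    (3700, 0.33633333),
    (3800, 0.34216667),
    (3900, 0.33266667),
    (4000, 0.32183333),
    (4100, 0.32516667),
    (4200, 0.34533333),
]

best_acc, saved_steps = track_best(dev_evals)
print(best_acc, saved_steps)
```

In the same log, training accuracy sits near 0.99 while dev accuracy stays around 0.34 and dev loss is above 4, which suggests the model is overfitting by this point.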

Use VisualDL to visualize training so that you can follow the training trend and stop early instead of wasting compute.

6. Prediction

After training, restart the environment to release memory, then start predicting.

# data fetch

import pandas as pd

from paddlenlp.datasets import load_dataset

from paddle.io import Dataset, Subset

from paddlenlp.datasets import MapDataset

test = pd.read_csv('/home/mw/input/Twitter4903/test.csv')

# Generator that yields one example per row of the test set

def read_test(pd_data):
    for index, item in pd_data.iterrows():
        # Note: str.strip removes a set of characters, not a prefix; it works here because the IDs end in digits
        yield {'text': item['content'], 'label': 0, 'qid': item['tweet_id'].strip('tweet_')}

test_ds = load_dataset(read_test, pd_data=test, lazy=False)

# Convert to MapDataset type
test_ds = MapDataset(test_ds)

print(len(test_ds))
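One caveat on `strip('tweet_')` in `read_test`: `str.strip` treats its argument as a set of characters to trim from both ends, not as a prefix. It gives the expected result for IDs such as `tweet_123` because digits are not in that set, but it can misbehave on other ID shapes. A small sketch of the difference follows. `remove_prefix` is a hypothetical helper written for illustration (Python 3.9+ also offers `str.removeprefix`).

```python
def remove_prefix(text, prefix):
    """Remove `prefix` from the start of `text` only if it is really there."""
    return text[len(prefix):] if text.startswith(prefix) else text

# strip() trims any of the characters t, w, e, _ from both ends.
stripped_tweet = "tweet_123".strip("tweet_")
stripped_test = "test_0".strip("tweet_")

# remove_prefix() only removes the exact leading string.
prefix_tweet = remove_prefix("tweet_123", "tweet_")
prefix_test = remove_prefix("test_0", "tweet_")

print(stripped_tweet, stripped_test, prefix_tweet, prefix_test)
```

With an ID like `test_0`, `strip` leaves `st_0`, which cannot be converted to `int64` later in `convert_example`, while the prefix version leaves the ID untouched so the mismatch is easier to spot.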

def convert_example(example,
                    tokenizer,
                    max_seq_length=512,
                    is_test=False):
    # Convert the raw example into model-readable inputs. encoded_inputs is a dict with fields such as input_ids and token_type_ids
    encoded_inputs = tokenizer(
        text=example["text"], max_seq_len=max_seq_length)

    # input_ids: the vocabulary id of each token after the text is split into tokens
    input_ids = encoded_inputs["input_ids"]

    # token_type_ids: whether each token belongs to sentence 1 or sentence 2 (the segment ids shown in the figure above)
    token_type_ids = encoded_inputs["token_type_ids"]

    if not is_test:
        # label: emotion category
        label = np.array([example["label"]], dtype="int64")
        return input_ids, token_type_ids, label
    else:
        # qid: the ID number of each example
        qid = np.array([example["qid"]], dtype="int64")
        return input_ids, token_type_ids, qid

def create_dataloader(dataset,
                      trans_fn=None,
                      mode='train',
                      batch_size=1,
                      batchify_fn=None):
    # Apply the per-example transform, if one is given
    if trans_fn:
        dataset = dataset.map(trans_fn)

    # Shuffle only during training
    shuffle = mode == 'train'

    if mode == "train":
        sampler = paddle.io.DistributedBatchSampler(
            dataset=dataset, batch_size=batch_size, shuffle=shuffle)
    else:
        sampler = paddle.io.BatchSampler(
            dataset=dataset, batch_size=batch_size, shuffle=shuffle)

    dataloader = paddle.io.DataLoader(
        dataset, batch_sampler=sampler, collate_fn=batchify_fn)

    return dataloader

from paddlenlp.transformers import SkepForSequenceClassification, SkepTokenizer

# Load the model in one step by specifying the model name
model = SkepForSequenceClassification.from_pretrained(pretrained_model_name_or_path="skep_ernie_2.0_large_en", num_classes=13)

# Likewise, load the matching tokenizer by model name; it handles text processing such as splitting into tokens and mapping them to token ids
tokenizer = SkepTokenizer.from_pretrained(pretrained_model_name_or_path="skep_ernie_2.0_large_en")

from functools import partial

import numpy as np

import paddle

import paddle.nn.functional as F

from paddlenlp.data import Stack, Tuple, Pad

batch_size=16

max_seq_length=166

# Process the test set data

trans_func = partial(
    convert_example,
    tokenizer=tokenizer,
    max_seq_length=max_seq_length,
    is_test=True)

batchify_fn = lambda samples, fn=Tuple(
    Pad(axis=0, pad_val=tokenizer.pad_token_id),  # input
    Pad(axis=0, pad_val=tokenizer.pad_token_type_id),  # segment
    Stack()  # qid
): [data for data in fn(samples)]

test_data_loader = create_dataloader(
    test_ds,
    mode='test',
    batch_size=batch_size,
    batchify_fn=batchify_fn,
    trans_fn=trans_func)
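`batchify_fn` above pads `input_ids` and `token_type_ids` to the longest sequence in each batch and stacks the `qid` values. The following plain-Python sketch shows the idea without any PaddleNLP dependency. `pad_batch` and `collate` are hypothetical names, the token ids are made up, and the pad value 0 is an assumption for illustration (the real code uses `tokenizer.pad_token_id`).

```python
def pad_batch(sequences, pad_val=0):
    """Right-pad every sequence to the length of the longest one."""
    max_len = max(len(seq) for seq in sequences)
    return [list(seq) + [pad_val] * (max_len - len(seq)) for seq in sequences]

def collate(samples, pad_val=0):
    """Turn (input_ids, token_type_ids, qid) samples into three batched fields."""
    input_ids, token_type_ids, qids = zip(*samples)
    return (pad_batch(input_ids, pad_val),
            pad_batch(token_type_ids, pad_val),
            list(qids))

# Two made-up examples of different lengths.
samples = [
    ([101, 7, 8, 102], [0, 0, 0, 0], [0]),
    ([101, 9, 102], [0, 0, 0], [1]),
]

ids, segments, qids = collate(samples)
print(ids)
print(segments)
print(qids)
```

Padding per batch rather than to a global maximum keeps short batches small, which is one reason the batch-level collate function is used here.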

# Load the model

import os

# Change the parameter path to match your own run
params_path = 'best_checkpoint/best_model.pdparams'

if params_path and os.path.isfile(params_path):
    # Load the model parameters
    state_dict = paddle.load(params_path)
    model.set_dict(state_dict)
    print("Loaded parameters from %s" % params_path)

results = []

# Switch the model to evaluation mode to turn off random factors such as dropout
model.eval()

for batch in test_data_loader:
    input_ids, token_type_ids, qids = batch
    # Feed the data to the model
    logits = model(input_ids, token_type_ids)
    # Predict the class
    probs = F.softmax(logits, axis=-1)
    idx = paddle.argmax(probs, axis=1).numpy()
    idx = idx.tolist()
    qids = qids.numpy().tolist()
    results.extend(zip(qids, idx))

# Write the predictions to the submission file

with open("submission.csv", 'w', encoding="utf-8") as f:
    # f.write("Data ID, rating\n")
    f.write("tweet_id,label\n")
    # Each qid is a one-element list, so take qid[0]
    for (qid, label) in results:
        f.write('tweet_' + str(qid[0]) + "," + str(label) + "\n")
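The prediction loop applies softmax and then takes the argmax. Because softmax preserves the ordering of its inputs, the predicted class is the same as the argmax of the raw logits; the probabilities matter only if you also want confidence scores. A small pure-Python sketch with made-up logits for three classes (the contest has 13):

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    shift = max(logits)
    exps = [math.exp(x - shift) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def argmax(values):
    """Index of the largest value."""
    return max(range(len(values)), key=lambda i: values[i])

logits = [1.2, -0.3, 2.5]  # made-up values for illustration
probs = softmax(logits)

pred_from_probs = argmax(probs)
pred_from_logits = argmax(logits)
print(pred_from_probs, pred_from_logits)
```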

7. Notes

- 1. Reading flat files such as CSV with pandas is convenient.

- 2. Set max_seq_length from the maximum text length measured with pandas rather than guessing.

- 3. Use pandas to analyze the data distribution.

- 4. PaddleNLP has a deep collection of natural language processing examples, and its GitHub repository is a convenient place to learn from.
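Notes 2 and 3 can be sketched with pandas as follows. The tiny DataFrame stands in for `train.csv` and its rows are invented; the `content` and `label` column names follow the field list of this exercise. Whitespace word counts are only a rough proxy, because `max_seq_length` is measured in tokenizer tokens, so leaving some headroom above the measured maximum is an assumption worth making.

```python
import pandas as pd

# Invented rows standing in for train.csv (columns: tweet_id, content, label).
train = pd.DataFrame({
    "tweet_id": ["tweet_0", "tweet_1", "tweet_2", "tweet_3"],
    "content": [
        "so happy today",
        "ugh",
        "why is this happening to me again",
        "lol ok",
    ],
    "label": [3, 7, 7, 3],
})

# Length statistics: characters and whitespace-separated words per tweet.
char_len = train["content"].str.len()
word_len = train["content"].str.split().str.len()
print(char_len.max(), word_len.max())

# Label distribution: how many tweets fall in each emotion class.
label_counts = train["label"].value_counts().sort_index()
print(label_counts.to_dict())
```

On the real data, replacing the invented DataFrame with `pd.read_csv` on the training file gives the same statistics, and a skewed `label_counts` would be a hint that accuracy alone may hide weak classes.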

8. What is PaddleNLP?

1. Gitee address

https://gitee.com/paddlepaddle/PaddleNLP/blob/develop/README.md

2. Introduction

PaddleNLP 2.0 is the core text-domain library of the PaddlePaddle ecosystem. It has three main features: an easy-to-use text-domain API, application examples for many scenarios, and high-performance distributed training. It aims to make text-domain development more efficient and provides best practices for NLP tasks based on the PaddlePaddle 2.0 core framework.

- Easy-to-use text-domain API

  - Provides domain APIs covering data loading, text preprocessing, model building and evaluation, and inference acceleration: a Dataset API that supports loading a rich set of Chinese datasets, a flexible and efficient Data API for preprocessing, a Transformer API offering 60+ pretrained models, and so on, which can greatly improve the efficiency of NLP task modeling and iteration.

- Application examples for many scenarios

  - Covers NLP application examples from the academic to the industrial level. Built on the new API system of the PaddlePaddle 2.0 core framework, they provide best practices for text-domain development with PaddlePaddle 2.0.

- High-performance distributed training

  - Built on the PaddlePaddle core framework's automatic mixed precision optimization strategy and combined with the distributed Fleet API, it supports a 4D hybrid parallel strategy that can efficiently train models with very large numbers of parameters.