Basic structure of TCN

The temporal convolutional network (TCN) was proposed in 2018. See the paper listed in the references for details.

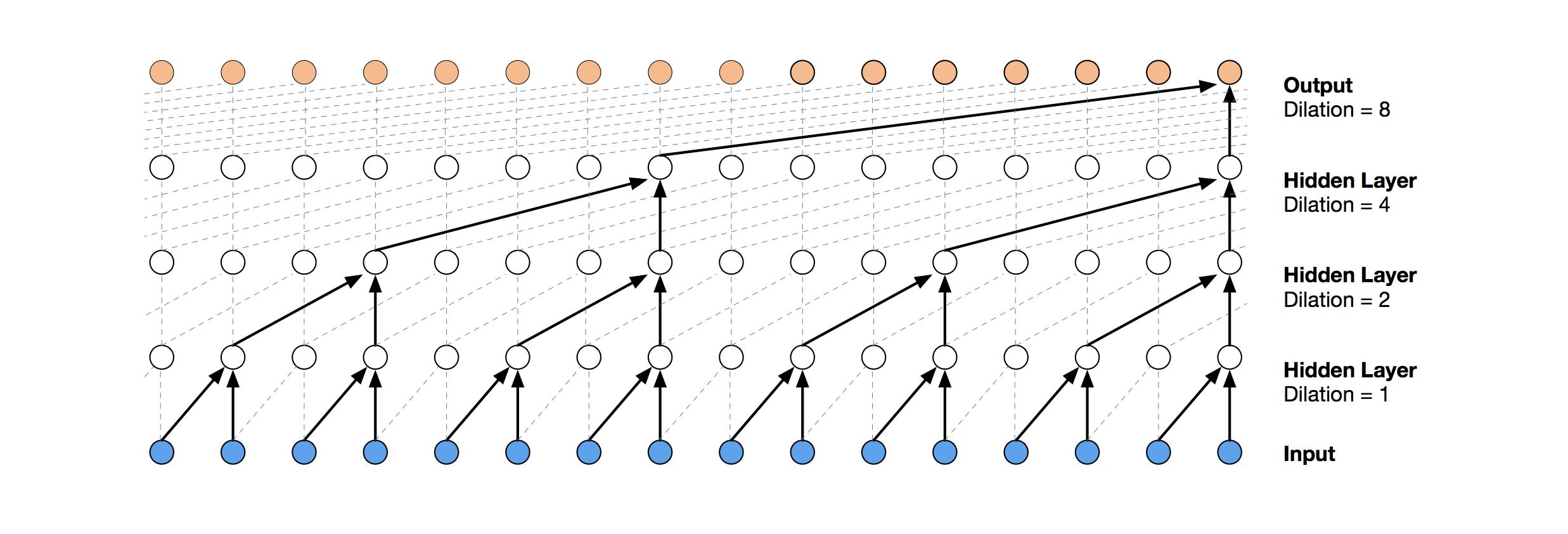

1. Causal Convolution

Causal convolution is shown in the figure above. The value at time t in an upper layer depends only on the value at time t in the layer below and on the values before it. Unlike a traditional convolutional neural network, causal convolution cannot see future data: it is a one-way structure, not a two-way one. In other words, the cause must come before the effect. Because the model enforces this strict time constraint, it is called causal convolution.
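A minimal sketch of this constraint in plain Python may help. The function name `causal_conv1d` and the sample values are illustrative assumptions, not taken from the paper. The sketch pads the input with zeros on the left only, so each output uses the current input and earlier ones.

```python
def causal_conv1d(x, w):
    """Causal 1-D convolution: y[t] depends only on x[t], x[t-1], ..."""
    k = len(w)
    # Left-pad with k-1 zeros so the output has the same length as the input
    padded = [0.0] * (k - 1) + list(x)
    # w[-1] multiplies x[t], w[-2] multiplies x[t-1], and so on
    return [sum(w[j] * padded[t + j] for j in range(k)) for t in range(len(x))]


x = [1.0, 2.0, 3.0, 4.0, 5.0]
w = [0.5, 0.3, 0.2]
y = causal_conv1d(x, w)

# Change only the "future" (time steps 3 and 4) and convolve again
x_future = x[:3] + [40.0, 50.0]
y_future = causal_conv1d(x_future, w)

print(y[:3] == y_future[:3])  # True: earlier outputs never see later inputs
```

Changing the inputs from time step 3 onward leaves the first three outputs untouched, which is the property the figure illustrates.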

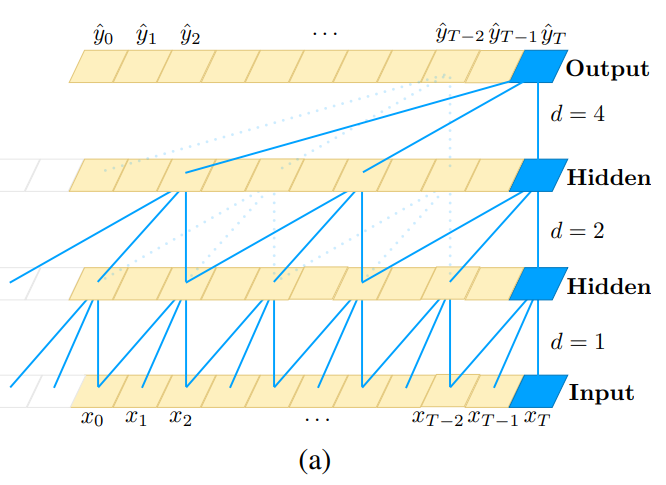

2. Dilated Convolution

Dilated convolution is also called atrous convolution (convolution with holes).

Pure causal convolution still has the same problem as a traditional convolutional neural network: the length of history it can model is limited by the size of the convolution kernel. To capture longer dependencies, many layers have to be stacked, and the reach grows only linearly with depth. To solve this problem, researchers proposed dilated convolution, as shown in the figure.

Unlike traditional convolution, dilated convolution samples the input at intervals, and the sampling rate is controlled by the dilation factor d. In the bottom layer, d = 1 means that every point of the input is sampled. In the middle layer, d = 2 means that every second point is sampled. Generally, the higher the layer, the larger the value of d. As a result, the effective window of a dilated convolution grows exponentially with the number of layers, so the network can reach a large receptive field with few layers.
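The arithmetic behind this growth can be sketched in plain Python. The helper names below (`receptive_field`, `dilated_causal_conv1d`, `stack`) are hypothetical, and the formula assumes one convolution per layer: each layer with kernel size k and dilation d adds (k - 1) * d time steps to the receptive field.

```python
def receptive_field(kernel_size, dilations):
    """Receptive field of stacked causal convolutions, one convolution per layer."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)


def dilated_causal_conv1d(x, w, d):
    """Causal convolution that samples the input every d steps."""
    k = len(w)
    padded = [0.0] * ((k - 1) * d) + list(x)  # left padding only
    return [sum(w[j] * padded[t + j * d] for j in range(k)) for t in range(len(x))]


def stack(x, kernel_size, dilations):
    """Apply one all-ones dilated causal convolution per dilation value."""
    w = [1.0] * kernel_size
    for d in dilations:
        x = dilated_causal_conv1d(x, w, d)
    return x


print(receptive_field(2, [1, 2, 4]))  # 8  (doubling dilations)
print(receptive_field(3, [1, 2, 4]))  # 15
print(receptive_field(3, [1, 1, 1]))  # 7  (no dilation, same depth)

# Empirical check: a single impulse at t = 0 influences exactly 8 outputs
impulse = [1.0] + [0.0] * 9
out = stack(impulse, 2, [1, 2, 4])
reach = max(t for t, v in enumerate(out) if v != 0.0) + 1
print(reach)  # 8
```

With three layers and a kernel of size 3, doubling the dilation gives a receptive field of 15 time steps, against 7 for the same depth without dilation.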

3. Residual Connections

Residual connections have proved to be an effective way to train deep networks, because they let the network pass information across layers.

In the paper, a residual block is built to replace a single convolution layer. As shown in the figure above, a residual block contains two layers of convolution, each followed by a nonlinear mapping, and WeightNorm and Dropout are added to each layer to regularize the network.
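The core idea of the block can be sketched in a few lines of plain Python. This is a simplified illustration only: `residual_block` and `transform` are hypothetical names, `transform` stands in for the two convolution layers, and WeightNorm and Dropout are left out.

```python
def relu(values):
    """Element-wise ReLU on a list of floats."""
    return [max(0.0, v) for v in values]


def residual_block(x, transform):
    """Return relu(transform(x) + x); transform must keep the length of x."""
    fx = transform(x)
    return relu([f + s for f, s in zip(fx, x)])


x = [1.0, -2.0, 3.0]

# A transform that outputs zeros: the input still reaches the output via the shortcut
print(residual_block(x, lambda v: [0.0] * len(v)))        # [1.0, 0.0, 3.0]

# A transform that doubles its input: the block outputs relu(2x + x)
print(residual_block(x, lambda v: [2.0 * a for a in v]))  # [3.0, 0.0, 9.0]
```

Even when the inner layers contribute nothing, the shortcut carries the input forward, which is what makes deep stacks easier to train.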

TCN summary

Advantages

(1) Parallelism. Given a sequence such as a sentence, a TCN can process all time steps in parallel instead of one after another as an RNN does.

(2) Flexible receptive field. The receptive field of a TCN is determined by the number of layers, the convolution kernel size, and the dilation factor, so it can be tailored to different tasks and data characteristics.

(3) Stable gradients. RNNs often suffer from vanishing and exploding gradients, mainly because the same parameters are shared across time steps. In a TCN, as in a traditional convolutional neural network, gradients do not propagate along the time direction, so it is far less prone to vanishing and exploding gradients.

(4) Lower memory use. An RNN has to keep the information of every step, which takes a lot of memory. In a TCN the convolution kernels are shared within a layer, so memory use is lower.

Disadvantages

(1) TCN may be less adaptable in transfer learning, because the amount of history needed for prediction can differ between domains. When a model is transferred from a problem that needs little memory to one that needs much longer memory, the TCN may perform poorly because its receptive field is not large enough.

(2) The TCN described in the paper is a unidirectional structure. Purely unidirectional structures are very useful in tasks such as speech recognition and speech synthesis, but most text tasks use bidirectional structures. TCN is easily extended to a bidirectional structure: just use traditional convolution instead of causal convolution.

(3) TCN is, after all, a variant of the convolutional neural network. Dilated convolution enlarges the receptive field, but the field is still finite. Compared with the Transformer, which can pick up relevant information at any distance, TCN remains weaker in this respect. How well TCN works on text remains to be tested.
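The difference between the causal and the traditional (two-way) convolution mentioned in point (2) comes down to where the padding goes. The following plain-Python sketch illustrates it; the function `conv1d` and its `causal` flag are assumptions made for this example, not an API from the paper or from Keras.

```python
def conv1d(x, w, causal):
    """1-D convolution with zero padding; the output has the same length as x.

    causal=True pads only on the left, so y[t] uses x[t] and earlier values.
    causal=False pads both sides (odd kernel), so y[t] also uses later values.
    """
    k = len(w)
    left = k - 1 if causal else k // 2
    right = 0 if causal else k // 2
    padded = [0.0] * left + list(x) + [0.0] * right
    return [sum(w[j] * padded[t + j] for j in range(k)) for t in range(len(x))]


x = [1.0, 2.0, 3.0, 4.0, 5.0]
x_changed = x[:4] + [50.0]  # only the last time step differs
w = [1.0, 1.0, 1.0]

# Causal: outputs before the last step are unaffected by the change
print(conv1d(x, w, True)[:4] == conv1d(x_changed, w, True)[:4])  # True

# Non-causal: the output at step 3 already sees the changed step 4
print(conv1d(x, w, False)[3], conv1d(x_changed, w, False)[3])    # 12.0 57.0
```

Padding on both sides gives each position access to context from both directions, which is the bidirectional behaviour text tasks usually want, at the cost of the strict time constraint.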

TCN application

MNIST handwritten digit classification

Multiple features correspond to one label, i.e. (xi1, xi2, xi3, ..., xin) -> yi

Local environment:

Python 3.6, IDE: PyCharm

Library version:

keras 2.2.0, numpy 1.16.2, tensorflow 1.9.0

1. Download data set

2. Create TCN.py and enter the following code

Code reference: Keras-based TCN

    # TCN for MNIST data
    from tensorflow.examples.tutorials.mnist import input_data
    from keras.models import Model
    from keras.layers import add, Input, Conv1D, Activation, Flatten, Dense


    # Load data: each 28x28 image is treated as 28 time steps with 28 features
    def read_data(path):
        mnist = input_data.read_data_sets(path, one_hot=True)
        train_x, train_y = mnist.train.images.reshape(-1, 28, 28), mnist.train.labels
        valid_x, valid_y = mnist.validation.images.reshape(-1, 28, 28), mnist.validation.labels
        test_x, test_y = mnist.test.images.reshape(-1, 28, 28), mnist.test.labels
        return train_x, train_y, valid_x, valid_y, test_x, test_y


    # Residual block
    def ResBlock(x, filters, kernel_size, dilation_rate):
        # Note: padding='same' also looks at later time steps; Conv1D accepts
        # padding='causal' when strict causality is required
        r = Conv1D(filters, kernel_size, padding='same', dilation_rate=dilation_rate,
                   activation='relu')(x)  # first convolution
        r = Conv1D(filters, kernel_size, padding='same', dilation_rate=dilation_rate)(r)  # second convolution
        if x.shape[-1] == filters:
            shortcut = x  # identity shortcut
        else:
            # project the input so its channel count matches the block output
            shortcut = Conv1D(filters, kernel_size, padding='same')(x)
        o = add([r, shortcut])
        o = Activation('relu')(o)  # activation function
        return o


    # Sequence model
    def TCN(train_x, train_y, valid_x, valid_y, test_x, test_y, classes, epoch):
        inputs = Input(shape=(28, 28))
        x = ResBlock(inputs, filters=32, kernel_size=3, dilation_rate=1)
        x = ResBlock(x, filters=32, kernel_size=3, dilation_rate=2)
        x = ResBlock(x, filters=16, kernel_size=3, dilation_rate=4)
        x = Flatten()(x)
        x = Dense(classes, activation='softmax')(x)
        # Keras 2 uses the keyword names inputs/outputs
        model = Model(inputs=inputs, outputs=x)
        # View network structure
        model.summary()
        # Compile model
        model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])
        # Train model (Keras 2 uses epochs instead of nb_epoch)
        model.fit(train_x, train_y, batch_size=500, epochs=epoch, verbose=2,
                  validation_data=(valid_x, valid_y))
        # Evaluate model
        pre = model.evaluate(test_x, test_y, batch_size=500, verbose=2)
        print('test_loss:', pre[0], '- test_acc:', pre[1])


    # MNIST has 10 digits, 0-9, i.e. 10 classes
    classes = 10
    epoch = 30
    train_x, train_y, valid_x, valid_y, test_x, test_y = read_data('MNIST_data')
    TCN(train_x, train_y, valid_x, valid_y, test_x, test_y, classes, epoch)

3. Results

test_loss: 0.05342669463425409 - test_acc: 0.987100002169609

Multiple labels

Multiple features correspond to multiple labels, such as (xi1, xi2, xi3, ..., xin) -> (yi1, yi2)

Modify the code above: rebuild the training and test data, and set the matching input and output dimensions, parameters, and other settings.
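Rebuilding the data mostly means cutting the time series into sliding windows. The sketch below shows the idea in plain Python; `make_windows` is a hypothetical helper and the toy `rows` are made-up values, not the indoor-location data. The `create_dataset` function in the listing further down follows the same pattern with NumPy arrays, except that its loop stops one window earlier.

```python
def make_windows(rows, look_back, n_targets=2):
    """Split time-ordered rows into (window, next-step targets) pairs."""
    xs, ys = [], []
    for i in range(len(rows) - look_back):
        xs.append(rows[i:i + look_back])             # look_back consecutive rows
        ys.append(rows[i + look_back][-n_targets:])  # last columns of the next row
    return xs, ys


# Toy series: 8 time steps, 3 columns; the last 2 columns act as the labels
rows = [[t, 10 * t, 100 * t] for t in range(8)]
xs, ys = make_windows(rows, look_back=3)

print(len(xs), len(xs[0]), len(xs[0][0]))  # 5 3 3 -> (samples, time steps, features)
print(ys[0])                               # [30, 300]
```

The resulting input shape (samples, time steps, features) is what the network's input layer expects, and the width of each target row sets the size of the output layer.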

Local environment:

Python 3.6, IDE: PyCharm

Library version:

keras 2.2.0, numpy 1.16.2, pandas 0.24.1, sklearn 0.20.1, tensorflow 1.9.0

Code:

    # TCN for indoor location
    import numpy as np
    import pandas
    from keras.models import Model
    from keras.layers import add, Input, Conv1D, Activation, Flatten, Dense
    from sklearn.preprocessing import MinMaxScaler


    # Create time-series samples: look_back consecutive rows as input,
    # the last two columns of the following row as the targets
    def create_dataset(dataset, look_back=1):
        dataX, dataY = [], []
        for i in range(len(dataset)-look_back-1):
            a = dataset[i:(i+look_back), :]
            dataX.append(a)
            dataY.append(dataset[i + look_back, -2:])
        return np.array(dataX), np.array(dataY)


    # Residual block
    def ResBlock(x, filters, kernel_size, dilation_rate):
        # Note: padding='same' also looks at later time steps; Conv1D accepts
        # padding='causal' when strict causality is required
        r = Conv1D(filters, kernel_size, padding='same', dilation_rate=dilation_rate,
                   activation='relu')(x)  # first convolution
        r = Conv1D(filters, kernel_size, padding='same', dilation_rate=dilation_rate)(r)  # second convolution
        if x.shape[-1] == filters:
            shortcut = x  # identity shortcut
        else:
            # project the input so its channel count matches the block output
            shortcut = Conv1D(filters, kernel_size, padding='same')(x)
        o = add([r, shortcut])
        o = Activation('relu')(o)  # activation function
        return o


    # Sequence model
    def TCN(train_x, train_y, test_x, test_y, look_back, n_features, n_output, epoch):
        inputs = Input(shape=(look_back, n_features))
        x = ResBlock(inputs, filters=32, kernel_size=3, dilation_rate=1)
        x = ResBlock(x, filters=32, kernel_size=3, dilation_rate=2)
        x = ResBlock(x, filters=16, kernel_size=3, dilation_rate=4)
        x = Flatten()(x)
        # Linear output: this assumes the two targets are continuous values
        # (e.g. scaled coordinates), for which softmax would not be suitable
        x = Dense(n_output)(x)
        model = Model(inputs=inputs, outputs=x)
        # View network structure
        model.summary()
        # Compile model: mean squared error loss for regression (same assumption)
        model.compile(optimizer='adam', loss='mse', metrics=['mae'])
        # Train model (Keras 2 uses epochs instead of nb_epoch)
        model.fit(train_x, train_y, batch_size=500, epochs=epoch, verbose=2)
        # Evaluate model
        pre = model.evaluate(test_x, test_y, batch_size=500, verbose=2)
        print('test_loss:', pre[0], '- test_mae:', pre[1])


    # Common parameters
    np.random.seed(7)
    features = 24
    output = 2
    EPOCH = 30
    look_back = 5
    trainPath = '../data/train.csv'
    testPath = '../data/test.csv'
    trainData = pandas.read_csv(trainPath, engine='python')
    testData = pandas.read_csv(testPath, engine='python')
    dataset = trainData.values.astype('float32')
    datatestset = testData.values.astype('float32')

    # Normalize: fit the scaler on the training data only, then reuse it on the test data
    scaler = MinMaxScaler(feature_range=(0, 1))
    dataset = scaler.fit_transform(dataset)
    datatestset = scaler.transform(datatestset)

    trainX, trainY = create_dataset(dataset, look_back)
    testX, testY = create_dataset(datatestset, look_back)
    print(len(trainX), len(testX))
    print(testX.shape)

    # Reshape input to [samples, time steps, features]
    trainX = np.reshape(trainX, (trainX.shape[0], trainX.shape[1], features))
    testX = np.reshape(testX, (testX.shape[0], testX.shape[1], features))
    print(trainX.shape, trainY.shape)

    TCN(trainX, trainY, testX, testY, look_back, features, output, EPOCH)

To be continued

References

An Empirical Evaluation of Generic Convolutional and Recurrent Networks for Sequence Modeling

TCN-Time Convolutional Network