Denoising autoencoder (DAE)

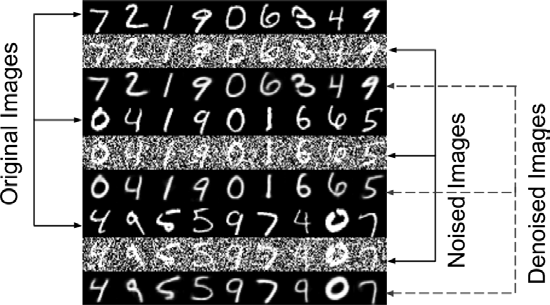

Before introducing the denoising autoencoder (DAE), first introduce an example of the use scene of DAE. When we take photos at night or in other dark environments, our photos are always filled with a lot of noise, which seriously affects the image quality, and the purpose of DAE is to remove the noise in these images. In order to better explain the DAE, we use a simple MNIST data set for demonstration to focus on the knowledge of DAE. As shown in the figure below, three sets of MNIST numbers are displayed. The top row of each group is original images; The middle line shows the input of DAE (noisy images). These inputs are the original images damaged by noise. When there is too much noise, it will be difficult for us to understand the damaged numbers; The last line shows the output of the DAE (Denoised Images).

Tips: if you don't know much about the self encoder, you can refer to it Detailed explanation and implementation of self encoder model (implemented by tensorflow2.x).

Next, let's actually build a DAE to eliminate noise in the image.

DAE model architecture

According to the introduction of DAE, the input can be defined as:

According to the introduction of DAE, the input can be defined as:

x

=

x

o

r

i

g

+

n

o

i

s

e

x = x_{orig} + noise

x=xorig+noise

among

x

o

r

i

g

x_{orig}

xorig , indicates noise

n

o

i

s

e

noise

noise destroys the original MNIST image, and the purpose of the encoder is to learn the latent vector

z

z

z. The loss function of DAE is expressed as:

L

(

x

o

r

i

g

,

x

~

)

=

M

S

E

=

1

m

∑

i

=

1

i

=

m

(

x

o

r

i

g

i

−

x

~

i

)

2

\mathcal L(x_{orig}, \tilde x)=MSE=\frac 1 m \sum_{i=1} ^{i=m}(x_{orig_i}-\tilde x_i)^2

L(xorig,x~)=MSE=m1i=1∑i=m(xorigi−x~i)2

Among them, m m m is the dimension of the output, for example, in the MNIST dataset, m = w i d t h × h e i g h t × c h a n n e l s = 28 × 28 × 1 = 784 m=width × height×channels=28 × 28 × 1 = 784 m=width×height×channels=28×28×1=784. x o r i g i x_{orig_i} xorigi and x i x_i xi , respectively x o r i g x_{orig} xorig # and x ~ \tilde x Elements in x ~.

DAE implementation

Data preprocessing

In order to realize DAE, we first need to construct a training data set. The input data is the MNIST number with noise, and the training output data is the original clean MNIST number. The added noise needs to meet the Gaussian distribution and mean value μ = 0.5 μ = 0.5 μ= 0.5, standard deviation σ = 0.5 σ = 0.5 σ= 0.5. Since adding random noise may produce invalid pixel values less than 0 or greater than 1, the pixel values need to be trimmed to the range of [0.0, 1.0].

import numpy as np

import tensorflow as tf

from tensorflow import keras

from matplotlib import pyplot as plt

from PIL import Image

# Data loading

(x_train,_),(x_test,_) = keras.datasets.mnist.load_data()

# Data preprocessing

image_size = x_train.shape[1]

x_train = np.reshape(x_train,[-1,image_size,image_size,1])

x_test = np.reshape(x_test,[-1,image_size,image_size,1])

x_train = x_train.astype('float32') / 255.

x_test = x_test.astype('float32') / 255.

# Generate Gaussian noise

noise = np.random.normal(loc=0.5,scale=0.5,size=x_train.shape)

x_train_noisy = x_train + noise

noise = np.random.normal(loc=0.5,scale=0.5,size=x_test.shape)

x_test_noisy = x_test + noise

# Crop pixel values to within [0.0, 1.0]

x_train_noisy = np.clip(x_train_noisy,0.0,1.0)

x_test_noisy = np.clip(x_test_noisy,0.0,1.0)

Model construction and model training

# Super parameter

input_shape = (image_size,image_size,1)

batch_size = 32

kernel_size = 3

latent_dim = 16

layer_filters = [32,64]

"""

Model

"""

#encoder

inputs = keras.layers.Input(shape=input_shape,name='encoder_input')

x = inputs

for filters in layer_filters:

x = keras.layers.Conv2D(filters=filters,

kernel_size=kernel_size,

strides=2,

activation='relu',

padding='same')(x)

shape = keras.backend.int_shape(x)

x = keras.layers.Flatten()(x)

latent = keras.layers.Dense(latent_dim,name='latent_vector')(x)

encoder = keras.Model(inputs,latent,name='encoder')

encoder.summary()

# decoder

latent_inputs = keras.layers.Input(shape=(latent_dim,),name='decoder_input')

x = keras.layers.Dense(shape[1]*shape[2]*shape[3])(latent_inputs)

x = keras.layers.Reshape((shape[1],shape[2],shape[3]))(x)

for filters in layer_filters[::-1]:

x = keras.layers.Conv2DTranspose(filters=filters,

kernel_size=kernel_size,

strides=2,

padding='same',

activation='relu')(x)

outputs = keras.layers.Conv2DTranspose(filters=1,

kernel_size=kernel_size,

padding='same',

activation='sigmoid',

name='decoder_output')(x)

decoder = keras.Model(latent_inputs,outputs,name='decoder')

decoder.summary

autoencoder = keras.Model(inputs,decoder(encoder(inputs)),name='autoencoder')

autoencoder.summary()

# Model compilation and training

autoencoder.compile(loss='mse',optimizer='adam')

autoencoder.fit(x_train_noisy,

x_train,validation_data=(x_test_noisy,x_test),

epochs=10,

batch_size=batch_size)

# Model test

x_decoded = autoencoder.predict(x_test_noisy)

rows,cols = 3,9

num = rows * cols

imgs = np.concatenate([x_test[:num],x_test_noisy[:num],x_decoded[:num]])

imgs = imgs.reshape((rows * 3, cols, image_size, image_size))

imgs = np.vstack(np.split(imgs,rows,axis=1))

imgs = imgs.reshape((rows * 3,-1,image_size,image_size))

imgs = np.vstack([np.hstack(i) for i in imgs])

imgs = (imgs * 255).astype(np.uint8)

plt.figure()

plt.axis('off')

plt.imshow(imgs,interpolation='none',cmap='gray')

plt.show()

Effect display

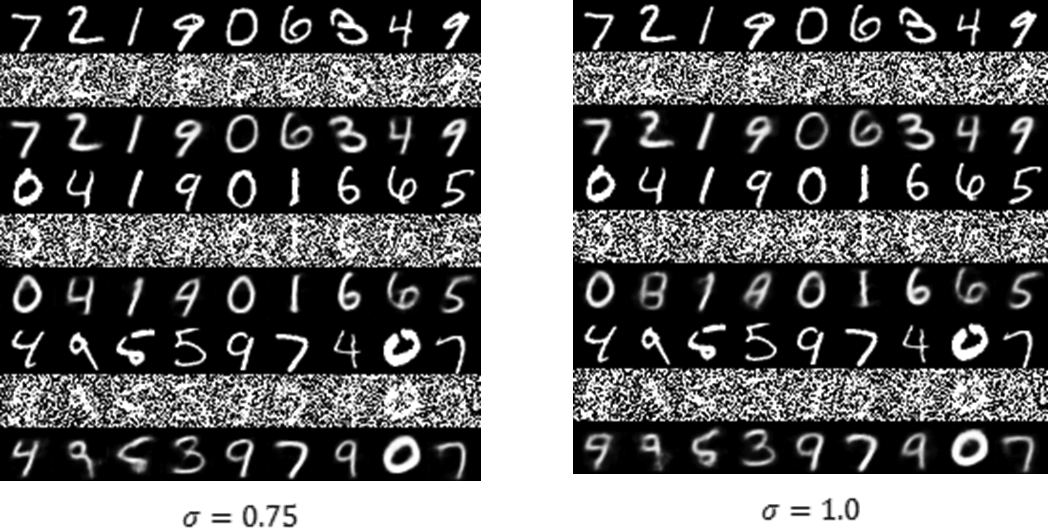

As shown in the figure above, when the noise level changes from

σ

=

0.5

σ=0.5

σ= 0.5 to

σ

=

0.75

σ=0.75

σ= 0.75 and

σ

=

1.0

σ=1.0

σ= At 1.0, DAE has certain robustness and can better restore the original image. However, in

σ

=

1.0

σ=1.0

σ= At 1.0, some numbers were not restored correctly.