The text file "The Dream of Red Mansion.txt" contains the first 20 chapters of the novel "A Dream of Red Mansions", and the file "Stop words.txt" contains the words that do not need to be counted, that is, the words to be excluded.

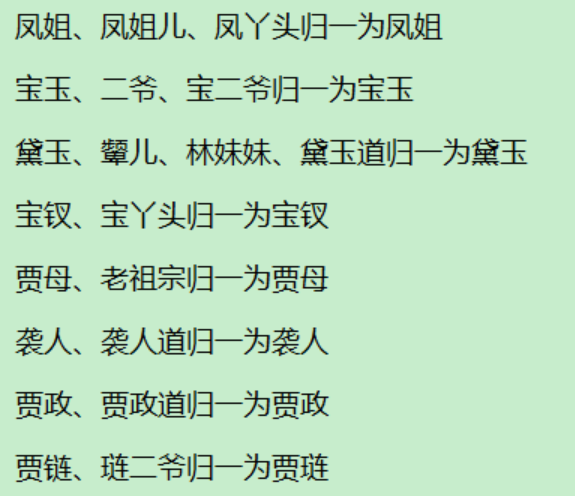

In this question, the following names need to be unified:

The task is to count the names that appear at least 40 times, and to write each name and its number of appearances into a CSV file in descending order of frequency.

Contents

1. Read the content of the article and use jieba library for word segmentation

2. Add the stop words to a list

3. Eliminate stop words

4. Normalization

5. Word frequency statistical analysis, written in CSV format

Preface

In many novels, one character is referred to by several names. For example, "Monkey King", "Sun Walker", "splash monkey" and "Sun Dasheng" all refer to the same character. To count how often each character appears in the text, we need to "normalize" these names, that is, map all of them to a single name before counting.
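The idea of normalization can be sketched with a lookup table that maps every alias to one canonical name. The table `ALIASES` and the helper `normalize` are illustrative assumptions, not part of the original task.

```python
# Hypothetical alias table: every nickname maps to one canonical name.
ALIASES = {
    "Sun Walker": "Monkey King",
    "splash monkey": "Monkey King",
    "Sun Dasheng": "Monkey King",
}

def normalize(word):
    """Return the canonical name for an alias, or the word unchanged."""
    return ALIASES.get(word, word)

words = ["Monkey King", "Sun Walker", "Sun Dasheng", "Tang Monk"]
print([normalize(w) for w in words])
```

Words that are not in the table pass through unchanged, so the same function can be applied to every token of the text.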

1, Approach

1. The cumbersome part of this question comes first: read the files (the source text file and the "stop words" file), segment the text into words, and then compare the words with the "stop words" to eliminate all stop words from the text.

2. Use a loop structure to go through the remaining words, storing each name in a variable.

3. Use a conditional control structure (if/elif) to normalize the names, and use the dictionary get method to count them.

4. Sort the computed word frequencies, and then output the results whose frequency is at least 40.
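Steps 2 to 4 can be sketched on a small, made-up token list. This sketch uses `collections.Counter` from the standard library in place of a plain dictionary with `get`. The token list, the threshold value and the function name `frequent_words` are assumptions for illustration only.

```python
from collections import Counter

def frequent_words(tokens, threshold):
    """Count tokens longer than one character and keep those
    that occur at least `threshold` times, most frequent first."""
    counts = Counter(t for t in tokens if len(t) > 1)
    # most_common() returns (word, count) pairs sorted by count, descending
    return [(w, n) for w, n in counts.most_common() if n >= threshold]

# Made-up sample tokens, standing in for the segmented text
tokens = ["Baoyu", "Daiyu", "Baoyu", "a", "Baoyu", "Daiyu", "Jia Mu"]
print(frequent_words(tokens, 2))  # [('Baoyu', 3), ('Daiyu', 2)]
```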

2, Steps

1. Read the content of the article and use jieba library for word segmentation

The code is as follows (example):

    import jieba

    f = "The Dream of Red Mansion.txt"
    sf = "Stop words.txt"

    # Read the article content and use the jieba library for word segmentation
    txt = jieba.lcut(open(f, 'r', encoding='utf-8').read())

2. Add the stop words to a list

The code is as follows (example):

    # Add stop words to the list
    stop_words = []
    with open(sf, 'r', encoding='utf-8') as f:
        for i in f.read().splitlines():
            stop_words.append(i)

The file opening method here can also be changed to the following, in which case the file should be closed with f.close() after reading:

    f = open(sf, 'r', encoding='utf-8')
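Since the stop words are only used for membership tests in the next step, a `set` may be a better container than a list, because a membership test on a set takes roughly constant time on average. A minimal sketch, where the helper name `load_stop_words` and the in-memory sample are assumptions:

```python
import io

def load_stop_words(lines):
    """Build a set of stop words from an iterable of lines,
    skipping blank lines and stripping surrounding whitespace."""
    return {line.strip() for line in lines if line.strip()}

# io.StringIO stands in for an opened stop-word file here.
sample_file = io.StringIO("said\nlaughed\n\nthe\n")
stop_words = load_stop_words(sample_file)

tokens = ["Baoyu", "said", "Daiyu", "laughed"]
print([t for t in tokens if t not in stop_words])  # ['Baoyu', 'Daiyu']
```

With a real file, the same function could be called as `load_stop_words(f)` inside the `with open(...)` block, since iterating over a text file yields its lines.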

3. Eliminate stop words

    # Eliminate stop words
    txt0 = []
    for x in txt:
        if x not in stop_words:
            txt0.append(x)

4. Normalization

    # Loop over each word, and count the word frequency after normalizing the names
    counts = {}
    for word in txt0:
        if len(word) == 1:
            # Skip single-character words
            continue
        elif word == 'Sister Feng' or word == 'Phoenix girl':
            rword = 'Miss Luo Yu feng'
        elif word == 'Second master' or word == 'Bao Erye':
            rword = 'Baoyu'
        elif word == 'Frown' or word == 'Mei Mei Lin' or word == 'Dai Yudao':
            rword = 'Daiyu'
        elif word == 'Treasure girl':
            rword = 'Baochai'
        elif word == 'Old ancestor':
            rword = 'Jia Mu'
        elif word == 'Attack humanity':
            rword = 'Attack people'
        elif word == 'Zheng Dao Jia':
            rword = 'Zheng Jia'
        elif word == 'Lian Erye':
            rword = 'Jia Lian'
        else:
            rword = word
        counts[rword] = counts.get(rword, 0) + 1

5. Word frequency statistical analysis, written in CSV format

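    # Sort the word frequencies in descending order
    ls = list(counts.items())
    ls.sort(key=lambda x: x[1], reverse=True)
    # 'w' overwrites the file, so running the program again does not append duplicate rows
    with open(r'result.csv', 'w', encoding='gbk') as f:
        for i in ls:
            key, value = i
            if value < 40:
                # The list is sorted, so all remaining values are below 40
                break
            f.write(key + ',' + str(value) + '\n')

3, Merge code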

    import jieba

    f = "The Dream of Red Mansion.txt"
    sf = "Stop words.txt"

    # Read the article content and use the jieba library for word segmentation
    txt = jieba.lcut(open(f, 'r', encoding='utf-8').read())

    # Add stop words to the list
    stop_words = []
    with open(sf, 'r', encoding='utf-8') as f:
        for i in f.read().splitlines():
            stop_words.append(i)

    # Eliminate stop words
    txt0 = []
    for x in txt:
        if x not in stop_words:
            txt0.append(x)

    # Loop over each word, and count the word frequency after normalizing the names
    counts = {}
    for word in txt0:
        if len(word) == 1:
            # Skip single-character words
            continue
        elif word == 'Sister Feng' or word == 'Phoenix girl':
            rword = 'Miss Luo Yu feng'
        elif word == 'Second master' or word == 'Bao Erye':
            rword = 'Baoyu'
        elif word == 'Frown' or word == 'Mei Mei Lin' or word == 'Dai Yudao':
            rword = 'Daiyu'
        elif word == 'Treasure girl':
            rword = 'Baochai'
        elif word == 'Old ancestor':
            rword = 'Jia Mu'
        elif word == 'Attack humanity':
            rword = 'Attack people'
        elif word == 'Zheng Dao Jia':
            rword = 'Zheng Jia'
        elif word == 'Lian Erye':
            rword = 'Jia Lian'
        else:
            rword = word
        counts[rword] = counts.get(rword, 0) + 1

    # Sort the word frequencies in descending order
    ls = list(counts.items())
    ls.sort(key=lambda x: x[1], reverse=True)
    # 'w' overwrites the file, so running the program again does not append duplicate rows
    with open(r'result.csv', 'w', encoding='gbk') as f:
        for i in ls:
            key, value = i
            if value < 40:
                # The list is sorted, so all remaining values are below 40
                break
            f.write(key + ',' + str(value) + '\n')
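As an alternative for the last step, the standard `csv` module can write the rows, which also takes care of quoting if a field ever contains a comma. This is a sketch under assumptions: the function name `write_counts`, the sample data and the in-memory buffer are made up for illustration.

```python
import csv
import io

def write_counts(rows, out, threshold=40):
    """Write (name, count) rows with count >= threshold to `out`,
    sorted by count in descending order."""
    writer = csv.writer(out, lineterminator="\n")
    for name, count in sorted(rows, key=lambda r: r[1], reverse=True):
        if count < threshold:
            break  # rows are sorted, so all remaining counts are smaller
        writer.writerow([name, count])

# Made-up counts; io.StringIO stands in for a file opened for writing.
buf = io.StringIO()
write_counts([("Daiyu", 120), ("Jia Lian", 35), ("Baoyu", 300)], buf)
print(buf.getvalue())
```

When writing to a real file with the `csv` module, the Python documentation recommends opening it with `newline=''`.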

Summary

The task is to analyze the content of the novel's text file and finally output the names that appear at least 40 times. First, open the file, read its content and segment it into words; the content of the stop words file is read as well. Store the word segmentation results in a list and iterate through it. Words that are in the stop words are removed, and words with a length less than 2 are discarded with continue. When neither condition applies, analyze the word, unify the names, and then use d[i] = d.get(i, 0) + 1 for the word frequency statistics. Then convert the dictionary d into a list with list(d.items()), sort it with the sort method, open the csv file, and write the words that appear at least 40 times into the file.

However, the words that appear at least 40 times are not necessarily names. Therefore, pick out the words that are not names, create a list outside the loop to store them, and check against this list inside the loop: when a word is in the list, do not count it. Run the program again, and you can observe that only names are left in the file.
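The extra filtering described above could look like the following sketch. The contents of `not_names` and the token list are placeholders; the real list has to be filled in by hand after inspecting the first version of the output file. The function name `count_names` is also an assumption.

```python
# Hypothetical list of frequent words that are not names; in practice
# it is built by hand after looking at the first version of the output.
not_names = ["what", "we", "here"]

def count_names(tokens, excluded):
    """Count words of length >= 2 that are not in `excluded`."""
    counts = {}
    for word in tokens:
        if len(word) == 1 or word in excluded:
            continue
        counts[word] = counts.get(word, 0) + 1
    return counts

# Made-up sample tokens, standing in for the segmented text
tokens = ["Baoyu", "what", "Baoyu", "we", "Daiyu", "a"]
print(count_names(tokens, not_names))  # {'Baoyu': 2, 'Daiyu': 1}
```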