Preface

In a production environment, a host sometimes suffers disk or other hardware failures that prevent the OS from booting, or the OS itself is damaged beyond repair. At that point the only option is to reinstall the OS. But how do you rejoin the original RAC cluster after the reinstall?

👇👇 The following walkthrough shows, step by step, how to rejoin a node to the RAC cluster after its OS has been reinstalled 👇👇

Test environment preparation

1. RAC deployment

There are already many RAC deployment guides on CSDN, so deployment is skipped here.

☀️ If I find time to write up a RAC deployment later, the link will be added here. Stay tuned ☀️

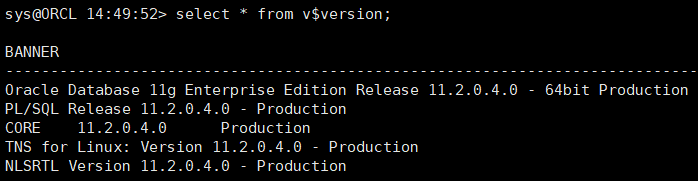

2. Environment parameters

| Host name | OS | Public IP | VIP | Private IP |
|---|---|---|---|---|
| rac1 | rhel7.6 | 192.168.56.5 | 192.168.56.7 | 10.10.1.1 |
| rac2 | rhel7.6 | 192.168.56.6 | 192.168.56.8 | 10.10.1.1 |

3. Simulate an OS failure

To save time, the OS is not actually reinstalled. Instead, the GI and DB software on node 2 is deleted directly to simulate a freshly reinstalled OS 🌜

- Cluster status

[grid@rac1:/home/grid]$ crsctl stat res -t

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.DATA.dg

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.LISTENER.lsnr

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.OCR.dg

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.asm

ONLINE ONLINE rac1 Started

ONLINE ONLINE rac2 Started

ora.gsd

OFFLINE OFFLINE rac1

OFFLINE OFFLINE rac2

ora.net1.network

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.ons

ONLINE ONLINE rac1

ONLINE ONLINE rac2

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE rac2

ora.cvu

1 ONLINE ONLINE rac2

ora.oc4j

1 ONLINE ONLINE rac2

ora.orcl.db

1 ONLINE ONLINE rac1 Open

2 ONLINE ONLINE rac2 Open

ora.rac1.vip

1 ONLINE ONLINE rac1

ora.rac2.vip

1 ONLINE ONLINE rac2

ora.scan1.vip

1 ONLINE ONLINE rac2

- Uninstall GI and DB of node 2

[root@rac2:/root]$ rm -rf /etc/oracle

[root@rac2:/root]$ rm -rf /etc/ora*

[root@rac2:/root]$ rm -rf /u01

[root@rac2:/root]$ rm -rf /tmp/CVU*

[root@rac2:/root]$ rm -rf /tmp/.oracle

[root@rac2:/root]$ rm -rf /var/tmp/.oracle

[root@rac2:/root]$ rm -f /etc/init.d/init.ohasd

[root@rac2:/root]$ rm -f /etc/systemd/system/oracle-ohasd.service

[root@rac2:/root]$ rm -rf /etc/init.d/ohasd

- Confirm the cluster status again

[grid@rac1:/home/grid]$ crsctl stat res -t

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.DATA.dg

ONLINE ONLINE rac1

ora.LISTENER.lsnr

ONLINE ONLINE rac1

ora.OCR.dg

ONLINE ONLINE rac1

ora.asm

ONLINE ONLINE rac1 Started

ora.gsd

OFFLINE OFFLINE rac1

ora.net1.network

ONLINE ONLINE rac1

ora.ons

ONLINE ONLINE rac1

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE rac1

ora.cvu

1 ONLINE ONLINE rac1

ora.oc4j

1 ONLINE ONLINE rac1

ora.orcl.db

1 ONLINE ONLINE rac1 Open

2 ONLINE OFFLINE

ora.rac1.vip

1 ONLINE ONLINE rac1

ora.rac2.vip

1 ONLINE INTERMEDIATE rac1 FAILED OVER

ora.scan1.vip

1 ONLINE ONLINE rac1

- Confirm the node 2 environment

[root@rac2:/]$ ll

total 28

drwxr-xr-x. 2 oracle oinstall 6 Sep 25 19:32 backup

lrwxrwxrwx. 1 root root 7 Sep 24 15:31 bin -> usr/bin

dr-xr-xr-x. 4 root root 4096 Sep 25 20:47 boot

drwxr-xr-x 20 root root 3640 Sep 26 15:20 dev

drwxr-xr-x. 144 root root 8192 Jan 14 2022 etc

drwxr-xr-x. 5 root root 46 Sep 25 19:32 home

lrwxrwxrwx. 1 root root 7 Sep 24 15:31 lib -> usr/lib

lrwxrwxrwx. 1 root root 9 Sep 24 15:31 lib64 -> usr/lib64

drwxr-xr-x. 2 root root 6 Dec 15 2017 media

drwxr-xr-x. 2 root root 6 Dec 15 2017 mnt

drwxr-xr-x. 4 root root 32 Sep 25 21:26 opt

dr-xr-xr-x 178 root root 0 Jan 14 2022 proc

dr-xr-x---. 15 root root 4096 Sep 26 15:20 root

drwxr-xr-x 37 root root 1140 Sep 26 15:20 run

lrwxrwxrwx. 1 root root 8 Sep 24 15:31 sbin -> usr/sbin

drwxr-xr-x. 3 root root 4096 Sep 25 22:28 soft

drwxr-xr-x. 2 root root 6 Dec 15 2017 srv

dr-xr-xr-x 13 root root 0 Sep 26 15:40 sys

drwxrwxrwt. 13 root root 4096 Sep 26 15:40 tmp

drwxr----T 4 root root 32 Sep 25 21:59 user_root

drwxr-xr-x. 13 root root 155 Sep 24 15:31 usr

drwxr-xr-x. 20 root root 282 Sep 24 15:48 var

[root@rac2:/]$ ps -ef | grep grid

root 5847 4091 0 15:41 pts/0 00:00:00 grep --color=auto grid

[root@rac2:/]$ ps -ef | grep asm

root 5852 4091 0 15:41 pts/0 00:00:00 grep --color=auto asm

[root@rac2:/]$ ps -ef | grep oracle

root 5856 4091 0 15:41 pts/0 00:00:00 grep --color=auto oracle

# There is no /u01 directory under /, and no grid or oracle user processes are running
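The manual check above can also be scripted. The following is only a minimal sketch: it looks for a handful of the paths that were deleted earlier and prints any that still exist. The function name `list_oracle_leftovers` and its optional root-prefix argument are hypothetical and exist only to make the check easy to try against a scratch directory.

```shell
#!/bin/sh
# Print every leftover Oracle path found under an optional root prefix.
# With no argument the real filesystem root is checked.
# The path list is taken from the rm commands used in this experiment;
# it is an assumption that these are the paths that matter on your host.
list_oracle_leftovers() {
    root="${1:-}"
    for p in /u01 /etc/oracle /tmp/.oracle /var/tmp/.oracle /etc/init.d/init.ohasd; do
        if [ -e "${root}${p}" ]; then
            echo "$p"
        fi
    done
}
```

Running `list_oracle_leftovers` as root on node 2 should print nothing if the node is as clean as a fresh install would be.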

🔫 The environment is confirmed to be as expected, so the actual procedure can begin

Hands-on procedure

Key point: before the node rejoins the cluster, the prerequisite configuration is the same as the environment configuration for a RAC installation, so it is omitted here. Readers who are unsure can consult the installation documentation.

💥💥 Be sure to check every parameter carefully. Because Eason forgot to create the required directories, various errors were reported in the later steps 😭

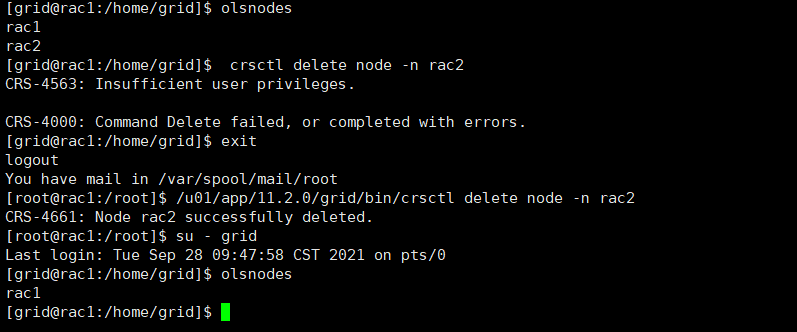

1. Remove the OCR entry of the reinstalled host

[grid@rac1:/home/grid]$ olsnodes

[root@rac1:/root]$ /u01/app/11.2.0/grid/bin/crsctl delete node -n rac2

✒️ To verify that this step succeeded, run olsnodes on a surviving node; the reinstalled host should no longer appear in the list.

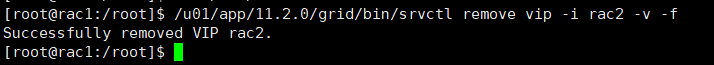
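The olsnodes check can be turned into a small pass/fail test. This is a sketch, not part of the original procedure: the helper `node_absent` is a hypothetical name, and it only assumes that olsnodes prints one node per line with the node name in the first column.

```shell
#!/bin/sh
# Succeed (exit 0) when the given node name does NOT appear in the
# olsnodes-style list read from stdin, fail (exit 1) when it does.
node_absent() {
    node="$1"
    # Compare only the first field, so extra columns (for example from
    # olsnodes -n or -s) do not affect the match.
    ! awk -v n="$node" '$1 == n { found = 1 } END { exit !found }'
}
```

For example, `olsnodes | node_absent rac2 && echo "rac2 removed from OCR"` run as grid on rac1.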

2. Delete the VIP information of the reinstalled host from OCR

[root@rac1:/root]$ /u01/app/11.2.0/grid/bin/srvctl remove vip -i rac2 -v -f

Successfully removed VIP rac2.

⛔ After removing the VIP of node 2, it is best to restart the network service; otherwise the IP address may not be released at the operating system level

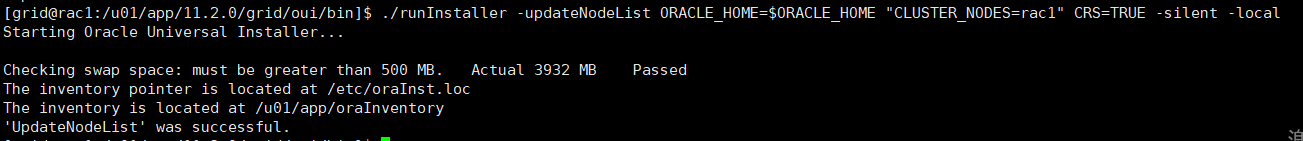

3. Remove the reinstalled host from the inventory of the GI and DB homes

- Update the GI inventory

[grid@rac1:/u01/app/11.2.0/grid/oui/bin]$ ./runInstaller -updateNodeList ORACLE_HOME=$ORACLE_HOME "CLUSTER_NODES=rac1" CRS=TRUE -silent -local

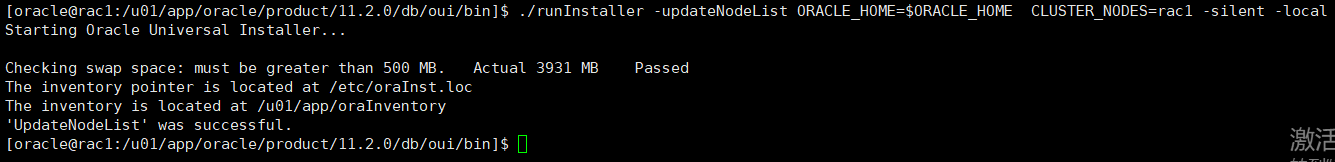

- Update the DB inventory

[oracle@rac1:/u01/app/oracle/product/11.2.0/db/oui/bin]$ ./runInstaller -updateNodeList ORACLE_HOME=$ORACLE_HOME CLUSTER_NODES=rac1 -silent -local

📢 Note: CLUSTER_NODES here is the list of remaining nodes

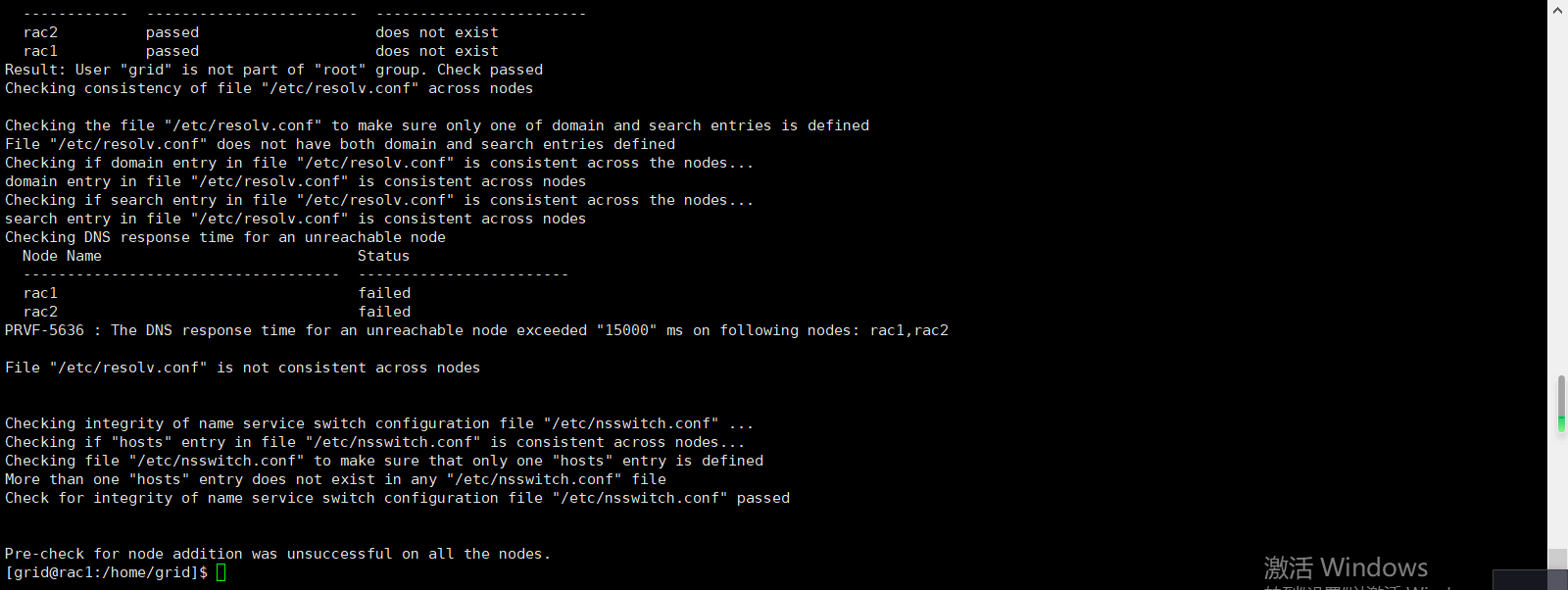

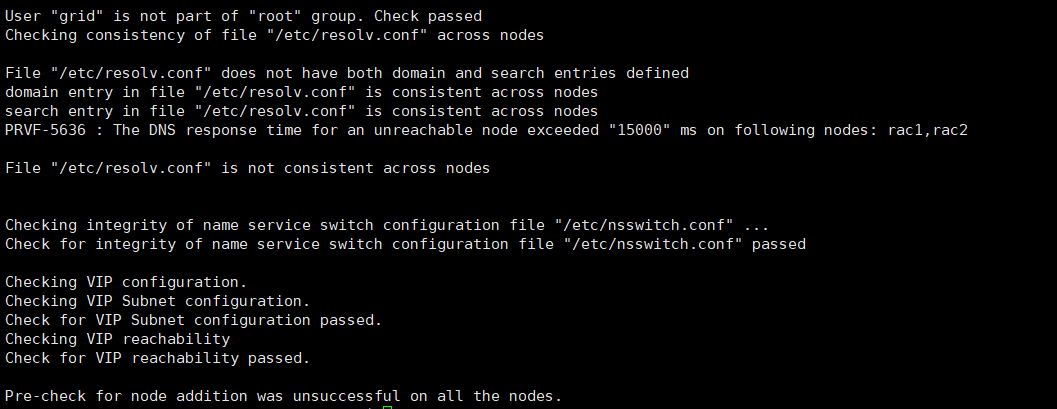
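In a two-node cluster the list of remaining nodes is just rac1, but with more nodes it is easy to get wrong. The sketch below builds the value from a node list on stdin. The function name `remaining_nodes` is hypothetical, and the comma-separated form is an assumption based on how CLUSTER_NODES is usually written for multiple nodes.

```shell
#!/bin/sh
# Read node names from stdin (one per line, name in the first column)
# and print them as a comma-separated list, leaving out the node that
# is being removed from the inventory.
remaining_nodes() {
    removed="$1"
    awk -v r="$removed" '
        NF && $1 != r { printf "%s%s", sep, $1; sep = "," }
        END { print "" }
    '
}
```

For example, `printf 'rac1\nrac2\nrac3\n' | remaining_nodes rac2` prints `rac1,rac3`, which could then be passed as `"CLUSTER_NODES=rac1,rac3"`.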

4. CVU check

[grid@rac1:/home/grid]$ /u01/app/11.2.0/grid/bin/cluvfy stage -pre nodeadd -n rac2 -verbose

Review the verification output. Individual failures, such as the resolv.conf name resolution configuration check, can be ignored.

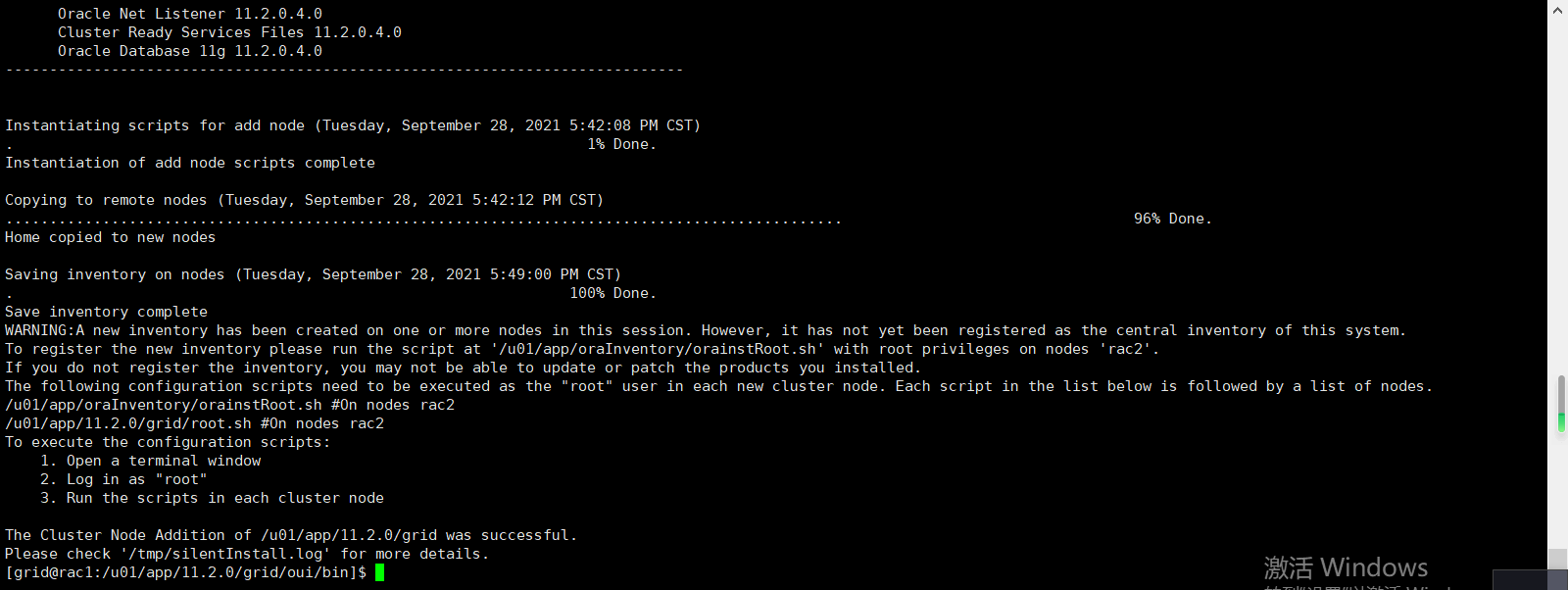
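The verbose output is long, so it can help to filter it down to the failures that are not on your ignore list. The following is a rough sketch: `unexpected_failures` is a hypothetical helper, and it simply assumes that failed checks are reported on lines containing the word "failed", which may not cover every message cluvfy prints.

```shell
#!/bin/sh
# Read cluvfy output from stdin and print the lines that mention
# "failed", except those matching the extended regex given as $1
# (the failures you have decided are harmless).
unexpected_failures() {
    ignore="$1"
    # "|| true" keeps the exit status at 0 when nothing is left to print.
    grep -i 'failed' | grep -Eiv -- "$ignore" || true
}
```

For example, save the cluvfy output to a file of your choice and run `unexpected_failures 'resolv\.conf' < that_file`; anything still printed deserves a closer look before continuing.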

5. Run addNode.sh for GI on node 1

[grid@rac1:/u01/app/11.2.0/grid/oui/bin]$ export IGNORE_PREADDNODE_CHECKS=Y

[grid@rac1:/u01/app/11.2.0/grid/oui/bin]$ ./addNode.sh -silent "CLUSTER_NEW_NODES={rac2}" "CLUSTER_NEW_VIRTUAL_HOSTNAMES={rac2-vip}"

👑 If the failed pre-checks are not ignored, adding the node stops with an error; the verification is the same as the one performed when installing RAC 👑

💎 With the pre-check failures ignored, the node was added successfully

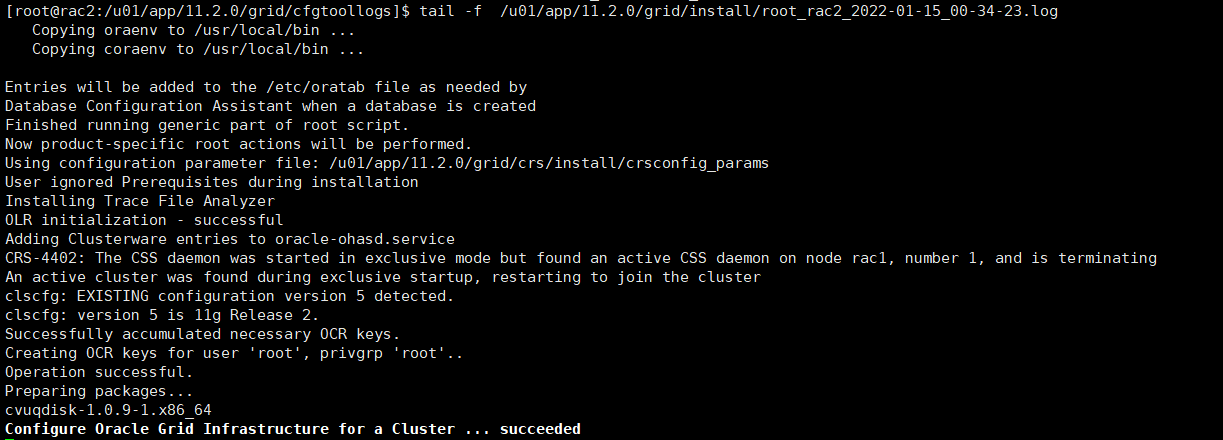

6. Run the root scripts on node 2 to start the CRS stack

[root@rac2:/root]$ /u01/app/oraInventory/orainstRoot.sh

[root@rac2:/root]$ /u01/app/11.2.0/grid/root.sh

- Check cluster status

[grid@rac2:/home/grid]$ crsctl stat res -t

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.DATA.dg

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.LISTENER.lsnr

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.OCR.dg

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.asm

ONLINE ONLINE rac1 Started

ONLINE ONLINE rac2 Started

ora.gsd

OFFLINE OFFLINE rac1

OFFLINE OFFLINE rac2

ora.net1.network

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.ons

ONLINE ONLINE rac1

ONLINE ONLINE rac2

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE rac1

ora.cvu

1 ONLINE ONLINE rac1

ora.oc4j

1 ONLINE ONLINE rac1

ora.orcl.db

1 ONLINE ONLINE rac1 Open

2 ONLINE OFFLINE

ora.rac1.vip

1 ONLINE ONLINE rac1

ora.rac2.vip

1 ONLINE ONLINE rac2

ora.scan1.vip

1 ONLINE ONLINE rac1

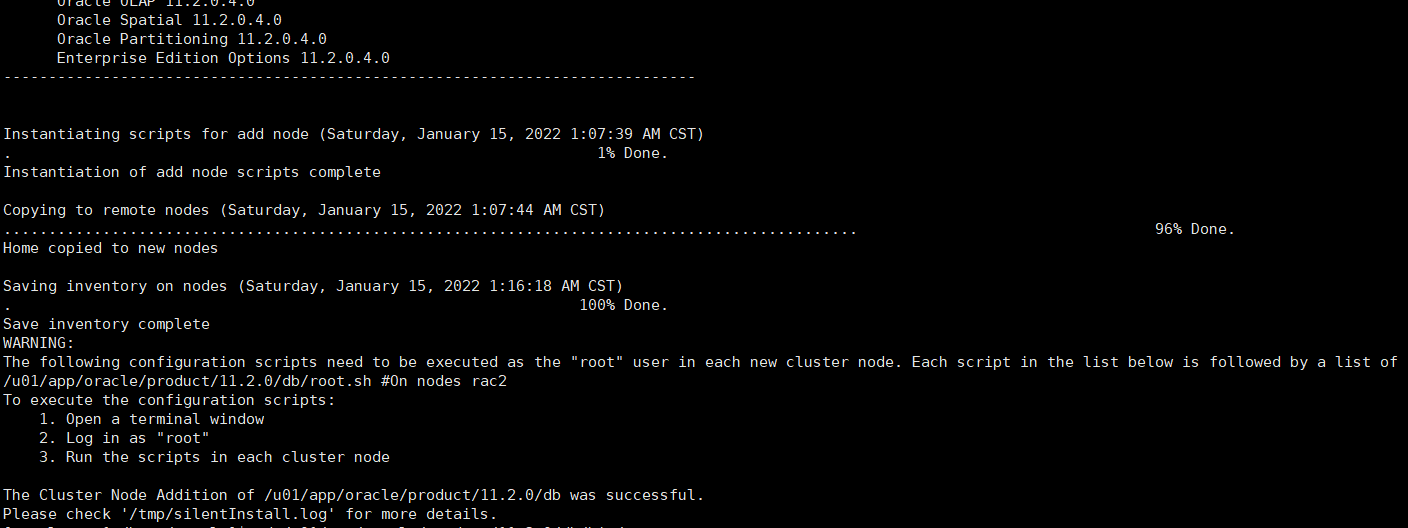
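Instead of reading the whole table, a single resource can be checked with `crsctl stat res <name>`, which prints KEY=VALUE lines such as `STATE=ONLINE on rac2`. The helper below is a small sketch that extracts the STATE value; the name `resource_state` is hypothetical.

```shell
#!/bin/sh
# Print the STATE value from "crsctl stat res <name>" style output
# (KEY=VALUE lines) read from stdin.
resource_state() {
    awk -F= '$1 == "STATE" { print $2 }'
}
```

For example, `crsctl stat res ora.rac2.vip | resource_state` should report the VIP as online on rac2 at this point, while the same check on ora.orcl.db will still show the second instance as offline until the DB home has been added.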

7. Run addNode.sh for the DB home on node 1

[oracle@rac1:/home/oracle]$ /u01/app/oracle/product/11.2.0/db/oui/bin/addNode.sh -silent "CLUSTER_NEW_NODES={rac2}"

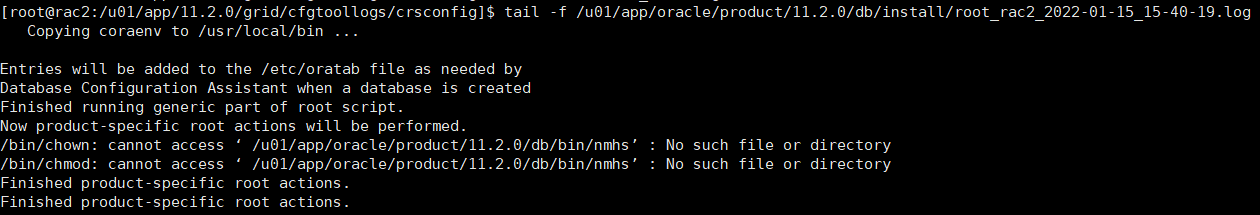

- Run the root.sh script

[root@rac2:/dev]$ /u01/app/oracle/product/11.2.0/db/root.sh

📛 With 11g on RHEL 7, root.sh reports here that the nmhs file does not exist. You can copy it manually from node 1 and fix its permissions

8. Start node 2 instance

Remember what Eason cleaned up earlier when removing the old node's information ❓

Only the node, VIP and inventory entries, right ❓

🚀 So the instance information for node 2 still exists in the cluster and in the database. What next? Just start it 🚀

❤️ Not so fast: the pfile has to be fixed before starting ❤️

addNode.sh copied the DB home from node 1, so the pfile on node 2 still carries the instance 1 name and has to be renamed first:

[oracle@rac2:/u01/app/oracle/product/11.2.0/db/dbs]$ mv initorcl1.ora initorcl2.ora

[grid@rac1:/u01/app/oracle/product/11.2.0/db]$ srvctl start database -d orcl
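The rename can be made safe to repeat with a small guard. This is only a sketch: `rename_pfile` is a hypothetical helper, and the dbs directory and instance names are passed in rather than detected.

```shell
#!/bin/sh
# Rename init<old_sid>.ora to init<new_sid>.ora inside the given dbs
# directory. Does nothing if the target already exists, and fails if
# neither file is present.
rename_pfile() {
    dbs_dir="$1"
    old="$dbs_dir/init$2.ora"
    new="$dbs_dir/init$3.ora"
    if [ -e "$new" ]; then
        return 0
    fi
    if [ -e "$old" ]; then
        mv "$old" "$new"
    else
        echo "no pfile found for $2 or $3 in $dbs_dir" >&2
        return 1
    fi
}
```

On node 2 this would be called as the oracle user, for example `rename_pfile /u01/app/oracle/product/11.2.0/db/dbs orcl1 orcl2`.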

- Cluster status

[grid@rac1:/u01/app/oracle/product/11.2.0/db]$ crsctl stat res -t

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.DATA.dg

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.LISTENER.lsnr

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.OCR.dg

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.asm

ONLINE ONLINE rac1 Started

ONLINE ONLINE rac2 Started

ora.gsd

OFFLINE OFFLINE rac1

OFFLINE OFFLINE rac2

ora.net1.network

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.ons

ONLINE ONLINE rac1

ONLINE ONLINE rac2

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE rac1

ora.cvu

1 ONLINE ONLINE rac1

ora.oc4j

1 ONLINE ONLINE rac1

ora.orcl.db

1 ONLINE ONLINE rac1 Open

2 ONLINE ONLINE rac2 Open

ora.rac1.vip

1 ONLINE ONLINE rac1

ora.rac2.vip

1 ONLINE ONLINE rac2

ora.scan1.vip

1 ONLINE ONLINE rac1

OK, the reinstalled node has rejoined the cluster successfully.