This article describes how to wrap (encapsulate) the official video playback plugin:

https://github.com/flutter/plugins/tree/master/packages/video_player/video_player

In day-to-day development, video requirements are inevitable, and as Flutter becomes more widely used, video features are increasingly requested there as well. If you use the official video player plugin as-is, you will find that it needs further wrapping in many places.

1, Integrated video playback

First, our company's Android app already uses ijkplayer for video playback, whereas the official plugin uses ExoPlayer. During integration we therefore modified the VideoPlayerPlugin class to replace the ExoPlayer-related code with ijkplayer. This avoids the increase in package size that adding ExoPlayer would bring, and it lets us reuse our existing native code.

Second, we need to understand how the official video plugin works, which can be summed up in one word: Texture.

The Texture class is defined on the Flutter side as follows:

    class Texture extends LeafRenderObjectWidget {
      const Texture({
        Key key,
        @required this.textureId,
      })  : assert(textureId != null),
            super(key: key);

      /// The identity of the backend texture (field omitted in the excerpt).
      final int textureId;
    }

So every Texture requires a textureId, and this is the key to video playback. To see how the textureId is generated on the native side, look at the official plugin's source code:

    TextureRegistry textures = registrar.textures();
    TextureRegistry.SurfaceTextureEntry textureEntry = textures.createSurfaceTexture();
    textureId = textureEntry.id();

Question 1: when is this textureId generated?

When we initialize a video on the Flutter side, the plugin's create method is called:

    final Map<dynamic, dynamic> response = await _channel.invokeMethod(
      'create',
      dataSourceDescription,
    );
    _textureId = response['textureId'];

Question 2: once we have the textureId, how is the data transferred?

When the Flutter side calls create, the native side generates a textureId and registers a new EventChannel. Playback events such as initialized, completed, bufferingUpdate, bufferingStart and bufferingEnd are then sent back through this channel to the corresponding video Widget on the Flutter side, which carries out the follow-up logic.

How to generate a new EventChannel on the native side:

    TextureRegistry.SurfaceTextureEntry textureEntry = textures.createSurfaceTexture();
    String eventChannelName = "flutter.io/videoPlayer/videoEvents" + textureEntry.id();
    EventChannel eventChannel = new EventChannel(registrar.messenger(), eventChannelName);

How to listen on the Flutter side:

    // Excerpt from the controller's initialization method. The unmatched
    // closing brace after the return statement ends that method.
      void eventListener(dynamic event) {
        final Map<dynamic, dynamic> map = event;
        switch (map['event']) {
          case 'initialized':
            value = value.copyWith(
              duration: Duration(milliseconds: map['duration']),
              size: Size(map['width']?.toDouble() ?? 0.0,
                  map['height']?.toDouble() ?? 0.0),
            );
            initializingCompleter.complete(null);
            _applyLooping();
            _applyVolume();
            _applyPlayPause();
            break;
          // ......
        }
      }

      void errorListener(Object obj) {
        final PlatformException e = obj;
        LogUtil.d("----------- ErrorListener Code = ${e.code}");
        value = VideoPlayerValue.erroneous(e.code);
        _timer?.cancel();
      }

      _eventSubscription = _eventChannelFor(_textureId)
          .receiveBroadcastStream()
          .listen(eventListener, onError: errorListener);
      return initializingCompleter.future;
    }

    EventChannel _eventChannelFor(int textureId) {
      return EventChannel('flutter.io/videoPlayer/videoEvents$textureId');
    }
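
To make the event flow more concrete, here is a minimal plain-Dart sketch of how an 'initialized' event map might be decoded once it arrives on the per-texture channel. The names `VideoInfo`, `eventChannelName` and `decodeInitialized` are hypothetical and are not part of the plugin. Only the channel name prefix and the map keys (`event`, `duration`, `width`, `height`) are taken from the code above. It is written for null-safe Dart and assumes `duration` is always present as an integer number of milliseconds.

```dart
/// Hypothetical holder for the fields carried by an 'initialized' event.
class VideoInfo {
  const VideoInfo(this.duration, this.width, this.height);

  final Duration duration;
  final double width;
  final double height;
}

/// Builds the per-texture channel name, mirroring _eventChannelFor.
String eventChannelName(int textureId) =>
    'flutter.io/videoPlayer/videoEvents$textureId';

/// Decodes an 'initialized' event map; returns null for any other event.
/// Missing width/height fall back to 0.0, as the original listener does.
VideoInfo? decodeInitialized(Map<dynamic, dynamic> map) {
  if (map['event'] != 'initialized') return null;
  final num? width = map['width'] as num?;
  final num? height = map['height'] as num?;
  return VideoInfo(
    // Assumption: 'duration' is an int in milliseconds.
    Duration(milliseconds: map['duration'] as int),
    width?.toDouble() ?? 0.0,
    height?.toDouble() ?? 0.0,
  );
}
```

Keeping the decoding in a pure function like this makes it possible to test the event handling without a platform channel; the real listener would then only apply the decoded value to the controller state.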

Of course, operations that the user triggers, such as pause, play and fast-forward, follow a slightly different path. They go through the same plugin channel that the create method uses (that is, the channel named "flutter.io/videoPlayer"):

    // For example, how play / pause is invoked
    final MethodChannel _channel = const MethodChannel('flutter.io/videoPlayer');
    _channel.invokeMethod('play', <String, dynamic>{'textureId': _textureId});

At this point, the video playback function of Flutter can be realized.

2, Implementation of video list interface

As with an ordinary list screen, a ListView can serve as the scrolling container in Flutter. A video list differs in two ways, though: the playing item must be paused when it is no longer visible, and the previously playing video must be paused when the user taps another one.

First, pausing the previously playing video when another video is tapped:

This case is the easier one to handle. My approach is to register a play callback for each video Widget. When another video is tapped to play, the callbacks are traversed, and any video that finds itself currently playing pauses:

    /// Pauses the previously playing video when another one is tapped to play
    playCallback = () {
      if (controller.value.isPlaying) {
        setState(() {
          controller.pause();
        });
      }
    };
    VideoPlayerController.playCallbacks.add(playCallback);
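
The callback-registry idea can be tried out in plain Dart without any widgets. The sketch below is only an illustration under stated assumptions: `FakePlayer` and `PlayRegistry` are hypothetical names standing in for the real controller and for the static `playCallbacks` list, and the real code would call `setState` as shown above.

```dart
/// Hypothetical stand-in for a video controller; it only tracks play state.
class FakePlayer {
  FakePlayer(this.name);

  final String name;
  bool isPlaying = false;

  void pause() {
    isPlaying = false;
  }
}

/// Hypothetical registry that plays the role of the playCallbacks list.
class PlayRegistry {
  final List<void Function()> _callbacks = [];

  /// Registers [player] and returns a function that starts it exclusively.
  void Function() register(FakePlayer player) {
    // Pause callback: only acts if this player is currently playing.
    _callbacks.add(() {
      if (player.isPlaying) player.pause();
    });
    return () {
      // Run every pause callback first, then start the requested player.
      for (final callback in _callbacks) {
        callback();
      }
      player.isPlaying = true;
    };
  }
}
```

Calling the function returned for one player leaves every other registered player paused. In a real widget the callback would presumably also have to be removed from the list when the widget is disposed, so that a disposed controller is never touched; the article does not show that step.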

Second, an item must stop playing when it scrolls out of view. The idea is to obtain the Rect (region) of the scroll view, and then pause when the bottom of the video's Rect is above the top of the scroll view, or the top of the video's Rect is below the bottom of the scroll view.

Question 1: how to get the Rect of a Widget?

    /// Returns the Rect occupied by the widget behind [currentContext],
    /// or null if it cannot be determined
    static Rect getRectFromKey(BuildContext currentContext) {
      var object = currentContext?.findRenderObject();
      var translation = object?.getTransformTo(null)?.getTranslation();
      var size = object?.semanticBounds?.size;
      if (translation != null && size != null) {
        return Rect.fromLTWH(translation.x, translation.y, size.width, size.height);
      } else {
        return null;
      }
    }

Question 2: how do we detect that an item has scrolled off the screen?

Register a listener on behalf of the video Widgets, and invoke their callbacks while scrolling:

    /// Notifies the video Widgets whenever the ListView scrolls
    scrollController = ScrollController();
    scrollController.addListener(() {
      if (videoScrollController.scrollOffsetCallbacks.isNotEmpty) {
        for (ScrollOffsetCallback callback in videoScrollController.scrollOffsetCallbacks) {
          callback();
        }
      }
    });

When the video Widget receives a scroll callback:

    scrollOffsetCallback = () {
      itemRect = VideoScrollController.getRectFromKey(videoBuildContext);
      /// Height of status bar + title bar (approximate)
      int toolBarAndStatusBarHeight = 44 + 25;
      if (itemRect != null && videoScrollController.rect != null &&
          (itemRect.top > videoScrollController.rect.bottom ||
              itemRect.bottom - toolBarAndStatusBarHeight < videoScrollController.rect.top)) {
        if (controller.value.isPlaying) {
          setState(() {
            LogUtil.d("=============== Currently playing, need to pause playing when being removed from the screen ======");
            controller.pause();
          });
        }
      }
    };
    videoScrollController?.scrollOffsetCallbacks?.add(scrollOffsetCallback);
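
The off-screen test inside that callback can be isolated as a pure function, which makes the two conditions easier to check. This is a minimal sketch, not the article's code: `Span` and `isOffScreen` are hypothetical names, `Span` stands in for the top and bottom of Flutter's `Rect`, and the default inset of 69 is simply the article's approximate 44 + 25.

```dart
/// Minimal vertical extent; a stand-in for the top/bottom of Flutter's Rect.
class Span {
  const Span(this.top, this.bottom);

  final double top;
  final double bottom;
}

/// Returns true when [item] lies entirely outside [viewport].
/// [topInset] is the toolbar plus status bar height, which the article
/// approximates as 44 + 25.
bool isOffScreen(Span item, Span viewport, {double topInset = 69}) {
  // The item starts below the bottom edge of the scroll view.
  final bool belowViewport = item.top > viewport.bottom;
  // The item ends above the top edge, after allowing for the top inset.
  final bool aboveViewport = item.bottom - topInset < viewport.top;
  return belowViewport || aboveViewport;
}
```

With a viewport of `Span(0, 600)`, an item at `Span(100, 300)` counts as visible, while items at `Span(700, 900)` and `Span(-200, 50)` count as off screen and would be paused.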

At this point, the video list screen handles pausing and playing correctly in response to scrolling and tapping.

3, Full screen switching

Full screen is a very common requirement for video, so it is indispensable on the Flutter side as well. The full-screen implementation follows the approach of the open source library https://github.com/brianegan/chewie.

The main idea is to exploit the fact that each texture's textureId is unique, which differs from the native approach of detaching the View and moving it. Following the library's overall code, the implementation is fairly simple and causes little trouble:

    /// Exits full screen
    _popFullScreenWidget() {
      Navigator.of(context).pop();
    }

    /// Switches to full screen
    _pushFullScreenWidget() async {
      final TransitionRoute<Null> route = new PageRouteBuilder<Null>(
        settings: new RouteSettings(isInitialRoute: false),
        pageBuilder: _fullScreenRoutePageBuilder,
      );

      SystemChrome.setEnabledSystemUIOverlays([]);
      SystemChrome.setPreferredOrientations([
        DeviceOrientation.landscapeLeft,
        DeviceOrientation.landscapeRight,
      ]);

      await Navigator.of(context).push(route);

      SystemChrome.setEnabledSystemUIOverlays(SystemUiOverlay.values);
      SystemChrome.setPreferredOrientations([
        DeviceOrientation.portraitUp,
        DeviceOrientation.portraitDown,
        DeviceOrientation.landscapeLeft,
        DeviceOrientation.landscapeRight,
      ]);
    }

The above covers the common video requirements in Flutter development. For more specific or unusual video requirements, this approach will need further refinement.

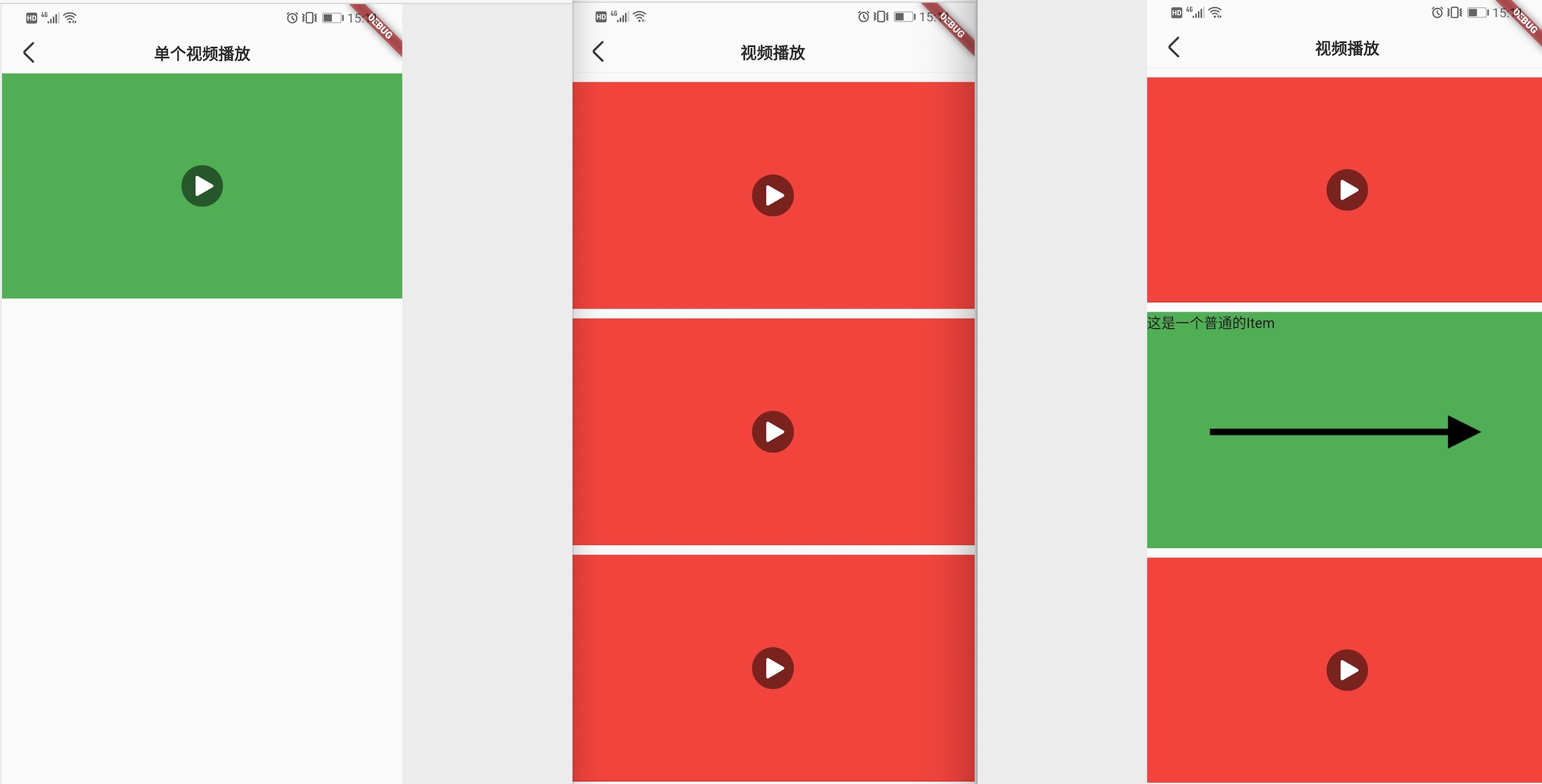

Attach several renderings of demo: