I suggest you take a look at the source code of ConcurrentHashMap in Java 7; that version is a textbook of Java multithreaded programming. In the Java 7 source, the author is very cautious about pessimistic locks: most of them are replaced by spin locks plus volatile, which give the same semantics. Even where a lock cannot be avoided, the author shrinks its critical section through various techniques.

A spin lock is a better choice when the critical section is small, because it avoids the context switches caused by blocking threads. But it is still a lock in essence: while spinning, only one thread can be inside the critical section, and the other threads just spin and consume CPU time slices.
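Here is a minimal sketch of the idea. It assumes a hand-written lock built on java.util.concurrent.atomic.AtomicBoolean, and the class names SpinLock and SpinLockDemo are hypothetical, not part of ConcurrentHashMap. Instead of blocking, waiting threads retry a CAS in a loop. That is exactly the trade-off described above: no context switch, but CPU time is burned while waiting.

```java
import java.util.concurrent.atomic.AtomicBoolean;

// Hypothetical illustration class (not part of the JDK): a minimal CAS-based spin lock.
final class SpinLock {
    private final AtomicBoolean locked = new AtomicBoolean(false);

    void lock() {
        // Keep retrying the CAS until this thread flips false -> true.
        // Waiting threads never block, so they avoid a context switch but burn CPU.
        while (!locked.compareAndSet(false, true)) {
            // busy-wait
        }
    }

    void unlock() {
        // Only the owner calls unlock, so a plain volatile write releases the lock.
        locked.set(false);
    }
}

final class SpinLockDemo {
    private static int counter; // guarded by LOCK
    private static final SpinLock LOCK = new SpinLock();

    // Starts several threads that each increment a shared counter inside a tiny critical section.
    static int run(int threads, int incrementsPerThread) {
        counter = 0;
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int k = 0; k < incrementsPerThread; k++) {
                    LOCK.lock();
                    try {
                        counter++;
                    } finally {
                        LOCK.unlock();
                    }
                }
            });
            workers[t].start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                throw new IllegalStateException(e);
            }
        }
        return counter;
    }
}
```

For example, SpinLockDemo.run(2, 5000) starts two threads that each increment the counter 5000 times under the lock, so it returns 10000. A lock like this only pays off when the critical section is as short as counter++. With long critical sections, the spinning threads waste CPU.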

The Java 8 implementation of ConcurrentHashMap avoids the limitations of spin locks and achieves higher concurrency through some ingenious design techniques. If the Java 7 source teaches us how to turn pessimistic locks into spin locks, in Java 8 we can even see the methods and techniques for turning spin locks into lock-free code.

Read the book thin

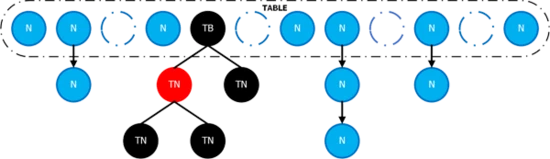

Before starting, you should have this picture in mind. Readers familiar with HashMap will recognize it: in Java 8, ConcurrentHashMap and HashMap share basically the same overall data structure.

ConcurrentHashMap in Java 7 uses many programming techniques to improve performance, but the Segment design still leaves a lot of room for improvement. The Java 7 design can be improved in the following aspects:

1. While a Segment is being resized, threads that want to write to that Segment must block and wait.

2. A read on ConcurrentHashMap has to hash twice (to locate first the Segment and then the bucket inside it), which is an extra cost in read-heavy, write-light workloads.

3. Although size() first tries to count without locking, if another thread writes during that attempt, the thread calling size() locks the whole ConcurrentHashMap. This is the only global lock in ConcurrentHashMap, and it remains a performance hazard for such a low-level component.

4. In extreme cases (for example, when the client supplies a poorly performing hash function), the complexity of get() degrades to O(n).

For items 1 and 2, Java 8 abandons Segment and reduces the granularity of the pessimistic lock to a single bucket, so get no longer needs to hash twice. The design of size() is the biggest highlight of the Java 8 version and is described in detail later. Red-black trees, which address item 4, are again not covered in depth here. The following sections dig into the details and "read the book thick". They cover initialization, the put method, resizing (the transfer method) and the size() method. Other modules, such as the hash function, have changed little, so we won't go into them.

Prerequisites

ForwardingNode

static final class ForwardingNode<K,V> extends Node<K,V> {

final Node<K,V>[] nextTable;

ForwardingNode(Node<K,V>[] tab) {

// MOVED = -1: the hash value of a ForwardingNode is -1

super(MOVED, null, null, null);

this.nextTable = tab;

}

}

Besides ordinary Nodes and TreeNodes, ConcurrentHashMap introduces a new node type, ForwardingNode. Only its constructor is shown here. ForwardingNode serves two purposes:

- During a resize, it marks a bucket whose contents have already been copied to the new bucket array.

- If get is called during a resize, the ForwardingNode forwards the request to the new bucket array, so get never blocks. This is why the ForwardingNode stores the new bucket array nextTable when it is constructed.
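The following hypothetical, single-threaded model makes the forwarding idea concrete. SimpleNode, SimpleForwardingNode and ForwardingLookup are illustrative names, not JDK classes, and the model has no trees and no locks. In the JDK this retry logic lives in ForwardingNode's find method. The sketch only shows how a lookup that hits the MOVED marker continues in nextTable instead of waiting.

```java
// Hypothetical, single-threaded model of forwarding (not the JDK classes):
// no trees, no locking, and a simplified hash.
class SimpleNode {
    final int hash;
    final String key;
    final String val;
    final SimpleNode next;

    SimpleNode(int hash, String key, String val, SimpleNode next) {
        this.hash = hash;
        this.key = key;
        this.val = val;
        this.next = next;
    }
}

final class SimpleForwardingNode extends SimpleNode {
    static final int MOVED = -1;  // same marker value as the JDK's MOVED
    final SimpleNode[] nextTable; // the new, larger bucket array

    SimpleForwardingNode(SimpleNode[] nextTable) {
        super(MOVED, null, null, null);
        this.nextTable = nextTable;
    }
}

final class ForwardingLookup {
    static String get(SimpleNode[] tab, String key) {
        int h = key.hashCode() & 0x7fffffff; // keep ordinary hashes non-negative
        while (true) {
            SimpleNode e = tab[(tab.length - 1) & h];
            if (e == null)
                return null;
            if (e.hash == SimpleForwardingNode.MOVED) {
                // Bucket already migrated: repeat the lookup in the new array, without blocking.
                tab = ((SimpleForwardingNode) e).nextTable;
                continue;
            }
            for (; e != null; e = e.next)
                if (e.hash == h && key.equals(e.key))
                    return e.val;
            return null;
        }
    }
}
```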

UNSAFE.compareAndSwap***

This family of methods is how the Java 8 ConcurrentHashMap implements CAS. Taking int as an example, the method is declared as follows:

/**

* Atomically update Java variable to <tt>x</tt> if it is currently

* holding <tt>expected</tt>.

* @return <tt>true</tt> if successful

*/

public final native boolean compareAndSwapInt(Object o, long offset,

int expected,

int x);

The semantics are:

If the value stored at offset bytes from the start of object o equals expected, atomically set it to x and return true to indicate that the update succeeded. Otherwise, return false to indicate that the CAS failed.
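Unsafe is an internal API, so this sketch uses the public java.util.concurrent.atomic.AtomicInteger instead. Its compareAndSet(expected, x) has the same contract as compareAndSwapInt. The class CasDemo is hypothetical. The second method shows the usual CAS retry loop: read, compute, try to swap, and start over if another thread got there first.

```java
import java.util.concurrent.atomic.AtomicInteger;

final class CasDemo {
    // Same contract as compareAndSwapInt: write x only if the current value equals expected.
    static boolean tryUpdate(AtomicInteger v, int expected, int x) {
        return v.compareAndSet(expected, x);
    }

    // Typical CAS retry loop: on failure another thread changed the value, so reread and retry.
    static int addAndGet(AtomicInteger v, int delta) {
        for (;;) {
            int current = v.get();
            int next = current + delta;
            if (v.compareAndSet(current, next))
                return next;
        }
    }
}
```

For example, starting from 5, tryUpdate(a, 5, 7) succeeds. A second call, tryUpdate(a, 5, 9), fails because the value is now 7. addAndGet(a, 3) then returns 10.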

initialization

public ConcurrentHashMap(int initialCapacity, float loadFactor, int concurrencyLevel) {

if (!(loadFactor > 0.0f) || initialCapacity < 0 || concurrencyLevel <= 0) // Check parameters

throw new IllegalArgumentException();

if (initialCapacity < concurrencyLevel)

initialCapacity = concurrencyLevel;

long size = (long)(1.0 + (long)initialCapacity / loadFactor);

int cap = (size >= (long)MAXIMUM_CAPACITY) ?

MAXIMUM_CAPACITY : tableSizeFor((int)size); // tableSizeFor returns the smallest power of two >= size (the same algorithm as HashMap in JDK 1.8)

this.sizeCtl = cap;

}

Even this, the most complex constructor, is fairly simple. Only two points need attention here:

- In Java 7, concurrencyLevel is the length of the Segment array. Java 8 drops Segment, so concurrencyLevel is kept mainly for compatibility. In this constructor its only effect is to act as a lower bound for initialCapacity.

- sizeCtl appears here for the first time. Before the table is created it holds the initial capacity, and afterwards it holds the resize threshold. A value of -1 means the table is being initialized, and any other negative value means a resize is in progress. During a resize, the low 16 bits of sizeCtl hold the number of resizing threads plus one. The high 16 bits hold a resize stamp derived from the bucket array length (the stamp's top bit lands on the sign bit, which is what makes sizeCtl negative).
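The capacity arithmetic can be checked in isolation. The sketch below copies tableSizeFor and MAXIMUM_CAPACITY as they appear in the JDK 8 source. It wraps the constructor's arithmetic in a hypothetical helper, initialSizeCtl, which computes only the value stored in sizeCtl and does not build a map.

```java
final class CapacityDemo {
    static final int MAXIMUM_CAPACITY = 1 << 30; // same value as in the JDK 8 source

    // Smallest power of two >= c, using the JDK 8 bit-smearing trick.
    static int tableSizeFor(int c) {
        int n = c - 1;
        n |= n >>> 1;
        n |= n >>> 2;
        n |= n >>> 4;
        n |= n >>> 8;
        n |= n >>> 16;
        return (n < 0) ? 1 : (n >= MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : n + 1;
    }

    // Hypothetical helper mirroring the arithmetic of the three-argument constructor above.
    static int initialSizeCtl(int initialCapacity, float loadFactor, int concurrencyLevel) {
        if (initialCapacity < concurrencyLevel)   // concurrencyLevel only acts as a lower bound
            initialCapacity = concurrencyLevel;
        long size = (long) (1.0 + (long) initialCapacity / loadFactor);
        return (size >= (long) MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : tableSizeFor((int) size);
    }
}
```

With initialCapacity 16 and loadFactor 0.75f, size is (long)(1.0 + 16 / 0.75f) = 22, and tableSizeFor(22) rounds it up to 32. The constructor treats initialCapacity as the number of elements to hold, so the table is sized to stay below the 0.75 threshold.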

put method

public V put(K key, V value) {

return putVal(key, value, false);

}

The put method forwards the call to the putVal method:

final V putVal(K key, V value, boolean onlyIfAbsent) {

if (key == null || value == null) throw new NullPointerException();

int hash = spread(key.hashCode());

int binCount = 0;

for (Node<K,V>[] tab = table;;) {

Node<K,V> f; int n, i, fh;

// [A] Lazy initialization

if (tab == null || (n = tab.length) == 0)

tab = initTable();

// [B] The current bucket is empty. Update it directly

else if ((f = tabAt(tab, i = (n - 1) & hash)) == null) {

if (casTabAt(tab, i, null,

new Node<K,V>(hash, key, value, null)))

break; // no lock when adding to empty bin

}

// [C] If the first element of the current bucket is a ForwardingNode node, the thread attempts to join the expansion

else if ((fh = f.hash) == MOVED)

tab = helpTransfer(tab, f);

// [D] Otherwise, traverse the linked list or tree in the bucket and insert

else {

// Fold it up temporarily and see it in detail later

}

}

// [F] Reaching this point means the put succeeded, so increment the total element count of the map

addCount(1L, binCount);

return null;

}

The overall structure of the code is fairly clear. I have marked the most important steps with bracketed letters. Even so, the put method touches on many points, which are covered one by one below.

Initialization of bucket array

private final Node<K,V>[] initTable() {

Node<K,V>[] tab; int sc;

while ((tab = table) == null || tab.length == 0) {

if ((sc = sizeCtl) < 0)

// It indicates that there is already a thread initializing, and this thread starts to spin

Thread.yield(); // lost initialization race; just spin

else if (U.compareAndSwapInt(this, SIZECTL, sc, -1)) {

// CAS guarantees that only one thread can go to this branch

try {

if ((tab = table) == null || tab.length == 0) {

int n = (sc > 0) ? sc : DEFAULT_CAPACITY;

@SuppressWarnings("unchecked")

Node<K,V>[] nt = (Node<K,V>[])new Node<?,?>[n];

table = tab = nt;

// sc = n - n/4 = 0.75n

sc = n - (n >>> 2);

}

} finally {

// Restoring sizeCtl to a positive value is equivalent to releasing the lock

sizeCtl = sc;

}

break;

}

}

return tab;

}

How does initialization of the bucket array avoid concurrency problems? The key is a spin lock. When several threads call initTable, CAS ensures that only one of them enters the real initialization branch, while the others spin and wait. Three points in this code deserve attention:

- As mentioned above, the thread that starts initializing the bucket array sets sizeCtl to -1 via CAS. Other threads see this flag and start spinning.

- After initialization, sizeCtl is restored to a positive value equal to 0.75 times the bucket array length. It plays the same role as threshold in HashMap: it is the size at which a resize is triggered.

- The finally block writes sizeCtl without CAS, because the earlier CAS guarantees that only one thread can reach this point.
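The same pattern can be reproduced outside ConcurrentHashMap. In this hypothetical LazyTable, an AtomicInteger plays the role of sizeCtl (the JDK uses a volatile int updated through Unsafe instead). Winning the CAS to -1 acts as taking the lock, losers yield and spin, and the finally block releases the lock with a simple set.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical class modelled on initTable: sizeCtl doubles as a spin lock for lazy initialization.
final class LazyTable {
    static final int DEFAULT_CAPACITY = 16;

    // Before init: requested capacity (0 = default). During init: -1. After init: resize threshold.
    private final AtomicInteger sizeCtl;
    private volatile Object[] table;

    LazyTable(int initialCapacity) {
        this.sizeCtl = new AtomicInteger(initialCapacity);
    }

    Object[] initTable() {
        Object[] tab;
        while ((tab = table) == null) {
            int sc = sizeCtl.get();
            if (sc < 0) {
                Thread.yield();                  // another thread is initializing: spin
            } else if (sizeCtl.compareAndSet(sc, -1)) {
                try {
                    if ((tab = table) == null) { // re-check after winning the CAS
                        int n = (sc > 0) ? sc : DEFAULT_CAPACITY;
                        table = tab = new Object[n];
                        sc = n - (n >>> 2);      // 0.75 * n, the next resize threshold
                    }
                } finally {
                    sizeCtl.set(sc);             // release without CAS: only the CAS winner gets here
                }
                break;
            }
        }
        return tab;
    }

    int sizeCtl() {
        return sizeCtl.get();
    }
}
```

A table created with new LazyTable(0) uses the default length 16 and afterwards reports a sizeCtl of 12, that is, 0.75 times the length. Later calls return the same array without touching the lock.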

Add first element of bucket array

static final <K,V> Node<K,V> tabAt(Node<K,V>[] tab, int i) {

return (Node<K,V>)U.getObjectVolatile(tab, ((long)i << ASHIFT) + ABASE);

}

static final <K,V> boolean casTabAt(Node<K,V>[] tab, int i,

Node<K,V> c, Node<K,V> v) {

return U.compareAndSwapObject(tab, ((long)i << ASHIFT) + ABASE, c, v);

}

The second branch of the put method uses tabAt to check whether the current bucket is empty. If it is, the new node is written with CAS. tabAt reads the latest element of the bucket with a volatile read through the UNSAFE interface, and casTabAt uses CAS so that concurrent writers cannot overwrite each other. If the CAS fails, the loop runs again and another branch handles the bucket.
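Here is a public-API analogue of tabAt and casTabAt. It assumes java.util.concurrent.atomic.AtomicReferenceArray in place of Unsafe, and BucketArray and putIfBucketEmpty are hypothetical names. get(i) is a volatile read of one slot and compareAndSet(i, expected, update) is a CAS on that slot, which is all that branch [B] of putVal needs.

```java
import java.util.concurrent.atomic.AtomicReferenceArray;

// Hypothetical analogue of tabAt/casTabAt built on the public AtomicReferenceArray API.
final class BucketArray<V> {
    private final AtomicReferenceArray<V> slots;

    BucketArray(int n) {
        slots = new AtomicReferenceArray<>(n);
    }

    // Volatile read of slot i (the role of tabAt).
    V tabAt(int i) {
        return slots.get(i);
    }

    // CAS on slot i (the role of casTabAt).
    boolean casTabAt(int i, V expected, V update) {
        return slots.compareAndSet(i, expected, update);
    }

    // Mirrors branch [B] of putVal: install the first element of an empty bucket without locking.
    // Returns false if another thread filled the bucket first.
    boolean putIfBucketEmpty(int i, V value) {
        return tabAt(i) == null && casTabAt(i, null, value);
    }
}
```

If two threads race to fill the same empty bucket, exactly one putIfBucketEmpty call returns true. In putVal, the loser would loop back and end up in the locking branch.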

Determine whether to add new threads for capacity expansion

Here we look at the sizeCtl flag bits in detail. The temporary variable rs used in helpTransfer is the return value of the resizeStamp method:

static final int resizeStamp(int n) {

// RESIZE_STAMP_BITS = 16

return Integer.numberOfLeadingZeros(n) | (1 << (RESIZE_STAMP_BITS - 1));

}

Because the argument n is an int, the return value of Integer.numberOfLeadingZeros(n) lies between 0 and 32. In binary:

- The maximum value of Integer.numberOfLeadingZeros(n) is 32 (when n == 0): 00000000 00000000 00000000 00100000

- The minimum value of Integer.numberOfLeadingZeros(n) is 0 (when n is negative): 00000000 00000000 00000000 00000000

Therefore the return value of resizeStamp lies between 00000000 00000000 10000000 00000000 and 00000000 00000000 10000000 00100000. This range shows that the upper 16 bits of resizeStamp's return value are always 0 and carry no information. ConcurrentHashMap therefore shifts the return value of resizeStamp 16 bits to the left before putting it into sizeCtl. This is why the high 16 bits of sizeCtl carry the size information of the bucket array. Because bit 15 of the stamp is always 1, the shift moves it onto the sign bit, which is what makes sizeCtl negative during a resize. With this analysis, the following long if condition in helpTransfer becomes understandable:

if ((sc >>> RESIZE_STAMP_SHIFT) != rs || sc == rs + 1 ||

sc == rs + MAX_RESIZERS || transferIndex <= 0)

break;

- (sc >>> RESIZE_STAMP_SHIFT) != rs: the stamp does not match. This check ensures that all helping threads resize from the same old bucket array.

- transferIndex <= 0: all copy tasks have already been claimed, so there is nothing left for a new thread to do.

As for the two conditions sc == rs + 1 || sc == rs + MAX_RESIZERS, careful readers will find them hard to understand. They are in fact a JDK bug, fixed in JDK 12; for details see https://bugs.java.com/bugdatabase/view_bug.do?bug_id=JDK-8214427. The two conditions should be written as sc == (rs << RESIZE_STAMP_SHIFT) + 1 || sc == (rs << RESIZE_STAMP_SHIFT) + MAX_RESIZERS, because comparing rs with sc directly is meaningless: rs must be shifted first. Their meaning is:

- sc == (rs << RESIZE_STAMP_SHIFT) + 1: the number of resizing threads is 0, that is, the resize has already finished, so there is no need for new threads to join.

- sc == (rs << RESIZE_STAMP_SHIFT) + MAX_RESIZERS: the maximum number of resizing threads has been reached, so no new threads can join.
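A few lines of code are enough to check both the bit layout and the bug. The constants below are copied from the JDK 8 source, and ResizeStampDemo and its methods are hypothetical helpers. firstResizerSizeCtl reproduces the value (rs << RESIZE_STAMP_SHIFT) + 2 that the first resizing thread writes into sizeCtl in addCount. buggyCheck is the JDK 8 condition, and fixedCheck is the shifted form described above.

```java
// Constants copied from the JDK 8 ConcurrentHashMap source; the helper methods are hypothetical.
final class ResizeStampDemo {
    static final int RESIZE_STAMP_BITS = 16;
    static final int RESIZE_STAMP_SHIFT = 32 - RESIZE_STAMP_BITS;
    static final int MAX_RESIZERS = (1 << (32 - RESIZE_STAMP_BITS)) - 1;

    static int resizeStamp(int n) {
        return Integer.numberOfLeadingZeros(n) | (1 << (RESIZE_STAMP_BITS - 1));
    }

    // sizeCtl as written by the first resizing thread: stamp in the high 16 bits and
    // "number of resizing threads + 1" (= 2) in the low 16 bits. Bit 15 of the stamp
    // becomes the sign bit, so the result is negative.
    static int firstResizerSizeCtl(int n) {
        return (resizeStamp(n) << RESIZE_STAMP_SHIFT) + 2;
    }

    // JDK 8 form: while resizing, sc is negative but rs + 1 and rs + MAX_RESIZERS are positive,
    // so this can never be true.
    static boolean buggyCheck(int sc, int rs) {
        return sc == rs + 1 || sc == rs + MAX_RESIZERS;
    }

    // Form described in JDK-8214427: shift rs into the high 16 bits before comparing.
    static boolean fixedCheck(int sc, int rs) {
        int base = rs << RESIZE_STAMP_SHIFT;
        return sc == base + 1 || sc == base + MAX_RESIZERS;
    }
}
```

For a 16-bucket table, resizeStamp(16) is 27 | 32768 = 32795. When the last resizing thread has decremented sizeCtl to (rs << 16) + 1, fixedCheck reports that the resize is over. buggyCheck, by contrast, compares a negative sizeCtl with a small positive number and stays false.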

The real resizing logic lives in the transfer method, which we examine later. One small detail is worth noting now: once nextTable has been initialized, helpTransfer returns the reference to nextTable, so the put loop can continue directly on the new bucket array.

Insert new value

Suppose the bucket array has been initialized, the target bucket is not being migrated, and the bucket located by the hash already contains elements. Then the process is the same as in an ordinary HashMap. The only difference is that the bucket must be locked first. See the code:

final V putVal(K key, V value, boolean onlyIfAbsent) {

if (key == null || value == null) throw new NullPointerException();

int hash = spread(key.hashCode());

int binCount = 0;

for (Node<K,V>[] tab = table;;) {

Node<K,V> f; int n, i, fh;

if (tab == null || (n = tab.length) == 0) { /* fold */ }

else if ((f = tabAt(tab, i = (n - 1) & hash)) == null) { /* fold */ }

else if ((fh = f.hash) == MOVED) { /* fold */ }

else {

V oldVal = null;

synchronized (f) {

// Pay attention to this humble judgment condition here

if (tabAt(tab, i) == f) {

if (fh >= 0) { // fh >= 0 means a linked list; otherwise the head is a TreeBin (tree) or a ForwardingNode

binCount = 1;

for (Node<K,V> e = f;; ++binCount) {

K ek;

if (e.hash == hash &&

((ek = e.key) == key ||

(ek != null && key.equals(ek)))) {

oldVal = e.val; // If there are values in the linked list, update them directly

if (!onlyIfAbsent)

e.val = value;

break;

}

Node<K,V> pred = e;

if ((e = e.next) == null) {

// If the process goes here, it means that there is no value in the linked list and it is directly connected to the tail of the linked list

pred.next = new Node<K,V>(hash, key, value, null);

break;

}

}

}

// The operation of red black tree is skipped first

}

}

}

}

// put succeeded, the number of map elements + 1

addCount(1L, binCount);

return null;

}

In this code, pay special attention to an inconspicuous condition (marked in the source above): tabAt(tab, i) == f. It deals with the race between a thread calling put and a resizing thread. Because synchronized is a blocking lock, the two threads may work on the same bucket at the same time, and the put thread may lose the race for the lock. By the time the put thread finally acquires the lock, the head of the bucket may already have been replaced by a ForwardingNode, so tabAt(tab, i) != f. In that case the put thread does nothing under the lock and retries in the next iteration of the outer for loop.
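The lock-then-recheck idiom is easy to get wrong, so here is a hypothetical, stripped-down version. HeadLockDemo is not JDK code, and it ignores hashing, trees and resizing. It locks the bucket's head node and, once inside the lock, verifies that the node is still the head. If it is not, it gives up and lets the outer loop retry, just as putVal does.

```java
import java.util.concurrent.atomic.AtomicReferenceArray;

// Hypothetical sketch of the "lock the head, then re-check it is still the head" idiom.
final class HeadLockDemo {
    static final class Node {
        final String key;
        volatile String val;
        volatile Node next;

        Node(String key, String val) {
            this.key = key;
            this.val = val;
        }
    }

    final AtomicReferenceArray<Node> tab;

    HeadLockDemo(int n) {
        tab = new AtomicReferenceArray<>(n);
    }

    // Returns false if the bucket changed under us (e.g. a resizer swapped in a forwarding node).
    boolean tryAppend(int i, String key, String val) {
        Node f = tab.get(i);
        if (f == null)
            return tab.compareAndSet(i, null, new Node(key, val)); // empty bucket: CAS, no lock
        synchronized (f) {
            if (tab.get(i) != f)
                return false;          // head replaced while we waited: the lock protects nothing
            for (Node e = f;; e = e.next) {
                if (e.key.equals(key)) { e.val = val; return true; }       // update existing key
                if (e.next == null) { e.next = new Node(key, val); return true; } // append at tail
            }
        }
    }

    void put(int i, String key, String val) {
        while (!tryAppend(i, key, val)) {
            // retry, as putVal's outer loop does
        }
    }
}
```

If a resizer had replaced the head between the read and the lock, tryAppend would return false and put would simply read the bucket again.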

Multi thread dynamic capacity expansion

private final void transfer(Node<K,V>[] tab, Node<K,V>[] nextTab) {

int n = tab.length, stride;

if ((stride = (NCPU > 1) ? (n >>> 3) / NCPU : n) < MIN_TRANSFER_STRIDE)

stride = MIN_TRANSFER_STRIDE; // subdivide range

if (nextTab == null) { // Initialize a new bucket array

try {

@SuppressWarnings("unchecked")

Node<K,V>[] nt = (Node<K,V>[])new Node<?,?>[n << 1];

nextTab = nt;

} catch (Throwable ex) { // try to cope with OOME

sizeCtl = Integer.MAX_VALUE;

return;

}

nextTable = nextTab;

transferIndex = n;

}

int nextn = nextTab.length;

ForwardingNode<K,V> fwd = new ForwardingNode<K,V>(nextTab);

boolean advance = true;

boolean finishing = false; // to ensure sweep before committing nextTab

for (int i = 0, bound = 0;;) {

Node<K,V> f; int fh;

while (advance) {

int nextIndex, nextBound;

if (--i >= bound || finishing)

advance = false;

else if ((nextIndex = transferIndex) <= 0) {

i = -1;

advance = false;

}

else if (U.compareAndSwapInt

(this, TRANSFERINDEX, nextIndex,

nextBound = (nextIndex > stride ?

nextIndex - stride : 0))) {

bound = nextBound;

i = nextIndex - 1;

advance = false;

}

}

if (i < 0 || i >= n || i + n >= nextn) {

int sc;

if (finishing) {

nextTable = null;

table = nextTab;

sizeCtl = (n << 1) - (n >>> 1);

return;

}

if (U.compareAndSwapInt(this, SIZECTL, sc = sizeCtl, sc - 1)) {

// Determine whether it will be the last capacity expansion thread

if ((sc - 2) != resizeStamp(n) << RESIZE_STAMP_SHIFT)

return;

finishing = advance = true;

i = n; // recheck before commit

}

}

else if ((f = tabAt(tab, i)) == null)

advance = casTabAt(tab, i, null, fwd);

else if ((fh = f.hash) == MOVED) // Only the last resizing thread has a chance to execute this branch

advance = true; // already processed

else { // The copying process is similar to that of HashMap and will not be repeated here

synchronized (f) {

// fold

}

}

}

}

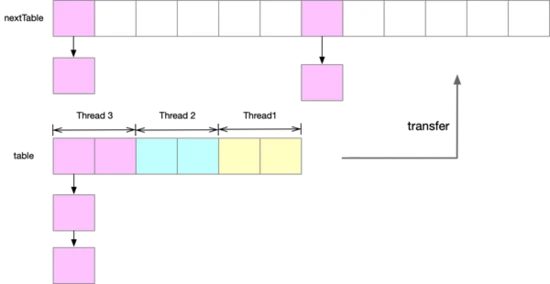

Before diving into the details of the source, let's use the figure above to look at several features of resizing in the Java 8 ConcurrentHashMap:

- The new bucket array nextTable is twice as long as the original one, as in HashMap.

- The threads taking part in the resize copy the elements of table into the new bucket array nextTable segment by segment.

- The elements of one bucket in the old array are split between two buckets of the new array, whose indices differ by n (the length of the old array), again as in HashMap.
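The split rule in the last point follows from the table length being a power of two. In this minimal sketch (SplitDemo is a hypothetical name), a key at index hash & (n - 1) in the old table moves either to the same index or to that index plus n. Which one depends only on the bit hash & n. The JDK's transfer uses the same bit test to divide a bucket into a low list and a high list.

```java
final class SplitDemo {
    // In a table of length n (a power of two) a key sits at hash & (n - 1).
    // After doubling to 2n it lands at the same index i or at i + n,
    // depending only on the bit (hash & n).
    static int newIndex(int hash, int n) {
        int i = hash & (n - 1);
        return ((hash & n) == 0) ? i : i + n;
    }
}
```

For example, with n = 4 the hashes 1 and 5 share old bucket 1. After doubling, 1 stays in bucket 1 and 5 moves to bucket 5.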

How do threads work together

First look at a key variable, transferIndex. It is declared volatile, which guarantees that every thread reads its latest value.

private transient volatile int transferIndex;

This value is initialized by the first thread that takes part in the resize, because only that thread satisfies the condition nextTab == null:

if (nextTab == null) { // initiating

try {

@SuppressWarnings("unchecked")

Node<K,V>[] nt = (Node<K,V>[])new Node<?,?>[n << 1];

nextTab = nt;

} catch (Throwable ex) { // try to cope with OOME

sizeCtl = Integer.MAX_VALUE;

return;

}

nextTable = nextTab;

transferIndex = n;

}

Once transferIndex is understood, the following loop is easy to follow:

while (advance) {

int nextIndex, nextBound;

// While i is still >= bound, decrement it, leave this loop and keep working on the current range

if (--i >= bound || finishing)

advance = false;

// All tasks for capacity expansion have been claimed, and this thread ends working

else if ((nextIndex = transferIndex) <= 0) {

i = -1;

advance = false;

}

// Otherwise, claim a new copy task and update the value of transferIndex through 'CAS'

else if (U.compareAndSwapInt

(this, TRANSFERINDEX, nextIndex,

nextBound = (nextIndex > stride ?

nextIndex - stride : 0))) {

bound = nextBound;

i = nextIndex - 1;

advance = false;

}

}

transferIndex acts as a cursor. Each time a thread claims a copy task, it moves transferIndex down to transferIndex - stride via CAS. CAS guarantees that transferIndex decreases to 0 in steps of stride, so no range is claimed twice.
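Here is a self-contained sketch of this claiming scheme. It assumes an AtomicInteger in place of the volatile transferIndex field and a per-bucket counter in place of the real copy (StrideClaimDemo is hypothetical). Each thread CASes the cursor from nextIndex down to nextBound and then walks its range from high to low, as transfer does. The returned array lets you check that every bucket was processed exactly once.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicIntegerArray;

// Hypothetical sketch: threads claim [nextBound, nextIndex) ranges from a shared cursor with CAS.
final class StrideClaimDemo {
    static int[] copyCounts(int n, int stride, int threads) {
        AtomicInteger transferIndex = new AtomicInteger(n);    // cursor starts at the old length
        AtomicIntegerArray copied = new AtomicIntegerArray(n); // times each bucket was "copied"
        Runnable worker = () -> {
            for (;;) {
                int nextIndex = transferIndex.get();
                if (nextIndex <= 0)
                    return;                                    // every range has been claimed
                int nextBound = nextIndex > stride ? nextIndex - stride : 0;
                if (!transferIndex.compareAndSet(nextIndex, nextBound))
                    continue;                                  // lost the race: read the cursor again
                for (int i = nextIndex - 1; i >= nextBound; i--)
                    copied.incrementAndGet(i);                 // stand-in for moving bucket i
            }
        };
        Thread[] ts = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            ts[t] = new Thread(worker);
            ts[t].start();
        }
        for (Thread th : ts) {
            try {
                th.join();
            } catch (InterruptedException e) {
                throw new IllegalStateException(e);
            }
        }
        int[] result = new int[n];
        for (int i = 0; i < n; i++)
            result[i] = copied.get(i);
        return result;
    }
}
```

Running copyCounts(1000, 16, 4) should yield 1 for every one of the 1000 buckets, however the four threads happen to interleave.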

Does the last resizing thread need a second check?

For each resizing thread, the for-loop variable i is the index of the bucket to be copied. Its upper and lower bounds come from the cursor transferIndex and the step stride. When i drops below 0, the current thread has finished its share of the resize and execution reaches this branch:

// i >= n || i + n >= nextn: these two conditions seem to be unreachable now

if (i < 0 || i >= n || i + n >= nextn) {

int sc;

if (finishing) { // [A] Complete the whole expansion process

nextTable = null;

table = nextTab;

sizeCtl = (n << 1) - (n >>> 1);

return;

}

// [B] Judge whether it is the last capacity expansion thread. If so, you need to scan the bucket array again and confirm it again

if (U.compareAndSwapInt(this, SIZECTL, sc = sizeCtl, sc - 1)) {

// (sc - 2) == resizeStamp(n) << RESIZE_STAMP_SHIFT means this is the last resizing thread

if ((sc - 2) != resizeStamp(n) << RESIZE_STAMP_SHIFT)

return;

// Scan the bucket array again and make a secondary confirmation

finishing = advance = true;

i = n; // recheck before commit

}

}

Because finishing is initialized to false, a thread entering this if branch for the first time executes the branch marked [B]. The low 16 bits of sizeCtl hold the number of resizing threads plus one: the first resizing thread sets sizeCtl to (rs << RESIZE_STAMP_SHIFT) + 2, and each finishing thread subtracts one. So when (sc - 2) == resizeStamp(n) << RESIZE_STAMP_SHIFT holds, the current thread is provably the last resizing thread. It then sets i to n to scan the bucket array again. It also sets finishing to true, so that once the scan is done it enters the branch marked [A] and completes the resize.

This raises a question. By the analysis above, the resizing threads cooperate so that the segments they handle neither overlap nor leave gaps. Why, then, is a second check needed here? A developer asked Doug Lea about this (http://cs.oswego.edu/pipermail/concurrency-interest/2020-July/017171.html), and his reply was:

Yes, this is a valid point; thanks. The post-scan was needed in a previous version, and could be removed. It does not trigger often enough to matter though, so is for now another minor tweak that might be included next time CHM is updated.

Although Doug hedges with phrases such as "could be" and "not often enough", he confirms that the second check by the last resizing thread is unnecessary. The actual copying is similar to HashMap. Interested readers can turn to the article "High-end interview: never get too entangled in the red-black tree of HashMap".

size() method

addCount() method

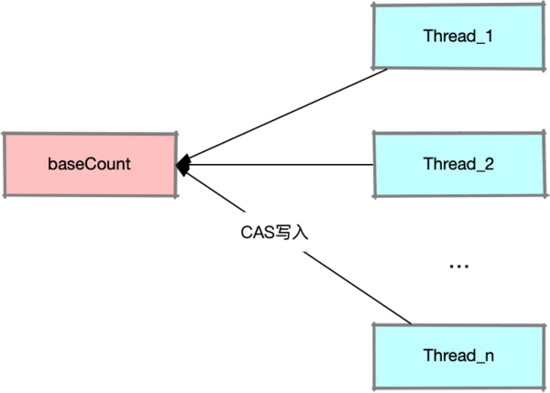

// Member variable that records the total number of map elements

private transient volatile long baseCount;

At the end of the put method there is a call to addCount. By then putVal has successfully added an element, so addCount maintains the total number of elements in the ConcurrentHashMap. That total is recorded in the variable baseCount. As shown in the figure, if n threads update baseCount via CAS at the same time, exactly one of them succeeds and the others keep spinning and retrying. This is bound to become a performance bottleneck.

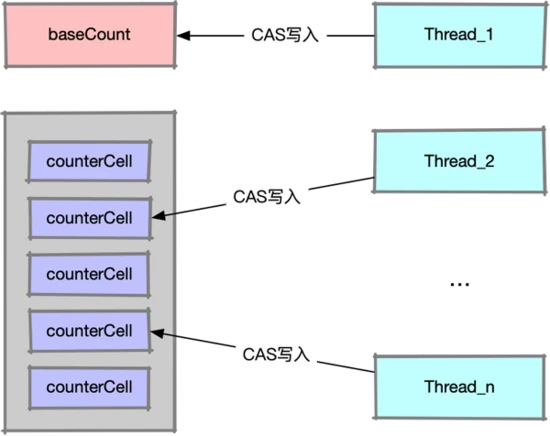

To keep large numbers of threads from spinning while waiting to write baseCount, ConcurrentHashMap introduces an auxiliary queue, as shown in the figure. Threads updating the count can now be spread across this auxiliary queue. size() only needs to add up baseCount and the values in the auxiliary queue, so it can be called without locking.
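The JDK ships the same idea as a standalone class. java.util.concurrent.atomic.LongAdder keeps a base value plus a lazily created array of cells, and fullAddCount's own comment points to it ("See LongAdder version for explanation"). Here is a short usage sketch (LongAdderDemo is a hypothetical name):

```java
import java.util.concurrent.atomic.LongAdder;

final class LongAdderDemo {
    static long countConcurrently(int threads, int perThread) {
        LongAdder adder = new LongAdder();     // base value + cells, grown under contention
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int k = 0; k < perThread; k++)
                    adder.increment();         // contended updates are spread over cells
            });
            workers[t].start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                throw new IllegalStateException(e);
            }
        }
        return adder.sum();                    // base plus every cell, like sumCount()
    }
}
```

countConcurrently(4, 10000) returns 40000. Like size(), sum() simply adds the base and the cells without taking a lock.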

The auxiliary queue is an array of type CounterCell:

@sun.misc.Contended static final class CounterCell {

volatile long value;

CounterCell(long x) { value = x; }

}

It can be thought of as a simple wrapper around a long value. The @sun.misc.Contended annotation pads each cell so that neighbouring cells do not share a cache line (false sharing). The remaining question is how a given thread knows which slot of the auxiliary queue to operate on. The answer is the following:

static final int getProbe() {

return UNSAFE.getInt(Thread.currentThread(), PROBE);

}

The getProbe method returns the current thread's probe, a per-thread hash value stored in the Thread object. It does not change unless it is explicitly advanced (see advanceProbe below). ANDing it with the length of the auxiliary queue minus one therefore gives the index of the slot this thread should use. The probe may be 0, which means it has not been initialized yet. In that case ThreadLocalRandom.localInit() must be called first. There are two details to note in the addCount method:

private final void addCount(long x, int check) {

CounterCell[] as; long b, s;

// Note how this condition is written

if ((as = counterCells) != null ||

!U.compareAndSwapLong(this, BASECOUNT, b = baseCount, s = b + x)) {

CounterCell a; long v; int m;

boolean uncontended = true;

if (as == null || (m = as.length - 1) < 0 ||

(a = as[ThreadLocalRandom.getProbe() & m]) == null ||

// The variable uncontended records whether the CAS operation is successful

!(uncontended =

U.compareAndSwapLong(a, CELLVALUE, v = a.value, v + x))) {

fullAddCount(x, uncontended);

return;

}

if (check <= 1)

return;

s = sumCount();

}

if (check >= 0) {

// Check whether the capacity needs to be expanded, and see in detail later

}

}

Detail 1:

First, look at the if condition at the very start of the method:

if ((as = counterCells) != null ||

!U.compareAndSwapLong(this, BASECOUNT, b = baseCount, s = b + x)) {

}

The author uses short-circuit evaluation here: if (as = counterCells) != null, the CAS that follows is not executed at all. Why? The author has two considerations:

- If (as = counterCells) != null, the auxiliary queue has already been initialized. Compared with having every thread compete for baseCount, letting threads CAS the values in the queue is more likely to succeed. The queue, whose length is always a power of two, can grow until it is at least as long as the number of processors, so it supports much higher concurrency.

- If the auxiliary queue has not been initialized, it is created only when necessary. How is "necessary" decided? By whether the CAS on baseCount succeeds. If it fails, concurrency is currently high and the queue is needed; otherwise baseCount is updated directly.
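Both considerations can be seen in a deliberately simplified counter. StripedCounter is hypothetical and omits most of fullAddCount: there is no cell expansion, no per-thread probe, and no fallback to baseCount while the lock is held. Once the cells exist, it goes straight to them. Otherwise it tries baseCount first and creates the cells only after that CAS fails. The probe accessors in ThreadLocalRandom are not public, so the sketch picks a random cell on every call instead.

```java
import java.util.concurrent.ThreadLocalRandom;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicLong;
import java.util.concurrent.atomic.AtomicLongArray;

// Hypothetical, simplified counter following the addCount idea (not JDK code).
final class StripedCounter {
    private final AtomicLong baseCount = new AtomicLong();
    private final AtomicInteger cellsBusy = new AtomicInteger(); // 0 = free, 1 = locked
    private volatile AtomicLongArray cells;                      // created lazily, never resized here

    void add(long x) {
        AtomicLongArray cs = cells;
        if (cs == null) {
            // Consideration 2: no cells yet, so try the cheap path on baseCount first.
            long b = baseCount.get();
            if (baseCount.compareAndSet(b, b + x))
                return;
            // The CAS failed, which signals contention: now it is worth creating the cells.
            cs = initCells();
        }
        // Consideration 1: once cells exist, go straight to them and skip baseCount.
        // Random slot per call; the JDK instead keeps a per-thread probe.
        int idx = ThreadLocalRandom.current().nextInt(cs.length());
        cs.addAndGet(idx, x);
    }

    private AtomicLongArray initCells() {
        AtomicLongArray cs;
        while ((cs = cells) == null) {
            // Cheap read first, CAS only if the lock looks free (as with cellsBusy).
            if (cellsBusy.get() == 0 && cellsBusy.compareAndSet(0, 1)) {
                try {
                    if (cells == null)
                        cells = new AtomicLongArray(Math.max(2, Runtime.getRuntime().availableProcessors()));
                } finally {
                    cellsBusy.set(0);
                }
            } else {
                Thread.yield();
            }
        }
        return cs;
    }

    // Like sumCount(): base plus every cell, read without locking.
    long sum() {
        long s = baseCount.get();
        AtomicLongArray cs = cells;
        if (cs != null)
            for (int i = 0; i < cs.length(); i++)
                s += cs.get(i);
        return s;
    }
}
```

Four threads that each add 1 ten thousand times leave sum() at 40000, no matter how the updates were split between baseCount and the cells.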

Detail 2:

The CAS on the auxiliary queue is attempted only if the queue already exists and the slot selected by ThreadLocalRandom.getProbe() is not null. These are ordinary defensive checks. The variable uncontended records whether that CAS succeeded. If it failed, fullAddCount calls ThreadLocalRandom.advanceProbe to give the thread a new probe. This moves the thread to a different slot of the auxiliary queue, so that threads do not all wait on the same slot.

fullAddCount() method

// See LongAdder version for explanation

// wasUncontended records whether the caller CAS succeeds. If it fails, change an element of the auxiliary queue to continue CAS

private final void fullAddCount(long x, boolean wasUncontended) {

int h;

if ((h = ThreadLocalRandom.getProbe()) == 0) {

ThreadLocalRandom.localInit(); // force initialization

h = ThreadLocalRandom.getProbe();

wasUncontended = true;

}

boolean collide = false; // True if last slot nonempty

for (;;) {

CounterCell[] as; CounterCell a; int n; long v;

// [A] If the auxiliary queue has been created, operate the auxiliary queue directly

if ((as = counterCells) != null && (n = as.length) > 0) {

if ((a = as[(n - 1) & h]) == null) {

if (cellsBusy == 0) { // Try to attach new Cell

CounterCell r = new CounterCell(x); // Optimistic create

if (cellsBusy == 0 &&

U.compareAndSwapInt(this, CELLSBUSY, 0, 1)) {

boolean created = false;

try { // Recheck under lock

CounterCell[] rs; int m, j;

if ((rs = counterCells) != null &&

(m = rs.length) > 0 &&

rs[j = (m - 1) & h] == null) {

rs[j] = r;

created = true;

}

} finally {

cellsBusy = 0;

}

if (created)

break;

continue; // Slot is now non-empty

}

}

collide = false;

}

else if (!wasUncontended) // The caller's CAS failed: skip this round, change the index (probe) and retry

wasUncontended = true; // Continue after rehash

else if (U.compareAndSwapLong(a, CELLVALUE, v = a.value, v + x))

break;

else if (counterCells != as || n >= NCPU)

// The auxiliary queue is already at least as long as the number of CPUs (or has been replaced): do not expand, change the index and retry

collide = false; // At max size or stale

else if (!collide) // First failure (failed CAS or failed cell creation): set collide, change the index and retry

collide = true;

else if (cellsBusy == 0 && // If two consecutive operations on the auxiliary queue fail, consider capacity expansion

U.compareAndSwapInt(this, CELLSBUSY, 0, 1)) {

try {

if (counterCells == as) {// Expand table unless stale

CounterCell[] rs = new CounterCell[n << 1];

for (int i = 0; i < n; ++i)

rs[i] = as[i];

counterCells = rs;

}

} finally {

cellsBusy = 0;

}

collide = false;

continue; // Retry with expanded table

}

// If the last operation fails or the caller CAS fails, it will go here and change the subscript of the auxiliary queue to be operated

h = ThreadLocalRandom.advanceProbe(h);

}

// [B] If the auxiliary queue has not been created yet, take the lock and create it

else if (cellsBusy == 0 && counterCells == as &&

U.compareAndSwapInt(this, CELLSBUSY, 0, 1)) {

boolean init = false;

try { // Initialize table

if (counterCells == as) {

CounterCell[] rs = new CounterCell[2];

rs[h & 1] = new CounterCell(x);

counterCells = rs;

init = true;

}

} finally {

cellsBusy = 0;

}

if (init)

break;

}

// [C] If creating the auxiliary queue fails (the lock could not be taken), try updating baseCount directly

else if (U.compareAndSwapLong(this, BASECOUNT, v = baseCount, v + x))

break; // Fall back on using base

}

}

Because counterCells is an ordinary array, every write to it (initialization, expansion and writing an element) must be done under a lock. The lock is a spin lock on the global variable cellsBusy. First look at the three outermost branches:

- [B] If the auxiliary queue has not been created, take the lock and create it

- [C] If creating the auxiliary queue fails because the lock could not be obtained, try to write the variable baseCount instead, and return if that succeeds

- [A] If the auxiliary queue has already been created, operate on the auxiliary queue directly

The branch marked [A] contains a lot of code. The main idea is as follows. If an operation on an element of the auxiliary queue fails (a failed CAS or a failed lock attempt), the thread first calls ThreadLocalRandom.advanceProbe(h) to switch to another element and retries. Whether the previous attempt failed is recorded in the temporary variable collide. If the next attempt fails as well, concurrency is relatively high and the queue should be expanded. If the queue is already at least as long as the number of CPUs, it is not expanded, and the thread keeps switching elements instead. The reason is that the number of threads that can run simultaneously cannot exceed the number of CPUs in the machine.

In this process, there are four details that still need attention:

Detail 1:

counterCells is an ordinary array and is not thread-safe, so writes to it must be done under a lock to stay safe under concurrency.

Detail 2:

When locking, the author checks cellsBusy == 0 twice: once before creating the cell and once right before the CAS. Some articles describe this as "double-checked locking, as in the singleton pattern", which is wrong. The first check, cellsBusy == 0, decides whether to proceed at all. If cellsBusy == 1, the locking attempt is abandoned immediately, h is updated with h = ThreadLocalRandom.advanceProbe(h), and the loop tries again. Only when the first check passes is CounterCell r = new CounterCell(x) created, outside the lock. This keeps the spin lock's critical section small and improves concurrency.

Detail 3:

When locking, the code first checks whether cellsBusy is 0 and gives up immediately if it is 1. Why? A plain read of a volatile field is cheaper than a CAS through UNSAFE. If the read alone shows that the lock cannot be taken, there is no need for the more expensive CAS.

Detail 4:

cellsBusy is changed from 0 to 1 with CAS, but from 1 back to 0 with a plain assignment. The CAS guarantees that only one thread can reach the release statement, so a plain (volatile) write is enough to reset cellsBusy.
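Details 3 and 4 together describe a try-lock in the test-and-test-and-set style. Here is a hypothetical helper (BusyFlag) that mirrors how cellsBusy is used. An AtomicInteger stands in for the volatile int plus Unsafe CAS.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical helper mirroring the use of cellsBusy (not JDK code).
final class BusyFlag {
    private final AtomicInteger busy = new AtomicInteger(0);

    // Detail 3: a cheap volatile read first; the CAS runs only if the flag looks free.
    boolean tryLock() {
        return busy.get() == 0 && busy.compareAndSet(0, 1);
    }

    // Detail 4: only the CAS winner holds the flag, so a plain (volatile) set releases it.
    void unlock() {
        busy.set(0);
    }
}
```

While the flag is 1, a second tryLock() fails fast on the cheap read. In fullAddCount, that kind of failure simply sends the thread to advanceProbe and another attempt.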

sumCount

The first two methods are to record the number of elements in ConcurrentHashMap to baseCount and auxiliary queues. When you call size(), you only need to add these values together.

public int size() {

long n = sumCount();

return ((n < 0L) ? 0 :

(n > (long)Integer.MAX_VALUE) ? Integer.MAX_VALUE :

(int)n);

}

final long sumCount() {

CounterCell[] as = counterCells; CounterCell a;

long sum = baseCount;

if (as != null) {

for (int i = 0; i < as.length; ++i) {

if ((a = as[i]) != null)

sum += a.value;

}

}

return sum;

}
