The article is too long, and the contents are as follows:

Docker architecture

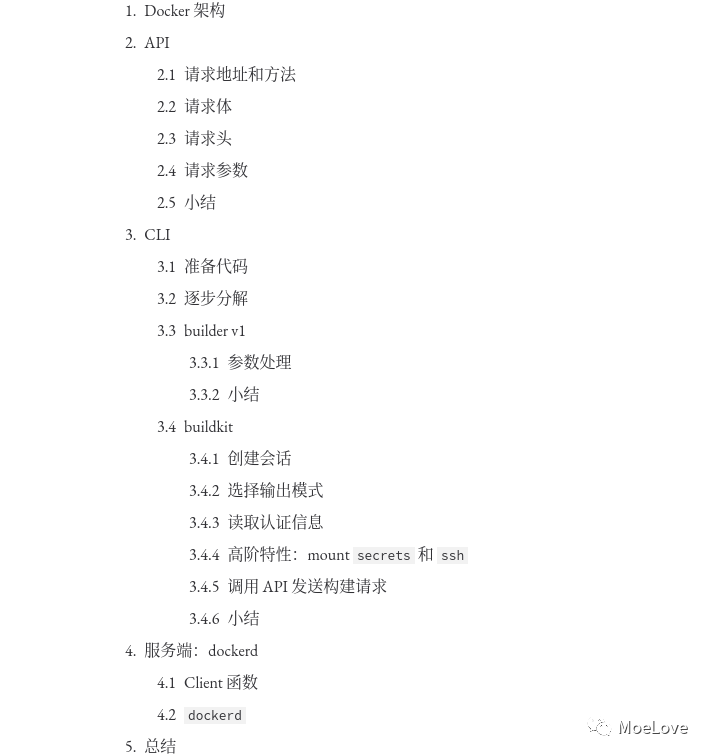

Let us start with a high-level view of Docker. Overall, it uses a client/server (C/S) architecture: the "docker" command we normally use is the CLI client, and the server is "dockerd". On Linux we usually manage it with "systemd", so the service can be started with "systemctl start docker". (Note, however, that whether "dockerd" can run has nothing to do with "systemd": you can start the service directly by running "dockerd", just as you would run any ordinary binary. It does require root privileges.)

In fact

Docker architecture (source: Docker overview)

The Docker CLI and "dockerd" interact through a REST API. If we run "docker version" and filter its output for API, we see the following:

➜ ~ docker version |grep API

API version: 1.41

API version: 1.41 (minimum version 1.12)

The first line is the API version of the Docker CLI, and the second is the API version of "dockerd". The parenthesized note after it reflects Docker's backward compatibility: the minimum API version this daemon supports is 1.12.

When developing a project with a C/S architecture, the API usually comes first, so let's start with the API.

Since this article is about the build system, we will look only at the build-related APIs.

After that we will cover the CLI, with code based on v20.10.5, and finally the server, "dockerd".

API

After each release, the Docker maintainers publish the API documentation. You can browse it online through the Docker Engine API reference or build the documentation yourself.

First, clone the Docker source repository and run "make swagger-docs" in it. This starts a container and exposes its port on local port 9000, so you can open http://127.0.0.1:9000 to read the API documentation locally.

(MoeLove) ➜ git clone https://github.com/docker/docker.git docker

(MoeLove) ➜ cd docker

(MoeLove) ➜ docker git:(master) git checkout -b v20.10.5 v20.10.5

(MoeLove) ➜ docker git:(v20.10.5) make swagger-docs

API docs preview will be running at http://localhost:9000

Open http://127.0.0.1:9000/#operation/ImageBuild to see the API used to build images in version 1.41. Let's analyze this API.

Request address and method

The endpoint is /v1.41/build and the method is "POST". We can verify it with a reasonably recent version of "curl" (we need "--unix-socket" to connect to the UNIX domain socket that Docker listens on). By default, "dockerd" listens on /var/run/docker.sock. You can also pass the "--host" parameter to "dockerd" to make it listen on an HTTP port or on a UNIX socket at another path.

/ # curl -X POST --unix-socket /var/run/docker.sock localhost/v1.41/build

{"message":"Cannot locate specified Dockerfile: Dockerfile"}

The output shows that we did reach the endpoint; its response says that a Dockerfile is required.
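
The same request can be made from Go. The following is a minimal sketch, assuming the default socket path /var/run/docker.sock and API version 1.41 from the text; the helper names "newUnixClient" and "postBuild" are hypothetical and are not part of the Docker code base.

```go
package main

import (
	"context"
	"fmt"
	"io"
	"net"
	"net/http"
	"time"
)

// newUnixClient returns an HTTP client whose connections always go to the
// given UNIX domain socket, whatever host appears in the request URL.
func newUnixClient(socketPath string) *http.Client {
	return &http.Client{
		Timeout: 5 * time.Second,
		Transport: &http.Transport{
			DialContext: func(ctx context.Context, _, _ string) (net.Conn, error) {
				var d net.Dialer
				return d.DialContext(ctx, "unix", socketPath)
			},
		},
	}
}

// postBuild sends an empty POST to /v<apiVersion>/build and returns the
// status code and the raw response body.
func postBuild(client *http.Client, apiVersion string) (int, string, error) {
	resp, err := client.Post("http://localhost/v"+apiVersion+"/build", "application/x-tar", nil)
	if err != nil {
		return 0, "", err
	}
	defer resp.Body.Close()
	body, err := io.ReadAll(resp.Body)
	if err != nil {
		return resp.StatusCode, "", err
	}
	return resp.StatusCode, string(body), nil
}

func main() {
	// Assumed default socket path; adjust if dockerd was started with --host.
	client := newUnixClient("/var/run/docker.sock")
	status, body, err := postBuild(client, "1.41")
	if err != nil {
		fmt.Println("request failed (is dockerd running, and do you have permission?):", err)
		return
	}
	fmt.Println(status, body)
}
```

The host name in the URL is only a placeholder, because the custom dialer ignores it and always connects to the socket.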

Request body

"A tar archive compressed with one of the following algorithms: identity (no compression), gzip, bzip2, xz." string

The request body is a "tar" archive. It can be uncompressed or compressed with gzip, bzip2, or xz. We won't go into these formats here, but note the trade-off: compression reduces the amount of data transferred and therefore the network cost, while compressing and decompressing consume CPU and other compute resources. This matters when optimizing the build of large images.

Request header

Because the body being sent is a "tar" archive, "Content-Type" defaults to "application/x-tar". Another header that is sent is "X-Registry-Config", which carries the Docker Registry configuration encoded in Base64. Its content matches the "auths" section of $HOME/.docker/config.json.

This configuration is written to $HOME/.docker/config.json automatically when you run "docker login". It is sent to "dockerd" and used as the credentials for pulling images during the build.
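
To illustrate how such a header value can be produced, here is a small sketch that serializes a map of registry credentials to JSON and encodes it with URL-safe Base64. The "authConfig" type, the registry name, and the credentials are hypothetical stand-ins; the real types contain more fields.

```go
package main

import (
	"encoding/base64"
	"encoding/json"
	"fmt"
)

// authConfig is a trimmed-down, hypothetical stand-in for one registry entry.
type authConfig struct {
	Username string `json:"username,omitempty"`
	Password string `json:"password,omitempty"`
}

// encodeRegistryConfig turns the credentials map into a header value:
// JSON first, then URL-safe Base64.
func encodeRegistryConfig(auths map[string]authConfig) (string, error) {
	buf, err := json.Marshal(auths)
	if err != nil {
		return "", err
	}
	return base64.URLEncoding.EncodeToString(buf), nil
}

// decodeRegistryConfig reverses encodeRegistryConfig, which is roughly what
// a server would have to do with the header.
func decodeRegistryConfig(header string) (map[string]authConfig, error) {
	raw, err := base64.URLEncoding.DecodeString(header)
	if err != nil {
		return nil, err
	}
	var auths map[string]authConfig
	if err := json.Unmarshal(raw, &auths); err != nil {
		return nil, err
	}
	return auths, nil
}

func main() {
	header, err := encodeRegistryConfig(map[string]authConfig{
		"registry.example.com": {Username: "alice", Password: "s3cret"},
	})
	if err != nil {
		panic(err)
	}
	fmt.Println("X-Registry-Config:", header)
}
```

Base64 is an encoding, not encryption, so anyone who can read the header can recover the credentials.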

Request parameters

The last part is the request parameters. There are many of them, and most map directly to options shown by "docker build --help". We won't go through them one by one here; some key parameters are introduced later.

Summary

Above, we introduced the API that "Docker" uses to build images. The API documentation can be read online in the Docker Engine API reference, or you can build a local documentation service from the source repository and open it in a browser.

From the API we also learned that the request body is a "tar" archive (optionally compressed with one of several algorithms) and that the request header carries the user's credentials for the image registry. This is a reminder that when building against a remote "dockerd", you should pay attention to security and use "TLS" encryption where possible to avoid leaking data.

CLI

With the API covered, let's look at the "docker" CLI. As I described in a previous article, Docker has two build systems: the v1 "builder" and the v2 "BuildKit". Let's go into the source code to see how each behaves when building an image.

Prepare code

The CLI code repository is at https://github.com/docker/cli. The code in this article is based on v20.10.5.

Use this version of the code by following these steps:

(MoeLove) ➜ git clone https://github.com/docker/cli.git

(MoeLove) ➜ cd cli

(MoeLove) ➜ cli git:(master) git checkout -b v20.10.5 v20.10.5

Stepwise decomposition

"docker" is the client tool we use to interact with "dockerd". For building, the familiar commands are "docker build" and "docker image build"; version 19.03 added "docker builder build". They are in fact the same command, connected through aliases:

// cmd/docker/docker.go#L237

if v, ok := aliasMap["builder"]; ok {

aliases = append(aliases,

[2][]string{{"build"}, {v, "build"}},

[2][]string{{"image", "build"}, {v, "build"}},

)

}

The real entry function is in cli/command/image/build.go. The logic that decides which builder to call is as follows:

func runBuild(dockerCli command.Cli, options buildOptions) error {

buildkitEnabled, err := command.BuildKitEnabled(dockerCli.ServerInfo())

if err != nil {

return err

}

if buildkitEnabled {

return runBuildBuildKit(dockerCli, options)

}

//The actual logic for the builder is omitted

}

The following function determines whether "buildkit" is enabled:

// cli/command/cli.go#L176

func BuildKitEnabled(si ServerInfo) (bool, error) {

buildkitEnabled := si.BuildkitVersion == types.BuilderBuildKit

if buildkitEnv := os.Getenv("DOCKER_BUILDKIT"); buildkitEnv != "" {

var err error

buildkitEnabled, err = strconv.ParseBool(buildkitEnv)

if err != nil {

return false, errors.Wrap(err, "DOCKER_BUILDKIT environment variable expects boolean value")

}

}

return buildkitEnabled, nil

}

Two things can be learned from this:

- BuildKit can be enabled through the "dockerd" configuration. Add the following to /etc/docker/daemon.json and restart "dockerd":

{

"features": {

"buildkit": true

}

}

- BuildKit support can also be enabled on the "docker" CLI side, and the CLI setting overrides the server configuration. Run "export DOCKER_BUILDKIT=1" to turn BuildKit on, or set the variable to 0 to turn it off (0, false, f, F, and so on all give the same result).
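
The override rule can be tried in isolation. The sketch below mirrors the decision in "BuildKitEnabled" with the server default and the environment value passed in as plain arguments; the function name "buildKitEnabled" and its signature are hypothetical simplifications.

```go
package main

import (
	"fmt"
	"strconv"
)

// buildKitEnabled returns the server default unless envValue (the content of
// DOCKER_BUILDKIT) is non-empty, in which case the parsed value wins.
func buildKitEnabled(serverDefault bool, envValue string) (bool, error) {
	if envValue == "" {
		return serverDefault, nil
	}
	enabled, err := strconv.ParseBool(envValue)
	if err != nil {
		return false, fmt.Errorf("DOCKER_BUILDKIT environment variable expects boolean value: %w", err)
	}
	return enabled, nil
}

func main() {
	// The server default is true here, so only explicit "off" values change it.
	for _, v := range []string{"", "1", "0", "false", "F", "yes"} {
		enabled, err := buildKitEnabled(true, v)
		fmt.Printf("DOCKER_BUILDKIT=%q -> enabled=%v err=%v\n", v, enabled, err)
	}
}
```

Values that "strconv.ParseBool" does not recognize, such as "yes", produce an error rather than silently falling back to the server default.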

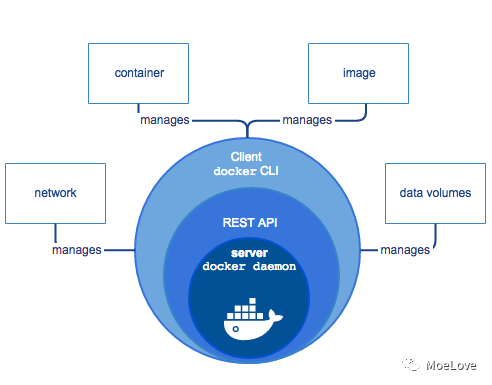

From the above we can see that the entry point for the original default builder is "runBuild", while for BuildKit it is "runBuildBuildKit". Next, we will walk through both step by step.

builder v1

The runBuild function roughly goes through the following stages:

Parameter processing

The first part is the processing and verification of parameters.

- "stream" and "compress" cannot be used at the same time.

If "compress" is specified, the CLI compresses the build context with "gzip", so the build context cannot be handled properly in "stream" mode.

You might argue that, technically, compression and streaming do not necessarily conflict and could be made to work together. That is true: supporting both would only add a decompression step. But with "stream" mode enabled, compressing and decompressing every file would waste a lot of resources and add complexity, so the CLI simply forbids using "compress" and "stream" together.

- "stdin" cannot be used to read both the "Dockerfile" and the "build context".

During a build, passing - as the name of the "Dockerfile" means that its content is read from "stdin".

For example, suppose a directory contains three files: "foo", "bar", and "Dockerfile". We pipe the content of the "Dockerfile" to "docker build" through "stdin":

(MoeLove) ➜ x ls

bar Dockerfile foo

(MoeLove) ➜ x cat Dockerfile | DOCKER_BUILDKIT=0 docker build -f - .

Sending build context to Docker daemon 15.41kB

Step 1/3 : FROM scratch

--->

Step 2/3 : COPY foo foo

---> a2af45d66bb5

Step 3/3 : COPY bar bar

---> cc803c675dd2

Successfully built cc803c675dd2

This shows that the image can be built successfully when the "Dockerfile" is passed through "stdin". Next, we try passing the build context through "stdin".

(MoeLove) ➜ x tar -cvf x.tar foo bar Dockerfile

foo

bar

Dockerfile

(MoeLove) ➜ x cat x.tar| DOCKER_BUILDKIT=0 docker build -f Dockerfile -

Sending build context to Docker daemon 10.24kB

Step 1/3 : FROM scratch

--->

Step 2/3 : COPY foo foo

---> 09319712e220

Step 3/3 : COPY bar bar

---> ce88644a7395

Successfully built ce88644a7395

The image can also be built successfully when the "build context" is passed through "stdin".

But what happens when both the "Dockerfile" and the "build context" are specified as -?

(MoeLove) ➜ x DOCKER_BUILDKIT=0 docker build -f - -

invalid argument: can't use stdin for both build context and dockerfile

An error is reported. So "stdin" cannot be used to read both the "Dockerfile" and the "build context".

- The build context can be supplied in four ways.

switch {

case options.contextFromStdin():

//Omit

case isLocalDir(specifiedContext):

//Omit

case urlutil.IsGitURL(specifiedContext):

//Omit

case urlutil.IsURL(specifiedContext):

//Omit

default:

return errors.Errorf("unable to prepare context: path %q not found", specifiedContext)

}

The first is input from "stdin", as demonstrated above; what is passed to "stdin" is a "tar" archive. The second is a specific path, which is the usual "docker build ." usage.

The third is the address of a "git" repository: the CLI calls the "git" command to clone the repository into a temporary directory and uses that.

The last is a URL, which can point to a specific "Dockerfile" or to a downloadable "tar" archive.

These differ mostly as their names suggest. In terms of CLI behavior, the notable case is the last one: when the URL points to a specific "Dockerfile", the "build context" consists of only the "Dockerfile" itself, so instructions such as "COPY" cannot be used, and "ADD" can only be used with externally reachable addresses.

- ".dockerignore" can be used to ignore unnecessary files.

I have covered this in previous articles. Here we look at how it is implemented.

// cli/command/image/build/dockerignore.go#L13

func ReadDockerignore(contextDir string) ([]string, error) {

var excludes []string

f, err := os.Open(filepath.Join(contextDir, ".dockerignore"))

switch {

case os.IsNotExist(err):

return excludes, nil

case err != nil:

return nil, err

}

defer f.Close()

return dockerignore.ReadAll(f)

}

- ".dockerignore" is a fixed file name and must be placed in the root directory of the "build context". As mentioned earlier, when the URL of a "Dockerfile" is used as the "build context", ".dockerignore" cannot be used.

- The ".dockerignore" file does not have to exist, but if an error occurs while reading it, the error is returned.

- ".dockerignore" filters out content that you do not want in the image or that is unrelated to the image.

Finally, the CLI filters the content of the "build context" through ".dockerignore" and packs the result into the actual "build context". That is why you sometimes write "COPY xx xx" in a "Dockerfile" and the file cannot be found: it was probably excluded by ".dockerignore". This filtering also reduces the amount of data transferred between the CLI and "dockerd".

- The Docker CLI also reads ~/.docker/config.json.

This matches what was described in the API section: the credentials are passed to "dockerd" in the "X-Registry-Config" header and used for authentication when an image needs to be pulled.

- Call the API to run the actual build.

Once all the required checks and information are ready, the CLI uses the API client wrapped by "dockerCli.Client" to send the request to "dockerd", which carries out the actual build.

response, err := dockerCli.Client().ImageBuild(ctx, body, buildOptions)

if err != nil {

if options.quiet {

fmt.Fprintf(dockerCli.Err(), "%s", progBuff)

}

cancel()

return err

}

defer response.Body.Close()

At this point the CLI's part of a build is essentially done. What remains is to print the progress according to the parameters passed in, or to write the image ID to a file. We won't go into that part.
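
The argument checks and the four kinds of build context described in this section can be sketched together as follows. This is a simplified illustration rather than the CLI's code: the "buildArgs" type, the helper names, the wording of the first error message, and the Git URL heuristic are all assumptions. Only the second error message and the final "not found" message are taken from the output and code shown above.

```go
package main

import (
	"errors"
	"fmt"
	"os"
	"strings"
)

// buildArgs is a hypothetical, reduced view of the build options.
type buildArgs struct {
	context    string // positional argument: path, URL, or "-"
	dockerfile string // value of -f; "-" means stdin
	stream     bool
	compress   bool
}

// validate rejects the two conflicting combinations described above.
func validate(a buildArgs) error {
	if a.stream && a.compress {
		// Hypothetical wording; the real CLI message may differ.
		return errors.New("stream and compress cannot be used together")
	}
	if a.context == "-" && a.dockerfile == "-" {
		return errors.New("invalid argument: can't use stdin for both build context and dockerfile")
	}
	return nil
}

func isLocalDir(p string) bool {
	info, err := os.Stat(p)
	return err == nil && info.IsDir()
}

func isHTTPURL(s string) bool {
	return strings.HasPrefix(s, "http://") || strings.HasPrefix(s, "https://")
}

// looksLikeGitURL is a rough guess, not the real urlutil.IsGitURL.
func looksLikeGitURL(s string) bool {
	return strings.HasPrefix(s, "git://") || strings.HasPrefix(s, "git@") ||
		(isHTTPURL(s) && strings.HasSuffix(s, ".git"))
}

// contextKind classifies the context argument in the same order as the switch.
func contextKind(a buildArgs) (string, error) {
	switch {
	case a.context == "-":
		return "stdin", nil
	case isLocalDir(a.context):
		return "local directory", nil
	case looksLikeGitURL(a.context):
		return "git repository", nil
	case isHTTPURL(a.context):
		return "remote URL", nil
	default:
		return "", fmt.Errorf("unable to prepare context: path %q not found", a.context)
	}
}

func main() {
	for _, a := range []buildArgs{
		{context: ".", dockerfile: "-"},
		{context: "-", dockerfile: "-"},
		{context: "https://example.com/repo.git"},
	} {
		if err := validate(a); err != nil {
			fmt.Println("rejected:", err)
			continue
		}
		kind, err := contextKind(a)
		fmt.Println(a.context, "->", kind, err)
	}
}
```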

Summary

The whole process is roughly as follows:

docker builder processing flow

Starting from the entry function "runBuild", the CLI checks whether "buildkit" is enabled; if it is not, it continues with the v1 "builder". It then reads the parameters and runs the processing logic each one calls for. Note that both the "Dockerfile" and the "build context" can be read from files or from "stdin", so pay attention to which form you use. In addition, the ".dockerignore" file can filter some files out of the "build context", which you can use to speed up builds. Also note that there is no "build context" when the "Dockerfile" is obtained through a URL, so instructions such as "COPY" cannot be used. Once the build context and parameters are ready, the CLI calls the wrapped client and sends the request to "dockerd" through the API introduced at the beginning of this article, where the real build logic runs.

Finally, when the build completes, the CLI decides from the parameters whether to display the build progress or the result.
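
To make the ".dockerignore" filtering step more tangible, here is a deliberately simplified sketch. It only supports the glob syntax of Go's "path.Match" plus a leading "!" to re-include a file, which is a small subset of what the real matcher handles; all function names are hypothetical.

```go
package main

import (
	"bufio"
	"fmt"
	"path"
	"strings"
)

// parseIgnore reads .dockerignore-style content: one pattern per line;
// blank lines and lines starting with '#' are skipped.
func parseIgnore(content string) []string {
	var patterns []string
	sc := bufio.NewScanner(strings.NewReader(content))
	for sc.Scan() {
		line := strings.TrimSpace(sc.Text())
		if line == "" || strings.HasPrefix(line, "#") {
			continue
		}
		patterns = append(patterns, path.Clean(line))
	}
	return patterns
}

// excluded reports whether name is filtered out. Later patterns win, and a
// leading '!' re-includes a previously excluded file.
func excluded(name string, patterns []string) bool {
	result := false
	for _, p := range patterns {
		negate := strings.HasPrefix(p, "!")
		p = strings.TrimPrefix(p, "!")
		if ok, _ := path.Match(p, name); ok {
			result = !negate
		}
	}
	return result
}

// filterContext keeps only the files that survive the ignore patterns.
func filterContext(files, patterns []string) []string {
	var kept []string
	for _, f := range files {
		if !excluded(f, patterns) {
			kept = append(kept, f)
		}
	}
	return kept
}

func main() {
	patterns := parseIgnore("# logs\n*.log\n!keep.log\nbar\n")
	files := []string{"Dockerfile", "foo", "bar", "a.log", "keep.log"}
	fmt.Println(filterContext(files, patterns))
}
```

With these patterns, "bar" and "a.log" are dropped before the context is packed, so a later "COPY bar bar" in the Dockerfile would fail to find the file.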

buildkit

Next, let's see how "buildkit" executes a build. The entry point is the same as for "builder", but at the "buildkitEnabled" check, because "buildkit" support is enabled, execution jumps to "runBuildBuildKit".

func runBuild(dockerCli command.Cli, options buildOptions) error {

buildkitEnabled, err := command.BuildKitEnabled(dockerCli.ServerInfo())

if err != nil {

return err

}

if buildkitEnabled {

return runBuildBuildKit(dockerCli, options)

}

//The actual logic for the builder is omitted

}

Create session

Unlike "builder", however, the "trySession" function is executed first.

// cli/command/image/build_buildkit.go#L50

s, err := trySession(dockerCli, options.context, false)

if err != nil {

return err

}

if s == nil {

return errors.Errorf("buildkit not supported by daemon")

}

What is this function used for? Let's find the file where the function is located: cli/command/image/build_session.go

// cli/command/image/build_session.go#L29

func trySession(dockerCli command.Cli, contextDir string, forStream bool) (*session.Session, error) {

if !isSessionSupported(dockerCli, forStream) {

return nil, nil

}

sharedKey := getBuildSharedKey(contextDir)

s, err := session.NewSession(context.Background(), filepath.Base(contextDir), sharedKey)

if err != nil {

return nil, errors.Wrap(err, "failed to create session")

}

return s, nil

}

Of course, it also includes the most important "isSessionSupported" function:

// cli/command/image/build_session.go#L22

func isSessionSupported(dockerCli command.Cli, forStream bool) bool {

if !forStream && versions.GreaterThanOrEqualTo(dockerCli.Client().ClientVersion(), "1.39") {

return true

}

return dockerCli.ServerInfo().HasExperimental && versions.GreaterThanOrEqualTo(dockerCli.Client().ClientVersion(), "1.31")

}

"isSessionSupported" obviously determines whether sessions are supported. Because we pass "forStream" as "false" and the current API version, 1.41, is greater than 1.39, this function returns "true". The same logic also runs in "builder", but only when the "--stream" parameter is passed; there the "Session" provides a long-lived connection that makes "stream" processing possible.

That is why the check dockerCli.ServerInfo().HasExperimental && versions.GreaterThanOrEqualTo(dockerCli.Client().ClientVersion(), "1.31") exists.
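
The version check is worth a closer look, because API versions must be compared component by component and not as plain strings. The following sketch reimplements the idea with hypothetical helpers "compareVersions" and "sessionSupported"; it is not the "versions" package used by the CLI.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// compareVersions returns -1, 0, or 1. Dotted components are compared as
// numbers, so "1.9" is lower than "1.31".
func compareVersions(a, b string) int {
	as, bs := strings.Split(a, "."), strings.Split(b, ".")
	n := len(as)
	if len(bs) > n {
		n = len(bs)
	}
	for i := 0; i < n; i++ {
		var x, y int // a missing or non-numeric component counts as 0
		if i < len(as) {
			x, _ = strconv.Atoi(as[i])
		}
		if i < len(bs) {
			y, _ = strconv.Atoi(bs[i])
		}
		if x < y {
			return -1
		}
		if x > y {
			return 1
		}
	}
	return 0
}

// sessionSupported mirrors the two branches of isSessionSupported, with the
// API version and the experimental flag passed in explicitly.
func sessionSupported(apiVersion string, experimental, forStream bool) bool {
	if !forStream && compareVersions(apiVersion, "1.39") >= 0 {
		return true
	}
	return experimental && compareVersions(apiVersion, "1.31") >= 0
}

func main() {
	fmt.Println(sessionSupported("1.41", false, false)) // BuildKit path
	fmt.Println(sessionSupported("1.41", false, true))  // stream without experimental
	fmt.Println(sessionSupported("1.35", true, true))   // stream with experimental
}
```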

Once "Session" support is confirmed, "session.NewSession" is called to create a new session.

// github.com/moby/buildkit/session/session.go#L47

func NewSession(ctx context.Context, name, sharedKey string) (*Session, error) {

id := identity.NewID()

var unary []grpc.UnaryServerInterceptor

var stream []grpc.StreamServerInterceptor

serverOpts := []grpc.ServerOption{}

if span := opentracing.SpanFromContext(ctx); span != nil {

tracer := span.Tracer()

unary = append(unary, otgrpc.OpenTracingServerInterceptor(tracer, traceFilter()))

stream = append(stream, otgrpc.OpenTracingStreamServerInterceptor(span.Tracer(), traceFilter()))

}

unary = append(unary, grpcerrors.UnaryServerInterceptor)

stream = append(stream, grpcerrors.StreamServerInterceptor)

if len(unary) == 1 {

serverOpts = append(serverOpts, grpc.UnaryInterceptor(unary[0]))

} else if len(unary) > 1 {

serverOpts = append(serverOpts, grpc.UnaryInterceptor(grpc_middleware.ChainUnaryServer(unary...)))

}

if len(stream) == 1 {

serverOpts = append(serverOpts, grpc.StreamInterceptor(stream[0]))

} else if len(stream) > 1 {

serverOpts = append(serverOpts, grpc.StreamInterceptor(grpc_middleware.ChainStreamServer(stream...)))

}

s := &Session{

id: id,

name: name,

sharedKey: sharedKey,

grpcServer: grpc.NewServer(serverOpts...),

}

grpc_health_v1.RegisterHealthServer(s.grpcServer, health.NewServer())

return s, nil

}

This creates a long-lived session, and the subsequent operations are based on it. What follows is roughly the same as in "builder". First the form of the provided "context" is determined; as with "builder", the "Dockerfile" and the "build context" cannot both be read from "stdin".

switch {

case options.contextFromStdin():

//Omit processing logic

case isLocalDir(options.context):

//Omit processing logic

case urlutil.IsGitURL(options.context):

//Omit processing logic

case urlutil.IsURL(options.context):

//Omit processing logic

default:

return errors.Errorf("unable to prepare context: path %q not found", options.context)

}

The processing logic here matches the v1 "builder" mainly for the sake of user experience: the CLI's functionality is largely stable and users are accustomed to it, so adding "BuildKit" did not change the main operating logic much.

Select output mode

"BuildKit" supports three output modes: "local", "tar", and the normal mode (that is, stored in "dockerd"). They are specified in the form "-o type=local,dest=path", which is convenient when you need to distribute the built image or browse the files in it.

outputs, err := parseOutputs(options.outputs)

if err != nil {

return errors.Wrapf(err, "failed to parse outputs")

}

for _, out := range outputs {

switch out.Type {

case "local":

//Omit

case "tar":

//Omit

}

}

There is in fact a fourth mode, "cacheonly". It has no directly visible output like the three modes above and probably has few users, so it is not covered separately.
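
As an illustration of the "type=local,dest=path" form, the sketch below splits such a specification into a type and its remaining attributes. It is a simplified stand-in for the CLI's parsing (for instance, it does not handle quoted values containing commas), and the "outputSpec" type and "parseOutput" function are hypothetical names.

```go
package main

import (
	"fmt"
	"strings"
)

// outputSpec holds one parsed -o value.
type outputSpec struct {
	Type  string
	Attrs map[string]string
}

// parseOutput splits "key=value,key=value" and pulls out the "type" key.
func parseOutput(spec string) (outputSpec, error) {
	out := outputSpec{Attrs: map[string]string{}}
	for _, field := range strings.Split(spec, ",") {
		kv := strings.SplitN(field, "=", 2)
		if len(kv) != 2 {
			return outputSpec{}, fmt.Errorf("invalid field %q, expected key=value", field)
		}
		key, value := strings.TrimSpace(kv[0]), strings.TrimSpace(kv[1])
		if key == "type" {
			out.Type = value
		} else {
			out.Attrs[key] = value
		}
	}
	if out.Type == "" {
		return outputSpec{}, fmt.Errorf("type is required in %q", spec)
	}
	return out, nil
}

func main() {
	for _, spec := range []string{"type=local,dest=./out", "type=tar,dest=image.tar", "dest=./out"} {
		out, err := parseOutput(spec)
		fmt.Printf("%q -> %+v err=%v\n", spec, out, err)
	}
}
```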

Read authentication information

dockerAuthProvider := authprovider.NewDockerAuthProvider(os.Stderr)

s.Allow(dockerAuthProvider)

The behavior here is basically the same as in "builder" described above. There are two main points to note:

- The "Allow()" function

func (s *Session) Allow(a Attachable) {

a.Register(s.grpcServer)

}

The "Allow" function allows the given service to be accessed through the gRPC session mentioned above.

- "authprovider"

"authprovider" is a set of abstract interfaces provided by "BuildKit" through which the configuration file on the machine can be read to obtain credentials. Its behavior is basically the same as in "builder".

Advanced features: "secrets" and "ssh"

I have covered how to use these two advanced features in other articles, so I won't repeat that here; we will just look at the principle and logic behind them.

"secretsprovider" and "sshprovider" are provided by "buildkit". With these two features, Docker images can be built more securely and flexibly.

func parseSecretSpecs(sl []string) (session.Attachable, error) {

fs := make([]secretsprovider.Source, 0, len(sl))

for _, v := range sl {

s, err := parseSecret(v)

if err != nil {

return nil, err

}

fs = append(fs, *s)

}

store, err := secretsprovider.NewStore(fs)

if err != nil {

return nil, err

}

return secretsprovider.NewSecretProvider(store), nil

}

For "secrets", "parseSecret" performs the format validation and related checks.

func parseSSHSpecs(sl []string) (session.Attachable, error) {

configs := make([]sshprovider.AgentConfig, 0, len(sl))

for _, v := range sl {

c := parseSSH(v)

configs = append(configs, *c)

}

return sshprovider.NewSSHAgentProvider(configs)

}

As for "ssh", it works much like "secrets" above: SSH forwarding is allowed through "sshprovider". We won't go further into it here.

Call API to send build request

There are two main situations here.

- When the "build context" is read from "stdin" and is a "tar" archive

buildID := stringid.GenerateRandomID()

if body != nil {

eg.Go(func() error {

buildOptions := types.ImageBuildOptions{

Version: types.BuilderBuildKit,

BuildID: uploadRequestRemote + ":" + buildID,

}

response, err := dockerCli.Client().ImageBuild(context.Background(), body, buildOptions)

if err != nil {

return err

}

defer response.Body.Close()

return nil

})

}

The logic above is executed, but note that it runs in a goroutine, so execution does not stop here: the code after this block also runs. That brings us to the other (and usual) situation.

- Use "doBuild" to complete the logic

eg.Go(func() error {

defer func() {

s.Close()

}()

buildOptions := imageBuildOptions(dockerCli, options)

buildOptions.Version = types.BuilderBuildKit

buildOptions.Dockerfile = dockerfileName

buildOptions.RemoteContext = remote

buildOptions.SessionID = s.ID()

buildOptions.BuildID = buildID

buildOptions.Outputs = outputs

return doBuild(ctx, eg, dockerCli, stdoutUsed, options, buildOptions)

})

What will doBuild do? It also calls the API and sends a build request to dockerd.

func doBuild(ctx context.Context, eg *errgroup.Group, dockerCli command.Cli, stdoutUsed bool, options buildOptions, buildOptions types.ImageBuildOptions, at session.Attachable) (finalErr error) {

response, err := dockerCli.Client().ImageBuild(context.Background(), nil, buildOptions)

if err != nil {

return err

}

defer response.Body.Close()

//Omit

}

To summarize so far: when the "build context" is read from "stdin" and is a "tar" archive, two /build requests are actually sent to "dockerd"; in the usual case, only one request is sent.

What is the difference between them? We won't go into it here; it is explained later, in the "dockerd" server section.

Summary

Here we analyzed how the CLI builds an image when "buildkit" is enabled. The general process is as follows:

Starting from the entry function "runBuild", the CLI checks whether "buildkit" is enabled; if it is, it calls "runBuildBuildKit". Unlike the v1 "builder", once "buildkit" is enabled a long-lived session is created first and kept open throughout. Next, as in "builder", the CLI determines the source and format of the "build context" and validates the parameters. "buildkit" supports three output formats: "tar", "local", or the normal form stored in the Docker directory. It also adds advanced features that let you configure "secrets" and "ssh" keys. Finally, the CLI calls the API to interact with "dockerd" and complete the image build.

Server: dockerd

The sections above covered the API, the CLI with the v1 "builder", and the CLI with "buildkit". Next, let's look at the principles and logic on the server side.

Client function

Remember the "ImageBuild" function from the section above, which interacts with the server through the API? Before turning to "dockerd", let's look at what this client function actually does.

// github.com/docker/docker/client/image_build.go#L20

func (cli *Client) ImageBuild(ctx context.Context, buildContext io.Reader, options types.ImageBuildOptions) (types.ImageBuildResponse, error) {

query, err := cli.imageBuildOptionsToQuery(options)

if err != nil {

return types.ImageBuildResponse{}, err

}

headers := http.Header(make(map[string][]string))

buf, err := json.Marshal(options.AuthConfigs)

if err != nil {

return types.ImageBuildResponse{}, err

}

headers.Add("X-Registry-Config", base64.URLEncoding.EncodeToString(buf))

headers.Set("Content-Type", "application/x-tar")

serverResp, err := cli.postRaw(ctx, "/build", query, buildContext, headers)

if err != nil {

return types.ImageBuildResponse{}, err

}

osType := getDockerOS(serverResp.header.Get("Server"))

return types.ImageBuildResponse{

Body: serverResp.body,

OSType: osType,

}, nil

}

There is nothing special here; the behavior matches the API. This confirms that the client does call the /build endpoint, so let's look at the /build endpoint in "dockerd" and see what it does when building an image.

dockerd

Since this article focuses on the build system, we won't go into detail about unrelated parts. Let's look directly at what happens when the CLI sends a request to the /build endpoint.

Let's first look at the API entry:

// api/server/router/build/build.go#L32

func (r *buildRouter) initRoutes() {

r.routes = []router.Route{

router.NewPostRoute("/build", r.postBuild),

router.NewPostRoute("/build/prune", r.postPrune),

router.NewPostRoute("/build/cancel", r.postCancel),

}

}

"dockerd" provides a set of RESTful back-end endpoints, and the entry point of the processing logic is the "postBuild" function above.

This function is long, so let's break down its main steps.
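
The routing idea can be reproduced with Go's standard "net/http" package. This is only an analogy to the router shown above, not its implementation: the handlers just write their own name, and "postOnly", "named", "newBuildRouter", and "probe" are hypothetical helpers.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
)

// postOnly rejects every method except POST, similar in spirit to NewPostRoute.
func postOnly(h http.HandlerFunc) http.HandlerFunc {
	return func(w http.ResponseWriter, r *http.Request) {
		if r.Method != http.MethodPost {
			http.Error(w, "method not allowed", http.StatusMethodNotAllowed)
			return
		}
		h(w, r)
	}
}

// named returns a handler that simply reports which handler was reached.
func named(name string) http.HandlerFunc {
	return func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprint(w, name)
	}
}

// newBuildRouter registers the three build routes from the listing above.
func newBuildRouter() *http.ServeMux {
	mux := http.NewServeMux()
	mux.HandleFunc("/build", postOnly(named("postBuild")))
	mux.HandleFunc("/build/prune", postOnly(named("postPrune")))
	mux.HandleFunc("/build/cancel", postOnly(named("postCancel")))
	return mux
}

// probe sends an in-memory request to the router and returns status and body.
func probe(h http.Handler, method, target string) (int, string) {
	rec := httptest.NewRecorder()
	h.ServeHTTP(rec, httptest.NewRequest(method, target, nil))
	return rec.Code, rec.Body.String()
}

func main() {
	router := newBuildRouter()
	for _, c := range [][2]string{{"POST", "/build"}, {"GET", "/build"}, {"POST", "/build/prune"}} {
		code, body := probe(router, c[0], c[1])
		fmt.Printf("%s %s -> %d %q\n", c[0], c[1], code, body)
	}
}
```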

buildOptions, err := newImageBuildOptions(ctx, r)

if err != nil {

return errf(err)

}

The "newImageBuildOptions" function constructs the build options: it converts the parameters submitted through the API into the form the build actually needs.

buildOptions.AuthConfigs = getAuthConfigs(r.Header)

The "getAuthConfigs" function reads the credentials from the request header.

imgID, err := br.backend.Build(ctx, backend.BuildConfig{

Source: body,

Options: buildOptions,

ProgressWriter: buildProgressWriter(out, wantAux, createProgressReader),

})

if err != nil {

return errf(err)

}

Note that the real build is about to begin here: the "Build" function of the backend carries out the actual build process.

// api/server/backend/build/backend.go#L53

func (b *Backend) Build(ctx context.Context, config backend.BuildConfig) (string, error) {

options := config.Options

useBuildKit := options.Version == types.BuilderBuildKit

tagger, err := NewTagger(b.imageComponent, config.ProgressWriter.StdoutFormatter, options.Tags)

if err != nil {

return "", err

}

var build *builder.Result

if useBuildKit {

build, err = b.buildkit.Build(ctx, config)

if err != nil {

return "", err

}

} else {

build, err = b.builder.Build(ctx, config)

if err != nil {

return "", err

}

}

if build == nil {

return "", nil

}

var imageID = build.ImageID

if options.Squash {

if imageID, err = squashBuild(build, b.imageComponent); err != nil {

return "", err

}

if config.ProgressWriter.AuxFormatter != nil {

if err = config.ProgressWriter.AuxFormatter.Emit("moby.image.id", types.BuildResult{ID: imageID}); err != nil {

return "", err

}

}

}

if !useBuildKit {

stdout := config.ProgressWriter.StdoutFormatter

fmt.Fprintf(stdout, "Successfully built %s\n", stringid.TruncateID(imageID))

}

if imageID != "" {

err = tagger.TagImages(image.ID(imageID))

}

return imageID, err

}

This function looks long, but its main functions are as follows:

- "NewTagger" is used to tag the image, that is, it handles what we pass with the "-t" parameter. We won't go into it here.

- It calls a different build backend depending on whether "buildkit" is used.

useBuildKit := options.Version == types.BuilderBuildKit

var build *builder.Result

if useBuildKit {

build, err = b.buildkit.Build(ctx, config)

if err != nil {

return "", err

}

} else {

build, err = b.builder.Build(ctx, config)

if err != nil {

return "", err

}

}

- It handles the actions that follow a completed build.
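
The dispatch step described above can be isolated into a small, self-contained sketch. Everything here is hypothetical scaffolding: the "backend" interface, the two fake builders, and the image IDs they return. The version strings "1" and "2" are assumed to correspond to the v1 builder and BuildKit constants.

```go
package main

import "fmt"

type builderVersion string

const (
	builderV1       builderVersion = "1" // assumed value for the v1 builder
	builderBuildKit builderVersion = "2" // assumed value for BuildKit
)

// backend is a hypothetical interface shared by both build implementations.
type backend interface {
	Build(tags []string) (imageID string, err error)
}

type v1Builder struct{}

func (v1Builder) Build(tags []string) (string, error) { return "sha256:v1-image", nil }

type buildKit struct{}

func (buildKit) Build(tags []string) (string, error) { return "sha256:buildkit-image", nil }

// dispatcher picks a backend from the requested version, like Backend.Build.
type dispatcher struct {
	builder  backend
	buildkit backend
}

func (d dispatcher) Build(version builderVersion, tags []string) (string, error) {
	useBuildKit := version == builderBuildKit

	var (
		imageID string
		err     error
	)
	if useBuildKit {
		imageID, err = d.buildkit.Build(tags)
	} else {
		imageID, err = d.builder.Build(tags)
	}
	if err != nil {
		return "", err
	}
	// Post-build steps such as squashing and tagging would follow here.
	return imageID, nil
}

func main() {
	d := dispatcher{builder: v1Builder{}, buildkit: buildKit{}}
	for _, v := range []builderVersion{builderV1, builderBuildKit, ""} {
		id, err := d.Build(v, []string{"demo:latest"})
		fmt.Printf("version=%q -> %s err=%v\n", v, id, err)
	}
}
```

Any version other than the BuildKit value, including an empty one, falls through to the v1 builder in this sketch.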

After this function, the v1 "builder" and "buildkit" each parse the "Dockerfile" and operate on the "build context".

That content is closely related to my next article, "Best practices for efficiently building Docker images", so I won't go into it here. Please look forward to the next article.

Summary

This article first introduced Docker's C/S architecture and the API used to build images; the API documentation can be viewed online or built locally. We then walked step by step through the CLI source code and saw how the v1 "builder" and "BuildKit" differ in the CLI. Finally, we went into the "dockerd" source code and saw how the different build backends are called. This covers the principles and main code of Docker image building.

Original link: https://mp.weixin.qq.com/s?__biz=MzI2ODAwMzUwNA==&mid=2649295920&idx=1&sn=b89577714e45ef6d1944be3e209b415b&scene=21#wechat_redirect