Advantages of thread pool

1. Threads are a scarce resource. A thread pool reuses its worker threads, which reduces the number of threads that are created and destroyed.

2. The number of worker threads in the pool can be tuned to what the system can sustain, which prevents the server from crashing because of excessive memory consumption.
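To make the reuse point concrete, here is a minimal sketch. The class name ThreadReuseDemo and the method runTasks are made up for this illustration; only the java.util.concurrent calls are real API. It runs many short tasks on a small pool and records which threads executed them.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class: counts the distinct threads used by a fixed pool.
class ThreadReuseDemo {
    // Runs taskCount short tasks on a pool of poolSize threads and returns
    // the names of the threads that actually executed them.
    static Set<String> runTasks(int poolSize, int taskCount) {
        Set<String> threadNames = ConcurrentHashMap.newKeySet();
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        for (int i = 0; i < taskCount; i++) {
            pool.execute(() -> threadNames.add(Thread.currentThread().getName()));
        }
        pool.shutdown(); // no new tasks; tasks already queued still run
        try {
            if (!pool.awaitTermination(10, TimeUnit.SECONDS)) {
                throw new IllegalStateException("pool did not terminate in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return threadNames;
    }

    public static void main(String[] args) {
        Set<String> names = runTasks(2, 100);
        System.out.println("100 tasks ran on " + names.size() + " thread(s): " + names);
    }
}
```

However many tasks are submitted, the set never holds more names than the pool size, because the same worker threads pick up task after task.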

Creation of thread pool

    public ThreadPoolExecutor(int corePoolSize,
                              int maximumPoolSize,
                              long keepAliveTime,
                              TimeUnit unit,
                              BlockingQueue<Runnable> workQueue,
                              RejectedExecutionHandler handler)

corePoolSize: the number of core threads in the thread pool

maximumPoolSize: the maximum number of threads in the thread pool

keepAliveTime: how long an idle thread may survive when the number of threads is greater than the number of core threads

unit: the time unit of keepAliveTime

workQueue: the queue in which pending tasks are stored

handler: the policy applied to a task that cannot be accepted because the pool has reached maximumPoolSize and the queue is full
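As a sketch of how these parameters fit together, the following builds a pool through the six-argument constructor and reads the settings back. The class name PoolConfigDemo and the specific values (2, 4, 30 seconds, a queue of 10) are arbitrary choices for this example, not recommendations.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class: one possible configuration of the six parameters.
class PoolConfigDemo {
    static ThreadPoolExecutor newPool() {
        return new ThreadPoolExecutor(
                2,                                      // corePoolSize
                4,                                      // maximumPoolSize
                30L, TimeUnit.SECONDS,                  // keepAliveTime and its unit
                new ArrayBlockingQueue<Runnable>(10),   // bounded workQueue
                new ThreadPoolExecutor.AbortPolicy());  // handler: throw on rejection
    }

    public static void main(String[] args) {
        ThreadPoolExecutor pool = newPool();
        System.out.println("core=" + pool.getCorePoolSize()
                + ", max=" + pool.getMaximumPoolSize()
                + ", keepAlive=" + pool.getKeepAliveTime(TimeUnit.SECONDS) + "s"
                + ", queue capacity=" + pool.getQueue().remainingCapacity());
        pool.shutdown();
    }
}
```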

Implementation principle of thread pool

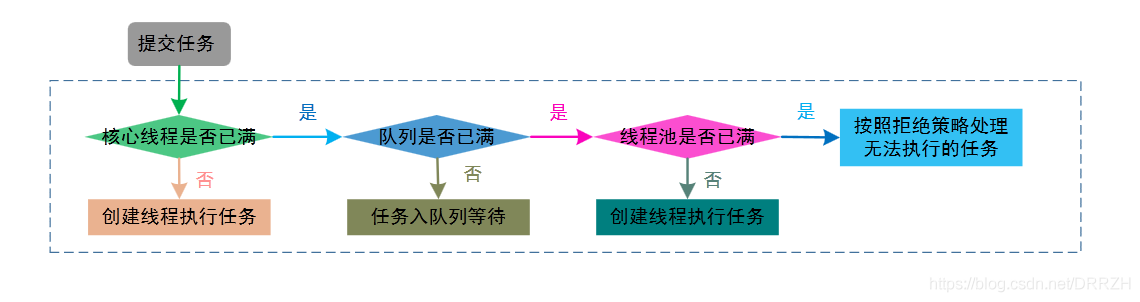

When a task is submitted to the thread pool, it is processed as follows:

1. The pool checks whether the number of worker threads is below the number of core threads. If it is, the pool creates a new worker thread to execute the task, even if other workers are idle. If the core threads have all been created, the flow moves to the next step.

2. The pool checks whether the work queue is full. If it is not full, the newly submitted task is stored in the work queue. If it is full, the flow moves to the next step.

3. The pool checks whether the number of worker threads has reached the maximum. If not, it creates a new worker thread to execute the task. If the pool is full, the task is handed to the saturation (rejection) policy.

In other words, when a task is submitted, the pool first checks whether the current number of threads is less than corePoolSize. If so, it creates a thread to execute the task. Otherwise, it puts the task into the workQueue. If the workQueue is full, it checks whether the current number of threads is less than maximumPoolSize. If so, it creates a thread to execute the task; otherwise it calls the handler, which signals that the thread pool has refused the task.
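The flow described above can be observed with a deliberately tiny pool. This is a sketch under assumptions: the class name SubmitFlowDemo and the sizes (1 core thread, 2 threads at most, a queue of capacity 1) are chosen only to make each step visible. Every task blocks on a latch, so the workers stay busy while the four submissions are made.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class: shows core thread -> queue -> extra thread -> rejection.
class SubmitFlowDemo {
    static List<String> submitFour() {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 2, 60L, TimeUnit.SECONDS, new ArrayBlockingQueue<Runnable>(1));
        CountDownLatch release = new CountDownLatch(1);
        Runnable blocker = () -> {
            try {
                release.await(); // keep the worker busy until the demo is over
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        };
        List<String> log = new ArrayList<>();
        try {
            for (int i = 1; i <= 4; i++) {
                try {
                    pool.execute(blocker);
                    log.add("task " + i + ": threads=" + pool.getPoolSize()
                            + ", queued=" + pool.getQueue().size());
                } catch (RejectedExecutionException e) {
                    log.add("task " + i + ": rejected"); // default AbortPolicy throws
                }
            }
        } finally {
            release.countDown(); // let the blocked tasks finish
            pool.shutdown();
        }
        return log;
    }

    public static void main(String[] args) {
        submitFour().forEach(System.out::println);
        // task 1: threads=1, queued=0   (new core thread)
        // task 2: threads=1, queued=1   (core full, task queued)
        // task 3: threads=2, queued=1   (queue full, extra thread created)
        // task 4: rejected              (queue full and pool at maximum)
    }
}
```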

The top-level interface for thread pools in Java is Executor. The implementation class we use most often is ThreadPoolExecutor. Its constructor is as follows:

    public ThreadPoolExecutor(int corePoolSize,                  // Number of core threads in the pool
                              int maximumPoolSize,               // Maximum number of threads in the pool
                              long keepAliveTime,                // How long an idle thread beyond the core size may live
                              TimeUnit unit,                     // Unit of keepAliveTime
                              BlockingQueue<Runnable> workQueue, // Blocking queue that holds pending tasks
                              ThreadFactory threadFactory,       // Factory used to create new worker threads
                              RejectedExecutionHandler handler   // Policy applied when workQueue is full and the pool has reached maximumPoolSize
    ) {
        // Validates the arguments and stores them in the pool's fields.
        // The shorter overloads delegate to this constructor, supplying
        // Executors.defaultThreadFactory() and/or the default handler (AbortPolicy).
    }
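The threadFactory parameter is the one most often left at its default. A minimal sketch of a custom factory follows; the class names NamedThreadFactory and ThreadFactoryDemo and the prefix "order-worker" are invented for this example. Naming worker threads is a common reason to supply a factory, since it makes thread dumps and logs easier to read.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical factory that gives each worker thread a readable name.
class NamedThreadFactory implements ThreadFactory {
    private final String prefix;
    private final AtomicInteger nextId = new AtomicInteger(1);

    NamedThreadFactory(String prefix) {
        this.prefix = prefix;
    }

    @Override
    public Thread newThread(Runnable task) {
        return new Thread(task, prefix + "-" + nextId.getAndIncrement());
    }
}

class ThreadFactoryDemo {
    // Returns the name of the thread that ran the first submitted task.
    static String nameOfFirstWorker() {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 1, 0L, TimeUnit.MILLISECONDS,
                new LinkedBlockingQueue<Runnable>(),
                new NamedThreadFactory("order-worker"),
                new ThreadPoolExecutor.AbortPolicy());
        try {
            Callable<String> task = () -> Thread.currentThread().getName();
            return pool.submit(task).get(5, TimeUnit.SECONDS);
        } catch (Exception e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(nameOfFirstWorker()); // prints "order-worker-1"
    }
}
```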

Note that the thread pool does not mark which threads are core threads and which are non-core threads. It only keeps track of how many threads exist and compares that count with corePoolSize.

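For the rejection policy, the handler can generally take one of the following four built-in values:

- ThreadPoolExecutor.AbortPolicy: throws a RejectedExecutionException (the default).

- ThreadPoolExecutor.CallerRunsPolicy: runs the task in the thread that submitted it.

- ThreadPoolExecutor.DiscardPolicy: silently drops the task.

- ThreadPoolExecutor.DiscardOldestPolicy: drops the oldest task in the queue and retries the submission.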
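As an illustration of one built-in policy, the sketch below saturates a one-thread pool and lets ThreadPoolExecutor.CallerRunsPolicy handle the overflow. The class name CallerRunsDemo is hypothetical. With this policy a rejected task is not lost; it runs on the thread that called execute, which also slows the submitter down.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical demo class: shows where a rejected task runs under CallerRunsPolicy.
class CallerRunsDemo {
    // Returns the name of the thread that ran the task the pool could not accept.
    static String threadThatRanOverflowTask() {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 1, 0L, TimeUnit.MILLISECONDS,
                new SynchronousQueue<Runnable>(),
                new ThreadPoolExecutor.CallerRunsPolicy());
        CountDownLatch release = new CountDownLatch(1);
        AtomicReference<String> ranOn = new AtomicReference<>();
        try {
            // First task occupies the only worker thread.
            pool.execute(() -> {
                try {
                    release.await();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            // No idle worker, no queue capacity, pool at maximum: the handler is invoked.
            pool.execute(() -> ranOn.set(Thread.currentThread().getName()));
        } finally {
            release.countDown();
            pool.shutdown();
        }
        return ranOn.get();
    }

    public static void main(String[] args) {
        System.out.println("overflow task ran on: " + threadThatRanOverflowTask());
    }
}
```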

A BlockingQueue is a thread-safe queue; the implementations discussed here are FIFO. No matter how high the concurrency, enqueue and dequeue operations are synchronized internally, so callers need no extra locking.

Queues are either bounded or unbounded.

Bounded queue: when the queue is full, only dequeue operations can proceed. All enqueue operations must wait, that is, they block.

When the queue is empty, only enqueue operations can proceed. All dequeue operations must wait, that is, they block.
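The blocking behavior can be seen with a queue of capacity 1. This sketch uses the timed offer and poll methods rather than put and take, so that the demo gives up after 50 ms instead of waiting forever. The class name BoundedQueueDemo is made up for the example.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class: a full queue blocks producers, an empty one blocks consumers.
class BoundedQueueDemo {
    static List<String> observe() {
        BlockingQueue<String> queue = new ArrayBlockingQueue<>(1);
        List<String> log = new ArrayList<>();
        try {
            log.add("offer a: " + queue.offer("a", 50, TimeUnit.MILLISECONDS)); // space available
            log.add("offer b: " + queue.offer("b", 50, TimeUnit.MILLISECONDS)); // full: waits, then gives up
            log.add("poll: " + queue.poll(50, TimeUnit.MILLISECONDS));          // returns the stored element
            log.add("poll: " + queue.poll(50, TimeUnit.MILLISECONDS));          // empty: waits, then gives up
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return log;
    }

    public static void main(String[] args) {
        observe().forEach(System.out::println);
        // offer a: true
        // offer b: false
        // poll: a
        // poll: null
    }
}
```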

workQueue: determines how pending tasks are queued. Different application scenarios call for different queuing strategies, and therefore different types of blocking queue. Two blocking queues are commonly used with thread pools:

- SynchronousQueue: this queue holds no tasks; every insert must be handed directly to a thread that is waiting to take it. When a task is submitted and no idle worker is waiting, the handoff fails, so the pool creates a new thread, and once maximumPoolSize is reached the task is rejected. For this reason a SynchronousQueue is usually paired with a very large maximumPoolSize, as in newCachedThreadPool.

- LinkedBlockingQueue: as the name suggests, a queue implemented with a linked list. It can be bounded or unbounded; the Executors factory methods use the unbounded form by default. With an unbounded queue the queue never fills, so the pool never grows beyond corePoolSize and maximumPoolSize has no effect.
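How the queue type interacts with maximumPoolSize can be checked directly. The sketch below submits the same four blocking tasks to two pools that differ only in their queue; the class name QueueChoiceDemo and the sizes (1 core thread, 4 at most) are assumptions made for the example.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class: same pool sizes, different queues.
class QueueChoiceDemo {
    // Submits `tasks` blocking tasks and reports the thread and queue counts.
    static String submitBlocked(BlockingQueue<Runnable> queue, int tasks) {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(1, 4, 60L, TimeUnit.SECONDS, queue);
        CountDownLatch release = new CountDownLatch(1);
        try {
            for (int i = 0; i < tasks; i++) {
                pool.execute(() -> {
                    try {
                        release.await(); // hold the worker so nothing is dequeued
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            }
            return "threads=" + pool.getPoolSize() + ", queued=" + pool.getQueue().size();
        } finally {
            release.countDown();
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        // Direct handoff: each task that finds no idle worker gets a new thread.
        System.out.println(submitBlocked(new SynchronousQueue<Runnable>(), 4));    // threads=4, queued=0
        // Unbounded queue: tasks pile up behind the single core thread.
        System.out.println(submitBlocked(new LinkedBlockingQueue<Runnable>(), 4)); // threads=1, queued=3
    }
}
```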

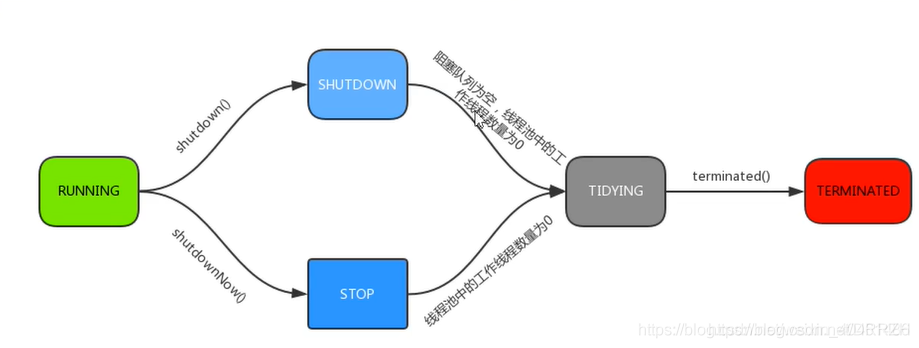

Five states of thread pool

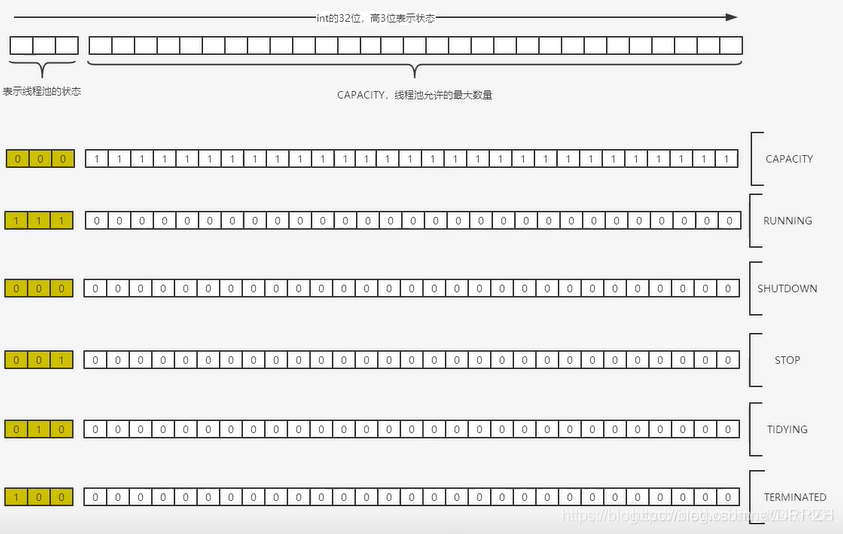

1. RUNNING: accepts new tasks and processes queued tasks.

2. SHUTDOWN: does not accept new tasks, but still processes queued tasks.

3. STOP: does not accept new tasks, does not process queued tasks, and interrupts tasks that are in progress.

4. TIDYING: all tasks have terminated and the worker count recorded in ctl is 0. (ctl records both the run state and the number of worker threads of the pool.)

5. TERMINATED: the thread pool has terminated completely.
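The five states are internal, but part of the lifecycle is visible through the public API. A small sketch, with the hypothetical class name LifecycleDemo: isShutdown() becomes true once the pool has left RUNNING, and isTerminated() becomes true once it has reached TERMINATED.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class: observes the pool before and after shutdown().
class LifecycleDemo {
    static List<String> observe() {
        ExecutorService pool = Executors.newFixedThreadPool(1);
        List<String> log = new ArrayList<>();
        log.add("before shutdown: isShutdown=" + pool.isShutdown());
        pool.shutdown(); // RUNNING -> SHUTDOWN
        log.add("after shutdown: isShutdown=" + pool.isShutdown());
        try {
            pool.execute(() -> { });
            log.add("new task accepted");
        } catch (RejectedExecutionException e) {
            log.add("new task rejected");
        }
        try {
            pool.awaitTermination(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        log.add("isTerminated=" + pool.isTerminated());
        return log;
    }

    public static void main(String[] args) {
        observe().forEach(System.out::println);
        // before shutdown: isShutdown=false
        // after shutdown: isShutdown=true
        // new task rejected
        // isTerminated=true
    }
}
```

Calling shutdownNow() instead of shutdown() would move the pool to STOP, interrupting running tasks and returning the tasks that were still queued.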

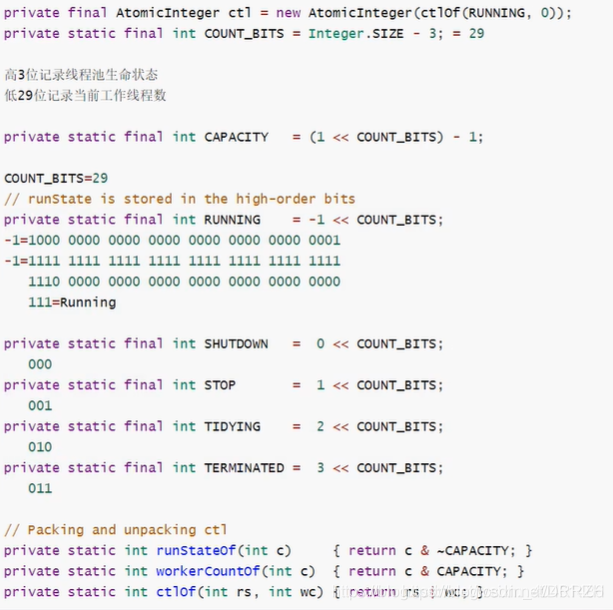

The life-cycle state is held in ctl, an AtomicInteger field that packs the run state and the worker count into a single value. Because both are updated in one atomic operation, the pool's state stays consistent under high concurrency.
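The packing idea can be sketched in a few lines. The layout below (run state in the top 3 bits, worker count in the low 29 bits) follows what the OpenJDK implementation does as far as we know, but the class CtlSketch itself is only an illustration and not a copy of the JDK source.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Illustrative sketch of packing a run state and a worker count into one int.
class CtlSketch {
    static final int COUNT_BITS = Integer.SIZE - 3;     // 29 bits for the worker count
    static final int CAPACITY = (1 << COUNT_BITS) - 1;  // mask for the low 29 bits

    // Run states live in the high 3 bits and are ordered numerically.
    static final int RUNNING = -1 << COUNT_BITS;
    static final int SHUTDOWN = 0 << COUNT_BITS;
    static final int STOP = 1 << COUNT_BITS;
    static final int TIDYING = 2 << COUNT_BITS;
    static final int TERMINATED = 3 << COUNT_BITS;

    static int ctlOf(int runState, int workerCount) { return runState | workerCount; }
    static int runStateOf(int ctl) { return ctl & ~CAPACITY; }
    static int workerCountOf(int ctl) { return ctl & CAPACITY; }

    public static void main(String[] args) {
        AtomicInteger ctl = new AtomicInteger(ctlOf(RUNNING, 0));
        ctl.incrementAndGet(); // one atomic step adds a worker without touching the state
        System.out.println("running=" + (runStateOf(ctl.get()) == RUNNING)
                + ", workers=" + workerCountOf(ctl.get())); // running=true, workers=1
    }
}
```

Because the states are ordered numerically, a check such as "has the pool at least reached SHUTDOWN" becomes a single integer comparison on the packed value.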

Source code analysis:

Factory method of thread pool in Executors

To keep users from misconfiguring the parameters of the ThreadPoolExecutor constructor, and to make creating a ThreadPoolExecutor more convenient and concise, Java SE defines the Executors class, which provides factory methods for creating common thread pools. The following is the source code of the factory methods in Executors that are used most often.

The source code shows that Executors indirectly calls the overloaded ThreadPoolExecutor constructors and chooses the parameters to suit different application scenarios.

1. newCachedThreadPool: uses a SynchronousQueue as the blocking queue. The queue holds no tasks and the maximum pool size is Integer.MAX_VALUE, so every task that finds no idle thread gets a new one; idle threads are reclaimed after 60 seconds. This type of pool suits IO-intensive services, where requests are numerous, bursty, and short-lived, and each one spends most of its time waiting for an IO response. Giving each IO request its own thread keeps the CPU from sitting idle while other requests wait on IO.

    public static ExecutorService newCachedThreadPool() {
        return new ThreadPoolExecutor(0, Integer.MAX_VALUE,
                                      60L, TimeUnit.SECONDS,
                                      new SynchronousQueue<Runnable>());
    }
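A sketch of the "one thread per waiting request" behavior follows. The class name CachedPoolDemo is hypothetical, and the latch merely stands in for an IO wait: each task holds its thread until every request is in flight, so no thread can be reused during the burst.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class: a cached pool grows to match a burst of waiting tasks.
class CachedPoolDemo {
    // Returns how many distinct threads served `requests` concurrent tasks.
    static int threadsUsed(int requests) {
        ExecutorService pool = Executors.newCachedThreadPool();
        Set<String> names = ConcurrentHashMap.newKeySet();
        CountDownLatch allStarted = new CountDownLatch(requests);
        for (int i = 0; i < requests; i++) {
            pool.execute(() -> {
                names.add(Thread.currentThread().getName());
                allStarted.countDown();
                try {
                    allStarted.await(5, TimeUnit.SECONDS); // stand-in for an IO wait
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
        }
        pool.shutdown();
        try {
            if (!pool.awaitTermination(10, TimeUnit.SECONDS)) {
                throw new IllegalStateException("pool did not terminate in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return names.size();
    }

    public static void main(String[] args) {
        System.out.println("threads used for 20 waiting requests: " + threadsUsed(20));
    }
}
```

The same property is the pool's main risk: nothing caps the number of threads, so a sustained flood of slow tasks can exhaust memory.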

2. newFixedThreadPool: the caller specifies the number of core threads, and the maximum number of threads is set to the same value. A LinkedBlockingQueue is used as the blocking queue; it is unbounded, and keepAliveTime is 0. This type of pool suits CPU-intensive work, where the CPU is busy computing and rarely idle. Because the number of threads the hardware can truly run in parallel is fixed (for example, four cores and eight hardware threads), creating more compute-bound threads than that brings little benefit.

    public static ExecutorService newFixedThreadPool(int nThreads) {
        return new ThreadPoolExecutor(nThreads, nThreads,
                                      0L, TimeUnit.MILLISECONDS,
                                      new LinkedBlockingQueue<Runnable>());
    }
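For CPU-bound work, one common approach (an assumption of this example, not a rule) is to size the pool from Runtime.getRuntime().availableProcessors(). The sketch below, with the hypothetical class name FixedPoolDemo, splits a summation into one chunk per worker and combines the partial results.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical demo class: CPU-bound work split across a fixed pool.
class FixedPoolDemo {
    // Computes 1 + 2 + ... + n using one chunk per available processor.
    static long parallelSum(int n) {
        int workers = Runtime.getRuntime().availableProcessors();
        ExecutorService pool = Executors.newFixedThreadPool(workers);
        try {
            int chunk = (n + workers - 1) / workers; // ceiling division
            List<Callable<Long>> parts = new ArrayList<>();
            for (int start = 1; start <= n; start += chunk) {
                final int from = start;
                final int to = Math.min(n, start + chunk - 1);
                parts.add(() -> {
                    long sum = 0;
                    for (int i = from; i <= to; i++) {
                        sum += i;
                    }
                    return sum;
                });
            }
            long total = 0;
            for (Future<Long> part : pool.invokeAll(parts)) { // waits for all chunks
                total += part.get();
            }
            return total;
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(parallelSum(1_000_000)); // prints 500000500000
    }
}
```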

3. newSingleThreadExecutor: the pool has exactly one worker thread and an unbounded blocking queue, so tasks are executed one at a time in the order in which they were submitted.

    public static ExecutorService newSingleThreadExecutor() {
        return new FinalizableDelegatedExecutorService
            (new ThreadPoolExecutor(1, 1,
                                    0L, TimeUnit.MILLISECONDS,
                                    new LinkedBlockingQueue<Runnable>()));
    }
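The ordering guarantee is easy to check. In this sketch (the class name SingleThreadDemo is made up), each task appends its own index to a shared list; with a single worker and a FIFO queue the indices come out in submission order.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class: tasks on a single-thread executor run in submission order.
class SingleThreadDemo {
    static List<Integer> runInOrder(int count) {
        ExecutorService executor = Executors.newSingleThreadExecutor();
        List<Integer> order = Collections.synchronizedList(new ArrayList<>());
        for (int i = 0; i < count; i++) {
            final int id = i;
            executor.execute(() -> order.add(id));
        }
        executor.shutdown();
        try {
            if (!executor.awaitTermination(10, TimeUnit.SECONDS)) {
                throw new IllegalStateException("executor did not terminate in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return order;
    }

    public static void main(String[] args) {
        System.out.println(runInOrder(5)); // prints [0, 1, 2, 3, 4]
    }
}
```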

4. newScheduledThreadPool: creates a thread pool with a fixed number of core threads that supports delayed and periodic task execution.

    public static ScheduledExecutorService newScheduledThreadPool(int corePoolSize) {
        // ScheduledThreadPoolExecutor extends ThreadPoolExecutor and uses a delay queue internally
        return new ScheduledThreadPoolExecutor(corePoolSize);
    }
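A brief sketch of periodic execution with Executors.newScheduledThreadPool follows. The class name ScheduledDemo, the 10 ms period, and the 5 second timeout are arbitrary choices for the example. The task is scheduled at a fixed rate, the caller waits until it has run a given number of times, and then the schedule is cancelled.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical demo class: runs a periodic task a minimum number of times.
class ScheduledDemo {
    // Returns how many times the task had run when the schedule was cancelled.
    static int runPeriodically(int times) {
        ScheduledExecutorService scheduler = Executors.newScheduledThreadPool(1);
        AtomicInteger runs = new AtomicInteger();
        CountDownLatch done = new CountDownLatch(times);
        try {
            ScheduledFuture<?> handle = scheduler.scheduleAtFixedRate(() -> {
                runs.incrementAndGet();
                done.countDown();
            }, 0, 10, TimeUnit.MILLISECONDS); // start now, repeat every 10 ms
            boolean finished = done.await(5, TimeUnit.SECONDS);
            handle.cancel(false); // stop future runs without interrupting a running one
            if (!finished) {
                throw new IllegalStateException("task did not run " + times + " times in time");
            }
            return runs.get();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } finally {
            scheduler.shutdownNow();
        }
    }

    public static void main(String[] args) {
        System.out.println("periodic task ran " + runPeriodically(3) + " time(s)");
    }
}
```

For a one-off delayed task, the schedule method of the same interface takes a delay instead of a period.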