Concurrent programming is essential knowledge for developing Internet applications. This article analyzes thread pools in order to build a better understanding of concurrent programming in Java.

The previous article, Thread Knowledge Compilation for Java Concurrent Programming, described the purpose and benefits of threads. Thread pools are designed to make better use of threads and maximize their value.

There are three ways to create a thread pool:

The first is to call the constructor of ThreadPoolExecutor. The full constructor takes seven parameters:

    public ThreadPoolExecutor(
            int corePoolSize,                   // number of core threads
            int maximumPoolSize,                // maximum number of threads
            long keepAliveTime,                 // how long threads beyond the core size may stay idle
            TimeUnit unit,                      // time unit of keepAliveTime
            BlockingQueue<Runnable> workQueue,  // task queue
            ThreadFactory threadFactory,        // factory used to create threads
            RejectedExecutionHandler handler    // rejection policy handler
    ) {}

The second is the constructor of the ScheduledThreadPoolExecutor class, which creates a pool of threads that run tasks after a delay or periodically at a fixed rate.

    public ScheduledThreadPoolExecutor(int corePoolSize,                   // number of core threads
                                       ThreadFactory threadFactory,        // factory that creates threads
                                       RejectedExecutionHandler handler) { // rejection policy used when the pool is saturated
        super(corePoolSize, Integer.MAX_VALUE, 0, NANOSECONDS,
              new DelayedWorkQueue(), threadFactory, handler);
    }

    public ScheduledFuture<?> scheduleAtFixedRate(Runnable command,   // the periodic task
                                                  long initialDelay,  // delay before the first run
                                                  long period,        // period between runs
                                                  TimeUnit unit) {    // time unit of the period
        if (command == null || unit == null)
            throw new NullPointerException();
        if (period <= 0)
            throw new IllegalArgumentException();
        ScheduledFutureTask<Void> sft =
            new ScheduledFutureTask<Void>(command,
                                          null,
                                          triggerTime(initialDelay, unit),
                                          unit.toNanos(period));
        RunnableScheduledFuture<Void> t = decorateTask(command, sft);
        sft.outerTask = t;
        delayedExecute(t);
        return t;
    }
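To make the periodic scheduling concrete, here is a minimal usage sketch. The class name FixedRateDemo and the method runPeriodically are hypothetical names chosen for illustration; only the java.util.concurrent calls come from the JDK. It schedules a task at a fixed rate, waits until it has fired a given number of times, then cancels it.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.ScheduledThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class, not part of the JDK or of the original article.
class FixedRateDemo {

    // Schedules a periodic task, waits until it has run `runs` times, then cancels it.
    // Returns true if all runs happened within the 5 second timeout.
    static boolean runPeriodically(int runs, long periodMillis) throws InterruptedException {
        ScheduledThreadPoolExecutor scheduler = new ScheduledThreadPoolExecutor(1);
        CountDownLatch latch = new CountDownLatch(runs);
        try {
            // initialDelay = 0: the first run starts immediately.
            ScheduledFuture<?> handle = scheduler.scheduleAtFixedRate(
                    latch::countDown, 0, periodMillis, TimeUnit.MILLISECONDS);
            boolean finished = latch.await(5, TimeUnit.SECONDS);
            handle.cancel(false); // stop further periodic runs
            return finished;
        } finally {
            scheduler.shutdownNow();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("completed: " + runPeriodically(3, 50));
    }
}
```

Note that a period of zero or less is rejected with IllegalArgumentException, as the check in scheduleAtFixedRate shows.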

The third is to use the static factory methods of the Executors class:

    // Elastic, scalable thread pool: the core size is 0 and the maximum size is Integer.MAX_VALUE.
    // While the thread count is below Integer.MAX_VALUE, a new thread is created whenever no idle
    // thread is available, and a thread is reclaimed once it has been idle for more than 60s.
    // Too many threads may be created, exhausting system resources. The pool uses a
    // SynchronousQueue, which stores no tasks and cannot buffer a backlog, so it relies
    // heavily on thread resources.
    public static ExecutorService newCachedThreadPool() {
        return new ThreadPoolExecutor(0, Integer.MAX_VALUE,
                                      60L, TimeUnit.SECONDS,
                                      new SynchronousQueue<Runnable>());
    }

    // Fixed-size thread pool: the core size and the maximum size are the same and the queue
    // is unbounded. While the thread count is below the core size, threads are added; once
    // the core size is reached, tasks join the unbounded queue. Because the thread count is
    // fixed, long-running tasks may cause a backlog to pile up in the queue.
    public static ExecutorService newFixedThreadPool(int nThreads) {
        return new ThreadPoolExecutor(nThreads, nThreads,
                                      0L, TimeUnit.MILLISECONDS,
                                      new LinkedBlockingQueue<Runnable>());
    }

    // Thread pool with a single thread and an unbounded LinkedBlockingQueue;
    // tasks execute in the order of submission.
    public static ExecutorService newSingleThreadExecutor() {
        return new FinalizableDelegatedExecutorService
            (new ThreadPoolExecutor(1, 1,
                                    0L, TimeUnit.MILLISECONDS,
                                    new LinkedBlockingQueue<Runnable>()));
    }

    // Creates a single-threaded scheduled thread pool. It is often used in middleware such as
    // RocketMQ and Dubbo to perform timed operations in the background.
    public static ScheduledExecutorService newSingleThreadScheduledExecutor() {
        return new DelegatedScheduledExecutorService
            (new ScheduledThreadPoolExecutor(1));
    }

The Java development manual produced by Alibaba explicitly forbids using the Executors class to create thread pools, because pools created through Executors carry the risk of exhausting resources.
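In the spirit of that recommendation, a pool can be built directly with the seven-parameter constructor so that every limit is explicit. The sketch below is one possible configuration, not a recommended default: the class name ExplicitPool, the thread name prefix biz-worker- and the choice of CallerRunsPolicy are assumptions made for illustration.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical helper class; the names and sizes are illustrative assumptions.
class ExplicitPool {

    static ThreadPoolExecutor create(int core, int max, int queueCapacity) {
        AtomicInteger seq = new AtomicInteger();
        // Named threads make thread dumps and logs easier to read.
        ThreadFactory namedThreads =
                r -> new Thread(r, "biz-worker-" + seq.incrementAndGet());
        return new ThreadPoolExecutor(
                core, max,
                60L, TimeUnit.SECONDS,                       // idle time for threads beyond the core size
                new ArrayBlockingQueue<>(queueCapacity),     // bounded queue: the backlog cannot grow without limit
                namedThreads,
                new ThreadPoolExecutor.CallerRunsPolicy());  // when saturated, the submitting thread runs the task
    }

    public static void main(String[] args) {
        ThreadPoolExecutor pool = create(2, 4, 100);
        pool.execute(() -> System.out.println("running on " + Thread.currentThread().getName()));
        pool.shutdown();
    }
}
```

Because both the queue and the maximum thread count are bounded, the memory and thread usage of this pool have a known upper limit, which is the property the Executors factory methods above lack.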

Thread pool principles

Storage structure of the thread pool: each thread is wrapped in a Worker object, and a HashSet stores all of the pool's worker threads.

    /**
     * Set containing all worker threads in pool. Accessed only when
     * holding mainLock.
     */
    private final HashSet<Worker> workers = new HashSet<Worker>();

Submit the task to the thread pool:

The flow of java.util.concurrent.ThreadPoolExecutor#execute(Runnable r):

    // 1. If the number of running threads is less than corePoolSize, start a core thread
    //    and make r the first task executed by that thread.
    // 2. If the core threads are all in use, add the task to the task queue.
    // 3. If the task queue is full, add non-core threads until maximumPoolSize is reached.
    // 4. After that, apply the rejection policy (RejectedExecutionHandler):
    //      AbortPolicy         (throws an exception; the default)
    //      DiscardPolicy       (silently discards the task)
    //      DiscardOldestPolicy (polls and discards the task at the head of the queue,
    //                           then tries to execute the current task)
    //      CallerRunsPolicy    (the calling thread invokes the run method directly)
    public void execute(Runnable command) {
        if (command == null)
            throw new NullPointerException();
        /*
         * Proceed in 3 steps:
         *
         * 1. If fewer than corePoolSize threads are running, try to
         * start a new thread with the given command as its first
         * task.  The call to addWorker atomically checks runState and
         * workerCount, and so prevents false alarms that would add
         * threads when it shouldn't, by returning false.
         *
         * 2. If a task can be successfully queued, then we still need
         * to double-check whether we should have added a thread
         * (because existing ones died since last checking) or that
         * the pool shut down since entry into this method. So we
         * recheck state and if necessary roll back the enqueuing if
         * stopped, or start a new thread if there are none.
         *
         * 3. If we cannot queue task, then we try to add a new
         * thread.  If it fails, we know we are shut down or saturated
         * and so reject the task.
         */
        int c = ctl.get();
        if (workerCountOf(c) < corePoolSize) {
            if (addWorker(command, true))
                return;
            c = ctl.get();
        }
        if (isRunning(c) && workQueue.offer(command)) {
            int recheck = ctl.get();
            if (!isRunning(recheck) && remove(command))
                reject(command);
            else if (workerCountOf(recheck) == 0)
                addWorker(null, false);
        }
        else if (!addWorker(command, false))
            reject(command);
    }

Thread pools can also be warmed up by pre-creating the core threads.
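Warm-up can be observed with a small sketch. The class WarmUpDemo and its method are hypothetical names; the calls prestartAllCoreThreads and getPoolSize are standard ThreadPoolExecutor methods. It shows that a new pool has no threads until work arrives or the core threads are started explicitly.

```java
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class for illustration only.
class WarmUpDemo {

    // Returns {pool size before warm-up, threads started by warm-up, pool size after warm-up}.
    static int[] warmUp(int coreSize) {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                coreSize, coreSize, 0L, TimeUnit.MILLISECONDS, new LinkedBlockingQueue<>());
        try {
            int before = pool.getPoolSize();             // threads are created lazily
            int started = pool.prestartAllCoreThreads(); // returns the number of threads started
            int after = pool.getPoolSize();
            return new int[] {before, started, after};
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        int[] r = warmUp(4);
        System.out.println("before=" + r[0] + ", started=" + r[1] + ", after=" + r[2]);
        // prints "before=0, started=4, after=4"
    }
}
```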

    java.util.concurrent.ThreadPoolExecutor#prestartCoreThread      // start one core thread
    java.util.concurrent.ThreadPoolExecutor#ensurePrestart          // start at least one thread
    java.util.concurrent.ThreadPoolExecutor#prestartAllCoreThreads  // start all core threads

    // Control core thread idle time
    java.util.concurrent.ThreadPoolExecutor#allowCoreThreadTimeOut  // enable recycling of core threads (off by default)

Submitting a task, second approach:

    java.util.concurrent.AbstractExecutorService#submit(java.util.concurrent.Callable<T>)  // also calls execute, but first wraps the task in a FutureTask
    java.util.concurrent.FutureTask#FutureTask(java.util.concurrent.Callable<V>)

FutureTask implements the run method of the Runnable interface and holds a reference to the Callable. The FutureTask is the object that is actually submitted to the thread pool.

    // The thread pool calls this run method
    public void run() {
        if (state != NEW ||
            !RUNNER.compareAndSet(this, null, Thread.currentThread()))
            return;
        try {
            Callable<V> c = callable;
            if (c != null && state == NEW) {
                V result;
                boolean ran;
                try {
                    // invoke the task's call method
                    result = c.call();
                    ran = true;
                } catch (Throwable ex) {
                    result = null;
                    ran = false;
                    setException(ex);
                }
                if (ran)
                    // set the completion state and the result;
                    // LockSupport.unpark wakes up the threads waiting for the result
                    set(result);
            }
        } finally {
            // runner must be non-null until state is settled to
            // prevent concurrent calls to run()
            runner = null;
            // state must be re-read after nulling runner to prevent
            // leaked interrupts
            int s = state;
            if (s >= INTERRUPTING)
                handlePossibleCancellationInterrupt(s);
        }
    }

(Figure: thread pool task submission logic flowchart)
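From the caller's side, the two branches of FutureTask.run (set(result) and setException(ex)) show up in Future.get. The following sketch assumes a hypothetical class SubmitDemo with a small single-thread pool; it is meant only to illustrate the behavior, not to mirror any code from the article.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Future;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class for illustration only.
class SubmitDemo {

    private static ExecutorService newPool() {
        return new ThreadPoolExecutor(1, 1, 0L, TimeUnit.MILLISECONDS, new LinkedBlockingQueue<>());
    }

    // Normal completion: the value stored by set(result) is returned by get().
    static int sum(int a, int b) throws Exception {
        ExecutorService pool = newPool();
        try {
            Callable<Integer> task = () -> a + b;
            Future<Integer> future = pool.submit(task);
            return future.get(5, TimeUnit.SECONDS);
        } finally {
            pool.shutdown();
        }
    }

    // Failure: the exception stored by setException(ex) is rethrown by get(),
    // wrapped in an ExecutionException.
    static String failureCause() throws Exception {
        ExecutorService pool = newPool();
        try {
            Callable<Integer> failing = () -> { throw new IllegalStateException("boom"); };
            Future<Integer> future = pool.submit(failing);
            try {
                future.get(5, TimeUnit.SECONDS);
                return "no exception";
            } catch (ExecutionException e) {
                Throwable cause = e.getCause();
                return cause.getClass().getSimpleName() + ": " + cause.getMessage();
            }
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println(sum(2, 3));        // prints "5"
        System.out.println(failureCause());   // prints "IllegalStateException: boom"
    }
}
```

One practical consequence: an exception thrown by a submitted task stays hidden inside the FutureTask until someone calls get, so results of submit should not be ignored.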

Working principle:

    final void runWorker(Worker w) {
        Thread wt = Thread.currentThread();
        Runnable task = w.firstTask;
        w.firstTask = null;
        w.unlock(); // allow interrupts
        boolean completedAbruptly = true;
        try {
            // Keep getting tasks from the queue.
            while (task != null || (task = getTask()) != null) {
                w.lock();
                // If pool is stopping, ensure thread is interrupted;
                // if not, ensure thread is not interrupted.  This
                // requires a recheck in second case to deal with
                // shutdownNow race while clearing interrupt
                if ((runStateAtLeast(ctl.get(), STOP) ||
                     (Thread.interrupted() &&
                      runStateAtLeast(ctl.get(), STOP))) &&
                    !wt.isInterrupted())
                    wt.interrupt();
                try {
                    beforeExecute(wt, task); // hook: subclasses can override this for monitoring
                    Throwable thrown = null;
                    try {
                        task.run();
                    } catch (RuntimeException x) {
                        thrown = x; throw x;
                    } catch (Error x) {
                        thrown = x; throw x;
                    } catch (Throwable x) {
                        thrown = x; throw new Error(x);
                    } finally {
                        afterExecute(task, thrown); // hook: subclasses can override this for monitoring
                    }
                } finally {
                    task = null;
                    w.completedTasks++;
                    w.unlock();
                }
            }
            completedAbruptly = false;
        } finally {
            processWorkerExit(w, completedAbruptly);
        }
    }

The task wrapper class Worker:

    // Extends AQS: the worker must acquire its own lock before executing each task.
    private final class Worker
        extends AbstractQueuedSynchronizer
        implements Runnable
    {
        /**
         * This class will never be serialized, but we provide a
         * serialVersionUID to suppress a javac warning.
         */
        private static final long serialVersionUID = 6138294804551838833L;

        /** Thread this worker is running in.  Null if factory fails. */
        final Thread thread;
        /** Initial task to run.  Possibly null. */
        Runnable firstTask;
        /** Per-thread task counter */
        volatile long completedTasks;

        /**
         * Creates with given first task and thread from ThreadFactory.
         * @param firstTask the first task (null if none)
         */
        Worker(Runnable firstTask) {
            setState(-1); // inhibit interrupts until runWorker
            this.firstTask = firstTask;
            this.thread = getThreadFactory().newThread(this);
        }

        /** Delegates main run loop to outer runWorker */
        public void run() {
            runWorker(this);
        }

        // Lock methods
        //
        // The value 0 represents the unlocked state.
        // The value 1 represents the locked state.

        protected boolean isHeldExclusively() {
            return getState() != 0;
        }

        protected boolean tryAcquire(int unused) {
            if (compareAndSetState(0, 1)) {
                setExclusiveOwnerThread(Thread.currentThread());
                return true;
            }
            return false;
        }

        protected boolean tryRelease(int unused) {
            setExclusiveOwnerThread(null);
            setState(0);
            return true;
        }

        public void lock()        { acquire(1); }
        public boolean tryLock()  { return tryAcquire(1); }
        public void unlock()      { release(1); }
        public boolean isLocked() { return isHeldExclusively(); }

        void interruptIfStarted() {
            Thread t;
            if (getState() >= 0 && (t = thread) != null && !t.isInterrupted()) {
                try {
                    t.interrupt();
                } catch (SecurityException ignore) {
                }
            }
        }
    }

A thread pool has three common queue choices:

1. SynchronousQueue: a hand-off queue that does not store tasks but transfers them directly to threads.

Tasks are handed directly to threads, and a new thread is created when no thread is available (the queue has no capacity to hold a backlog of tasks). This can lead to continuous thread creation that consumes system resources such as memory and CPU, but it avoids lockups when tasks depend on one another. It is suitable for tasks with short execution times.

2. LinkedBlockingQueue

An unbounded queue. With the JDK's thread pool it makes the maximum thread count setting ineffective: tasks can always be added to the queue, so if all threads are busy, tasks wait a long time (they may pile up and go unexecuted for too long), and the backlog also consumes system resources such as memory.

3. ArrayBlockingQueue

A bounded queue. When the queue is full, the pool creates non-core threads.
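The difference between the three queues can be seen by submitting the same workload to otherwise identical pools. In this sketch the class QueueChoiceDemo, the method observe and the pool sizes (1 core thread, at most 3 threads) are assumptions chosen for illustration. Every task blocks until released, so the counts are taken while all submitted work is still pending.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class for illustration only.
class QueueChoiceDemo {

    // Submits `tasks` blocking tasks to a pool with corePoolSize = 1 and maximumPoolSize = 3
    // that uses the given queue, then reports how the pool distributed them.
    static String observe(BlockingQueue<Runnable> queue, int tasks) {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(1, 3, 60L, TimeUnit.SECONDS, queue);
        CountDownLatch release = new CountDownLatch(1);
        int rejected = 0;
        try {
            for (int i = 0; i < tasks; i++) {
                try {
                    pool.execute(() -> awaitQuietly(release));
                } catch (RejectedExecutionException e) {
                    rejected++; // thrown by the default AbortPolicy
                }
            }
            return "threads=" + pool.getPoolSize()
                    + ", queued=" + queue.size()
                    + ", rejected=" + rejected;
        } finally {
            release.countDown(); // let the blocked tasks finish
            pool.shutdown();
        }
    }

    private static void awaitQuietly(CountDownLatch latch) {
        try {
            latch.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) {
        // Hand-off: every task needs its own thread, the 4th exceeds maximumPoolSize.
        System.out.println(observe(new SynchronousQueue<>(), 4));      // threads=3, queued=0, rejected=1
        // Unbounded: the pool never grows past the core size.
        System.out.println(observe(new LinkedBlockingQueue<>(), 4));   // threads=1, queued=3, rejected=0
        // Bounded (capacity 1): non-core threads appear once the queue is full.
        System.out.println(observe(new ArrayBlockingQueue<>(1), 4));   // threads=3, queued=1, rejected=0
    }
}
```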

Using large queues (task queues) and small pools (core thread count, maximum thread count) minimizes CPU usage, operating system resources, and context-switching overhead, but can result in artificially low throughput (fewer tasks are processed at the same time). If tasks block frequently, for example because they are I/O bound, the system may be able to schedule more threads than the pool allows.

Using small queues usually requires a larger pool size, which keeps the CPU busier but may incur unacceptable scheduling overhead, which also reduces throughput.

Therefore, the queue size needs to be configured properly to maximize the utilization of CPU resources and improve system throughput.

A classic interview question: how should the thread pool size be set?

If the behavior of the tasks to be executed varies greatly, multiple thread pools should be set up so that each pool can be tuned to its own workload.

Considerations: the number of CPUs, the memory size, and whether the tasks are compute-intensive, I/O-intensive, or both.

Assume the following: N is the number of CPUs, Ucpu is the target CPU utilization (0 <= Ucpu <= 1), and W/C is the ratio of task wait time to task compute time.

For compute-intensive tasks: optimal utilization can usually be achieved when the thread pool size is set to N+1.

For tasks that contain I/O or other blocking operations, the thread pool can be larger because the threads are not always executing; the ratio of task wait time to compute time can be estimated to size it.
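The article does not spell out a formula, but the quantities defined above match the commonly cited sizing rule from Java Concurrency in Practice: threads = N * Ucpu * (1 + W/C). The sketch below simply evaluates that rule; the class PoolSizing and its method are hypothetical names, and the result should be treated as a starting point for measurement rather than a definitive answer.

```java
// Hypothetical helper class; it evaluates threads = N * Ucpu * (1 + W/C).
class PoolSizing {

    static int poolSize(int cpus, double targetUtilization, double waitTime, double computeTime) {
        if (cpus <= 0 || targetUtilization < 0 || targetUtilization > 1
                || waitTime < 0 || computeTime <= 0) {
            throw new IllegalArgumentException("invalid sizing inputs");
        }
        double size = cpus * targetUtilization * (1 + waitTime / computeTime);
        return Math.max(1, (int) Math.round(size)); // a pool needs at least one thread
    }

    public static void main(String[] args) {
        int cpus = Runtime.getRuntime().availableProcessors();
        // Pure computation (W/C = 0) at full utilization: one thread per CPU.
        System.out.println("compute-bound: " + poolSize(cpus, 1.0, 0, 1));
        // Tasks that wait 9 ms for every 1 ms of computation, targeting 50% utilization.
        System.out.println("I/O-bound:     " + poolSize(cpus, 0.5, 9, 1));
    }
}
```

For example, with 8 CPUs, a target utilization of 0.5 and W/C = 9, the rule gives 8 * 0.5 * 10 = 40 threads. With W/C = 0 it gives N; the N+1 rule of thumb adds one spare thread on top of that.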

How is thread reuse achieved?

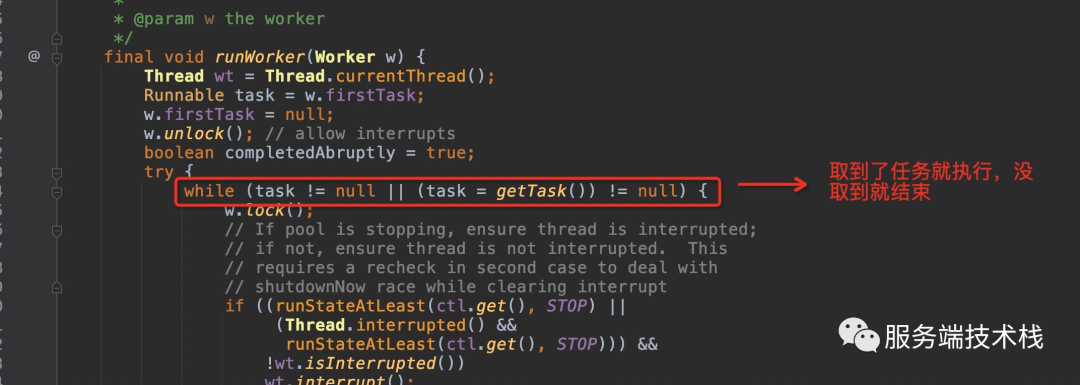

1. A thread keeps taking tasks from the task queue and executing them; when it fails to get a task, the thread ends.
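The idea can be reduced to a few lines. The following is a deliberately simplified stand-in, not the JDK implementation: the class WorkerLoopDemo, the method workerLoop and the 100 ms idle timeout are all assumptions made for illustration. A fixed number of threads loop over a shared queue, so many tasks are served by few threads, and a thread ends when polling the queue returns nothing.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Simplified illustration of thread reuse; not how ThreadPoolExecutor is actually written.
class WorkerLoopDemo {

    // The worker keeps taking tasks; when poll times out and returns null, the loop
    // ends and with it the thread.
    static void workerLoop(BlockingQueue<Runnable> queue, long idleTimeoutMillis) {
        try {
            Runnable task;
            while ((task = queue.poll(idleTimeoutMillis, TimeUnit.MILLISECONDS)) != null) {
                task.run();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    // Runs `tasks` tasks on `threads` worker threads.
    // Returns {number of tasks executed, number of distinct threads that executed them}.
    static int[] run(int threads, int tasks) throws InterruptedException {
        BlockingQueue<Runnable> queue = new LinkedBlockingQueue<>();
        Set<String> threadNames = ConcurrentHashMap.newKeySet();
        AtomicInteger executed = new AtomicInteger();
        for (int i = 0; i < tasks; i++) {
            queue.add(() -> {
                threadNames.add(Thread.currentThread().getName());
                executed.incrementAndGet();
            });
        }
        List<Thread> workers = new ArrayList<>();
        for (int i = 0; i < threads; i++) {
            Thread t = new Thread(() -> workerLoop(queue, 100), "worker-" + i);
            workers.add(t);
            t.start();
        }
        for (Thread t : workers) {
            t.join(); // each worker exits once the queue stays empty for the timeout
        }
        return new int[] {executed.get(), threadNames.size()};
    }

    public static void main(String[] args) throws InterruptedException {
        int[] r = run(2, 10);
        System.out.println("tasks executed=" + r[0] + ", threads used=" + r[1]);
    }
}
```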

Implementation of getTask:

How threads beyond the core size are recycled: when the thread count exceeds the core size, getTask waits for a task with a timeout (keepAliveTime) and returns empty-handed if none arrives, after which the thread exits.
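This recycling can be watched from the outside. The sketch below assumes a hypothetical class KeepAliveDemo with a pool of 1 core thread, at most 3 threads and a keepAliveTime of 100 ms; it polls getPoolSize because worker exit happens asynchronously.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class for illustration only.
class KeepAliveDemo {

    // Returns {pool size while 3 tasks are running, pool size after the threads have been idle}.
    static int[] burstThenIdle() throws InterruptedException {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 3, 100L, TimeUnit.MILLISECONDS, new SynchronousQueue<>());
        CountDownLatch release = new CountDownLatch(1);
        try {
            for (int i = 0; i < 3; i++) {
                pool.execute(() -> {
                    try {
                        release.await();
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            }
            int duringBurst = pool.getPoolSize();
            release.countDown(); // all tasks finish, the threads become idle

            // Wait (up to 5 s) for the idle non-core threads to time out and exit.
            long deadline = System.nanoTime() + TimeUnit.SECONDS.toNanos(5);
            while (pool.getPoolSize() > pool.getCorePoolSize() && System.nanoTime() < deadline) {
                Thread.sleep(20);
            }
            return new int[] {duringBurst, pool.getPoolSize()};
        } finally {
            release.countDown();
            pool.shutdown();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        int[] r = burstThenIdle();
        System.out.println("during burst=" + r[0] + ", after idle=" + r[1]);
    }
}
```

The pool grows to 3 threads for the burst and then falls back to the single core thread, which keeps waiting because core threads are not recycled by default.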

When the thread pool stops, threads are also recycled:

Thread recycling uses CAS to stay correct when several threads are recycled concurrently:

How does the thread pool change when the maximum number of threads is reduced? The redundant threads are recycled.
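A small experiment makes this visible. The class ShrinkMaxDemo and its method are hypothetical names used for illustration; setMaximumPoolSize and getPoolSize are standard ThreadPoolExecutor methods. The pool is first grown to its maximum, then the maximum is lowered and the pool size is polled until the excess threads have exited.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class for illustration only.
class ShrinkMaxDemo {

    // Returns {pool size before shrinking, pool size after shrinking}.
    static int[] shrink(int initialMax, int newMax) throws InterruptedException {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, initialMax, 60L, TimeUnit.SECONDS, new SynchronousQueue<>());
        CountDownLatch release = new CountDownLatch(1);
        try {
            // One blocking task per thread drives the pool to its maximum size.
            for (int i = 0; i < initialMax; i++) {
                pool.execute(() -> {
                    try {
                        release.await();
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            }
            int before = pool.getPoolSize();
            release.countDown();
            pool.setMaximumPoolSize(newMax); // excess threads exit once they are idle

            long deadline = System.nanoTime() + TimeUnit.SECONDS.toNanos(5);
            while (pool.getPoolSize() > newMax && System.nanoTime() < deadline) {
                Thread.sleep(20);
            }
            return new int[] {before, pool.getPoolSize()};
        } finally {
            release.countDown();
            pool.shutdown();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        int[] r = shrink(4, 2);
        System.out.println("before=" + r[0] + ", after=" + r[1]);
    }
}
```

Even though keepAliveTime is 60 seconds here, the surplus threads leave promptly, because the check against the maximum size does not depend on the idle timeout.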

As the code diagrams above show, the thread pool implements core functions such as thread recycling and thread reuse in the getTask method.

There is much more to know about thread pools than this article covers. In particular, the state of the thread pool is a complex topic that I have not fully worked out yet, and I will clarify it in a later article.

2022: keep going and keep learning. Perseverance is victory ✌