Master + Slave

Introduction to master-slave mode

Master-slave mode is the simplest of the three cluster modes. It is built on Redis's master-slave replication feature. Usually we set up one master node and N slave nodes. By default, the master node handles the user's read and write (IO) operations, while the slave nodes back up the master's data and serve read operations. The main features are as follows:

- In master-slave mode, data is backed up to other Redis instances, so when a node is damaged the lost data can be recovered to a large extent.

- In master-slave mode, load balancing can be achieved; it will not be described here.

- In master-slave mode, reads and writes are separated between the master and slave nodes. Users can read data not only from the master node but also from the slave nodes, which relieves pressure on the master to a certain extent.

- A slave node can also accept writes if it is configured to do so (slaves are read-only by default, controlled by the replica-read-only setting), but data written to a slave is not synchronized to the master node or to the other slave nodes.

From the above, it is not difficult to see that in master-slave mode, Redis must ensure that the master node does not go down. Once the master goes down, the other nodes will not compete to become the new master. At that point, Redis loses the ability to accept writes, which is fatal in a production environment.
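The read-write separation described above can be sketched in a few lines of Python. This is only an illustration of the routing idea, not how any particular Redis client does it: the `ReadWriteRouter` class, the node addresses and the short list of write commands are all assumptions made up for the example.

```python
from itertools import cycle
from typing import List


class ReadWriteRouter:
    """Route writes to the master and spread reads across the slaves."""

    # Hypothetical, deliberately tiny set of write commands for illustration.
    WRITE_COMMANDS = {"SET", "DEL", "INCR", "LPUSH", "EXPIRE"}

    def __init__(self, master: str, slaves: List[str]):
        self.master = master
        # Round-robin over the slaves; fall back to the master if there are none.
        self._read_targets = cycle(slaves or [master])

    def node_for(self, command: str) -> str:
        if command.upper() in self.WRITE_COMMANDS:
            return self.master
        return next(self._read_targets)


# Assumed addresses, matching the service names used in the compose file below.
router = ReadWriteRouter("redis-master:6379", ["redis-slave1:6379", "redis-slave2:6379"])
for command in ("SET", "GET", "GET", "GET"):
    print(command, "->", router.node_for(command))
```

Note that if the master is down, every write routed this way fails, which is exactly the weakness of plain master-slave mode.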

Master-slave rapid deployment

Image usage: https://hub.docker.com/r/bitnami/redis

GitHub address: https://github.com/bitnami/bitnami-docker-redis

I won't cover installing docker-compose here. If you are not familiar with it, install it by following this article: https://blog.csdn.net/weixin_45444133/article/details/110588884

Write the docker-compose YAML

    version: '3'

    services:
      # redis-master
      redis-master:
        user: root
        container_name: redis-master
        image: docker.io/bitnami/redis:6.2
        environment:
          - REDIS_REPLICATION_MODE=master
          - REDIS_PASSWORD=masterpassword123
        ports:
          - '6379:6379'
        volumes:
          - ./redis/r1:/bitnami
        networks:
          - redis-cluster-network

      # redis-slave1
      redis-slave1:
        user: root
        container_name: redis-slave1
        image: docker.io/bitnami/redis:6.2
        environment:
          - REDIS_REPLICATION_MODE=slave
          - REDIS_MASTER_HOST=redis-master
          - REDIS_PASSWORD=masterpassword123
          - REDIS_MASTER_PASSWORD=masterpassword123
        depends_on:
          - redis-master
        volumes:
          - ./redis/r2:/bitnami
        networks:
          - redis-cluster-network

      # redis-slave2
      redis-slave2:
        user: root
        container_name: redis-slave2
        image: docker.io/bitnami/redis:6.2
        environment:
          - REDIS_REPLICATION_MODE=slave
          - REDIS_MASTER_HOST=redis-master
          - REDIS_PASSWORD=masterpassword123
          - REDIS_MASTER_PASSWORD=masterpassword123
        depends_on:
          - redis-master
        volumes:
          - ./redis/r3:/bitnami
        networks:
          - redis-cluster-network

    networks:
      redis-cluster-network:
        driver: bridge
        ipam:
          config:
            - subnet: 192.168.88.0/24

Start & verify

    docker-compose up -d

    # Enter the container for verification
    docker exec -it redis-master /bin/bash

    # Inside the container
    redis-cli -a masterpassword123

    # After logging in, run info and check the Replication section to verify the master-slave setup
    127.0.0.1:6379> info
    ...
    # Replication
    role:master
    connected_slaves:2
    slave0:ip=192.168.88.3,port=6379,state=online,offset=28,lag=1
    slave1:ip=192.168.88.4,port=6379,state=online,offset=28,lag=1
    ...

Master slave deployment completed
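If you would rather check the replication status from a script than by eye, the `info` output is easy to parse because it is just `key:value` lines. The sketch below is a minimal example that works on the sample output shown above; the function names are made up for illustration, and in practice you would feed it the text returned by `redis-cli info replication`.

```python
def parse_replication_info(text: str) -> dict:
    """Parse the 'key:value' lines of Redis INFO output into a dict."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines, section headers such as '# Replication', and elisions.
        if not line or line.startswith("#") or ":" not in line:
            continue
        key, value = line.split(":", 1)
        info[key] = value
    return info


def replication_is_healthy(info: dict, expected_slaves: int) -> bool:
    """True if this node is a master with the expected number of online slaves."""
    if info.get("role") != "master":
        return False
    if int(info.get("connected_slaves", 0)) != expected_slaves:
        return False
    # Per-slave lines look like 'slave0:ip=...,state=online,...'.
    slave_lines = [v for k, v in info.items() if k.startswith("slave") and "state=" in v]
    return all("state=online" in v for v in slave_lines)


sample = """\
# Replication
role:master
connected_slaves:2
slave0:ip=192.168.88.3,port=6379,state=online,offset=28,lag=1
slave1:ip=192.168.88.4,port=6379,state=online,offset=28,lag=1
"""
print(replication_is_healthy(parse_replication_info(sample), expected_slaves=2))
```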

Sentinel

Sentinel mode introduction

Sentinel mode is a variation on master-slave mode that provides high availability for Redis. In real production, servers will inevitably run into emergencies: downtime, power failure, hardware damage and so on. Once these happen, the consequences are often immeasurable. Sentinel mode can help us avoid the disastrous consequences of these accidents to a certain extent. The core of sentinel mode is still master-slave replication. Compared with plain master-slave mode, however, it adds an election mechanism that elects a new master from the slave nodes when the master is down and cannot be written to. The election mechanism relies on sentinel processes started in the system.

Sentinel features:

- Monitoring: it monitors whether the master and slave servers are working normally.

- Notification: it can notify the system administrator or another program, through an API, that an instance in the cluster has a problem.

- Failover: when the master node has a problem, it elects one of the slave nodes and makes it the new master.

- Providing the master address: it can give clients the address of the current master node. After a failover, clients can find the current master address without any configuration change.

Sentinel itself can be clustered by deploying multiple sentinels. Sentinels automatically discover the other sentinels in the Redis deployment through publish/subscribe. When a sentinel finds another sentinel process, it adds it to a list that stores all the sentinels it has discovered.

Sentinels in the cluster do not fail over the same master node concurrently. Only one sentinel performs the failover at first; if it fails, the next one attempts it. The failover counts as successful once a slave node has been selected as the new master (rather than waiting until all slave nodes have been reconfigured to follow the new master). During this process, if the old master node is restarted, there will be no master node. In this case, you can only restart the cluster.

When a new master node is selected, sentinel rewrites the configuration of the slave chosen as the new master so that it takes over the role of the old master. After the rewrite, the configuration of the new master is broadcast to all slave nodes.

Sentinel rapid deployment

Image usage: https://hub.docker.com/r/bitnami/redis-sentinel

GitHub address: https://github.com/bitnami/bitnami-docker-redis-sentinel

Write the docker-compose YAML

Sentinel mode adds a sentinel service on top of the master-slave setup to monitor the state of the Redis master.

You can look up the parameters on GitHub, where the official documentation describes them in great detail.

    version: '3'

    services:
      # redis-master
      redis-master:
        user: root
        container_name: redis-master
        image: docker.io/bitnami/redis:6.2
        environment:
          - REDIS_REPLICATION_MODE=master
          - REDIS_PASSWORD=masterpassword123
        ports:
          - '6379:6379'
        volumes:
          - ./redis/r1:/bitnami
        networks:
          - redis-cluster-network

      # redis-slave1
      redis-slave1:
        user: root
        container_name: redis-slave1
        image: docker.io/bitnami/redis:6.2
        environment:
          - REDIS_REPLICATION_MODE=slave
          - REDIS_MASTER_HOST=redis-master
          - REDIS_PASSWORD=masterpassword123
          - REDIS_MASTER_PASSWORD=masterpassword123
        depends_on:
          - redis-master
        volumes:
          - ./redis/r2:/bitnami
        networks:
          - redis-cluster-network

      # redis-slave2
      redis-slave2:
        user: root
        container_name: redis-slave2
        image: docker.io/bitnami/redis:6.2
        environment:
          - REDIS_REPLICATION_MODE=slave
          - REDIS_MASTER_HOST=redis-master
          - REDIS_PASSWORD=masterpassword123
          - REDIS_MASTER_PASSWORD=masterpassword123
        depends_on:
          - redis-master
        volumes:
          - ./redis/r3:/bitnami
        networks:
          - redis-cluster-network

      # redis sentinel
      redis-sentinel:
        image: 'bitnami/redis-sentinel:6.2'
        environment:
          - REDIS_MASTER_HOST=redis-master
          - REDIS_MASTER_PASSWORD=masterpassword123
        depends_on:
          - redis-master
          - redis-slave1
          - redis-slave2
        networks:
          - redis-cluster-network

    networks:
      redis-cluster-network:
        driver: bridge
        ipam:
          config:
            - subnet: 192.168.88.0/24

Start & verify

    # By default, the sentinel image performs a master-slave switch only when two sentinels agree, so three sentinels are started here
    docker-compose up --scale redis-sentinel=3 -d
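The "two sentinels must agree" rule is what Sentinel calls the quorum. As a rough sketch of the idea, under the simplifying assumption that each sentinel just casts a yes/no vote: the master is treated as objectively down once the number of sentinels reporting it down reaches the quorum, and the failover itself additionally needs a majority of the sentinels to be reachable. The function names below are hypothetical, not part of any Redis API.

```python
def objectively_down(down_votes: int, quorum: int) -> bool:
    """The master is flagged as failing once enough sentinels agree it is down."""
    return down_votes >= quorum


def failover_authorized(reachable_sentinels: int, total_sentinels: int) -> bool:
    """Simplified rule: a failover needs a majority of all sentinels to take part."""
    return reachable_sentinels > total_sentinels // 2


total = 3   # sentinels started with --scale redis-sentinel=3
quorum = 2  # the default described above

for down_votes in (1, 2, 3):
    print(
        down_votes,
        "down votes ->",
        "failover" if objectively_down(down_votes, quorum)
        and failover_authorized(down_votes, total) else "no failover",
    )
```

This is why a single sentinel is not enough for the default configuration: with only one sentinel, a quorum of two can never be reached.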

To make starting and updating easier later on, write a script:

    #!/bin/bash

    main() {
      case $1 in
        start)
          # By default, the master-slave switch is performed only when two sentinels agree, so three sentinels are started here
          docker-compose up --scale redis-sentinel=3 -d
          ;;
        update)
          docker-compose up --scale redis-sentinel=3 -d
          ;;
        stop)
          docker-compose down
          ;;
        *)
          echo "start | stop | update"
          ;;
      esac
    }

    main "$1"

Add execute permission

    chmod +x start.sh

    # Test it
    ./start.sh update

The verification process is not shown here. It is very simple, so here is a brief description:

- Enter the redis-master container, check that it is the master, and check the number of slaves.

- Enter a sentinel service container, run redis-cli -p 26379 and execute info. You should see three sentinels reported: master0:name=mymaster,status=ok,address=192.168.88.2:6379,slaves=2,sentinels=3

- Stop the redis-master service and watch the monitoring and switching log output with docker-compose logs -f --tail=200

- After the switch, enter the container that was promoted and verify that it is now the Redis master. If it is, sentinel mode is working.

Cluster

Introduction to cluster mode

Redis Cluster is a deployment that shares data across multiple Redis nodes.

Redis Cluster does not support commands that operate on multiple keys stored on different nodes, because that would require moving data between nodes, which cannot match the performance of standalone Redis and may lead to unpredictable errors under high load.

Redis Cluster provides a certain degree of availability through partitioning: in a real environment, it can continue to process commands when a node is down or unreachable.

Advantages of Redis Cluster:

- Data is automatically split across different nodes.

- If some nodes in the cluster fail or are unreachable, the cluster can continue to process commands.

Redis Cluster data fragmentation

Redis Cluster does not use consistent hashing, but a different form of sharding in which every key is conceptually part of what we call a hash slot.

There are 16384 hash slots in a Redis cluster. To compute the hash slot of a given key, we simply take the CRC16 of the key modulo 16384.
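As a minimal sketch of that calculation: the Redis Cluster specification names the XMODEM variant of CRC16 (polynomial 0x1021, initial value 0), and the bitwise implementation below follows that description. The function names are my own, and hash tags (covered further down) are deliberately ignored here.

```python
HASH_SLOTS = 16384


def crc16_xmodem(data: bytes) -> int:
    """Bitwise CRC16, XMODEM variant: polynomial 0x1021, initial value 0."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_hash_slot(key: str) -> int:
    """Slot for a key without a hash tag: CRC16(key) mod 16384."""
    return crc16_xmodem(key.encode("utf-8")) % HASH_SLOTS


for key in ("foo", "hello", "user:1000"):
    print(key, "->", key_hash_slot(key))
```

You can compare the results with what a running cluster reports for `CLUSTER KEYSLOT <key>`.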

Each node in the Redis cluster is responsible for a subset of hash slots. For example, you may have a cluster with three nodes, including:

- Node A contains hash slots from 0 to 5500.

- Node B contains hash slots from 5501 to 11000.

- Node C contains hash slots from 11001 to 16383.

This makes it easy to add nodes to the cluster and remove them. For example, if I want to add a new node D, I need to move some hash slots from nodes A, B and C to D. Similarly, if I want to remove node A from the cluster, I can move the hash slots served by A to B and C. Once node A is empty, I can remove it from the cluster completely.

Since moving hash slots from one node to another does not require stopping operations, adding and removing nodes, or changing the percentage of hash slots held by a node, does not require any downtime.
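The slot layout and the "add node D" scenario can be modeled with a plain dictionary from slot number to owning node. This is only a toy model of slot ownership: real resharding also migrates the keys stored in each slot, and the number of slots moved per node (1365) is an arbitrary figure chosen for the example.

```python
from collections import Counter


def build_slot_table(ranges: dict) -> dict:
    """ranges maps a node name to an inclusive (first_slot, last_slot) pair."""
    table = {}
    for node, (first, last) in ranges.items():
        for slot in range(first, last + 1):
            table[slot] = node
    return table


def move_slots(table: dict, source: str, target: str, count: int) -> list:
    """Reassign up to `count` of the source node's lowest slots to the target node."""
    moved = [slot for slot, owner in sorted(table.items()) if owner == source][:count]
    for slot in moved:
        table[slot] = target
    return moved


# The three-node layout from the example above.
table = build_slot_table({"A": (0, 5500), "B": (5501, 11000), "C": (11001, 16383)})

# Add a new node D by taking some slots from each existing node.
for source in ("A", "B", "C"):
    move_slots(table, source, "D", 1365)

print(Counter(table.values()))
```

Every slot still has exactly one owner after the move, which is why clients can keep working while slots are being reassigned.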

Redis Cluster supports multi-key operations as long as all the keys involved in a single command execution (or a whole transaction, or a Lua script execution) belong to the same hash slot. Users can force multiple keys into the same hash slot by using a concept called hash tags.

Hash tags are documented in the Redis Cluster specification, but the important point is that if a key contains a substring between {} brackets, only the content inside the brackets is hashed. So, for example, this{foo}key and another{foo}key are guaranteed to be in the same hash slot and can be used together in a command that takes multiple keys as arguments.
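A small sketch of the hash tag rule, assuming the behavior described in the specification: only the text between the first `{` and the first `}` after it is hashed, and only if that text is not empty. The helper names are hypothetical. For brevity, the CRC16 is computed with `binascii.crc_hqx` from the Python standard library, which uses the same 0x1021 polynomial when started from 0.

```python
import binascii


def hashed_part(key: str) -> str:
    """Return the part of the key that is hashed, honoring hash tags."""
    start = key.find("{")
    if start == -1:
        return key  # no opening brace: hash the whole key
    end = key.find("}", start + 1)
    if end == -1 or end == start + 1:
        return key  # no closing brace, or empty tag '{}': hash the whole key
    return key[start + 1:end]


def key_hash_slot(key: str) -> int:
    """CRC16 of the hashed part of the key, modulo 16384."""
    return binascii.crc_hqx(hashed_part(key).encode("utf-8"), 0) % 16384


for key in ("this{foo}key", "another{foo}key", "foo", "foo{}{bar}"):
    print(key, "->", hashed_part(key), "->", key_hash_slot(key))
```

The first three keys land in the same slot because only `foo` is hashed, while `foo{}{bar}` is hashed as a whole because its first tag is empty.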

Redis Cluster master-slave mode

To remain available when a subset of master nodes fails or cannot communicate with the majority of nodes, Redis Cluster uses a master-slave model in which every hash slot has from 1 (the master itself) to N copies (N-1 additional slave nodes).

In our example cluster with nodes A, B and C, if node B fails, the cluster cannot continue because we no longer have a way to serve the hash slots in the range 5501-11000.

However, if we add a slave node to every master when the cluster is created (or later), so that the final cluster consists of master nodes A, B and C and slave nodes A1, B1 and C1, the system can continue to run if node B fails.

If node B1 replicates B and B fails, the cluster will promote node B1 to a new master and continue to operate normally.

However, it should be noted that if nodes B and B1 fail at the same time, Redis Cluster will not continue to operate.
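The A/B/C example can be expressed as a toy availability check: the cluster keeps working as long as every group of hash slots still has at least one surviving node. This sketch makes a simplifying assumption that the first surviving node in each group simply takes over as master; the real election between slaves is more involved, and the function name is made up for the example.

```python
def apply_failures(shards: dict, failed: set):
    """shards maps a shard name to its nodes, master first.

    Returns the surviving nodes per shard (the first one acts as master)
    and whether the cluster can still serve every hash slot.
    """
    survivors = {
        name: [node for node in nodes if node not in failed]
        for name, nodes in shards.items()
    }
    cluster_ok = all(survivors.values())  # every shard needs at least one node
    return survivors, cluster_ok


shards = {"A": ["A", "A1"], "B": ["B", "B1"], "C": ["C", "C1"]}

survivors, ok = apply_failures(shards, failed={"B"})
print(survivors["B"], ok)   # B1 takes over, cluster still available

survivors, ok = apply_failures(shards, failed={"B", "B1"})
print(survivors["B"], ok)   # nobody left to serve slots 5501-11000
```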

Redis cluster consistency assurance

Redis Cluster cannot guarantee strong consistency. In fact, this means that in some cases, Redis Cluster may lose the writes confirmed by the system to the client.

The first reason Redis Cluster loses writes is because it uses asynchronous replication. This means that the following occurs during writing:

- Your client writes to master B.

- Master B replies OK to your client.

- Master B propagates the write to its slaves B1, B2 and B3.

As you can see, B does not wait for an acknowledgment from B1, B2 and B3 before replying to the client, because that would be a prohibitive latency penalty for Redis. So if your client writes something, B acknowledges the write but crashes before it can send the write to its slaves, one of the slaves (which never received the write) can be promoted to master, and the write is lost forever.
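The lost-write scenario can be reproduced with a tiny in-memory model. This is a simulation written purely for illustration (the `Node` and `Master` classes are not Redis code): the master replies OK as soon as it has applied the write locally and only propagates it to its slaves in a later step.

```python
class Node:
    def __init__(self, name: str):
        self.name = name
        self.data = {}


class Master(Node):
    def __init__(self, name: str, slaves: list):
        super().__init__(name)
        self.slaves = slaves
        self.pending = []  # writes acknowledged but not yet sent to the slaves

    def write(self, key, value) -> str:
        self.data[key] = value
        self.pending.append((key, value))
        return "OK"  # the client gets its reply before any slave has the write

    def propagate(self) -> None:
        """Asynchronous replication step: push pending writes to every slave."""
        for key, value in self.pending:
            for slave in self.slaves:
                slave.data[key] = value
        self.pending.clear()


b1 = Node("B1")
b = Master("B", [b1])

print(b.write("k1", "v1"))  # acknowledged
b.propagate()               # replicated to B1
print(b.write("k2", "v2"))  # acknowledged ...
# ... but B crashes here, before propagate() runs, and B1 is promoted.
print(b1.data)              # the new master never saw k2
```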

This is very similar to what happens with most databases that are configured to flush data to disk every second, so it is a scenario you can already reason about from past experience with traditional database systems that do not involve distributed systems. Similarly, you can improve consistency by forcing the database to flush data to disk before replying to the client, but this usually results in prohibitively low performance. In the case of Redis Cluster, that would be the equivalent of synchronous replication.

Basically, there is a trade-off between performance and consistency.

Redis Cluster supports synchronous writes when absolutely needed, implemented via the WAIT command. This makes losing writes much less likely. Note, however, that Redis Cluster does not achieve strong consistency even when synchronous replication is used: under more complex failure scenarios, it is always possible that a slave that did not receive the write is elected as master.

There is another notable scenario in which Redis Cluster loses writes. It happens during a network partition, when a client is isolated together with a minority of instances that includes at least one master.

Take our six-node cluster as an example. It is composed of A, B, C, A1, B1 and C1, with three masters and three slaves. There is also a client, which we call Z1.

After a partition occurs, A, C, A1, B1 and C1 may be on one side of the partition and B and Z1 on the other.

Z1 can still write to B, and B will accept its writes. If the partition heals in a short time, the cluster continues to operate normally. However, if the partition lasts long enough for B1 to be promoted to master on the majority side of the partition, the writes that Z1 sent to B in the meantime are lost.

Note that there is a maximum window for the amount of writes that Z1 can send to B: once enough time has passed for the majority side of the partition to elect a slave as master, every master node on the minority side will have stopped accepting writes.

This amount of time is a very important configuration directive of Redis Cluster, called the node timeout.

After the node timeout has elapsed, a master node is considered to have failed and can be replaced by one of its replicas. Similarly, if a master node has been unable to reach the majority of the other master nodes for the node timeout, it enters an error state and stops accepting writes.
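The effect of the node timeout on a master stuck on the minority side can be sketched as a simple rule. This is a simplification for illustration only: the function is hypothetical, and the 15000 ms used below is just an example value for the timeout, so check your own cluster configuration.

```python
def master_state(reachable_masters: int, total_masters: int,
                 ms_without_majority: int, node_timeout_ms: int) -> str:
    """Decide whether a master still accepts writes.

    reachable_masters counts the masters this node can see, itself included.
    ms_without_majority is how long it has been cut off from the majority.
    """
    has_majority = reachable_masters > total_masters // 2
    if has_majority or ms_without_majority < node_timeout_ms:
        return "accepting writes"
    return "error state: writes rejected"


NODE_TIMEOUT_MS = 15000  # example value, an assumption for this sketch

# Master B is isolated with client Z1: it only sees itself out of 3 masters.
print(master_state(1, 3, ms_without_majority=5000, node_timeout_ms=NODE_TIMEOUT_MS))
print(master_state(1, 3, ms_without_majority=20000, node_timeout_ms=NODE_TIMEOUT_MS))

# Master A on the majority side sees A and C.
print(master_state(2, 3, ms_without_majority=0, node_timeout_ms=NODE_TIMEOUT_MS))
```

The writes at risk are the ones B accepts during the first window, before the timeout expires.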

Refer to the official document for the introduction and deployment of cluster mode: https://redis.io/topics/cluster-tutorial

Cluster rapid deployment

Image usage: https://hub.docker.com/r/bitnami/redis-cluster

GitHub address: https://github.com/bitnami/bitnami-docker-redis-cluster

Write the docker-compose YAML

Cluster mode solves the problem of data sharing through data sharding, and provides data replication and failover at the same time.

You can look up the parameters on GitHub, where the official documentation describes them in great detail.

    version: '3'

    services:
      redis-node-0:
        user: root
        container_name: redis-node-0
        image: docker.io/bitnami/redis-cluster:6.2
        ports:
          - 16379:6379
        volumes:
          - ./redis_data-0:/bitnami/redis/data
        environment:
          - 'REDIS_PASSWORD=bitnami'
          - 'REDIS_NODES=redis-node-0 redis-node-1 redis-node-2 redis-node-3 redis-node-4 redis-node-5'
        networks:
          redis-cluster-network:
            ipv4_address: 192.168.88.2

      redis-node-1:
        user: root
        container_name: redis-node-1
        image: docker.io/bitnami/redis-cluster:6.2
        ports:
          - 16380:6379
        volumes:
          - ./redis_data-1:/bitnami/redis/data
        environment:
          - 'REDIS_PASSWORD=bitnami'
          - 'REDIS_NODES=redis-node-0 redis-node-1 redis-node-2 redis-node-3 redis-node-4 redis-node-5'
        networks:
          redis-cluster-network:
            ipv4_address: 192.168.88.3

      redis-node-2:
        user: root
        container_name: redis-node-2
        image: docker.io/bitnami/redis-cluster:6.2
        ports:
          - 16381:6379
        volumes:
          - ./redis_data-2:/bitnami/redis/data
        environment:
          - 'REDIS_PASSWORD=bitnami'
          - 'REDIS_NODES=redis-node-0 redis-node-1 redis-node-2 redis-node-3 redis-node-4 redis-node-5'
        networks:
          redis-cluster-network:
            ipv4_address: 192.168.88.4

      redis-node-3:
        user: root
        container_name: redis-node-3
        image: docker.io/bitnami/redis-cluster:6.2
        ports:
          - 16382:6379
        volumes:
          - ./redis_data-3:/bitnami/redis/data
        environment:
          - 'REDIS_PASSWORD=bitnami'
          - 'REDIS_NODES=redis-node-0 redis-node-1 redis-node-2 redis-node-3 redis-node-4 redis-node-5'
        networks:
          redis-cluster-network:
            ipv4_address: 192.168.88.5

      redis-node-4:
        user: root
        container_name: redis-node-4
        image: docker.io/bitnami/redis-cluster:6.2
        ports:
          - 16383:6379
        volumes:
          - ./redis_data-4:/bitnami/redis/data
        environment:
          - 'REDIS_PASSWORD=bitnami'
          - 'REDIS_NODES=redis-node-0 redis-node-1 redis-node-2 redis-node-3 redis-node-4 redis-node-5'
        networks:
          redis-cluster-network:
            ipv4_address: 192.168.88.6

      redis-node-5:
        user: root
        container_name: redis-node-5
        image: docker.io/bitnami/redis-cluster:6.2
        ports:
          - 16384:6379
        volumes:
          - ./redis_data-5:/bitnami/redis/data
        depends_on:
          - redis-node-0
          - redis-node-1
          - redis-node-2
          - redis-node-3
          - redis-node-4
        environment:
          - 'REDIS_PASSWORD=bitnami'
          - 'REDISCLI_AUTH=bitnami'
          - 'REDIS_CLUSTER_REPLICAS=1'
          - 'REDIS_NODES=redis-node-0 redis-node-1 redis-node-2 redis-node-3 redis-node-4 redis-node-5'
          - 'REDIS_CLUSTER_CREATOR=yes'
        networks:
          redis-cluster-network:
            ipv4_address: 192.168.88.7

    networks:
      redis-cluster-network:
        driver: bridge
        ipam:
          config:
            - subnet: 192.168.88.0/24

Start & verify

    # Start up
    docker-compose up -d

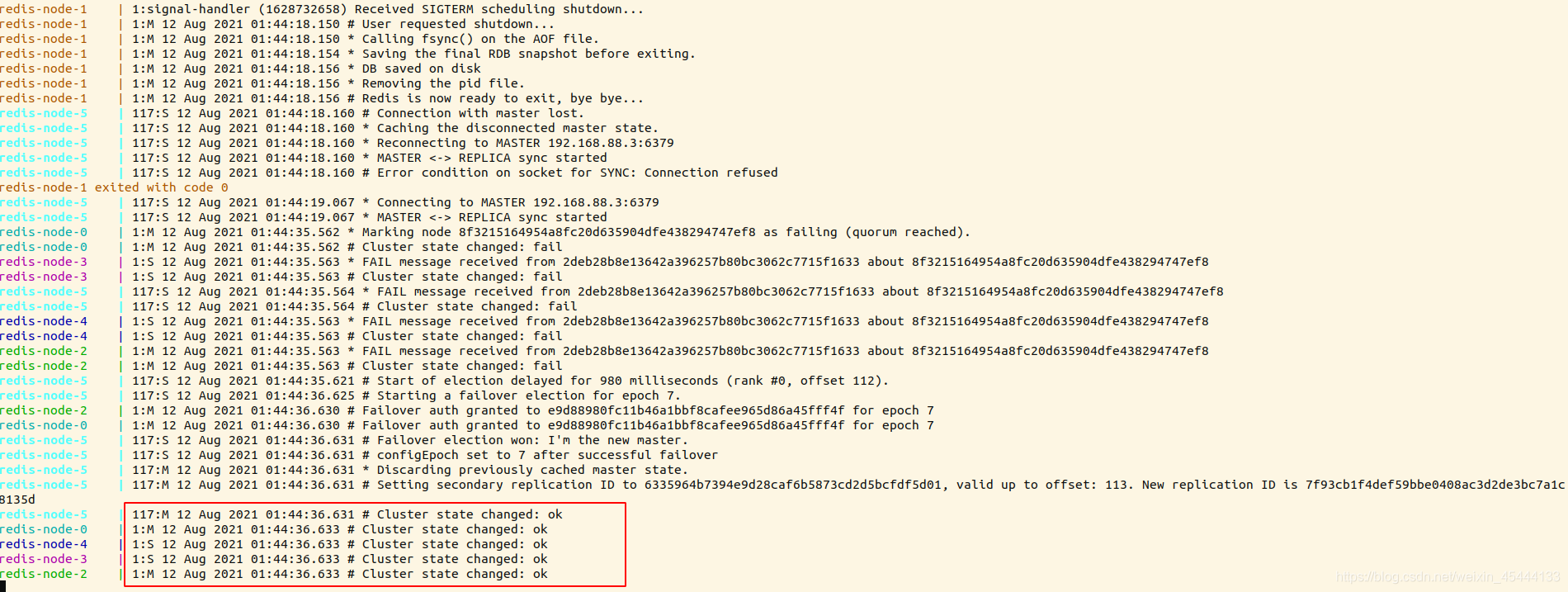

The full process is not shown here; only some key screenshots are posted.

Reference for common cluster commands: https://www.cnblogs.com/kevingrace/p/7910692.html

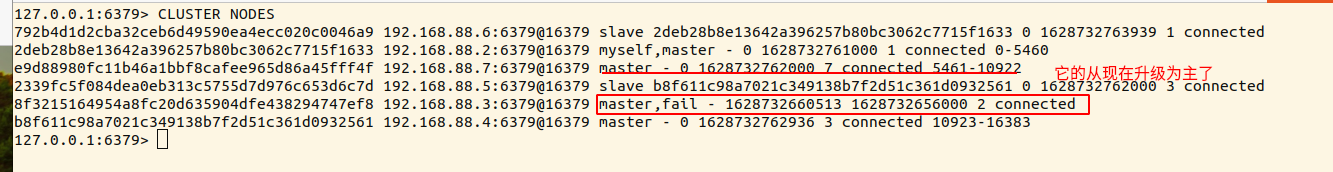

You can see that the cluster has elected a new master, and checking from the other nodes confirms that cluster communication is intact.

Running cluster nodes inside a container shows that the cluster has promoted the slave of the previous master to be the new master.

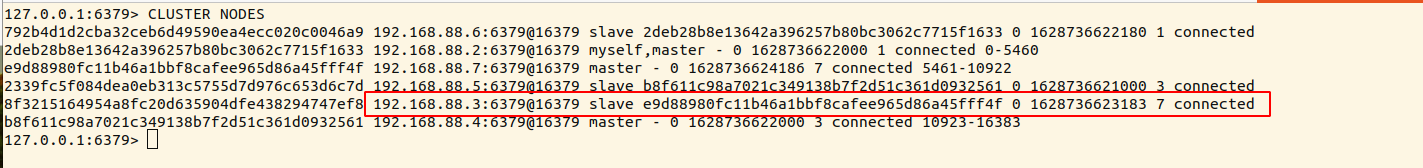

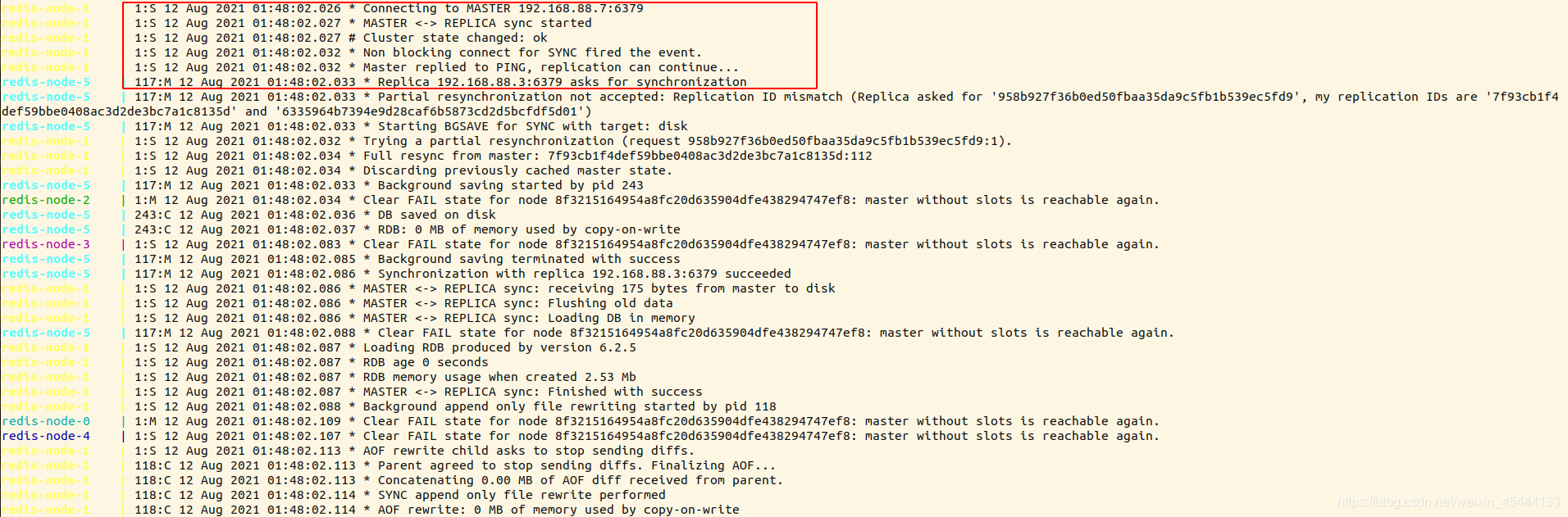

Now restore the master server that was stopped earlier. When you check again, you can see that it does not take back the master role directly, but becomes a slave of the new master and sends the master a request for data synchronization.

With that, all three Redis cluster modes have been deployed.

This is a record of deployment techniques used at work.