Traditional blocking I/O (BIO) is inefficient because a thread has to wait every time data is transmitted. As the JDK has evolved, non-blocking I/O (NIO) has become increasingly popular.

What is Reactor

Reactor refers to the Reactor pattern. It resembles the Observer pattern, in which all dependent entities are notified when a subject changes. The difference is that the Observer pattern is associated with a single event source, while the Reactor pattern is associated with multiple event sources.

NIO's central class is Selector, which plays a role similar to an observer. We tell the Selector which SocketChannels we want to know about and then go on to do something else. When an event occurs, the Selector notifies us by returning a set of SelectionKeys. From these keys we obtain the SocketChannels we registered earlier, read from them, and then process the data.
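The register, select, and read cycle described above can be tried without a network connection. The following is a minimal sketch, assuming a `java.nio.channels.Pipe` as a stand-in for a SocketChannel; the class name `SelectorPipeDemo` and the method `roundTrip` are hypothetical and not part of the original article.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.charset.StandardCharsets;
import java.util.Iterator;

// Hypothetical demo class: a Pipe stands in for a SocketChannel.
final class SelectorPipeDemo {

    /** Writes a message into a pipe and reads it back once the Selector reports it. */
    static String roundTrip(String message) {
        try (Selector selector = Selector.open()) {
            Pipe pipe = Pipe.open();
            try (Pipe.SinkChannel sink = pipe.sink();
                 Pipe.SourceChannel source = pipe.source()) {
                // Only non-blocking channels can be registered with a Selector.
                source.configureBlocking(false);
                source.register(selector, SelectionKey.OP_READ);

                // Produce an event: bytes written to the sink become readable on the source.
                sink.write(ByteBuffer.wrap(message.getBytes(StandardCharsets.UTF_8)));

                StringBuilder received = new StringBuilder();
                // Wait up to one second for a registered channel to become ready.
                if (selector.select(1000) > 0) {
                    Iterator<SelectionKey> it = selector.selectedKeys().iterator();
                    while (it.hasNext()) {
                        SelectionKey key = it.next();
                        it.remove(); // the selected-key set is not cleared automatically
                        if (key.isReadable()) {
                            ByteBuffer buffer = ByteBuffer.allocate(1024);
                            ((Pipe.SourceChannel) key.channel()).read(buffer);
                            buffer.flip();
                            received.append(StandardCharsets.UTF_8.decode(buffer));
                        }
                    }
                }
                return received.toString();
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(roundTrip("hello reactor"));
    }
}
```

The same steps apply to a SocketChannel: switch it to non-blocking mode, register it with the Selector, and read only after the Selector reports the key as readable.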

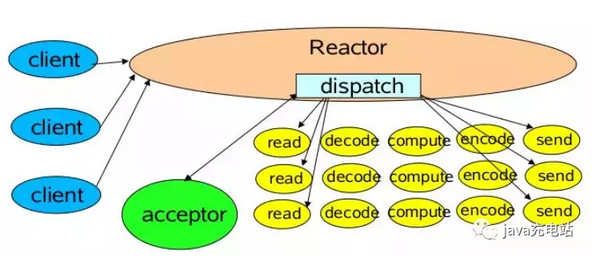

Single-threaded Reactor mode

The structure diagram of the single-threaded Reactor pattern:

When multiple requests are sent to the server, they are all handled by one reactor thread. The corresponding code is as follows:

    import lombok.extern.slf4j.Slf4j;

    import java.io.IOException;
    import java.net.InetSocketAddress;
    import java.nio.ByteBuffer;
    import java.nio.channels.SelectionKey;
    import java.nio.channels.Selector;
    import java.nio.channels.ServerSocketChannel;
    import java.nio.channels.SocketChannel;
    import java.util.Iterator;
    import java.util.Set;

    /**
     * @author idea
     * @date 2019/4/11
     */
    @Slf4j
    public class NioServer {

        public static void main(String[] args) {
            try {
                Selector selector = Selector.open();
                ServerSocketChannel serverSocketChannel = ServerSocketChannel.open();
                serverSocketChannel.configureBlocking(false);
                serverSocketChannel.bind(new InetSocketAddress(9090));
                serverSocketChannel.register(selector, SelectionKey.OP_ACCEPT);
                System.out.println("server is open!");
                while (true) {
                    // Block until at least one registered channel is ready
                    if (selector.select() > 0) {
                        Set<SelectionKey> keys = selector.selectedKeys();
                        Iterator<SelectionKey> iterator = keys.iterator();
                        while (iterator.hasNext()) {
                            SelectionKey selectionKey = iterator.next();
                            if (selectionKey.isReadable()) {
                                SocketChannel socketChannel = (SocketChannel) selectionKey.channel();
                                ByteBuffer byteBuffer = ByteBuffer.allocate(1024);
                                int len;
                                // Keep reading while the channel still has data available
                                while ((len = socketChannel.read(byteBuffer)) > 0) {
                                    byteBuffer.flip();
                                    System.out.println(new String(byteBuffer.array(), 0, len));
                                    byteBuffer.clear();
                                }
                                // read() returns -1 once the client has closed the connection;
                                // closing the channel also cancels its key
                                if (len < 0) {
                                    socketChannel.close();
                                }
                            } else if (selectionKey.isAcceptable()) {
                                // A client is connecting for the first time, so a channel must be set up
                                ServerSocketChannel acceptServerSocketChannel = (ServerSocketChannel) selectionKey.channel();
                                // Accept the connection
                                SocketChannel socketChannel = acceptServerSocketChannel.accept();
                                // Set to non-blocking
                                socketChannel.configureBlocking(false);
                                // Register interest in read events
                                socketChannel.register(selector, SelectionKey.OP_READ);
                                System.out.println("[server] Accepted a new connection");
                            }
                            // The selected-key set is not cleared automatically
                            iterator.remove();
                        }
                    }
                }
            } catch (IOException e) {
                log.error("[server] An exception occurred", e);
            }
        }
    }

One obvious disadvantage of the single-threaded Reactor is that request monitoring and the handling of channels in every state all run on a single thread. (Multiple Channels can be registered with the same Selector object, which lets one thread monitor the state of many requests at the same time.) A slow handler therefore delays every other connection, which makes this mode inefficient. This leads to the second Reactor pattern.
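To illustrate the remark that several Channels can share one Selector, here is a small sketch in which a single thread watches several channels and handles them one after another. It assumes `java.nio.channels.Pipe` channels in place of sockets; the class `OneSelectorManyChannels` and its `drain` method are hypothetical names, and the messages are assumed to be non-empty.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Collections;
import java.util.Iterator;
import java.util.List;

// Hypothetical demo class: several pipes share one Selector and one thread.
final class OneSelectorManyChannels {

    /** Writes one (non-empty) message per pipe, then reads them all on the calling thread. */
    static List<String> drain(String... messages) {
        List<Pipe> pipes = new ArrayList<>();
        List<String> received = new ArrayList<>();
        try (Selector selector = Selector.open()) {
            for (String message : messages) {
                Pipe pipe = Pipe.open();
                pipes.add(pipe);
                pipe.source().configureBlocking(false);
                pipe.source().register(selector, SelectionKey.OP_READ);
                pipe.sink().write(ByteBuffer.wrap(message.getBytes(StandardCharsets.UTF_8)));
            }
            // The same thread waits for events and handles each ready channel in turn,
            // so a slow handler here would hold up all the other channels.
            for (int round = 0; received.size() < messages.length && round < 10; round++) {
                selector.select(1000);
                Iterator<SelectionKey> it = selector.selectedKeys().iterator();
                while (it.hasNext()) {
                    SelectionKey key = it.next();
                    it.remove();
                    ByteBuffer buffer = ByteBuffer.allocate(1024);
                    ((Pipe.SourceChannel) key.channel()).read(buffer);
                    buffer.flip();
                    received.add(StandardCharsets.UTF_8.decode(buffer).toString());
                }
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } finally {
            for (Pipe pipe : pipes) {
                try {
                    pipe.sink().close();
                    pipe.source().close();
                } catch (IOException ignored) {
                    // best-effort cleanup in a demo
                }
            }
        }
        // The order in which ready keys are reported is unspecified, so sort for a stable result.
        Collections.sort(received);
        return received;
    }
}
```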

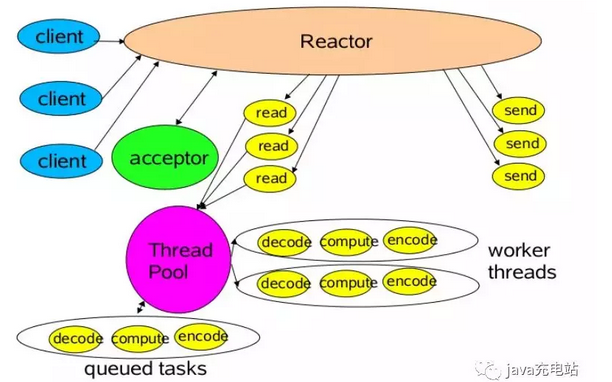

Multi-threaded Reactor mode

In the single-threaded mode, one thread serves multiple requests, but all reads, writes, and data processing happen on that same thread, so it cannot take advantage of multiple CPU cores. The multi-threaded Reactor mode was introduced to address this.

The basic structure of the multi-threaded Reactor pattern is as follows:

The code is as follows:

    import lombok.extern.slf4j.Slf4j;

    import java.io.IOException;
    import java.net.InetSocketAddress;
    import java.nio.channels.SelectionKey;
    import java.nio.channels.Selector;
    import java.nio.channels.ServerSocketChannel;
    import java.nio.channels.SocketChannel;
    import java.util.Iterator;
    import java.util.Set;

    /**
     * @author idea
     * @date 2019/4/11
     */
    @Slf4j
    public class Server {

        public static void main(String[] args) {
            try {
                Selector selector = Selector.open();
                ServerSocketChannel serverSocketChannel = ServerSocketChannel.open();
                serverSocketChannel.configureBlocking(false);
                serverSocketChannel.bind(new InetSocketAddress(9090));
                serverSocketChannel.register(selector, SelectionKey.OP_ACCEPT);
                System.out.println("[server] Server started");
                while (true) {
                    // Block until a channel is ready instead of spinning on selectNow()
                    if (selector.select() == 0) {
                        continue;
                    }
                    Set<SelectionKey> keys = selector.selectedKeys();
                    Iterator<SelectionKey> iterator = keys.iterator();
                    while (iterator.hasNext()) {
                        SelectionKey selectionKey = iterator.next();
                        if (selectionKey.isReadable()) {
                            // Retrieve the Processor attached at registration time
                            Processor processor = (Processor) selectionKey.attachment();
                            processor.process(selectionKey);
                        } else if (selectionKey.isAcceptable()) {
                            ServerSocketChannel acceptServerSocketChannel = (ServerSocketChannel) selectionKey.channel();
                            SocketChannel socketChannel = acceptServerSocketChannel.accept();
                            socketChannel.configureBlocking(false);
                            SelectionKey key = socketChannel.register(selector, SelectionKey.OP_READ);
                            // Bind a Processor to this connection
                            key.attach(new Processor());
                            System.out.println("[server] Accepted a new connection");
                        }
                        iterator.remove();
                    }
                }
            } catch (IOException e) {
                log.error("[server] An exception occurred", e);
            }
        }
    }

As the code shows, when a channel is registered for the OP_READ event, an object can be attached to the resulting SelectionKey (here a Processor object, which handles the read request). That object can be retrieved from the key when the read event fires.
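The attach-and-retrieve mechanism can be sketched in isolation. The example below assumes a `java.nio.channels.Pipe` instead of a socket and a hypothetical `Handler` interface in place of the article's Processor. It uses the three-argument `register(selector, ops, attachment)` overload, which attaches the object at registration time.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.charset.StandardCharsets;
import java.util.Locale;

// Hypothetical demo class showing SelectionKey attachments.
final class AttachmentDemo {

    /** Hypothetical handler type standing in for the article's Processor. */
    interface Handler {
        String handle(String input);
    }

    /** Sends a message through a pipe and lets the attached handler process it. */
    static String dispatch(String message) {
        try (Selector selector = Selector.open()) {
            Pipe pipe = Pipe.open();
            try (Pipe.SinkChannel sink = pipe.sink();
                 Pipe.SourceChannel source = pipe.source()) {
                source.configureBlocking(false);
                Handler upperCase = s -> s.toUpperCase(Locale.ROOT);
                // The third argument is stored on the SelectionKey as its attachment.
                source.register(selector, SelectionKey.OP_READ, upperCase);

                sink.write(ByteBuffer.wrap(message.getBytes(StandardCharsets.UTF_8)));
                if (selector.select(1000) == 0) {
                    return null; // nothing became readable within the timeout
                }
                SelectionKey key = selector.selectedKeys().iterator().next();
                // Retrieve the object that was attached at registration time.
                Handler handler = (Handler) key.attachment();

                ByteBuffer buffer = ByteBuffer.allocate(1024);
                ((Pipe.SourceChannel) key.channel()).read(buffer);
                buffer.flip();
                return handler.handle(StandardCharsets.UTF_8.decode(buffer).toString());
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

Keeping per-connection state on the key means the event loop does not need a separate map from channels to handlers.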

The corresponding Processor code is as follows:

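    import java.nio.ByteBuffer;
    import java.nio.channels.SelectionKey;
    import java.nio.channels.SocketChannel;
    import java.util.concurrent.ExecutorService;
    import java.util.concurrent.LinkedBlockingQueue;
    import java.util.concurrent.ThreadPoolExecutor;
    import java.util.concurrent.TimeUnit;

    /**
     * processor
     *
     * @author idea
     * @date 2019/4/11
     */
    public class Processor {

        private static final ExecutorService service = new ThreadPoolExecutor(16, 16,
                0L, TimeUnit.MILLISECONDS,
                new LinkedBlockingQueue<Runnable>());

        public void process(SelectionKey selectionKey) {
            // Stop watching OP_READ while a worker handles this channel; otherwise the
            // selector keeps reporting the same unread data and duplicate tasks are submitted
            selectionKey.interestOps(selectionKey.interestOps() & ~SelectionKey.OP_READ);
            service.submit(() -> {
                ByteBuffer buffer = ByteBuffer.allocate(1024);
                SocketChannel socketChannel = (SocketChannel) selectionKey.channel();
                int count = socketChannel.read(buffer);
                if (count < 0) {
                    // The client has closed the connection
                    socketChannel.close();
                    selectionKey.cancel();
                    System.out.println("Read the end!");
                    return null;
                }
                if (count > 0) {
                    // Decode only the bytes that were actually read
                    System.out.println("Read content:" + new String(buffer.array(), 0, count));
                }
                // Watch for the next read event again and wake the selector so it notices
                selectionKey.interestOps(selectionKey.interestOps() | SelectionKey.OP_READ);
                selectionKey.selector().wakeup();
                return null;
            });
        }
    }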

Data processing tasks are submitted to a thread pool. This spreads the processing load across the pool's threads and takes advantage of multiple cores. Accepting new connections and performing I/O on the data now run on different threads.
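The hand-off to a worker pool can be sketched without any sockets. The example below is only an illustration under stated assumptions: the buffers are filled by hand rather than read from a channel, the pool size of 4 is arbitrary, and `WorkerHandoffDemo`, `decode`, and `processAll` are hypothetical names. It also shows that a worker should decode only the bytes actually written into the buffer, not the whole backing array.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical demo class: the calling thread hands decoding work to a pool.
final class WorkerHandoffDemo {

    /** Decodes only the bytes that were written into the buffer. */
    static String decode(ByteBuffer buffer) {
        buffer.flip(); // limit = number of bytes written, position = 0
        return StandardCharsets.UTF_8.decode(buffer).toString();
    }

    /** Submits one decode task per buffer and collects the results in submission order. */
    static List<String> processAll(List<ByteBuffer> buffers) {
        ExecutorService workers = Executors.newFixedThreadPool(4); // arbitrary size for the sketch
        try {
            List<Future<String>> futures = new ArrayList<>();
            for (ByteBuffer buffer : buffers) {
                // The submitting thread returns immediately; a pool thread does the work.
                futures.add(workers.submit(() -> decode(buffer)));
            }
            List<String> results = new ArrayList<>();
            for (Future<String> future : futures) {
                results.add(future.get());
            }
            return results;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            workers.shutdown();
        }
    }
}
```

In a real server the reactor thread would not wait on the futures; waiting is done here only so that the sketch can return its results.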

In the multi-threaded Reactor mode described above, a dedicated NIO acceptor thread listens on the server port and accepts TCP connections from clients, while a dedicated thread pool handles reading, sending, encoding, and decoding messages. One NIO thread serves N connections at the same time, but each connection is bound to exactly one NIO thread, which prevents concurrent operations on the same connection. This arrangement works well and covers most application scenarios.

In extreme cases, however, it still has drawbacks. A single NIO thread is responsible for listening for and accepting all client connections, which can become a performance bottleneck. Examples include millions of concurrent client connections, or a server that must authenticate each client during the handshake when authentication itself is expensive. In such scenarios a single acceptor thread may not keep up. To solve this, a third Reactor threading model was proposed: the master-slave Reactor multithreading model.

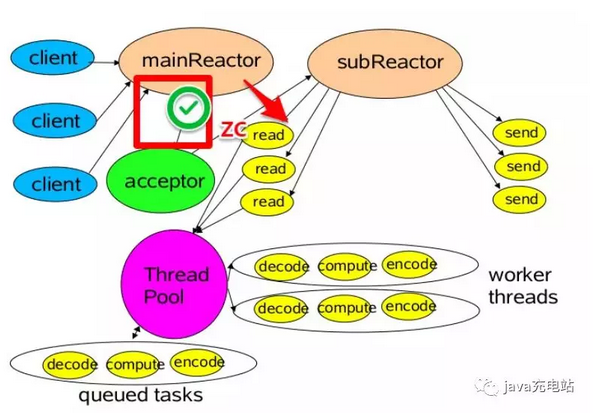

Master-slave Reactor multithreading mode

In the master-slave Reactor threading model, the server no longer uses a single NIO thread to accept client connections but an independent NIO thread pool. After the acceptor has received and processed a client's TCP connection request (including access authentication and so on), it registers the newly created SocketChannel with an I/O thread in the I/O thread pool, and that thread handles reading, writing, encoding, and decoding for the channel. The acceptor thread pool is used only for client login, handshake, and security authentication. Once a connection is established, it is registered with an I/O thread in the back-end sub-reactor thread pool, and that I/O thread handles all subsequent I/O operations.

The corresponding code:
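One common way to hand a newly accepted channel to an I/O thread is to queue it and wake the I/O thread's Selector, so that registration happens on the thread that owns the Selector. The sketch below illustrates that idea only; it is not taken from the article. `SubReactor`, `SubReactorDemo`, and `demo` are hypothetical names, and a `java.nio.channels.Pipe` again stands in for a SocketChannel.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.ReadableByteChannel;
import java.nio.channels.SelectableChannel;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.charset.StandardCharsets;
import java.util.Iterator;
import java.util.Queue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

// Hypothetical sub-reactor: owns one Selector and runs on one thread.
final class SubReactor implements Runnable {
    private final Selector selector;
    private final Queue<SelectableChannel> pending = new ConcurrentLinkedQueue<>();
    final BlockingQueue<String> received = new LinkedBlockingQueue<>();
    private volatile boolean running = true;

    SubReactor() throws IOException {
        selector = Selector.open();
    }

    /** Called from the acceptor thread: queue the channel, then wake the selector. */
    void addChannel(SelectableChannel channel) {
        pending.add(channel);
        selector.wakeup(); // a blocked (or the next) select() returns immediately
    }

    void stop() {
        running = false;
        selector.wakeup();
    }

    @Override
    public void run() {
        try {
            while (running) {
                selector.select();
                // Register queued channels on the thread that owns the selector.
                SelectableChannel channel;
                while ((channel = pending.poll()) != null) {
                    channel.configureBlocking(false);
                    channel.register(selector, SelectionKey.OP_READ);
                }
                Iterator<SelectionKey> it = selector.selectedKeys().iterator();
                while (it.hasNext()) {
                    SelectionKey key = it.next();
                    it.remove();
                    if (!key.isReadable()) {
                        continue;
                    }
                    ByteBuffer buffer = ByteBuffer.allocate(1024);
                    int count = ((ReadableByteChannel) key.channel()).read(buffer);
                    if (count < 0) {
                        key.channel().close(); // closing the channel also cancels the key
                        continue;
                    }
                    buffer.flip();
                    received.add(StandardCharsets.UTF_8.decode(buffer).toString());
                }
            }
            selector.close();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}

final class SubReactorDemo {
    /** Hands a pipe to a sub-reactor thread and returns what that thread read. */
    static String demo(String message) {
        try {
            SubReactor reactor = new SubReactor();
            Thread thread = new Thread(reactor, "sub-reactor");
            thread.start();
            Pipe pipe = Pipe.open();
            reactor.addChannel(pipe.source());
            pipe.sink().write(ByteBuffer.wrap(message.getBytes(StandardCharsets.UTF_8)));
            String result = reactor.received.poll(2, TimeUnit.SECONDS);
            reactor.stop();
            thread.join(2000);
            pipe.sink().close();
            pipe.source().close();
            return result;
        } catch (IOException | InterruptedException e) {
            throw new IllegalStateException(e);
        }
    }
}
```

Registering on the owning thread avoids calling `register` from another thread while a `select()` is in progress, which can block on older JDKs.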

    import java.io.IOException;
    import java.net.InetSocketAddress;
    import java.nio.channels.SelectionKey;
    import java.nio.channels.Selector;
    import java.nio.channels.ServerSocketChannel;
    import java.nio.channels.SocketChannel;
    import java.util.Iterator;
    import java.util.Set;

    /**
     * @author idea
     * @date 2019/4/11
     */
    public class Server {

        public static void main(String[] args) throws IOException {
            Selector selector = Selector.open();
            ServerSocketChannel serverSocketChannel = ServerSocketChannel.open();
            serverSocketChannel.configureBlocking(false);
            serverSocketChannel.bind(new InetSocketAddress(1234));
            // Register the server channel for accept events
            serverSocketChannel.register(selector, SelectionKey.OP_ACCEPT);
            int coreNum = Runtime.getRuntime().availableProcessors();
            // One sub-reactor (Processor) per CPU core
            Processor[] processors = new Processor[coreNum];
            for (int i = 0; i < processors.length; i++) {
                processors[i] = new Processor();
            }
            int index = 0;
            while (true) {
                // Block until a new connection is ready to be accepted
                if (selector.select() == 0) {
                    continue;
                }
                // Get the set of selected keys
                Set<SelectionKey> keys = selector.selectedKeys();
                Iterator<SelectionKey> iterator = keys.iterator();
                while (iterator.hasNext()) {
                    SelectionKey key = iterator.next();
                    if (key.isAcceptable()) {
                        ServerSocketChannel acceptServerSocketChannel = (ServerSocketChannel) key.channel();
                        SocketChannel socketChannel = acceptServerSocketChannel.accept();
                        socketChannel.configureBlocking(false);
                        System.out.println("Accept request from " + socketChannel.getRemoteAddress());
                        // Assign connections to the processors in round-robin order;
                        // the modulo keeps the index inside the array bounds
                        Processor processor = processors[index];
                        index = (index + 1) % coreNum;
                        processor.addChannel(socketChannel);
                    }
                    iterator.remove();
                }
            }
        }
    }
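Choosing a processor for each new connection amounts to round-robin selection over a fixed-size array. A minimal sketch of that selection, assuming a hypothetical helper class `RoundRobin` that is not part of the original code:

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical helper: hands out indexes 0, 1, ..., size - 1, 0, 1, ... in turn.
final class RoundRobin {
    private final AtomicInteger counter = new AtomicInteger();
    private final int size;

    RoundRobin(int size) {
        if (size <= 0) {
            throw new IllegalArgumentException("size must be positive");
        }
        this.size = size;
    }

    /** Returns the next index; floorMod keeps it non-negative even after int overflow. */
    int nextIndex() {
        return Math.floorMod(counter.getAndIncrement(), size);
    }
}
```

With three processors, successive calls to `nextIndex()` return 0, 1, 2, 0, 1, 2, and so on, so connections are spread evenly and the index never leaves the array bounds.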

Processor part code:

    import lombok.extern.slf4j.Slf4j;

    import java.io.IOException;
    import java.nio.ByteBuffer;
    import java.nio.channels.SelectionKey;
    import java.nio.channels.Selector;
    import java.nio.channels.SocketChannel;
    import java.nio.channels.spi.SelectorProvider;
    import java.util.Iterator;
    import java.util.Queue;
    import java.util.Set;
    import java.util.concurrent.ConcurrentLinkedQueue;
    import java.util.concurrent.ExecutorService;
    import java.util.concurrent.Executors;

    @Slf4j
    public class Processor {

        private static final ExecutorService service =
                Executors.newFixedThreadPool(2 * Runtime.getRuntime().availableProcessors());

        private final Selector selector;

        // Channels handed over by the acceptor thread that still need to be registered
        private final Queue<SocketChannel> pendingChannels = new ConcurrentLinkedQueue<>();

        public Processor() throws IOException {
            this.selector = SelectorProvider.provider().openSelector();
            start();
        }

        public void addChannel(SocketChannel socketChannel) {
            // Queue the channel and wake the selector; registration happens on the processor's own thread
            pendingChannels.add(socketChannel);
            selector.wakeup();
        }

        public void start() {
            service.submit(() -> {
                try {
                    while (true) {
                        // Block until a channel is readable or addChannel() wakes us up,
                        // instead of spinning on selectNow()
                        selector.select();
                        SocketChannel pending;
                        while ((pending = pendingChannels.poll()) != null) {
                            pending.register(selector, SelectionKey.OP_READ);
                        }
                        Set<SelectionKey> keys = selector.selectedKeys();
                        Iterator<SelectionKey> iterator = keys.iterator();
                        while (iterator.hasNext()) {
                            SelectionKey key = iterator.next();
                            iterator.remove();
                            if (key.isReadable()) {
                                ByteBuffer buffer = ByteBuffer.allocate(1024);
                                SocketChannel socketChannel = (SocketChannel) key.channel();
                                int count = socketChannel.read(buffer);
                                if (count < 0) {
                                    // The client has closed the connection
                                    socketChannel.close();
                                    key.cancel();
                                    System.out.println("End of reading " + socketChannel);
                                } else if (count == 0) {
                                    System.out.println("No data read from " + socketChannel);
                                } else {
                                    // Decode only the bytes that were actually read
                                    System.out.println("Client information:" + new String(buffer.array(), 0, count));
                                }
                            }
                        }
                    }
                } catch (IOException e) {
                    log.error("[processor] An exception occurred", e);
                }
            });
        }
    }

Internet companies typically use the Reactor pattern in high-concurrency scenarios, where it replaces the common thread-per-connection processing model, saves system resources, and improves system throughput. Frameworks such as Netty are, at their core, designed around the Reactor pattern on top of NIO.