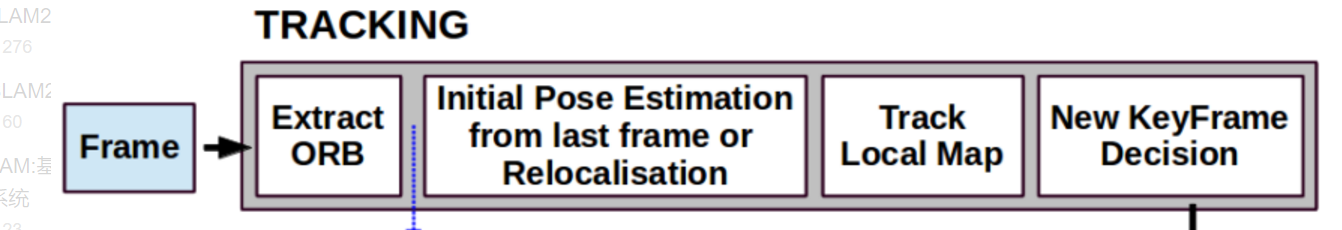

1.Tracking framework

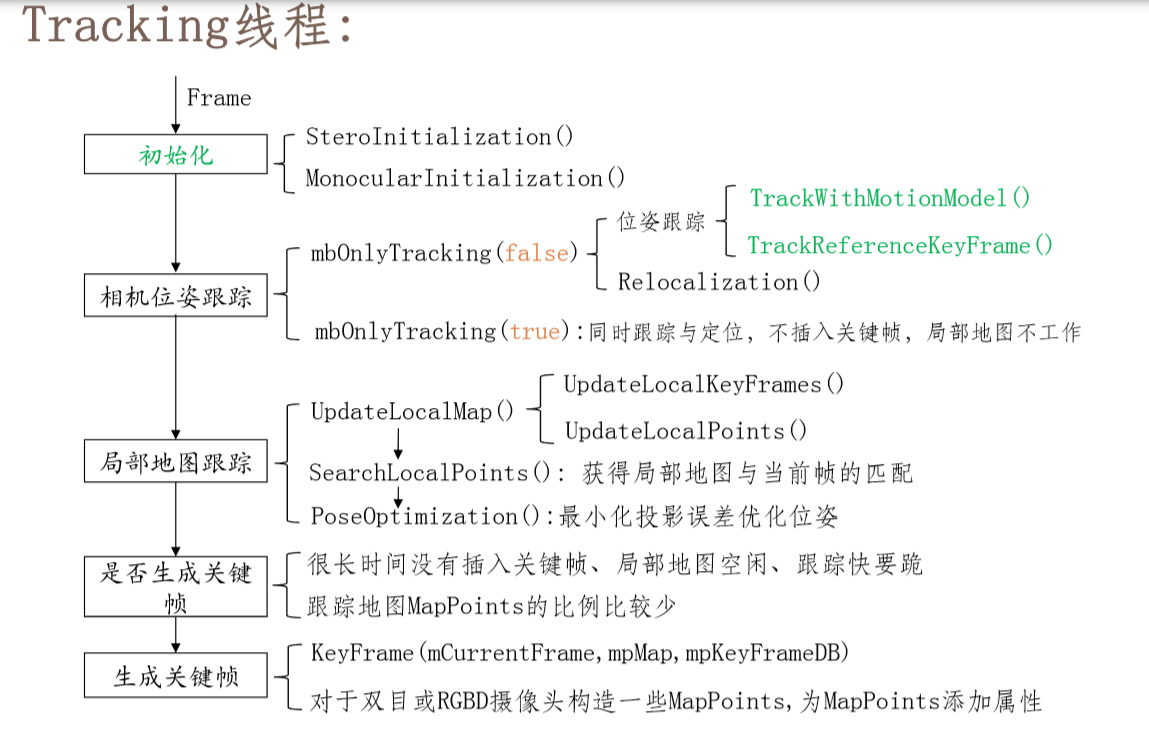

Flow chart of Tracking thread:

Main functions corresponding to each process (from wubo @ bubble robot):

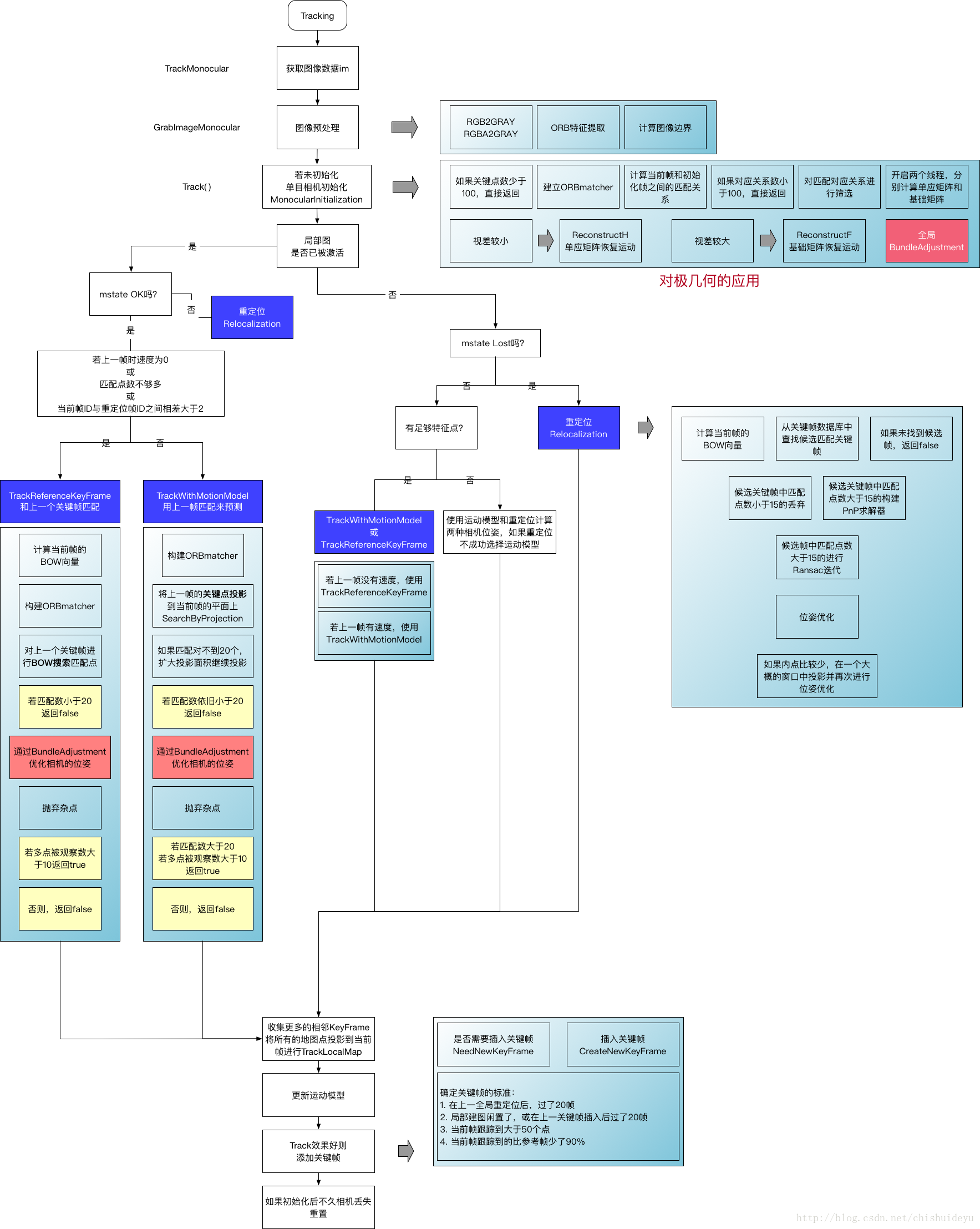

Overall flow chart of Tracking

The picture above shows tracking CC speaks very clearly.

After the tracking thread obtains the image data, it will pass it to the function GrabImageStereo, GrabImageRGBD or GrabImageMonocular for preprocessing. Here I take GrabImageMonocular as an example.

GrabImageMonocular(const cv::Mat &im, const double ×tamp)

| Function function | 1. Convert the image to mImGray and initialize mCurrentFrame; 2. Conduct the tracking process and output the transformation matrix from the world coordinate system to the camera coordinate system of the frame |

| im | input image |

| timestamp | time stamp |

cv::Mat Tracking::GrabImageMonocular(const cv::Mat &im, const double ×tamp)

{

mImGray = im;//Read image

// Step 1: convert RGB or RGBA image to grayscale image

if(mImGray.channels()==3)

{

if(mbRGB)

cvtColor(mImGray,mImGray,CV_RGB2GRAY);

else

cvtColor(mImGray,mImGray,CV_BGR2GRAY);

}

else if(mImGray.channels()==4)

{

if(mbRGB)

cvtColor(mImGray,mImGray,CV_RGBA2GRAY);

else

cvtColor(mImGray,mImGray,CV_BGRA2GRAY);

}

// Step 2: construct Frame

if(mState==NOT_INITIALIZED || mState==NO_IMAGES_YET)// The previous state without successful initialization is NO_IMAGES_YET

mCurrentFrame = Frame(mImGray,timestamp,mpIniORBextractor,mpORBVocabulary,mK,mDistCoef,mbf,mThDepth);

else

mCurrentFrame = Frame(mImGray,timestamp,mpORBextractorLeft,mpORBVocabulary,mK,mDistCoef,mbf,mThDepth);

// Step 3: track

Track();

return mCurrentFrame.mTcw.clone();

}The data flows to Track (). Because the code is too long, I will paste comments in several paragraphs.

void Tracking::Track()

| step | 1. Judge the tracking status: if it is not initialized, perform MonocularInitialization() and StereoInitialization() for monocular and non monocular respectively, and update the map view. 2. If the initialization is successful, track it next. There are two options for tracking status in ORB-SLAM (judged by mbOnlyTracking) (1) Only track without drawing (2) Tracking and mapping at the same time: After initialization, ORB-SLAM has three tracking models to choose from a.TrackWithMotionModel(); Motion model: estimate the pose of the current frame according to the motion model - track the map points of the previous frame according to the uniform motion model - optimize the pose. b.TrackReferenceKeyFrame(); Key frame model: BoW searches the matching points between the current frame and the reference frame - takes the pose of the previous frame as the initial value of the current frame - and obtains the pose by optimizing the re projection error of 3D-2D. c.Relocalization(); Relocation model: calculate the BoW of the current frame - detect the candidate frames that meet the relocation conditions - search the matching points between the current frame and the candidate frame through the BoW - conduct PnP pose estimation if there are more than 15 points - optimize. The selection method of these three models: First, assume that the camera is at constant speed (i.e. Rt is the same as the previous frame), and then calculate the matching points (if there are enough matching points, it is considered that the tracking is successful). If there are few matching points, it indicates that the constant speed model is invalid, then select the reference frame model (i.e. feature matching, PnP solution). If the reference frame model also cannot be tracked, it indicates that there is no correlation between the two frame keys, At this time, it is necessary to relocate, that is, match with the generated key frame (see if it has reached the place it has been before) to determine the camera pose. If the relocation is still unsuccessful, it means that the tracking is completely lost. Either wait for the camera to rotate or reset. |

A. initialization section

void Tracking::Track()

{

// track consists of two parts: estimating motion and tracking local map

// mState is the state of tracking

// SYSTME_NOT_READY, NO_IMAGE_YET, NOT_INITIALIZED, OK, LOST

// No if the image has been reset or run for the first time_ IMAGE_ Yet status

if(mState==NO_IMAGES_YET)

{

mState = NOT_INITIALIZED;

}

// mLastProcessedState stores the latest state of Tracking and is used for drawing in FrameDrawer

mLastProcessedState=mState;

// Get Map Mutex -> Map cannot be changed

unique_lock<mutex> lock(mpMap->mMutexMapUpdate);

// Step 1: initialize

if(mState==NOT_INITIALIZED)//Determine whether to initialize

{

if(mSensor==System::STEREO || mSensor==System::RGBD)//Binocular or depth camera

StereoInitialization();//Binocular initialization

else

MonocularInitialization();//Monocular initialization

mpFrameDrawer->Update(this);

if(mState!=OK)

return;

}

}B. Tracking step 1 Track the previous frame or reference frame or relocate

else// Step 2: tracking

{

// System is initialized. Track Frame. The system completes initialization and tracks the frame

// bOK is a temporary variable used to indicate whether each function is executed successfully

bool bOK;

// Initial camera pose estimation using motion model or relocation (if tracking is lost)

// In the viewer, there is a switch menuLocalizationMode, which controls whether to activate localizationmode and ultimately mbOnlyTracking

// mbOnlyTracking equal to false indicates normal VO mode (with map update), mbOnlyTracking equal to true indicates that the user manually selects the positioning mode

if(!mbOnlyTracking)

{

// Local Mapping is activated. This is the normal behaviour, unless

// you explicitly activate the "only tracking" mode.

// Normal initialization succeeded

if(mState==OK)

{

// Local Mapping might have changed some MapPoints tracked in last frame

// Check and update the MapPoints replaced in the previous frame

// Update MapPoints replaced by Fuse function and SearchAndFuse function

CheckReplacedInLastFrame();

// Step 2.1: track the previous frame or reference frame or relocate

// The motion model is empty or has just finished repositioning

// mCurrentFrame. MNID < mnlastrelocframeid + 2 indicates that the rigid relocation is less than two frames

// TrackWithMotionModel should be preferred as long as mVelocity is not empty

// mnLastRelocFrameId: the last relocated frame

if(mVelocity.empty() || mCurrentFrame.mnId<mnLastRelocFrameId+2)

{

// The pose of the previous frame is taken as the initial pose of the current frame

// Find the matching points of the feature points of the current frame in the reference frame by BoW

// The pose can be obtained by optimizing the 3D point re projection error corresponding to each feature point

bOK = TrackReferenceKeyFrame();//Tracking reference frame

}

else

{

// Set the initial pose of the current frame according to the constant speed model

// Find the matching points of the feature points of the current frame in the reference frame by projection

// The pose can be obtained by optimizing the projection error of the 3D point corresponding to each feature point

bOK = TrackWithMotionModel();//The pose of the current frame is predicted according to the fixed motion speed model

if(!bOK)

// TrackReferenceKeyFrame is a tracking reference frame. It cannot predict the bit attitude of the current frame according to the fixed motion speed model. It can be matched through bow acceleration (SearchByBow)

// Finally, the optimized pose is obtained through optimization

bOK = TrackReferenceKeyFrame();

}

}

else

{

// BOW search, PnP, solving pose

bOK = Relocalization()//Relocation successful or not

}

}

else

{

// Localization Mode: Local Mapping is deactivated

// Only tracking is performed, and the local map does not work

// Step 2.1: track the previous frame or reference frame or relocate

// tracking lost it

if(mState==LOST)

{

bOK = Relocalization();//Flag to judge whether relocation is successful or not

}

else

{

// mbVO is a variable only when mbOnlyTracking is true

// mbVO is false, which means that this frame matches many MapPoints, and the tracking is normal,

// mbVO is true, indicating that this frame matches very few MapPoints, less than 10, and the rhythm of kneeling

if(!mbVO)

{

// In last frame we tracked enough MapPoints in the map

// If mbVO is 0, it indicates that this frame matches many 3D map points, which is very good

if(!mVelocity.empty())

{

bOK = TrackWithMotionModel();

}

else

{

bOK = TrackReferenceKeyFrame();

}

}

else

{

// In last frame we tracked mainly "visual odometry" points. In the last frame, we mainly tracked the visual odometer points

// We compute two camera poses, one from motion model and one doing relocalization. We calculate the camera pose from the motion model and relocation

// If relocalization is sucessfull we choose that solution, otherwise we retain

// the "visual odometry" solution.

// If mbVO is 1, it indicates that this frame matches very few 3D map points, less than 10. The rhythm of kneeling needs to be tracked and positioned

bool bOKMM = false;//Whether the motion model is successful or not

bool bOKReloc = false;//Relocation success judgment flag

vector<MapPoint*> vpMPsMM;//Record map points

vector<bool> vbOutMM;//Record outer point

cv::Mat TcwMM;//Transformation matrix

if(!mVelocity.empty())//Have speed

{

bOKMM = TrackWithMotionModel();//Tracking with motion model

vpMPsMM = mCurrentFrame.mvpMapPoints;//Record map points

vbOutMM = mCurrentFrame.mvbOutlier;//Record outer point

TcwMM = mCurrentFrame.mTcw.clone();//Transform matrix of current frame

}

bOKReloc = Relocalization();//Reposition with

// The relocation was not successful, but the motion model tracking was successful

if(bOKMM && !bOKReloc)

{

mCurrentFrame.SetPose(TcwMM);

mCurrentFrame.mvpMapPoints = vpMPsMM;

mCurrentFrame.mvbOutlier = vbOutMM;

if(mbVO)

{

// Update the observed degree of MapPoints in the current frame

for(int i =0; i<mCurrentFrame.N; i++)

{

if(mCurrentFrame.mvpMapPoints[i] && !mCurrentFrame.mvbOutlier[i])

{

mCurrentFrame.mvpMapPoints[i]->IncreaseFound();

}

}

}

}

else if(bOKReloc)// As long as the relocation is successful, the whole tracking process is normal (positioning and tracking, more believe in relocation)

{

mbVO = false;

}

bOK = bOKReloc || bOKMM;

}

}

}Tracking step 2 After the initial attitude is obtained by inter frame matching, now track the local map to get more matching, and optimize the current Pose. Local map: current frame, MapPoints of current frame, and the common view relationship between current key frame and other key frames. In step 2.1, it is mainly two-to-two tracking (constant speed model tracks the previous frame and tracks the reference frame), Here, after searching for local key frames, collect all local MapPoints, and then projectively match the local MapPoints with the current frame to get more matching MapPoints for point optimization.

Step 3: update the mVelocity in the constant speed motion model TrackWithMotionModel

// Take the latest key frame as the reference frame, followed by the above code

mCurrentFrame.mpReferenceKF = mpReferenceKF;

if(!mbOnlyTracking)

{

if(bOK)

bOK = TrackLocalMap();

}

else

{

// mbVO true means that there are few matches to MapPoints in the map. We cannot retrieve

// a local map and therefore we do not perform TrackLocalMap(). Once the system relocalizes

// the camera we will use the local map again.

// Relocation successful

if(bOK && !mbVO)

bOK = TrackLocalMap();

}

if(bOK)

mState = OK;

else

mState=LOST;

// Update drawer

mpFrameDrawer->Update(this);

// If tracking were good, check if we insert a keyframe

if(bOK)

{

// Update motion model

if(!mLastFrame.mTcw.empty())

{

// Step 2.3: update the mVelocity in the constant speed motion model TrackWithMotionModel

cv::Mat LastTwc = cv::Mat::eye(4,4,CV_32F);

mLastFrame.GetRotationInverse().copyTo(LastTwc.rowRange(0,3).colRange(0,3));

mLastFrame.GetCameraCenter().copyTo(LastTwc.rowRange(0,3).col(3));

mVelocity = mCurrentFrame.mTcw*LastTwc; // It's actually Tcl

}

else

mVelocity = cv::Mat();

mpMapDrawer->SetCurrentCameraPose(mCurrentFrame.mTcw);

Step 4 Clears the MapPoints temporarily added for the current frame in UpdateLastFrame

5. Clear the temporary MapPoints, which are generated in the UpdateLastFrame function of TrackWithMotionModel (only binocular and rgbd)

6. Detect and insert key frames, and new MapPoints will be generated for binocular

// Step 2.4: clear the MapPoints temporarily added for the current frame in UpdateLastFrame

for(int i=0; i<mCurrentFrame.N; i++)//Traverse all mappoints in the current frame

{

MapPoint* pMP = mCurrentFrame.mvpMapPoints[i];

if(pMP)

// Exclude MapPoints added for tracking in the UpdateLastFrame function

if(pMP->Observations()<1)

{

mCurrentFrame.mvbOutlier[i] = false;

mCurrentFrame.mvpMapPoints[i]=static_cast<MapPoint*>(NULL);

}

}

// Delete temporal MapPoints

// Step 2.5: clear temporary MapPoints, which are generated in the UpdateLastFrame function of TrackWithMotionModel (only binocular and rgbd)

// In step 2.4, these MapPoints are only eliminated in the current frame, which is deleted from the MapPoints database here

// What is generated here is only to improve the inter frame tracking effect of binocular or rgbd camera. It is discarded after use and not added to the map

for(list<MapPoint*>::iterator lit = mlpTemporalPoints.begin(), lend = mlpTemporalPoints.end(); lit!=lend; lit++)

{

MapPoint* pMP = *lit;

delete pMP;

}

// This is not only to clear mlpTemporalPoints, but also to delete the MapPoint pointed by the pointer through delete pMP

mlpTemporalPoints.clear();

// Check if we need to insert a new keyframe

// Step 2.6: detect and insert key frames, and new MapPoints will be generated for binocular

if(NeedNewKeyFrame())

CreateNewKeyFrame();

// We allow points with high innovation (considered outliers by the Huber function)

// pass to the new keyframe, so that bundle adjustment will finally decide

// if they are outliers or not. We don't want next frame to estimate its position. We don't want to estimate its position in the next frame.

// with those points so we discard them in the frame.

// Delete those 3D map points detected as outlier s in bundle adjustment

for(int i=0; i<mCurrentFrame.N;i++)

{

if(mCurrentFrame.mvpMapPoints[i] && mCurrentFrame.mvbOutlier[i])

mCurrentFrame.mvpMapPoints[i]=static_cast<MapPoint*>(NULL);

}

}

// Reset if the camera get lost soon after initialization

// The tracking failed, and the relocation was not completed, so we had to Reset again

if(mState==LOST)

{

if(mpMap->KeyFramesInMap()<=5)

{

cout << "Track lost soon after initialisation, reseting..." << endl;

mpSystem->Reset();

return;

}

}

if(!mCurrentFrame.mpReferenceKF)

mCurrentFrame.mpReferenceKF = mpReferenceKF;

// Save the data of the previous frame

mLastFrame = Frame(mCurrentFrame);

}C. Record the pose information for trajectory reproduction

if(!mCurrentFrame.mTcw.empty())

{

// Calculate relative attitude T_currentFrame_referenceKeyFrame

cv::Mat Tcr = mCurrentFrame.mTcw*mCurrentFrame.mpReferenceKF->GetPoseInverse();

mlRelativeFramePoses.push_back(Tcr);

mlpReferences.push_back(mpReferenceKF);

mlFrameTimes.push_back(mCurrentFrame.mTimeStamp);

mlbLost.push_back(mState==LOST);

}

else

{

// This can happen if tracking is lost

// If the tracking fails, the last value is used for the relative pose

mlRelativeFramePoses.push_back(mlRelativeFramePoses.back());

mlpReferences.push_back(mlpReferences.back());

mlFrameTimes.push_back(mlFrameTimes.back());

mlbLost.push_back(mState==LOST);

}

}