This article introduces canary (grayscale) traffic rollout in Knative Serving. Using a rest-api example, it shows how to create different Revisions and how to split traffic among them by percentage.

Deploy rest-api v1

- Code

Before testing, we need a rest-api program whose responses reveal which version is running. The code below is based on the official example, modified to drop the github.com/gorilla/mux dependency and use Go's standard net/http package instead. The RESOURCE environment variable determines which version the program reports.

    package main

    import (
        "flag"
        "fmt"
        "io/ioutil"
        "log"
        "net/http"
        "net/url"
        "os"
        "strings"
    )

    // resource is the version label, read from the RESOURCE environment variable.
    var resource string

    func main() {
        flag.Parse()
        resource = os.Getenv("RESOURCE")
        if resource == "" {
            resource = "NOT SPECIFIED"
        }

        root := "/" + resource
        http.HandleFunc("/", Index)
        http.HandleFunc(root, StockIndex)
        // The classic net/http mux has no {stockId} path variables, so register
        // the subtree root+"/" and let StockPrice read the ticker from the path.
        http.HandleFunc(root+"/", StockPrice)

        if err := http.ListenAndServe(":8080", nil); err != nil {
            log.Fatalf("ListenAndServe error: %s", err.Error())
        }
    }

    // Index reports which version of the app served the request.
    func Index(w http.ResponseWriter, r *http.Request) {
        fmt.Fprintf(w, "Welcome to the %s app! \n", resource)
    }

    // StockIndex answers requests that do not name a ticker.
    func StockIndex(w http.ResponseWriter, r *http.Request) {
        fmt.Fprintf(w, "%s ticker not found!, require /%s/{ticker}\n", resource, resource)
    }

    // StockPrice looks up the price for the ticker given in the URL path.
    func StockPrice(w http.ResponseWriter, r *http.Request) {
        stockId := strings.TrimPrefix(r.URL.Path, "/"+resource+"/")
        if stockId == "" {
            StockIndex(w, r)
            return
        }
        u := url.URL{
            Scheme: "https",
            Host:   "api.iextrading.com",
            Path:   "/1.0/stock/" + stockId + "/price",
        }
        log.Print(u.String())
        resp, err := http.Get(u.String())
        if err != nil {
            fmt.Fprintf(w, "%s not found for ticker : %s \n", resource, stockId)
            return
        }
        defer resp.Body.Close()
        body, err := ioutil.ReadAll(resp.Body)
        if err != nil {
            fmt.Fprintf(w, "%s could not read the price for ticker : %s \n", resource, stockId)
            return
        }
        fmt.Fprintf(w, "%s price for ticker %s is %s\n", resource, stockId, string(body))
    }
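To see how the version label changes the response without deploying anything, the handler can be exercised in-process. The following is a minimal sketch: newIndexHandler, resourceFromEnv and callIndex are hypothetical helpers introduced here for illustration (they are not part of the sample program), and they mirror the Index handler above with the label passed as a parameter.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	"os"
)

// newIndexHandler returns a handler that greets with the given version label.
// Hypothetical refactoring of the sample's Index handler, for testability.
func newIndexHandler(resource string) http.HandlerFunc {
	return func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprintf(w, "Welcome to the %s app! \n", resource)
	}
}

// resourceFromEnv applies the same default as the sample program.
func resourceFromEnv() string {
	if v := os.Getenv("RESOURCE"); v != "" {
		return v
	}
	return "NOT SPECIFIED"
}

// callIndex invokes the handler without a network listener and returns the body.
func callIndex(resource string) string {
	rec := httptest.NewRecorder()
	req := httptest.NewRequest(http.MethodGet, "/", nil)
	newIndexHandler(resource)(rec, req)
	return rec.Body.String()
}

func main() {
	fmt.Print(callIndex("v1"))
	fmt.Print(callIndex("v2"))
	// Uses whatever RESOURCE is set to in the current shell, if anything.
	fmt.Print(callIndex(resourceFromEnv()))
}
```

Because the image is identical for every version in this article, the RESOURCE value is the only thing that tells the responses apart.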

- Dockerfile

Create a file named Dockerfile and copy the following content into it. To build the image, run this command in the source directory (the trailing dot is the build context): docker build --tag registry.cn-hangzhou.aliyuncs.com/knative-sample/rest-api-go:v1 --file ./Dockerfile .

When you test, replace registry.cn-hangzhou.aliyuncs.com/knative-sample/rest-api-go:v1 with the address of your own image registry.

After building the image, run docker push registry.cn-hangzhou.aliyuncs.com/knative-sample/rest-api-go:v1 to push it to the image registry.

    FROM registry.cn-hangzhou.aliyuncs.com/knative-sample/golang:1.12 as builder

    WORKDIR /go/src/github.com/knative-sample/rest-api-go
    COPY . .

    RUN CGO_ENABLED=0 GOOS=linux go build -v -o rest-api-go

    FROM registry.cn-hangzhou.aliyuncs.com/knative-sample/alpine-sh:3.9
    COPY --from=builder /go/src/github.com/knative-sample/rest-api-go/rest-api-go /rest-api-go

    CMD ["/rest-api-go"]

- Service configuration

Now that the image is ready, we can deploy the Knative Service. Save the following content in revision-v1.yaml, then run kubectl apply -f revision-v1.yaml to deploy it.

    apiVersion: serving.knative.dev/v1alpha1
    kind: Service
    metadata:
      name: stock-service-example
      namespace: default
    spec:
      template:
        metadata:
          name: stock-service-example-v1
        spec:
          containers:
          - image: registry.cn-hangzhou.aliyuncs.com/knative-sample/rest-api-go:v1
            env:
            - name: RESOURCE
              value: v1
            readinessProbe:
              httpGet:
                path: /
              initialDelaySeconds: 0
              periodSeconds: 3

The first deployment creates a Revision named stock-service-example-v1 and routes 100% of the traffic to it.

Verify the various resources of Serving

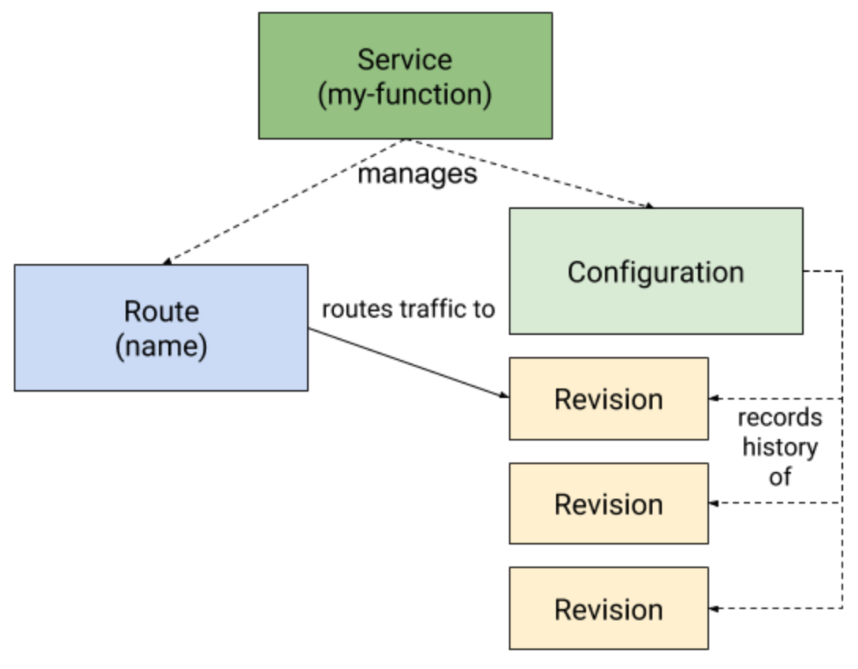

As shown in the figure above, Serving involves several kinds of resources. Let's review them first, then look at the resources created by the revision-v1.yaml we just deployed.

- Knative Service

kubectl get ksvc stock-service-example --output yaml

- Knative Configuration

kubectl get configuration -l "serving.knative.dev/service=stock-service-example" --output yaml

- Knative Revision

kubectl get revision -l "serving.knative.dev/service=stock-service-example" --output yaml

- Knative Route

kubectl get route -l "serving.knative.dev/service=stock-service-example" --output yaml

Access the rest-api service

The Service we deployed is named stock-service-example. To access it, get the IP of the Istio ingress gateway, then send a curl request to that IP with the Host header set to the domain name of stock-service-example. I wrote a script to make testing easier. Create a file named run-test.sh, copy the following content into it, and make the file executable. Then run the script to see the test result.

    #!/bin/bash

    SVC_NAME="stock-service-example"
    export INGRESSGATEWAY=istio-ingressgateway
    export GATEWAY_IP=`kubectl get svc $INGRESSGATEWAY --namespace istio-system --output jsonpath="{.status.loadBalancer.ingress[*]['ip']}"`
    export DOMAIN_NAME=`kubectl get route ${SVC_NAME} --output jsonpath="{.status.url}"| awk -F/ '{print $3}'`

    curl -H "Host: ${DOMAIN_NAME}" http://${GATEWAY_IP}

Test results:

The output below shows that the response now contains v1, which means the request was served by v1.

    └─# ./run-test.sh
    Welcome to the v1 app!
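The curl call in run-test.sh works because the gateway picks the target Service from the Host header rather than from the IP it was reached on. The same request can be sketched in Go as follows. This is an illustration only: getViaGateway and newFakeGateway are hypothetical names, and the fake gateway is a local stand-in so the example runs without a cluster. Against a real cluster you would pass http:// followed by the gateway IP instead.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
)

// serviceHost is the domain name reported for the Service in this article.
const serviceHost = "stock-service-example.default.example.com"

// getViaGateway sends a GET to gatewayURL while presenting host as the Host header,
// which is what `curl -H "Host: ..."` does.
func getViaGateway(gatewayURL, host string) (string, error) {
	req, err := http.NewRequest(http.MethodGet, gatewayURL, nil)
	if err != nil {
		return "", err
	}
	// For client requests, net/http takes the Host header from req.Host.
	req.Host = host
	resp, err := http.DefaultClient.Do(req)
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	body, err := io.ReadAll(resp.Body)
	if err != nil {
		return "", err
	}
	return string(body), nil
}

// newFakeGateway is a local stand-in (an assumption for this sketch) that
// routes by Host header, loosely imitating the ingress gateway.
func newFakeGateway() *httptest.Server {
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		if r.Host == serviceHost {
			fmt.Fprint(w, "Welcome to the v1 app! \n")
			return
		}
		http.NotFound(w, r)
	}))
}

func main() {
	gw := newFakeGateway()
	defer gw.Close()

	body, err := getViaGateway(gw.URL, serviceHost)
	if err != nil {
		fmt.Println("request failed:", err)
		return
	}
	fmt.Print(body)
}
```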

Shift 50% of traffic to v2

Modify the Service to create the v2 Revision. Create a revision-v2.yaml file with the following content:

    apiVersion: serving.knative.dev/v1alpha1
    kind: Service
    metadata:
      name: stock-service-example
      namespace: default
    spec:
      template:
        metadata:
          name: stock-service-example-v2
        spec:
          containers:
          - image: registry.cn-hangzhou.aliyuncs.com/knative-sample/rest-api-go:v1
            env:
            - name: RESOURCE
              value: v2
            readinessProbe:
              httpGet:
                path: /
              initialDelaySeconds: 0
              periodSeconds: 3
      traffic:
      - tag: v1
        revisionName: stock-service-example-v1
        percent: 50
      - tag: v2
        revisionName: stock-service-example-v2
        percent: 50
      - tag: latest
        latestRevision: true
        percent: 0

Comparing the v1 and v2 files, the v2 file adds a traffic: block to the Service, which specifies the share of traffic for each Revision. Run kubectl apply -f revision-v2.yaml to apply the v2 configuration. Then run the test script: responses from v1 and v2 each make up roughly 50% of the results. Here is the result of my actual test.

    └─# ./run-test.sh
    Welcome to the v2 app!
    └─# ./run-test.sh
    Welcome to the v1 app!
    └─# ./run-test.sh
    Welcome to the v2 app!
    └─# ./run-test.sh
    Welcome to the v1 app!
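Conceptually, the traffic block behaves like a weighted choice per request. The sketch below illustrates only the arithmetic of the percentages; it is not how Knative or Istio implement routing, and trafficTarget, v2Traffic and pick are hypothetical names introduced for this example.

```go
package main

import (
	"fmt"
	"math/rand"
)

// trafficTarget mirrors one entry of the traffic block (illustrative type).
type trafficTarget struct {
	Name    string
	Percent int
}

// v2Traffic reproduces the percentages from revision-v2.yaml.
func v2Traffic() []trafficTarget {
	return []trafficTarget{
		{Name: "stock-service-example-v1", Percent: 50},
		{Name: "stock-service-example-v2", Percent: 50},
		{Name: "latest", Percent: 0},
	}
}

// pick maps a number n in [0,100) onto the targets by cumulative percent.
// A target with percent 0 can never be selected.
func pick(targets []trafficTarget, n int) string {
	cumulative := 0
	for _, t := range targets {
		cumulative += t.Percent
		if n < cumulative {
			return t.Name
		}
	}
	return "" // only reached if n is not below the sum of all percents
}

func main() {
	rng := rand.New(rand.NewSource(1))
	counts := map[string]int{}
	for i := 0; i < 10000; i++ {
		counts[pick(v2Traffic(), rng.Intn(100))]++
	}
	// With enough requests, the two counts approach an even split.
	for _, t := range v2Traffic() {
		fmt.Printf("%s: %d\n", t.Name, counts[t.Name])
	}
}
```

A handful of requests, like the four shown above, can easily deviate from 50/50; the ratio only becomes visible over many requests.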

Verify a Revision in advance

In the v2 example above, traffic was routed to v2 as soon as v2 was created. If v2 had a problem, 50% of the traffic would have been affected. Next, let's see how to verify that a new Revision works before forwarding any traffic to it. We'll create a v3 version.

Create a revision-v3.yaml file as follows:

    apiVersion: serving.knative.dev/v1alpha1
    kind: Service
    metadata:
      name: stock-service-example
      namespace: default
    spec:
      template:
        metadata:
          name: stock-service-example-v3
        spec:
          containers:
          - image: registry.cn-hangzhou.aliyuncs.com/knative-sample/rest-api-go:v1
            env:
            - name: RESOURCE
              value: v3
            readinessProbe:
              httpGet:
                path: /
              initialDelaySeconds: 0
              periodSeconds: 3
      traffic:
      - tag: v1
        revisionName: stock-service-example-v1
        percent: 50
      - tag: v2
        revisionName: stock-service-example-v2
        percent: 50
      - tag: latest
        latestRevision: true
        percent: 0

Run kubectl apply -f revision-v3.yaml to deploy the v3 version. Then list the Revisions:

    └─# kubectl get revision
    NAME                       SERVICE NAME               GENERATION   READY   REASON
    stock-service-example-v1   stock-service-example-v1   1            True
    stock-service-example-v2   stock-service-example-v2   2            True
    stock-service-example-v3   stock-service-example-v3   3            True

You can see that three Revisions have been created so far.

Now let's look at the actual state of stock-service-example:

    └─# kubectl get ksvc stock-service-example -o yaml
    apiVersion: serving.knative.dev/v1beta1
    kind: Service
    metadata:
      annotations:
    ...
    status:
    ...
      traffic:
      - latestRevision: false
        percent: 50
        revisionName: stock-service-example-v1
        tag: v1
        url: http://v1-stock-service-example.default.example.com
      - latestRevision: false
        percent: 50
        revisionName: stock-service-example-v2
        tag: v2
        url: http://v2-stock-service-example.default.example.com
      - latestRevision: true
        percent: 0
        revisionName: stock-service-example-v3
        tag: latest
        url: http://latest-stock-service-example.default.example.com
      url: http://stock-service-example.default.example.com

You can see that the v3 Revision was created, but because no traffic percentage was assigned to it, no traffic is forwarded to it. No matter how many times you run ./run-test.sh at this point, you won't get a response from v3.

In the Service's status.traffic, you can see the entry for the latest Revision:

    - latestRevision: true
      percent: 0
      revisionName: stock-service-example-v3
      tag: latest
      url: http://latest-stock-service-example.default.example.com

Each Revision listed here has its own URL, so to test v3 you only need to send requests to the URL of the v3 Revision.

I've written a test script for this. Save the following script as latest-run-test.sh, then run it to send a request directly to the latest version:

    #!/bin/bash

    # SVC_NAME must be set here; the script queries the Service by name.
    SVC_NAME="stock-service-example"
    export INGRESSGATEWAY=istio-ingressgateway
    export GATEWAY_IP=`kubectl get svc $INGRESSGATEWAY --namespace istio-system --output jsonpath="{.status.loadBalancer.ingress[*]['ip']}"`
    # Host name of the traffic target tagged "latest"
    export LAST_DOMAIN=`kubectl get ksvc ${SVC_NAME} --output jsonpath="{.status.traffic[?(@.tag=='latest')].url}"| cut -d'/' -f 3`

    curl -H "Host: ${LAST_DOMAIN}" http://${GATEWAY_IP}
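The jsonpath filter and the cut step in the script do two things: find the traffic entry tagged latest, and keep only the host part of its URL. A minimal Go sketch of the same lookup is shown below. The trafficStatus type, sampleStatus and hostForTag are hypothetical names; the sample data is copied from the status.traffic output earlier in this article rather than read from a cluster.

```go
package main

import (
	"fmt"
	"net/url"
)

// trafficStatus mirrors the fields of one status.traffic entry (illustrative type).
type trafficStatus struct {
	Tag          string
	RevisionName string
	Percent      int
	URL          string
}

// sampleStatus holds the entries shown in the kubectl output above.
func sampleStatus() []trafficStatus {
	return []trafficStatus{
		{Tag: "v1", RevisionName: "stock-service-example-v1", Percent: 50, URL: "http://v1-stock-service-example.default.example.com"},
		{Tag: "v2", RevisionName: "stock-service-example-v2", Percent: 50, URL: "http://v2-stock-service-example.default.example.com"},
		{Tag: "latest", RevisionName: "stock-service-example-v3", Percent: 0, URL: "http://latest-stock-service-example.default.example.com"},
	}
}

// hostForTag returns the host part of the URL of the entry with the given tag.
func hostForTag(traffic []trafficStatus, tag string) (string, error) {
	for _, t := range traffic {
		if t.Tag != tag {
			continue
		}
		u, err := url.Parse(t.URL)
		if err != nil {
			return "", err
		}
		return u.Host, nil
	}
	return "", fmt.Errorf("no traffic target tagged %q", tag)
}

func main() {
	host, err := hostForTag(sampleStatus(), "latest")
	if err != nil {
		fmt.Println(err)
		return
	}
	// This value is what the script sends as the Host header.
	fmt.Println(host)
}
```

The same lookup with the tag v1 or v2 yields the host for addressing those Revisions directly, regardless of their traffic percentage.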

Once v3 has been tested and shows no problems, traffic can be routed to it.

Next, create a file named revision-v3-2.yaml with the following content:

    apiVersion: serving.knative.dev/v1alpha1
    kind: Service
    metadata:
      name: stock-service-example
      namespace: default
    spec:
      template:
        metadata:
          name: stock-service-example-v3
        spec:
          containers:
          - image: registry.cn-hangzhou.aliyuncs.com/knative-sample/rest-api-go:v1
            env:
            - name: RESOURCE
              value: v3
            readinessProbe:
              httpGet:
                path: /
              initialDelaySeconds: 0
              periodSeconds: 3
      traffic:
      - tag: v1
        revisionName: stock-service-example-v1
        percent: 40
      - tag: v2
        revisionName: stock-service-example-v2
        percent: 30
      - tag: v3
        revisionName: stock-service-example-v3
        percent: 30
      - tag: latest
        latestRevision: true
        percent: 0

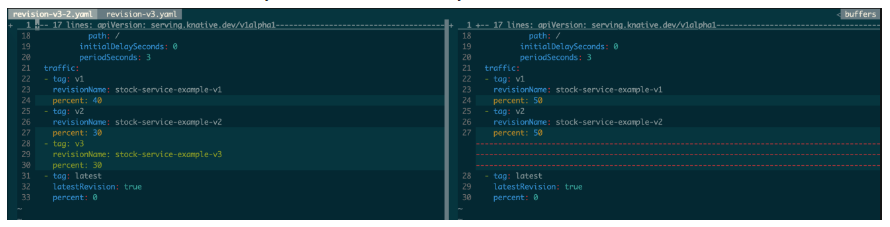

Use vimdiff to see the difference between revision-v3.yaml and revision-v3-2.yaml:

revision-v3-2.yaml adds traffic forwarding to v3. When you run ./run-test.sh repeatedly, you can see that the ratio of responses from v1, v2, and v3 is roughly 4:3:3.

    └─# ./run-test.sh
    Welcome to the v1 app!
    └─# ./run-test.sh
    Welcome to the v2 app!
    └─# ./run-test.sh
    Welcome to the v1 app!
    └─# ./run-test.sh
    Welcome to the v2 app!
    └─# ./run-test.sh
    Welcome to the v3 app!
    ...
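Checking the ratio by eye gets tedious once you run the script more than a few times. The following sketch tallies responses per version; versionOf and tally are hypothetical helpers, and sampleOutput contains only the five responses shown above, so it is far too small a sample to confirm 4:3:3 on its own.

```go
package main

import (
	"fmt"
	"strings"
)

// sampleOutput holds the five responses shown in the test run above.
var sampleOutput = []string{
	"Welcome to the v1 app!",
	"Welcome to the v2 app!",
	"Welcome to the v1 app!",
	"Welcome to the v2 app!",
	"Welcome to the v3 app!",
}

// versionOf extracts the version label from a "Welcome to the <label> app!" line.
func versionOf(line string) (string, bool) {
	const prefix, suffix = "Welcome to the ", " app!"
	line = strings.TrimSpace(line)
	if len(line) < len(prefix)+len(suffix) ||
		!strings.HasPrefix(line, prefix) || !strings.HasSuffix(line, suffix) {
		return "", false
	}
	return line[len(prefix) : len(line)-len(suffix)], true
}

// tally counts how many responses came from each version, skipping other lines.
func tally(lines []string) map[string]int {
	counts := map[string]int{}
	for _, line := range lines {
		if v, ok := versionOf(line); ok {
			counts[v]++
		}
	}
	return counts
}

func main() {
	counts := tally(sampleOutput)
	for _, v := range []string{"v1", "v2", "v3"} {
		fmt.Printf("%s: %d of %d\n", v, counts[v], len(sampleOutput))
	}
}
```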

Version rollback

A Revision of a Knative Service cannot be modified. Every Revision created by an update to the Service spec is kept in kube-apiserver. If a problem is found after an application is released to a new version and you want to roll back, you only need to point the traffic at the corresponding old Revision.
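In terms of the traffic block, a rollback is just a redistribution of percentages: the Revision you roll back to gets 100 and the others get 0. The sketch below illustrates this on the 40/30/30 split from revision-v3-2.yaml. The names trafficTarget, currentTraffic, rollbackTo and totalPercent are hypothetical; the sketch assumes that the percentages of a traffic block are expected to add up to 100 and checks for that.

```go
package main

import "fmt"

// trafficTarget mirrors one entry of the traffic block (illustrative type).
type trafficTarget struct {
	Tag          string
	RevisionName string
	Percent      int
}

// currentTraffic reproduces the split from revision-v3-2.yaml.
func currentTraffic() []trafficTarget {
	return []trafficTarget{
		{Tag: "v1", RevisionName: "stock-service-example-v1", Percent: 40},
		{Tag: "v2", RevisionName: "stock-service-example-v2", Percent: 30},
		{Tag: "v3", RevisionName: "stock-service-example-v3", Percent: 30},
	}
}

// rollbackTo returns a copy of targets with all traffic on the named Revision.
// The input slice is left unchanged.
func rollbackTo(targets []trafficTarget, revision string) ([]trafficTarget, error) {
	out := make([]trafficTarget, len(targets))
	found := false
	for i, t := range targets {
		out[i] = t
		out[i].Percent = 0
		if t.RevisionName == revision {
			out[i].Percent = 100
			found = true
		}
	}
	if !found {
		return nil, fmt.Errorf("revision %q is not among the traffic targets", revision)
	}
	return out, nil
}

// totalPercent sums the percentages so the result can be sanity-checked.
func totalPercent(targets []trafficTarget) int {
	sum := 0
	for _, t := range targets {
		sum += t.Percent
	}
	return sum
}

func main() {
	rolled, err := rollbackTo(currentTraffic(), "stock-service-example-v1")
	if err != nil {
		fmt.Println(err)
		return
	}
	for _, t := range rolled {
		fmt.Printf("- tag: %s\n  revisionName: %s\n  percent: %d\n", t.Tag, t.RevisionName, t.Percent)
	}
	fmt.Println("total:", totalPercent(rolled))
}
```

The printed entries have the shape of a traffic: block that could be pasted into the Service YAML and applied with kubectl apply -f, in the same way as the earlier revisions of the file.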

Summary

Canary release and rollback of a Knative Service are both based on traffic. Workloads (Pods) are created automatically according to the incoming traffic, so traffic is the core driver of the Knative Serving model. This differs from the traditional application release and canary model.

Suppose there is an application app1. In the traditional approach, you set the number of instances (Pods, in Kubernetes) of the application; assume there are 10. For a canary release, the traditional way is to first release one Pod of the new version, so that v1 has 9 Pods and v2 has 1 Pod. To widen the canary, you increase the number of v2 Pods and decrease the number of v1 Pods, while the total stays at 10.

In the Knative Serving model, the number of Pods always adapts to the traffic and does not need to be specified in advance. During a canary release, you only specify the traffic percentage for each version. The number of instances of each Revision then scales with the traffic it receives.

From this comparison, the Knative Serving model can precisely control the scope affected by a canary release, ensuring that only the specified share of traffic is affected. In the traditional model, the proportion of canary Pods does not necessarily reflect the proportion of traffic, so it is only an indirect way to do a canary release.
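The difference in granularity can be made concrete with a little arithmetic. The sketch below computes how many Pods of the new version a Pod-based canary needs for a desired share, and what share that Pod count actually represents. podsForShare and podShare are hypothetical helpers, and the calculation assumes requests are spread evenly across Pods, which real load balancing only approximates.

```go
package main

import "fmt"

// podsForShare returns how many of total Pods must run the new version to
// approximate the desired percentage, rounded to the nearest whole Pod.
func podsForShare(total, percent int) int {
	return (total*percent + 50) / 100
}

// podShare returns the percentage of Pods that n out of total represents.
func podShare(n, total int) float64 {
	return float64(n) * 100 / float64(total)
}

func main() {
	const total = 10 // the app1 example: 10 Pods in total
	for _, want := range []int{5, 10, 33, 50} {
		n := podsForShare(total, want)
		fmt.Printf("want %d%% -> %d of %d Pods -> %.0f%% of Pods\n",
			want, n, total, podShare(n, total))
	}
}
```

With 10 Pods the canary can only move in steps of 10%, so a 5% or 33% canary cannot be expressed exactly. A traffic percentage in the Knative traffic block has no such dependency on the Pod count.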

This article is original content from the Yunqi Community and may not be reproduced without permission.