When we use Traefik as the Ingress controller for Kubernetes, we naturally need to monitor it. In this article, we explore how to use Prometheus and Grafana to monitor Traefik and raise alerts based on the metrics it exposes.

Install

First, you need an accessible Kubernetes cluster.

Deploy Traefik

Here we use Helm, the simpler option, to install Traefik. First, add the Traefik chart repository to Helm with the following commands:

```shell
$ helm repo add traefik https://helm.traefik.io/
$ helm repo update
```

Then we can deploy the latest version of Traefik into the kube-system namespace. In our example, we also need to make sure that Traefik's Prometheus metrics are enabled. We do this by passing the `--metrics.prometheus=true` flag to Traefik through the chart's `additionalArguments`. We put all of the configuration in the following `traefik-values.yaml` file:

```yaml
# traefik-values.yaml
# Simply use hostPort mode
ports:
  web:
    port: 8000
    hostPort: 80
  websecure:
    port: 8443
    hostPort: 443
service:
  enabled: false
# Do not expose the dashboard
ingressRoute:
  dashboard:
    enabled: false
# Enable Prometheus metrics
additionalArguments:
  - --api.debug=true
  - --metrics.prometheus=true
# By default, a cluster installed with kubeadm has a taint on the master node,
# which must be tolerated before Traefik can be scheduled there.
# Here, we pin Traefik to the master node.
tolerations:
  - key: "node-role.kubernetes.io/master"
    operator: "Equal"
    effect: "NoSchedule"
nodeSelector:
  kubernetes.io/hostname: "master1"
```

Install it with the following command:

```shell
$ helm install traefik traefik/traefik -n kube-system -f ./traefik-values.yaml
NAME: traefik
LAST DEPLOYED: Mon Apr 5 11:49:22 2021
NAMESPACE: kube-system
STATUS: deployed
REVISION: 1
TEST SUITE: None
```

We did not create an IngressRoute for the Traefik dashboard, so we can use port forwarding to reach it temporarily. First, though, we need to create a Service for the Traefik dashboard:

```yaml
# traefik-dashboard-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: traefik-dashboard
  namespace: kube-system
  labels:
    app.kubernetes.io/instance: traefik
    app.kubernetes.io/name: traefik-dashboard
spec:
  type: ClusterIP
  ports:
    - name: traefik
      port: 9000
      targetPort: traefik
      protocol: TCP
  selector:
    app.kubernetes.io/instance: traefik
    app.kubernetes.io/name: traefik
```

Create it, then use port forwarding to access it:

```shell
$ kubectl apply -f traefik-dashboard-service.yaml
$ kubectl port-forward service/traefik-dashboard 9000:9000 -n kube-system
Forwarding from 127.0.0.1:9000 -> 9000
Forwarding from [::1]:9000 -> 9000
```

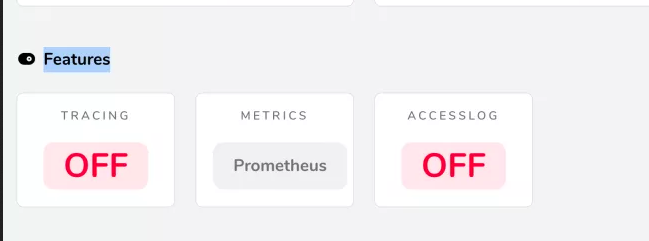

Then open http://localhost:9000/dashboard/ in a browser to access the Traefik dashboard. Note that the trailing slash in the URL is required. In the Features section of the dashboard, you should now see that Prometheus metrics are enabled.

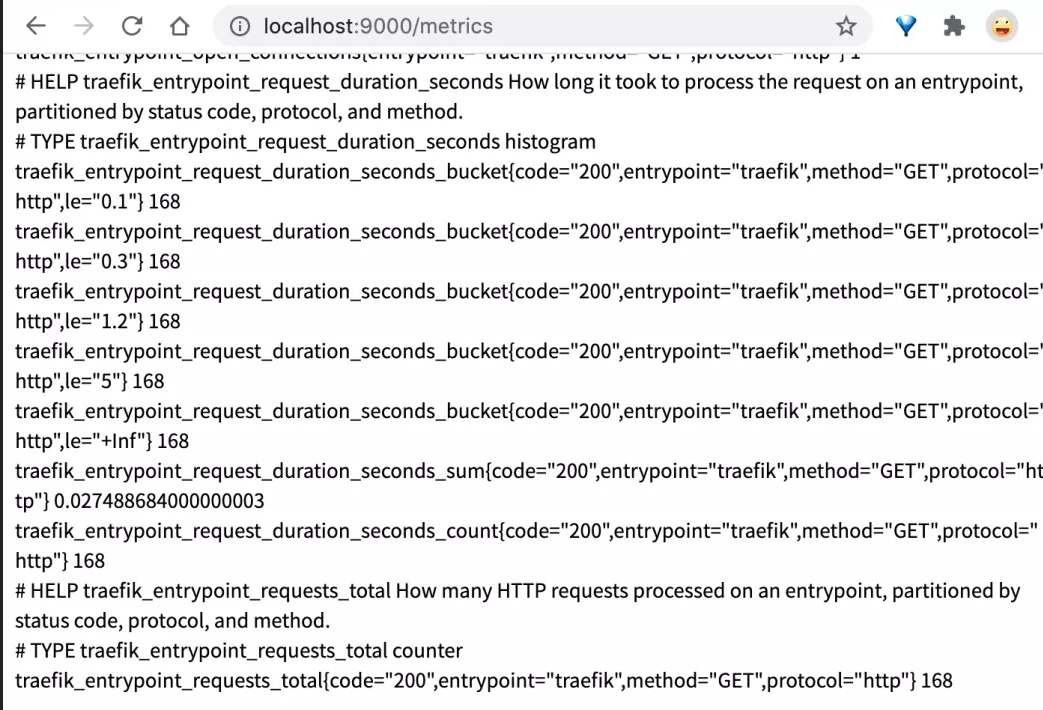

We can also visit the http://localhost:9000/metrics endpoint to view the metrics that Traefik exposes.
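The /metrics endpoint returns plain text in the Prometheus exposition format, with one sample per line. To show what Prometheus does with that text, here is a minimal Python sketch of a parser for it. The parser is simplified: it does not handle escaped quotes inside label values. The sample input is a hypothetical excerpt with made-up values, not real output from this cluster.

```python
import re
from typing import Dict, List, Tuple

# metric_name{label="value",...} value [timestamp]
# Simplified: escaped quotes (\") inside label values are not supported.
SAMPLE_RE = re.compile(
    r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)(?:\s+-?\d+)?$'
)
LABEL_RE = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="([^"]*)"')

Sample = Tuple[str, Dict[str, str], float]


def parse_metrics(text: str) -> List[Sample]:
    """Parse Prometheus text-format lines into (name, labels, value) tuples."""
    samples: List[Sample] = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and "# HELP" / "# TYPE" comments
        match = SAMPLE_RE.match(line)
        if match is None:
            continue  # not a sample line this simplified parser understands
        name, raw_labels, value = match.groups()
        labels = dict(LABEL_RE.findall(raw_labels or ""))
        samples.append((name, labels, float(value)))
    return samples


def total(samples: List[Sample], name: str) -> float:
    """Sum one metric across all of its label combinations."""
    return sum(value for n, _, value in samples if n == name)


# Hypothetical excerpt of http://localhost:9000/metrics (values are made up).
EXAMPLE = """\
# TYPE traefik_entrypoint_open_connections gauge
traefik_entrypoint_open_connections{entrypoint="traefik",method="GET",protocol="http"} 1
traefik_entrypoint_open_connections{entrypoint="web",method="GET",protocol="http"} 3
traefik_entrypoint_requests_total{code="200",entrypoint="web",method="GET",protocol="http"} 42
"""
```

With the port-forward above running, `urllib.request.urlopen("http://localhost:9000/metrics").read().decode()` would return live text that you could pass to `parse_metrics`. Summing a metric across its label combinations, as `total()` does, is the same idea as PromQL's `sum()` aggregation.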

Deploy Prometheus Stack

The complete Prometheus toolchain consists of many components, and installing and configuring all of them by hand takes a long time. Interested readers can refer to our earlier article on the subject. As with Traefik, we use the Prometheus community Helm charts to deploy it:

```shell
$ helm repo add prometheus-community https://prometheus-community.github.io/helm-charts
$ helm repo update
```

This repository provides many charts. To see the complete list, use the search command:

```shell
$ helm search repo prometheus-community
```

Here, we install the kube-prometheus-stack chart, which deploys all of the required components:

```shell
$ helm install prometheus-stack prometheus-community/kube-prometheus-stack
NAME: prometheus-stack
LAST DEPLOYED: Mon Apr 5 12:25:22 2021
NAMESPACE: default
STATUS: deployed
REVISION: 1
NOTES:
kube-prometheus-stack has been installed. Check its status by running:
  kubectl --namespace default get pods -l "release=prometheus-stack"

Visit https://github.com/prometheus-operator/kube-prometheus for instructions on how to create & configure Alertmanager and Prometheus instances using the Operator.
```

Configure Traefik monitoring

The Prometheus Operator provides the ServiceMonitor CRD for configuring how metrics are scraped. Here we define one as follows:

```yaml
# traefik-service-monitor.yaml
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: traefik
  namespace: default
  labels:
    app: traefik
    release: prometheus-stack
spec:
  jobLabel: traefik-metrics
  selector:
    matchLabels:
      app.kubernetes.io/instance: traefik
      app.kubernetes.io/name: traefik-dashboard
  namespaceSelector:
    matchNames:
      - kube-system
  endpoints:
    - port: traefik
      path: /metrics
```

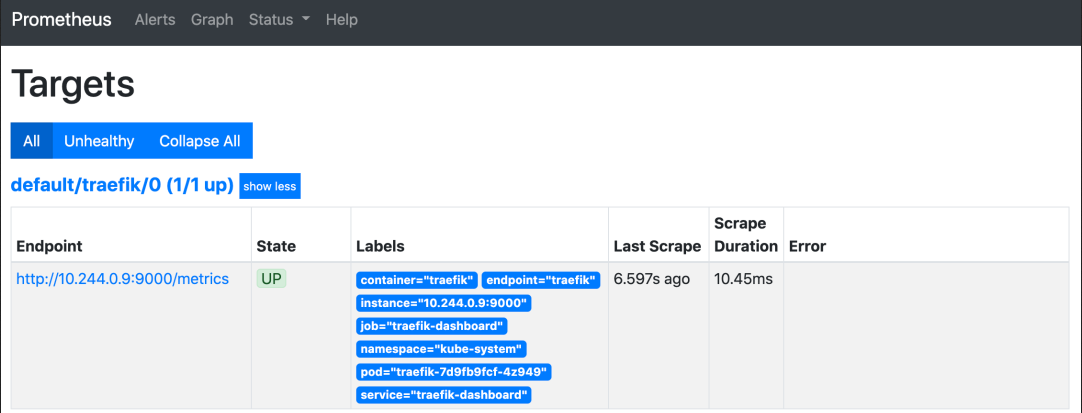

With this configuration, Prometheus scrapes the /metrics endpoint of the traefik-dashboard Service. Note that the traefik-dashboard Service lives in the kube-system namespace, while the ServiceMonitor is deployed in the default namespace, so we use namespaceSelector to match the Service's namespace. The release: prometheus-stack label also matters. By default, the Prometheus instance installed by kube-prometheus-stack only picks up ServiceMonitors that carry its release label.

```shell
$ kubectl apply -f traefik-service-monitor.yaml
```
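The way a ServiceMonitor finds its scrape targets comes down to three checks against each Service. The following Python sketch models only those checks for the ServiceMonitor above. The class and function names are hypothetical. The sketch leaves out other work the Prometheus Operator does, such as checking whether the Prometheus instance selects the ServiceMonitor at all (the release label) and resolving the Service's Endpoints.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ServiceInfo:
    """The parts of a Kubernetes Service that the matching logic looks at."""
    name: str
    namespace: str
    labels: Dict[str, str]
    port_names: List[str] = field(default_factory=list)


@dataclass
class ServiceMonitorSpec:
    """Simplified, hypothetical view of the ServiceMonitor fields used above."""
    match_labels: Dict[str, str]
    match_namespaces: List[str]
    endpoint_port: str


def yields_target(monitor: ServiceMonitorSpec, svc: ServiceInfo) -> bool:
    """True if this Service would produce a scrape target for the monitor."""
    # namespaceSelector.matchNames: the Service must live in one of these namespaces
    if svc.namespace not in monitor.match_namespaces:
        return False
    # selector.matchLabels: every key/value pair must be present on the Service
    if any(svc.labels.get(k) != v for k, v in monitor.match_labels.items()):
        return False
    # endpoints[].port refers to a *named* port on the Service
    return monitor.endpoint_port in svc.port_names


traefik_monitor = ServiceMonitorSpec(
    match_labels={
        "app.kubernetes.io/instance": "traefik",
        "app.kubernetes.io/name": "traefik-dashboard",
    },
    match_namespaces=["kube-system"],
    endpoint_port="traefik",
)

dashboard_svc = ServiceInfo(
    name="traefik-dashboard",
    namespace="kube-system",
    labels={
        "app.kubernetes.io/instance": "traefik",
        "app.kubernetes.io/name": "traefik-dashboard",
    },
    port_names=["traefik"],
)
```

The same Service created in the default namespace, or with its port named anything other than traefik, would not yield a target. That is why both namespaceSelector and the port name in the manifests have to line up.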

Next, we can verify that Prometheus has started scraping Traefik's metrics, for example on the Status > Targets page of the Prometheus UI.
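Besides the Prometheus UI, you can run the same check from a script through the Prometheus HTTP API: the `up` metric is 1 for every target that was scraped successfully. The sketch below makes three assumptions:

- Prometheus is reachable at http://localhost:9090, for example via `kubectl port-forward service/prometheus-stack-kube-prom-prometheus 9090:9090`. This Service name is an assumption based on the Alertmanager Service name used at the end of this article.
- The job label is traefik-dashboard, which is what the alert rule in the next section filters on. The Service has no traefik-metrics label, so the job name falls back to the Service name.
- The response body is a hypothetical example.

```python
import json
from typing import Dict
from urllib.parse import urlencode


def build_query_url(base_url: str, promql: str) -> str:
    """URL for an instant query against the Prometheus HTTP API (/api/v1/query)."""
    query = urlencode({"query": promql})
    return f"{base_url.rstrip('/')}/api/v1/query?{query}"


def target_health(response_body: str) -> Dict[str, bool]:
    """Map each scraped instance to True if its `up` sample is 1."""
    payload = json.loads(response_body)
    if payload.get("status") != "success":
        raise RuntimeError(f"query failed: {payload.get('error')}")
    return {
        series["metric"].get("instance", "<unknown>"): series["value"][1] == "1"
        for series in payload["data"]["result"]
    }


# Hypothetical response body; the instance address and timestamp are made up.
EXAMPLE_RESPONSE = json.dumps({
    "status": "success",
    "data": {
        "resultType": "vector",
        "result": [
            {
                "metric": {"__name__": "up", "instance": "10.244.0.12:9000", "job": "traefik-dashboard"},
                "value": [1617600000.0, "1"],
            },
        ],
    },
})
```

To run this against a live cluster, fetch `build_query_url("http://localhost:9090", 'up{job="traefik-dashboard"}')` with `urllib.request.urlopen` and pass the decoded body to `target_health`. An empty result means no Traefik target has been discovered yet.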

Configure Traefik alerts

Next, we can add an alerting rule, which fires an alert when its condition is met. The Prometheus Operator provides a CRD named PrometheusRule for configuring alerting rules:

```yaml
# traefik-rules.yaml
apiVersion: monitoring.coreos.com/v1
kind: PrometheusRule
metadata:
  annotations:
    meta.helm.sh/release-name: prometheus-stack
    meta.helm.sh/release-namespace: default
  labels:
    app: kube-prometheus-stack
    release: prometheus-stack
  name: traefik-alert-rules
  namespace: default
spec:
  groups:
    - name: Traefik
      rules:
        - alert: TooManyRequest
          expr: avg(traefik_entrypoint_open_connections{job="traefik-dashboard",namespace="kube-system"}) > 5
          for: 1m
          labels:
            severity: critical
```

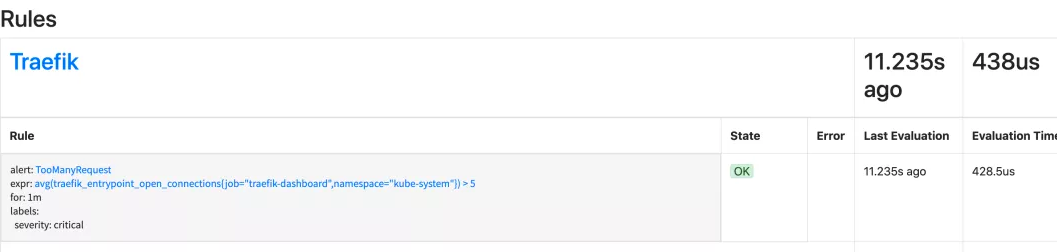

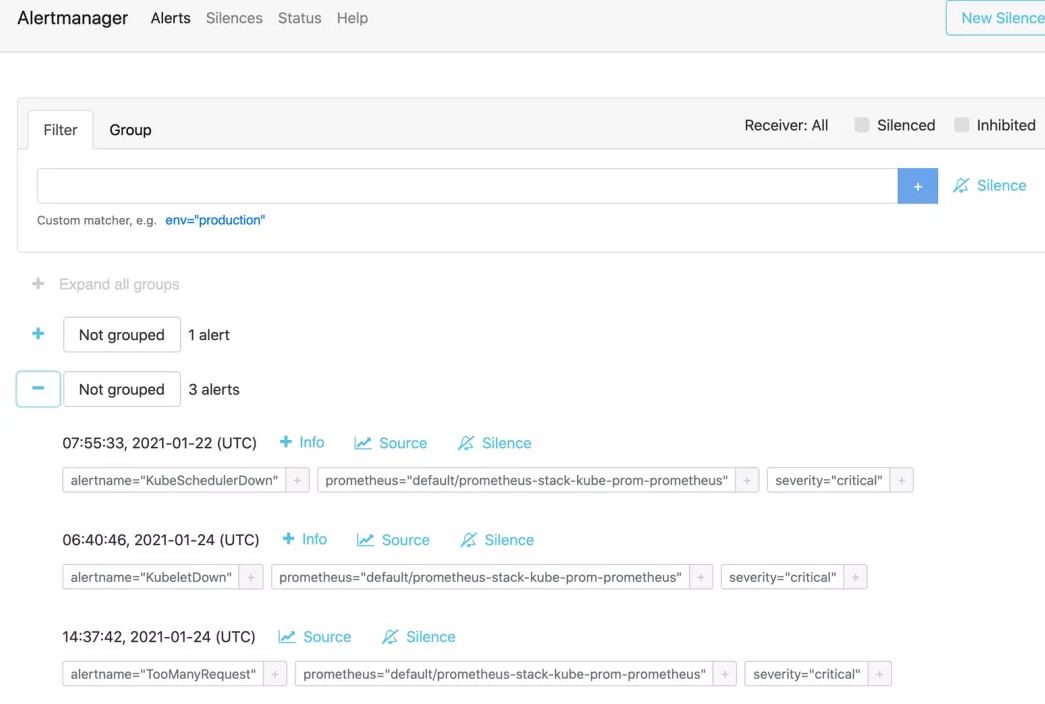

Here we define a rule that fires a TooManyRequest alert when the average number of open connections (averaged across all series of traefik_entrypoint_open_connections) stays above 5 for 1 minute. Create the object directly:

```shell
$ kubectl apply -f traefik-rules.yaml
```

Once it is created, you should see the corresponding alerting rule on the Status > Rules page of the Prometheus UI.
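Here is how the `expr` and `for` fields interact:

- At each evaluation, Prometheus averages all traefik_entrypoint_open_connections series.
- The first time the average exceeds 5, the alert becomes pending.
- The alert fires only once the condition has held for the full 1m.
- If the condition breaks in between, the timer starts over.

The following Python sketch is a simplified model of that behavior, not Prometheus code. It assumes a hypothetical 30-second evaluation interval.

```python
from statistics import mean
from typing import List, Optional


def alert_states(ticks: List[List[float]], interval_s: int,
                 threshold: float, for_s: int) -> List[str]:
    """Simplified model of `avg(metric) > threshold` combined with `for: for_s`.

    ticks[i] holds the value of every open-connections series at evaluation i.
    """
    states: List[str] = []
    active_since: Optional[int] = None
    for i, values in enumerate(ticks):
        now = i * interval_s
        # avg() over an empty vector returns nothing, so the rule cannot match.
        if values and mean(values) > threshold:
            if active_since is None:
                active_since = now  # condition just became true: alert is pending
            states.append("firing" if now - active_since >= for_s else "pending")
        else:
            active_since = None  # condition broke: the `for` timer starts over
            states.append("inactive")
    return states


# Averages per evaluation: 1.5, 7, 8, 7, 1 (two hypothetical series).
example = alert_states([[1, 2], [6, 8], [7, 9], [10, 4], [1, 1]],
                       interval_s=30, threshold=5, for_s=60)
```

In this example, the alert goes pending at 30 s and fires at 90 s, once the average has been above 5 for 60 s. It returns to inactive as soon as the average drops.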

Grafana configuration

Grafana was already deployed as part of the kube-prometheus-stack Helm chart. Next, we configure a dashboard for Traefik's metrics. As before, we first use port forwarding to access Grafana:

```shell
$ kubectl port-forward service/prometheus-stack-grafana 10080:80
```

Then open the Grafana UI at http://localhost:10080, which prompts for a username and password. The default username is admin and the default password is prom-operator. The password can also be read from the Kubernetes Secret named prometheus-stack-grafana.

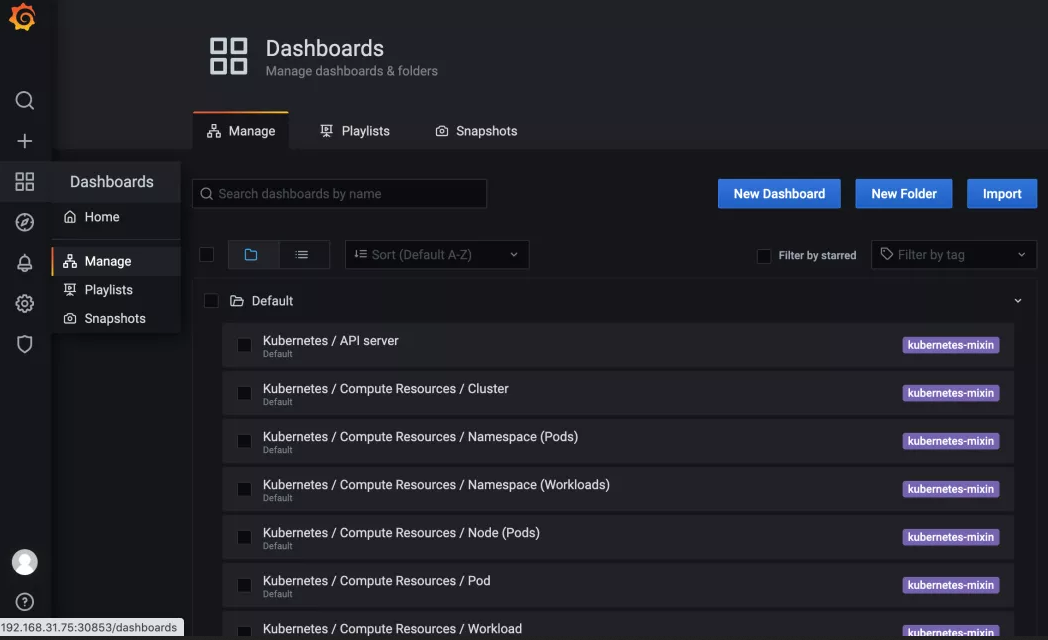

We can build a custom dashboard for Traefik, or import a suitable one from the Grafana community. To add a dashboard, click the square icon in the left navigation bar and go to Dashboards > Manage.

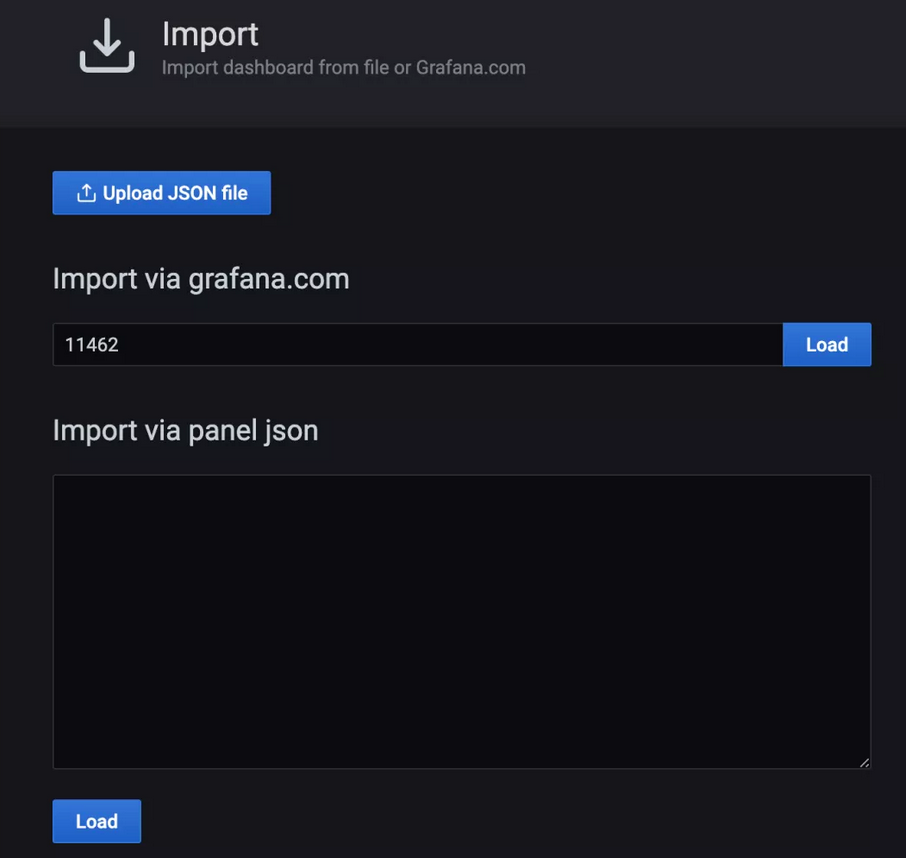

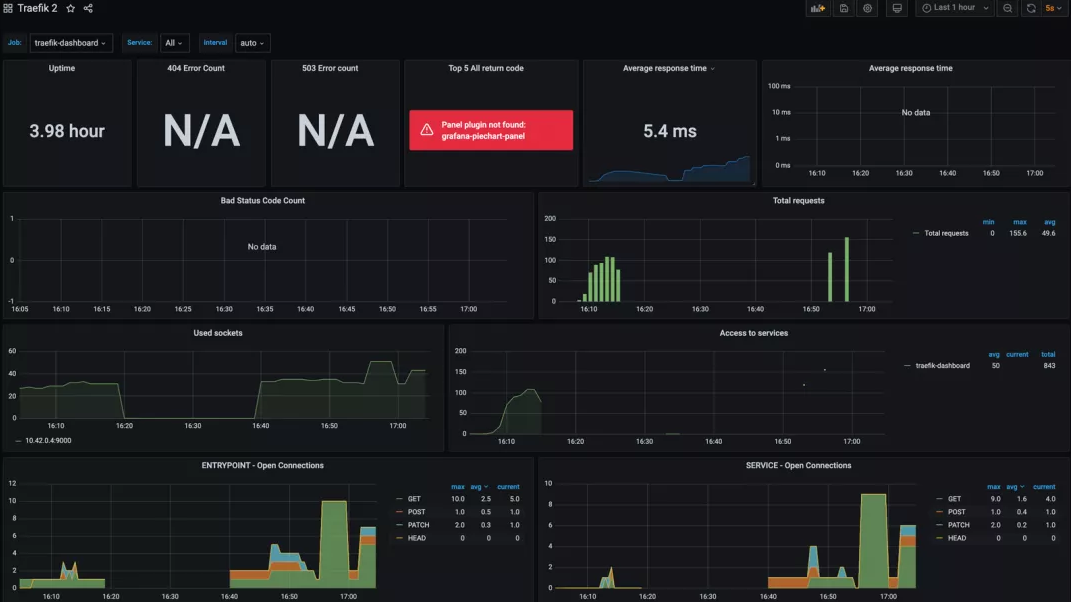

Click the Import button in the upper right corner and enter 11462 as the dashboard ID. This is the Traefik 2 dashboard contributed by the user timoreymann.

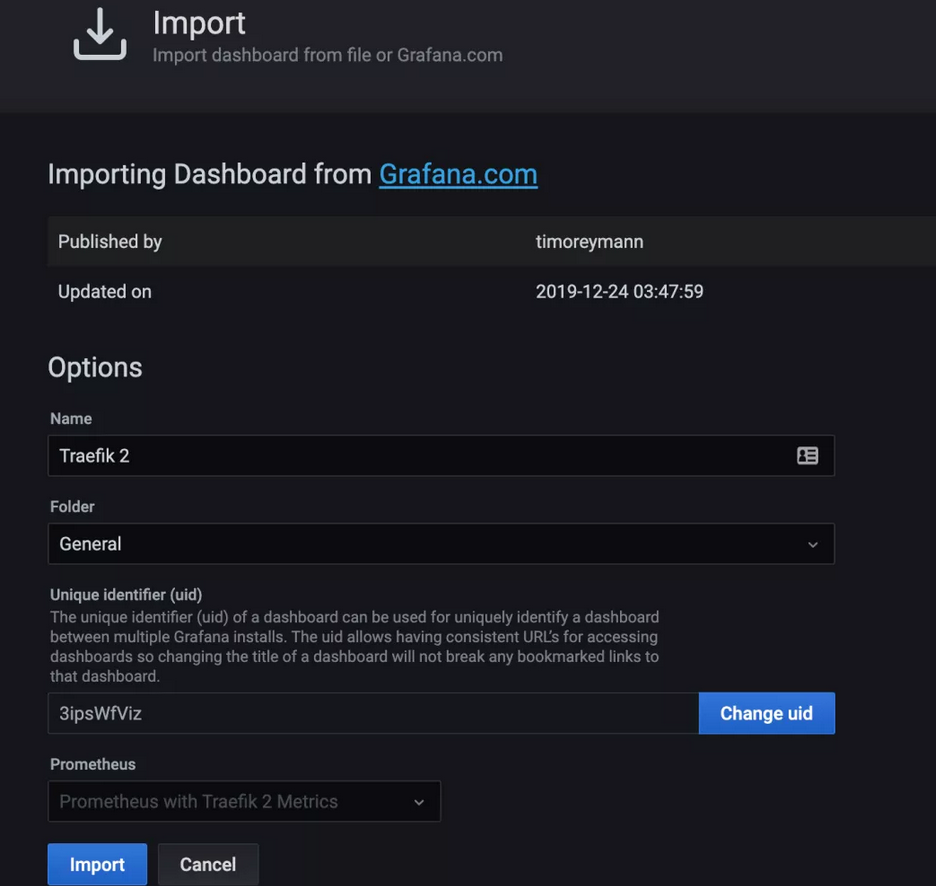

After you click Load, you should see the details of the dashboard being imported.

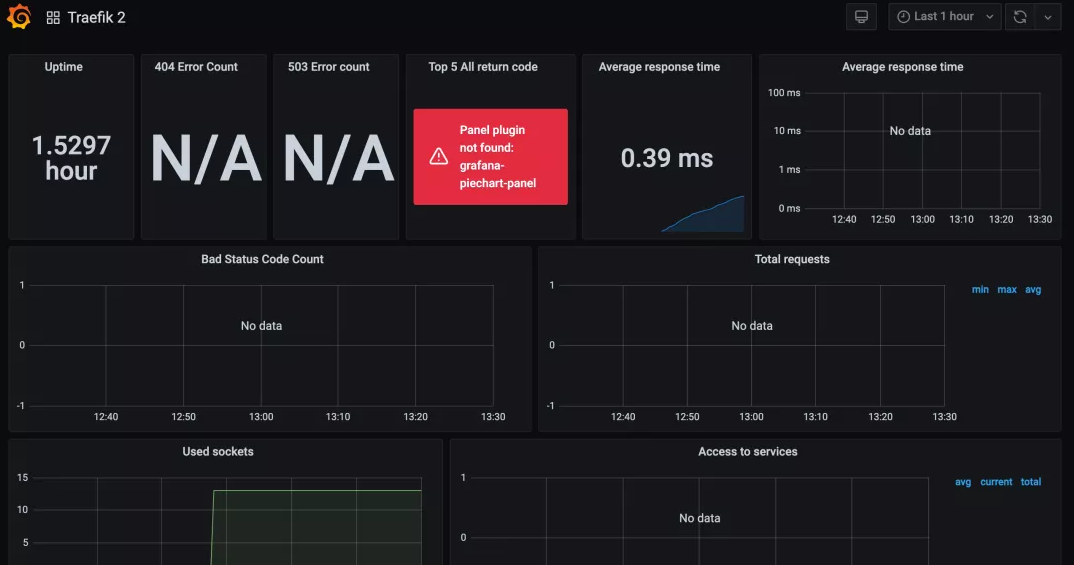

In the drop-down menu at the bottom, select the Prometheus data source, then click Import to create the dashboard.

Test

Traefik is now running, Prometheus is collecting its metrics, and Grafana is visualizing them. Next, we need an application to test with. Here we deploy the httpbin service, which provides many endpoints for simulating different types of user traffic. The corresponding manifest is as follows:

```yaml
# httpbin.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: httpbin
  labels:
    app: httpbin
spec:
  replicas: 1
  selector:
    matchLabels:
      app: httpbin
  template:
    metadata:
      labels:
        app: httpbin
    spec:
      containers:
        - image: kennethreitz/httpbin
          name: httpbin
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: httpbin
spec:
  ports:
    - name: http
      port: 8000
      targetPort: 80
  selector:
    app: httpbin
---
apiVersion: traefik.containo.us/v1alpha1
kind: IngressRoute
metadata:
  name: httpbin
spec:
  entryPoints:
    - web
  routes:
    - match: Host(`httpbin.local`)
      kind: Rule
      services:
        - name: httpbin
          port: 8000
```

Create these resources:

```shell
$ kubectl apply -f httpbin.yaml
deployment.apps/httpbin created
service/httpbin created
ingressroute.traefik.containo.us/httpbin created
```

The httpbin IngressRoute matches requests for the host httpbin.local and forwards them to the httpbin Service:

```shell
$ curl -I http://192.168.31.75 -H "host:httpbin.local"
HTTP/1.1 200 OK
Access-Control-Allow-Credentials: true
Access-Control-Allow-Origin: *
Content-Length: 9593
Content-Type: text/html; charset=utf-8
Date: Mon, 05 Apr 2021 05:43:16 GMT
Server: gunicorn/19.9.0
```

The Traefik deployed here uses hostPort mode and is pinned to the master node, so 192.168.31.75 is the IP address of the master node.
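The `-H "host:httpbin.local"` option is what makes this work. Traefik matches the ``Host(`httpbin.local`)`` rule against the request's Host header, so no DNS entry for httpbin.local is needed. Here is a minimal Python equivalent of the curl call using only the standard library. `TRAEFIK_NODE` is an assumed constant holding this article's master node address, so replace it with yours.

```python
import urllib.request

# Master node address from this article (assumption: replace with your own).
TRAEFIK_NODE = "http://192.168.31.75"


def build_request(path: str = "/", host: str = "httpbin.local",
                  method: str = "HEAD") -> urllib.request.Request:
    """Build a request that Traefik will route by its Host header."""
    # Setting Host explicitly is the equivalent of curl's -H "host:httpbin.local".
    return urllib.request.Request(TRAEFIK_NODE + path, headers={"Host": host}, method=method)


def fetch_status(req: urllib.request.Request, timeout: float = 5.0) -> int:
    """Send the request and return the HTTP status code (needs network access)."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.status
```

If the route is working, `fetch_status(build_request())` should return 200, just like the `HTTP/1.1 200 OK` in the curl output.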

Next, we use ab (ApacheBench) to send requests to the httpbin service and simulate some traffic. These requests generate the corresponding metrics. Run the following commands:

```shell
$ ab -c 5 -n 10000 -m PATCH -H "host:httpbin.local" -H "accept: application/json" http://192.168.31.75/patch
$ ab -c 5 -n 10000 -m GET -H "host:httpbin.local" -H "accept: application/json" http://192.168.31.75/get
$ ab -c 5 -n 10000 -m POST -H "host:httpbin.local" -H "accept: application/json" http://192.168.31.75/post
```
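Each ab command sends 10,000 requests (`-n`) with 5 in flight at a time (`-c`). If ab isn't available, you can sketch a rough Python stand-in with a thread pool. It does not replace ab's timing statistics: it only generates traffic and counts status codes. `make_sender` and `run_load` are hypothetical helpers.

```python
import urllib.error
import urllib.request
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def make_sender(url: str, host: str, method: str) -> Callable[[], int]:
    """Return a function that sends one request and returns its status code."""
    def send() -> int:
        req = urllib.request.Request(
            url, method=method,
            headers={"Host": host, "Accept": "application/json"},
        )
        try:
            with urllib.request.urlopen(req, timeout=5) as resp:
                return resp.status
        except urllib.error.HTTPError as err:
            return err.code  # non-2xx responses still count as completed requests
    return send


def run_load(send: Callable[[], int], total: int, concurrency: int) -> Counter:
    """Call send() `total` times on `concurrency` worker threads (cf. ab -n / -c)."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        return Counter(pool.map(lambda _: send(), range(total)))
```

For example, `run_load(make_sender("http://192.168.31.75/get", "httpbin.local", "GET"), total=10000, concurrency=5)` roughly corresponds to the second ab command. Passing the sender in as a function keeps the concurrency logic separate from the HTTP details.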

After a while, check the Grafana dashboard again. You should see more data, including uptime, average response time, total number of requests, and request counts broken down by HTTP method and by service.
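Traefik reports request latency as a Prometheus histogram, for example traefik_entrypoint_request_duration_seconds with its _sum and _count series. An average response time is typically derived by dividing the increase of _sum by the increase of _count over a window. That is what a PromQL expression such as `rate(traefik_entrypoint_request_duration_seconds_sum[5m]) / rate(traefik_entrypoint_request_duration_seconds_count[5m])` computes. We have not checked which query dashboard 11462 actually uses. Here is a Python sketch of the arithmetic between two scrapes, including a simplified form of the counter-reset handling that rate() also performs.

```python
from typing import Optional


def counter_increase(before: float, after: float) -> float:
    """Increase of a Prometheus counter between two scrapes.

    Counters only go up. A drop means Traefik restarted and the counter began
    again from zero, so the new value itself is the increase.
    """
    return after - before if after >= before else after


def avg_response_time(sum_before: float, sum_after: float,
                      count_before: float, count_after: float) -> Optional[float]:
    """Average request duration in seconds between two scrapes, or None if idle."""
    requests = counter_increase(count_before, count_after)
    if requests == 0:
        return None  # no requests in the window: the average is undefined
    return counter_increase(sum_before, sum_after) / requests
```

Suppose _sum went from 10.0 to 16.0 seconds while _count went from 1000 to 1300 requests. The average over that window is then 6 / 300 = 0.02 s, or 20 ms.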

Finally, while we generate test traffic, Prometheus may fire an alert. The TooManyRequest alert we created earlier will then appear in the Alertmanager UI, and you can configure receivers to deliver alert notifications as needed. To open the Alertmanager UI, forward its port:

```shell
$ kubectl port-forward service/prometheus-stack-kube-prom-alertmanager 9093:9093
Forwarding from 127.0.0.1:9093 -> 9093
```
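Alertmanager also exposes the same information over its HTTP API. The following Python function picks out active TooManyRequest alerts. It assumes the port-forward above and Alertmanager's v2 API, where GET /api/v2/alerts returns a JSON list of alerts with labels and status.state. The response body in the example is hypothetical and trimmed.

```python
import json
from typing import List


def active_alerts(body: str, alertname: str) -> List[dict]:
    """Filter a GET /api/v2/alerts response down to active alerts with the given name."""
    return [
        alert
        for alert in json.loads(body)
        if alert["labels"].get("alertname") == alertname
        and alert["status"]["state"] == "active"
    ]


# Hypothetical, trimmed response from http://localhost:9093/api/v2/alerts.
EXAMPLE_ALERTS = json.dumps([
    {
        "labels": {"alertname": "TooManyRequest", "severity": "critical"},
        "status": {"state": "active", "silencedBy": [], "inhibitedBy": []},
        "startsAt": "2021-04-05T05:50:00.000Z",
    },
    {
        "labels": {"alertname": "Watchdog", "severity": "none"},
        "status": {"state": "active", "silencedBy": [], "inhibitedBy": []},
        "startsAt": "2021-04-05T04:25:00.000Z",
    },
])
```

Against a live Alertmanager, you would pass `urllib.request.urlopen("http://localhost:9093/api/v2/alerts").read().decode()` to `active_alerts` instead of the example body.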

Summary

In this article, we have seen that connecting Traefik to Prometheus and Grafana to visualize Traefik's metrics is straightforward. Once you are familiar with these tools, you can also build dashboards tailored to your own needs that surface the key data of your environment.