Transformer Course: Business Dialogue Robot Rasa 3.x Run Command Learning

- rasa data migrate

- rasa data validate

- rasa export

- rasa evaluate markers

- rasa x

- Rasa official website

rasa data migrate

The domain is the only data file whose format changed between 2.0 and 3.0. You can automatically migrate a 2.0 domain to the 3.0 format.

You can start the migration by running the following command:

rasa data migrate

You can specify an input file or directory and an output file or directory using the following parameters:

rasa data migrate -d DOMAIN --out OUT_PATH

If no arguments are specified, the default domain path (domain.yml) is used for both the input and output files.

This command also backs up your 2.0 domain file(s) to a separate original_domain.yml file, or to a directory named original_domain.

Note that slots in the migrated domain will contain mapping conditions if they are part of a form's required_slots.

An exception is raised and the migration is terminated if an invalid domain file is provided, if the domain is already in 3.0 format, if slots or forms are missing from the original files, or if the slots or forms sections are spread across multiple domain files. This avoids duplicating migrated sections in your domain files. Make sure all slot and form definitions are grouped into a single file.
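The single-file requirement can be checked before running the migration. The following is a minimal sketch, assuming each domain file has already been parsed into a Python dict (for example with a YAML library). The helper name `find_split_sections` and the sample file names are hypothetical and are not part of Rasa.

```python
from collections import defaultdict


def find_split_sections(domains, sections=("slots", "forms")):
    """Return {section: [files]} for sections defined in more than one file.

    `domains` maps a file name to its parsed content (a dict).
    """
    seen = defaultdict(list)
    for filename, content in sorted(domains.items()):
        for section in sections:
            # Count a section only when it is present and non-empty.
            if content.get(section):
                seen[section].append(filename)
    return {s: files for s, files in seen.items() if len(files) > 1}


# Hypothetical parsed domain files (illustration only).
example_domains = {
    "domain/slots.yml": {"slots": {"cuisine": {"type": "text"}}},
    "domain/forms.yml": {
        "forms": {"restaurant_form": {"required_slots": {"cuisine": []}}},
        "slots": {"num_people": {"type": "float"}},
    },
}

split = find_split_sections(example_domains)
print(split)
```

Any section reported here would need to be consolidated into one file before `rasa data migrate` is run.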

You can learn more about this command by running the following command:

(installingrasa) E:\starspace\my_rasa>rasa data migrate --help

usage: rasa data migrate [-h] [-v] [-vv] [--quiet] [-d DOMAIN] [--out OUT]

optional arguments:

-h, --help show this help message and exit

-d DOMAIN, --domain DOMAIN

Domain specification. This can be a single YAML file, or a directory that contains several files with domain specifications in it. The content of these files will be read and merged together. (default: domain.yml)

--out OUT Path (for `yaml`) where to save migrated domain in Rasa 3.0 format. (default: domain.yml)

Python Logging Options:

-v, --verbose Be verbose. Sets logging level to INFO. (default: None)

-vv, --debug Print lots of debugging statements. Sets logging level to DEBUG. (default: None)

--quiet Be quiet! Sets logging level to WARNING. (default: None)

rasa data validate

You can check the domain, NLU data, or story data for errors and inconsistencies. To validate the data, run the following command:

rasa data validate

The validator searches for errors in the data, for example two intents that share some identical training examples. The validator also checks whether you have any stories in which different assistant actions follow from the same dialogue history. Conflicts between stories can prevent the model from learning the correct dialogue pattern.
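To make the first check concrete, here is a minimal sketch of detecting training examples that appear under more than one intent. It is an illustration only, not Rasa's implementation: the function name, the sample data, and the case and whitespace normalisation are all assumptions.

```python
from collections import defaultdict


def find_shared_examples(nlu_data):
    """Return {example: sorted intents} for examples listed under 2+ intents."""
    intents_by_example = defaultdict(set)
    for intent, examples in nlu_data.items():
        for example in examples:
            # Normalise case and whitespace so trivial variations still match
            # (an assumption; the real validator may compare differently).
            intents_by_example[" ".join(example.lower().split())].add(intent)
    return {
        example: sorted(intents)
        for example, intents in intents_by_example.items()
        if len(intents) > 1
    }


# Hypothetical training data: intent name -> list of example utterances.
training_data = {
    "greet": ["hello", "hi there", "good morning"],
    "affirm": ["yes", "sure", "Hi there"],
}

shared = find_shared_examples(training_data)
print(shared)
```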

If you pass a max_history value to one or more policies in your config.yml file, provide the smallest of those values to the validator command using the --max-history MAX_HISTORY flag.
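Picking the value to pass can be sketched as follows, assuming config.yml has already been parsed into a dict. The helper name `smallest_max_history` and the sample policy settings are hypothetical.

```python
def smallest_max_history(config):
    """Return the smallest max_history set on any policy, or None if unset."""
    values = [
        policy["max_history"]
        for policy in config.get("policies") or []
        if policy.get("max_history") is not None
    ]
    return min(values) if values else None


# Hypothetical parsed config.yml content (illustration only).
config = {
    "policies": [
        {"name": "MemoizationPolicy", "max_history": 3},
        {"name": "TEDPolicy", "max_history": 5, "epochs": 100},
        {"name": "RulePolicy"},
    ]
}

value = smallest_max_history(config)
command = ["rasa", "data", "validate"]
if value is not None:
    command += ["--max-history", str(value)]
print(" ".join(command))
```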

You can also verify only the story structure by running the following command:

rasa data validate stories

Running rasa data validate does not test whether your rules are consistent with your stories. However, during training, RulePolicy checks for conflicts between rules and stories. Any such conflict will abort training.

In addition, if you use end-to-end stories, this may not capture all conflicts. Specifically, if two user inputs result in different tokens but exactly the same featurization, conflicting actions may exist after these inputs, but the tool will not report them.

To make validation fail even for minor issues such as unused intents or responses, use the --fail-on-warnings flag.
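In an automated check, the exit code of the command is what matters. The sketch below assembles the command from flags that appear in the help output and returns the exit code. The function names are hypothetical, and nothing is executed unless `run_validation` is called on a machine where rasa is on the PATH.

```python
import shutil
import subprocess


def build_validate_command(fail_on_warnings=True, domain="domain.yml", data=("data",)):
    """Assemble the argv for `rasa data validate`."""
    command = ["rasa", "data", "validate", "--domain", domain, "--data", *data]
    if fail_on_warnings:
        # With this flag, warnings as well as errors give a non-zero exit code.
        command.append("--fail-on-warnings")
    return command


def run_validation(command):
    """Run the command; return its exit code, or None if rasa is not on PATH."""
    if shutil.which(command[0]) is None:
        return None
    return subprocess.run(command).returncode


cmd = build_validate_command()
print(" ".join(cmd))
```

A calling script could treat any non-zero return value from `run_validation(cmd)` as a failed check.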

Check your story names: the rasa data validate stories command assumes that all story names are unique!

You can use rasa data validate with additional arguments, for example to specify the location of your data and domain files:

usage: rasa data validate [-h] [-v] [-vv] [--quiet]

[--max-history MAX_HISTORY] [-c CONFIG]

[--fail-on-warnings] [-d DOMAIN]

[--data DATA [DATA ...]]

{stories} ...

positional arguments:

{stories}

stories Checks for inconsistencies in the story files.

optional arguments:

-h, --help show this help message and exit

--max-history MAX_HISTORY

Number of turns taken into account for story structure

validation. (default: None)

-c CONFIG, --config CONFIG

The policy and NLU pipeline configuration of your bot.

(default: config.yml)

--fail-on-warnings Fail validation on warnings and errors. If omitted

only errors will result in a non zero exit code.

(default: False)

-d DOMAIN, --domain DOMAIN

Domain specification. This can be a single YAML file,

or a directory that contains several files with domain

specifications in it. The content of these files will

be read and merged together. (default: domain.yml)

--data DATA [DATA ...]

Paths to the files or directories containing Rasa

data. (default: data)

Python Logging Options:

-v, --verbose Be verbose. Sets logging level to INFO. (default:

None)

-vv, --debug Print lots of debugging statements. Sets logging level

to DEBUG. (default: None)

--quiet Be quiet! Sets logging level to WARNING. (default:

None)

Run an example

(installingrasa) E:\starspace\my_rasa>rasa data validate

The configuration for policies and pipeline was chosen automatically. It was written into the config file at 'config.yml'.

2021-12-30 19:49:01 INFO rasa.validator - Validating intents...

2021-12-30 19:49:01 INFO rasa.validator - Validating uniqueness of intents and stories...

2021-12-30 19:49:01 INFO rasa.validator - Validating utterances...

2021-12-30 19:49:01 INFO rasa.validator - Story structure validation...

Processed story blocks: 100%|██████████| 3/3 [00:00<00:00, 1504.77it/s, # trackers=1]

2021-12-30 19:49:01 INFO rasa.core.training.story_conflict - Considering all preceding turns for conflict analysis.

2021-12-30 19:49:01 INFO rasa.validator - No story structure conflicts found.

rasa export

To export events from a tracker store using an event broker, run:

rasa export

You can specify the location of the endpoints file, the minimum and maximum timestamps of the events that should be published, and the conversation IDs that should be published:

usage: rasa export [-h] [-v] [-vv] [--quiet] [--endpoints ENDPOINTS]

[--minimum-timestamp MINIMUM_TIMESTAMP]

[--maximum-timestamp MAXIMUM_TIMESTAMP]

[--conversation-ids CONVERSATION_IDS]

optional arguments:

-h, --help show this help message and exit

--endpoints ENDPOINTS

Endpoint configuration file specifying the tracker

store and event broker. (default: endpoints.yml)

--minimum-timestamp MINIMUM_TIMESTAMP

Minimum timestamp of events to be exported. The

constraint is applied in a 'greater than or equal'

comparison. (default: None)

--maximum-timestamp MAXIMUM_TIMESTAMP

Maximum timestamp of events to be exported. The

constraint is applied in a 'less than' comparison.

(default: None)

--conversation-ids CONVERSATION_IDS

Comma-separated list of conversation IDs to migrate.

If unset, all available conversation IDs will be

exported. (default: None)

Python Logging Options:

-v, --verbose Be verbose. Sets logging level to INFO. (default:

None)

-vv, --debug Print lots of debugging statements. Sets logging level

to DEBUG. (default: None)

--quiet Be quiet! Sets logging level to WARNING. (default:

None)
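The timestamp flags take numeric values. The following minimal sketch builds an export command for a date range, assuming the timestamps are Unix epoch seconds (an assumption; the help text does not state the unit). The function name, the dates and the conversation IDs are hypothetical.

```python
from datetime import datetime, timezone


def export_command(start, end, conversation_ids=(), endpoints="endpoints.yml"):
    """Build a `rasa export` argv for events with start <= timestamp < end."""
    command = [
        "rasa", "export",
        "--endpoints", endpoints,
        # Assumed unit: Unix epoch seconds.
        "--minimum-timestamp", str(start.timestamp()),
        "--maximum-timestamp", str(end.timestamp()),
    ]
    if conversation_ids:
        # The help text asks for a comma-separated list.
        command += ["--conversation-ids", ",".join(conversation_ids)]
    return command


start = datetime(2021, 12, 1, tzinfo=timezone.utc)
end = datetime(2022, 1, 1, tzinfo=timezone.utc)
cmd = export_command(start, end, conversation_ids=["user-1", "user-2"])
print(" ".join(cmd))
```

Because the minimum bound is inclusive and the maximum bound is exclusive, consecutive ranges such as month-by-month exports do not overlap.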

rasa evaluate markers

This feature is currently experimental and may be changed or removed in the future. Share your feedback in the forum to help us make it production-ready.

The following command applies the markers defined in your marker configuration file to existing conversations stored in your tracker store, and produces .csv files containing the extracted markers and summary statistics:

rasa evaluate markers all extracted_markers.csv

Configure the marker extraction process with the following arguments:

usage: rasa evaluate markers [-h] [-v] [-vv] [--quiet] [--config CONFIG] [--no-stats | --stats-file-prefix [STATS_FILE_PREFIX]] [--endpoints ENDPOINTS] [-d DOMAIN] output_filename {first_n,sample,all} ...

positional arguments:

output_filename The filename to write the extracted markers to (CSV format).

{first_n,sample,all}

first_n Select trackers sequentially until N are taken.

sample Select trackers by sampling N.

all Select all trackers.

optional arguments:

-h, --help show this help message and exit

--config CONFIG The config file(s) containing marker definitions. This can be a single YAML file, or a directory that contains several files with marker definitions in it. The content of these files will be read and merged together. (default: markers.yml)

--no-stats Do not compute summary statistics. (default: True)

--stats-file-prefix [STATS_FILE_PREFIX]

The common file prefix of the files where we write out the compute statistics. More precisely, the file prefix must consist of a common path plus a common file prefix, to which suffixes `-overall.csv` and `-per-session.csv` will be added automatically. (default: stats)

--endpoints ENDPOINTS

Configuration file for the tracker store as a yml file. (default: endpoints.yml)

-d DOMAIN, --domain DOMAIN

Domain specification. This can be a single YAML file, or a directory that contains several files with domain specifications in it. The content of these files will be read and merged together. (default: domain.yml)

Python Logging Options:

-v, --verbose Be verbose. Sets logging level to INFO. (default: None)

-vv, --debug Print lots of debugging statements. Sets logging level to DEBUG. (default: None)

--quiet Be quiet! Sets logging level to WARNING. (default: None)
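Based on the --stats-file-prefix description in the help text, the names of the statistics files can be predicted from the prefix. This is a small sketch under that reading of the help text; the function name and the `results/run1` prefix are hypothetical.

```python
def stats_file_names(prefix="stats"):
    """Return the statistics file names derived from a --stats-file-prefix value."""
    # The help text says these two suffixes are appended automatically.
    return [prefix + suffix for suffix in ("-overall.csv", "-per-session.csv")]


default_names = stats_file_names()
custom_names = stats_file_names("results/run1")
print(default_names)
print(custom_names)
```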

rasa x

Rasa X is a tool for practicing Conversation-Driven Development. More information is available in the Rasa X documentation. You can start Rasa X in local mode by executing the following command:

rasa x

To start Rasa X, you need to have Rasa X local mode installed and be located in a Rasa project directory.

The following parameters are available for rasa x:

usage: rasa x [-h] [-v] [-vv] [--quiet] [-m MODEL] [--data DATA [DATA ...]]

[-c CONFIG] [-d DOMAIN] [--no-prompt] [--production]

[--rasa-x-port RASA_X_PORT] [--config-endpoint CONFIG_ENDPOINT]

[--log-file LOG_FILE] [--use-syslog]

[--syslog-address SYSLOG_ADDRESS] [--syslog-port SYSLOG_PORT]

[--syslog-protocol SYSLOG_PROTOCOL] [--endpoints ENDPOINTS]

[-i INTERFACE] [-p PORT] [-t AUTH_TOKEN]

[--cors [CORS [CORS ...]]] [--enable-api]

[--response-timeout RESPONSE_TIMEOUT]

[--remote-storage REMOTE_STORAGE]

[--ssl-certificate SSL_CERTIFICATE] [--ssl-keyfile SSL_KEYFILE]

[--ssl-ca-file SSL_CA_FILE] [--ssl-password SSL_PASSWORD]

[--credentials CREDENTIALS] [--connector CONNECTOR]

[--jwt-secret JWT_SECRET] [--jwt-method JWT_METHOD]

optional arguments:

-h, --help show this help message and exit

-m MODEL, --model MODEL

Path to a trained Rasa model. If a directory is

specified, it will use the latest model in this

directory. (default: models)

--data DATA [DATA ...]

Paths to the files or directories containing stories

and Rasa NLU data. (default: data)

-c CONFIG, --config CONFIG

The policy and NLU pipeline configuration of your bot.

(default: config.yml)

-d DOMAIN, --domain DOMAIN

Domain specification. This can be a single YAML file,

or a directory that contains several files with domain

specifications in it. The content of these files will

be read and merged together. (default: domain.yml)

--no-prompt Automatic yes or default options to prompts and

oppressed warnings. (default: False)

--production Run Rasa X in a production environment. (default:

False)

--rasa-x-port RASA_X_PORT

Port to run the Rasa X server at. (default: 5002)

--config-endpoint CONFIG_ENDPOINT

Rasa X endpoint URL from which to pull the runtime

config. This URL typically contains the Rasa X token

for authentication. Example:

https://example.com/api/config?token=my_rasa_x_token

(default: None)

--log-file LOG_FILE Store logs in specified file. (default: None)

--use-syslog Add syslog as a log handler (default: False)

--syslog-address SYSLOG_ADDRESS

Address of the syslog server. --use-sylog flag is

required (default: localhost)

--syslog-port SYSLOG_PORT

Port of the syslog server. --use-sylog flag is

required (default: 514)

--syslog-protocol SYSLOG_PROTOCOL

Protocol used with the syslog server. Can be UDP

(default) or TCP (default: UDP)

--endpoints ENDPOINTS

Configuration file for the model server and the

connectors as a yml file. (default: endpoints.yml)

Python Logging Options:

-v, --verbose Be verbose. Sets logging level to INFO. (default:

None)

-vv, --debug Print lots of debugging statements. Sets logging level

to DEBUG. (default: None)

--quiet Be quiet! Sets logging level to WARNING. (default:

None)

Server Settings:

-i INTERFACE, --interface INTERFACE

Network interface to run the server on. (default:

0.0.0.0)

-p PORT, --port PORT Port to run the server at. (default: 5005)

-t AUTH_TOKEN, --auth-token AUTH_TOKEN

Enable token based authentication. Requests need to

provide the token to be accepted. (default: None)

--cors [CORS [CORS ...]]

Enable CORS for the passed origin. Use * to whitelist

all origins. (default: None)

--enable-api Start the web server API in addition to the input

channel. (default: False)

--response-timeout RESPONSE_TIMEOUT

Maximum time a response can take to process (sec).

(default: 3600)

--remote-storage REMOTE_STORAGE

Set the remote location where your Rasa model is

stored, e.g. on AWS. (default: None)

--ssl-certificate SSL_CERTIFICATE

Set the SSL Certificate to create a TLS secured

server. (default: None)

--ssl-keyfile SSL_KEYFILE

Set the SSL Keyfile to create a TLS secured server.

(default: None)

--ssl-ca-file SSL_CA_FILE

If your SSL certificate needs to be verified, you can

specify the CA file using this parameter. (default:

None)

--ssl-password SSL_PASSWORD

If your ssl-keyfile is protected by a password, you

can specify it using this paramer. (default: None)

Channels:

--credentials CREDENTIALS

Authentication credentials for the connector as a yml

file. (default: None)

--connector CONNECTOR

Service to connect to. (default: None)

JWT Authentication:

--jwt-secret JWT_SECRET

Public key for asymmetric JWT methods or shared

secretfor symmetric methods. Please also make sure to

use --jwt-method to select the method of the

signature, otherwise this argument will be

ignored.Note that this key is meant for securing the

HTTP API. (default: None)

--jwt-method JWT_METHOD

Method used for the signature of the JWT

authentication payload. (default: HS256)

The rasa x command is not supported in Rasa 3.x:

(installingrasa) E:\starspace\my_rasa>rasa x

e:\anaconda3\envs\installingrasa\lib\site-packages\rasa\cli\x.py:356: UserWarning: Your version of rasa '3.0.4' is currently not supported by Rasa X. Running `rasa x` CLI command with rasa version higher or equal to 3.0.0 will result in errors.

rasa.shared.utils.io.raise_warning(

MissingDependencyException: Rasa X does not seem to be installed, but it is needed for this CLI command. You can find more information on how to install Rasa X in local mode in the documentation: https://rasa.com/docs/rasa-x/installation-and-setup/install/local-mode
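The warning states the rule directly: versions at or above 3.0.0 are not supported by the rasa x command. A script could guard against this before invoking it. This is a minimal sketch with a hypothetical function name; the version strings are examples only.

```python
def rasa_x_cli_supported(rasa_version):
    """Return False for Rasa versions >= 3.0.0, which the warning flags."""
    major = int(rasa_version.split(".")[0])
    return major < 3


# Example version strings (illustration only).
for version in ("2.8.15", "3.0.4"):
    print(version, rasa_x_cli_supported(version))
```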

Rasa website link:

https://rasa.com/docs/rasa/command-line-interface

Course Name: Business Dialogue Robot Rasa Core Algorithms: Insider Explanation of the DIET and TED Papers

Course content:

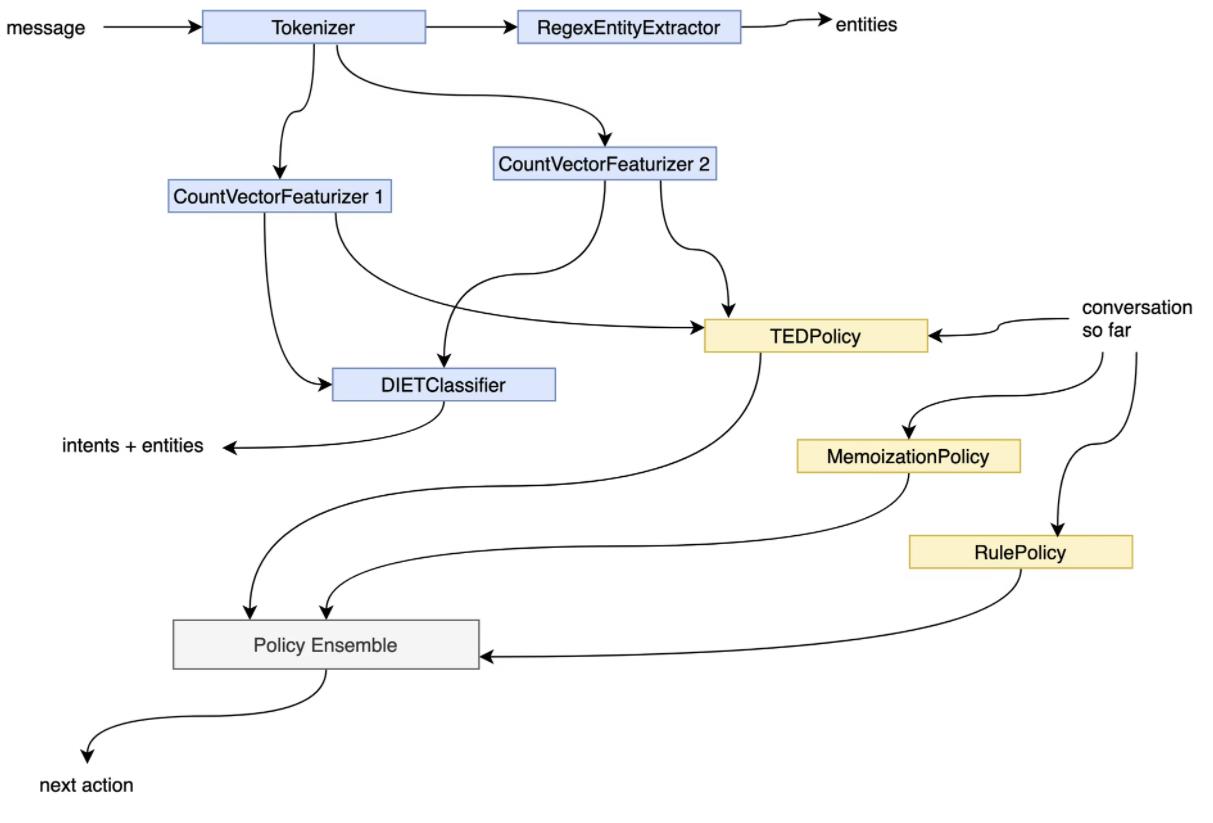

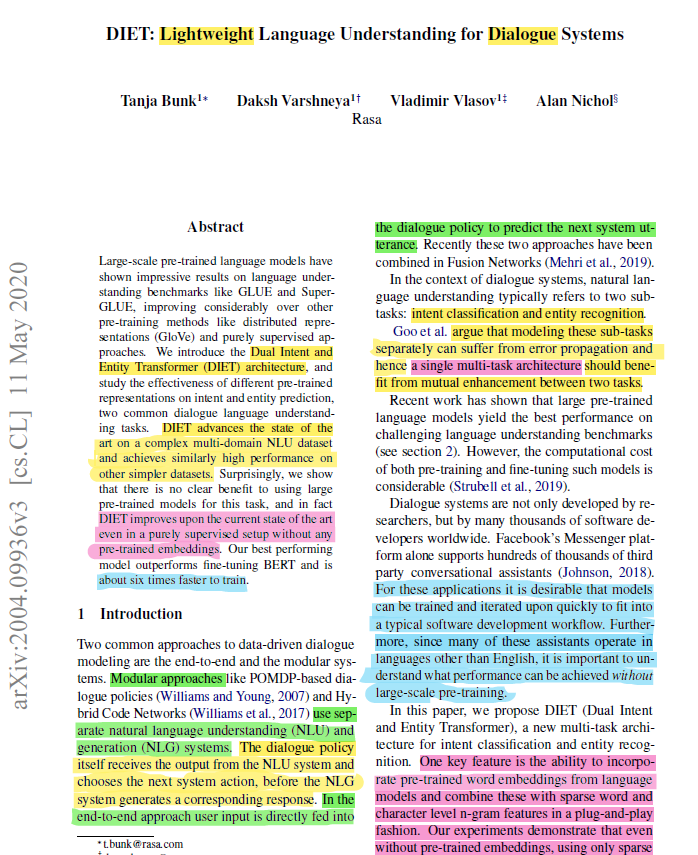

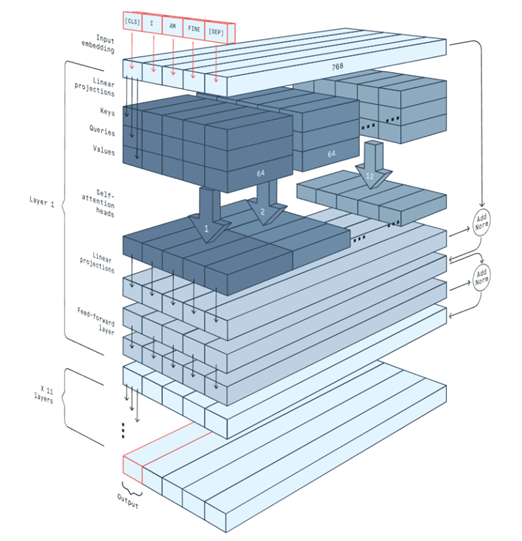

For an intelligent business dialogue system, language understanding (NLU) and Policies are the two cornerstones of the system core. The two most important papers published by the Rasa team, DIET: Lightweight Language Understanding for Dialogue Systems and Dialogue Transformers, distill the team's core results on NLU and Policies after years of work on real industrial deployments. DIET is a unified framework for intent recognition and entity extraction. The Transformer Embedding Dialogue (TED) policy, based on Dialogue Transformers, is a technical framework for processing multi-turn business dialogue and selecting responses. As the kernel of Rasa, DIET and TED have been iteratively optimized over many versions, and they still hold the core position in the latest-generation architecture of Rasa 3.x.

Mastering these two papers is therefore central to mastering the essence of Rasa and the design mechanisms behind it. Star Dialogue Robot has launched the course "Business Dialogue Robot Rasa Core Algorithms: Insider Explanation of the DIET and TED Papers", which works through both papers sentence by sentence, covering the architectural ideas, internal mechanisms, experimental analysis and best practices they contain, to help anyone interested in Transformer-based dialogue robots master the essence of the Rasa kernel.

To help students understand Rasa, the most successful industrial business dialogue robot platform, from the perspectives of model algorithms, architecture design and source code implementation, the course analyzes line by line the nearly 2130 lines of source code of Rasa's core TED Policy and the nearly 1825 lines of source code of DIET. It also adds a complete analysis and testing of the source code of graph.py, the core of the Rasa framework: GraphNode source code analysis and testing; GraphModelConfiguration, ExecutionContext and GraphNodeHook source code analysis; and a review of the GraphComponent source code and its application.

Course Name: Business Dialogue Robot Rasa 3.x Internals: Insider Explanation and Rasa Framework Customization Practice

Course introduction:

Taking the new-generation Graph Computational Backend introduced in Rasa 3.x as its core, this course starts from the milestones in Rasa's version history and explains the technical evolution and root causes behind "One Graph to Rule Them All". It then explains the architecture, internal mechanisms and execution flow with GraphComponent at the center, and analyzes each step of customizing the Rasa Open Source platform: interface implementation, component source code, component registration and usage. Finally, a complete case is worked through as an example. Through analysis of the nearly 2130 lines of source code of Rasa's core TED Policy and the nearly 1825 lines of source code of DIET, learners gain the ability to customize the Rasa framework, to read large code bases, and to appreciate advanced dialogue system architecture design.

Course content:

Lesson 1: Rasa 3.x Internals: analysis of the Retrieval Model

1. What is One Graph to Rule Them All?

2. Why are all industrial dialogue robots stateful computing?

3. Insider explanation and problem analysis of the Retrieval Model introduced by Rasa

Lesson 2: Rasa 3.x Internals: insider analysis of removing Intents from the dialogue system

1. Why intents should be removed, from the perspective of information intent

2. Why intents should be removed, from the perspective of Retrieval Intent

3. Why intents should be removed, from the perspective of Multi intents

4. Why can't some intents be defined?

Lesson 3: Rasa 3.x Internals: insider analysis of End2End Learning

1. How end-to-end learning in Rasa works

2. Contextual NLU parsing

3. Fully end-to-end assistants

Lesson 4: Rasa 3.x Internals: the new generation of scalable DAG graph architecture

1. Analysis of the traditional NLU/Policies architecture

2. DAG graph architecture for business dialogue robots

3. DAGs with Caches explained

4. Example and Migration notes

Lesson 5: Rasa 3.x Internals: customizing Graph NLU and Policies components

1. Analysis of the four requirements for customizing a Graph Component in Rasa

2. Graph Components analysis

3. Graph Components source code demonstration

Lesson 6: Rasa 3.x Internals: custom GraphComponent

1. Analyzing the GraphComponent interface from a Python perspective

2. Detailed explanation of create and load for custom models

3. Languages and Packages support for custom models

Lesson 7: Rasa 3.x Internals: source code analysis of custom component Persistence

1. Code example analysis of a custom dialogue robot component

2. Line-by-line analysis of the Resource source code in Rasa

3. Line-by-line analysis of ModelStorage and ModelMetadata in Rasa

Lesson 8: Rasa 3.x Internals: source code analysis of custom component Registering

1. Using a Decorator to analyze the internal source code of Graph Component registration

2. Analysis of the registration source code of different NLU and Policies components

3. Manually implementing a Python Decorator similar to Rasa's registration mechanism, step by step

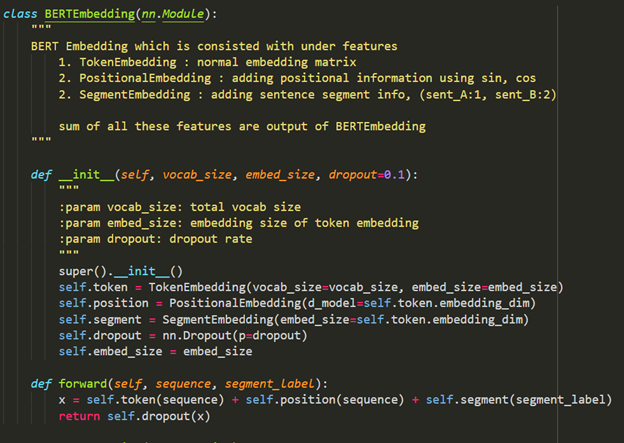

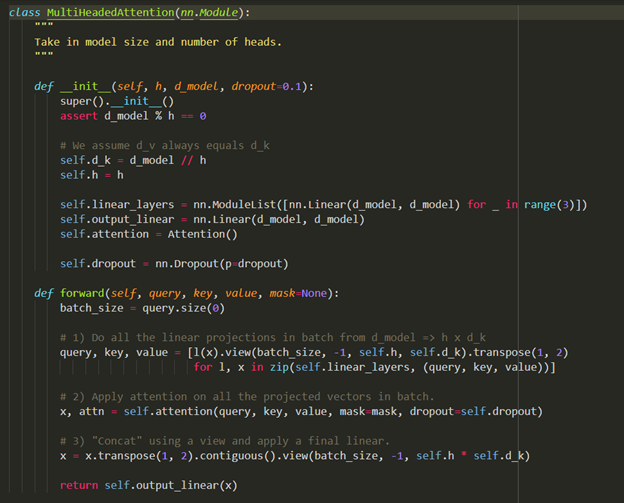

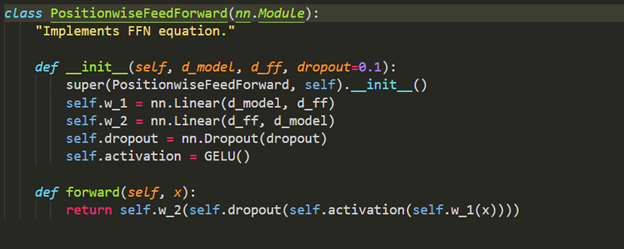

Lesson 9: Transformer-based Rasa Internals: custom components and common component source code analysis

1. Source code analysis of the custom Dense Message Featurizer and Sparse Message Featurizer

2. Source code analysis of the Rasa Tokenizer and WhitespaceTokenizer

3. Source code analysis of CountVectorsFeaturizer and SpacyFeaturizer

Lesson 10: Transformer-based Rasa Internals: complete analysis and testing of the framework core graph.py source code

1. Line-by-line analysis and testing of the GraphNode source code

2. Source code analysis of GraphModelConfiguration, ExecutionContext and GraphNodeHook

3. GraphComponent source code review and its application source code

Lesson 11: Transformer-based Rasa Internals: DIETClassifier and TED in the framework

1. How DIETClassifier and TED, as GraphComponents, implement the all-in-one Rasa architecture

2. Internal working mechanism and annotated source code analysis of DIETClassifier

3. Internal working mechanism and annotated source code analysis of TED

Lesson 12: Rasa 3.x Internals: analysis of the nearly 2130 lines of TED Policy source code

1. Analysis of the code of Policy, the parent class of TEDPolicy

2. Complete analysis of TEDPolicy

3. Analysis of the TED code that inherits from TransformerRasaModel

Lesson 13: Rasa 3.x Internals: analysis of the nearly 1825 lines of DIET source code

1. DIETClassifier code analysis

2. Code analysis of EntityExtractorMixin

3. DIET code analysis

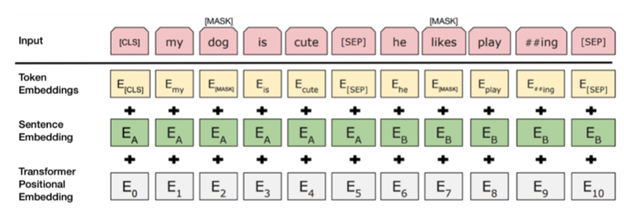

Course Name: 30 Hours Decrypting the 10 Most Classic, Highest-Quality Dialogue Robot Papers in NLP, with Source Code and Q&A

Course introduction: Drawing on the more than 3000 NLP papers Gavin read while building the Star Sky intelligent business dialogue robot, this course selects the 10 most classic and highest-quality papers on NLP dialogue robots from the past five years. They cover the key technologies and conceptual frameworks of intelligent dialogue robots, such as multi-turn dialogue, state management and small-data techniques. All content is presented step by step. The instructor, Gavin, provides one year of technical Q&A on the 10 papers.

Course content:

30 hours of video, Gavin's annotated versions of the 10 papers, and one year of technical Q&A with direct communication with Gavin. The course includes the following papers:

Annotated ConveRT Efficient and Accurate Conversational Representations from Transformers

Annotated Dialogue Transformers

Annotated A Simple Language Model for Task-Oriented Dialogue

Annotated DIET Lightweight Language Understanding for Dialogue Systems

Annotated BERT-DST Scalable End-to-End Dialogue State Tracking with Bidirectional Encoder Representations from Transformer

Annotated Few-shot Learning for Multi-label Intent Detection

Annotated Fine-grained Post-training for Improving Retrieval-based Dialogue Systems

Annotated Poly-encoders architectures and pre-training Pro

Annotated TOD-BERT Pre-trained Natural Language Understanding for Task-Oriented Dialogue

Annotated Recipes for building an open-domain chatbot

In addition, to cover the fundamentals, the course comes with "Lesson 1: Star dialogue BERT paper decryption, mathematical derivation and complete source code implementation".

Any technical questions will be answered by Gavin himself.

Course Name: Bayesian Transformer: architecture, algorithm, mathematics, source code, NLP competition

Course introduction:

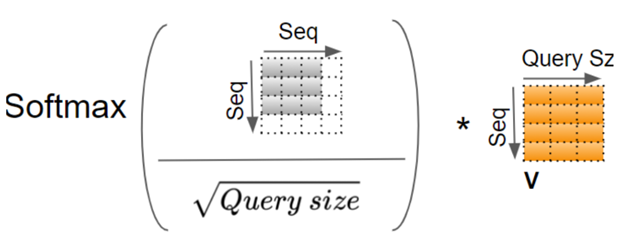

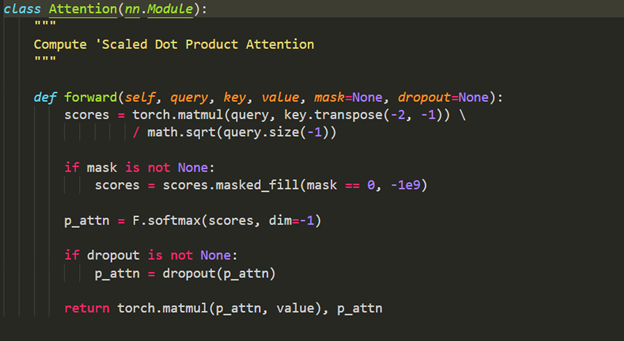

"One architecture rules everything" is Andrew NG's evaluation of transformer in reviewing the latest progress in the whole AI field at the end of 2021( https://read.deeplearning.ai/the-batch/issue-123/) . Transformer has now become a common engine at the bottom of major fields such as artificial intelligence NLP. Especially since 2018, various AI research in academia and specific applications in industry are directly or indirectly based on transformer.

This course distills the experience of Gavin, founder of Star Dialogue Robot, from many years of studying and using the Transformer. It is billed as the first NLP training course worldwide to explain the Transformer from a Bayesian perspective across architecture, model algorithms, source code analysis and case practice. By opening the "black box" inside the Transformer's neural network, it explains the hard-core techniques and subtle local details that ordinary learners and users of the Transformer find difficult to understand but that matter greatly in practice. Starting from Bayesian theory as the underlying mathematical principle, the architecture, logic and practice of the Transformer connect naturally.

The course analyzes the complete source code implementations of Transformer, GPT and BERT line by line. It also helps you master the Transformer in practice through a Transformer-based analysis of film review data and a full code walkthrough of an NLP reading comprehension competition on Kaggle. All code can be obtained from the instructor, Gavin.

The course provides one year of technical Q&A service; Gavin answers all technical questions about the course.

Course content:

Lesson 1: Complete demonstration of Bayesian Transformer ideas and mathematical principles

Lesson 2: Complete implementation of the Transformer paper source code

Lesson 3: Transformer language model architecture, mathematical principles and internal mechanism

Lesson 4: GPT autoregressive language model architecture, mathematical principles and internal mechanism

Lesson 5: BERT autoencoding language model architecture, mathematical principles and internal mechanism

Lesson 6: Detailed implementation of the BERT pre-training source code

Lesson 7: BERT fine-tuning mathematical principles and case source code analysis

Lesson 8: BERT fine-tuning for named entity recognition (NER): principle analysis and source code practice

Lesson 9: BERT multi-task deep optimization and cases (mathematical principles, layer-wise network internals, high-level CLS classification, etc.)

Lesson 10: Analysis of film review data using BERT (data processing, model code, online deployment)

Lesson 11: Decryption, mathematical derivation and complete source code implementation of the BERT paper

Lesson 12: Transformers in NLP competitions on Kaggle

Example of course style:

"One architecture rules everything" (Transformer Silicon Valley gossip series from Gavin)

Season 1: What exactly is new in the new generation of Rasa 3.x?

Lesson 1: Rasa 3.x decouples the architecture of Model and Framework

Lesson 2: Rasa 3.x new-generation computing backend, state management, etc.

Lesson 3: Rasa 3.x new-generation Graph Architecture analysis

Lesson 4: What is the specific value of the Rasa 3.x Graph Architecture?

Lesson 5: Using the Jieba and DIET source code to illustrate version migration of custom components to Rasa 3.x

Lesson 6: The new Slot management mechanism in Rasa 3.x

Lesson 7: What is the core value of Global Slot management in Rasa 3.x?

Lesson 8: Analysis of Slot source code in Rasa 3.x

Lesson 9: Source code internals of how Slots influence the Conversation in Rasa 3.x

Lesson 10: Analysis of the influence of Slots on the Conversation in Rasa 3.x

Lesson 11: Analysis of the different Slot types in Rasa 3.x

Lesson 12: Complete worked example of Custom Slot Types in Rasa 3.x

Lesson 13: Analysis of Slot Mappings and Mapping Conditions in Rasa 3.x

Lesson 14: from_entity for Slots in Rasa 3.x and its use in forms

Lesson 15: Analysis of from_text, from_entity and from_trigger_intent for Slots in Rasa 3.x

Lesson 16: Analysis of Custom Slot Mappings and Initial slot values in Rasa 3.x

Lesson 17: Introduction to ValidationAction and FormValidationAction in Rasa 3.x, and analysis of errors in the documentation

Lesson 18: Source code analysis of Slot operations in FormAction in Rasa 3.x

Lesson 19: Source code analysis of extract_requested_slot for Slot operations in Rasa 3.x

Lesson 20: Source code analysis of extract_custom_slots for Slot operations in Rasa 3.x

Lesson 21: New-generation techniques for measuring dialogue robot quality in Rasa 3.x

Course Name: Season 2: Thoroughly Understanding Shannon Entropy, the Underlying Kernel of NLP

Content introduction:

Entropy is a core concept for information measurement and model optimization in NLP. Mastering entropy at this level greatly improves understanding of the essence of natural language processing and brings deeper technical insight.

This video series starts from examples and walks step by step through the derivation and application of Shannon entropy. The contents include:

Lesson 1: Why is Shannon entropy a core concept underlying NLP?

Lesson 2: Analysis of the amount of information in Shannon entropy

Lesson 3: The relationship and difference between Information and Data in Shannon entropy, and the problems of using Data to express Information

Lesson 4: Analysis of the absolute minimum amount of information storage and transmission in Shannon entropy

Lesson 5: Analysis of Entropy in Shannon entropy

Lesson 6: Using specific examples to illustrate the meaning of information quantity in Shannon entropy

Lesson 7: How does Shannon entropy compress information from the perspective of mathematical quantification?

Entropy: amount of information

Storage and transmission of information

Shannon's entropy

Concept of "Amount" of Information

Quantifying the Amount of Information

Example of Information Quantity

The Entropy Formula

Mathematical Approximation of Required Storage

Rasa series blog:

- Business Dialogue Robot Rasa 3.x Internals and Rasa framework customization practice

- Business Dialogue Robot Rasa core algorithms: DIET and TED papers in detail

- Business Dialogue Robot Rasa 3.x deployment and installation: first experience

- Business Dialogue Robot Rasa 3.x Playground

- Business Dialogue Robot Rasa 3.x Command Line Interface

- Business Dialogue Robot Rasa 3.x commands rasa shell and rasa run

- Business Dialogue Robot Rasa 3.x commands rasa run actions, rasa test, rasa data split, rasa data convert nlu