Troubleshooting of Apache HttpClient connection pool leakage

Problem background

-

The main business of the business system is a data aggregation management platform, in which one function of the system is to synchronize all resources (large synchronization for short)

-

The service synchronization data request data tool is Feign adapted to Apache HttpClient. This request encapsulation is based on the service adaptation service encapsulation request api at that time

-

Feign version: 10.10 one

Problem source

- In the production environment, the large synchronization function (more than 20 tasks) found that it was stuck when running more than half of the tasks. This problem was not found in the test environment

Synchronous interface

public interface SyncHelper {

Order syncOrder();

void syncAllAccount();

void syncSingleAccount(Long accountId);

default boolean enableSync() {

return true;

}

}

Implementation of large synchronization function

@Slf4j

@Component

public class SyncAccountResourceListener {

@Autowired

private final List<SyncHelper> helpers;

// Single thread pool

private static final ExecutorService EXECUTOR = Executors.newSingleThreadExecutor(new ThreadFactoryBuilder()

.setDaemon(false)

.setNameFormat("h3c-sync-resource-%d")

.build()

);

public void sync(){

for (SyncHelper helper : helpers) {

if (Thread.currentThread().isInterrupted()) {

log.error("[{}] sync task interrupted,account:[{}]", className, accountId);

continue;

}

Future<?> future = EXECUTOR.submit(() -> helper.syncSingleAccount(accountId));

try {

future.get(helper.getTimeOut(), TimeUnit.SECONDS);

} catch (InterruptedException e) {

Thread.currentThread().interrupt();

} catch (ExecutionException|TimeoutException e) {

log.error("[{}] sync error,account:[{}]", className, accountId, e);

} finally {

future.cancel(true);

}

}

}

}

Troubleshooting steps

I wanted to solve the problem as quickly as possible. The synchronization progress list on the system shows that they are all stuck in the same synchronization class. Then I took a rough look at the codes of relevant synchronization classes and found that there are no relevant codes that may lead to dead circulation

Try to reproduce

- Test the big synchronization in the test environment and find no problem (including requesting round-trip data log and database sql print log), and successfully complete all synchronization tasks

- Then do unit tests for the stuck synchronization classes and execute them repeatedly. The results show that there is no problem

At this point, the question is even more confused. If it cannot be replicated in the test environment and local unit tests, how can there be related problems in production?

deadlock

At first, I didn't check the deadlock problem, because most of the synchronization didn't use multithreading

Possible causes

- Multithreading is used to run a task in a large synchronous resource using a single thread thread pool, and then the task times out. TimeOut fails to handle the task interruption, resulting in all subsequent tasks being blocked

- I see that some colleagues use multithreading to synchronize data, which is not very reasonable, similar to the following code:

List<SysDept> deptList = ......

List<CompletableFuture<CmdbUsageReport>> futureList = new ArrayList<>();

deptList.forEach(t -> futureList.add(

CompletableFuture.supplyAsync(() -> {

// Time consuming task

return report;

}, ioPool)));

CompletableFuture.allOf(futureList.toArray(new CompletableFuture[]{})).join();

List<CmdbUsageReport> reportList = futureList.stream().map(CompletableFuture::join).filter(Objects::nonNull).collect(toList());

// ......

Since the thread problem is checked, directly use the relevant analysis tools to analyze it and see what's going on

Analyze thread status

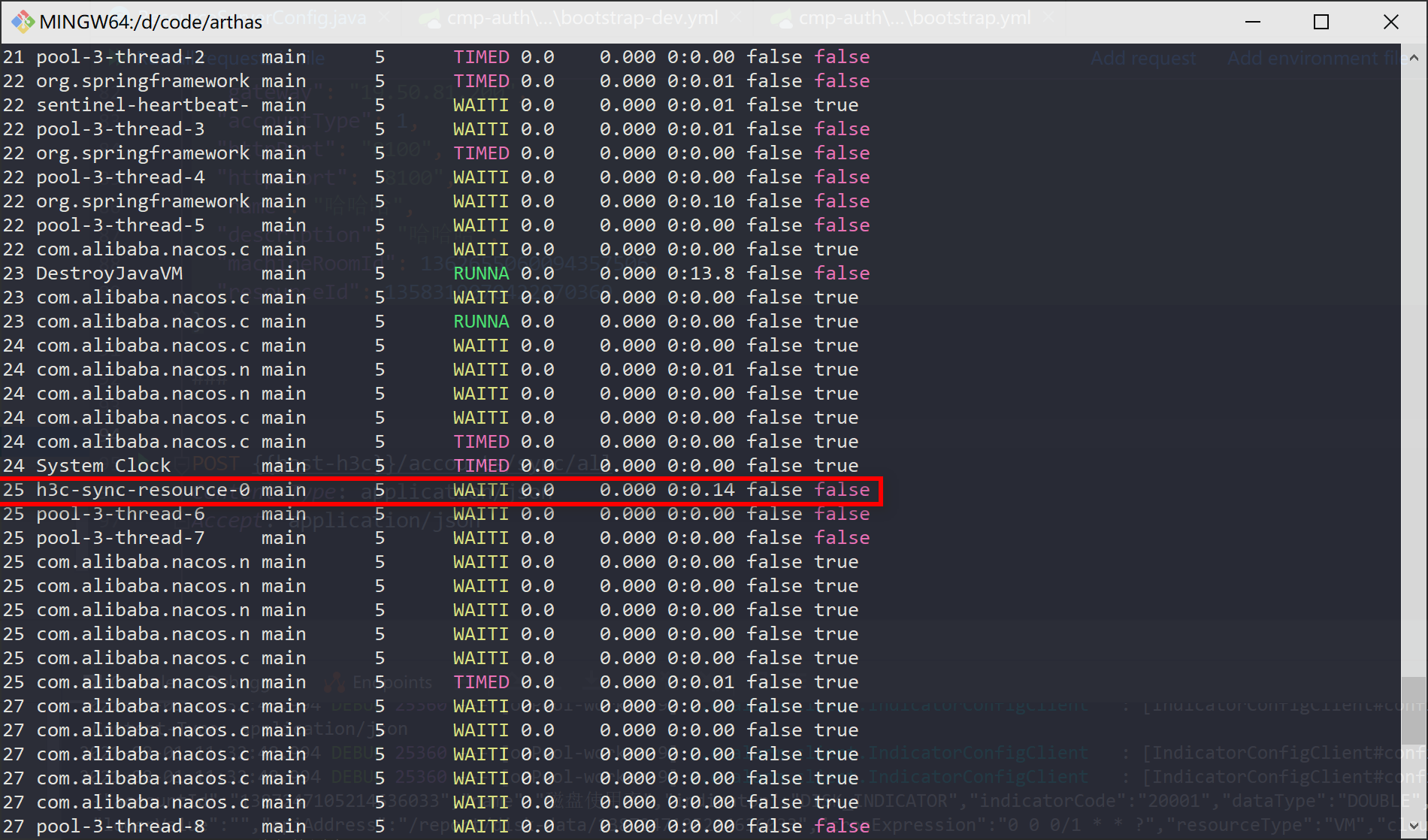

Use arthas or visual VM to view the thread status of synchronization tasks

Start the corresponding process of arthas attach

java -jar arthas-boot.jar

thread --all view simple information about all threads

Use the single thread pool to run synchronization tasks. The thread executing the thread pool also has a user-defined name, which is `h3c-sync-resource-0`(process ID 250 ,Thread status is `WAITING` )

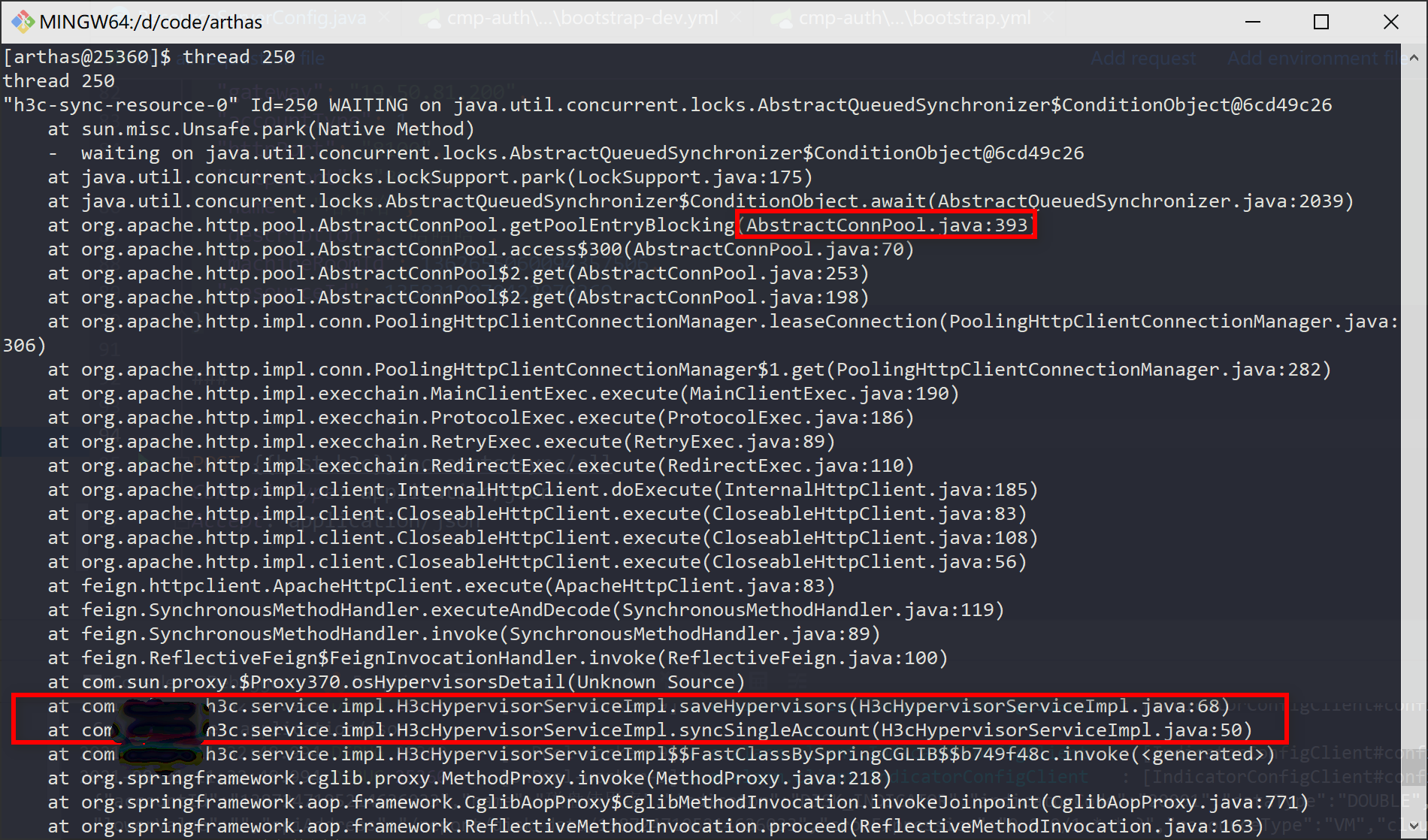

thread 250 view the details of the synchronization process

"h3c-sync-resource-0" - Thread t@195

java.lang.Thread.State: WAITING

at sun.misc.Unsafe.park(Native Method)

- parking to wait for <66bb3d00> (a java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject)

at java.util.concurrent.locks.LockSupport.park(LockSupport.java:175)

at java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject.await(AbstractQueuedSynchronizer.java:2039)

at org.apache.http.pool.AbstractConnPool.getPoolEntryBlocking(AbstractConnPool.java:393)

at org.apache.http.pool.AbstractConnPool.access$300(AbstractConnPool.java:70)

at org.apache.http.pool.AbstractConnPool$2.get(AbstractConnPool.java:253)

- locked <637b83f5> (a org.apache.http.pool.AbstractConnPool$2)

at org.apache.http.pool.AbstractConnPool$2.get(AbstractConnPool.java:198)

at org.apache.http.impl.conn.PoolingHttpClientConnectionManager.leaseConnection(PoolingHttpClientConnectionManager.java:306)

at org.apache.http.impl.conn.PoolingHttpClientConnectionManager$1.get(PoolingHttpClientConnectionManager.java:282)

at org.apache.http.impl.execchain.MainClientExec.execute(MainClientExec.java:190)

at org.apache.http.impl.execchain.ProtocolExec.execute(ProtocolExec.java:186)

at org.apache.http.impl.execchain.RetryExec.execute(RetryExec.java:89)

at org.apache.http.impl.execchain.RedirectExec.execute(RedirectExec.java:110)

at org.apache.http.impl.client.InternalHttpClient.doExecute(InternalHttpClient.java:185)

at org.apache.http.impl.client.CloseableHttpClient.execute(CloseableHttpClient.java:83)

at org.apache.http.impl.client.CloseableHttpClient.execute(CloseableHttpClient.java:108)

at org.apache.http.impl.client.CloseableHttpClient.execute(CloseableHttpClient.java:56)

at feign.httpclient.ApacheHttpClient.execute(ApacheHttpClient.java:83)

at feign.SynchronousMethodHandler.executeAndDecode(SynchronousMethodHandler.java:119)

at feign.SynchronousMethodHandler.invoke(SynchronousMethodHandler.java:89)

at feign.ReflectiveFeign$FeignInvocationHandler.invoke(ReflectiveFeign.java:100)

at com.sun.proxy.$Proxy360.osAggregates(Unknown Source)

According to the thread information, you can see that the key code is stuck at the place requested by feign, and then you can see that it is apache http client dead lock deadlock

AbstractConnPool.java:393 at org apache. http. pool. AbstractConnPool. getPoolEntryBlocking

Looking at the code in detail, it is found that the problem is caused by the idle connection blocking waiting of the http client connection pool

Source tracking

Now visit the http client official website to see a simple demo example. The demo case access address is: httpcomponents-client-quickstart

You will see a simple use case:

try (CloseableHttpClient httpclient = HttpClients.createDefault()) {

HttpGet httpGet = new HttpGet("http://httpbin.org/get");

// The underlying HTTP connection is still held by the response object

// to allow the response content to be streamed directly from the network socket.

// In order to ensure correct deallocation of system resources

// the user MUST call CloseableHttpResponse#close() from a finally clause.

// Please note that if response content is not fully consumed the underlying

// connection cannot be safely re-used and will be shut down and discarded

// by the connection manager.

try (CloseableHttpResponse response1 = httpclient.execute(httpGet)) {

System.out.println(response1.getCode() + " " + response1.getReasonPhrase());

HttpEntity entity1 = response1.getEntity();

// do something useful with the response body

// and ensure it is fully consumed

EntityUtils.consume(entity1);

}

}

- The official simply uses the form of java7 try with resources to use httpclient and automatically release resources after use

- The official document also explains that if the connection is safe, recycled and reused, you need to use entityutils Consumer consumes the response content and closes the stream

- Finally, the thread blocking and waiting problem may be caused by not consuming the response content in time

Track Feign source code

Now let's take a look at the general process and source code of Apache HttpClient converting Feign request. The method of HttpClient converting Feign Response: Feign HttpClient. ApacheHttpClient. toFeignBody

Response.Body toFeignBody(HttpResponse httpResponse) {

final HttpEntity entity = httpResponse.getEntity();

if (entity == null) {

return null;

}

return new Response.Body() {

@Override

public Integer length() {

// If it is transfer encoding: chunked length must return null

return entity.getContentLength() >= 0 && entity.getContentLength() <= Integer.MAX_VALUE

? (int) entity.getContentLength()

: null;

}

@Override

public boolean isRepeatable() {

return entity.isRepeatable();

}

@Override

public InputStream asInputStream() throws IOException {

// Simply pass InputStream

return entity.getContent();

}

@SuppressWarnings("deprecation")

@Override

public Reader asReader() throws IOException {

return new InputStreamReader(asInputStream(), UTF_8);

}

@Override

public Reader asReader(Charset charset) throws IOException {

Util.checkNotNull(charset, "charset should not be null");

return new InputStreamReader(asInputStream(), charset);

}

@Override

public void close() throws IOException {

// Resource recovery method

EntityUtils.consume(entity);

}

};

}

Because the response content needs to be completely consumed before it can return to the connection pool to reuse the connection, org apache. http. util. EntityUtils. The approximate code of consumption is as follows:

public static void consume(final HttpEntity entity) throws IOException {

if (entity == null) {

return;

}

if (entity.isStreaming()) {

// Still in the transmission state, get the stream

final InputStream inStream = entity.getContent();

// close directly closes the recycle resource

if (inStream != null) {

inStream.close();

}

}

}

EntityUtils. The connection process of consumer consuming response content and safely reusing is as follows. If you are interested, you can have a look. There will be no long discussion here:

org.apache.http.util.EntityUtils.consume org.apache.http.impl.execchain.ResponseEntityProxy.getContent Packaged into automatic release connection EofSensorInputStream org.apache.http.conn.EofSensorInputStream.close org.apache.http.conn.EofSensorInputStream.checkClose org.apache.http.impl.execchain.ResponseEntityProxy.streamClosed org.apache.http.impl.execchain.ResponseEntityProxy.releaseConnection

feign.AsyncResponseHandler#handleResponse

void handleResponse(CompletableFuture<Object> resultFuture,

String configKey,

Response response,

Type returnType,

long elapsedTime) {

// copied fairly liberally from SynchronousMethodHandler

boolean shouldClose = true;

try {

if (logLevel != Level.NONE) {

// If the log level is not NONE, the log will be output

response = logger.logAndRebufferResponse(configKey, logLevel, response,

elapsedTime);

}

if (Response.class == returnType) {

if (response.body() == null) {

resultFuture.complete(response);

} else if (response.body().length() == null

|| response.body().length() > MAX_RESPONSE_BUFFER_SIZE) {

// If it is transfer encoding: chunked length must return null, resulting in the following finally block not closing the recycling of resources

shouldClose = false;

resultFuture.complete(response);

} else {

// Ensure the response body is disconnected

// InputStream is converted to byte [] to recycle resources and back to response

final byte[] bodyData = Util.toByteArray(response.body().asInputStream());

resultFuture.complete(response.toBuilder().body(bodyData).build());

}

} else if (response.status() >= 200 && response.status() < 300) {

if (isVoidType(returnType)) {

resultFuture.complete(null);

} else {

final Object result = decode(response, returnType);

shouldClose = closeAfterDecode;

resultFuture.complete(result);

}

} else if (decode404 && response.status() == 404 && !isVoidType(returnType)) {

final Object result = decode(response, returnType);

shouldClose = closeAfterDecode;

resultFuture.complete(result);

} else {

resultFuture.completeExceptionally(errorDecoder.decode(configKey, response));

}

} catch (final IOException e) {

if (logLevel != Level.NONE) {

logger.logIOException(configKey, logLevel, e, elapsedTime);

}

resultFuture.completeExceptionally(errorReading(response.request(), response, e));

} catch (final Exception e) {

resultFuture.completeExceptionally(e);

} finally {

if (shouldClose) {

ensureClosed(response.body());

}

}

}

feign.slf4j.Slf4jLogger.logAndRebufferResponse

protected Response logAndRebufferResponse(String configKey,

Level logLevel,

Response response,

long elapsedTime)

throws IOException {

if (logger.isDebugEnabled()) {

// DEBUG level calls the parent class method for log output

return super.logAndRebufferResponse(configKey, logLevel, response, elapsedTime);

}

return response;

}

feign.Logger.logAndRebufferResponse

protected Response logAndRebufferResponse(String configKey,

Level logLevel,

Response response,

long elapsedTime)

throws IOException {

String reason =

response.reason() != null && logLevel.compareTo(Level.NONE) > 0 ? " " + response.reason()

: "";

int status = response.status();

log(configKey, "<--- HTTP/1.1 %s%s (%sms)", status, reason, elapsedTime);

if (logLevel.ordinal() >= Level.HEADERS.ordinal()) {

// Log output at a level greater than HEADERS

for (String field : response.headers().keySet()) {

for (String value : valuesOrEmpty(response.headers(), field)) {

log(configKey, "%s: %s", field, value);

}

}

int bodyLength = 0;

if (response.body() != null && !(status == 204 || status == 205)) {

// HTTP 204 No Content "...response MUST NOT include a message-body"

// HTTP 205 Reset Content "...response MUST NOT include an entity"

if (logLevel.ordinal() >= Level.FULL.ordinal()) {

log(configKey, ""); // CRLF

}

// It is crucial to read the InputStream and convert it to byte [], and call InputStream close for resource recovery

byte[] bodyData = Util.toByteArray(response.body().asInputStream());

bodyLength = bodyData.length;

if (logLevel.ordinal() >= Level.FULL.ordinal() && bodyLength > 0) {

log(configKey, "%s", decodeOrDefault(bodyData, UTF_8, "Binary data"));

}

log(configKey, "<--- END HTTP (%s-byte body)", bodyLength);

// Because the current response Body () is consumed and saved back to response

return response.toBuilder().body(bodyData).build();

} else {

log(configKey, "<--- END HTTP (%s-byte body)", bodyLength);

}

}

return response;

}

feign.Util.toByteArray

public static byte[] toByteArray(InputStream in) throws IOException {

checkNotNull(in, "in");

try {

ByteArrayOutputStream out = new ByteArrayOutputStream();

copy(in, out);

return out.toByteArray();

} finally {

// closeable close closes the recycle resource

ensureClosed(in);

}

}

track Feign Slf4jLogger `feign/Logger.java:84` , find `feign.Logger#logAndRebufferResponse ` will read the content of 'body' once greater than`HEADERS` level:`logLevel.ordinal() >= Level.HEADERS.ordinal()`,rearwards `byte[] bodyData = Util.toByteArray(response.body().asInputStream());`,Make a copy of the data, and then close Drop the original `InputStream`

Thinking about reading source code

After the source code analysis arrives at this place, it is found that the corresponding content will be automatically released under normal circumstances:

-

Enable log (Feign log level is greater than / equal to headers & & logger level is less than / equal to DEBUG)

- The original response information will be copied Body() and release InputStream

-

Close log (Feign log level is less than headers & & logger level is greater than DEBUG)

- Response. class == returnType && (response.body(). length() == null || response. body(). length() > MAX_ RESPONSE_ BUFFER_ Size) in this case, the response information will not be released

- If closeAfterDecode is false, the response information will not be released

Verification problem

The Feign log level of the production environment is full (greater than HEADERS), but the log level enabled by the Logger is INFO. Try the following steps to reproduce the problem

- Build Apache httpclient to minimize the number of connection pools

CLIENT = new ApacheHttpClient(

HttpClientBuilder.create()

.setSSLSocketFactory(

new SSLConnectionSocketFactory(trustAllSslSocketFactory(),

(hostname, session) -> true)

)

// Set maximum route

.setMaxConnPerRoute(1)

// Sets the maximum total number of connections

.setMaxConnTotal(1)

.setDefaultRequestConfig(

RequestConfig.custom()

.setConnectTimeout(CONNECT_TIMEOUT)

.setSocketTimeout(REQ_TIMEOUT)

.build()

)

.useSystemProperties()

.build()

- The unit test Logger level configuration of local synchronization is equal to info & & feign, and the log level is greater than / equal to HEADERS. The unit test result synchronization task will deadlock after a period of time

- The unit test Logger level configuration of local synchronization is equal to debug & & feign, and the log level is less than HEADERS. The unit test result synchronization task will deadlock after a period of time

- The configuration of the locally synchronized unit test Logger level is equal to debug & & feign, and the log level is greater than or equal to headers (this is the configuration of the test and development environment). No deadlock is found in the unit test result synchronization task after a period of time

After unit testing and source code analysis, the problem result is very obvious: the connection pool connection cannot be safely reused because the response information is not fully consumed and released

Trace the root cause of the problem

Review feign. Com from the source code analysis above Asyncresponsehandler#handleresponse source code, closeAfterDecode is true by default, so the response content will not be released only under the following conditions:

Response.class == returnType && (response.body().length() == null || response.body().length() > MAX_RESPONSE_BUFFER_SIZE)

Check whether the item code has a Response return type and the length of the request Response body is empty

Recall that when requesting the corresponding api, the token will be asynchronously verified and refreshed. To verify the token, there is no need to process the return value. Previously, the Response is used and the return code is judged. The following are some key codes:

Verify token Feign interface

@RequestLine("GET /sys/identity/v2/tokens")

@Headers({"X-Subject-Token: {token}"})

Response verifyToken(@Param("token") String token);

Verify token code

@Override

public boolean verifyToken(H3CClientConfig config, String token) {

IdentityApi identityApi = Feigns.h3c(IdentityApi.class, config);

Response response = identityApi.verifyToken(token);

return response.status() == HttpStatus.OK.value();

}

Request response log

GET https://19.50.81.200:8100/sys/identity/v2/tokens HTTP/1.1 200 OK Date: Sun, 05 Sep 2021 02:55:09 GMT Content-Type: application/json; charset=UTF-8 Transfer-Encoding: chunked Connection: keep-alive X-Subject-Token: eyJjdHkiOiJKV1QiLCJlbmMiOiJBMTI4Q0JDLUhTMjU2IiwiYWxnIjoiZGlyIn0..yWaex8RVEvBvw1D-kk_LMQ.IwiMpoRWSPsUZiEpr09tL7WDZ1-vIRZqFsGqQq2CV4wBp6S8mBIhICI3Ce2sE_TLA_A2oX_NnMpAf5D4C_DwunJaiJ3lnD51Sg1bxWao_gXnPS7JdfpyaRXY-rtPMaxs-0FisUuyVlKfQh3Ab8t3WsCLzU9Yz7sQ367CKtW1z32ttafrWRlotLN0y7XX3ZRz7Ttznm2cZ5Ae79MEPQF1-hbKiGoz4B8kR1NRgeL-arlpa8qtgERYEEtr-VtJgydDpylusItc_uOtPqwEh0HAgYQjJovF75pej5WlCgdzYVQMr08OGT0JnBrReWYxl0h2P0xxZQtNcM2d0T54TebvvRhQKRyywvasQ064FS4B4mGN-8E3TZkxSfSfr4OWZ1Nmwpr3xFGBOSVpKf5-AufCoXPW3yGu3vFSpCahoKq01n9_gd4UbKLE82Cwou4uZf4VMZ7A7hOAdWYo_geb1bTzLUyTdDSUgbS8XiiYCOpaA4euv409ELE22U77F940M2DO2y8lbaDuk4iAv3QIp5gCGg.9pzTvRPM-FAMa-17a2J5kQ

I did not process the Response response in the above code, but just judged the Response code. However, through the request round-trip log, I found that the Response information of the request Response is a little different: there is no content length field and the Response header is transfer encoding: chunked

About HTTP chunked

The response header of chunked InputStream is transfer encoding: chunked, which is an unknown length and has no content length field. The length of the last data block must be 0. It can also be understood that the http message ends with 0\r\n\r\n.

Just meet the conditions for leakage class == returnType && (Response.body(). length() == null || Response. body(). length() > MAX_ Response_ BUFFER_ Size) if the Response is not released in time, the connection of the connection pool cannot be safely reused.

Problem summary

Finally, it was found that the root cause of the problem was that the request connection pool could not be released normally due to the leakage of the input stream. The return value of the feign request used the Response and was not wrapped in the form of try with resources, resulting in the failure to execute the close method

Key to troubleshooting:

- Feign source code needs to have a certain debugging and analysis ability

- Master conventional application performance analysis methods

- Understand the differences between production and test environments in business. It is best to keep the configuration of test environment and production consistent as far as possible to find problems as soon as possible

Solution

When the Feign request return value uses the Response response type, use java7 try with resources or try finally to release resources in time