Author: Yao Wang, Truman Tian

This example shows how to use the Relay Python frontend to build a neural network and generate a runtime library for an Nvidia GPU with TVM. Note that you need to build TVM with CUDA and LLVM enabled.

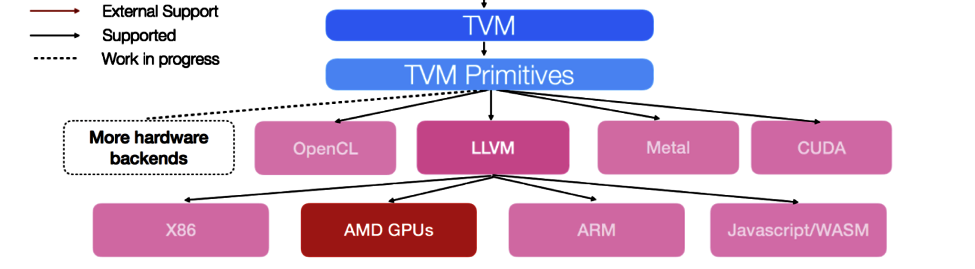

Overview of hardware backend supported by TVM

The following figure shows the hardware backend currently supported by TVM:

In this tutorial, we will select cuda and llvm as the target backends. First, let's import Relay and TVM.

import numpy as np

from tvm import relay
from tvm.relay import testing
import tvm
from tvm import te
from tvm.contrib import graph_executor
import tvm.testing

Define a neural network in Relay

First, let's define a neural network with the Relay Python frontend. For simplicity, we will use the predefined resnet-18 network in Relay. Parameters are initialized with the Xavier initializer. Relay also supports other model formats, such as MXNet, CoreML, ONNX, and TensorFlow.

In this tutorial, we assume that we will run inference on our device and that the batch size is set to 1. The input images are RGB color images of size 224 x 224. We can call tvm.relay.expr.TupleWrapper.astext() to display the network structure.

batch_size = 1
num_class = 1000
image_shape = (3, 224, 224)
data_shape = (batch_size,) + image_shape
out_shape = (batch_size, num_class)

mod, params = relay.testing.resnet.get_workload(
    num_layers=18, batch_size=batch_size, image_shape=image_shape
)

# set show_meta_data=True if you want to show meta data
print(mod.astext(show_meta_data=False))
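As a quick sanity check on the shapes defined above, the following minimal sketch computes how many values one input tensor holds and how much memory it takes as float32. It assumes the channels-first ordering implied by image_shape = (3, 224, 224); the names num_elements and num_bytes are introduced here for illustration only.

```python
from math import prod

batch_size = 1
image_shape = (3, 224, 224)  # channels, height, width
data_shape = (batch_size,) + image_shape

# Total number of scalar values in one input batch.
num_elements = prod(data_shape)

# float32 stores each value in 4 bytes.
num_bytes = num_elements * 4

print(data_shape)    # (1, 3, 224, 224)
print(num_elements)  # 150528
print(num_bytes)     # 602112
```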

Compilation

The next step is to compile the model using the Relay/TVM pipeline. You can specify the optimization level of the compilation; currently this value can range from 0 to 3. The optimization passes include operator fusion, pre-computation, layout transformation, and so on.
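To give an intuition for what operator fusion means, here is a toy sketch in plain Python. It is not TVM's fusion pass and does not use TVM's IR: the op names, the ELEMENTWISE set, and the fuse_elementwise helper are all assumptions made for illustration. The idea is simply that elementwise operators following a heavier operator can be folded into the same kernel, so fewer kernels are launched.

```python
# Toy illustration only: this is NOT TVM's fusion pass or its IR.
ELEMENTWISE = {"add", "relu", "multiply"}


def fuse_elementwise(ops):
    """Group each op with the run of elementwise ops that directly follows it."""
    groups = []
    for op in ops:
        if groups and op in ELEMENTWISE:
            groups[-1].append(op)  # fold into the preceding kernel
        else:
            groups.append([op])    # start a new kernel
    return groups


chain = ["conv2d", "add", "relu", "conv2d", "add", "relu", "dense", "softmax"]
fused = fuse_elementwise(chain)

print(fused)
print(len(chain), "ops ->", len(fused), "kernels")  # 8 ops -> 4 kernels
```

In this toy chain, each conv2d absorbs the add and relu that follow it, so eight operators collapse into four groups.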

relay.build() returns three components: the execution graph in JSON format, the TVM module library of functions compiled specifically for this graph on the target hardware, and the parameter blobs of the model. During compilation, Relay performs graph-level optimization while TVM performs tensor-level optimization, resulting in an optimized runtime module for model serving.

We will first compile for an Nvidia GPU. Behind the scenes, relay.build() first performs a number of graph-level optimizations, such as pruning and fusion, and then registers the operators (i.e. the nodes of the optimized graph) to TVM implementations to generate a tvm.module. To generate the module library, TVM first lowers the high-level IR into the lower-level intrinsic IR of the specified target backend, which is CUDA in this example. The machine code is then generated as the module library.

opt_level = 3
target = tvm.target.cuda()
with tvm.transform.PassContext(opt_level=opt_level):
    lib = relay.build(mod, target, params=params)

Out:

/workspace/python/tvm/target/target.py:282: UserWarning: Try specifying cuda arch by adding 'arch=sm_xx' to your target.

warnings.warn("Try specifying cuda arch by adding 'arch=sm_xx' to your target.")
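The warning above asks for an explicit CUDA architecture. One way to supply it is through the target string. The sketch below only builds such a string. The helper cuda_target_string is hypothetical, and sm_75 is an assumed example value that you would replace with the compute capability of your own GPU.

```python
def cuda_target_string(arch):
    """Hypothetical helper: build a target string such as 'cuda -arch=sm_75'."""
    if not arch.startswith("sm_"):
        raise ValueError("expected an arch like 'sm_75', got %r" % arch)
    return "cuda -arch=" + arch


# sm_75 is only an example value, not taken from the tutorial.
target_str = cuda_target_string("sm_75")
print(target_str)  # cuda -arch=sm_75

# With TVM installed, the string could then replace tvm.target.cuda():
# target = tvm.target.Target(target_str)
```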

Run the generated library

Now we can create a graph executor and run the module on the Nvidia GPU.

# create random input
dev = tvm.cuda()
data = np.random.uniform(-1, 1, size=data_shape).astype("float32")
# create module
module = graph_executor.GraphModule(lib["default"](dev))
# set input and parameters
module.set_input("data", data)
# run
module.run()
# get output
out = module.get_output(0, tvm.nd.empty(out_shape)).numpy()

# Print first 10 elements of output
print(out.flatten()[0:10])

Out: [0.00089283 0.00103331 0.0009094 0.00102275 0.00108751 0.00106737 0.00106262 0.00095838 0.00110792 0.00113151]
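The printed values are all close to 1/1000, which is what near-uniform scores over num_class = 1000 classes would look like. For a trained model, a typical next step is to pick the highest-scoring classes. The sketch below shows that step in plain Python on a made-up five-class score vector. The top_k helper and the probs values are illustrative assumptions, not output from the tutorial.

```python
def top_k(probs, k):
    """Return (index, score) pairs for the k largest entries, best first."""
    ranked = sorted(enumerate(probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


# Made-up scores for a five-class toy problem (not real model output).
probs = [0.05, 0.40, 0.10, 0.30, 0.15]

best = top_k(probs, 3)
print(best)  # [(1, 0.4), (3, 0.3), (4, 0.15)]
```

With the real output, one row of out (for example out[0]) would take the place of probs.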

Save and load the compiled module

We can also save the graph, library, and parameters to files and then load them back in the deployment environment.

# save the graph, lib and params into separate files
from tvm.contrib import utils

temp = utils.tempdir()
path_lib = temp.relpath("deploy_lib.tar")
lib.export_library(path_lib)
print(temp.listdir())

Out: ['deploy_lib.tar']

# load the module back.
loaded_lib = tvm.runtime.load_module(path_lib)
input_data = tvm.nd.array(data)

module = graph_executor.GraphModule(loaded_lib["default"](dev))
module.run(data=input_data)
out_deploy = module.get_output(0).numpy()

# Print first 10 elements of output
print(out_deploy.flatten()[0:10])

# check whether the output from deployed module is consistent with original one
tvm.testing.assert_allclose(out_deploy, out, atol=1e-5)

Out: [0.00089283 0.00103331 0.0009094 0.00102275 0.00108751 0.00106737 0.00106262 0.00095838 0.00110792 0.00113151]
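The final consistency check compares the two outputs within a tolerance rather than for exact equality. The sketch below spells out the usual element-wise rule behind such a check, |actual - desired| <= atol + rtol * |desired|, in plain Python. The allclose helper and its default tolerances are assumptions for illustration. The three sample values are copied from the output above, and the perturbations are made up.

```python
def allclose(actual, desired, rtol=1e-7, atol=1e-7):
    """Return True if every pair satisfies |a - d| <= atol + rtol * |d|."""
    if len(actual) != len(desired):
        raise ValueError("inputs must have the same length")
    return all(abs(a - d) <= atol + rtol * abs(d) for a, d in zip(actual, desired))


out = [0.00089283, 0.00103331, 0.0009094]

# A made-up reloaded result that differs by 1e-6 per element.
out_deploy = [v + 1e-6 for v in out]
print(allclose(out_deploy, out, atol=1e-5))  # True: 1e-6 is within atol

# A made-up result that differs by 1e-4 per element.
out_bad = [v + 1e-4 for v in out]
print(allclose(out_bad, out, atol=1e-5))     # False: 1e-4 exceeds the tolerance
```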