Building on the page table mechanism and the interrupt/exception handling mechanism, this experiment implements page fault handling and the FIFO page replacement algorithm. ucore defines the mm_struct and vma_struct data structures to describe the legal memory space needed by the applications that ucore simulates. The address range described by a vma_struct is a legal virtual address range of the program.

struct vma_struct {

struct mm_struct *vm_mm; // The mm that this vma belongs to. All vmas of one mm share the virtual memory space of the same page directory table

uintptr_t vm_start; // Starting position of virtual memory space

uintptr_t vm_end; // End of virtual memory space

uint32_t vm_flags; // Attributes of this contiguous virtual memory space (read-only, read-write, executable)

list_entry_t list_link;//This structure is used to establish linked lists

};

Each process has one mm_struct, which manages its virtual memory and physical memory:

struct mm_struct {

list_entry_t mmap_list; // Head of the doubly linked list that links all vmas belonging to this mm

struct vma_struct *mmap_cache; // Points to the vma accessed most recently. By the principle of locality, the next lookup often falls in the same vma, so checking this field first usually avoids walking the linked list and speeds up lookups by more than 30%.

pde_t *pgdir; // Page directory table used by the vmas of this mm

int map_count; // Number of vmas in this mm

void *sm_priv; // Private data for the page replacement algorithm; a void * pointer keeps it generic

};

Three functions operate on vma_struct: vma_create, insert_vma_struct, and find_vma. They create a vma, insert it into the linked list, and look one up, respectively.

Only two functions operate on mm_struct: mm_create and mm_destroy. They do what their names say.
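To illustrate how the mmap_cache field can shortcut a lookup, here is a minimal sketch of a find_vma-style search. It is not ucore's implementation: the demo_* names are hypothetical, the list is singly linked for brevity, and the range is assumed to be half-open, [vm_start, vm_end).

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Simplified stand-ins for vma_struct and mm_struct (hypothetical names). */
struct demo_vma {
    uintptr_t vm_start;          /* inclusive start of the range (assumption) */
    uintptr_t vm_end;            /* exclusive end of the range (assumption) */
    struct demo_vma *next;       /* singly linked here for brevity */
};

struct demo_mm {
    struct demo_vma *head;       /* first vma in the list */
    struct demo_vma *mmap_cache; /* most recently matched vma, or NULL */
};

/* Return the vma whose range contains addr, or NULL if there is none. */
static struct demo_vma *demo_find_vma(struct demo_mm *mm, uintptr_t addr) {
    assert(mm != NULL);
    struct demo_vma *vma = mm->mmap_cache;

    /* Fast path: the cached vma still covers addr. */
    if (vma != NULL && vma->vm_start <= addr && addr < vma->vm_end) {
        return vma;
    }

    /* Slow path: walk the list and remember the hit for next time. */
    for (vma = mm->head; vma != NULL; vma = vma->next) {
        if (vma->vm_start <= addr && addr < vma->vm_end) {
            mm->mmap_cache = vma;
            return vma;
        }
    }
    return NULL;
}
```

With two areas [0x1000, 0x2000) and [0x3000, 0x5000), a lookup of 0x3abc walks the list once and caches the second area; later lookups that fall in the same area return from the fast path.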

Page Fault exception handling

A page fault is raised when, during program execution, the CPU cannot access the physical memory that a virtual address should map to.

The main causes of abnormalities are as follows:

1. Target page frame does not exist

2. The corresponding physical page frame is not in memory

3. Access rights not met

In any of these cases a page fault is generated. The CPU stores the faulting linear address in CR2 and pushes an error code (errorCode) that describes the type of page access exception onto the interrupt stack. See lab1 for the details of this process.

In ucore, do_pgfault is the main function that handles page access exceptions. It takes the faulting address read from the CPU's CR2 register, checks whether that address lies within the range of some vma, and uses the error type in errorCode to check whether the access has the required read/write permission.

If the address is legal and the permission is correct, it allocates a free physical page and maps it, or swaps the previously swapped-out page back in, then refreshes the TLB and returns from the interrupt with iret.

If the address is not within any vma or the permission is insufficient, the access is treated as illegal.
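The permission check described above can be sketched as a small predicate over the two low bits of the error code. This is only an illustration: the DEMO_VM_* values and the function name are assumptions made for the example, not ucore's definitions.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Illustrative flag values; the real VM_* constants may differ. */
#define DEMO_VM_READ  0x1u
#define DEMO_VM_WRITE 0x2u
#define DEMO_VM_EXEC  0x4u

/* x86 page fault error code bits. */
#define DEMO_PF_PRESENT 0x1u /* 0: page not present, 1: protection violation */
#define DEMO_PF_WRITE   0x2u /* 0: read access, 1: write access */

/* Return true if the fault may be resolved by mapping or swapping in a page. */
static bool demo_fault_is_legal(uint32_t error_code, uint32_t vm_flags) {
    bool present = (error_code & DEMO_PF_PRESENT) != 0;
    bool write = (error_code & DEMO_PF_WRITE) != 0;

    if (write) {
        /* Write faults are legal only in a writable area. */
        return (vm_flags & DEMO_VM_WRITE) != 0;
    }
    if (present) {
        /* A read fault on a present page is a protection error. */
        return false;
    }
    /* A read of a missing page needs a readable or executable area. */
    return (vm_flags & (DEMO_VM_READ | DEMO_VM_EXEC)) != 0;
}
```

For example, demo_fault_is_legal(2, DEMO_VM_READ) is false because a write hit a read-only area, while demo_fault_is_legal(0, DEMO_VM_EXEC) is true.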

Page replacement mechanism

A basic principle of operating system design is that not every physical page can be swapped out. Only pages mapped into user space and accessed directly by user programs can be swapped; pages in kernel space that the kernel uses directly cannot. Kernel code is critical and must run efficiently and promptly, and if the kernel code or data used while handling a page fault were themselves swapped out, the whole kernel could crash.

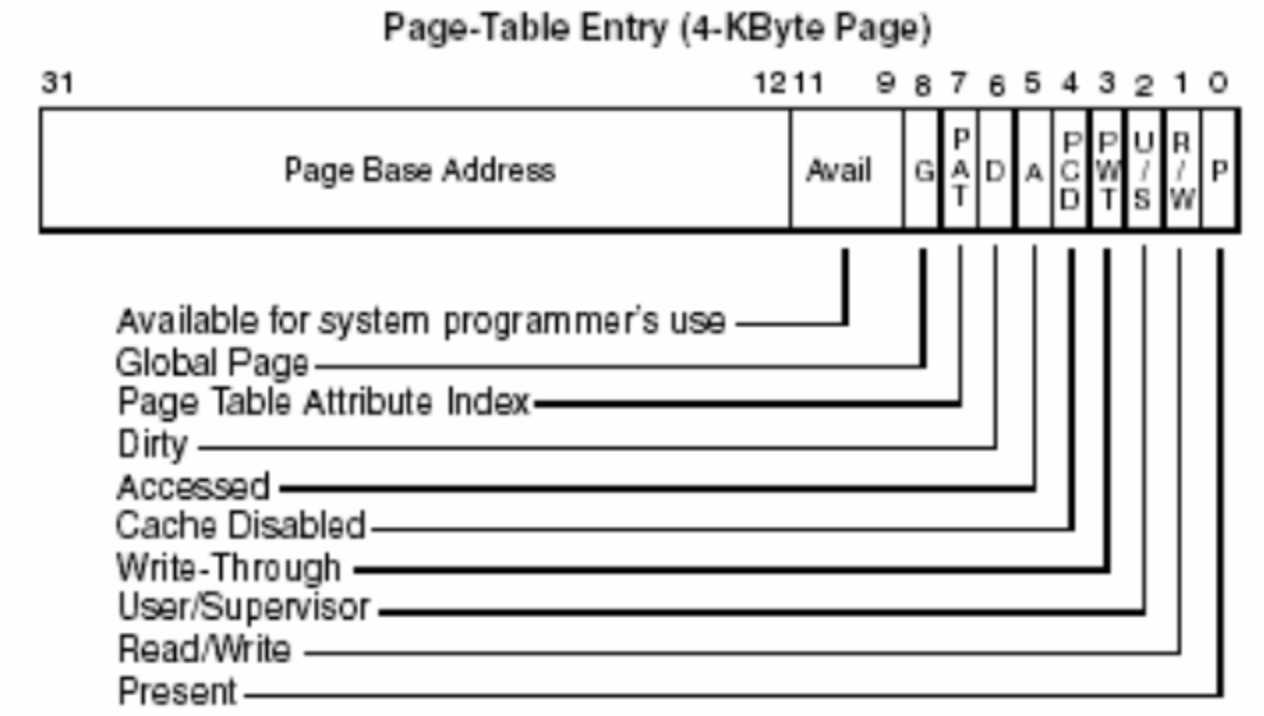

ucore makes full use of the PTE in its design. Normally a PTE consists of the page base address, bits available for software use, the global flag G, the page attribute table index flag PAT, the page size flag, the dirty bit, the accessed bit, the page-level cache disable flag, the page-level write-through flag, the user/supervisor flag, the read/write flag, and the present flag.

Note that when the present flag of a page table entry or page directory entry is cleared, the operating system can use the remaining bits of the entry to store other information, such as the location of the page on disk.

In ucore, a swapped-out page occupies 8 consecutive sectors on the hard disk (0.5 KB per sector). The lowest bit of the PTE, the present bit, must be 0 (PTE_P is clear, meaning that no virtual-to-physical mapping exists). The next 7 bits are reserved for future extensions. The high 24 bits, which originally held the page frame number, are used to record where this page starts on the hard disk.
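The layout above can be sketched with a few helpers. The demo_* names are hypothetical and only model the bit layout described in the text (24-bit offset, 7 reserved bits, present bit 0); they are not ucore's own macros.

```c
#include <assert.h>
#include <stdint.h>

#define DEMO_PTE_P            0x1u      /* present bit, must be 0 in a swap entry */
#define DEMO_MAX_OFFSET       0xFFFFFFu /* the offset must fit in 24 bits */
#define DEMO_SECTORS_PER_PAGE 8u        /* 8 sectors of 0.5 KB per page */

/* Build a swap entry: offset in the high 24 bits, low 8 bits all zero. */
static uint32_t demo_swap_entry(uint32_t offset) {
    assert(offset <= DEMO_MAX_OFFSET);
    return offset << 8;
}

/* Recover the 24-bit offset from a swap entry. */
static uint32_t demo_swap_offset(uint32_t entry) {
    assert((entry & DEMO_PTE_P) == 0); /* a present PTE is not a swap entry */
    return entry >> 8;
}

/* First disk sector occupied by the swapped-out page. */
static uint32_t demo_swap_start_sector(uint32_t entry) {
    return demo_swap_offset(entry) * DEMO_SECTORS_PER_PAGE;
}
```

An offset of 5 yields the entry 0x500, whose present bit is clear, and the page then starts at sector 40.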

When is a page swapped in? The check_mm_struct variable describes the set of all currently legal virtual memory areas. When do_pgfault is called, it determines whether the faulting address lies in a legal virtual address range represented by some vma of check_mm_struct and whether the page has been saved in the swap file on the hard disk. If so, it calls swap_in to bring the page back in.

There are two possible times to swap pages out in ucore. The first is an active strategy: the system periodically swaps some infrequently used pages out to the hard disk with swap_out, even if memory is not full. The second is a passive strategy: only when no free physical page is available for allocation does the system look for infrequently used pages and swap them out with swap_out.

In lab3, the second strategy is adopted.

Data structure of page replacement algorithm

In order to indicate that the physical Page can be swapped out, the Page data structure is extended:

struct Page {

......

list_entry_t pra_page_link;

uintptr_t pra_vaddr;

};

pra_page_link is used to build a linked list ordered by the first access time of each page. The head of the list is the page whose first access is most recent, and the tail is the page whose first access is oldest.

pra_vaddr records the starting address of the virtual page that corresponds to this physical page.
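Because the list node is embedded inside struct Page, code that walks the list needs a way to get from a node back to the page that contains it. The sketch below shows the usual offsetof-based technique with hypothetical demo_* types; it illustrates the idea and is not copied from ucore.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Minimal doubly linked list node (stand-in for list_entry_t). */
struct demo_list_entry {
    struct demo_list_entry *prev, *next;
};

/* Stand-in for struct Page with the two fields added in lab3. */
struct demo_page {
    int ref;
    struct demo_list_entry pra_page_link;
    uintptr_t pra_vaddr;
};

/* Convert a pointer to the embedded node back to its enclosing page
 * by subtracting the byte offset of the member within the struct. */
#define demo_le2page(le, member) \
    ((struct demo_page *)((char *)(le) - offsetof(struct demo_page, member)))
```

Given a page p, demo_le2page(&p.pra_page_link, pra_page_link) yields &p again, which is how a victim page can be recovered from a list node.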

Because ucore has only one kernel page table in lab3, when a page is swapped out to disk, the starting sector number of its swap location is the high 24-bit offset stored in the corresponding PTE multiplied by 8.

Following the approach of lab2, lab3 builds a framework for managing virtual memory page replacement, swap_manager, whose default implementation is fifo_swap_manager.

Code part

The alloc_pages function is changed in lab3:

//alloc_pages - call pmm->alloc_pages to allocate a continuous n*PAGESIZE memory

struct Page *

alloc_pages(size_t n) {

struct Page *page=NULL;

bool intr_flag;

while (1)

{

// Close the interrupt to avoid being interrupted when the data structure inside the physical memory manager changes during memory allocation, resulting in data errors

local_intr_save(intr_flag);

{

// Allocate n physical pages

page = pmm_manager->alloc_pages(n);

}

// Resume interrupt control bit

local_intr_restore(intr_flag);

// The loop exits if any one of the following conditions holds:

// page != NULL: the allocation succeeded

// n > 1: the request does not come from page fault handling (a page fault always asks for n = 1)

// swap_init_ok == 0: the swap mechanism has not been initialized yet

if (page != NULL || n > 1 || swap_init_ok == 0) break;

extern struct mm_struct *check_mm_struct;

//cprintf("page %x, call swap_out in alloc_pages %d\n",page, n);

// Try to swap a physical page out to the swap sectors on disk to free up a physical page

// If the swap-out succeeds, pmm_manager->alloc_pages(1) in the next iteration should be able to allocate a free physical page

swap_out(check_mm_struct, n, 0);

}

//cprintf("n %d,get page %x, No %d in alloc_pages\n",n,page,(page-pages));

return page;

}

The do_pgfault function:

int do_pgfault(struct mm_struct *mm, uint32_t error_code, uintptr_t addr) {

int ret = -E_INVAL;

//try to find a vma which include addr

// Try to query from the vma linked list block associated with mm whether there is a vma block matching the current addr linear address

struct vma_struct *vma = find_vma(mm, addr);

// The number of global page exception handling increases by 1

pgfault_num++;

//If the addr is in the range of a mm's vma?

if (vma == NULL || vma->vm_start > addr) {

// If there is no match to vma

cprintf("not valid addr %x, and can not find it in vma\n", addr);

goto failed;

}

// The page fault error code has 32 bits. Bit 0 is 0 if the page is not present and 1 if the page is present and the fault is a protection violation; bit 1 is 1 for a write access and 0 for a read; bit 2 is 1 if the fault occurred in user mode

// Masking with 3 keeps only bit 0 and bit 1

switch (error_code & 3) {

default:

// bit0 and bit1 are both 1: the page table entry is present and a write caused the fault

// Falls through to case 2, so the vma must be writable

case 2:

// bit0 is 0, bit1 is 1, the accessed mapping page table entry does not exist, and a write exception occurs

if (!(vma->vm_flags & VM_WRITE)) {

// The virtual memory space mapped by the corresponding vma block is not writable, and the permission verification fails

cprintf("do_pgfault failed: error code flag = write AND not present, but the addr's vma cannot write\n");

// Jump to failed and return directly

goto failed;

}

// If the verification is passed, it indicates that a page missing exception has occurred

break;

case 1:

// bit0 is 1, bit1 is 0, the accessed mapping page table entry exists, and a read exception (possibly an access permission exception) occurs

cprintf("do_pgfault failed: error code flag = read AND present\n");

// Jump to failed and return directly

goto failed;

case 0:

// bit0 is 0, bit1 is 0, the accessed mapping page table entry does not exist, and a read exception occurs

if (!(vma->vm_flags & (VM_READ | VM_EXEC))) {

// The virtual memory space covered by this vma is neither readable nor executable

cprintf("do_pgfault failed: error code flag = read AND not present, but the addr's vma cannot read or exec\n");

goto failed;

}

// If the verification is passed, it indicates that a page missing exception has occurred

}

// Construct the perm permission of the missing page table item that needs to be set

uint32_t perm = PTE_U;

if (vma->vm_flags & VM_WRITE) {

perm |= PTE_W;

}

// Construct the linear address of the missing page table item to be set (round down according to PGSIZE for page alignment)

addr = ROUNDDOWN(addr, PGSIZE);

ret = -E_NO_MEM;

// Page table entry pointer (PTE) for mapping

pte_t *ptep=NULL;

// Get the page table entry of addr linear address in the page table associated with mm

// The third parameter = 1 means that if the corresponding page table item does not exist, you need to create a new page table item

if ((ptep = get_pte(mm->pgdir, addr, 1)) == NULL) {

cprintf("get_pte in do_pgfault failed\n");

goto failed;

}

// If each bit of the corresponding page table item is 0, it indicates that it does not exist before. You need to set the corresponding data to map the linear address to the physical address

if (*ptep == 0) {

// In the page table pointed to by mm->pgdir, map the second-level page table entry for the linear address addr to a newly allocated physical page

if (pgdir_alloc_page(mm->pgdir, addr, perm) == NULL) {

cprintf("pgdir_alloc_page in do_pgfault failed\n");

goto failed;

}

}

else {

// If it is not all 0, it may have been swapped to the swap disk before

if(swap_init_ok) {

// If the swap disk virtual memory exchange mechanism is enabled

struct Page *page=NULL;

// Exchange the physical Page data corresponding to the linear address of addr from disk to physical memory (make the Page pointer point to the physical Page after successful exchange)

if ((ret = swap_in(mm, addr, &page)) != 0) {

// A nonzero return value from swap_in means that the swap-in failed

cprintf("swap_in in do_pgfault failed\n");

goto failed;

}

// Map the swapped-in page at addr through the second-level page table entry in the mm->pgdir page table (perm holds the permission bits of that entry)

page_insert(mm->pgdir, page, addr, perm);

// The current page is exchangeable. Add it to the management of the global virtual memory exchange manager

swap_map_swappable(mm, addr, page, 1);

page->pra_vaddr = addr;

}

else {

// If the swap disk virtual memory swap mechanism is not turned on, but it is executed so far, a problem occurs

cprintf("no swap_init_ok but ptep is %x, failed\n",*ptep);

goto failed;

}

}

// Returning 0 means that the missing page exception is handled successfully

ret = 0;

failed:

return ret;

}

pgdir_alloc_page establishes the virtual-to-physical mapping:

// In the Page table pointed to by pgdir, the secondary Page table entry corresponding to la linear address is mapped with a newly allocated physical Page

struct Page *

pgdir_alloc_page(pde_t *pgdir, uintptr_t la, uint32_t perm) {

// Allocate a new physical page for mapping la

struct Page *page = alloc_page();

if (page != NULL) { // != null allocation succeeded

// Establish the mapping relationship between la corresponding secondary page table entry (located in pgdir page table) and page physical page base address

if (page_insert(pgdir, page, la, perm) != 0) {

// Mapping failed, releasing the allocated physical page

free_page(page);

return NULL;

}

// If the swap partition function is enabled

if (swap_init_ok){

// Set the physical page of the newly mapped page to be exchangeable and manage it in the global swap manager

swap_map_swappable(check_mm_struct, la, page, 0);

// Set the virtual memory associated with this physical page

page->pra_vaddr=la;

// Verify that the newly allocated physical page is referenced exactly 1 times

assert(page_ref(page) == 1);

}

}

return page;

}

swap_fifo.c

static int

_fifo_init_mm(struct mm_struct *mm)

{

// Initialize the first in first out linked list queue

list_init(&pra_list_head);

mm->sm_priv = &pra_list_head;

return 0;

}

static int

_fifo_map_swappable(struct mm_struct *mm, uintptr_t addr, struct Page *page, int swap_in)

{

// Get the FIFO linked list associated with this mm_struct

list_entry_t *head=(list_entry_t*) mm->sm_priv;

// Get the swap linked list node corresponding to the parameter page structure

list_entry_t *entry=&(page->pra_page_link);

assert(entry != NULL && head != NULL);

//record the page access situation

/*LAB3 EXERCISE 2: YOUR CODE*/

//(1)link the most recently arrived page into the pra_list_head queue.

// Insert it right after the list head: the newest page sits at the front, and the oldest page ends up at the tail (head->prev)

list_add(head, entry);

return 0;

}

/*

* (4)_fifo_swap_out_victim: According to FIFO PRA, we should unlink the earliest arrival page in front of pra_list_head queue,

* then set *ptr_page to the address of this page.

*/

static int

_fifo_swap_out_victim(struct mm_struct *mm, struct Page ** ptr_page, int in_tick)

{

// Get the FIFO linked list associated with this mm_struct

list_entry_t *head=(list_entry_t*) mm->sm_priv;

assert(head != NULL);

assert(in_tick==0);

/* Select the victim */

/*LAB3 EXERCISE 2: YOUR CODE*/

//(1) unlink the earliest arrival page in front of pra_list_head queue

//(2) set *ptr_page to the address of this page

/* Select the tail */

// Find the previous node of the head node (the previous node of the two-way circular linked list head = the tail node of the queue)

list_entry_t *le = head->prev;

assert(head!=le);

// Get the page structure corresponding to the tail node

struct Page *p = le2page(le, pra_page_link);

// Delete the le node from the first in first out linked list queue

list_del(le);

assert(p !=NULL);

// Make *ptr_page point to the selected page

*ptr_page = p;

return 0;

}
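To see the FIFO policy in isolation, the following sketch counts page faults for a reference string with a fixed number of frames. It is a standalone simulation under simplifying assumptions (pages are plain integers, frames live in a small array, and demo_fifo_faults is a hypothetical name); it does not use ucore's list or swap interfaces.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define DEMO_MAX_FRAMES 16

/* Count page faults for a reference string under FIFO replacement. */
static size_t demo_fifo_faults(const int *refs, size_t n, size_t nframes) {
    assert(nframes > 0 && nframes <= DEMO_MAX_FRAMES);
    int frames[DEMO_MAX_FRAMES];
    size_t used = 0;   /* number of frames currently holding a page */
    size_t oldest = 0; /* index of the page that arrived first */
    size_t faults = 0;

    for (size_t i = 0; i < n; i++) {
        bool hit = false;
        for (size_t j = 0; j < used; j++) {
            if (frames[j] == refs[i]) {
                hit = true;
                break;
            }
        }
        if (hit) {
            continue; /* FIFO ignores hits: arrival order is unchanged */
        }
        faults++;
        if (used < nframes) {
            frames[used++] = refs[i]; /* a free frame is still available */
        } else {
            frames[oldest] = refs[i]; /* evict the earliest arrival */
            oldest = (oldest + 1) % nframes;
        }
    }
    return faults;
}
```

For the reference string 1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5 this gives 9 faults with 3 frames and 10 faults with 4 frames, which shows that FIFO does not always benefit from more memory.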