When a server program is updated or restarted, simply running kill -9 on the old process and then starting the new one causes the following problems:

- The old process exits immediately, even if it still has unfinished requests

- New requests that arrive before the new process is up are refused

- Even when exiting is the intent, kill -9 still interrupts the requests being processed

The immediate effect is that the service cannot serve users normally for a period of time during the restart. A brute-force shutdown may also corrupt the state of stateful services, such as databases, that the business depends on.

Therefore, when a service is restarted or re-released, the switch between the old and new instances should be seamless, with zero downtime for the service being changed.

As a microservice framework, how does go-zero help developers exit gracefully? Let's have a look.

Graceful exit

Before implementing graceful restart, the first problem to solve is how to exit gracefully.

For HTTP services, the general idea is to close the listening fd so that no new requests come in, finish processing the requests that have already arrived, and then exit.

Go's native net/http package provides Server.Shutdown(). Let's see how it is implemented:

- Set the inShutdown flag

- Close the listeners to ensure that no new requests come in

- Wait for all active connections to become idle

- Return from the function

Explain the meaning of these steps:

inShutdown

```go
func (srv *Server) ListenAndServe() error {
	if srv.shuttingDown() {
		return ErrServerClosed
	}
	....
	// Actually listen on the port and create a listener
	ln, err := net.Listen("tcp", addr)
	if err != nil {
		return err
	}
	// Hand the listener to Serve, which does the actual request handling
	return srv.Serve(tcpKeepAliveListener{ln.(*net.TCPListener)})
}

func (s *Server) shuttingDown() bool {
	return atomic.LoadInt32(&s.inShutdown) != 0
}
```

ListenAndServe is the function that starts an HTTP Server. The first thing it does is check whether the Server has already been shut down.

inShutdown is an atomic variable. A non-zero value means the Server is shutting down or has been shut down.
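
The flag pattern itself is small enough to try in isolation. The following is only a sketch: the `service` type, its methods and `errClosed` are hypothetical names made up for this illustration, not part of net/http.

```go
package main

import (
	"errors"
	"fmt"
	"sync/atomic"
)

// errClosed is a hypothetical stand-in for http.ErrServerClosed.
var errClosed = errors.New("service closed")

// service is a hypothetical type used only for this illustration.
type service struct {
	inShutdown int32 // accessed atomically; non-zero means shutting down
}

func (s *service) shuttingDown() bool {
	return atomic.LoadInt32(&s.inShutdown) != 0
}

// start refuses to run once shutdown has begun.
func (s *service) start() error {
	if s.shuttingDown() {
		return errClosed
	}
	return nil
}

// shutdown sets the flag; it is safe to call from any goroutine.
func (s *service) shutdown() {
	atomic.StoreInt32(&s.inShutdown, 1)
}

func main() {
	s := &service{}
	fmt.Println("before shutdown:", s.start())
	s.shutdown()
	fmt.Println("after shutdown:", s.start())
}
```

Because the flag is read and written atomically, the check in `start` needs no mutex even when `shutdown` is called from another goroutine.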

listeners

```go
func (srv *Server) Serve(l net.Listener) error {
	...
	// Add the injected listener to the internal map,
	// which makes it easier to control connections from this listener later
	if !srv.trackListener(&l, true) {
		return ErrServerClosed
	}
	defer srv.trackListener(&l, false)
	...
}
```

Serve registers the listener in the internal listeners map. Shutdown takes the listeners directly from that map and calls Close() on each one. Once a listener is closed, no new connections are accepted.
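
To make the registry idea concrete, here is a simplified sketch. `listenerSet`, `track`, `closeAll` and `demoCloseAll` are hypothetical names for this illustration; the real bookkeeping inside net/http differs in detail.

```go
package main

import (
	"fmt"
	"net"
	"sync"
)

// listenerSet is a hypothetical, simplified registry of open listeners.
type listenerSet struct {
	mu        sync.Mutex
	closed    bool
	listeners map[net.Listener]struct{}
}

// track adds or removes a listener. Adding fails once closeAll has run.
func (s *listenerSet) track(ln net.Listener, add bool) bool {
	s.mu.Lock()
	defer s.mu.Unlock()
	if !add {
		delete(s.listeners, ln)
		return true
	}
	if s.closed {
		return false
	}
	if s.listeners == nil {
		s.listeners = make(map[net.Listener]struct{})
	}
	s.listeners[ln] = struct{}{}
	return true
}

// closeAll closes every tracked listener and returns the first error seen.
func (s *listenerSet) closeAll() error {
	s.mu.Lock()
	defer s.mu.Unlock()
	s.closed = true
	var first error
	for ln := range s.listeners {
		if err := ln.Close(); err != nil && first == nil {
			first = err
		}
		delete(s.listeners, ln)
	}
	return first
}

// demoCloseAll reports whether Accept fails after closeAll and whether a
// late registration is rejected.
func demoCloseAll() (bool, bool, error) {
	ln, err := net.Listen("tcp", "127.0.0.1:0")
	if err != nil {
		return false, false, err
	}
	var set listenerSet
	set.track(ln, true)
	if err := set.closeAll(); err != nil {
		return false, false, err
	}
	_, acceptErr := ln.Accept() // returns at once: the socket is closed
	lateRejected := !set.track(ln, true)
	return acceptErr != nil, lateRejected, nil
}

func main() {
	acceptFailed, lateRejected, err := demoCloseAll()
	fmt.Println(acceptFailed, lateRejected, err)
}
```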

closeIdleConns

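To put it simply: close every connection recorded in the current Server that is already idle, and report whether all connections are now closed. Shutdown calls it repeatedly until no active connection is left or its context expires.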
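
The polling behaviour can be sketched roughly as follows. This is not the net/http source: `connTracker`, `closeIdle`, `drain` and `demoDrain` are hypothetical names, and a connection is modelled as nothing more than a busy flag.

```go
package main

import (
	"context"
	"fmt"
	"sync"
	"time"
)

// connTracker is a hypothetical stand-in for the Server's connection table.
type connTracker struct {
	mu    sync.Mutex
	conns map[int]bool // connection id -> still handling a request?
}

func (t *connTracker) set(id int, busy bool) {
	t.mu.Lock()
	defer t.mu.Unlock()
	if t.conns == nil {
		t.conns = make(map[int]bool)
	}
	t.conns[id] = busy
}

// closeIdle drops ("closes") every idle connection and reports whether
// no connection is left.
func (t *connTracker) closeIdle() bool {
	t.mu.Lock()
	defer t.mu.Unlock()
	for id, busy := range t.conns {
		if !busy {
			delete(t.conns, id)
		}
	}
	return len(t.conns) == 0
}

// drain polls closeIdle until everything is closed or ctx expires.
func (t *connTracker) drain(ctx context.Context, interval time.Duration) error {
	ticker := time.NewTicker(interval)
	defer ticker.Stop()
	for {
		if t.closeIdle() {
			return nil
		}
		select {
		case <-ctx.Done():
			return ctx.Err()
		case <-ticker.C:
		}
	}
}

// demoDrain runs drain while connection 1 stays busy for busyMs
// milliseconds, giving up after timeoutMs milliseconds.
func demoDrain(busyMs, timeoutMs int) error {
	t := &connTracker{}
	t.set(1, true)  // in the middle of a request
	t.set(2, false) // idle keep-alive connection
	go func() {
		time.Sleep(time.Duration(busyMs) * time.Millisecond)
		t.set(1, false) // request finished, connection becomes idle
	}()
	ctx, cancel := context.WithTimeout(context.Background(),
		time.Duration(timeoutMs)*time.Millisecond)
	defer cancel()
	return t.drain(ctx, 5*time.Millisecond)
}

func main() {
	fmt.Println("request finishes in time:", demoDrain(20, 1000))
	fmt.Println("request too slow:", demoDrain(1000, 50))
}
```

The second call shows why the context matters: without a deadline, one stuck request would keep the shutdown waiting forever.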

close

```go
func (srv *Server) Serve(l net.Listener) error {
	...
	for {
		rw, err := l.Accept()
		// Accept returns an error at this point, because the listener was closed earlier
		if err != nil {
			select {
			// Another flag: doneChan
			case <-srv.getDoneChan():
				return ErrServerClosed
			default:
			}
		}
	}
}
```

Shutdown closes doneChan right after it closes the listeners. When Accept then fails, Serve sees that the channel returned by getDoneChan is closed and returns ErrServerClosed.
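
The interplay between a closed listener and a done channel can be reproduced with a bare TCP accept loop. The sketch below uses hypothetical names (`server`, `serve`, `shutdown`, `errServerClosed`, `demoServe`) and closes the channel before the listener so that the accept loop can never observe the error without the flag.

```go
package main

import (
	"errors"
	"fmt"
	"net"
	"sync"
)

// errServerClosed is a hypothetical stand-in for http.ErrServerClosed.
var errServerClosed = errors.New("server closed")

type server struct {
	ln       net.Listener
	doneChan chan struct{}
	once     sync.Once
}

func newServer() (*server, error) {
	ln, err := net.Listen("tcp", "127.0.0.1:0")
	if err != nil {
		return nil, err
	}
	return &server{ln: ln, doneChan: make(chan struct{})}, nil
}

// serve accepts connections until the listener fails. If the failure was
// caused by shutdown, it reports errServerClosed instead of the raw error.
func (s *server) serve() error {
	for {
		conn, err := s.ln.Accept()
		if err != nil {
			select {
			case <-s.doneChan:
				return errServerClosed
			default:
				return err
			}
		}
		conn.Close() // a real server would handle the connection here
	}
}

// shutdown marks the server as done, then closes the listener so that a
// blocked Accept returns.
func (s *server) shutdown() {
	s.once.Do(func() {
		close(s.doneChan)
		s.ln.Close()
	})
}

// demoServe starts serve, shuts it down and returns what serve reported.
func demoServe() error {
	s, err := newServer()
	if err != nil {
		return err
	}
	result := make(chan error, 1)
	go func() { result <- s.serve() }()
	s.shutdown()
	return <-result
}

func main() {
	fmt.Println("serve returned:", demoServe())
}
```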

To sum up: Shutdown terminates the service gracefully without interrupting connections that are still active.
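
A quick way to see this behaviour is to call Shutdown while a request is still being handled. The sketch below is only an experiment under assumptions: the `/slow` route, the 200 ms delay and the helper name `runAndShutdown` are invented for the demonstration.

```go
package main

import (
	"context"
	"errors"
	"fmt"
	"io"
	"net"
	"net/http"
	"time"
)

// runAndShutdown serves on a random local port, calls Shutdown while one
// slow request is in flight, and returns the response body plus whether
// Serve ended with http.ErrServerClosed.
func runAndShutdown() (string, bool, error) {
	ln, err := net.Listen("tcp", "127.0.0.1:0")
	if err != nil {
		return "", false, err
	}
	started := make(chan struct{})
	mux := http.NewServeMux()
	mux.HandleFunc("/slow", func(w http.ResponseWriter, r *http.Request) {
		close(started)                     // the request is now in flight
		time.Sleep(200 * time.Millisecond) // simulate work
		io.WriteString(w, "done")
	})
	srv := &http.Server{Handler: mux}
	serveErr := make(chan error, 1)
	go func() { serveErr <- srv.Serve(ln) }()

	type result struct {
		body string
		err  error
	}
	respCh := make(chan result, 1)
	go func() {
		resp, err := http.Get("http://" + ln.Addr().String() + "/slow")
		if err != nil {
			respCh <- result{err: err}
			return
		}
		defer resp.Body.Close()
		b, err := io.ReadAll(resp.Body)
		respCh <- result{string(b), err}
	}()

	select {
	case <-started:
	case res := <-respCh: // the request ended before the handler ran
		return res.body, false, res.err
	}
	ctx, cancel := context.WithTimeout(context.Background(), 5*time.Second)
	defer cancel()
	if err := srv.Shutdown(ctx); err != nil {
		return "", false, err
	}
	res := <-respCh
	if res.err != nil {
		return "", false, res.err
	}
	return res.body, errors.Is(<-serveErr, http.ErrServerClosed), nil
}

func main() {
	body, closedCleanly, err := runAndShutdown()
	fmt.Println(body, closedCleanly, err)
}
```

The context passed to Shutdown bounds how long the wait may last. If it expires first, Shutdown returns the context's error and the remaining connections are left for the caller to deal with.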

But once the service is running, how does the program know that it is about to be stopped? When that happens, how is the program notified so that it can call Shutdown? Next, let's look at system signal notification.

Service interruption

For this we must rely on the signals provided by the OS itself. In Go, Notify in the os/signal package provides system signal notification.

github.com/tal-tech/go-zero/blob/m...

```go
func init() {
	go func() {
		var profiler Stopper

		signals := make(chan os.Signal, 1)
		signal.Notify(signals, syscall.SIGUSR1, syscall.SIGUSR2, syscall.SIGTERM)

		for {
			v := <-signals
			switch v {
			case syscall.SIGUSR1:
				dumpGoroutines()
			case syscall.SIGUSR2:
				if profiler == nil {
					profiler = StartProfile()
				} else {
					profiler.Stop()
					profiler = nil
				}
			case syscall.SIGTERM:
				// This is where graceful shutdown is performed
				gracefulStop(signals)
			default:
				logx.Error("Got unregistered signal:", v)
			}
		}
	}()
}
```

SIGUSR1 -> dump the goroutine status, which is very useful in error analysis

SIGUSR2 -> turn all metric monitoring on or off, so you control the duration of profiling yourself

SIGTERM -> actually start gracefulStop and shut down gracefully
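
Outside go-zero, the same wiring can be reproduced with only the standard library. The sketch below is not go-zero code: `serveUntilSignal` and `demoSelfSignal` are hypothetical helpers, and the demo sends SIGTERM to its own process to stand in for whatever stops the service, which assumes a Unix-like system where `syscall.Kill` is available.

```go
package main

import (
	"context"
	"errors"
	"fmt"
	"net"
	"net/http"
	"os"
	"os/signal"
	"syscall"
	"time"
)

// serveUntilSignal serves on ln until a signal arrives on sigs, then calls
// Shutdown with the given timeout. It reports whether Serve stopped with
// http.ErrServerClosed, that is, through a graceful shutdown.
func serveUntilSignal(srv *http.Server, ln net.Listener, sigs <-chan os.Signal, timeout time.Duration) (bool, error) {
	serveErr := make(chan error, 1)
	go func() { serveErr <- srv.Serve(ln) }()

	select {
	case err := <-serveErr:
		return false, err // Serve failed before any signal arrived
	case <-sigs:
	}

	ctx, cancel := context.WithTimeout(context.Background(), timeout)
	defer cancel()
	if err := srv.Shutdown(ctx); err != nil {
		return false, err
	}
	return errors.Is(<-serveErr, http.ErrServerClosed), nil
}

// demoSelfSignal registers for SIGTERM, sends that signal to the current
// process and lets serveUntilSignal react to it.
func demoSelfSignal() (bool, error) {
	sigs := make(chan os.Signal, 1)
	signal.Notify(sigs, syscall.SIGTERM)
	defer signal.Stop(sigs)

	ln, err := net.Listen("tcp", "127.0.0.1:0")
	if err != nil {
		return false, err
	}
	// Stand-in for an external stop request (Unix-only call).
	if err := syscall.Kill(syscall.Getpid(), syscall.SIGTERM); err != nil {
		return false, err
	}
	return serveUntilSignal(&http.Server{}, ln, sigs, 5*time.Second)
}

func main() {
	graceful, err := demoSelfSignal()
	fmt.Println("graceful:", graceful, "err:", err)
}
```

The signal channel is buffered with capacity 1 because the os/signal package does not block when delivering, so a signal sent to an unbuffered channel with no ready receiver would be dropped.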

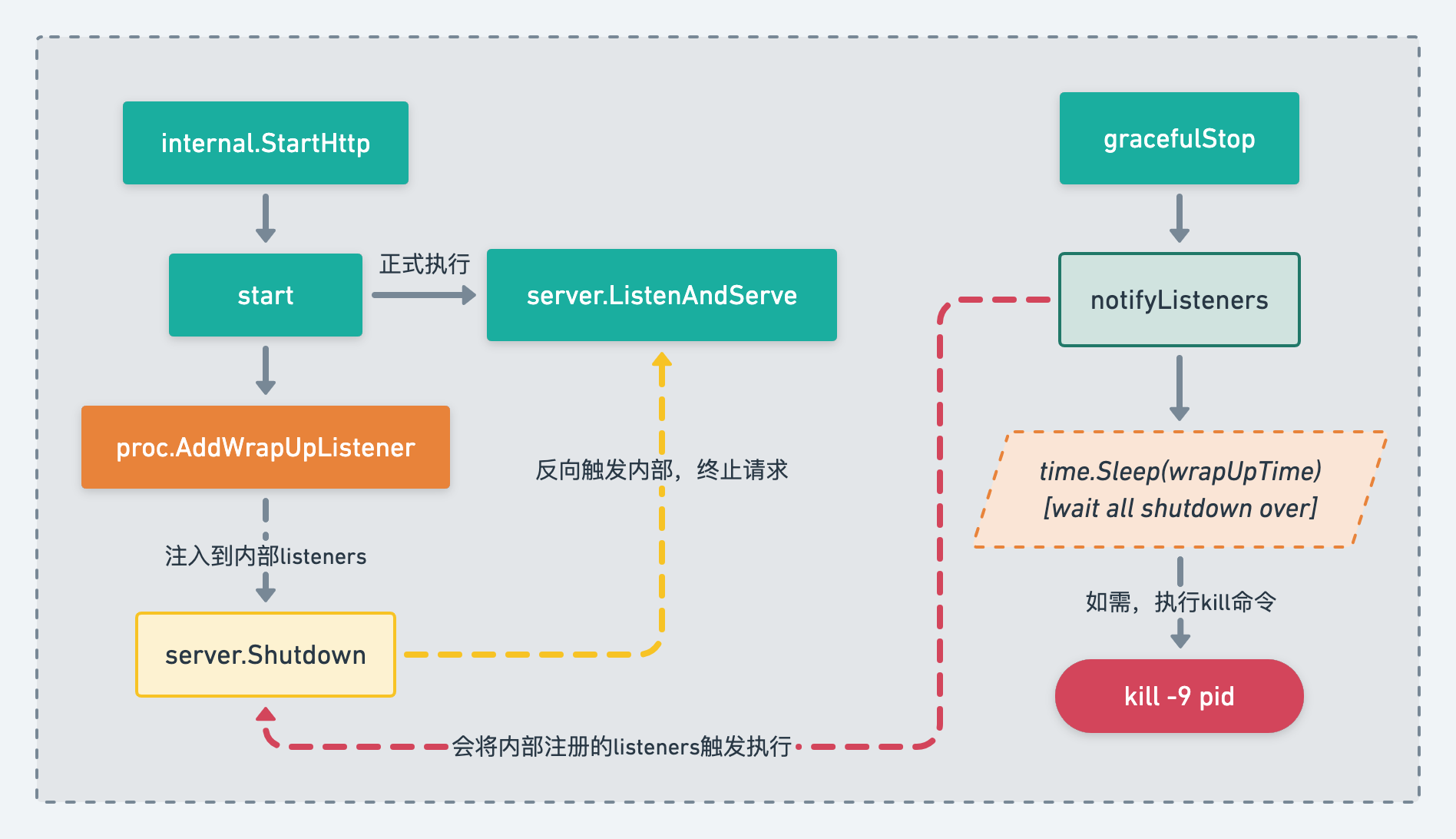

The process of gracefulStop is as follows:

- Stop listening for signals. The process is about to exit, so there is no need to monitor them again

- Wrap up: close out the requests currently being served and their resources

- time.Sleep(), to wait for resource processing to complete before closing

- Shut down and notify exit

- If the main goroutine still has not exited, actively send SIGKILL to force the process to exit

In this way, the service stops accepting new requests, active requests are allowed to finish, and resources such as database connections are closed. If this takes longer than the timeout, the process is forced to exit.
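
The ordering of these steps can be sketched as below. This is not the go-zero implementation: the `gracefulStop` signature, the callback slices and `demoOrder` are assumptions made for illustration, the signal unregistration step is left out, and the forced exit is replaced by a plain callback so the sketch can run without killing itself.

```go
package main

import (
	"fmt"
	"time"
)

// gracefulStop is a hypothetical sketch of the step ordering: run the
// wrap-up callbacks, wait, run the shutdown callbacks, wait for the rest
// of the grace period, then force the exit.
func gracefulStop(wrapUp, shutdown []func(), wrapUpTime, forceAfter time.Duration, forceQuit func()) {
	for _, fn := range wrapUp {
		fn()
	}
	time.Sleep(wrapUpTime) // give in-flight work time to finish

	for _, fn := range shutdown {
		fn()
	}
	time.Sleep(forceAfter - wrapUpTime) // remaining grace period

	// Still running after the grace period: give up and force the exit.
	forceQuit()
}

// demoOrder runs gracefulStop with recording callbacks and returns the
// order in which the steps ran.
func demoOrder() []string {
	var steps []string
	record := func(name string) func() {
		return func() { steps = append(steps, name) }
	}
	gracefulStop(
		[]func(){record("wrap up")},
		[]func(){record("shutdown")},
		10*time.Millisecond,
		30*time.Millisecond,
		record("force quit"),
	)
	return steps
}

func main() {
	fmt.Println(demoOrder())
}
```

In a real process the main goroutine would normally return during the grace period, so the forced exit only runs when something is stuck.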

Overall process

At present, all our Go programs run in Docker containers. During a release, k8s sends a SIGTERM signal to the container, and the program inside the container receives the signal and starts executing Shutdown.

That completes the whole graceful shutdown process.

There is also smooth restart, which depends on k8s. The basic process is as follows:

- The new pod is started before the old pod exits

- The old pod continues to process the requests it has already accepted and accepts no new ones

- The new pod accepts and processes new requests

- The old pod exits

In this way the whole service is restarted smoothly. And if the new pod fails to start, the old pod can keep providing service without affecting the current online traffic.

Project address

You are welcome to use go-zero and star the project to support us!

Wechat communication group

Follow the "micro service practice" official account and click "exchange group" to get the community group's QR code.