1. Create resources from the command line, observe what Kubernetes does after the command runs, and work out where Pod names come from by inspecting the resources.

When you create resources this way, the Deployment controller does not manage Pods directly. It creates a ReplicaSet, and the ReplicaSet controller creates and maintains the Pods. The following example shows what k8s does after the command runs; the NAME column of each resource reveals the naming pattern.

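Run a deployment. The transcripts in this article come from an older kubectl release in which `kubectl run` created a Deployment. Since kubectl v1.18, `kubectl run` creates only a bare Pod and no longer accepts `--replicas`; on current releases, `kubectl create deployment test01 --image=nginx:latest --replicas=2` is the equivalent.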

[root@master ~]# kubectl run test01 --image=nginx:latest --replicas=2 #Run an nginx Deployment with 2 replicas

[root@master ~]# kubectl get deployments #View the Deployment

NAME READY UP-TO-DATE AVAILABLE AGE

test01 2/2 2 2 48s

#The Deployment's NAME is test01, the name we specified

[root@master ~]# kubectl get replicasets #View the ReplicaSet ("replicasets" can be abbreviated as "rs")

NAME DESIRED CURRENT READY AGE

test01-799bb6cd4d 2 2 2 119s

#The ReplicaSet's NAME is the Deployment's NAME plus a hash of the Pod template (the pod-template-hash)

[root@master ~]# kubectl get pod #View the Pods

NAME READY STATUS RESTARTS AGE

test01-799bb6cd4d-d88wd 1/1 Running 0 3m18s

test01-799bb6cd4d-x8wpm 1/1 Running 0 3m18s

#Each Pod's NAME is the ReplicaSet's NAME plus a random 5-character suffix

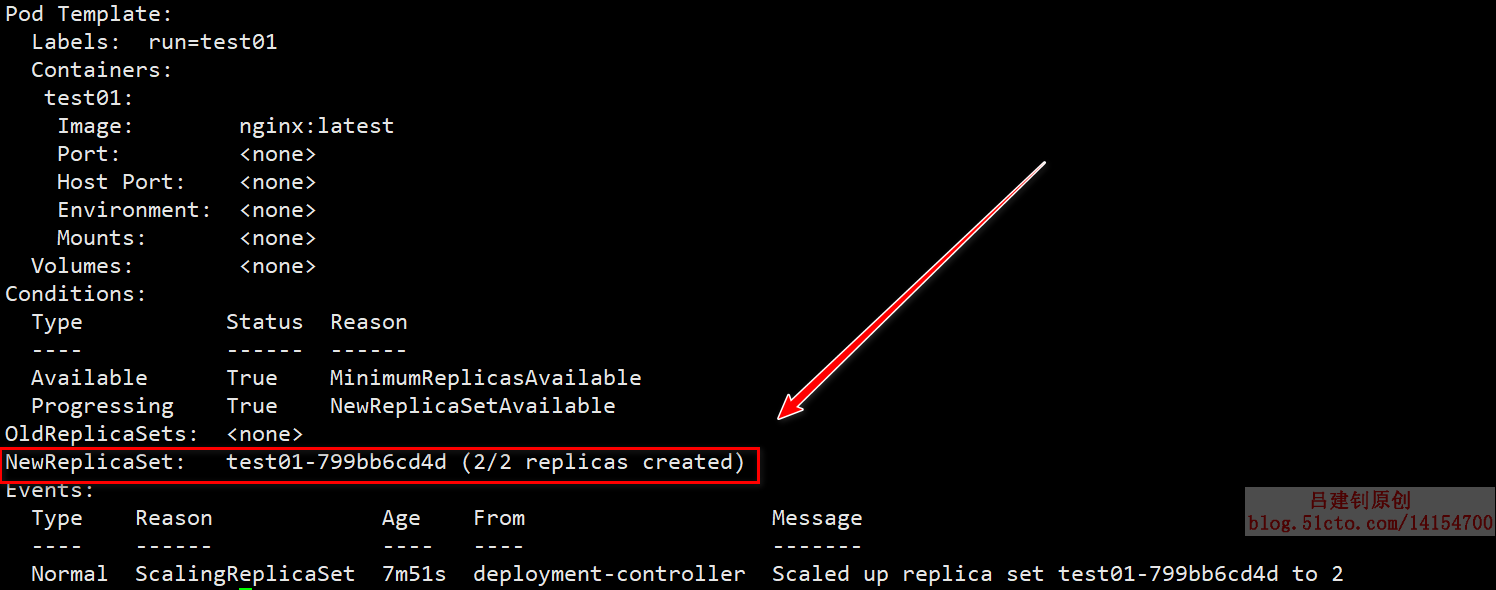
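The naming rule above can be expressed as a small string operation. The shell sketch below is a hypothetical helper (`pod_owner_names` is not part of kubectl); it assumes the Pod was created by a Deployment, so its name has the form deployment-hash-suffix, and it strips the two right-most hyphen-separated parts. Stripping from the right keeps Deployment names that themselves contain hyphens intact.

```shell
# Hypothetical helper (not a kubectl feature): split a Deployment-managed Pod
# name of the form <deployment>-<pod-template-hash>-<random-suffix> into its
# ReplicaSet name and Deployment name, printed on one line.
pod_owner_names() {
    pod="$1"
    rs="${pod%-*}"      # drop the random suffix:     test01-799bb6cd4d
    deploy="${rs%-*}"   # drop the pod-template-hash: test01
    printf '%s %s\n' "$rs" "$deploy"
}
```

For example, `pod_owner_names test01-799bb6cd4d-d88wd` prints `test01-799bb6cd4d test01`. String parsing only reflects the naming convention; the authoritative link is the Pod's `metadata.ownerReferences` field, which `kubectl get pod test01-799bb6cd4d-d88wd -o jsonpath='{.metadata.ownerReferences[0].name}'` should print as the ReplicaSet name.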

You can also view the details of each resource object to confirm this:

[root@master ~]# kubectl describe deployments test01 #View details of test01

The output shows that the Deployment created a new ReplicaSet (the NewReplicaSet field):

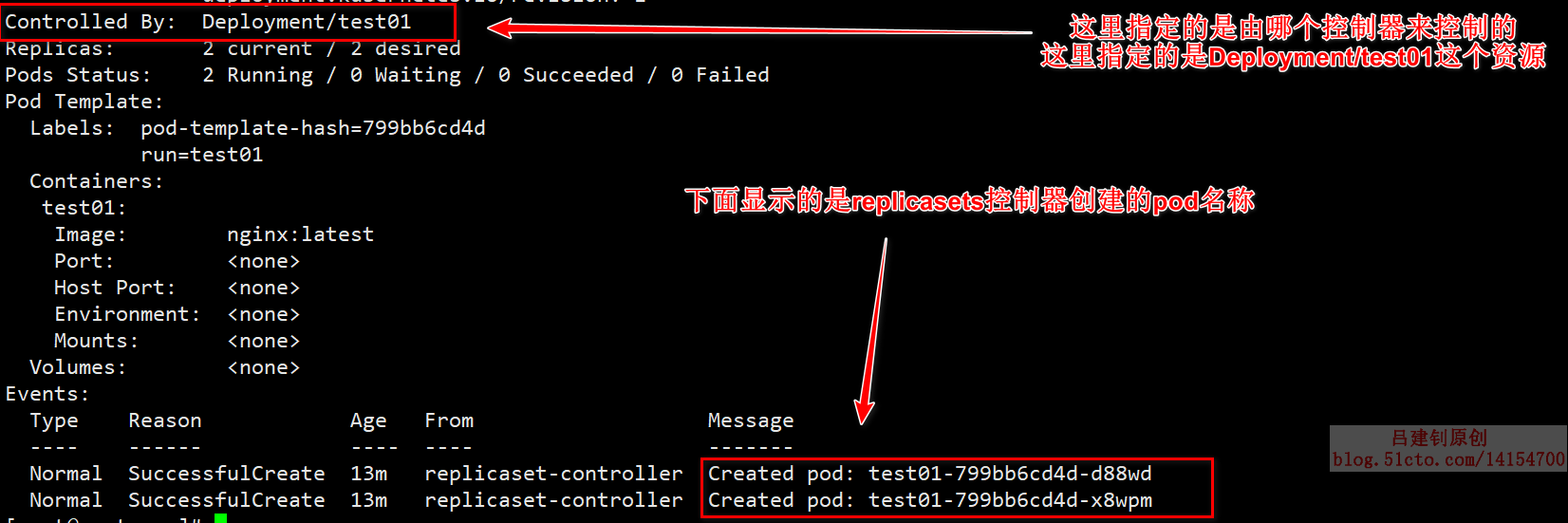

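The ReplicaSet's details can be viewed the same way with `kubectl describe replicasets test01-799bb6cd4d`; its Controlled By field names the owning Deployment, Deployment/test01.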

2. What is required for clients to access a deployed service, and what are the key points?

For clients outside the cluster to reach a service deployed on k8s, you need to create a Service resource object. In a bare cluster like this one, without a cloud load balancer, the usual choice is type NodePort. A NodePort Service opens the same port on every node, and kube-proxy on each node sets up forwarding from that port to the Service's Pods, so clients reach the application at any node's IP address plus the node port.

The implementation process is as follows:

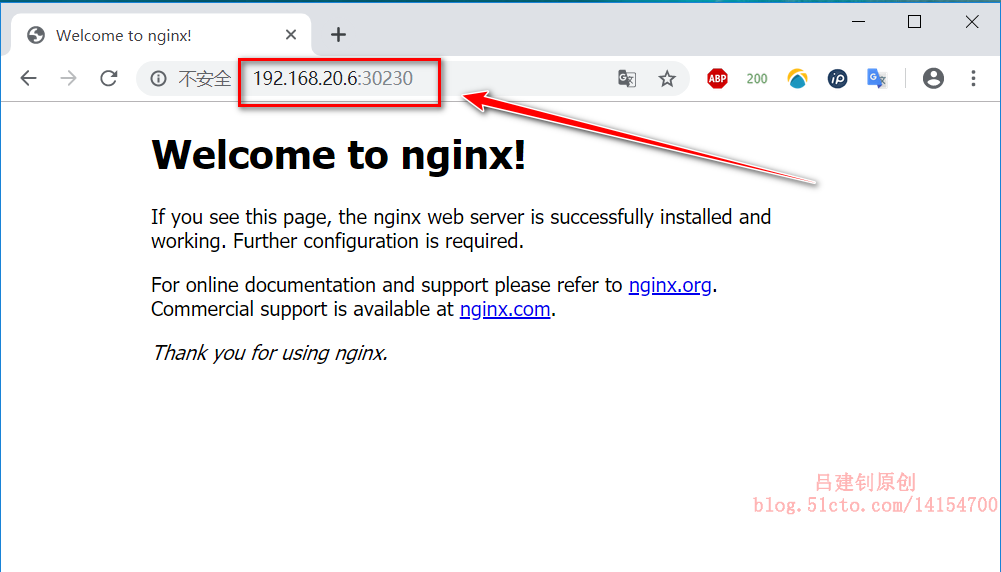

[root@master ~]# kubectl run test02 --image=nginx:latest --port=80 --replicas=2 #Create a Deployment from the nginx image; --port=80 declares container port 80 (it does not publish the port on the host)

[root@master ~]# kubectl expose deployment test02 --name=web01 --port=80 --type=NodePort #Create a NodePort Service named web01 that forwards Service port 80 to port 80 of the test02 Pods

[root@master ~]# kubectl get svc web01 #View the web01 Service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

web01 NodePort 10.103.223.105 <none> 80:30230/TCP 86s

#Service port 80 is mapped to node port 30230, which is open on every node in the cluster

A client that accesses port 30230 on any node in the k8s cluster reaches the nginx homepage served by test02.
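The PORT(S) column has the form service-port:node-port/protocol, and the node port is allocated from the 30000-32767 range by default. The sketch below uses two hypothetical helpers to pull the node port out of that column and build the URL a client would use; it assumes a single-port Service (for several ports it returns the first node port).

```shell
# Hypothetical helper: extract the node port from the PORT(S) column of
# `kubectl get svc`, e.g. "80:30230/TCP" -> "30230".
nodeport_from_ports() {
    ports="$1"
    np="${ports#*:}"   # strip "<service-port>:" -> "30230/TCP"
    np="${np%%/*}"     # strip "/<protocol>" and anything after -> "30230"
    printf '%s\n' "$np"
}

# Hypothetical helper: build the URL a client uses to reach the Service
# through a given node IP ($1) and PORT(S) value ($2).
nodeport_url() {
    printf 'http://%s:%s/\n' "$1" "$(nodeport_from_ports "$2")"
}
```

Outside of a sketch, `kubectl get svc web01 -o jsonpath='{.spec.ports[0].nodePort}'` reads the node port directly, without parsing the table output.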

3. Set up a private registry. Build a custom image based on nginx whose default page reads "hello k8s", tagged as version 1.10, and run a Deployment with 4 replicas from this image.

#Run a private registry on the master and configure every node in the cluster to trust it

[root@master ~]# docker run -tid --name registry -p 5000:5000 --restart always registry:latest

[root@master ~]# vim /usr/lib/systemd/system/docker.service #Edit the Docker unit file and add --insecure-registry to the ExecStart line

ExecStart=/usr/bin/dockerd -H unix:// --insecure-registry 192.168.20.6:5000

#Copy the modified unit file to the other nodes in the k8s cluster

[root@master ~]# scp /usr/lib/systemd/system/docker.service root@node01:/usr/lib/systemd/system/

[root@master ~]# scp /usr/lib/systemd/system/docker.service root@node02:/usr/lib/systemd/system/

#On every node whose unit file was changed, reload systemd and restart Docker so the change takes effect

[root@master ~]# systemctl daemon-reload

[root@master ~]# systemctl restart docker
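As an alternative to editing the unit file, which a Docker package upgrade may overwrite, dockerd also reads /etc/docker/daemon.json, where `insecure-registries` is the equivalent of `--insecure-registry`. The sketch below writes such a file; the function name and its optional target-path argument are hypothetical, and it overwrites any existing daemon.json, so merge by hand if one already exists. Use only one of the two methods: dockerd refuses to start when the same option is set both as a flag and in daemon.json.

```shell
# Hypothetical helper: write a Docker daemon config that trusts one
# plain-HTTP (insecure) registry. The target defaults to /etc/docker/daemon.json.
# Caution: any existing file at the target path is overwritten.
write_insecure_registry_config() {
    registry="$1"
    target="${2:-/etc/docker/daemon.json}"
    mkdir -p "$(dirname "$target")"
    cat > "$target" <<EOF
{
  "insecure-registries": ["$registry"]
}
EOF
}
```

On each node, run `write_insecure_registry_config 192.168.20.6:5000` as root, then `systemctl restart docker`.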

#Make a custom image

[root@master ~]# vim Dockerfile

FROM nginx:latest

ADD index.html /usr/share/nginx/html/

[root@master ~]# echo "hello k8s" > index.html

[root@master ~]# docker build -t 192.168.20.6:5000/nginx:1.10 .

[root@master ~]# docker push 192.168.20.6:5000/nginx:1.10 #Push to the private registry
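To confirm the push reached the registry, you can query the registry's HTTP API (v2): GET /v2/_catalog lists repositories, and GET /v2/NAME/tags/list lists the tags of one repository. The sketch below is a hypothetical helper that only builds the tags URL; it assumes plain HTTP, matching the insecure registry configured above.

```shell
# Hypothetical helper: build the Docker Registry HTTP API v2 URL that lists
# the tags of one repository, e.g. 192.168.20.6:5000 + nginx ->
# http://192.168.20.6:5000/v2/nginx/tags/list
registry_tags_url() {
    printf 'http://%s/v2/%s/tags/list\n' "$1" "$2"
}
```

With it, `curl -s "$(registry_tags_url 192.168.20.6:5000 nginx)"` should list 1.10 among the tags of the nginx repository.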

#Run a Deployment with 4 replicas from the custom image

[root@master ~]# kubectl run test03 --image=192.168.20.6:5000/nginx:1.10 --port=80 --replicas=4

[root@master ~]# kubectl get pod -o wide | grep test03 | awk '{print $6}' #List the IP addresses of the 4 replicas (column 6 of the wide output)

10.244.2.20

10.244.1.18

10.244.1.19

10.244.2.21
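The `grep test03` filter matches anywhere in the line, so it would also catch a Pod named, say, mytest03. The sketch below is a hypothetical helper that matches on the NAME column instead. Like the original command, it assumes the IP is the 6th whitespace-separated field; on newer kubectl releases that stops holding once a Pod has restarted, because RESTARTS then reads like "1 (3m ago)". In that case `kubectl get pod -l run=test03 -o jsonpath='{range .items[*]}{.status.podIP}{"\n"}{end}'` is more robust, assuming the run=test03 label that this older `kubectl run` applies.

```shell
# Hypothetical helper: read `kubectl get pod -o wide` output on stdin and print
# the IP column (field 6) of Pods whose NAME starts with "<prefix>-".
# NR > 1 skips the header row.
pod_ips_for() {
    awk -v p="$1-" 'NR > 1 && index($1, p) == 1 { print $6 }'
}
```

Usage: `kubectl get pod -o wide | pod_ips_for test03`.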

#Next, access these IP addresses from inside the k8s cluster to check what each replica serves:

#Access test

[root@master ~]# curl 10.244.2.21

hello k8s

[root@master ~]# curl 10.244.2.20

hello k8s

4. Update and scale out the Deployment above: increase the replicas to 6, and switch to a new version of the custom image whose default page reads "Hello update".

#Build the updated image and push it to the private registry

[root@master ~]# echo "Hello update" > index.html

[root@master ~]# docker build -t 192.168.20.6:5000/nginx:2.20 .

[root@master ~]# docker push 192.168.20.6:5000/nginx:2.20

#Switch the Deployment to the new image, then scale it out to 6 replicas

[root@master ~]# kubectl set image deployment test03 test03=192.168.20.6:5000/nginx:2.20 && kubectl scale deployment test03 --replicas=6

#View the IP addresses of the Pods

[root@master ~]# kubectl get pod -o wide | grep test03 | awk '{print $6}'

10.244.2.24

10.244.2.22

10.244.1.21

10.244.2.23

10.244.1.22

10.244.1.20

#Access test

[root@master ~]# curl 10.244.1.20

Hello update

[root@master ~]# curl 10.244.1.22

Hello update
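The access test above spot-checks two of the six Pods. To check all of them, you can first wait for the rollout to finish with `kubectl rollout status deployment test03`, then feed every response into a check such as the hypothetical `all_pages_are` function below, which succeeds only if at least one line was read and every line equals the expected text.

```shell
# Hypothetical helper: return success only if stdin has at least one line and
# every line equals the expected page text given as $1.
all_pages_are() {
    expected="$1"
    count=0
    # "|| [ -n "$line" ]" also handles a final line without a trailing newline
    while IFS= read -r line || [ -n "$line" ]; do
        [ "$line" = "$expected" ] || return 1
        count=$((count + 1))
    done
    [ "$count" -gt 0 ]
}
```

For example: `for ip in $(kubectl get pod -o wide | grep test03 | awk '{print $6}'); do curl -s "$ip"; done | all_pages_are "Hello update" && echo "all replicas updated"`.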

5. Roll back the Deployment, then check the page content and the replica count of the resulting version.

[root@master ~]# kubectl rollout undo deployment test03 #Performing a rollback operation

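[root@master ~]# kubectl get pod -o wide | grep test03 | wc -l #Check whether the number of replicas is still 6. A rollback restores the previous Pod template (image 1.10) but not the replica count, so it stays at 6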

6
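Right after a rollback, the replaced Pods can still be listed as Terminating for a short while, so `grep test03 | wc -l` may briefly report more than 6. The sketch below is a hypothetical helper that counts only Running Pods whose NAME starts with the given prefix; it assumes STATUS is the 3rd column, as in the `kubectl get pod` output shown earlier.

```shell
# Hypothetical helper: read `kubectl get pod` output on stdin and print how
# many Pods whose NAME starts with "<prefix>-" have STATUS "Running".
# Pods still Terminating (or Pending) are not counted; NR > 1 skips the header.
running_pod_count() {
    awk -v p="$1-" 'NR > 1 && index($1, p) == 1 && $3 == "Running" { n++ } END { print n + 0 }'
}
```

Usage: `kubectl get pod | running_pod_count test03`.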

#Access test

[root@master ~]# kubectl get pod -o wide | grep test03 | awk '{print $6}'

10.244.1.23

10.244.2.27

10.244.1.24

10.244.1.25

10.244.2.26

10.244.2.25

[root@master ~]# curl 10.244.2.25

hello k8s

[root@master ~]# curl 10.244.2.26

hello k8s