Contents

1. Use tf.function to convert a Python function into a TensorFlow function

2. Use a decorator to convert a Python function into a TensorFlow function

3. Show the structure of the TensorFlow function

4. Handling variables in Python functions

5. Limit the input type of the function

5.1 Why limit the types of a function's input parameters?

5.2 Use input_signature in tf.function to define the input parameter type

5.3 Use get_concrete_function to define the input parameter type

6. View the graph

6.1 View the graph of a concrete function

6.2 View the operations of the graph

6.2.1 View the inputs and outputs of an operation

6.2.2 View an operation in the graph by name

6.3 View a tensor by name

6.4 View the graph definition

7. Summary: the significance of converting a Python function into a TensorFlow graph function

TensorFlow's library functions are heavily optimized, for example at the compiler level.

So is it worth converting ordinary Python functions into TensorFlow functions in some way?

1. Use tf.function to convert a Python function into a TensorFlow function

First, write the Python function:

    import tensorflow as tf

    # A plain Python function
    def scaled_elu(z, scale=1.0, alpha=1.0):
        # Ternary logic: z >= 0 ? scale * z : scale * alpha * tf.nn.elu(z)
        is_positive = tf.greater_equal(z, 0.0)
        # tf.where acts as the ternary operator
        return scale * tf.where(is_positive, z, alpha * tf.nn.elu(z))

    print(scaled_elu(-3.))
    print(scaled_elu([-3., 0., 3]))

Then use tf.function to convert it into a TensorFlow function:

    scaled_elu_tf = tf.function(scaled_elu)
    print(scaled_elu_tf(-3.))
    print(scaled_elu_tf([-3., 0., 3]))

What is the relationship between scaled_elu_tf and scaled_elu?

    print(scaled_elu_tf.python_function is scaled_elu)

The output is True: the python_function of scaled_elu_tf is scaled_elu itself.

How do scaled_elu_tf and scaled_elu differ? Does performance improve as predicted above?

    %timeit scaled_elu(tf.random.normal((10000, 10000)))
    %timeit scaled_elu_tf(tf.random.normal((10000, 10000)))

The timing output shows that scaled_elu_tf runs a little faster than scaled_elu, so here the TensorFlow function has better time performance than the plain Python function.
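The %timeit magic only works inside IPython or Jupyter. As a rough sketch of the same comparison in a plain Python script, the standard timeit module can be used instead. The smaller input size and the warm-up call are assumptions made here to keep the run short and to keep the one-time tracing cost out of the measurement; the numbers will vary by machine.

```python
import timeit

import tensorflow as tf


def scaled_elu(z, scale=1.0, alpha=1.0):
    # Same function as above, repeated so that this sketch is self-contained.
    is_positive = tf.greater_equal(z, 0.0)
    return scale * tf.where(is_positive, z, alpha * tf.nn.elu(z))


scaled_elu_tf = tf.function(scaled_elu)

# A smaller input than the 10000 x 10000 matrix above keeps the run short.
x = tf.random.normal((1000, 1000))

# Call once first so the one-time tracing cost is not part of the timing.
scaled_elu_tf(x)

eager_seconds = timeit.timeit(lambda: scaled_elu(x), number=20)
graph_seconds = timeit.timeit(lambda: scaled_elu_tf(x), number=20)
print(f"eager: {eager_seconds:.4f}s, tf.function: {graph_seconds:.4f}s")
```

Both versions compute the same values; only the execution mode differs.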

2. Use a decorator to convert a Python function into a TensorFlow function

    @tf.function
    def scaled_elu_tf_2(z, scale=1.0, alpha=1.0):
        # Ternary logic: z >= 0 ? scale * z : scale * alpha * tf.nn.elu(z)
        is_positive = tf.greater_equal(z, 0.0)
        # tf.where acts as the ternary operator
        return scale * tf.where(is_positive, z, alpha * tf.nn.elu(z))

    print(scaled_elu_tf_2(-3.))
    print(scaled_elu_tf_2([-3., 0., 3]))

Test the time performance improvement:

    scaled_elu_2 = scaled_elu_tf_2.python_function
    %timeit scaled_elu_2(tf.random.normal((10000, 10000)))
    %timeit scaled_elu_tf_2(tf.random.normal((10000, 10000)))

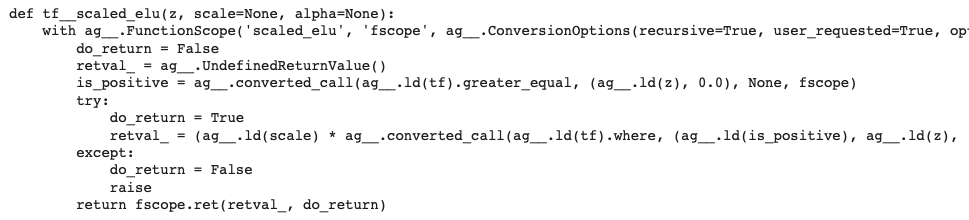

3. Show the structure of the TensorFlow function

    from IPython.display import display, Markdown

    def display_tf_code(func):
        # Get the code that AutoGraph generates for the Python function
        code = tf.autograph.to_code(func)
        display(Markdown('```python\n{}\n```'.format(code)))

    display_tf_code(scaled_elu)

This displays the code that AutoGraph generates for scaled_elu.
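Outside a notebook, the generated code can simply be printed. The sketch below uses a hypothetical function, clip_negative, which is not part of the original example; it is chosen because its Python if statement depends on a tensor value, which makes the rewrite done by AutoGraph easier to spot in the output.

```python
import tensorflow as tf


def clip_negative(z):
    # Hypothetical example function: the Python `if` depends on a tensor
    # value, so AutoGraph has to rewrite it into graph-compatible control flow.
    if tf.reduce_sum(z) < 0:
        return tf.zeros_like(z)
    return z


# to_code takes the plain Python function and returns the generated source.
generated_code = tf.autograph.to_code(clip_negative)
print(generated_code)
```

The exact generated source depends on the TensorFlow version, so it is best read as an illustration rather than a stable format.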

4. Handling variables in Python functions

Note: a tf.Variable must be created and initialized outside the function decorated with tf.function.

    var = tf.Variable(0.)

    @tf.function
    def add_21():
        return var.assign_add(21)

    print(add_21())

Q: What if the variable is defined inside the function?

A: An exception is thrown.

Variables are used heavily when defining neural networks, so the variables need to be initialized before the network's functions are converted into TensorFlow functions.
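The question and answer above can be checked with a small sketch. The function names add_21_local and add_21_outer are hypothetical and used only for illustration; the exception is assumed to be a ValueError, which is what TensorFlow 2 raises in this situation.

```python
import tensorflow as tf


@tf.function
def add_21_local():
    # The variable is created inside the traced function, which tf.function
    # rejects because it would be created again on every trace.
    local_var = tf.Variable(0.)
    return local_var.assign_add(21)


creation_failed = False
try:
    add_21_local()
except ValueError as ex:
    creation_failed = True
    print("Raised:", type(ex).__name__)

# Creating the variable once, outside the function, works as shown earlier.
counter = tf.Variable(0.)


@tf.function
def add_21_outer():
    return counter.assign_add(21)


add_21_outer()
add_21_outer()
print(counter.numpy())
```

Because the variable lives outside the function, its state persists across calls, which is the behavior a neural network's weights need.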

5. Limit the input type of the function

    # A TensorFlow graph function with an input parameter
    @tf.function
    def cube(z):
        return tf.pow(z, 3)

    print(cube(tf.constant([1., 2., 3.])))  # The input is of float type
    print(cube(tf.constant([1, 2, 3])))     # The input is of int type

As shown above, if the function's input is not restricted, both float and int values can be passed in.
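One consequence of leaving the input unrestricted can be sketched as follows: tf.function traces the function again for each new input dtype. The name cube_traced and the traced_dtypes list are hypothetical helpers added for this illustration; they rely on the fact that Python side effects inside a tf.function run only while it is being traced.

```python
import tensorflow as tf

traced_dtypes = []


@tf.function
def cube_traced(z):
    # This append is a Python side effect, so it runs only during tracing
    # and the list ends up with one entry per trace.
    traced_dtypes.append(z.dtype.name)
    return tf.pow(z, 3)


cube_traced(tf.constant([1., 2., 3.]))  # first trace: float32
cube_traced(tf.constant([1, 2, 3]))     # second trace: int32
cube_traced(tf.constant([4., 5., 6.]))  # same dtype and shape: no new trace
print(traced_dtypes)
```

Each dtype gets its own graph, which is convenient but also means that an unintended input type is accepted silently.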

5.1 Why limit the types of a function's input parameters?

First, restricting the input parameter type makes the expected input explicit.

Second, because Python does not enforce argument types, it is easy to pass the wrong kind of argument. Restricting the input parameter type reduces such errors.

5.2 Use input_signature in tf.function to define the input parameter type

    @tf.function(input_signature=[tf.TensorSpec([None], tf.int32, name='x')])
    def cube(z):
        return tf.pow(z, 3)

    try:
        print(cube(tf.constant([1., 2., 3.])))  # The input is of float type
    except (ValueError, TypeError) as ex:
        # The exception type depends on the TensorFlow version
        print(ex)

    print(cube)
    print(cube(tf.constant([1, 2, 3])))  # The input is of int type

As the code above shows, the input parameter is restricted to type int32, so passing in float data triggers an exception.

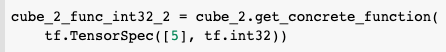

5.3 Use get_concrete_function to define the input parameter type and convert the function into a ConcreteFunction with a graph definition

A function can be saved as a SavedModel only once it has an input signature.

When exporting a SavedModel, you can also use get_concrete_function to turn an ordinary Python function decorated with tf.function into a function with a graph definition.

- @tf.function: py func -> tf.graph

- get_concrete_function -> add input signature -> SavedModel

    # @tf.function: py func -> tf.graph
    # get_concrete_function -> add input signature -> SavedModel
    @tf.function()
    def cube_2(z):
        return tf.pow(z, 3)

    cube_2_func_int32 = cube_2.get_concrete_function(
        tf.TensorSpec([None], tf.int32))
    print(cube_2_func_int32)
    print(cube_2_func_int32(tf.constant([1, 2, 3])))  # The input is of int type

Besides type specifications, concrete values can also be used to fix the type of the input parameter.

    cube_2_func_int32_2 = cube_2.get_concrete_function(
        tf.TensorSpec([5], tf.int32))

    cube_2_func_int32_3 = cube_2.get_concrete_function(
        tf.constant([5]))

    cube_2_func_int32_4 = cube_2.get_concrete_function(
        tf.constant([1, 2, 5]))
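To see what a concrete value contributes, the signature recorded by each concrete function can be inspected. This is a minimal sketch using the structured_input_signature attribute of a concrete function; the variable names from_spec and from_value are hypothetical.

```python
import tensorflow as tf


@tf.function
def cube_2(z):
    return tf.pow(z, 3)


from_spec = cube_2.get_concrete_function(tf.TensorSpec([5], tf.int32))
from_value = cube_2.get_concrete_function(tf.constant([1, 2, 5]))

for concrete_fn in (from_spec, from_value):
    # structured_input_signature is a (positional specs, keyword specs) pair.
    positional_specs, _ = concrete_fn.structured_input_signature
    spec = positional_specs[0]
    print(spec.dtype.name, spec.shape.as_list())
```

A value passed to get_concrete_function is reduced to its dtype and shape; the numbers themselves are not stored in the signature.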

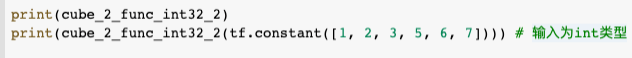

    print(cube_2_func_int32_2)
    print(cube_2_func_int32_2(tf.constant([1, 2, 3, 5, 6, 7])))  # The input is of int type

    print(cube_2_func_int32_3)
    print(cube_2_func_int32_3(tf.constant([1, 2, 3, 5, 6, 7])))  # The input is of int type

    print(cube_2_func_int32_4)
    print(cube_2_func_int32_4(tf.constant(10)))  # The input is of int type

Note: as these calls show, the check is only type-sensitive, not shape-sensitive (in the TensorFlow version used here).


Define the name of the input parameter

The input_signature of tf.function is also built from tf.TensorSpec, so the name of the input parameter can be defined there in the same way.

    # The input_signature of tf.function also uses tf.TensorSpec
    cube_2_func_int32_name = cube_2.get_concrete_function(
        tf.TensorSpec([None], tf.int32, name='t'))
    print(cube_2_func_int32_name)

6. View the graph

Note: although an input_signature can also be added to a function through tf.function, only get_concrete_function converts the tf.function into an object with a graph definition.

6.1 View the graph of a concrete function

    cube_2_func_int32_name.graph

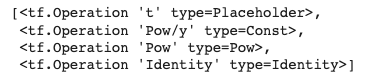

6.2 View the operations of the graph

    cube_2_func_int32_name.graph.get_operations()

    pow_op = cube_2_func_int32_name.graph.get_operations()[2]
    print(pow_op)

6.2.1 View the inputs and outputs of an operation

    print(pow_op.inputs)
    print(pow_op.outputs)
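Picking the operation by position, as with index 2 above, depends on the order in which the graph was built. As a sketch of a less position-dependent way to explore the graph, the loop below prints the name, type, inputs and outputs of every operation. The variable name concrete_fn is hypothetical, and the function is rebuilt here only so the snippet runs on its own.

```python
import tensorflow as tf


@tf.function
def cube_2(z):
    return tf.pow(z, 3)


concrete_fn = cube_2.get_concrete_function(
    tf.TensorSpec([None], tf.int32, name='t'))

for op in concrete_fn.graph.get_operations():
    # Tensor names have the form "<operation name>:<output index>".
    input_names = [tensor.name for tensor in op.inputs]
    output_names = [tensor.name for tensor in op.outputs]
    print(f"{op.name} ({op.type}): inputs={input_names}, outputs={output_names}")

op_types = [op.type for op in concrete_fn.graph.get_operations()]
```

The printed tensor names are the same strings that get_tensor_by_name expects.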

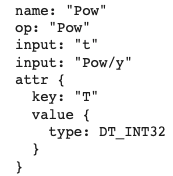

6.2.2 View an operation in the graph by name

    cube_2_func_int32_name.graph.get_operation_by_name('Identity')

6.3 View a tensor by name

    cube_2_func_int32_name.graph.get_tensor_by_name('t:0')

    cube_2_func_int32_name.graph.get_tensor_by_name('Pow/y:0')

    cube_2_func_int32_name.graph.get_tensor_by_name('Pow:0')

    cube_2_func_int32_name.graph.get_tensor_by_name('Identity:0')

6.4 View the graph definition

    cube_2_func_int32_name.graph.as_graph_def()
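The full graph definition is verbose. A shorter view, sketched here under the assumption that only node names and operation types are of interest, iterates over the node field of the returned GraphDef. The names concrete_fn and node_summary are hypothetical.

```python
import tensorflow as tf


@tf.function
def cube_2(z):
    return tf.pow(z, 3)


concrete_fn = cube_2.get_concrete_function(
    tf.TensorSpec([None], tf.int32, name='t'))

graph_def = concrete_fn.graph.as_graph_def()

# Each node records its name, its operation type and its input names.
node_summary = [(node.name, node.op) for node in graph_def.node]
for name, op_type in node_summary:
    print(f"{name}: {op_type}")
```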

7. Summary: the significance of converting a Python function into a TensorFlow graph function

(1) How to save a model

(2) How to load the model back after saving it

(3) When loading a model for inference from C++ or other languages, functions such as get_operation_by_name and get_tensor_by_name are used frequently.
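Points (1) and (2) can be sketched with tf.saved_model.save and tf.saved_model.load. This is a minimal illustration rather than a complete export workflow: the empty tf.Module used as a container, the temporary export directory and the input name 'z' are all assumptions made for the example.

```python
import tempfile

import tensorflow as tf


@tf.function
def cube_2(z):
    return tf.pow(z, 3)


# Fix the input signature: a 1-D int32 tensor of any length, named 'z'.
cube_2_int32 = cube_2.get_concrete_function(
    tf.TensorSpec([None], tf.int32, name='z'))

# tf.saved_model.save needs a trackable object; an empty tf.Module is used
# here purely as a container (an assumption of this sketch).
module = tf.Module()
module.cube = cube_2

export_dir = tempfile.mkdtemp()
tf.saved_model.save(module, export_dir, signatures=cube_2_int32)

# Load the model back and call the exported signature by keyword.
loaded = tf.saved_model.load(export_dir)
serving_fn = loaded.signatures['serving_default']
outputs = serving_fn(z=tf.constant([1, 2, 3]))
cubed = list(outputs.values())[0]
print(cubed.numpy())
```

The signature returns a dictionary of output tensors, which is why the first value is taken instead of relying on a particular output key.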