There are two main ways to migrate data between HDFS and relational databases:

- The file is generated according to the file format required by the database, and then imported by the import tool provided by the database

- Import using JDBC

MapReduce provides DBInputFormat and DBOutputFormat by default, which are used for database reading and database writing respectively

1. Demand

now use DBOutputFormat to import the student information processed by MapReduce into mysql

2. Data set

Zhang Mingming 45

Li Chengyou 78

Zhang huican 56

Wang Lu 56

Chen Dongming 67

Chen guo31

Li Huaming 32

Zhang Mingdong 12

Li Mingguo 34

Chen Daoliang 35

Chen Jiayong 78

Chen minhao 13

Chen pan 78

Chen Xuecheng 18

3. Realization

package com.buaa;

import java.io.DataInput;

import java.io.DataOutput;

import java.io.IOException;

import java.sql.PreparedStatement;

import java.sql.ResultSet;

import java.sql.SQLException;

import org.apache.commons.lang.StringUtils;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.conf.Configured;

import org.apache.hadoop.filecache.DistributedCache;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.io.Writable;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.db.DBConfiguration;

import org.apache.hadoop.mapreduce.lib.db.DBOutputFormat;

import org.apache.hadoop.mapreduce.lib.db.DBWritable;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.util.Tool;

import org.apache.hadoop.util.ToolRunner;

/**

* @ProjectName DBOutputormatDemo

* @PackageName com.buaa

* @ClassName MysqlDBOutputormatDemo

* @Description TODO

* @Author Liu Jichao

* @Date 2016-05-06 09:15:57

*/

@SuppressWarnings({ "unused", "deprecation" })

public class MysqlDBOutputormatDemo extends Configured implements Tool {

/**

* Implement DBWritable

*

* TblsWritable You need to write data to mysql

*/

public static class TblsWritable implements Writable, DBWritable {

String tbl_name;

int tbl_age;

public TblsWritable() {

}

public TblsWritable(String name, int age) {

this.tbl_name = name;

this.tbl_age = age;

}

@Override

public void write(PreparedStatement statement) throws SQLException {

statement.setString(1, this.tbl_name);

statement.setInt(2, this.tbl_age);

}

@Override

public void readFields(ResultSet resultSet) throws SQLException {

this.tbl_name = resultSet.getString(1);

this.tbl_age = resultSet.getInt(2);

}

@Override

public void write(DataOutput out) throws IOException {

out.writeUTF(this.tbl_name);

out.writeInt(this.tbl_age);

}

@Override

public void readFields(DataInput in) throws IOException {

this.tbl_name = in.readUTF();

this.tbl_age = in.readInt();

}

public String toString() {

return new String(this.tbl_name + " " + this.tbl_age);

}

}

public static class StudentMapper extends Mapper<LongWritable, Text, LongWritable, Text>{

@Override

protected void map(LongWritable key, Text value,Context context) throws IOException, InterruptedException {

context.write(key, value);

}

}

public static class StudentReducer extends Reducer<LongWritable, Text, TblsWritable, TblsWritable> {

@Override

protected void reduce(LongWritable key, Iterable<Text> values,Context context) throws IOException, InterruptedException {

// values has only one value, because key s do not have the same value

StringBuilder value = new StringBuilder();

for(Text text : values){

value.append(text);

}

String[] studentArr = value.toString().split("\t");

if(StringUtils.isNotBlank(studentArr[0])){

/*

* Name and age (separated by tab in the middle)

* Zhang Mingming 45

*/

String name = studentArr[0].trim();

int age = 0;

try{

age = Integer.parseInt(studentArr[1].trim());

}catch(NumberFormatException e){

}

context.write(new TblsWritable(name, age), null);

}

}

}

public static void main(String[] args) throws Exception {

// Data input path and output path

String[] args0 = {

"hdfs://ljc:9000/buaa/student/student.txt"

};

int ec = ToolRunner.run(new Configuration(), new MysqlDBOutputormatDemo(), args0);

System.exit(ec);

}

@Override

public int run(String[] arg0) throws Exception {

// Read configuration file

Configuration conf = new Configuration();

DBConfiguration.configureDB(conf, "com.mysql.jdbc.Driver",

"jdbc:mysql://172.26.168.2:3306/test", "hadoop", "123");

// Create a new task

Job job = new Job(conf, "DBOutputormatDemo");

// Set main class

job.setJarByClass(MysqlDBOutputormatDemo.class);

// enter path

FileInputFormat.addInputPath(job, new Path(arg0[0]));

// Mapper

job.setMapperClass(StudentMapper.class);

// Reducer

job.setReducerClass(StudentReducer.class);

// mapper output format

job.setOutputKeyClass(LongWritable.class);

job.setOutputValueClass(Text.class);

// The default input format is TextInputFormat

// job.setInputFormatClass(TextInputFormat.class);

// Output format

job.setOutputFormatClass(DBOutputFormat.class);

// Which tables and fields are exported to

DBOutputFormat.setOutput(job, "student", "name", "age");

// Add mysql database jar

// job.addArchiveToClassPath(new Path("hdfs://ljc:9000/lib/mysql/mysql-connector-java-5.1.31.jar"));

// DistributedCache.addFileToClassPath(new Path("hdfs://ljc:9000/lib/mysql/mysql-connector-java-5.1.31.jar"), conf);

//Submit task

return job.waitForCompletion(true)?0:1;

}

}

mr program is very simple. It just reads the contents of the file. Here, we mainly focus on how to import the result set processed by mr into MySQL.

The table in the database is student. Write the corresponding bean class TblsWritable for the student table. This class needs to implement Writable interface and DBWritable interface.

1. Writable interface

@Override

public void write(DataOutput out) throws IOException {

out.writeUTF(this.tbl_name);

out.writeInt(this.tbl_age);

}

@Override

public void readFields(DataInput in) throws IOException {

this.tbl_name = in.readUTF();

this.tbl_age = in.readInt();

}

The above two methods correspond to the Writable interface and use object serialization. I won't say more here. Why serialization? Refer to: Hadoop serialization

2.DBWritable interface

@Override

public void write(PreparedStatement statement) throws SQLException {

statement.setString(1, this.tbl_name);

statement.setInt(2, this.tbl_age);

}

@Override

public void readFields(ResultSet resultSet) throws SQLException {

this.tbl_name = resultSet.getString(1);

this.tbl_age = resultSet.getInt(2);

}

the above two methods correspond to the DBWriteable interface. The readFields method is responsible for reading the database data from the result set (note that the subscript of the ResultSet starts from 1) and reading a column filtered in the query SQL at one time. The Write method is responsible for writing data to the database and writing each column of each row in turn.

Finally, configure the Job, as shown in the following code

DBConfiguration.configureDB(conf, "com.mysql.jdbc.Driver", "jdbc:mysql://172.26.168.2:3306/test", "hadoop", "123")

The above configuration mainly includes the following items:

- Name of database driver: com mysql. jdbc. Driver

- Database URL: jdbc:mysql://172.26.168.2:3306/test

- User name: hadoop

- Password: 123

The following items need to be configured:

- Database table and name of each column: dboutputformat setOutput(job, “student”, “name”, “age”);

- The output format is changed to: job setOutputFormatClass(DBOutputFormat.class);

Tip: it should be reminded that DBOutputFormat runs in MapReduce mode and will connect to the database in parallel. Here, you need to set the number of map s and reduce appropriately to control the number of parallel connections within a reasonable range

4. Operation effect

5. Precautions

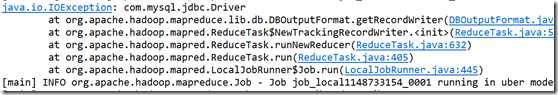

Running the project may report the following errors

resolvent:

there are three solutions, but I like the third.

1. Add the package under ${HADOOP_HOME}/lib under each node. Restarting the cluster is generally an original method.

2. Transfer the package to the cluster as follows

hadoop fs -put mysql-connector-java-5.1.31.jar /lib/mysql

before the mr program submits the job, add one of the following two statements

// first

DistributedCache.addFileToClassPath(new Path("hdfs://ljc:9000/lib/mysql/mysql-connector-java-5.1.31.jar"), conf);

This statement is not recommended. The following statement is recommended

// the second

job.addArchiveToClassPath(new Path("hdfs://ljc:9000/lib/mysql/mysql-connector-java-5.1.31.jar"));

Note: in this way, when running locally, it still reports "java.io.IOException: com.mysql.jdbc.Driver", but it can be put into hadoop running environment

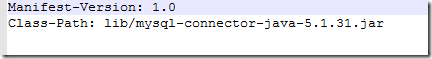

3. Type the dependent jar into the project, and then configure manifest Class path option in MF file

For specific configuration, please refer to "By specifying the package of manifest.mf file"

This article is reprinted

Original address: https://www.cnblogs.com/codeOfLife/p/5464613.html