Introduction

This article shows how to use OpenBox, an open-source black-box optimization system, to tune the hyperparameters of a LightGBM model.

OpenBox is an open-source system designed for black-box optimization (project address: https://github.com/thomas-young-2013/open-box ). It is built on Bayesian optimization and solves black-box optimization problems efficiently. Hyperparameter optimization is a typical black-box optimization problem, and on it OpenBox performs very well, reaching better model performance in less time.

OpenBox covers a wide range of scenarios. Besides traditional single-objective black-box optimization, it supports multi-objective optimization, optimization with constraints, multiple parameter types, transfer learning, distributed parallel evaluation, multi-fidelity optimization, and more. In addition to local installation and invocation, OpenBox provides an online optimization service: users can monitor and manage the optimization process visually through web pages, or deploy a private optimization service. The rest of this article describes how to use OpenBox locally to tune the hyperparameters of a LightGBM model.

LightGBM hyperparameter optimization tutorial

LightGBM is a high-performance gradient boosting framework based on decision trees. To use it, users need to specify a number of hyperparameters, including the learning rate, the maximum number of leaves per tree, the feature sampling ratio, and so on. Although LightGBM provides default values for its hyperparameters, tuning them can yield a better model. Tuning several hyperparameters by hand consumes a lot of evaluation resources and manpower. Tuning with OpenBox reduces manual effort and finds better results in fewer evaluations.

Before optimizing with OpenBox, we need to define the search space of the task (that is, the hyperparameter space) and the objective function to optimize. OpenBox already wraps a hyperparameter space and an objective function for LightGBM, which can be obtained with the following code (defining the objective function requires training and validation data):

from openbox.utils.tuning import get_config_space, get_objective_function

config_space = get_config_space('lightgbm')

# please prepare your data (x_train, x_val, y_train, y_val) first
objective_function = get_objective_function('lightgbm', x_train, x_val, y_train, y_val)

To show the details of the task definition and to support customization, the next two sections describe how the LightGBM hyperparameter space and the objective function are defined. You can also skip directly to the "Performing optimization" section to see how to run hyperparameter optimization on LightGBM with OpenBox.

Defining the hyperparameter space

First, we use the ConfigSpace library to define the hyperparameter space. In this example the space contains seven hyperparameters. Since the maximum number of leaves ("num_leaves") already limits the tree depth to some extent, we fix the maximum tree depth ("max_depth") as a Constant.

from openbox.utils.config_space import ConfigurationSpace
from openbox.utils.config_space import UniformFloatHyperparameter, \
    Constant, UniformIntegerHyperparameter

def get_config_space():
    cs = ConfigurationSpace()
    n_estimators = UniformIntegerHyperparameter("n_estimators", 100, 1000, default_value=500, q=50)
    num_leaves = UniformIntegerHyperparameter("num_leaves", 31, 2047, default_value=128)
    max_depth = Constant('max_depth', 15)
    learning_rate = UniformFloatHyperparameter("learning_rate", 1e-3, 0.3, default_value=0.1, log=True)
    min_child_samples = UniformIntegerHyperparameter("min_child_samples", 5, 30, default_value=20)
    subsample = UniformFloatHyperparameter("subsample", 0.7, 1, default_value=1, q=0.1)
    colsample_bytree = UniformFloatHyperparameter("colsample_bytree", 0.7, 1, default_value=1, q=0.1)
    cs.add_hyperparameters([n_estimators, num_leaves, max_depth, learning_rate, min_child_samples, subsample, colsample_bytree])
    return cs

config_space = get_config_space()
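
To make the log=True and q arguments used above more concrete, here is a minimal pure-Python sketch of what log-scale sampling and quantization mean. It is not how ConfigSpace implements them; the helper names sample_log_uniform and quantize are hypothetical and exist only for this illustration.

```python
import math
import random


def sample_log_uniform(low, high, rng):
    """Draw a value whose logarithm is uniform on [log(low), log(high)]."""
    return math.exp(rng.uniform(math.log(low), math.log(high)))


def quantize(value, low, q):
    """Snap a value to the nearest point on the grid low, low + q, low + 2q, ..."""
    return low + round((value - low) / q) * q


rng = random.Random(0)

# learning_rate in [1e-3, 0.3] on a log scale: each decade (for example
# 0.001-0.01 and 0.01-0.1) receives about the same share of the samples.
learning_rates = [sample_log_uniform(1e-3, 0.3, rng) for _ in range(1000)]

# n_estimators in [100, 1000] with q=50: only multiples of 50 are produced.
n_estimators = [int(quantize(rng.uniform(100, 1000), 100, 50)) for _ in range(1000)]
```

A log scale is a common choice for the learning rate because values such as 0.001 and 0.01 differ as much in effect as 0.01 and 0.1, while quantization keeps the search from distinguishing between, say, 500 and 501 trees.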

Defining the objective function

Next, we define the objective function to optimize. Its input is a set of model hyperparameters, and its return value is the balanced error rate of the model. Note:

- OpenBox minimizes the objective.

- To support multi-objective and constrained optimization, the return value is a dictionary in which the objectives are given as a tuple or list. (In the single-objective, unconstrained case, a single value can also be returned.)
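
As a sketch of the return format described in the notes above, the following hypothetical function returns two objectives and one constraint. The objs key is the one used in this tutorial. The constraints key, and the convention that a constraint counts as satisfied when its value is non-positive, are assumptions about the OpenBox version in use and should be checked against its documentation. The function takes a plain dict rather than an OpenBox Configuration, and its formulas are made up for illustration.

```python
def toy_objective(config):
    """Hypothetical two-objective, one-constraint function over a plain dict."""
    x = config["x"]
    y = config["y"]

    loss_a = (x - 1.0) ** 2   # first objective, to be minimized
    loss_b = (y + 2.0) ** 2   # second objective, to be minimized
    budget = x + y - 3.0      # assumed convention: feasible when <= 0

    # Objectives and constraints are tuples, even when there is only one value.
    return dict(objs=(loss_a, loss_b), constraints=(budget,))


result = toy_objective({"x": 1.0, "y": 0.5})
```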

The objective function trains a LightGBM model on the training set, predicts on the validation set, and computes the balanced error rate. Here we use LGBMClassifier, the scikit-learn interface provided by LightGBM.

from sklearn.model_selection import train_test_split
from sklearn.datasets import load_digits
from sklearn.metrics import balanced_accuracy_score
from lightgbm import LGBMClassifier

# prepare your data
X, y = load_digits(return_X_y=True)
x_train, x_val, y_train, y_val = train_test_split(X, y, test_size=0.2, stratify=y, random_state=1)

def objective_function(config):
    # convert Configuration to dict
    params = config.get_dictionary()

    # fit model
    model = LGBMClassifier(**params)
    model.fit(x_train, y_train)

    # predict and calculate loss
    y_pred = model.predict(x_val)
    loss = 1 - balanced_accuracy_score(y_val, y_pred)  # OpenBox minimizes the objective

    # return result dictionary
    result = dict(objs=(loss, ))
    return result
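
For readers unfamiliar with the metric, the balanced error rate used as the loss above is one minus the balanced accuracy, and balanced accuracy is the average of the per-class recalls. The following pure-Python sketch computes it by hand on a small, made-up label set; the function name balanced_error_rate is ours and is not part of scikit-learn or OpenBox.

```python
from collections import defaultdict


def balanced_error_rate(y_true, y_pred):
    """Return 1 - (mean of per-class recall)."""
    total = defaultdict(int)     # number of samples per true class
    correct = defaultdict(int)   # correctly predicted samples per true class
    for t, p in zip(y_true, y_pred):
        total[t] += 1
        if t == p:
            correct[t] += 1
    recalls = [correct[c] / total[c] for c in total]
    return 1.0 - sum(recalls) / len(recalls)


# Imbalanced toy labels: class 0 has 4 samples, class 1 has 2.
y_true = [0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 0, 1, 0]
loss = balanced_error_rate(y_true, y_pred)
```

In this toy case the plain error rate is 1/6, but the balanced error rate is 0.25, because the single mistake falls on the smaller class and each class carries equal weight.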

Performing optimization

With the search space and objective function defined, we can call SMBO, the Bayesian optimization framework of OpenBox, to run the optimization. We set the number of optimization rounds (max_runs) to 100, meaning that 100 hyperparameter configurations of the LightGBM model will be evaluated. The maximum evaluation time per round (time_limit_per_trial) is set to 180 seconds; evaluations that exceed this limit are terminated. When the optimization finishes, the results can be printed.

from openbox.optimizer.generic_smbo import SMBO

bo = SMBO(objective_function,
          config_space,
          max_runs=100,
          time_limit_per_trial=180,
          task_id='tuning_lightgbm')
history = bo.run()
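
As a rough picture of what a loop bounded by max_runs does, the sketch below evaluates a fixed number of configurations and keeps the best one. It deliberately uses plain random search as a stand-in: Bayesian optimization would instead fit a surrogate model to the past evaluations and use it to choose the next configuration. All names here (random_search, toy_loss, sample_config) are hypothetical and unrelated to the OpenBox API.

```python
import random


def random_search(objective, sample_config, max_runs, seed=0):
    """Evaluate `max_runs` random configurations and keep the best one."""
    rng = random.Random(seed)
    history = []  # (config, loss) for every evaluation
    best_config, best_loss = None, float("inf")
    for _ in range(max_runs):
        # Bayesian optimization would pick this point with a surrogate model.
        config = sample_config(rng)
        loss = objective(config)
        history.append((config, loss))
        if loss < best_loss:
            best_config, best_loss = config, loss
    return best_config, best_loss, history


def toy_loss(config):
    # Hypothetical loss with its minimum at x = 0.3.
    return (config["x"] - 0.3) ** 2


def sample_config(rng):
    return {"x": rng.uniform(0.0, 1.0)}


best_config, best_loss, runs = random_search(toy_loss, sample_config, max_runs=100)
```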

Printing the optimization results gives:

print(history)

+------------------------------------------------+
| Parameters              | Optimal Value        |
+-------------------------+----------------------+
| colsample_bytree        | 0.800000             |
| learning_rate           | 0.018402             |
| max_depth               | 15                   |
| min_child_samples       | 15                   |
| n_estimators            | 200                  |
| num_leaves              | 723                  |
| subsample               | 0.800000             |
+-------------------------+----------------------+
| Optimal Objective Value | 0.022305877305877297 |
+-------------------------+----------------------+
| Num Configs             | 100                  |
+-------------------------+----------------------+

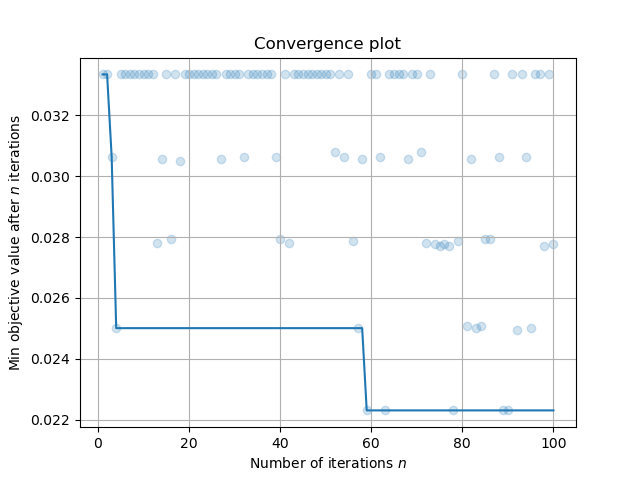

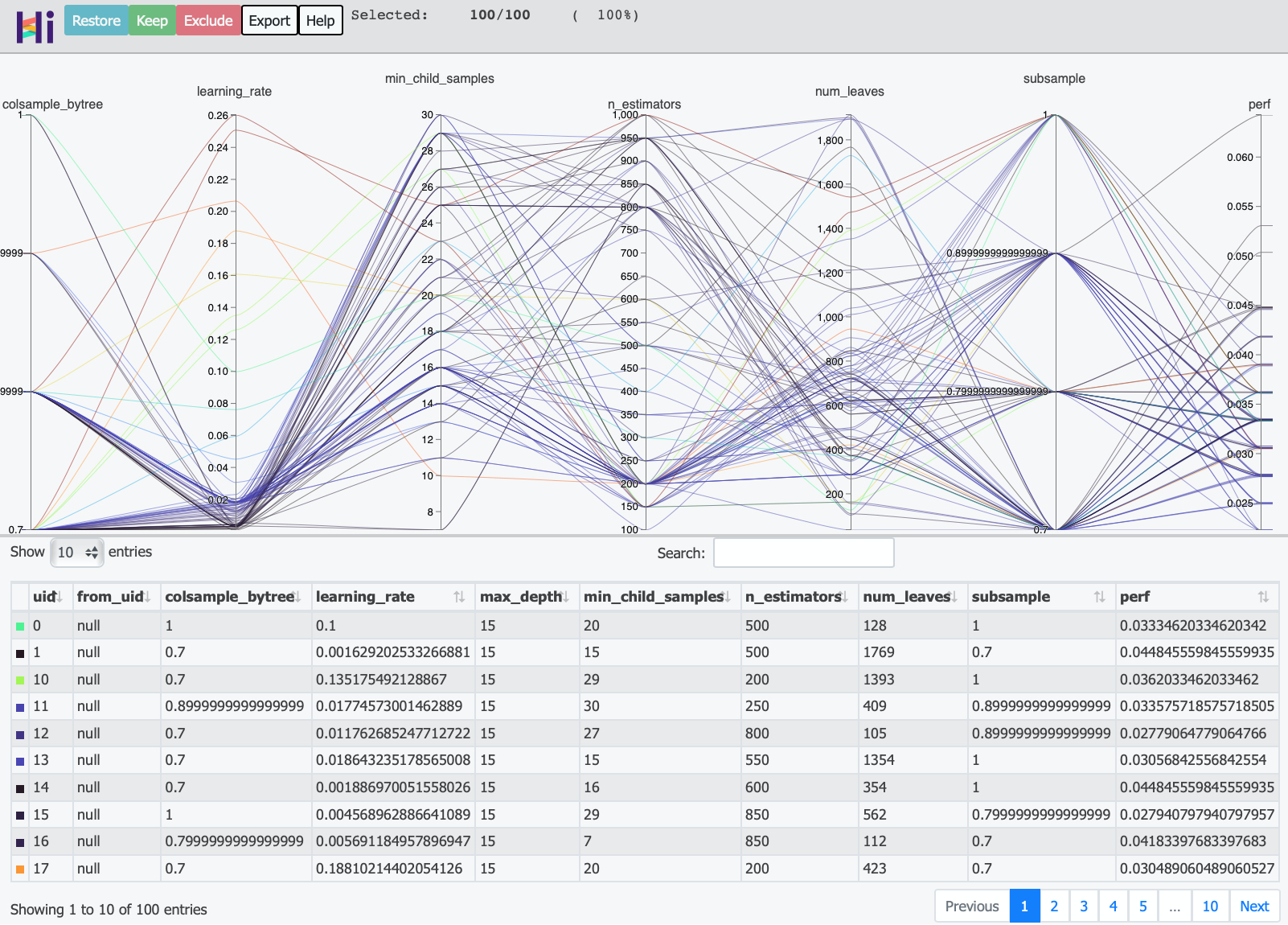

We can plot the convergence curve to examine the results further. In a Jupyter notebook environment, you can also view the HiPlot visualization.

history.plot_convergence()
history.visualize_jupyter()

In the figures above, the left one shows how the best error rate found so far changes with the number of evaluations, and the right one is the HiPlot chart showing the relationship between the hyperparameters and the results in the optimization history.
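
The quantity plotted in a convergence curve of this kind is simply the running minimum of the observed losses. A minimal sketch, using made-up loss values and a hypothetical helper best_so_far, looks like this:

```python
def best_so_far(losses):
    """Return the running minimum of a sequence of per-evaluation losses."""
    curve = []
    best = float("inf")
    for loss in losses:
        best = min(best, loss)
        curve.append(best)
    return curve


# Hypothetical losses from six evaluations, for illustration only.
observed = [0.08, 0.05, 0.06, 0.03, 0.04, 0.03]
curve = best_so_far(observed)
```

The resulting curve never increases, which is why a convergence plot flattens out once new evaluations stop improving on the best configuration found.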

The system also integrates hyperparameter sensitivity analysis. For this task, the importance of each hyperparameter is estimated as follows:

+--------------------------------+
| Parameters        | Importance |
+-------------------+------------+
| learning_rate     | 0.293457   |
| min_child_samples | 0.101243   |
| n_estimators      | 0.076895   |
| num_leaves        | 0.069107   |
| colsample_bytree  | 0.051856   |
| subsample         | 0.010067   |
| max_depth         | 0.000000   |
+-------------------+------------+

In this task, the three hyperparameters with the greatest impact on model performance are learning_rate, min_child_samples, and n_estimators. The importance of max_depth is zero because it was fixed as a constant.

In addition, OpenBox supports defining tasks in the form of a dictionary. If you are interested, please see the tutorial documentation on our website.

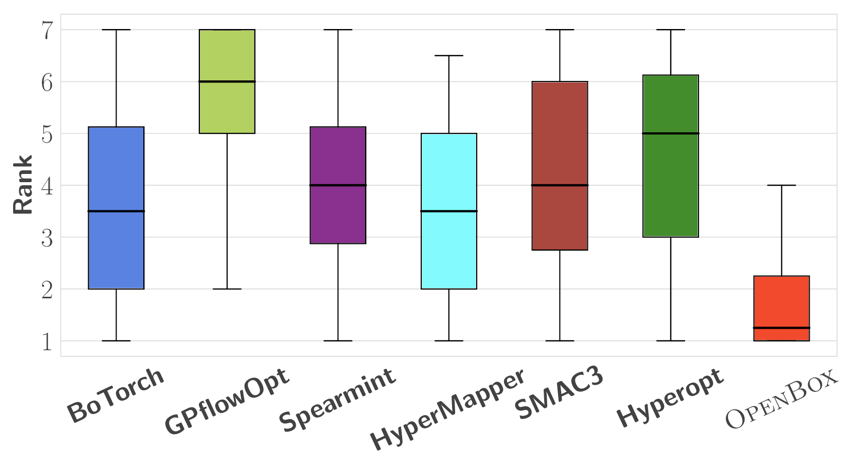

OpenBox performance test results

OpenBox performs very well on hyperparameter optimization problems. We compared several hyperparameter optimization (black-box optimization) systems experimentally. The following figure shows the ranking of each system after tuning the hyperparameters of a LightGBM model on 25 datasets:

In this hyperparameter optimization experiment, OpenBox outperforms the existing systems it was compared with.

Summary

This article described how to tune the hyperparameters of a LightGBM model locally with OpenBox, and presented experimental results on the performance of the system on hyperparameter optimization problems.

If you would like to learn more about using OpenBox (for example multi-objective optimization, constrained optimization, parallel evaluation, or the online service), please read our tutorial documentation: https://open-box.readthedocs.io.

OpenBox is open source on GitHub. Project address: https://github.com/thomas-young-2013/open-box . Developers are welcome to contribute to the project.