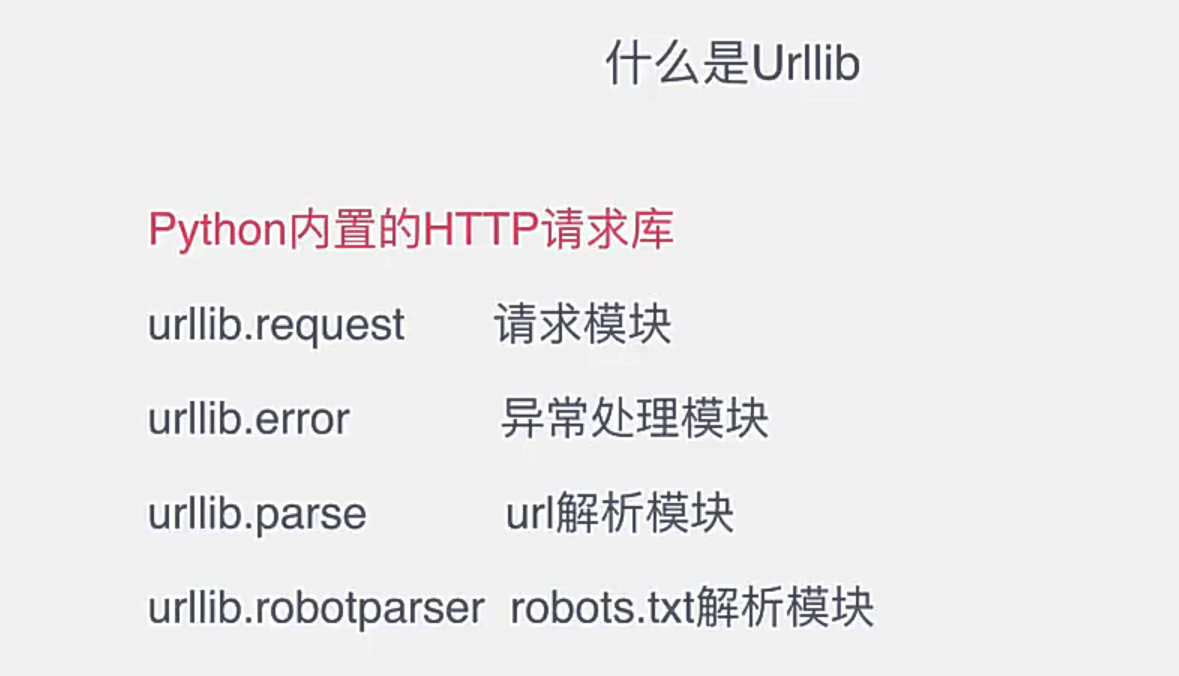

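Four modules of urllib

urllib.request: opens and reads URLs

urllib.error: the exceptions raised by urllib.request

urllib.parse: parses and builds URLs

urllib.robotparser: parses robots.txt files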

Get page source code

    import urllib.request

    response = urllib.request.urlopen("http://www.baidu.com")
    print(response.read().decode('utf-8'))  # Get Baidu's source code

POST request

    import urllib.parse
    import urllib.request

    data = bytes(urllib.parse.urlencode({"name": "hello"}), encoding='utf-8')
    response = urllib.request.urlopen("http://httpbin.org/post", data=data)
    print(response.read().decode('utf-8'))

The data argument carries the parameters to send. It must be of type bytes, so the string returned by urlencode() is converted with bytes(). When data is passed, the request method becomes POST instead of GET
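The effect of data on the request method can be checked without sending anything. The following is a minimal sketch that builds two urllib.request.Request objects and inspects get_method(); the URL and form field are the ones from the POST example, and the variable names are only illustrative.

```python
import urllib.parse
import urllib.request

url = "http://httpbin.org/post"

# urlencode() returns a str; it has to be encoded to bytes before use as data.
form = urllib.parse.urlencode({"name": "hello"})
data = form.encode("utf-8")

without_data = urllib.request.Request(url)
with_data = urllib.request.Request(url, data=data)

# Nothing is sent here; get_method() only reports what urlopen() would use.
print(form)                       # name=hello
print(without_data.get_method())  # GET
print(with_data.get_method())     # POST
```

Calling form.encode("utf-8") is equivalent to bytes(form, encoding='utf-8') in the example above.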

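Timeout test

    import socket
    import urllib.error
    import urllib.request

    try:
        response = urllib.request.urlopen("http://httpbin.org/get", timeout=0.1)
    except urllib.error.URLError as e:
        if isinstance(e.reason, socket.timeout):
            print("TIME OUT")

The timeout parameter, in seconds, is passed in here. If the server does not respond within that time, urlopen raises an exception; in the case handled here it is a URLError whose reason is socket.timeout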
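Depending on where the time limit is hit, the timeout may not arrive wrapped in URLError: a timeout while waiting for or reading the response can surface as a bare socket.timeout (an alias of TimeoutError on Python 3.10 and later). A hedged sketch that handles both cases follows; fetch_or_timeout is a hypothetical helper name used for illustration, not part of urllib.

```python
import socket
import urllib.error
import urllib.request


def fetch_or_timeout(url, timeout=0.1):
    """Return the decoded body, or the string "TIME OUT" if the request timed out.

    Hypothetical helper for illustration; any other URLError is re-raised.
    """
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.read().decode("utf-8")
    except urllib.error.URLError as e:
        # Timeout while connecting or sending: wrapped in URLError.
        if isinstance(e.reason, socket.timeout):
            return "TIME OUT"
        raise
    except socket.timeout:
        # Timeout while waiting for or reading the response.
        return "TIME OUT"
```

A call such as fetch_or_timeout("http://httpbin.org/get", timeout=0.1) would then return either the page body or "TIME OUT".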

Response

1. Response type

    import urllib.request

    response = urllib.request.urlopen("http://httpbin.org/get")
    print(type(response))

The returned result is: <class 'http.client.HTTPResponse'>

2. Status code

3. Response headers

4. Response body

    import urllib.request

    response = urllib.request.urlopen("http://www.python.org")
    print(response.status)                  # Status code
    print(response.getheaders())            # All response headers
    print(response.getheader('Server'))     # A single header
    print(response.read().decode('utf-8'))  # Response body

Request with multiple parameters

To send custom headers or set the method explicitly, build a Request object and pass it to urlopen

    from urllib import request, parse

    url = 'http://httpbin.org/post'
    headers = {
        'User-Agent': 'Mozilla/4.0 (compatible; MSIE 5.5; Windows NT)',
        'Host': 'httpbin.org'
    }
    # Named form rather than dict to avoid shadowing the built-in type
    form = {
        'name': 'Germey'
    }
    data = bytes(parse.urlencode(form), encoding='utf-8')
    req = request.Request(url=url, data=data, headers=headers, method='POST')
    response = request.urlopen(req)
    print(response.read().decode('utf-8'))

Advanced usage: Handler

Some requests need cookies or proxy settings. These are configured through Handler objects, which are combined into an opener

    import urllib.request

    # Assumes a proxy is listening on 127.0.0.1:9743
    proxy_handler = urllib.request.ProxyHandler({
        'http': 'http://127.0.0.1:9743',
        'https': 'https://127.0.0.1:9743'
    })
    opener = urllib.request.build_opener(proxy_handler)
    response = opener.open('http://httpbin.org/get')
    print(response.read())

Cookies

    import http.cookiejar
    import urllib.request

    cookie = http.cookiejar.CookieJar()
    handler = urllib.request.HTTPCookieProcessor(cookie)
    opener = urllib.request.build_opener(handler)
    response = opener.open("http://www.baidu.com")
    for item in cookie:
        print(item.name + "=" + item.value)

This declares a CookieJar object, uses HTTPCookieProcessor to build a Handler, and finally uses build_opener to create an opener whose open method sends the request

Exception handling

    from urllib import request, error

    try:
        response = request.urlopen('http://cuiqingcai.com/index.html')
    except error.HTTPError as e:
        # HTTPError is a subclass of URLError, so it is caught first
        print(e.reason, e.code, e.headers, sep='\n')
    except error.URLError as e:
        print(e.reason)
    else:
        print('Request successful')
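Because HTTPError is a subclass of URLError, the order of the except clauses matters: the more specific handler has to come first, or it is never reached. The sketch below checks the relationship and builds the exceptions by hand so that no network access is needed. The describe helper, the example.com URL and the 404 values are assumptions made up for illustration.

```python
from urllib import error

print(issubclass(error.HTTPError, error.URLError))  # True


def describe(exc):
    """Classify an exception with the same clause order as the example above."""
    try:
        raise exc
    except error.HTTPError as e:
        return "HTTPError %d %s" % (e.code, e.reason)
    except error.URLError as e:
        return "URLError %s" % e.reason


# Hand-built exceptions; the URL and status are illustrative assumptions.
http_exc = error.HTTPError("http://example.com/missing", 404, "Not Found", None, None)
url_exc = error.URLError("connection refused")

print(describe(http_exc))  # HTTPError 404 Not Found
print(describe(url_exc))   # URLError connection refused
```

If the two except clauses in describe were swapped, both exceptions would be reported as URLError.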

URL parsing:

Parts of a URL:

What parts does a URL contain? Take "http://www.baidu.com/index.html?name=mo&age=25#dowell" as an example; it can be divided into six parts:

1. Transmission protocol: http, https

2. Domain name: for example, www.baidu.com is the name of the website. baidu.com is the primary domain name and www is the host (server) name

3. Port: if it is not specified, the protocol's default port is used (80 for http, 443 for https)

4. Path: for example, http://www.baidu.com/path1/path1.2. The leading / represents the root directory

5. Parameters carried (query string): ?name=mo&age=25

6. Hash value (fragment): #dowell

--------

Original link: https://blog.csdn.net/qq_/article/details/83689928
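The six parts listed above can be pulled out of a URL with urllib.parse.urlparse. The following is a small sketch; it assumes the example URL is http://www.baidu.com/index.html?name=mo&age=25#dowell, and the fallback to port 80 is added by hand, since urlparse itself reports no port when none is written.

```python
from urllib.parse import urlparse, parse_qs

# Example URL assumed from the list of parts above.
url = "http://www.baidu.com/index.html?name=mo&age=25#dowell"
parts = urlparse(url)

# urlparse gives port=None when the URL has no explicit port,
# so the http default of 80 is filled in manually here.
port = parts.port if parts.port is not None else 80

print(parts.scheme)    # http
print(parts.hostname)  # www.baidu.com
print(port)            # 80
print(parts.path)      # /index.html
print(parts.query)     # name=mo&age=25
print(parts.fragment)  # dowell

# parse_qs turns the query string into a dict of lists.
print(parse_qs(parts.query))  # {'name': ['mo'], 'age': ['25']}
```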

urlparse: splits a URL into its components

urllib.parse.urlparse(urlstring, scheme='', allow_fragments=True)

urlstring: the URL to be parsed

scheme='': the default protocol to use if the parsed URL has none. If the URL already has a protocol, this parameter has no effect

allow_fragments=True: whether to parse the anchor (fragment). With the default True, the part after # is returned as fragment; with False, it is not split off and stays in the preceding component (path, params or query)

    from urllib.parse import urlparse

    result = urlparse('www.baidu.com/index.html;user?id=5#comment', scheme='https')
    print(result)
    # Print result:
    # ParseResult(scheme='https', netloc='', path='www.baidu.com/index.html', params='user', query='id=5', fragment='comment')
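The two optional parameters are easier to see side by side. This is a short sketch using a variant of the URL above that does include a scheme (the http:// prefix is added here for illustration): the scheme argument is then ignored, and allow_fragments=False leaves the anchor attached to the query.

```python
from urllib.parse import urlparse

# Same URL as above, but with an explicit scheme added for illustration.
url = "http://www.baidu.com/index.html;user?id=5#comment"

# The URL already has a scheme, so the scheme argument has no effect.
with_scheme = urlparse(url, scheme="https")
print(with_scheme.scheme)  # http
print(with_scheme.netloc)  # www.baidu.com

# With allow_fragments=False the fragment is not split off;
# it stays at the end of the query component.
no_frag = urlparse(url, allow_fragments=False)
print(no_frag.query)           # id=5#comment
print(repr(no_frag.fragment))  # ''
```

Note that netloc is only filled in when the URL contains //, which is why the scheme-less example above ends up with the host name inside path.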

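urlunparse: combines components into a URL

It takes an iterable of exactly six components, in the same order that urlparse returns them

    from urllib.parse import urlunparse

    data = ['http', 'www.baidu.com', 'index.html', 'user', 'a=6', 'comment']
    print(urlunparse(data))
    # http://www.baidu.com/index.html;user?a=6#comment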

urljoin

For the urljoin method, we provide a base_url (base link) as the first parameter and a new link as the second parameter. The method analyzes the scheme, netloc and path of base_url to fill in the missing parts of the new link, and returns the result

    from urllib.parse import urljoin

    print(urljoin("http://www.baidu.com", "FAQ.html"))
    print(urljoin("http://www.baidu.com", "https://cuiqinghua.com/FAQ.html"))
    print(urljoin("http://www.baidu.com", "?category=2"))
    # Print results:
    # http://www.baidu.com/FAQ.html
    # https://cuiqinghua.com/FAQ.html
    # http://www.baidu.com?category=2

urlencode: converts a dictionary into URL query parameters

    from urllib.parse import urlencode

    params = {
        'name': 'germey',
        'age': 22
    }
    base_url = 'http://www.baidu.com?'
    url = base_url + urlencode(params)
    print(url)
    # http://www.baidu.com?name=germey&age=22

quote

This method percent-encodes content so that it can be used in a URL. When a URL carries Chinese parameters without this encoding, the result may be garbled

    from urllib.parse import quote

    keyword = "壁纸"  # "wallpaper"
    url = "https://www.baidu.com/s?wd=" + quote(keyword)
    print(url)
    # https://www.baidu.com/s?wd=%E5%A3%81%E7%BA%B8

unquote

This method decodes a percent-encoded URL

    from urllib.parse import unquote

    url = 'https://www.baidu.com/s?wd=%E5%A3%81%E7%BA%B8'
    print(unquote(url))
    # https://www.baidu.com/s?wd=壁纸